Advanced Deep Transfer Learning Using Ensemble Models for COVID-19 Detection from X-ray Images

Walid Hariri (a) and Imed Eddine Haouli (b)

Labged Laboratory, Computer Science Department, Badji Mokhtar Annaba University, Annaba, Algeria

Keywords:

COVID-19, CNN, Transfer Learning, Ensemble Model, X-ray Images.

Abstract:

The Coronavirus disease (COVID-19) pandemic has become one of the main causes of mortality worldwide. In this paper, we employ a transfer learning-based method using five pre-trained deep convolutional neural network (CNN) architectures fine-tuned on an X-ray image dataset to detect COVID-19. Specifically, we use the VGG-16, ResNet50, InceptionV3, ResNet101 and Inception-ResNetV2 models to classify the input images into three classes (COVID-19 / Healthy / Other viral pneumonia). The results of each model are presented in detail using 10-fold cross-validation, and a comparative analysis of these models is given, taking into account different elements in order to find the most suitable model. To further enhance the performance of single models, we propose to combine the predictions of these models using the majority vote strategy. The proposed method has been validated on a publicly available chest X-ray image database that contains more than one thousand images per class. Evaluation measures of the classification performance are reported and discussed in detail. Promising results have been achieved compared to state-of-the-art methods, and the proposed ensemble model achieved higher performance than any single model. This study gives researchers more insight for choosing the best models to accurately detect the COVID-19 virus.

1 INTRODUCTION

Since the spread of COVID-19, the real-time polymerase chain reaction (RT-PCR) test has been the most popular technique applied to detect this virus. Despite the good performance achieved by this technique, it still has many drawbacks: it is time-consuming, it produces false negative results, and it is expensive (Altan and Karasu, 2020). Since the number of deaths from COVID-19 is constantly increasing, these drawbacks of the RT-PCR test could further complicate the situation. Recently, deep learning techniques to detect and diagnose COVID-19 from X-ray images or Computerized Tomography (CT) scans have become an active research area (Luz et al., 2022; Hariri and Narin, 2021). The high performance achieved by transfer learning in detecting other diseases such as Alzheimer's has motivated researchers to adopt this technique against the COVID-19 pandemic (Zaabi et al., 2020). Besides their high performance, deep learning-based techniques are very fast compared to the RT-PCR test (Huang and Liao, 2022). Therefore, we propose in this paper various deep learning-based strategies to deal with COVID-19 detection and diagnosis using a publicly available dataset of X-ray images. The remainder of the paper is structured as follows: in Section 2, we present the related works. Section 3 explains the contribution of the paper. The proposed method is presented in detail in Section 4. Experimental results and a comparative study are reported in Section 5. Conclusions end the paper.

(a) https://orcid.org/0000-0002-5909-5433
(b) https://orcid.org/0000-0002-5902-3835

2 RELATED WORKS

A few weeks after the spread of COVID-19 pneumonia, many works were carried out to detect the virus from radiography imaging using deep learning-based techniques (Ali et al., 2022). Among these techniques we find "traditional deep learning methods" that train deep models from scratch on a specified labeled dataset. Since the appearance of the COVID-19 pandemic, some datasets have been introduced to allow researchers to test their models. For example, (Zheng et al., 2020) trained a supervised deep learning model in which the lung region is segmented from CT scans using a U-Net model.

Hariri, W. and Haouli, I.
Advanced Deep Transfer Learning Using Ensemble Models for COVID-19 Detection from X-ray Images.
DOI: 10.5220/0011703900003417
In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 4: VISAPP, pages 355-362
ISBN: 978-989-758-634-7; ISSN: 2184-4321
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

Other methods are based on "deep features extraction", where deep pre-trained models are used as feature extractors: the outputs of the last convolutional layers or of the fully connected layers are used to feed a machine learning classifier. For example, (Ismael and Şengür, 2021) applied five pre-trained models, namely VGG-16, ResNet18, ResNet50, ResNet101, and VGG19, to train an SVM classifier. Different kernel functions are then used in the SVM classification stage, such as Linear, Quadratic, Cubic, and Gaussian kernels. Another method that uses AlexNet-based features to feed an SVM classifier is introduced in (Turkoglu, 2021). In this work, the deep features are extracted from the fully-connected and convolution layers. Another method proposed by (Rahimzadeh and Attar, 2020) combines the deep features extracted from the Xception (Chollet, 2017) and ResNet50V2 (He et al., 2016b) networks. A global feature vector is then generated to train a classifier.

From another point of view, to achieve real-time detection of COVID-19, training a deep model from scratch raises many problems, especially the insufficiency of representative data; it is also time-consuming and requires high-performance machines. In this case, "transfer learning (TL)" is the most useful technique to overcome the training time and data problems. TL is a deep learning approach that consists of reusing a model pre-trained on one task to accomplish another, related task. For example, (Vaid et al., 2020) applied a transfer learning method using the pre-trained VGG-16 model. They used a labeled dataset of frontal X-ray images of patients from different countries around the world. The particularity of this dataset lies in the additional information given for each patient, such as location, age and gender. (Das et al., 2020), however, used the extreme version of Inception (Xception) to develop an automated deep transfer learning approach to detect COVID-19 pneumonia in X-ray images. Transfer learning has also been used to classify CT scans of lungs into COVID-19 or NORMAL cases, as presented in (Ahuja et al., 2021), where four pre-trained models are used: ResNet18, ResNet50, ResNet101, and SqueezeNet. A different transfer learning-based method using the DeTraC model is presented in (Abbas et al., 2021). The combination of TL and the DeTraC model makes the proposed method able to deal with irregularities in the image dataset by investigating its class boundaries using a class decomposition mechanism. The authors of (Nayak et al., 2020) used eight pre-trained models, namely AlexNet, VGG-16, GoogleNet, MobileNet-V2, SqueezeNet, ResNet34, ResNet50 and InceptionV3. They evaluated the pre-trained models on X-ray images taken from the covid-chestxray-dataset (Cohen et al., 2020). A similar method has been proposed in (Kumar and Mallik, 2022). After fine-tuning several CNN models, the authors proposed to train another deep neural network on the outputs of these models to enhance the performance.

To deal with the lack of large labeled datasets, "generative models" have been widely used to generate new images from the existing ones. Many strategies have been applied, such as flipping the image horizontally or vertically and zooming in or out. For example, (Loey et al., 2020) proposed a model with two components: the first performs data augmentation using common techniques together with a Conditional Generative Adversarial Network (CGAN); the second is a deep TL model formed of five models, namely AlexNet, VGG-16, VGG-19, GoogleNet and ResNet50. All of these models are fine-tuned on a COVID-19 CT image dataset. Another data-augmentation-based method using X-ray and CT chest images has been proposed in (Bargshady et al., 2022). It consists of coupling GANs with a trained, semi-supervised CycleGAN. Inception V3 is then fine-tuned to detect COVID-19.

3 CONTRIBUTION OF THE PAPER

A transfer learning-based technique is applied in

this paper to detect COVID-19 virus using labeled

datasets of X-ray images. To avoid training a deep

CNN from scratch on a limited labeled dataset, we

propose in this paper to carry out a transfer learning

technique using five pre-trained models and acquired

data only to fine-tune them. This is very useful when

the data is abound for an auxiliary domain, but very

limited labeled data is available for the domain of ex-

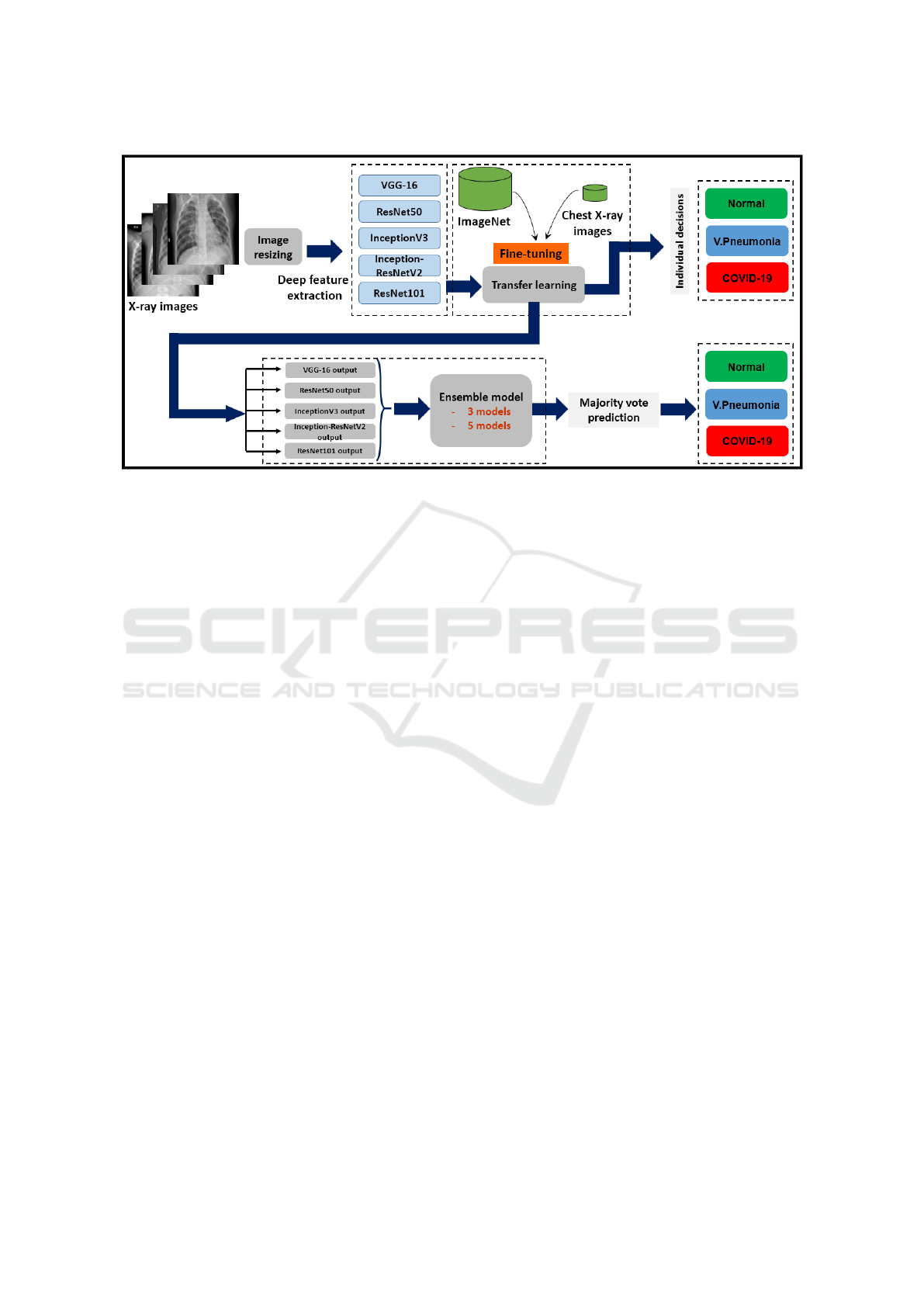

periment. Figure 1 presents our proposal overview.

We opted for the following pre-trained models:

VGG-16, ResNet50, InceptionV3, ResNet101 and

Inception-ResNetV2. This choice is based on the di-

versity of these models, the difference of their archi-

tecture as well as their structure. A comparative study

is then conducted between these models in terms of

training accuracy, loss accuracy, validation accuracy

and validation loss during the training stage. A con-

fusion matrix is then generated after the classifica-

tion of test samples. Other performance measures

are computed to show the efficiency of each model

(e.g. recall, precision, F-score). The difference be-

tween the applied models can be useful in our sec-

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

356

Figure 1: Overview of the different steps of our proposal.

ond step where their outputs will be combined using

an ensemble learning technique (also called ensemble

model) using the majority vote strategy. This combi-

nation enhances the classification performance of the

non-learned samples compared to the obtained rates

using each pre-trained model separately.

4 THE PROPOSED METHOD

4.1 Pre-Trained Models

In this Section, we present the architecture of the five

models that we used in our TL system. These mod-

els are pre-trained on ImageNet database (Krizhevsky

et al., 2012).

• VGG-16: (Simonyan and Zisserman, 2014) is

trained on the very large ImageNet dataset which

has over 14 million images and 1000 classes. It

contains 16 layers including 13 convolutional lay-

ers, 3 dense layers and 5 Max Pooling layers.

Each convolutional layer is 3×3 layer with a stride

size of 1 and the same padding. The pooling lay-

ers of VGG-16 are all 2×2 pooling layers with a

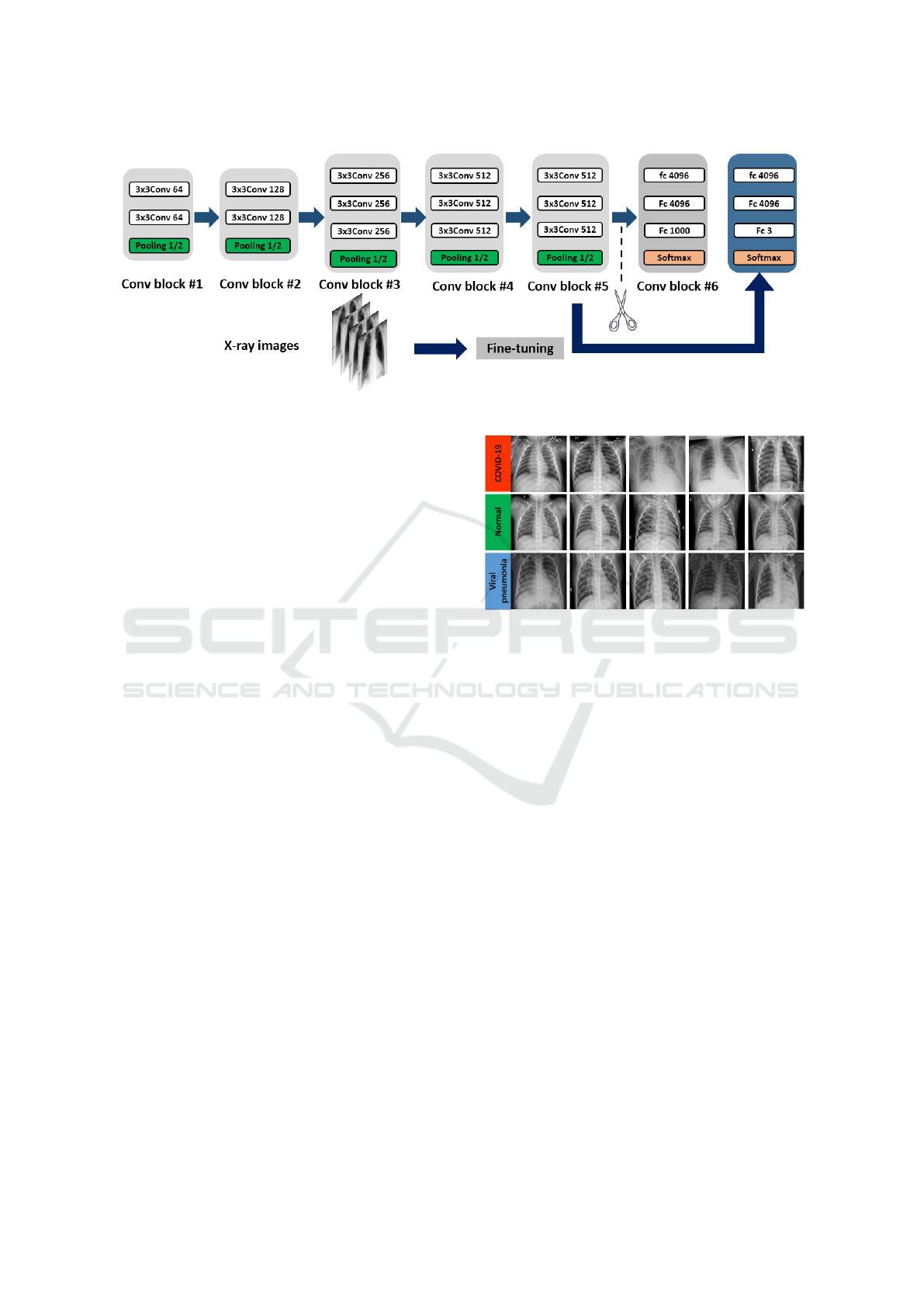

stride size of 2. Figure 2 presents modified VGG-

16 architecture as an example of TL.

• ResNet50: a variant of the ResNet model, pre-trained on the ImageNet dataset, which has 48 convolution layers along with 1 max pooling layer and 1 average pooling layer. It requires 3.8 × 10^9 floating-point operations (He et al., 2016a).

• ResNet101: Another variant of the ResNet deep neural network series, trained on more than a million images from the ImageNet database (Dai et al., 2016). It consists of 101 deep layers with identity connections between them.

• Inception-ResNetV2: A CNN that builds on the

Inception family of architectures (Szegedy et al.,

2017). Its architecture combines the Inception ar-

chitecture with residual connections. This CNN

contains 164 deep layers trained on ImageNet

dataset and is able to classify images into 1000

classes.

• InceptionV3: presented by Google, it is the third version of the Inception family of deep convolutional architectures. Its 42 deep layers include convolution, average pooling, max pooling, concatenation, dropout and fully connected layers, followed by a softmax activation function (Szegedy et al., 2016). The input size of this model is different from that of the other models (299 × 299 × 3 instead of 224 × 224 × 3).
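The layer description given for VGG-16 in the list above can be checked with a short shape trace. The following is a minimal sketch, assuming a 224 × 224 × 3 input and the standard grouping of the 13 convolutional layers into five blocks (2, 2, 3, 3, 3), which the text does not spell out; the names `VGG16_BLOCKS` and `trace_shapes` are illustrative only.

```python
# Sketch: trace feature-map sizes through the VGG-16 convolutional base.
# Assumes "same"-padded 3x3 convolutions (stride 1) and 2x2 max pooling (stride 2).

# (number of conv layers, output channels) for the five blocks (assumed grouping)
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def trace_shapes(height=224, width=224):
    """Return the (height, width, channels) shape after each block's pooling layer."""
    shapes = []
    for _n_convs, channels in VGG16_BLOCKS:
        # "Same" padding with stride 1 keeps height and width unchanged,
        # so only the pooling layer (stride 2) halves the spatial size.
        height, width = height // 2, width // 2
        shapes.append((height, width, channels))
    return shapes


shapes = trace_shapes()
n_conv_layers = sum(n for n, _ in VGG16_BLOCKS)
print(n_conv_layers, len(VGG16_BLOCKS))  # 13 5
print(shapes[-1])                        # (7, 7, 512)
```

Under these assumptions, the convolutional base turns a 224 × 224 image into a 7 × 7 × 512 feature map, which is what a new classification head would receive.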

4.2 Transfer Learning

We aim to transfer the knowledge acquired by the CNN models pre-trained on the ImageNet dataset to our specific task. The issue that needs to be addressed is the strong dependence of these models on the initial dataset, whereas our chest X-ray images are different. Consequently, the generalization of the network will be poor, since the features extracted from the large source dataset are inadequate to represent our target images (to feed a classifier or softmax function). The solution consists of fine-tuning the pre-trained models on our chest X-ray image dataset, which is very small compared to ImageNet. In other words, the pre-trained CNN structures are updated to suit our classification task. This strategy is generally much faster than the traditional training of a CNN model from scratch with randomly initialized weights. For example, using VGG-16, the total number of parameters of the modified network is 14,789,955. The number of trainable parameters is 75,267, which is very small compared to the 14,714,688 non-trainable parameters.

Figure 2: The modified VGG-16 network architecture.
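The VGG-16 parameter counts quoted in this section can be reproduced with simple arithmetic. The sketch below is only a plausible reading: it assumes the frozen part is the 13-layer convolutional base and that the new head is a flatten step followed by a single 3-way dense layer on the 7 × 7 × 512 feature map. The paper does not describe the head in these terms; the assumption is made here because it matches the reported numbers exactly.

```python
# Sketch: reproduce the VGG-16 parameter counts quoted in the text.
# Assumption (not stated in the paper): the trainable head is Flatten + one
# 3-way dense layer on top of the frozen 7x7x512 convolutional output.

# (input channels, output channels) of the 13 convolutional layers
CONV_LAYERS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]


def conv_params(c_in, c_out, kernel=3):
    """Weights plus biases of one kernel x kernel convolutional layer."""
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


frozen = sum(conv_params(c_in, c_out) for c_in, c_out in CONV_LAYERS)
trainable = dense_params(7 * 7 * 512, 3)

print(frozen)              # 14714688
print(trainable)           # 75267
print(frozen + trainable)  # 14789955
```

Only about 0.5% of the weights are updated under this reading, which is consistent with the claim that fine-tuning is much cheaper than training from scratch.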

4.3 Deep Ensemble Learning

To enhance the classification performance, we exploit the different architectures of the five pre-trained models and fuse their outputs to make a global decision. Thus, we propose to apply a majority voting strategy as an ensemble learning stage. We use two combinations, of five and of three models respectively, based on the outputs of the last epoch (30) of the 10th training fold. The obtained results are presented and discussed in detail in the following section.
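The majority voting stage can be illustrated with a few lines of code. This is a minimal sketch rather than the authors' implementation: the function name `majority_vote` and the sample labels are hypothetical, and the tie-breaking rule (smallest class label wins) is an assumption, since the text does not say how ties are resolved. Ties can occur, for example, when five models split 2-2-1 over three classes.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model class labels into one label per sample.

    predictions: list of lists, one inner list of labels per model.
    Ties are broken in favour of the smallest class label (an assumption).
    """
    n_samples = len(predictions[0])
    if any(len(p) != n_samples for p in predictions):
        raise ValueError("every model must predict the same number of samples")
    fused = []
    for votes in zip(*predictions):
        counts = Counter(votes)
        best = max(counts.values())
        fused.append(min(label for label, c in counts.items() if c == best))
    return fused


# Hypothetical labels from three models for four samples
# (0 = COVID-19, 1 = Healthy, 2 = viral pneumonia).
model_a = [0, 1, 2, 1]
model_b = [0, 2, 2, 0]
model_c = [1, 1, 2, 2]
print(majority_vote([model_a, model_b, model_c]))  # [0, 1, 2, 0]
```

The last sample shows the tie case: the three models disagree completely, so the fused label falls back to the smallest class index. A different tie rule, such as trusting the single most accurate model, would be an equally reasonable choice.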

5 EXPERIMENTAL RESULTS

All the experiments were performed on a 64-bit Windows 7 operating system using the TensorFlow/Keras framework in Python. Our proposal was implemented in Google Colaboratory notebooks. The results obtained by each pre-trained model and by the deep ensemble model strategy are presented in the following.

5.1 Database Description

COVID19 Radiography Database (Rahman, 2020): contains 3616 COVID-19 positive cases along with 10,192 Healthy, 6012 Lung Opacity (Non-COVID lung infection), and 1345 Viral Pneumonia images. In this work, we carry out a 3-class classification (COVID-19, Healthy and Viral pneumonia). Some images from this database are shown in Figure 3.

Figure 3: X-ray images from COVID19 Radiography Database (Rahman, 2020).

5.2 Method Performance

The 10-fold cross-validation setting is applied using the 4th version of the COVID19 Radiography Database, which is randomly split into training and test datasets. The initial learning rate is 0.0003 and the loss function is the cross-entropy loss. The models are trained for 30 epochs with a batch size of 32.
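To make the evaluation protocol concrete, the sketch below shows one way a random 10-fold split could be generated. It is an illustration under stated assumptions: the helper `k_fold_indices`, the seed, the sample count of 105 and the `CONFIG` dictionary are all hypothetical, and the split is not stratified by class, which the paper may or may not have done.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most 1.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Training settings reported in the text, gathered as a plain dictionary.
CONFIG = {"learning_rate": 0.0003, "epochs": 30, "batch_size": 32, "loss": "cross-entropy"}

# Hypothetical dataset size, used only to show the fold sizes.
for fold, (train, test) in enumerate(k_fold_indices(n_samples=105), start=1):
    print(fold, len(train), len(test))
```

Each sample lands in the test part of exactly one fold, so every image is used for testing once and for training nine times.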

In the following, we show the training performance (accuracy and loss) as well as the validation performance of the three best models, namely Inception-ResNetV2, VGG-16 and InceptionV3. The performance during folds 1, 6 and 10 is displayed in detail in Figure 7. These curves show that, using the VGG-16 architecture, the highest training accuracy is 98.85% at epoch 7, whereas the highest validation accuracy is 99.65% at epoch 24. The loss is 0.0324 and 0.024 at epoch 30 of training and validation, respectively. All the reported results are taken from fold 10.


In contrast, Inception-ResNetV2 achieved a higher training accuracy than VGG-16, reaching 99.46% at epoch 29. Its highest validation accuracy is 100%. The training and validation losses are 0.026 and 0.005, respectively (see Figure 8). Although the initial loss value of Inception-ResNetV2 is very high compared to that of VGG-16 (1.00 vs 0.2), these values show that Inception-ResNetV2 is more efficient than VGG-16 during the training and validation stages over all the epochs of the 10th fold. InceptionV3 is the third best model among the five models (see Figure 9).

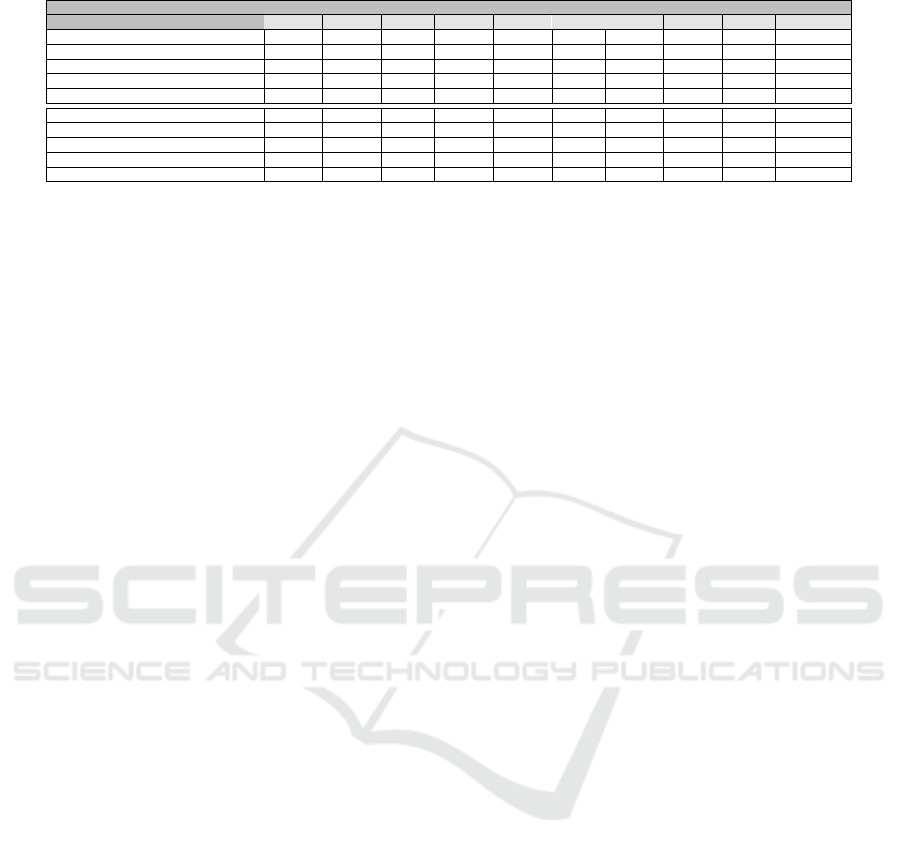

More details about the performance measures of

the best three models as well as the two remaining

ones (ResNet50 and ResNet101) are shown in Table

1. According to the displayed values of precision, re-

call, and F-score, ResNet101 is slightly better than

ResNet50. This performance can be clearly seen in

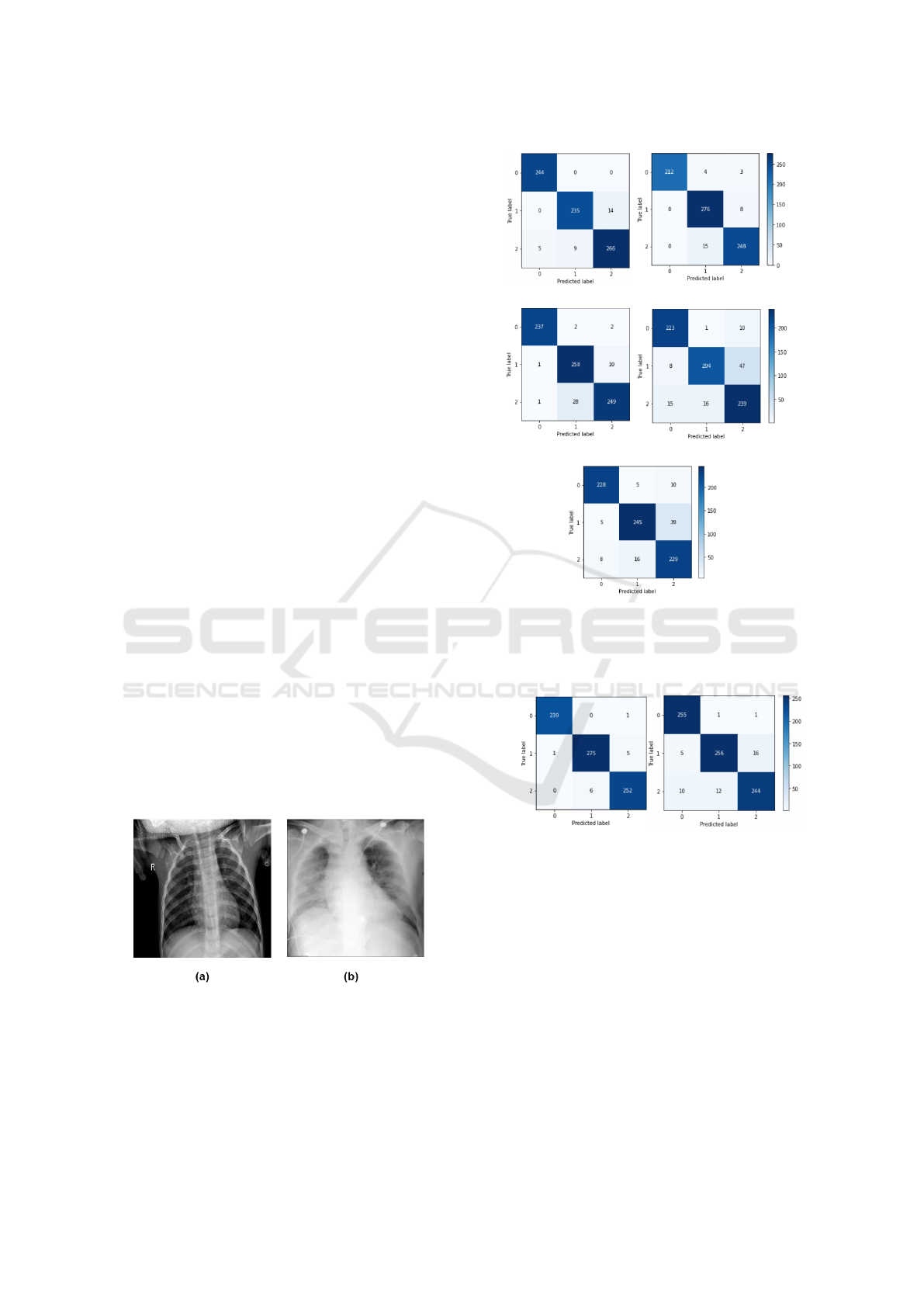

the confusion matrix of these models. Figure 5 shows

that ResNet101 has less false classifications. From

the same Figure, we can also notice that false classi-

fied samples of VGG-16 and Inception-ResNetV2 are

very limited compared to the two previous models.

This explains the very high rates registered as recall,

precision and F-score. Figure 4 presents two chal-

lenging samples of false positive and false negative

cases.
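The per-class precision, recall and F-score discussed here all follow from a confusion matrix. The following sketch shows the computation for a 3-class problem. The matrix values are hypothetical and not taken from the paper, and the convention that rows are true classes and columns are predicted classes is an assumption about how the matrices are laid out.

```python
def per_class_metrics(confusion):
    """Compute (precision, recall, F-score) for each class.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    metrics = []
    for c in range(n):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(n))  # column sum
        actual = sum(confusion[c])                          # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f_score = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f_score))
    return metrics


def accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Hypothetical counts (0 = COVID-19, 1 = Healthy, 2 = viral pneumonia).
confusion = [
    [48, 1, 1],
    [2, 45, 3],
    [0, 4, 46],
]
for label, (p, r, f) in enumerate(per_class_metrics(confusion)):
    print(label, round(p, 2), round(r, 2), round(f, 2))
print(round(accuracy(confusion), 3))
```

Precision penalises false positives of a class (its column), while recall penalises false negatives (its row), which is why a model can score differently on the two for the same class.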

The use of ensemble learning, however, further enhances the performance, as shown in Table 1 for the combinations of the outputs of three and of five models. Using the three-model combination (VGG-16+ResNet50+ResNet101), we achieve a precision, recall and F-score of 1.00 on the COVID-19 class. Compared to recent state-of-the-art methods, our proposed method achieves a higher classification rate on the test set, namely 0.98 with this three-model ensemble. According to Table 1, the majority vote over all five models reaches an accuracy of 0.94.

Figure 4: (a): False positive case, (b): false negative case.

5.3 Discussions

The experimental study shows that TL is an effective deep learning technique for detecting COVID-19 from X-ray images.

Figure 5: Confusion matrices of the pre-trained models: (a) VGG-16, (b) Inception-ResNetV2, (c) InceptionV3, (d) ResNet50, (e) ResNet101. Label 0 refers to the COVID-19 class, label 1 to the Healthy class, and label 2 to the viral pneumonia (VP) class.

Figure 6: Confusion matrices of the majority vote prediction using (a) all five models and (b) the following three models: VGG-16, ResNet50 and ResNet101.

Figure 7: Training and validation accuracy / loss using VGG-16. Panels: (a) fold 1 accuracy, (b) fold 6 accuracy, (c) fold 10 accuracy, (d) fold 1 loss, (e) fold 6 loss, (f) fold 10 loss.

Figure 8: Training and validation accuracy / loss using Inception-ResNetV2. Panels: (a) fold 1 accuracy, (b) fold 6 accuracy, (c) fold 10 accuracy, (d) fold 1 loss, (e) fold 6 loss, (f) fold 10 loss.

Figure 9: Training and validation accuracy / loss using InceptionV3. Panels: (a) fold 1 accuracy, (b) fold 6 accuracy, (c) fold 10 accuracy, (d) fold 1 loss, (e) fold 6 loss, (f) fold 10 loss.

Table 1: Performance of 5 fine-tuned CNNs and ensemble learning with X-ray images of COVID-19, Healthy and VP cases (P: precision, R: recall, F: F-score; accuracy is measured on the test set).

| Model | P COVID | P Healthy | P VP | R COVID | R Healthy | R VP | F COVID | F Healthy | F VP | Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|
| VGG-16 | 0.98 | 0.96 | 0.95 | 1.00 | 0.94 | 0.95 | 0.99 | 0.95 | 0.95 | 0.96 |
| ResNet50 | 0.91 | 0.92 | 0.81 | 0.95 | 0.79 | 0.89 | 0.93 | 0.85 | 0.84 | 0.88 |
| ResNet101 | 0.95 | 0.92 | 0.82 | 0.94 | 0.85 | 0.91 | 0.94 | 0.88 | 0.86 | 0.90 |
| Inception-ResNetV2 | 1.00 | 0.94 | 0.96 | 0.97 | 0.97 | 0.94 | 0.98 | 0.95 | 0.95 | 0.96 |
| InceptionV3 | 0.99 | 0.90 | 0.95 | 0.98 | 0.96 | 0.90 | 0.99 | 0.93 | 0.92 | 0.95 |
| All five models | 0.94 | 0.95 | 0.93 | 0.99 | 0.92 | 0.93 | 0.97 | 0.94 | 0.93 | 0.94 |
| VGG-16+ResNet50+ResNet101 | 1.00 | 0.98 | 0.98 | 1.00 | 0.98 | 0.98 | 1.00 | 0.98 | 0.98 | 0.98 |
| Pham et al. (Pham, 2021) | / | / | / | / | / | / | / | / | / | 0.96 |
| Cavallo et al. (Cavallo et al., 2020) | / | / | / | / | / | / | / | / | / | 0.91 |
| Rajpal et al. (Rajpal et al., 2020) | 0.99 | 0.91 | 0.94 | 0.96 | 0.96 | 0.92 | 0.97 | 0.93 | 0.93 | 0.94 |

The obtained results show that the ensemble models enhance the classification performance of the fine-tuned CNNs. This paper evaluates five pre-trained models that give promising results, competitive with those of state-of-the-art methods. The TL strategy using the best combination of models outperforms the other methods and gives an accuracy of 0.98 on the test images. The high performance achieved is explained by the fact that TL is suitable here, since the first CNN layers learn low-level features. These features are mostly invariant from one classification task to another (e.g. edges). Fine-tuning then provides features specific to the target domain, such as COVID-19 detection. It is worth noting that the proposed method can be improved using a class weighting algorithm to deal with the data imbalance issue, which is a common challenge in medical image diagnosis. This is an important perspective, since in the majority of medical datasets healthy cases form the majority class compared to positive cases.
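The class weighting idea mentioned as a possible improvement can be sketched as follows. This is not part of the reported method. The example assumes the common "balanced" heuristic, weight = n_samples / (n_classes × class_count), and uses the class sizes listed in the database description; whether all of these images were used in the experiments is not stated, so the resulting weights are illustrative only. The function name `balanced_class_weights` is hypothetical.

```python
def balanced_class_weights(counts):
    """Weight each class by n_samples / (n_classes * class_count)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Class sizes of the three classes considered, as listed in Section 5.1.
counts = {"COVID-19": 3616, "Healthy": 10192, "Viral pneumonia": 1345}
weights = balanced_class_weights(counts)
for label, w in weights.items():
    print(f"{label}: {w:.3f}")
```

With such weights, errors on the rare viral pneumonia class would count several times more in the loss than errors on the dominant Healthy class, so each class contributes equally to the total weighted sample count.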

6 CONCLUSION

Since the epidemic is still spreading fast, the proposed method seems to be a good solution for early diagnosis of the virus. We fine-tuned five pre-trained CNN models on our COVID-19 dataset of X-ray images. High classification performance has been achieved, especially with VGG-16 and Inception-ResNetV2. Slightly lower performance has been achieved using the other models. This transfer learning technique considerably reduces the training cost compared to learning from scratch, which is a costly technique. To exploit the different features extracted by each model, we proposed to combine their outputs to reach a global decision through a majority voting strategy. This strategy further enhances the performance of the proposed transfer learning-based method. In future work, we will investigate the application of graph neural networks along with CNNs to improve the performance and reduce the computation time. Also, visualisation using the Grad-CAM technique can be used to highlight the attention map of the classification.

ACKNOWLEDGEMENTS

The authors would like to thank the Agency for Re-

search Results Valuation and Technological Develop-

ment, Algeria (DGRSDT).

REFERENCES

Abbas, A., Abdelsamea, M. M., and Gaber, M. M. (2021).

Classification of covid-19 in chest x-ray images using

detrac deep convolutional neural network. Applied In-

telligence, 51(2):854–864.

Ahuja, S., Panigrahi, B. K., Dey, N., Rajinikanth, V., and

Gandhi, T. K. (2021). Deep transfer learning-based

automated detection of covid-19 from lung ct scan

slices. Applied Intelligence, 51(1):571–585.

Ali, H. A., Hariri, W., Zghal, N. S., and Aissa, D. B. (2022).

A comparison of machine learning methods for best

accuracy covid-19 diagnosis using chest x-ray images.

In 2022 IEEE 9th International Conference on Sci-

ences of Electronics, Technologies of Information and

Telecommunications (SETIT), pages 349–355. IEEE.

Altan, A. and Karasu, S. (2020). Recognition of covid-19

disease from x-ray images by hybrid model consist-

ing of 2d curvelet transform, chaotic salp swarm algo-

rithm and deep learning technique. Chaos, Solitons &

Fractals, 140:110071.

Bargshady, G., Zhou, X., Barua, P. D., Gururajan, R., Li, Y.,

and Acharya, U. R. (2022). Application of cyclegan

and transfer learning techniques for automated detec-

tion of covid-19 using x-ray images. Pattern Recogni-

tion Letters, 153:67–74.

Cavallo, A. U., Troisi, J., Forcina, M., Mari, P., Forte, V.,

Sperandio, M., Pagano, S., Cavallo, P., Floris, R., and

Geraci, F. (2020). Texture analysis in the evaluation

of covid-19 pneumonia in chest x-ray images: a proof

of concept study.

Chollet, F. (2017). Xception: Deep learning with depthwise

separable convolutions. In Proceedings of the IEEE

conference on computer vision and pattern recogni-

tion, pages 1251–1258.

Cohen, J. P., Morrison, P., Dao, L., Roth, K., Duong, T. Q.,

and Ghassemi, M. (2020). Covid-19 image data col-

lection: Prospective predictions are the future. arXiv

preprint arXiv:2006.11988.

Dai, J., Li, Y., He, K., and Sun, J. (2016). R-fcn: Object de-

tection via region-based fully convolutional networks.

arXiv preprint arXiv:1605.06409.

Das, N. N., Kumar, N., Kaur, M., Kumar, V., and Singh,

D. (2020). Automated deep transfer learning-based

approach for detection of covid-19 infection in chest

x-rays. Irbm.

Hariri, W. and Narin, A. (2021). Deep neural networks

for covid-19 detection and diagnosis using images and


acoustic-based techniques: a recent review. Soft com-

puting, 25(24):15345–15362.

He, K., Zhang, X., Ren, S., and Sun, J. (2016a). Deep resid-

ual learning for image recognition. In Proceedings of

the IEEE conference on computer vision and pattern

recognition, pages 770–778.

He, K., Zhang, X., Ren, S., and Sun, J. (2016b). Iden-

tity mappings in deep residual networks. In Euro-

pean conference on computer vision, pages 630–645.

Springer.

Huang, M.-L. and Liao, Y.-C. (2022). A lightweight cnn-

based network on covid-19 detection using x-ray and

ct images. Computers in Biology and Medicine, page

105604.

Ismael, A. M. and Şengür, A. (2021). Deep learning

approaches for covid-19 detection based on chest

x-ray images. Expert Systems with Applications,

164:114054.

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). Im-

agenet classification with deep convolutional neural

networks. Advances in neural information processing

systems, 25:1097–1105.

Kumar, S. and Mallik, A. (2022). Covid-19 detection from

chest x-rays using trained output based transfer learn-

ing approach. Neural Processing Letters, pages 1–24.

Loey, M., Manogaran, G., and Khalifa, N. E. M. (2020). A

deep transfer learning model with classical data aug-

mentation and cgan to detect covid-19 from chest ct

radiography digital images. Neural Computing and

Applications, pages 1–13.

Luz, E., Silva, P., Silva, R., Silva, L., Guimarães, J.,

Miozzo, G., Moreira, G., and Menotti, D. (2022). To-

wards an effective and efficient deep learning model

for covid-19 patterns detection in x-ray images. Re-

search on Biomedical Engineering, 38(1):149–162.

Nayak, S. R., Nayak, D. R., Sinha, U., Arora, V., and Pa-

chori, R. B. (2020). Application of deep learning tech-

niques for detection of covid-19 cases using chest x-

ray images: A comprehensive study. Biomedical Sig-

nal Processing and Control, 64:102365.

Pham, T. D. (2021). Classification of covid-19 chest x-

rays with deep learning: new models or fine tuning?

Health Information Science and Systems, 9(1):1–11.

Rahimzadeh, M. and Attar, A. (2020). A new modified deep

convolutional neural network for detecting covid-19

from x-ray images. arXiv preprint arXiv:2004.08052.

Rahman, T. (2020). COVID-19 Radiography Database.

https://www.kaggle.com/tawsifurrahman/covid19-radiography-database/. [Online; accessed 05-April-2021].

Rajpal, S., Agarwal, M., Rajpal, A., Lakhyani, N., and Ku-

mar, N. (2020). Cov-elm classifier: an extreme learn-

ing machine based identification of covid-19 using

chest x-ray images. arXiv preprint arXiv:2007.08637.

Simonyan, K. and Zisserman, A. (2014). Very deep con-

volutional networks for large-scale image recognition.

arXiv preprint arXiv:1409.1556.

Szegedy, C., Ioffe, S., Vanhoucke, V., and Alemi, A.

(2017). Inception-v4, inception-resnet and the impact

of residual connections on learning. In Proceedings

of the AAAI Conference on Artificial Intelligence, vol-

ume 31.

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wo-

jna, Z. (2016). Rethinking the inception architecture

for computer vision. In Proceedings of the IEEE con-

ference on computer vision and pattern recognition,

pages 2818–2826.

Turkoglu, M. (2021). Covidetectionet: Covid-19 diagnosis

system based on x-ray images using features selected

from pre-learned deep features ensemble. Applied In-

telligence, 51(3):1213–1226.

Vaid, S., Kalantar, R., and Bhandari, M. (2020). Deep

learning covid-19 detection bias: accuracy through

artificial intelligence. International Orthopaedics,

44:1539–1542.

Zaabi, M., Smaoui, N., Derbel, H., and Hariri, W. (2020).

Alzheimer’s disease detection using convolutional

neural networks and transfer learning based methods.

In 2020 17th International Multi-Conference on Sys-

tems, Signals & Devices (SSD), pages 939–943. IEEE.

Zheng, C., Deng, X., Fu, Q., Zhou, Q., Feng, J., Ma, H.,

Liu, W., and Wang, X. (2020). Deep learning-based

detection for covid-19 from chest ct using weak label.

MedRxiv.
