Targeted Adversarial Attacks on Deep Reinforcement Learning Policies via Model Checking

Dennis Gross¹, Thiago D. Simão¹, Nils Jansen¹ and Guillermo A. Pérez²

¹ Institute for Computing and Information Sciences, Radboud University, Toernooiveld 212, 6525 EC Nijmegen, The Netherlands

² Department of Computer Science, University of Antwerp – Flanders Make, Middelheimlaan 1, 2020 Antwerpen, Belgium

Keywords:

Adversarial Reinforcement Learning, Model Checking.

Abstract:

Deep Reinforcement Learning (DRL) agents are susceptible to adversarial noise in their observations that can

mislead their policies and decrease their performance. However, an adversary may be interested not only

in decreasing the reward, but also in modifying specific temporal logic properties of the policy. This paper

presents a metric that measures the exact impact of adversarial attacks against such properties. We use this

metric to craft optimal adversarial attacks. Furthermore, we introduce a model checking method that allows

us to verify the robustness of RL policies against adversarial attacks. Our empirical analysis confirms (1) the

quality of our metric to craft adversarial attacks against temporal logic properties, and (2) that we are able to

concisely assess a system’s robustness against attacks.

1 INTRODUCTION

Deep reinforcement learning (DRL) has changed how

we build agents for sequential decision-making prob-

lems (Mnih et al., 2015). It has triggered applica-

tions in critical domains like energy and transporta-

tion (Farazi et al., 2021; Nakabi and Toivanen, 2021).

An RL agent learns a near-optimal policy (based on

a given objective) by making observations and gain-

ing rewards through interacting with the environ-

ment (Sutton and Barto, 2018). Despite the success

of RL, potential security risks limit its usage in real-

life applications. The so-called adversarial attacks in-

troduce noise into the observations and mislead the

RL decision-making to drop the cumulative reward,

which may lead to unsafe behaviour (Huang et al.,

2017; Amodei et al., 2016).

Generally, rewards lack the expressiveness to en-

code complex safety requirements (Vamplew et al.,

2022; Hasanbeig et al., 2020). Therefore, for an ad-

versary, capturing how much the cumulative reward

is reduced may be too generic for attacks targeting

specific safety requirements. For instance, an RL

taxi agent may be optimized to transport passengers

to their destinations. With the already existing ad-

versarial attacks, the attacker can prevent the agent

from transporting the passenger. However, the at-

tacker cannot create controlled adversarial attacks that

may increase the probability that the passenger never

gets picked up or that the passenger gets picked up

but never arrives at its destination. More generally, current adversarial attacks are not able to control temporal logic properties.

This paper aims to combine adversarial RL with

rigorous model checking (Baier and Katoen, 2008),

which allows the adversary to create so-called prop-

erty impact attacks (PIAs) that can influence specific

RL policy properties. These PIAs are not limited by

properties that can be expressed by rewards (Hahn

et al., 2019; Hasanbeig et al., 2020; Vamplew et al.,

2022), but support a broader range of properties that

can be expressed by probabilistic computation tree

logic (PCTL; Hansson and Jonsson, 1994). Our ex-

periments show that for PCTL properties, it is pos-

sible to create targeted adversarial attacks that influ-

ence them specifically. Furthermore, the combination

of model checking and adversarial RL allows us to

verify via permissive policies (Dräger et al., 2015)

how vulnerable trained policies are against PIAs. Our

main contributions are: a metric to measure the im-

pact of adversarial attacks on a broad range of RL

policy properties, a property impact attack (PIA) to

target specific properties of a trained RL policy, and

a method that checks the robustness of RL policies

against adversarial attacks.

The empirical analysis shows that the method to attack RL policies can effectively modify PCTL properties. Furthermore, the results support the theoretical claim that it is possible to model check the robustness of RL policies against property impact attacks.

Gross, D., Simão, T., Jansen, N. and Pérez, G. Targeted Adversarial Attacks on Deep Reinforcement Learning Policies via Model Checking. DOI: 10.5220/0011693200003393. In Proceedings of the 15th International Conference on Agents and Artificial Intelligence (ICAART 2023) - Volume 3, pages 501-508. ISBN: 978-989-758-623-1; ISSN: 2184-433X. Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0).

The paper is structured in the following way. First,

we summarize the related work and position our pa-

per in it. Second, we explain the fundamentals of our

technique. Then, we present the adversarial attack

setting, define our property impact attack, and show

a way to model check policy robustness against such

adversarial attacks. After that, we evaluate our meth-

ods in multiple environments.

2 RELATED WORK

We now summarize the related work and position our

paper in between adversarial RL and model checking.

There exist a variety of adversarial attack methods

to attack RL policies with the goal of dropping their

total expected reward (Chan et al., 2020; Lin et al.,

2017b; Ilahi et al., 2022; Lin et al., 2017a; Clark et al.,

2018; Yu and Sun, 2022). The first proposed adversar-

ial attack on DRL policies (Huang et al., 2017) uses

a modified version of the fast gradient sign method

(FGSM), developed by Goodfellow et al. (2015), to

force the RL policy to make malicious decisions (for

more details, see Section 3.2). However, none of the previous work lets the attacker target temporal logic properties of RL policies. Chan et al. (2020) create

more effective attacks that modify only one feature

(if the smallest sliding window is used) of the agent’s

observation by empirically measuring the impact of

each feature on the reward. We build upon this idea to

measure the feature impact on temporal logic proper-

ties.

3 BACKGROUND

In this section, we introduce the necessary founda-

tions.

3.1 Probabilistic Systems

A probability distribution over a set $X$ is a function $\mu : X \to [0, 1]$ with $\sum_{x \in X} \mu(x) = 1$. The set of all distributions over $X$ is denoted by $Distr(X)$.

Definition 3.1 (Markov Decision Process). A Markov decision process (MDP) is a tuple $M = (S, s_0, Act, T, rew)$ where $S$ is a finite, nonempty set of states, $s_0 \in S$ is an initial state, $Act$ is a finite set of actions, and $T : S \times Act \to Distr(S)$ is a probability transition function. We employ a factored state representation $S \subseteq \mathbb{Z}^n$, where each state $s \in \mathbb{Z}^n$ is an n-dimensional vector of features $(f_1, f_2, \ldots, f_n)$ such that $f_i \in \mathbb{Z}$ for $1 \le i \le n$. We define $rew : S \times Act \to \mathbb{R}$ as a reward function.

The available actions in $s \in S$ are $Act(s) = \{a \in Act \mid T(s, a) \neq \bot\}$. An MDP with only one action per state ($\forall s \in S : |Act(s)| = 1$) is a discrete-time Markov chain (DTMC). Note that features do not necessarily have to have the same domain size. We define $F$ as the set of all features $f_i$ in state $s \in S$.

A path of an MDP $M$ is an (in)finite sequence $\tau = s_0 \xrightarrow{a_0, r_0} s_1 \xrightarrow{a_1, r_1} \ldots$, where $s_i \in S$, $a_i \in Act(s_i)$, $r_i := rew(s_i, a_i)$, and $T(s_i, a_i)(s_{i+1}) \neq 0$. A state $s'$ is reachable from state $s$ if there exists a path $\tau$ from state $s$ to state $s'$. We say a state $s$ is reachable if $s$ is reachable from $s_0$.

Definition 3.2 (Policy). A memoryless deterministic policy for an MDP $M = (S, s_0, Act, T, rew)$ is a function $\pi : S \to Act$ that maps a state $s \in S$ to an action $a \in Act(s)$.

Applying a policy π to an MDP M yields an

induced DTMC, denoted as D, where all non-

determinism is resolved. We say a state s is reachable

by a policy π if s is reachable in the DTMC induced

by π. Λ is the set of all possible memoryless policies.
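The construction of an induced DTMC can be illustrated with a small sketch. The toy MDP `T`, the function name `induced_dtmc`, and the constant policy are assumptions made for illustration only; they are not part of COOL-MC or of the paper's benchmarks.

```python
from collections import deque

# Hypothetical toy MDP: T[state][action] = {next_state: probability}.
T = {
    0: {"a": {1: 0.5, 2: 0.5}, "b": {3: 1.0}},
    1: {"a": {1: 1.0}},
    2: {"a": {0: 1.0}, "b": {2: 1.0}},
    3: {"a": {3: 1.0}},
}

def induced_dtmc(T, s0, policy):
    """Return {state: {next_state: prob}} for the states reachable under `policy`."""
    dtmc, frontier = {}, deque([s0])
    while frontier:
        s = frontier.popleft()
        if s in dtmc:
            continue
        a = policy(s)                      # the policy resolves the nondeterminism
        dtmc[s] = dict(T[s][a])            # keep only the chosen action's distribution
        frontier.extend(t for t in dtmc[s] if t not in dtmc)
    return dtmc

policy = lambda s: "a"                     # memoryless deterministic policy
D = induced_dtmc(T, 0, policy)
```

In this toy example state 3 is never expanded, because the policy never selects action `b` in state 0; only states reachable by the policy end up in the DTMC.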

To analyze the properties of an induced DTMC, it

is necessary to specify the properties via a specifica-

tion language like probabilistic computation tree logic

PCTL (Hansson and Jonsson, 1994).

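Definition 3.3 (PCTL Syntax). Let $AP$ be a set of atomic propositions. The following grammar defines a state formula: $\Phi ::= true \mid a \mid \Phi_1 \wedge \Phi_2 \mid \neg\Phi \mid P_{\bowtie p}(\varphi) \mid P^{max}_{\bowtie p}(\varphi) \mid P^{min}_{\bowtie p}(\varphi)$ where $a \in AP$, $\bowtie\, \in \{<, >, \le, \ge\}$, $p \in [0, 1]$ is a threshold, and $\varphi$ is a path formula which is formed according to the following grammar: $\varphi ::= X\,\Phi \mid \varphi_1\, U\, \varphi_2 \mid \varphi_1\, F_{\theta t}\, \varphi_2 \mid G\,\Phi$ with $\theta = \{<, \le\}$.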

PCTL formulae are interpreted over the states of an induced DTMC. In a slight abuse of notation, we use PCTL state formulas to denote probability values. That is, we sometimes write $P_{\bowtie p}(\varphi)$ where we omit the threshold $p$. For instance, $P(F^{\le 100}\ collision)$ denotes the reachability probability of eventually running into a collision within the first 100 time steps.
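To make a step-bounded reachability query such as the one above concrete, the following sketch computes it on a tiny DTMC by repeated one-step backups. The two-state chain and the function name `bounded_reachability` are hypothetical; tools such as PRISM or Storm use their own, far more capable engines.

```python
def bounded_reachability(dtmc, s0, targets, k):
    """P(F^{<=k} target) from s0 in a DTMC given as {state: {next_state: prob}}."""
    # prob[s] = probability of reaching a target from s within the steps considered so far
    prob = {s: 1.0 if s in targets else 0.0 for s in dtmc}
    for _ in range(k):
        prob = {
            s: 1.0 if s in targets
            else sum(p * prob[t] for t, p in dtmc[s].items())
            for s in dtmc
        }
    return prob[s0]

# Hypothetical chain: from "safe", a collision happens with probability 0.1 per step.
dtmc = {"safe": {"safe": 0.9, "collision": 0.1}, "collision": {"collision": 1.0}}
p = bounded_reachability(dtmc, "safe", {"collision"}, k=2)  # 1 - 0.9**2 = 0.19
```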

There is a variety of model checking algorithms

for verifying PCTL properties (Courcoubetis and

Yannakakis, 1988, 1995), and PRISM and Storm offer

efficient and mature tool support (Kwiatkowska et al.,

2011; Hensel et al., 2022). COOL-MC (Gross et al.,

2022a) allows model checking of a trained RL policy

against a PCTL property and MDP. The tool builds the

induced DTMC on the fly via an incremental building

process (Cassez et al., 2005; David et al., 2015).

3.2 Adversarial Attacks on DRL

Policies

The standard learning goal for RL is to find a policy $\pi$ in an MDP such that $\pi$ maximizes the expected accumulated discounted rewards, that is, $E[\sum_{t=0}^{L} \gamma^t R_t]$, where $\gamma$ with $0 \le \gamma \le 1$ is the discount factor, $R_t$ is the reward at time $t$, and $L$ is the total number of steps.
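For a single finite episode, the quantity inside the expectation can be computed directly. This is a minimal sketch; the reward sequence and the discount factor below are made-up values.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**t * R_t over one finite episode."""
    return sum(gamma**t * r for t, r in enumerate(rewards))

g = discounted_return([1.0, 0.0, 2.0], gamma=0.5)  # 1 + 0 + 0.25 * 2 = 1.5
```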

DRL uses neural networks to train policies. A neu-

ral network is a function parameterized by weights θ.

In DRL, the policy π is encoded using a neural net-

work which can be trained by minimizing a sequence

of loss functions J(θ, s, a) (Mnih et al., 2013).

An adversary is a malicious actor that seeks to

harm or undermine the performance of an RL system.

For instance, an adversary may try to decrease the ex-

pected discounted reward by attacking the RL policy

via adversarial attacks.

Definition 3.4 (Adversarial Attack). An adversarial attack $\delta : S \to S$ maps a state $s$ to an adversarial state $s_{adv}$ (see Figure 1). A successful adversarial attack at a given state $s$ leads to a misjudgment of the RL policy ($\pi(s) \neq \pi(\delta(s))$), and an attack is ε-bounded if $\|\delta(s) - s\|_\infty \le \varepsilon$ with the $l_\infty$-norm defined as $\|\delta(s) - s\|_\infty = \max_{\delta_i \in \delta} |\delta_i - s_i|$.

Recall that states are n-dimensional vectors of features from $\mathbb{Z}^n$. Executing a policy $\pi$ on an MDP $M$ and attacking the policy $\pi$ at each reachable state $s$ by $\delta$ yields an adversarial-induced DTMC $D_{adv}$. There exist a variety of adversarial attack methods to create adversarial attacks $\delta$ (Ilahi et al., 2022; Gleave et al., 2020; Lee et al., 2020, 2021; Rakhsha et al., 2020; Carlini and Wagner, 2017).

Our work builds upon the FGSM attack and the work of Chan et al. (2020). Given the weights $\theta$ of the neural network policy $\pi$ and a loss $J(\theta, s, a)$ with state $s$ and $a := \pi(s)$, the FGSM, denoted as $\delta_{FGSM} : S \to S$, adds noise to the state $s$ whose direction is the same as the gradient of the loss $J(\theta, s, a)$ w.r.t. the state $s$ (Huang et al., 2017), and the noise is scaled by $\varepsilon \in \mathbb{Z}$ (see Equation (1)). Note that we are dealing with integer ε-values because our states are comprised of integer features. We specify the $\nabla$-operator as a vector differential operator. Depending on the gradient, we either add or subtract ε.

$$\delta_{FGSM}(s) = s + \varepsilon \cdot \mathrm{sign}(\nabla_s J(\theta, s, a)) \quad (1)$$

A FGSM for feature $f_i$, denoted as $\delta^{(f_i)}_{FGSM}(s)$, modifies only the feature $f_i$ in state $s$.

$$\delta^{(f_i)}_{FGSM}(s) = s + \varepsilon \cdot \mathrm{sign}(\nabla_{s_{f_i}} J(\theta, s, a)) \quad (2)$$
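Equations (1) and (2) can be sketched in a few lines of NumPy. The sketch below makes two assumptions that are not taken from the paper: the policy is a hypothetical linear softmax model (weights `W`, bias `b`) standing in for a deep network, and the loss is the cross-entropy between the policy output and its own chosen action, so that the gradient can be written in closed form.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def loss_gradient(W, b, s):
    """Gradient of the cross-entropy loss J(theta, s, a) w.r.t. s, with a := pi(s)."""
    probs = softmax(W @ s + b)
    a = int(np.argmax(probs))              # action chosen by the (stand-in) policy
    one_hot = np.eye(len(probs))[a]
    return W.T @ (probs - one_hot)

def fgsm(W, b, s, eps):
    """Equation (1): shift every feature by +/- eps (0 where the gradient is 0)."""
    return s + eps * np.sign(loss_gradient(W, b, s)).astype(int)

def fgsm_feature(W, b, s, eps, i):
    """Equation (2): shift only feature i."""
    s_adv = s.copy()
    s_adv[i] += eps * int(np.sign(loss_gradient(W, b, s)[i]))
    return s_adv

# Hypothetical linear policy with 2 actions over 3 integer features.
W = np.array([[1.0, -2.0, 0.5], [-1.0, 1.0, 0.0]])
b = np.zeros(2)
s = np.array([2, 1, 0])
s_adv = fgsm(W, b, s, eps=1)
s_adv_f0 = fgsm_feature(W, b, s, eps=1, i=0)
```

Because ε is an integer and `np.sign` returns values in {-1, 0, 1}, the perturbed state stays in $\mathbb{Z}^n$ and the attack is ε-bounded in the $l_\infty$-norm by construction.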

Figure 1: RL (a) vs. adversarial RL (b). (a) RL policy interaction with the environment. (b) An adversary manipulates with $\delta$ the observations of the RL policy $\pi$ and its interaction with the environment: the policy receives $s_{adv} = \delta(s)$ and answers with $\pi(s_{adv})$.

We denote the set of all possible ε-bounded attacks at state $s$ via feature $f_i$, including $\delta^{(f_i)}(s) = s$ for no attack, as $\Delta^{(f_i)}_{\varepsilon}(s)$.
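Since features are integers, the adversarial states produced by this set of single-feature attacks can be enumerated explicitly. The following sketch assumes states are tuples of integers and, for simplicity, ignores the domain bounds of each feature (a real implementation would have to discard perturbed states outside $S$); the function name is hypothetical.

```python
def bounded_feature_attacks(s, i, eps):
    """All integer states that differ from s only in feature i, by at most eps."""
    attacks = []
    for shift in range(-eps, eps + 1):     # shift == 0 is the "no attack" case
        s_adv = list(s)
        s_adv[i] += shift
        attacks.append(tuple(s_adv))
    return attacks

attacks = bounded_feature_attacks((3, 7, 0), i=1, eps=2)
```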

Chan et al. (2020) first generate for all features a static reward impact (SRI) map by attacking each feature (in the case of the smallest sliding window) with the FGSM attack to measure its impact (the drop of the expected reward) offline. A feature $f_i$ with a more significant impact indicates that changing this feature $f_i$ via $\delta^{(f_i)}_{FGSM}$ will influence the expected discounted reward more than via another feature $f_k$ with a less significant impact. For each feature $f_i$, this is done multiple times $N$, where each iteration executes the RL policy on the environment and attacks at every state the feature $f_i$ via the FGSM attack $\delta^{(f_i)}_{FGSM}$. After calculating the SRI, they use all the SRI values of the features $f_i$ to select the most vulnerable feature to attack the deployed RL policy.

Adversarial training retrains the already trained

RL policy by using adversarial attacks during train-

ing to increase the RL policy robustness (Pinto et al.,

2017; Liu et al., 2022; Korkmaz, 2021b).

4 METHODOLOGY

We introduce the general adversarial setting, the prop-

erty impact (PI), the property impact attack (PIA), and

bounded robustness.

4.1 Attack Setting

We first describe our method’s adversarial attack set-

ting (adversary’s goals, knowledge, and capabilities).

Goal. The adversary aims to modify the property value of the target RL policy $\pi$ in its environment (modeled as an MDP). For instance, the adversary may try to increase the probability that the agent collides with another object (i.e., $\max_{\delta} P(F\ collision)$ in the adversarial-induced DTMC).

Knowledge. The adversary knows the weights $\theta$ of the trained policy (for the FGSM attack) and the MDP of the environment. Note that we can replace the FGSM attack with any other attack. Therefore, knowing the weights of the trained policy should not be a strict constraint.

Capabilities. The adversary can attack the trained

policy π at every visited state s during the incremen-

tal building process for the model checking of the

adversarial-induced DTMC and after the RL policy

is deployed.

4.2 Property Impact Attack (PIA)

Combining adversarial RL with model checking allows us to craft adversarial property impact attacks (PIAs) that target temporal logic properties. Our work builds upon the research of Chan et al. (2020). Instead of calculating SRIs (see Section 3.2), we calculate property impacts (PIs). The PI-values are used to select the feature $f_i$ with the most significant PI-value to attack the deployed RL policy in its environment ($f_i = \mathrm{argmax}_{f_i \in F}\, PI(\pi, P(\varphi), f_i, \varepsilon)$).

Definition 4.1 (Property Impact). The property impact $PI : \Lambda \times \Theta \times F \times \mathbb{Q} \to \mathbb{Q}$ quantifies the impact of an adversarial attack $\delta^{(f_i)}_{FGSM} \in \Delta^{(f_i)}_{\varepsilon}(s)$ via a feature $f_i \in F$ on a given RL policy property $P(\varphi) \in \Theta$, with $\Theta$ as the set of all possible PCTL properties for the MDP $M$.

A feature $f_i$ with a more significant PI-value indicates that changing this feature $f_i$ via $\delta^{(f_i)}_{FGSM}$ will influence the property (expressed by the property query $P(\varphi)$) more than via another feature $f_k$ with a less significant PI-value.

We now explain how to calculate the PI-value for a given MDP $M$, policy $\pi$, PCTL property query $P(\varphi)$, feature $f_i$, and FGSM attack $\delta^{(f_i)}_{FGSM}$. First, we incrementally build the induced DTMC of the policy $\pi$ and the MDP $M$ to check the property value $r$ of the policy $\pi$. We do this by using COOL-MC and inputting the MDP $M$, policy $\pi$, and PCTL property query $P(\varphi)$ into it to calculate the probability $r$. Second, we incrementally build the adversarial-induced DTMC $D_{adv}$ of the policy $\pi$ and the MDP $M$ with the ε-bounded FGSM attack $\delta^{(f_i)}_{FGSM}$ to check its probability $r_{adv}$. To support the building and model checking of adversarial-induced DTMCs via adv property result, we extend the incremental building process of COOL-MC in the following way. For every state $s$ reachable by the policy $\pi$, the policy $\pi$ is queried for an action $a = \pi(s)$. In the underlying MDP, only states $s'$ that may be reached via that action $a$ are expanded. The resulting model is fully probabilistic, as no action choices are left open. It is, in fact, the Markov chain induced by the original MDP $M$ and the policy $\pi$. An adversary can now inject adversarial attacks $\delta(s)$ at every state $s$ that gets passed to the policy $\pi$ during the incremental building process (Zhang et al., 2020). This may lead to the effect that the policy $\pi$ makes a misjudgment ($\pi(s) \neq \pi(\delta(s))$) and results in an adversarial-induced DTMC $D_{adv}$. This allows us to model check the adversarial-induced DTMC $D_{adv}$ to obtain the adversarial probability $r_{adv}$. Finally, we measure the property impact value as the absolute difference between $r$ and $r_{adv}$, that is, $|r - r_{adv}|$.
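The procedure above can be sketched end to end on a toy model. Everything in this block is an illustrative assumption rather than the COOL-MC or Storm implementation: the three-state MDP `T`, the threshold policy, the fixed shift used as the attack (in place of a feature-wise FGSM attack), and the plain value iteration that stands in for a model checker on an unbounded reachability query.

```python
from collections import deque

def build_dtmc(T, s0, policy, attack=lambda s: s):
    """Incrementally build the (adversarial-)induced DTMC: the policy sees attack(s)."""
    dtmc, frontier = {}, deque([s0])
    while frontier:
        s = frontier.popleft()
        if s in dtmc:
            continue
        a = policy(attack(s))              # action chosen on the perturbed observation
        dtmc[s] = dict(T[s][a])            # the true state s is the one that evolves
        frontier.extend(dtmc[s])           # expand only successors of the chosen action
    return dtmc

def reach_prob(dtmc, s0, targets, iters=1000):
    """Approximate P(F target) from s0 by value iteration."""
    p = {s: float(s in targets) for s in dtmc}
    for _ in range(iters):
        p = {s: 1.0 if s in targets else sum(q * p[t] for t, q in dtmc[s].items())
             for s in dtmc}
    return p[s0]

def property_impact(T, s0, policy, attack, targets):
    r = reach_prob(build_dtmc(T, s0, policy), s0, targets)
    r_adv = reach_prob(build_dtmc(T, s0, policy, attack), s0, targets)
    return abs(r - r_adv)

# Hypothetical MDP with one integer feature; state (2,) plays the role of "collision".
T = {
    (0,): {"go": {(1,): 1.0}, "stay": {(0,): 1.0}},
    (1,): {"go": {(1,): 0.5, (2,): 0.5}, "stay": {(1,): 1.0}},
    (2,): {"go": {(2,): 1.0}, "stay": {(2,): 1.0}},
}
policy = lambda s: "go" if s[0] <= 0 else "stay"
attack = lambda s: (s[0] - 1,)             # 1-bounded shift of the single feature
impact = property_impact(T, (0,), policy, attack, targets={(2,)})
```

Without the attack the policy stops in state `(1,)` and never reaches the target, while under the attack it keeps choosing `go`, so the impact in this toy example is close to 1. Repeating the computation per feature and taking the feature with the largest value corresponds to the argmax selection described in this section.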

4.3 RL Policy Robustness

A trained RL policy $\pi$ can be robust against an ε-bounded PIA that attacks a temporal logic property $P(\varphi)$ via feature $f_i$ ($PI(\pi, P(\varphi), f_i, \varepsilon) = 0$). However, this is a weak statement about robustness since there still exist multiple adversarial attacks $\delta^{(f_i)}(s)$ with $\|\delta^{(f_i)}(s) - s\|_\infty \le \varepsilon$ generated by other attacks, such as the method from Carlini and Wagner (2017).

Given a fixed policy $\pi$ and a set of attacks $\Delta^{(f_i)}_{\varepsilon}(s)$, we generate a permissive policy $\Omega$. Applying this permissive policy $\Omega$ in the original MDP $M$ generates a new MDP $M'$ that describes all potential behavior of the agent under the attack.

Definition 4.2 (Behavior under attack). A permissive policy $\Omega : S \to 2^{Act}$ selects, at every state $s$, all actions that can be queried via $\Delta^{(f_i)}_{\varepsilon}(s)$. We consider $\Omega(s) = \bigcup_{\delta^{(f_i)}_i \in \Delta^{(f_i)}_{\varepsilon}(s)} \pi(\delta^{(f_i)}_i(s))$ with $\pi(\delta^{(f_i)}_i(s)) \in Act(s)$.

Applying a permissive policy to an MDP does not necessarily resolve all nondeterminism, since more than one action may be selected in some state(s). The induced model is then (again) an MDP. We are able to apply model checking, which typically results in best- and worst-case probability bounds $P^{max}(\varphi)$ and $P^{min}(\varphi)$ for a given property query $P(\varphi)$.
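A minimal sketch of the permissive policy and of the resulting probability bounds is given below. The toy MDP, the threshold policy, the attack set (all shifts of the single feature by at most 1), and the value iteration used instead of a model checker are all assumptions for illustration; the sketch also assumes that every action returned by the policy is available in the attacked state.

```python
def permissive_policy(policy, attacks):
    """Omega(s): every action the policy can be pushed to by some allowed attack."""
    return lambda s: {policy(s_adv) for s_adv in attacks(s)}

def reach_bounds(T, states, omega, targets, iters=1000):
    """Value iteration for P_min(F target) and P_max(F target) on the induced MDP."""
    lo = {s: float(s in targets) for s in states}
    hi = dict(lo)
    for _ in range(iters):
        new_lo, new_hi = {}, {}
        for s in states:
            if s in targets:
                new_lo[s] = new_hi[s] = 1.0
                continue
            vals_lo = [sum(q * lo[t] for t, q in T[s][a].items()) for a in omega(s)]
            vals_hi = [sum(q * hi[t] for t, q in T[s][a].items()) for a in omega(s)]
            new_lo[s], new_hi[s] = min(vals_lo), max(vals_hi)
        lo, hi = new_lo, new_hi
    return lo, hi

# Hypothetical MDP with one integer feature; state (2,) is the target.
T = {
    (0,): {"go": {(1,): 1.0}, "stay": {(0,): 1.0}},
    (1,): {"go": {(1,): 0.5, (2,): 0.5}, "stay": {(1,): 1.0}},
    (2,): {"go": {(2,): 1.0}, "stay": {(2,): 1.0}},
}
policy = lambda s: "go" if s[0] <= 0 else "stay"
attacks = lambda s: [(s[0] + k,) for k in (-1, 0, 1)]   # includes "no attack"
omega = permissive_policy(policy, attacks)
lo, hi = reach_bounds(T, list(T), omega, targets={(2,)})
p_min, p_max = lo[(0,)], hi[(0,)]
```

In this example the adversary can force both `go` and `stay` in states `(0,)` and `(1,)`, so the bounds from the initial state span the whole interval from 0 to (numerically) 1.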

We use the induced MDP to model check the robustness (see Definition 4.3) against every possible ε-bounded attack $\delta^{(f_i)}(s)$ for a trained RL policy $\pi$ in its environment and bound the robustness to an α-threshold (property impacts below a given threshold α may be acceptable).

Definition 4.3 (Bounded robustness). A policy $\pi$ is called robustly bounded by ε and α (ε, α-robust) for property query $P(\varphi)$ if it holds that

$$|P^{*}(\varphi) - P(\varphi)| \le \alpha \quad (3)$$

for all possible ε-bounded adversarial attacks $\Delta^{(f_i)}_{\varepsilon}(s)$ at every state $s$ reachable by the permissive policy $\Omega$. We define $\alpha \in \mathbb{Q}$ as a threshold (in this paper, we focus on probabilities and therefore $\alpha \in [0, 1]$). $|P^{*}(\varphi) - P(\varphi)|$ stands for the largest impact of a possible attack. We denote $P^{*}$ as $P^{max}$ or $P^{min}$ depending on whether the attack should increase ($P^{max}$) or decrease ($P^{min}$) the probability.
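As a worked instance of Equation (3), consider the values reported in Table 2 for the Taxi environment, the done feature, ε = 1, and the deadlock1 query ($P^{max} = 0.44$, $P = 0.0$):

```latex
|P^{max}(\varphi) - P(\varphi)| = |0.44 - 0.0| = 0.44
```

Under these numbers the policy would be (1, α)-robust for deadlock1 via the done feature only for thresholds α ≥ 0.44. For deadlock2 in the same setting ($P^{max} = 0.00$, $P = 0.0$), the largest impact is 0, so the bound holds for every α ∈ [0, 1].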

By model checking the robustness of the trained RL policies (as described in Section 4.3), it is possible to extract for each state $s$ the adversarial attack $\delta^{(f_i)}$ that is part of the most impactful attack and use the corresponding attack as soon as the state gets observed by the adversary. This is possible because the underlying model of the induced MDP allows the extraction of the state and action pairs $(s, a_{adv})$ that lead to the wanted property value modification ($a_{adv} := \pi(\delta^{(f_i)}(s))$).

5 EXPERIMENTS

We now evaluate our PI method, property impact at-

tack (PIA), and robustness checker method in multi-

ple environments. The experiments are performed by

initially training the RL policies using the deep Q-

learning algorithm (Mnih et al., 2013), then using the

trained policies to answer our research questions.

5.1 Setup

We now explain the setup of our experiments.

Environments. We used our proposed methods

in a variety of environments (see Figure 2, Figure 4,

and Table 2). We use the Freeway (for a fair com-

parison between the SRI and PI method) and the Taxi

environment. Additionally, we use the environments

Collision Avoidance, Stock Market, and Smart Grid

(see Gross et al. (2022b) for more details).

Freeway is an action video game for the Atari

2600. A player controls a chicken (up, down, no op-

eration) who must run across a highway filled with

traffic to get to the other side. Every time the chicken

gets across the highway, it earns a reward of one. An

episode ends if the chicken gets hit by a car or reaches

the other side. Each state is an image of the game’s

state. Note that we use an abstraction of the original

game (see Figure 2).

Figure 2: A comparison between the Atari 2600 Freeway game (top) and our abstracted version (bottom).

In the Taxi environment, the agent must pick up passengers and transport them to their destination without running out of fuel. The environment ends when the agent completes a predefined number of jobs or runs out of fuel. The maximal fuel level for the taxi is ten and the maximal number of jobs is two. The agent can refuel at the gas station cell (x = 1, y = 2). The problem is formalized as follows:

$$S = \{(x, y, Xloc, Yloc, Xdest, Ydest, fuel, pass, jobs, done), \ldots\}$$

$$Act = \{north, east, south, west, pick\ up, drop\}$$

$$penalty = \begin{cases} 0, & \text{if passenger successfully dropped,} \\ 21, & \text{if passenger got picked up,} \\ 21 + |x - Xdest| + |y - Ydest|, & \text{if passenger on board,} \\ 21 + |x - Xloc| + |y - Yloc|, & \text{if passenger not on board,} \\ 1500, & \text{if not at gas station and out of fuel.} \end{cases}$$

Properties. Table 1 presents the property queries and the values that the trained RL policies achieve for these properties without an attack (=).

Trained RL Policies. We trained in a standard

way using COOL-MC (Gross et al., 2022a).

Technical Setup. All experiments were executed

on an NVIDIA GeForce GTX 1060 Mobile GPU,

16 GB RAM, and an Intel(R) Core(TM) i7-8750H

CPU @ 2.20GHz x 12. For model checking, we use

Storm 1.7.1 (dev).

5.2 Analysis

We now answer our research questions.

Table 1: PCTL property queries, with their labels and the original result of the property query without an attack (=). Fr stands for Freeway, Coll. stands for Collision Avoidance, SG for Smart Grid, and SM for Stock Market.

| Env. | Label | PCTL Property Query (P(φ)) | = |
|---|---|---|---|
| Fr | crossed | P(F crossed) | 1.0 |
| Taxi | deadlock1 | P(fuel ≥ 4 U (G(jobs = 1 ∧ ¬empty ∧ pass))) | 0.0 |
| | deadlock2 | P(fuel ≥ 4 U (G(jobs = 1 ∧ ¬empty ∧ ¬pass))) | 0.0 |
| | station empty | P((((jobs=0 U x=1 ∧ y=2) U (jobs=0 ∧ ¬(x=1 ∧ y=2))) U empty ∧ jobs=0)) | 0.0 |
| | station empty | P(F (empty ∧ jobs = 0) ∧ G¬(x ≠ 1 ∧ y ≠ 2)) | 0.0 |
| | pass empty | P(F (empty ∧ pass)) | 0.0 |
| | pass empty | P(F (empty ∧ ¬pass)) | 0.0 |
| Coll. | collision | P(F^{≤100} collision) | 0.1 |
| SG | blackout | P(F^{≤100} blackout) | 0.2 |
| SM | bankruptcy | P(F bankruptcy) | 0.0 |

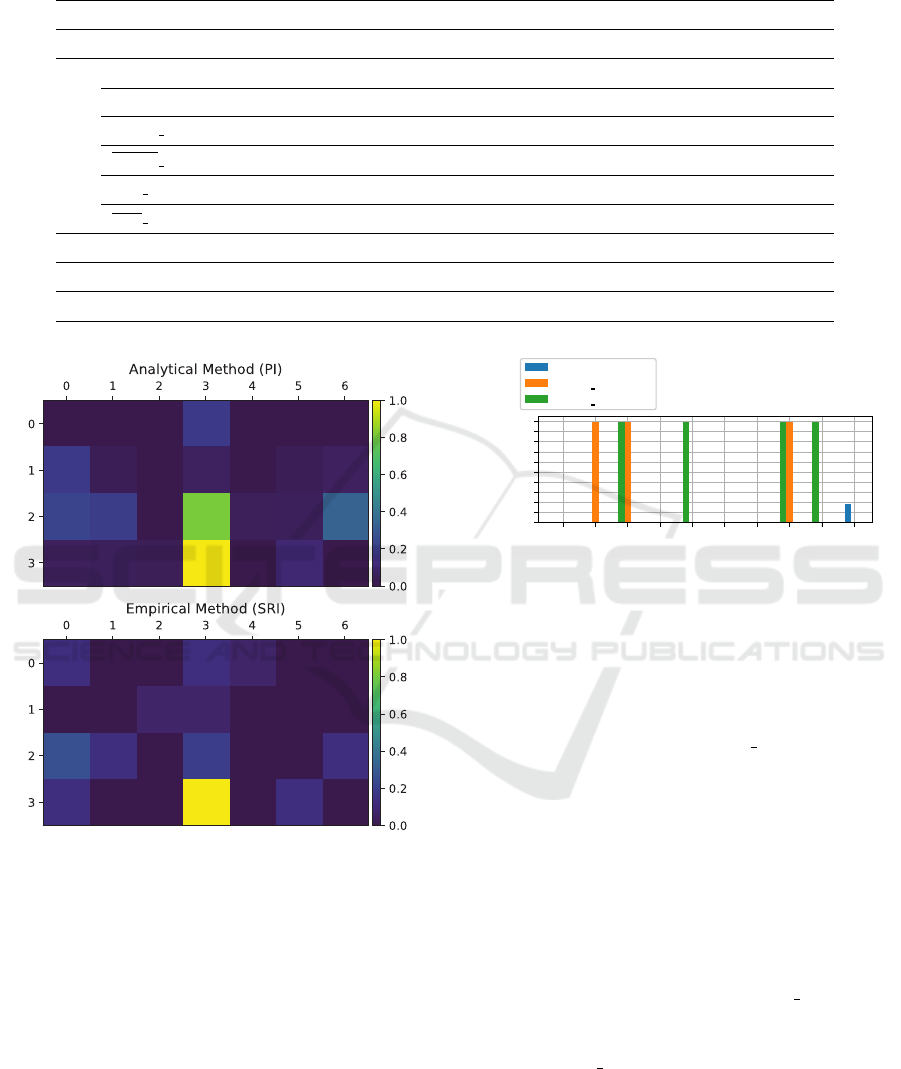

Figure 3: Freeway feature impacts (normalized between 0

and 1) for the PI and SRI method.

Does the PI method have the same behavior as the

related SRI method? We compare the results of our PI

approach to the empirical SRI approach (Chan et al.,

2020) in the Freeway environment using the reward

function and the expected reachability probability of

crossing the street (see Figure 3). We generate both

the SRI and PI maps using a sample size of N = 300

and an ε = 1. The results show that both approaches

yield similar results.

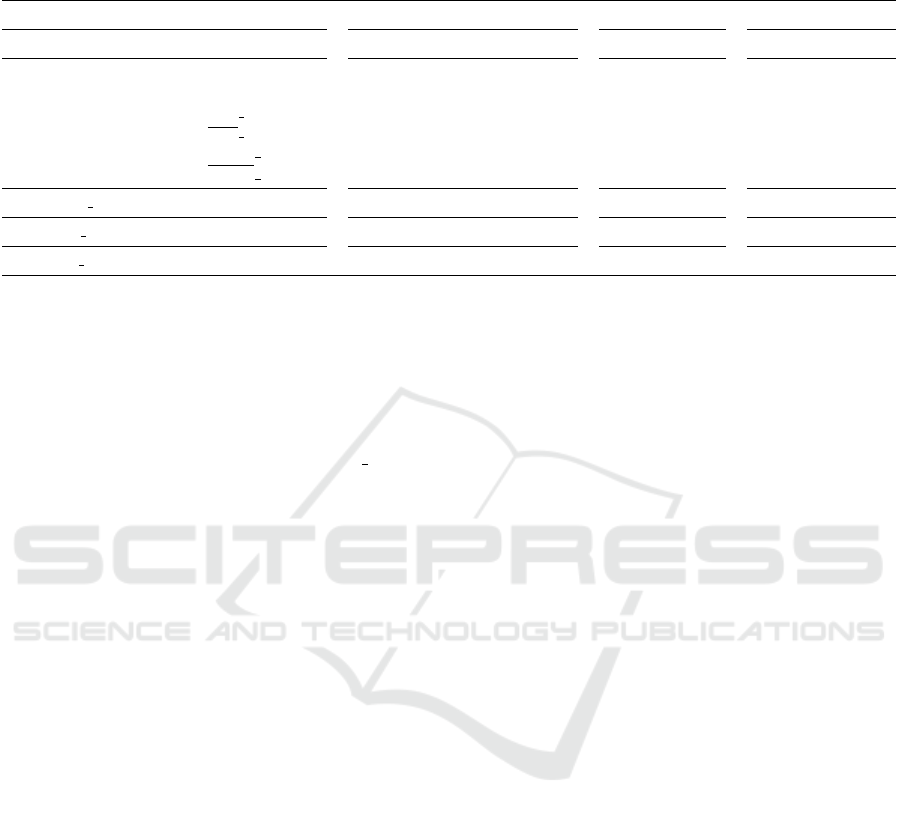

Can the PI method generate different property impacts for different advanced property queries? We now show that PI is suited to measure the property impact for properties that cannot be expressed by rewards, which we call here advanced property queries (see Figure 4). To make the interpretation of advanced properties more straightforward, we focus on the Taxi environment and use the advanced property queries deadlock1 and station empty. Advanced property queries contain, for example, the U-operator (Definition 3.3), which allows the adversary to make sure that certain events happen before other events. Figure 4 shows the property impact of PIAs via each feature for different ε-bounds. By attacking the done feature via a PIA (with ε = 1), it is possible to drive the taxi around without running out of fuel and not finishing jobs while having a passenger on board (deadlock1). Figure 4 also shows that it is possible to let the taxi drive first to the gas station and let it run out of fuel afterwards (station empty). We observe that for different ε-bounds, PIAs have different impacts via features on the temporal logic properties (see station empty in Figure 4).

Figure 4: Taxi environment. This diagram plots different advanced property impacts of different PIAs. The original property values (without an attack) are all zero. (x-axis: Features fuel, x, y, Xloc, Yloc, Xdest, Ydest, pass, jobs, done; y-axis: Impact from 0.0 to 1.0; series: deadlock1, station empty with ε = 1, station empty with ε = 2.)

What are the limitations of PIAs? We now analyze

the limitations of PIAs and compare them with the

FGSM attack (baseline) and the robustness checker.

For each experiment, we ε-bounded all the generated

attacks for a fair comparison.

Table 2: Impact* stands for the optimal adversarial attack impact ($|P^{max} - P|$) via the feature specified in Features, $P^{max}$ for the maximal probability $P^{max}(\varphi)$ with an attack, P for the original probability $P(\varphi)$ (without an attack), Time in seconds, C for Collision Avoidance, SG for Smart Grid, SM for Stock Market, Baseline is a standard FGSM attack on the whole observation. The columns Env. to Property Query describe the setup; RC marks the robustness checker columns.

| Env. | Features | ε | Property Query | P^max (RC) | P (RC) | Impact* (RC) | Time (RC) | Impact (PIA) | Time (PIA) | Impact (Baseline FGSM) | Time (Baseline FGSM) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Taxi | done | 1 | deadlock1 | 0.44 | 0.0 | 0.44 | 9 | 0.19 | 20 | 0.00 | 6 |
| | done | 1 | deadlock2 | 0.00 | 0.0 | 0.00 | 9 | 0.00 | 20 | 0.00 | 6 |
| | fuel | 2 | pass empty | 1.00 | 0.0 | 1.00 | 25 | 0.25 | 20 | 0.00 | 6 |
| | y | 2 | pass empty | 1.00 | 0.0 | 1.00 | 27 | 1.00 | 20 | 1.00 | 6 |
| | x | 1 | station empty | 1.00 | 0.0 | 1.00 | 24 | 1.00 | 6 | 1.00 | 6 |
| | x | 1 | station empty | 1.00 | 0.0 | 1.00 | 30 | 1.00 | 6 | 1.00 | 6 |
| C | obs1 x | 1 | collision | 0.87 | 0.1 | 0.86 | 65 | 0.46 | 213 | 0.87 | 211 |
| SG | non renewable | 1 | blackout | 0.97 | 0.2 | 0.95 | 2 | 0.39 | 2 | 0.98 | 2 |
| SM | sell price | 1 | bankruptcy | 0.81 | 0.0 | 0.81 | 15 | 0.08 | 20 | 0.00 | 4 |

Table 2 shows that PIAs, in comparison to FGSM

attacks, have similar impacts on temporal logic prop-

erties (compare impact columns of PIA and FGSM).

For temporal logic properties where some correct

decision-making is still needed, PIAs perform bet-

ter than the FGSM attack (for instance, pass empty).

However, PIAs do not necessarily create a maximal

impact on the property values like the robustness

checker method (compare PIA impact with Impact*).

After observing the results of the three methods

(PIA, FGSM, robustness checker), we can summa-

rize. By verifying the robustness of the trained RL

policies, the adversary can already extract for each

state the optimal adversarial attack that is part of

the most impactful attack. Since PIAs build induced

DTMCs and the robustness checker induced MDPs,

PIAs are suited for MDPs with more states and tran-

sitions before running out of memory (see Gross et al.,

2022a, for more details about the limitations of model

checking RL policies).

Does adversarial training make trained RL poli-

cies more robust against PIAs? Figure 4 shows that

an adversarial attack (bounded by ε = 1) on fea-

ture done can bring the taxi agent into a deadlock

and lets it drive around after the first job is done

(deadlock1 = 0.19). To protect the RL agent from

this attack, we trained the RL taxi policy over 5000

additional episodes via adversarial training by using

our method PIA on the done feature to make the pol-

icy more robust against this deadlock attack. The ad-

versarial training improves the feature robustness for

the done feature (0) but deteriorates the robustness for

the other features (all other feature PI-values: 1). That

agrees with the observation that adversarially trained

RL policies may be less robust to other types of ad-

versarial attacks (Zhang et al., 2020; Korkmaz, 2021a,

2022).

ACKNOWLEDGEMENTS

This research has been funded by the Dutch NWO

grant NWA.1160.18.238 (PrimaVera); the Flem-

ish interuniversity iBOF “DESCARTES” and FWO

“SAILor” projects (G030020N).

REFERENCES

Amodei, D., Olah, C., Steinhardt, J., Christiano, P. F., Schulman, J., and Mané, D. (2016). Concrete problems in AI safety. CoRR, abs/1606.06565.

Baier, C. and Katoen, J. (2008). Principles of model check-

ing. MIT Press.

Carlini, N. and Wagner, D. A. (2017). Towards evaluat-

ing the robustness of neural networks. In IEEE Sym-

posium on Security and Privacy, pages 39–57. IEEE

Computer Society.

Cassez, F., David, A., Fleury, E., Larsen, K. G., and Lime,

D. (2005). Efficient on-the-fly algorithms for the anal-

ysis of timed games. In CONCUR, pages 66–80.

Springer.

Chan, P. P. K., Wang, Y., and Yeung, D. S. (2020). Adver-

sarial attack against deep reinforcement learning with

static reward impact map. In AsiaCCS, pages 334–

343. ACM.

Clark, G. W., Doran, M. V., and Glisson, W. (2018). A

malicious attack on the machine learning policy of a

robotic system. In TrustCom/BigDataSE, pages 516–

521. IEEE.

Courcoubetis, C. and Yannakakis, M. (1988). Verifying

temporal properties of finite-state probabilistic pro-

grams. In FOCS, pages 338–345. IEEE Computer So-

ciety.

Courcoubetis, C. and Yannakakis, M. (1995). The complex-

ity of probabilistic verification. J. ACM, 42(4):857–

907.

David, A., Jensen, P. G., Larsen, K. G., Mikucionis, M., and

Taankvist, J. H. (2015). Uppaal Stratego. In TACAS,

pages 206–211. Springer.

Dräger, K., Forejt, V., Kwiatkowska, M. Z., Parker, D., and Ujma, M. (2015). Permissive controller synthesis for probabilistic systems. Log. Methods Comput. Sci., 11(2).

Farazi, N. P., Zou, B., Ahamed, T., and Barua, L. (2021).

Deep reinforcement learning in transportation re-

search: A review. Transportation Research Interdisci-

plinary Perspectives, 11:100425.

Gleave, A., Dennis, M., Wild, C., Kant, N., Levine, S.,

and Russell, S. (2020). Adversarial policies: Attack-

ing deep reinforcement learning. In ICLR. OpenRe-

view.net.

Goodfellow, I. J., Shlens, J., and Szegedy, C. (2015). Ex-

plaining and harnessing adversarial examples. In

ICLR.

Gross, D., Jansen, N., Junges, S., and Pérez, G. A. (2022a). COOL-MC: A comprehensive tool for reinforcement learning and model checking. In SETTA. Springer.

Gross, D., Simão, T. D., Jansen, N., and Pérez, G. A. (2022b). Targeted adversarial attacks on deep reinforcement learning policies via model checking. CoRR, abs/2212.05337.

Hahn, E. M., Perez, M., Schewe, S., Somenzi, F., Trivedi,

A., and Wojtczak, D. (2019). Omega-regular objec-

tives in model-free reinforcement learning. In TACAS

(1), pages 395–412. Springer.

Hansson, H. and Jonsson, B. (1994). A logic for reasoning

about time and reliability. Formal Aspects Comput.,

6(5):512–535.

Hasanbeig, M., Kroening, D., and Abate, A. (2020). Deep

reinforcement learning with temporal logics. In FOR-

MATS, pages 1–22. Springer.

Hensel, C., Junges, S., Katoen, J., Quatmann, T., and Volk,

M. (2022). The probabilistic model checker Storm.

Int. J. Softw. Tools Technol. Transf., 24(4):589–610.

Huang, S. H., Papernot, N., Goodfellow, I. J., Duan, Y.,

and Abbeel, P. (2017). Adversarial attacks on neural

network policies. In ICLR. OpenReview.net.

Ilahi, I., Usama, M., Qadir, J., Janjua, M. U., Al-Fuqaha,

A. I., Hoang, D. T., and Niyato, D. (2022). Chal-

lenges and countermeasures for adversarial attacks on

deep reinforcement learning. IEEE Trans. Artif. In-

tell., 3(2):90–109.

Korkmaz, E. (2021a). Adversarial training blocks general-

ization in neural policies. In NeurIPS 2021 Workshop

on Distribution Shifts: Connecting Methods and Ap-

plications.

Korkmaz, E. (2021b). Investigating vulnerabilities of deep

neural policies. In UAI, pages 1661–1670. AUAI

Press.

Korkmaz, E. (2022). Deep reinforcement learning policies

learn shared adversarial features across MDPs. In

AAAI, pages 7229–7238. AAAI Press.

Kwiatkowska, M. Z., Norman, G., and Parker, D. (2011).

PRISM 4.0: Verification of probabilistic real-time sys-

tems. In CAV, pages 585–591. Springer.

Lee, X. Y., Esfandiari, Y., Tan, K. L., and Sarkar, S. (2021).

Query-based targeted action-space adversarial poli-

cies on deep reinforcement learning agents. In ICCPS,

pages 87–97. ACM.

Lee, X. Y., Ghadai, S., Tan, K. L., Hegde, C., and Sarkar, S.

(2020). Spatiotemporally constrained action space at-

tacks on deep reinforcement learning agents. In AAAI,

pages 4577–4584. AAAI Press.

Lin, Y., Hong, Z., Liao, Y., Shih, M., Liu, M., and Sun, M.

(2017a). Tactics of adversarial attack on deep rein-

forcement learning agents. In ICLR. OpenReview.net.

Lin, Y., Liu, M., Sun, M., and Huang, J. (2017b). Detect-

ing adversarial attacks on neural network policies with

visual foresight. CoRR, abs/1710.00814.

Liu, Z., Guo, Z., Cen, Z., Zhang, H., Tan, J., Li, B., and

Zhao, D. (2022). On the robustness of safe rein-

forcement learning under observational perturbations.

CoRR, abs/2205.14691.

Mnih, V., Kavukcuoglu, K., Silver, D., Graves, A.,

Antonoglou, I., Wierstra, D., and Riedmiller, M. A.

(2013). Playing Atari with deep reinforcement learning.

CoRR, abs/1312.5602.

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Ve-

ness, J., Bellemare, M. G., Graves, A., Riedmiller,

M. A., Fidjeland, A., Ostrovski, G., Petersen, S.,

Beattie, C., Sadik, A., Antonoglou, I., King, H., Ku-

maran, D., Wierstra, D., Legg, S., and Hassabis, D.

(2015). Human-level control through deep reinforce-

ment learning. Nat., 518(7540):529–533.

Nakabi, T. A. and Toivanen, P. (2021). Deep reinforcement

learning for energy management in a microgrid with

flexible demand. Sustainable Energy, Grids and Net-

works, 25:100413.

Pinto, L., Davidson, J., Sukthankar, R., and Gupta, A.

(2017). Robust adversarial reinforcement learning. In

ICML, pages 2817–2826. PMLR.

Rakhsha, A., Radanovic, G., Devidze, R., Zhu, X., and

Singla, A. (2020). Policy teaching via environment

poisoning: Training-time adversarial attacks against

reinforcement learning. In ICML, pages 7974–7984.

PMLR.

Sutton, R. S. and Barto, A. G. (2018). Reinforcement learning:

An introduction. MIT Press.

Vamplew, P., Smith, B. J., Källström, J., de Oliveira Ramos,

G., Radulescu, R., Roijers, D. M., Hayes, C. F.,

Heintz, F., Mannion, P., Libin, P. J. K., Dazeley, R.,

and Foale, C. (2022). Scalar reward is not enough:

a response to Silver, Singh, Precup and Sutton (2021).

Auton. Agents Multi Agent Syst., 36(2):41.

Yu, M. and Sun, S. (2022). Natural black-box adversar-

ial examples against deep reinforcement learning. In

AAAI, pages 8936–8944. AAAI Press.

Zhang, H., Chen, H., Xiao, C., Li, B., Liu, M., Bon-

ing, D. S., and Hsieh, C. (2020). Robust deep rein-

forcement learning against adversarial perturbations

on state observations. In NeurIPS, pages 21024–

21037.

ICAART 2023 - 15th International Conference on Agents and Artificial Intelligence
