Development of a Realistic Crowd Simulation Environment for Fine-Grained Validation of People Tracking Methods

Paweł Foszner (1,∗,a), Agnieszka Szczęsna (1,∗,b), Luca Ciampi (3,†,c), Nicola Messina (3,†,d), Adam Cygan (5,§), Bartosz Bizoń (5,§), Michał Cogiel (4,‡,e), Dominik Golba (4,‡,f), Elżbieta Macioszek (2,∗,g) and Michał Staniszewski (1,∗,∗∗,h)

1 Department of Computer Graphics, Vision and Digital Systems, Faculty of Automatic Control, Electronics and Computer Science, Silesian University of Technology, Akademicka 2A, 44-100 Gliwice, Poland

2 Department of Transport Systems, Traffic Engineering and Logistics, Faculty of Transport and Aviation Engineering, Silesian University of Technology, Krasińskiego 8, 40-019 Katowice, Poland

3 Institute of Information Science and Technologies, National Research Council, Via G. Moruzzi 1, 56124 Pisa, Italy

4 Blees sp. z o.o., Zygmunta Starego 24a/10, 44-100 Gliwice, Poland

5 QSystems.pro sp. z o.o., Mochnackiego 34, 41-907 Bytom, Poland

Keywords:

Crowd Simulation, Realism Enhancement, People and Car Simulation, People Tracking, Deep Learning.

Abstract:

Generally, crowd datasets can be collected or generated from real or synthetic sources. Real data is collected using infrastructure-based sensors (such as static cameras or other sensors). The use of simulation tools can significantly reduce the time required to generate scenario-specific crowd datasets, facilitate data-driven research, and subsequently support building functional machine learning models. The main goal of this work was to develop an extension of crowd simulation (named CrowdSim2) and to demonstrate its usability for people-tracking algorithms. The simulator is built on the widely used Unity 3D engine, with particular emphasis on realism in the environment, weather conditions, traffic, and the movement and models of individual agents. Finally, three tracking methods were used to validate the generated dataset: IOU-Tracker, Deep-Sort, and Deep-TAMA.

1 INTRODUCTION

Using real crowd datasets can produce effective and reliable learning models, useful in applications such as object tracking (Cafarelli et al., 2022; Lin et al., 2017), image segmentation (Bolya et al., 2019; Chen et al., 2018), visual object counting (Ciampi et al., 2022c; Avvenuti et al., 2022; Ciampi et al., 2022a), individual activity or violence recognition (Ciampi et al., 2022b; Foszner et al., 2022), crowd anomaly detection and prediction, and broader crowd management and monitoring solutions. However, acquiring real crowd data faces several challenges, including the expensive installation of a sensory infrastructure, data pre-processing costs, and the lack of real datasets that cover particular crowd scenarios. Consequently, simulation tools have been adopted for generating synthetic datasets to overcome the challenges associated with their real counterparts. Simulation tools can significantly reduce the time required to generate scenario-specific crowd datasets, mimic observed crowds in a realistic environment, facilitate data-driven research, and support building functional machine learning models (Khadka et al., 2019; Ciampi et al., 2020) based on the generated data. Simulation offers flexibility in adjusting scenarios and in generating and reproducing datasets with defined requirements.

a https://orcid.org/0000-0001-5491-9096

b https://orcid.org/0000-0002-4354-8258

c https://orcid.org/0000-0002-6985-0439

d https://orcid.org/0000-0003-3011-2487

e https://orcid.org/0000-0002-9776-9654

f https://orcid.org/0000-0002-4542-3547

g https://orcid.org/0000-0002-1345-0022

h https://orcid.org/0000-0001-9659-7451

∗∗ Corresponding author

The main motivation for this work was to implement a more realistic crowd simulation with additional features that can be applied in many modern artificial intelligence approaches (including the evaluation of people tracking algorithms). The proposed crowd simulator has the following advantages:

Foszner, P., Szczęsna, A., Ciampi, L., Messina, N., Cygan, A., Bizoń, B., Cogiel, M., Golba, D., Macioszek, E. and Staniszewski, M.

Development of a Realistic Crowd Simulation Environment for Fine-Grained Validation of People Tracking Methods.

DOI: 10.5220/0011691500003417

In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 1: GRAPP, pages 222-229

ISBN: 978-989-758-634-7; ISSN: 2184-4321

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

Figure 1: Visualization of multiple-pedestrian tracking in images generated with CrowdSim2.

• realism enhancement through motion matching and the generation of people and cars,
• automatic generation of ground truth and detections (Figure 1) in the well-known MOT Challenge format (Dendorfer et al., 2020),
• simulation in 3 different locations (with 3 views each) for people movement and 2 locations for cars,
• 4 realistic weather conditions (sun, fog, rain, and snow) and different times of day,
• many possible applications, including object detection and tracking, and action detection and recognition.

2 RELATED WORKS

Datasets containing visual data are needed to develop detection and tracking methods for objects such as cars and pedestrians. For this purpose, annotations of the tracked objects (e.g., pedestrians), including approximate bounding boxes, are necessary. The Mall dataset (Chen et al., 2012) was collected from a publicly accessible webcam, with ground truth consisting of 60,000 annotated pedestrians. NWPU (Wang et al., 2020) includes approximately 5,000 images and 2,133,375 annotated heads. JHU-CROWD++ (Sindagi et al., 2020) is another crowd dataset, captured in different scenarios and geographical locations and under weather conditions such as fog, haze, snow, and rain; it provides head-level labeling that includes an approximate bounding box. The GTA5 Crowd Counting (GCC) dataset (Wang et al., 2019) is an example of a large-scale synthetic visual dataset (15,212 images, 7,625,843 persons) generated using the well-known video game GTA5 (Grand Theft Auto 5). AGORASET (Courty et al., 2014) is also a synthetic visual dataset for crowd video analysis. For a comprehensive overview of datasets and simulators, see the review articles (Bamaqa et al., 2022; Lemonari et al., 2022; Van Toll and Pettré, 2021; Yang et al., 2020). In (Amirian et al., 2020), the statistical properties of real-world datasets are analyzed. Recent advances in crowd simulation provide a wide range of functionalities for virtual agents, delivering highly realistic, natural virtual crowds.

In this work, simulated data is used to evaluate different tracking algorithms (Staniszewski et al., 2016). We follow the tracking-by-detection concept: detections are available along with the simulated data, and tracking algorithms are then applied to join these detections into tracks. Additionally, instead of whole-person detection, facial recognition could be applied (Pęszor et al., 2016). The first algorithm considered, IOU-tracker, was presented in (Bochinski et al., 2018); it does not use any image information, which keeps the tracking algorithm simple. Thanks to this non-image approach, it uses much less computing power than other trackers. Deep-Sort (Wojke and Bewley, 2018), i.e., Simple Online and Realtime Tracking with a Deep Association Metric (Wojke et al., 2017), is a tracking-by-detection method that extends the SORT algorithm (Bewley et al., 2016) by integrating appearance information based on a deep appearance descriptor. Unlike Deep-Sort and IOU-tracker, the Deep-TAMA method (Yoon et al., 2019), which stands for Deep Temporal Appearance Matching Association, performs tracking together with an evaluation of the results in a single stage. Another SORT-based approach, Observation-Centric SORT (OC-SORT), is a multiple-object tracker built to fix limitations of the Kalman filter in the SORT algorithm. It is an online tracker with improved handling of non-linear motion and improved robustness to occlusion. For broad applicability, the MMTracking framework (Contributors, 2020) was established; it is an open-source video perception toolbox based on PyTorch.
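The central idea of the IOU-tracker, linking detections purely by bounding-box overlap without looking at the image, can be illustrated with the simplified sketch below. It is not the reference implementation of Bochinski et al.: boxes are assumed to be (left, top, width, height) tuples, the parameters here called `sigma_iou` (minimum overlap) and `t_min` (minimum track length) are illustrative, and the detection-confidence thresholds of the published method are omitted for brevity.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, width, height) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def iou_track(frames: List[List[Box]], sigma_iou: float = 0.5,
              t_min: int = 2) -> List[Dict]:
    """Link per-frame detections into tracks using box overlap only.

    Each active track is extended with the detection in the next frame that
    overlaps its last box the most, provided the IoU reaches sigma_iou;
    otherwise the track is closed. Unmatched detections open new tracks, and
    tracks shorter than t_min frames are discarded.
    """
    active: List[Dict] = []
    finished: List[Dict] = []
    for frame_idx, detections in enumerate(frames):
        remaining = list(detections)
        next_active: List[Dict] = []
        for track in active:
            last = track["boxes"][-1]
            best = max(remaining, key=lambda d: iou(last, d), default=None)
            if best is not None and iou(last, best) >= sigma_iou:
                track["boxes"].append(best)
                remaining.remove(best)
                next_active.append(track)
            elif len(track["boxes"]) >= t_min:
                finished.append(track)  # no good match: close the track
        for det in remaining:
            next_active.append({"start_frame": frame_idx, "boxes": [det]})
        active = next_active
    finished.extend(t for t in active if len(t["boxes"]) >= t_min)
    return finished


# Two pedestrians walking to the right for three frames.
frames = [
    [(0, 0, 10, 20), (100, 0, 10, 20)],
    [(2, 0, 10, 20), (102, 0, 10, 20)],
    [(4, 0, 10, 20), (104, 0, 10, 20)],
]
tracks = iou_track(frames)
for t in tracks:
    print(t["start_frame"], t["boxes"])
```

Because only box coordinates are compared, each frame costs a handful of arithmetic operations per track, which is consistent with the low computational demand described above; the price is that a missed detection immediately breaks the track.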


Figure 2: Example views from CrowdSim2: a junction and a park with moving pedestrians and cars, along with examples of the snow and rain weather conditions that can be generated.

Table 1: Number of folders, seconds, and frames of data for each weather condition.

Weather   Folders   Seconds   Frames
Sun       2,899     86,970    2,174,250
Rain      1,633     48,990    1,224,750
Fog       1,653     49,590    1,239,750
Snow      1,646     49,380    1,234,500
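The columns of Table 1 are tied together by the recording settings given in Section 4.1 (30-second situations recorded at 25 frames per second). The short check below, a sketch using the values copied from the table, verifies that every row equals folders × 30 seconds and seconds × 25 frames, i.e. that each folder holds one 750-frame sequence.

```python
# Figures copied from Table 1: (folders, seconds, frames) per weather condition.
TABLE_1 = {
    "Sun": (2899, 86_970, 2_174_250),
    "Rain": (1633, 48_990, 1_224_750),
    "Fog": (1653, 49_590, 1_239_750),
    "Snow": (1646, 49_380, 1_234_500),
}

SECONDS_PER_SEQUENCE = 30  # each simulated situation lasts 30 s (Section 4.1)
FPS = 25                   # videos are recorded at 25 frames per second


def check_row(folders: int, seconds: int, frames: int) -> bool:
    """True if the row matches folders x 30 s and seconds x 25 fps."""
    return (folders * SECONDS_PER_SEQUENCE == seconds
            and seconds * FPS == frames)


for weather, row in TABLE_1.items():
    print(weather, check_row(*row))

total_folders = sum(folders for folders, _, _ in TABLE_1.values())
total_frames = sum(frames for _, _, frames in TABLE_1.values())
print("total folders:", total_folders, "total frames:", total_frames)
```

All four rows pass the check; summed over the weather conditions, the table corresponds to 7,831 sequences and 5,873,250 frames.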

3 CROWD SIMULATOR

The proposed crowd simulator CrowdSim2¹ is the next version of the crowd simulator CrowdSim (Staniszewski et al., 2020), intended especially for testing multi-object tracking algorithms but also for action and object detection. It uses microscopic (or ‘agent-based’) crowd simulation methods, which model the behavior of each person, from which collective behavior can then emerge (Saeed et al., 2022; Van Toll and Pettré, 2021). The simulator is built on the widely used Unity 3D engine, with particular emphasis on realism in the environment, weather conditions, traffic, and the movement and models of individual agents. The proposed system can be used to generate sequences of random images (datasets) for evaluating tracking and object detection algorithms, but also for crowd and car counting and other crowd and traffic analysis tasks. The generated output data is in the MOT Challenge format. The most important components of CrowdSim2 that support realism in the rendered images are described below.

¹ The dataset is freely available in the Zenodo Repository at https://doi.org/10.5281/zenodo.7262220
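MOT Challenge ground-truth files (typically named gt.txt) are plain comma-separated text with one bounding box per line. The sketch below writes and reads such lines, assuming the nine-column layout used by the MOT16/17/20 ground truth (frame, identity, box left, top, width, height, a consider/ignore flag, class, visibility). The `GtRow` type and the helper names are hypothetical, and how CrowdSim2 fills the last three columns is an assumption, not something specified here.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class GtRow:
    frame: int               # 1-based frame index
    track_id: int            # identity of the pedestrian across frames
    left: float              # bounding-box top-left x, in pixels
    top: float               # bounding-box top-left y, in pixels
    width: float
    height: float
    consider: int = 1        # assumed flag: 1 = evaluate this box, 0 = ignore
    cls: int = 1             # assumed class id: 1 = pedestrian
    visibility: float = 1.0  # fraction of the box that is visible


def write_gt(rows: Iterable[GtRow]) -> str:
    """Serialise rows as comma-separated MOT-style gt.txt lines."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for r in rows:
        writer.writerow([r.frame, r.track_id, r.left, r.top, r.width,
                         r.height, r.consider, r.cls, r.visibility])
    return buf.getvalue()


def read_gt(text: str) -> List[GtRow]:
    """Parse MOT-style gt.txt content back into GtRow objects."""
    rows = []
    for f in csv.reader(io.StringIO(text)):
        if not f:
            continue  # skip blank lines
        rows.append(GtRow(int(f[0]), int(f[1]), float(f[2]), float(f[3]),
                          float(f[4]), float(f[5]), int(f[6]), int(f[7]),
                          float(f[8])))
    return rows


rows = [GtRow(1, 7, 120.0, 80.0, 24.0, 60.0), GtRow(2, 7, 122.0, 80.0, 24.0, 60.0)]
text = write_gt(rows)
print(text)
```

Keeping the output in this simple text layout means that existing MOT Challenge evaluation tools can consume the simulated data without conversion.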

3.1 Agents Motion and Interactions

A component necessary for producing lively and realistic virtual crowds is character animation, which turns the characters into moving 3D agents. Data-driven approaches include methods that use motion capture data for skeleton-based animation of 3D human models (Wereszczyński et al., 2021). This approach requires many variations of data to represent movements in different activities. To ensure the universality of the system and to generate animations based on real human motion data, a motion matching algorithm was used (Clavet, 2016). Motion matching is an alternative animation system that does not require a state machine; the feature vectors it relies on are illustrated in Figure 3. Thanks to this, agents can perform different activities, including dancing and fighting.
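Motion matching replaces a hand-built animation state machine with a search: every frame of the motion-capture database is described by a feature vector, and at run time the frame whose features best match the agent's current state and desired trajectory is selected. The brute-force sketch below illustrates only this search step. The three-value feature layout (lateral and forward displacement after 1 s, current speed), the database contents, and the weights are hypothetical and do not describe CrowdSim2's actual feature set; practical systems typically also compare pose features such as joint positions and velocities and use accelerated search structures.

```python
import math
from typing import List, Sequence

Feature = Sequence[float]

# Hypothetical motion database: one feature vector per animation frame,
# laid out as (lateral displacement after 1 s [m],
#              forward displacement after 1 s [m],
#              current root speed [m/s]).
DATABASE: List[Feature] = [
    (0.0, 0.0, 0.0),  # frame 0: standing idle
    (0.0, 0.7, 1.4),  # frame 1: walking forward
    (0.0, 1.5, 3.0),  # frame 2: running forward
    (0.7, 0.0, 1.4),  # frame 3: side-stepping to the right
]


def feature_scales(db: List[Feature]) -> List[float]:
    """Per-dimension standard deviation, used to normalise feature units."""
    n, dims = len(db), len(db[0])
    scales = []
    for d in range(dims):
        mean = sum(f[d] for f in db) / n
        std = math.sqrt(sum((f[d] - mean) ** 2 for f in db) / n)
        scales.append(std if std > 0 else 1.0)  # avoid division by zero
    return scales


def best_match(db: List[Feature], query: Feature, weights: Feature) -> int:
    """Index of the database frame with the lowest weighted, normalised
    squared distance to the query (brute-force nearest-neighbour search)."""
    scales = feature_scales(db)

    def cost(f: Feature) -> float:
        return sum(w * ((a - b) / s) ** 2
                   for a, b, s, w in zip(f, query, scales, weights))

    return min(range(len(db)), key=lambda i: cost(db[i]))


# Agent currently walking forward at about 1.3 m/s and wanting to keep going.
print(best_match(DATABASE, (0.0, 0.8, 1.3), weights=(1.0, 1.0, 1.0)))
```

For this query the walking frame (index 1) is selected. Normalising each dimension by its spread keeps features measured in different units comparable, and the weights let a designer favour, for example, trajectory accuracy over speed.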

GRAPP 2023 - 18th International Conference on Computer Graphics Theory and Applications

In the future, we plan to use the learned motion matching algorithm (Holden et al., 2020) with additional styling (Aberman et al., 2020; Holden et al., 2017). Currently, there are only two movement styles, male and female, which rely on separate motion databases. Interactions are carried out based on interaction zones. These zones are placed in the city and define the type of interaction (for example, dance or fight). When an agent enters a zone, a set of conditions is checked and, depending on the situation, the agent either ignores the interaction, is added to the queue, or starts the interaction (see Figure 6). An agent is added to the queue when there are not yet enough agents in the zone to start the interaction. While waiting in the queue, the agent moves normally, and if it moves too far away from the zone before the interaction begins, it is removed from the zone queue.
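The queueing behaviour of interaction zones described above can be sketched as a small state holder. All class and field names are hypothetical, and the entry conditions used here (a per-agent `willing` flag and ignoring newcomers while an interaction is running) are illustrative assumptions; the text does not specify which conditions CrowdSim2 actually checks.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Agent:
    name: str
    position: Tuple[float, float]
    willing: bool = True  # hypothetical per-agent condition checked on entry


@dataclass
class InteractionZone:
    kind: str                    # type of interaction, e.g. "dance" or "fight"
    centre: Tuple[float, float]
    required_agents: int         # agents needed before the interaction starts
    leave_radius: float          # queued agents farther away are dropped
    queue: List[Agent] = field(default_factory=list)
    participants: List[Agent] = field(default_factory=list)

    def on_enter(self, agent: Agent) -> str:
        """Return 'ignored', 'queued' or 'started' for an entering agent."""
        if not agent.willing or self.participants:
            return "ignored"  # conditions not met or zone already busy
        self.queue.append(agent)
        if len(self.queue) < self.required_agents:
            return "queued"   # not enough agents yet; agent keeps moving
        self.participants = self.queue[: self.required_agents]
        self.queue = self.queue[self.required_agents:]
        return "started"

    def update(self) -> None:
        """Remove queued agents that moved too far before the start."""
        self.queue = [a for a in self.queue
                      if math.dist(a.position, self.centre) <= self.leave_radius]


zone = InteractionZone("dance", (0.0, 0.0), required_agents=2, leave_radius=5.0)
alice, bob = Agent("alice", (1.0, 0.0)), Agent("bob", (0.0, 1.0))
print(zone.on_enter(alice))  # queued
alice.position = (10.0, 0.0)
zone.update()                # alice walked away and leaves the queue
alice.position = (1.0, 0.0)
print(zone.on_enter(alice), zone.on_enter(bob))  # queued started
```

Checking the distance in a periodic `update` rather than on entry matches the description that queued agents keep moving normally and are dropped only if they wander too far before the interaction begins.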

Figure 3: Agent movement based on the concept of motion matching and the system of features.

3.2 Photo-Realistic Rendering and Traffic

The main element is physical volumetric light that responds adequately to dynamically changing surroundings. The simulation also contains dynamic volumetric fog, as well as snow and rain based on particle effects (Figure 2). Finally, thanks to the use of the High Definition Render Pipeline (HDRP) in the Unity engine and physical cameras, it is possible to map the lens and sensor settings of a real camera to create photo-realistic output images. For global agent movement on a macro scale, Unity's built-in NavMesh was used. On the micro scale, as a first step in modeling human behavior, emergent human navigation behavior was created by applying a dedicated system of features. Cars can park in randomly selected parking bays, as shown in Figure 4, and choose a random direction at crossings. Traffic is based on a system of nodes placed on the roads, particularly at crossings, sharp turns, and parking places.
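Routing cars over a node system with random choices at crossings and randomly selected parking bays can be sketched as a random walk on a directed graph. The graph, the node names, and the uniform choice below are hypothetical illustrations; the text states only that cars pick a random direction at crossings and park in randomly selected bays.

```python
import random
from typing import Dict, List

# Hypothetical road graph: each node lists the nodes a car can drive to next.
# "crossing" offers several directions; "P1".."P3" are parking bays.
ROAD_GRAPH: Dict[str, List[str]] = {
    "entry": ["crossing"],
    "crossing": ["north_exit", "east_exit", "parking_lane"],
    "parking_lane": ["P1", "P2", "P3"],
    "north_exit": [],
    "east_exit": [],
    "P1": [],
    "P2": [],
    "P3": [],
}


def drive(start: str, rng: random.Random, max_steps: int = 50) -> List[str]:
    """Random walk over the node graph: at every node with several successors
    a direction is chosen uniformly at random; the walk ends at a node with no
    successors (a road exit or a parking bay)."""
    route = [start]
    for _ in range(max_steps):
        successors = ROAD_GRAPH[route[-1]]
        if not successors:
            break
        route.append(rng.choice(successors))
    return route


# A seeded generator makes the generated traffic reproducible.
print(drive("entry", random.Random(0)))
```

Passing an explicitly seeded `random.Random` instance, rather than using the global generator, is one way to reproduce the same traffic in a regenerated dataset.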

Figure 4: The navigation system for car movement with parking area and pedestrian stops.

4 RESULTS AND DISCUSSION

4.1 Collected Dataset

CrowdSim2 was used as the main tool for generating many different simulated situations in 3 main places in the virtual city. Each place was observed by 3 cameras directed at the same position to capture the scene from different angles. All videos were recorded at a resolution of 800x600 pixels at 25 frames per second. Each situation lasted 30 seconds, so 750 frames were recorded per situation. The number of pedestrians varied from 1 to 160, and the situations were influenced by weather conditions such as sun, rain, fog, and snow. The dataset was generated in the MOT Challenge format. The number of generated folders, seconds, and frames is presented in Table 1.

4.2 Results

The obtained dataset was validated in two different scenarios: (1) by verifying the influence of crowd density on the accuracy of tracking methods, and (2) by applying 4 different weather conditions: clear sunny weather (with the resulting sun reflections), rain and snow with cloudy weather that also influences the background of the scene, and a foggy day. Three tracking methods were used to test the generated dataset: IOU-Tracker (Bochinski et al., 2018), Deep-Sort (Wojke and Bewley, 2018), and Deep-TAMA (Yoon et al., 2019). These methods were chosen based on two criteria: the availability of open-source code and a finite execution time. All of them have been evaluated in the MOT Challenge ranking. The evaluation is divided into two separate parts: (1) validation of tracking methods with a changing number of people in the simulation, and (2) verification under different weather conditions.


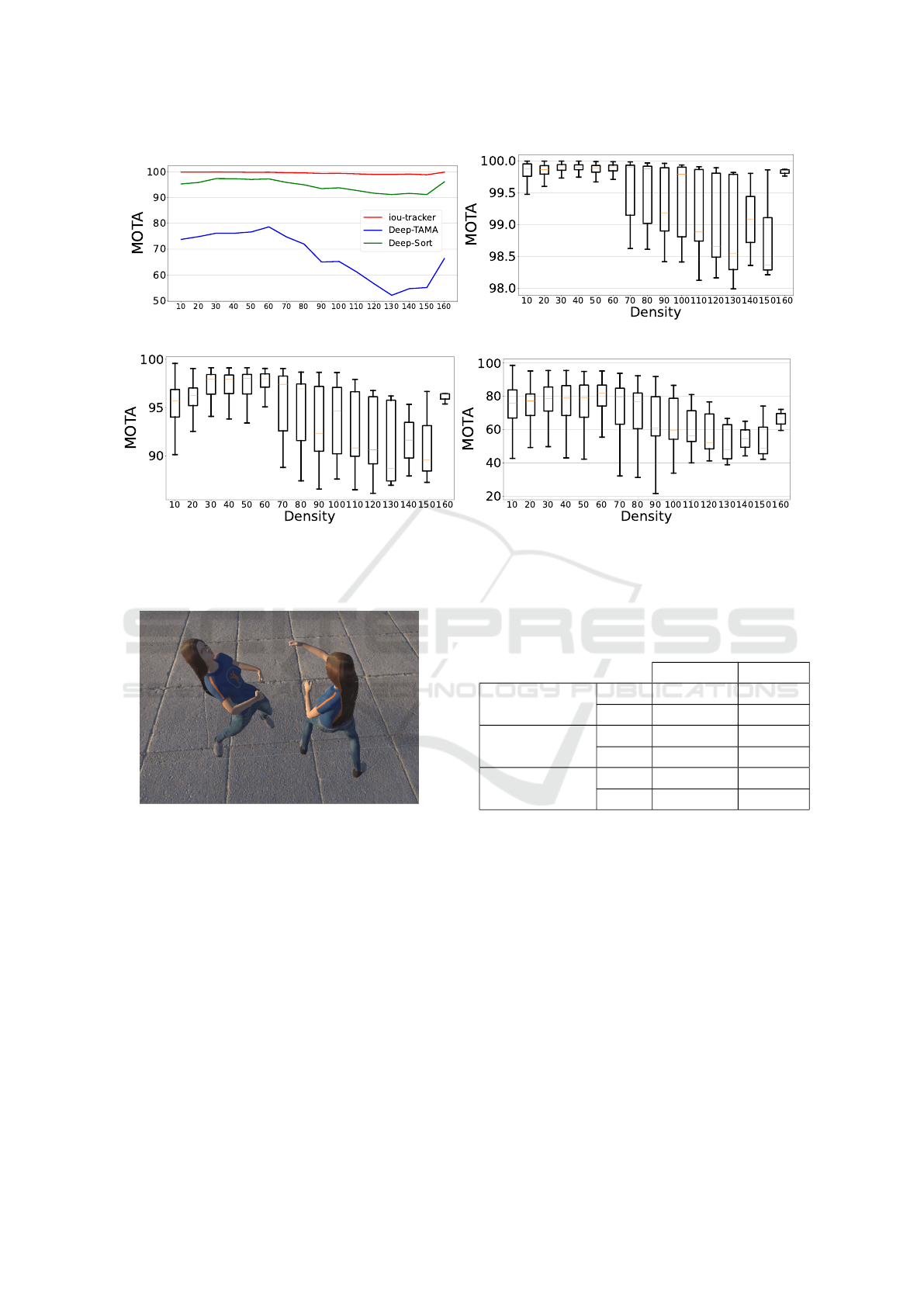

(a) MOTA for varying crowd density. (b) Varying density for MOTA on IOU-tracker.

(c) Varying density for MOTA on Deep-Sort. (d) Varying density for MOTA on Deep-TAMA.

Figure 5: Results of investigated tracking methods (IOU-tracker, Deep-Sort and Deep-TAMA) on varying crowd density data

(from 1 - 160 agents) on MOTA parameter.

Figure 6: Random animated interaction between agents generated in CrowdSim2.

The methods were tested using parameters from the MOT Challenge: (a) MOTA (Multiple-Object Tracking Accuracy) and (b) IDs (ID switches).
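For reference, MOTA is commonly defined (CLEAR MOT metrics) as MOTA = 1 − (ΣFN + ΣFP + ΣIDSW) / ΣGT, where FN are missed objects, FP false positives, IDSW identity switches (the IDs parameter), and GT the number of ground-truth objects, each summed over all frames. The helper below is a minimal sketch of this formula; it assumes the per-frame counts have already been obtained by matching tracker output to the ground truth, a step that is not shown.

```python
from typing import Iterable, Tuple


def mota(per_frame: Iterable[Tuple[int, int, int, int]]) -> float:
    """CLEAR MOT accuracy: 1 - (FN + FP + IDSW) / GT, summed over frames.

    Each tuple holds (false negatives, false positives, ID switches,
    ground-truth objects) for one frame.
    """
    fn = fp = idsw = gt = 0
    for frame_fn, frame_fp, frame_idsw, frame_gt in per_frame:
        fn += frame_fn
        fp += frame_fp
        idsw += frame_idsw
        gt += frame_gt
    if gt == 0:
        raise ValueError("MOTA is undefined when there are no ground-truth objects")
    return 1.0 - (fn + fp + idsw) / gt


# Three frames with 10 pedestrians each: one miss, one false positive and
# one identity switch in total.
counts = [(1, 0, 0, 10), (0, 1, 1, 10), (0, 0, 0, 10)]
print(f"MOTA = {100 * mota(counts):.2f}%")
```

Because all error types are divided by the number of ground-truth objects, MOTA can even become negative when a tracker produces more errors than there are objects; Table 2 reports it as a percentage.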

The crowd simulation was first run with different numbers of people, varying from 1 to 160. This made it possible to verify the influence of the number of people on the tracking results. The result of the comparison is presented in Figure 5, both as the influence on the MOTA parameter and as the distribution of results for each number of people. In the second step, the data were divided by weather condition: sun, rain, fog, and snow. Here, differences in the results of the methods can also be distinguished. The final scores are presented in several forms: as boxplots of the MOTA parameter for each weather condition (Figure 7), and for other parameters in Figure 8 and Table 2.

Table 2: Mean (Avg) and standard deviation (STD) of the tracking results for the exemplary evaluation parameters MOTA (higher is better) and IDs (lower is better).

Method        Statistic   MOTA ↑   IDs ↓
Deep-Sort     Avg         96.20    362.05
Deep-Sort     STD         2.64     436.04
IOU-tracker   Avg         99.74    13.07
IOU-tracker   STD         0.36     159.64
Deep-TAMA     Avg         74.58    241.41
Deep-TAMA     STD         13.36    349.84

4.3 Discussion

The generated data was used for two different validations of the tracking methods. Crowd density is the first point of analysis. The MOTA parameter shows which method obtains better results. In all cases, the IOU-tracker generates the best results, which mainly rely on the assumption that all detections are given automatically and at once. Deep-Sort has slightly worse results because it also takes the image context into consideration. Deep-TAMA fails on the simulated data because of the small size of many detections. It should be mentioned that the number of people is not uniformly distributed: the generation was run for a specific number of pedestrians, but not all detections were always present, and consequently relatively few samples could be obtained for 160 pedestrians.

Figure 7: The influence of different weather conditions (fog, rain, snow, and sun) on the MOTA parameter for exemplary data from CrowdSim2, presented as boxplots: (a) fog, (b) rain, (c) snow, and (d) sun weather condition.

In terms of weather conditions, the accuracy ranking of the methods matches the crowd density analysis. Snow is the most challenging condition, which may result from the snow remaining on the background. The same trend is visible for other parameters from the MOT Challenge. Here too, the best results are achieved by the IOU-tracker, which takes into consideration only the bounding box positions. In fact, both validation approaches can be used to draw conclusions, and both make it possible to determine which method gives better tracking. In all cases, a better method obtains a higher MOTA and a lower number of IDs.

Crowd simulation with a direct connection to the graphics engine allows very accurate detections (bounding boxes) to be generated for all visible pedestrians in every frame. The validation showed, however, that generating all detections has some disadvantages. The first drawback concerns the number and size of the detections: even very small pedestrians are generated and included in the ground truth. Moreover, in practice, obtaining a detection in every frame is usually not possible. Because of the evaluation procedure used, no such changes to the protocol were made in this work, but in the future the generated data could be randomly perturbed in terms of the number of detections. Alternatively, other detection methods could be applied to the generated data to produce non-ideal detections.
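The future extension mentioned above, randomly disturbing the ideal detections so that they resemble the output of a real detector, could look roughly like the sketch below. The perturbation model (an independent drop probability, uniform jitter of box position and size, and a minimum-height filter for very small pedestrians) and all parameter names are hypothetical choices for illustration, not a procedure used in this work.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, width, height), pixels


def degrade(boxes: List[Box], rng: random.Random, drop_prob: float = 0.1,
            jitter: float = 0.05, min_height: float = 0.0) -> List[Box]:
    """Make ideal simulator boxes look more like real detector output.

    Boxes lower than min_height pixels are removed (tiny pedestrians), each
    remaining box is dropped with probability drop_prob (a missed detection),
    and survivors are shifted and rescaled by up to +/- jitter of their size.
    """
    noisy = []
    for left, top, w, h in boxes:
        if h < min_height or rng.random() < drop_prob:
            continue
        noisy.append((
            left + rng.uniform(-jitter, jitter) * w,
            top + rng.uniform(-jitter, jitter) * h,
            w * (1.0 + rng.uniform(-jitter, jitter)),
            h * (1.0 + rng.uniform(-jitter, jitter)),
        ))
    return noisy


ideal = [(10.0, 20.0, 20.0, 50.0), (100.0, 0.0, 5.0, 12.0), (200.0, 5.0, 30.0, 80.0)]
print(degrade(ideal, random.Random(0), drop_prob=0.2, jitter=0.05, min_height=15.0))
```

Using a seeded generator keeps each disturbed variant reproducible, so the same degraded detections can be fed to every tracker being compared.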

5 SUMMARY

In this work, CrowdSim2, an extension of a crowd simulator, was introduced with many advanced features that enhance the realism of the generated results. To show the practical application of the generated simulated data, tracking methods were run for evaluation purposes. The algorithms were tested with respect to crowd density and weather conditions, showing differences in the final results and in the accuracy ranking of the methods. The obtained results confirmed that synthetic data from CrowdSim2 can be used in the validation process for many scenarios without the need for real data. Besides tracking algorithms, it can be applied to object detection and to action detection and recognition, as part of the testing procedure, and also for training machine learning algorithms. In the future, the generated simulated dataset could be enhanced using post-processing methods to improve its realism.


Figure 8: The impact of weather conditions on the validation of tracking methods for different evaluation parameters on the dataset from CrowdSim2: (a) MOTA (Multiple-Object Tracking Accuracy) and (b) IDs (ID switches). MOTA should be maximized and IDs should be minimized.

ACKNOWLEDGMENTS

This work was supported by: European Union funds awarded to Blees Sp. z o.o. under grant POIR.01.01.01-00-0952/20-00 “Development of a system for analysing vision data captured by public transport vehicles interior monitoring, aimed at detecting undesirable situations/behaviours and passenger counting (including their classification by age group) and the objects they carry”; EC H2020 project “AI4media: a Centre of Excellence delivering next generation AI Research and Training at the service of Media, Society and Democracy” under GA 951911; research project (RAU-6, 2020) and projects for young scientists of the Silesian University of Technology (Gliwice, Poland); research project INAROS (INtelligenza ARtificiale per il mOnitoraggio e Supporto agli anziani), Tuscany POR FSE CUP B53D21008060008. Publication supported under the Excellence Initiative - Research University program implemented at the Silesian University of Technology, year 2022. This research was supported by the European Union from the European Social Fund in the framework of the project “Silesian University of Technology as a Center of Modern Education based on research and innovation” POWR.03.05.00-00-Z098/17. We are thankful to the students who participated in the design of the Crowd Simulator: Piotr Bartosz, Stanisław Wróbel, Marcin Wola, Angelika Gluch and Marek Matuszczyk.

REFERENCES

Aberman, K., Weng, Y., Lischinski, D., Cohen-Or, D., and Chen, B. (2020). Unpaired motion style transfer from video to animation. ACM Transactions on Graphics (TOG), 39(4):64–1.

Amirian, J., Zhang, B., Castro, F. V., Baldelomar, J. J., Hayet, J.-B., and Pettré, J. (2020). OpenTraj: Assessing prediction complexity in human trajectories datasets. In Proceedings of the Asian Conference on Computer Vision.

Avvenuti, M., Bongiovanni, M., Ciampi, L., Falchi, F., Gennaro, C., and Messina, N. (2022). A spatio-temporal attentive network for video-based crowd counting. In IEEE Symposium on Computers and Communications, ISCC 2022, Rhodes, Greece, June 30 - July 3, 2022, pages 1–6. IEEE.

Bamaqa, A., Sedky, M., Bosakowski, T., Bastaki, B. B., and Alshammari, N. O. (2022). Simcd: Simulated crowd data for anomaly detection and prediction. Expert Systems with Applications, 203:117475.

Bewley, A., Ge, Z., Ott, L., Ramos, F., and Upcroft, B. (2016). Simple online and realtime tracking. In 2016 IEEE International Conference on Image Processing (ICIP), pages 3464–3468.

Bochinski, E., Senst, T., and Sikora, T. (2018). Extending IOU based multi-object tracking by visual information. In IEEE International Conference on Advanced Video and Signals-based Surveillance, pages 441–446, Auckland, New Zealand.

Bolya, D., Zhou, C., Xiao, F., and Lee, Y. J. (2019). YOLACT: Real-time instance segmentation. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE.

Cafarelli, D., Ciampi, L., Vadicamo, L., Gennaro, C., Berton, A., Paterni, M., Benvenuti, C., Passera, M., and Falchi, F. (2022). MOBDrone: A drone video dataset for man OverBoard rescue. In Image Analysis and Processing – ICIAP 2022, pages 633–644. Springer International Publishing.

Chen, K., Loy, C. C., Gong, S., and Xiang, T. (2012). Feature mining for localised crowd counting. In BMVC, volume 1, page 3.

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., and Yuille, A. L. (2018). DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(4):834–848.

Ciampi, L., Carrara, F., Totaro, V., Mazziotti, R., Lupori, L., Santiago, C., Amato, G., Pizzorusso, T., and Gennaro, C. (2022a). Learning to count biological structures with raters’ uncertainty. Medical Image Analysis, 80:102500.

Ciampi, L., Foszner, P., Messina, N., Staniszewski, M., Gennaro, C., Falchi, F., Serao, G., Cogiel, M., Golba, D., Szczęsna, A., and Amato, G. (2022b). Bus violence: An open benchmark for video violence detection on public transport. Sensors, 22(21).

Ciampi, L., Gennaro, C., Carrara, F., Falchi, F., Vairo, C., and Amato, G. (2022c). Multi-camera vehicle counting using edge-AI. Expert Systems with Applications, 207:117929.

Ciampi, L., Messina, N., Falchi, F., Gennaro, C., and Amato, G. (2020). Virtual to real adaptation of pedestrian detectors. Sensors, 20(18):5250.

Clavet, S. (2016). Motion matching and the road to next-gen animation. In Proc. of GDC, volume 2016.

Contributors, M. (2020). MMTracking: OpenMMLab video perception toolbox and benchmark. https://github.com/open-mmlab/mmtracking.

Courty, N., Allain, P., Creusot, C., and Corpetti, T. (2014). Using the AGORASET dataset: Assessing for the quality of crowd video analysis methods. Pattern Recognition Letters, 44:161–170.

Dendorfer, P., Rezatofighi, H., Milan, A., Shi, J., Cremers, D., Reid, I., Roth, S., Schindler, K., and Leal-Taixé, L. (2020). MOT20: A benchmark for multi object tracking in crowded scenes. arXiv:2003.09003 [cs].

Foszner, P., Staniszewski, M., Szczęsna, A., Cogiel, M., Golba, D., Ciampi, L., Messina, N., Gennaro, C., Falchi, F., Amato, G., and Serao, G. (2022). Bus Violence: a large-scale benchmark for video violence detection in public transport.

Holden, D., Habibie, I., Kusajima, I., and Komura, T. (2017). Fast neural style transfer for motion data. IEEE Computer Graphics and Applications, 37(4):42–49.

Holden, D., Kanoun, O., Perepichka, M., and Popa, T. (2020). Learned motion matching. ACM Transactions on Graphics (TOG), 39(4):53–1.

Khadka, A. R., Oghaz, M., Matta, W., Cosentino, M., Remagnino, P., and Argyriou, V. (2019). Learning how to analyse crowd behaviour using synthetic data. In Proceedings of the 32nd International Conference on Computer Animation and Social Agents, pages 11–14.

Lemonari, M., Blanco, R., Charalambous, P., Pelechano, N., Avraamides, M., Pettré, J., and Chrysanthou, Y. (2022). Authoring virtual crowds: A survey. In Computer Graphics Forum, volume 41, pages 677–701. Wiley Online Library.

Lin, T.-Y., Goyal, P., Girshick, R., He, K., and Dollar, P. (2017). Focal loss for dense object detection. In 2017 IEEE International Conference on Computer Vision (ICCV). IEEE.

Pęszor, D., Staniszewski, M., and Wojciechowska, M. (2016). Facial reconstruction on the basis of video surveillance system for the purpose of suspect identification. In Nguyen, N. T., Trawiński, B., Fujita, H., and Hong, T.-P., editors, Intelligent Information and Database Systems, pages 467–476, Berlin, Heidelberg. Springer Berlin Heidelberg.

Saeed, R. A., Recupero, D. R., and Remagnino, P. (2022). Simulating crowd behaviour combining both microscopic and macroscopic rules. Information Sciences, 583:137–158.

Sindagi, V., Yasarla, R., and Patel, V. M. (2020). JHU-CROWD++: Large-scale crowd counting dataset and a benchmark method. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Staniszewski, M., Foszner, P., Kostorz, K., Michalczuk, A., Wereszczyński, K., Cogiel, M., Golba, D., Wojciechowski, K., and Polański, A. (2020). Application of crowd simulations in the evaluation of tracking algorithms. Sensors, 20(17):4960.

Staniszewski, M., Kloszczyk, M., Segen, J., Wereszczyński, K., Drabik, A., and Kulbacki, M. (2016). Recent developments in tracking objects in a video sequence. In Intelligent Information and Database Systems, pages 427–436. Springer Berlin Heidelberg.

Van Toll, W. and Pettré, J. (2021). Algorithms for microscopic crowd simulation: Advancements in the 2010s. In Computer Graphics Forum, volume 40, pages 731–754. Wiley Online Library.

Wang, Q., Gao, J., Lin, W., and Li, X. (2020). NWPU-Crowd: A large-scale benchmark for crowd counting and localization. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(6):2141–2149.

Wang, Q., Gao, J., Lin, W., and Yuan, Y. (2019). Learning from synthetic data for crowd counting in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8198–8207.

Wereszczyński, K., Michalczuk, A., Foszner, P., Golba, D., Cogiel, M., and Staniszewski, M. (2021). ELSA: Euler-Lagrange skeletal animations - novel and fast motion model applicable to VR/AR devices. In Computational Science – ICCS 2021, pages 120–133, Cham. Springer International Publishing.

Wojke, N. and Bewley, A. (2018). Deep cosine metric learning for person re-identification. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 748–756. IEEE.

Wojke, N., Bewley, A., and Paulus, D. (2017). Simple online and realtime tracking with a deep association metric. In 2017 IEEE International Conference on Image Processing (ICIP), pages 3645–3649. IEEE.

Yang, S., Li, T., Gong, X., Peng, B., and Hu, J. (2020). A review on crowd simulation and modeling. Graphical Models, 111:101081.

Yoon, Y., Kim, D. Y., Yoon, K., Song, Y., and Jeon, M. (2019). Online multiple pedestrian tracking using deep temporal appearance matching association. CoRR, abs/1907.00831.