Domain Adaptive Pedestrian Detection Based on Semantic Concepts

Patrick Feifel (1, 2), Frank Bonarens (1) and Frank Köster (2, 3)

1 Stellantis, Opel Automobile GmbH, Germany
2 Carl von Ossietzky Universität Oldenburg, Germany
3 Deutsches Zentrum für Luft- und Raumfahrt, Germany

Keywords:

Pedestrian Detection, Unsupervised Domain Adaptation, Interpretability.

Abstract:

Pedestrian detection is highly complex due to the wide variety of pedestrian appearances and postures as well as environmental conditions. Building a sufficient real-world dataset is labor-intensive and costly. Synthetic data is therefore promising, but deep neural networks (DNNs) show a lack of generalization when trained solely on synthetic data. In our work, we propose a novel method for concept-based domain adaptation for pedestrian detection (ConDA). In addition to the 2D bounding box prediction, an auxiliary body part segmentation exploits discriminative features of semantic concepts of pedestrians. Inspired by approaches to the inherent interpretability of DNNs, ConDA is shown to strengthen generalization. This is achieved by enforcing high intra-class concentration and inter-class separation of the extracted body part features in the latent space. We report performance results for various training strategies, feature extractors and backbones of ConDA on the real-world CityPersons dataset.

1 INTRODUCTION

The reliable perception of vulnerable road users is a key requisite for automated driving. State-of-the-art deep neural networks for pedestrian detection (PD-DNNs) are specifically designed and developed for real datasets. A limiting factor is the labor-intensive and expensive manual generation of ground truth annotations for real-world images. In contrast, synthetic data generation is more cost-effective, customizable and scalable. The consortium project KI Absicherung¹ (KI-A) focuses on generating synthetic data for pedestrian detection. Unfortunately, even photo-realistic synthetic data and real data exhibit a large domain shift: PD-DNNs trained solely on synthetic data generalize poorly to the real world. Unsupervised domain adaptation (UDA) aims to overcome this insufficient generalization by complementing supervised learning from synthetic data with unsupervised learning from real, unlabeled data. Since synthetic data is readily accessible, additional task-specific labels such as body part segmentation (BPS) or instance segmentation can easily be provided. Pedestrian detection can therefore be framed as a combination of main tasks (localization and classification) and an auxiliary task (segmentation).

¹ Translation: AI Safeguarding, https://www.ki-absicherung-projekt.de/en/

The increasing emphasis on the segmentation of semantic concepts is also consistent with recent research on interpretability for DNNs. Interpretability is increasingly seen as a requirement for models used in safety-critical applications (Rudin, 2019). Some works analyze whether an already trained DNN embeds predefined semantic concepts (Kim et al., 2018; Haselhoff et al., 2021). Another line of work adapts DNN architectures to enable inherent interpretability (Chen et al., 2019; Koh et al., 2020; Feifel et al., 2021b; Feifel et al., 2021a). The common idea is that classes are predicted based on distances to prototypes or concept vectors in the latent space. Methods for inherent interpretability make heavy use of advances in metric learning, whose common goal is to overcome the disadvantages of the softmax loss and improve generalization.

Motivated by the interdependent benefits of inherent interpretability and metric learning, we propose a methodology for concept-based domain adaptation for pedestrian detection (ConDA). Our contributions can be summarized as follows:

• We show that learning an auxiliary body part segmentation from synthetic data improves the generalization of a DNN for pedestrian detection on real data.

• We show that inherently interpretable DNNs inspired by techniques from metric learning offer superior generalization on real data.

• We propose a novel methodology for concept-based domain adaptive pedestrian detection (ConDA) that complements supervised learning from synthetic data with unsupervised learning from real, unlabeled data.

Feifel, P., Bonarens, F. and Köster, F.
Domain Adaptive Pedestrian Detection Based on Semantic Concepts.
DOI: 10.5220/0011690900003417
In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 5: VISAPP, pages 652-659
ISBN: 978-989-758-634-7; ISSN: 2184-4321
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 RELATED WORK

2.1 Pedestrian Detection

Pedestrian detection is the task of localizing and classifying 2D bounding boxes of pedestrians in a given image. Recent research has produced an increasing number of high-performing anchor-free approaches. Two-stage PD-DNNs such as F2DNet (Khan et al., 2022) apply region proposals as an intermediate step to identify image areas that might contain an object. In contrast, CSP (Liu et al., 2019), APD (Zhang et al., 2020) and BGCNet (Li et al., 2020) use a simple one-stage architecture to achieve state-of-the-art performance. These PD-DNNs predict keypoints, most commonly the center point of an object, and encode boxes with additional regression heads for the scale and offset of a bounding box. Our proposed ConDA builds upon this simple single-stage architecture without relying on default anchors.
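To make the keypoint-based box encoding concrete, the following is a minimal sketch of decoding center, scale and offset maps into boxes. It assumes a CSP-style parametrization that this paper does not specify: an output stride of 4, a scale head predicting the log box height, and a fixed width/height ratio of 0.41. The function name `decode_cso` and all default values are hypothetical.

```python
import math


def decode_cso(center, log_h, offset, stride=4, aspect=0.41, score_thr=0.1):
    """Decode center/scale/offset maps into boxes (x1, y1, x2, y2, score).

    center -- H' x W' nested list of center confidences
    log_h  -- H' x W' predicted log box heights (ASSUMED scale parametrization)
    offset -- H' x W' pairs (dy, dx) of sub-cell center offsets
    stride, aspect -- ASSUMED output stride and fixed width/height ratio
    """
    boxes = []
    for i, row in enumerate(center):
        for j, score in enumerate(row):
            if score <= score_thr:  # keep only confident center keypoints
                continue
            h = math.exp(log_h[i][j]) * stride  # box height in input pixels
            w = aspect * h                      # width from the fixed ratio
            dy, dx = offset[i][j]
            cy = (i + dy + 0.5) * stride        # refined center in input pixels
            cx = (j + dx + 0.5) * stride
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2, score))
    return boxes
```

In a full detector the decoded boxes would then be passed to NMS; this sketch omits that step.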

2.2 Inherent Interpretability

Although a general definition of interpretability does not currently exist, a widespread idea for inherent interpretability is to enforce intra-class concentration and inter-class separation based on distances in the latent space. Hence, two opposing mechanisms are key: (1) clustering positive pairs of the same class and (2) separating negative samples. The resulting reasoning process is based on representations that can either be unsupervised prototypes (e.g. ProtoPNet (Chen et al., 2019), ProtoNCE (Li et al., 2021) and CSPP (Feifel et al., 2021b)) or predefined semantic concepts that act as supervised anchors during training (e.g. SupCon (Khosla et al., 2020) and CPD (Feifel et al., 2021a)).

Since semantic concepts or prototypes are represented by feature vectors in the latent space and distance measures are used for predictions, inherent interpretability is closely related to metric learning. The proposed methods are motivated by well-known issues with the softmax loss (inner product followed by the cross-entropy loss) that arise from using the inner product as a similarity measure (Peng and Yu, 2021; Ghiasi-Shirazi, 2019). Loss formulations such as I2CS (Peng and Yu, 2021), SupCon (Khosla et al., 2020) and ProtoNCE (Li et al., 2021) enforce intra-class concentration in order to learn more discriminative features.
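A toy illustration (not taken from any of the cited methods) of why distance-based reasoning differs from the inner product: with the inner product, a prototype with a large norm can win even though the feature lies right next to another prototype. The two 2-D prototypes below are hypothetical values chosen only to show the effect.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def inner_product_logits(z, prototypes):
    """Similarity used by a plain softmax classifier: <z, c_k>."""
    return [sum(a * b for a, b in zip(z, c)) for c in prototypes]


def distance_logits(z, prototypes):
    """Negative squared Euclidean distance: the closer c_k, the larger the logit."""
    return [-sum((a - b) ** 2 for a, b in zip(z, c)) for c in prototypes]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


prototypes = [(1.0, 0.0), (3.0, 3.0)]  # hypothetical 2-D concept vectors
z = (1.0, 0.1)                         # feature lying right next to prototype 0

print(argmax(inner_product_logits(z, prototypes)))  # 1: the large-norm prototype wins
print(argmax(distance_logits(z, prototypes)))       # 0: the nearest prototype wins
print(softmax(distance_logits(z, prototypes)))
```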

2.3 Unsupervised Domain Adaptation

Motivated by the easy accessibility of synthetic data and the insufficient generalization of state-of-the-art DNNs, UDA methods aim to close the resulting domain gap. Similar to our proposed approach, self-paced learning for object detection (Soviany et al., 2021) uses a teacher-student framework. UMT (Deng et al., 2021) follows a similar approach in which a student learns from pseudo labels, but additionally applies adversarial training for cross-domain distillation. In contrast, our proposed ConDA relies on neither style transfer nor adversarial training. For UDA in semantic segmentation, ProDA (Zhang et al., 2021) and SePiCo (Xie et al., 2022) leverage prototypes as feature vectors in the latent space to rectify noisy pseudo labels. Softmax-based class predictions are adjusted by distances to prototypes, allowing for more robust classification and generalization in the presence of class imbalance or outliers. Both approaches build on promising key ideas from inherent interpretability and metric learning. Additionally, DAFormer (Hoyer et al., 2022) specifically designs data augmentation techniques for the target domain.

3 METHODOLOGY

3.1 Generic Pedestrian Detector

In this work, we extend the generic DNN architecture for pedestrian detection of Feifel et al. (2022). We define a PD-DNN $h = g \circ f$ as the composition of the feature extraction $f$, which is applied first, and multiple perception heads $g$. Figure 1 shows the three PD-DNN architectures investigated in our work: (1) a PD-DNN for center, scale and offset prediction (CSO), (2) CSO with an auxiliary BPS (CSO+BPS) and (3) CSO with an interpretable reasoning process (IRP) for an auxiliary BPS (CPD). An optional stacked hourglass (HG) module (Newell et al., 2016) may increase performance. Fixed deconvolutions (Yu et al., 2018) upsample the predicted BPS.

Figure 1: The baseline for our work is given by a simple PD-DNN architecture that applies center, scale and offset heads (CSO) to predict 2D bounding boxes. CSO can be extended with an auxiliary body part segmentation (BPS), denoted as CSO+BPS. The third method uses an interpretable reasoning process (IRP) to predict a body part segmentation for concept-based pedestrian detection (CPD) (Feifel et al., 2021a).

All PD-DNNs predict a set of 2D bounding boxes for pedestrians. A set of 2D bounding boxes $R = \{(x_1, y_1, x_2, y_2)\}_{i=1}^{K}$ can be defined as a set of $K$ tuples of four corner coordinates. Predicted bounding boxes $\tilde{R}$ are encoded by the center, scale and offset heads. The loss for bounding boxes is defined as $L_{box} = \lambda_{ce} L_{ce} + \lambda_{sc} L_{sc} + \lambda_{o} L_{o}$. The trade-off weights $\lambda_{ce}$, $\lambda_{sc}$ and $\lambda_{o}$ are empirically set to 0.01, 1.0 and 0.1. The scale loss $L_{sc}$ and the offset loss $L_{o}$ are defined as smooth L1 losses. Due to the strong imbalance between positive and negative samples, $L_{ce}$ uses a focal loss with $\gamma = 4$ (Lin et al., 2017) that is further weighted with a Gaussian map $\mu_{i,j}$ around every pedestrian center (Liu et al., 2019; Feifel et al., 2022). Moreover, an ignore map $o_{i,j}$ marks ignored bounding boxes. The final center loss is given by

$$L_{ce} = -\frac{1}{K} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \bar{\mu}_{i,j} \, (1 - o_{i,j}) \, (1 - \bar{\nu}_{i,j})^{\gamma} \log \bar{\nu}_{i,j} \tag{1}$$

where

$$\bar{\mu}_{i,j} = \begin{cases} 1 & \text{if } \xi_{i,j} = 1 \\ (1 - \mu_{i,j})^{\beta} & \text{otherwise} \end{cases} \tag{2}$$

$$\bar{\nu}_{i,j} = \begin{cases} \hat{\xi}_{i,j} & \text{if } \xi_{i,j} = 1 \\ 1 - \hat{\xi}_{i,j} & \text{otherwise} \end{cases} \tag{3}$$

Predictions of the center head $h^{ce}_{\theta}$ of the student network for a given source image $x^S$ are defined as $\hat{\xi}_{i,j} = h^{ce}_{\theta}(x^S)$. All maps ($\xi_{i,j}$, $\mu_{i,j}$ and $o_{i,j}$) are created based on a set of ground truth bounding boxes $R$.
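Eqs. (1) to (3) can be written out as a short loop over the $H' \times W'$ output map. This is a minimal sketch on nested lists, not the authors' implementation; the text does not state $\beta$, so the default `beta=4.0` is only a placeholder, and `eps` is an added numerical guard.

```python
import math


def center_focal_loss(pred, gt, gauss, ignore, num_peds, gamma=4.0, beta=4.0, eps=1e-6):
    """Center loss of Eqs. (1)-(3) on H' x W' maps given as nested lists.

    pred     -- predicted center confidences xi_hat in [0, 1]
    gt       -- binary center map xi (1 at pedestrian centers)
    gauss    -- Gaussian map mu around every center
    ignore   -- binary ignore map o (1 inside ignored boxes)
    num_peds -- K, the number of pedestrians used for normalization
    beta     -- PLACEHOLDER value; the paper does not state beta
    """
    total = 0.0
    for i in range(len(pred)):
        for j in range(len(pred[0])):
            if gt[i][j] == 1:
                mu_bar, nu_bar = 1.0, pred[i][j]          # Eqs. (2)/(3), center pixel
            else:
                mu_bar = (1.0 - gauss[i][j]) ** beta      # damp pixels close to a center
                nu_bar = 1.0 - pred[i][j]
            nu_bar = min(max(nu_bar, eps), 1.0)           # avoid log(0)
            total += mu_bar * (1 - ignore[i][j]) * (1.0 - nu_bar) ** gamma * math.log(nu_bar)
    return -total / max(num_peds, 1)                      # Eq. (1), normalized by K
```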

According to Figure 1, the auxiliary BPS can be predicted by two different transformations: (a) a convolutional module (CM) for CSO+BPS or (b) an interpretable reasoning process (IRP) for concept-based pedestrian detection (CPD) (Feifel et al., 2021a). We use four predefined body parts and define the set $C_a = \{\text{background}, \text{head}, \text{torso}, \text{arm}, \text{leg}\}$. For the interpretable approach, the set of classes is reduced to the semantic concepts $C_b = \{\text{head}, \text{torso}, \text{arm}, \text{leg}\}$. Consequently, the number of classes $N_C$ depends on the chosen transformation: (a) $N_C = |C_a| = 5$ or (b) $N_C = |C_b| = 4$. The BPS perception head of CSO+BPS or CPD outputs the predictions $\hat{\pi}_{k,i,j}$. Fixed deconvolutions (Yu et al., 2018) compute upsampled confidence scores $\hat{\Pi}_{k,i,j}$: $\hat{\pi} \in [0,1]^{N_C \times H' \times W'} \rightarrow \hat{\Pi} \in [0,1]^{N_C \times H \times W}$. Based on one-hot encoded ground truth body part annotations $\Pi_{k,i,j}$, the focal binary cross-entropy loss is formulated as

$$L_{seg} = -\frac{1}{N} \sum_{k=1}^{N_C} \sum_{i=1}^{H} \sum_{j=1}^{W} (1 - S_{k,i,j})^{\gamma} \log(S_{k,i,j}) \tag{4}$$

with

$$S_{k,i,j} = \begin{cases} \hat{\Pi}_{k,i,j} & \text{if } \Pi_{k,i,j} = 1 \\ 1 - \hat{\Pi}_{k,i,j} & \text{otherwise} \end{cases} \tag{5}$$

and $N = N_C H W$. The focal term $(1 - S_{k,i,j})^{\gamma}$ is introduced due to the strong class imbalance between negative (background) and positive (body part) pixels.
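Eqs. (4) and (5) amount to a per-entry focal binary cross-entropy averaged over all $N = N_C H W$ entries. The sketch below works on nested lists of shape `[N_C][H][W]`; the value of $\gamma$ for this loss is not stated separately, so using the center-loss value 4 is an assumption. The optional `ignore` argument is an added convenience that skips entries and renormalizes by the remaining count.

```python
import math


def seg_focal_bce(pred, target, gamma=4.0, ignore=None, eps=1e-6):
    """Focal binary cross-entropy of Eqs. (4)-(5).

    pred, target -- nested lists of shape [N_C][H][W]; target is one-hot (0/1)
    ignore       -- optional 0/1 mask of the same shape (1 = skip this entry);
                    the loss is then normalized by the non-ignored entries
    gamma        -- ASSUMED to equal the center-loss exponent
    """
    total, count = 0.0, 0
    for k in range(len(pred)):
        for i in range(len(pred[k])):
            for j in range(len(pred[k][i])):
                if ignore is not None and ignore[k][i][j]:
                    continue                                # ignored entries do not count
                p, t = pred[k][i][j], target[k][i][j]
                s = p if t == 1 else 1.0 - p                # Eq. (5)
                s = min(max(s, eps), 1.0)                   # avoid log(0)
                total += (1.0 - s) ** gamma * math.log(s)   # focal-weighted BCE term
                count += 1
    return -total / max(count, 1)  # equals N = N_C*H*W when nothing is ignored
```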

We define a set of latent representations $Z = \{z_{i,j}\}_{i,j}^{H',W'}$ with $z \in [0,1]^{64}$ as the encoding of an image $x$ output by the feature extraction $f(x): X \rightarrow Z$. The extracted latent representations are matched with learnable concept vectors $c_k$ for four concepts (i.e., head, torso, arm and leg) with the help of binary concept masks $\pi_{k,i,j}$. The loss for the IRP of CPD is defined as $L_{latent} = \lambda_{cl} L_{cl} + \lambda_{sc} L^{con}_{sep} + \lambda_{sb} L^{back}_{sep}$. We define the mean square loss to learn concept clusters by minimizing the squared Euclidean distance of latent representations $z_{i,j}$ to concept vectors $c_k$ as

$$L_{cl} = \frac{1}{M_{cl}} \sum_{k=1}^{N_C} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \pi_{k,i,j} \lVert c_k - z_{i,j} \rVert^{4} \tag{6}$$

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications


Here, $M_{cl} = \sum_{k=1}^{N_C} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \pi_{k,i,j}$ denotes the number of positive concept pixels. To achieve high inter-class separation, we introduce a safety margin $\delta'_k = \varepsilon \delta^*_k$, where the parameter $\varepsilon$ describes a multiple of the critical distance $\delta^*_k$ (Feifel et al., 2021a). The safety margin $\delta'_k$ is used to define a weighted loss contribution based on

$$\psi_k = \exp\left( \frac{\log(z)}{\delta'_k - \delta^*_k} \cdot (\delta_k - \delta^*_k) \right)$$

that penalizes small distances of negative samples. The generic separation loss is defined as

$$L_{sep} = \frac{1}{M_{sep}} \sum_{k=1}^{N_C} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \rho_{k,i,j} \, \psi_k \left( \delta'_k - \lVert c_k - z_{i,j} \rVert_2^2 \right) \tag{7}$$

with $M_{sep} = \sum_{k=1}^{N_C} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \rho_{k,i,j}$ as the number of negative pixels. For separation against the background ($L^{back}_{sep}$) or against other concepts ($L^{con}_{sep}$), the one-hot encoded binary mask of negative pixels $\rho_{k,i,j}$ must be adapted accordingly (Feifel et al., 2021a).
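The cluster and separation losses of Eqs. (6) and (7) can be sketched on flattened pixels as follows. This is a literal reading of the equations as printed, under stated assumptions: $\delta_k$ in $\psi_k$ is taken to be the current squared distance of the pixel to $c_k$, the constant written as $z$ in $\psi_k$ is exposed as the hypothetical parameter `z_const`, and the defaults `eps_mult=2.0` and `z_const=0.01` are illustrative only. As in Eq. (7), no hinge is applied.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cluster_loss(latents, concepts, pos_mask):
    """Eq. (6): latents[p] is z for pixel p (H'*W' pixels flattened),
    concepts[k] is c_k and pos_mask[k][p] is the binary concept mask pi."""
    total, m_cl = 0.0, 0
    for k, c in enumerate(concepts):
        for p, z in enumerate(latents):
            if pos_mask[k][p]:
                total += sq_dist(c, z) ** 2  # ||c_k - z||^4 as printed in Eq. (6)
                m_cl += 1
    return total / max(m_cl, 1)


def separation_weight(dist, delta_crit, eps_mult, z_const):
    """psi_k as printed; dist plays the role of delta_k (ASSUMED to be the squared
    distance) and z_const is the constant written as z (HYPOTHETICAL value).
    Requires eps_mult > 1 so that the safety margin exceeds the critical distance."""
    delta_safe = eps_mult * delta_crit  # safety margin delta'_k
    return math.exp(math.log(z_const) / (delta_safe - delta_crit) * (dist - delta_crit))


def separation_loss(latents, concepts, neg_mask, delta_crit, eps_mult=2.0, z_const=0.01):
    """Eq. (7) with the binary negative mask rho; delta_crit[k] is delta*_k."""
    total, m_sep = 0.0, 0
    for k, c in enumerate(concepts):
        for p, z in enumerate(latents):
            if neg_mask[k][p]:
                d = sq_dist(c, z)
                psi = separation_weight(d, delta_crit[k], eps_mult, z_const)
                total += psi * (eps_mult * delta_crit[k] - d)  # psi_k * (delta'_k - ||c_k - z||^2)
                m_sep += 1
    return total / max(m_sep, 1)
```

With `z_const < 1`, the weight decays from 1 at the critical distance to `z_const` at the safety margin, so negatives that are already far away contribute little.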

Predicted bounding boxes post-processed by non-maximum suppression (NMS) form the set of detection bounding boxes $\hat{R}$. We only accept bounding boxes

$$\hat{R}_f = \left\{ (x_1, y_1, x_2, y_2) \in \hat{R} \;\middle|\; \sum_{k=1}^{N_C} \sum_{i=y_1}^{y_2} \sum_{j=x_1}^{x_2} \hat{\Pi}_{k,i,j} > 0 \right\}$$

that contain pixels of at least one body part.
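The acceptance rule for $\hat{R}_f$ can be sketched as a filter over detections. Since raw sigmoid scores are rarely exactly zero, this sketch assumes the body-part maps have already been binarized (for example by an arg-max over classes); that preprocessing, the inclusive integer pixel coordinates and the function name are assumptions, not details from the paper.

```python
def filter_boxes_by_body_parts(boxes, part_maps):
    """Keep boxes whose area contains at least one body-part pixel.

    boxes     -- list of (x1, y1, x2, y2) integer pixel coordinates (inclusive)
    part_maps -- part_maps[k][i][j] for the N_C body-part channels; ASSUMED to be
                 binarized predictions
    """
    if not part_maps:
        return []
    height, width = len(part_maps[0]), len(part_maps[0][0])
    kept = []
    for x1, y1, x2, y2 in boxes:
        # Clip the box to the image so partially visible boxes are handled.
        ys = range(max(int(y1), 0), min(int(y2), height - 1) + 1)
        xs = range(max(int(x1), 0), min(int(x2), width - 1) + 1)
        total = sum(plane[i][j] for plane in part_maps for i in ys for j in xs)
        if total > 0:
            kept.append((x1, y1, x2, y2))
    return kept
```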

3.2 Concept-Based Domain Adaptation

We opt for a two-stage methodology for UDA similar to ProDA. Note that, unlike our work, ProDA focuses on semantic segmentation rather than pedestrian detection. Our methodology consists of two consecutive training stages. First, a PD-DNN with an auxiliary BPS is trained solely on synthetic data and validated on real data. Afterwards, the pre-trained PD-DNN serves as the starting point for UDA. Since our methodology is related to inherent interpretability and metric learning, we refer to it as concept-based domain adaptation for pedestrian detection (ConDA).

Due to its superior generalization, we use CPD as the PD-DNN for ConDA. Consequently, ConDA utilizes a structured latent space resulting from the cluster and separation mechanisms of CPD. In contrast to ProDA and SePiCo, we integrate concept vectors (similar to their prototypes) directly into the DNN architecture. Algorithm 1 gives a detailed description of the different training steps of ConDA. The unsupervised domain adaptation starts with a pre-trained CPD $h$ with parameters $\theta$, which was previously trained on images $x^S \in X^S$ with labels $y^S$ as ground truth from a source domain $X^S$. In our case, the source domain is represented by synthetic data. We use a conventional self-training approach in which pseudo labels are fixed during the second training stage for domain adaptation. Pseudo labels are generated on-the-fly by a pseudo network $\tilde{h}$ with parameters $\theta$ for images $x^T \in X^T$ from the target domain $X^T$, which is represented by real data.

Algorithm 1: Pseudocode for ConDA.

Input: Training dataset $(X^S, Y^S, X^T)$, CPD $h$ with pre-trained parameters $\theta$ and thresholds $\tau_{ign}$, $\tau_{ce}$, $\tau_{sep}$, $\tau_{cl}$.
Output: Teacher model $h_{\theta'}$.

1  Initialize student network $h_{\theta}$;
2  Initialize pseudo network: $\tilde{h}_{\theta} \leftarrow h_{\theta}$;
3  Initialize teacher network: $h_{\theta'} \leftarrow h_{\theta}$;
4  while $i \le$ iterations do
5      Get source images $x^S_i$ and ground truth $Y^S = (\xi_i, \pi_i, \Pi_i)$;
6      Train student $h_{\theta}$ with loss $L^S = L_{box} + L_{seg} + L_{latent}$;
7      Get target images $x^T_i$;
8      Get pseudo targets $(\xi'_i, \pi'_i, \Pi'_i)$ from $\tilde{h}_{\theta}$;
9      Train student $h_{\theta}$ with loss $L^T = L^T_{box} + L^T_{seg} + L^T_{cl} + L^T_{sep}$;
10     Update teacher $h_{\theta'}$:
         - Parameters $\theta'$: $\theta'_{i+1} \leftarrow \alpha \theta'_i + (1 - \alpha) \theta_i$
         - Running batch norm: $h_{\theta'} = h_{\theta'}(x^T)$;
11 end
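Step 10 of Algorithm 1 is an exponential moving average of the student parameters. A minimal sketch on plain Python parameter dictionaries follows; the dictionary layout is an illustrative assumption, and the smoothing factor $\alpha$ is not stated in the paper, so `alpha=0.999` is only a placeholder.

```python
def ema_update(teacher, student, alpha=0.999):
    """theta'_{i+1} <- alpha * theta'_i + (1 - alpha) * theta_i (Algorithm 1, step 10).

    teacher, student -- dicts mapping parameter names to lists of floats
    alpha            -- PLACEHOLDER smoothing factor; not stated in the paper
    The teacher dict is updated in place and also returned.
    """
    for name, t_vals in teacher.items():
        s_vals = student[name]
        teacher[name] = [alpha * t + (1.0 - alpha) * s for t, s in zip(t_vals, s_vals)]
    return teacher
```

Because only the teacher is averaged, the student keeps learning from both the source loss $L^S$ and the pseudo-label loss $L^T$, while the teacher evolves slowly and is returned as the final model.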

Pseudo bounding boxes are encoded by the outputs of the center, scale and offset heads of the pseudo network $\tilde{h}_{\theta}$. Center predictions for a sample $x^T$ drawn from the target domain are defined as $\tilde{\xi}_{i,j} = \tilde{h}^{ce}_{\theta}(x^T)$. Pseudo bounding boxes

$$\tilde{R} = d\left( \left[ \tilde{\xi}_{i,j} > \tau_{ce} \right], \tilde{h}^{sc}_{\theta}(x^T), \tilde{h}^{o}_{\theta}(x^T) \right)$$

are generated by applying a decoding function $d(\cdot)$ (including NMS) with the scale and offset predictions as additional inputs. Applying the center threshold $\tau_{ce}$ inside the Iverson bracket $[\cdot]$ guarantees that only bounding boxes with high certainty are used as positive pseudo labels. Finally, a hard pseudo target for the center map $\xi'_{i,j}$ can be generated from $\tilde{R}$. To efficiently denoise pseudo labels, ignored (pseudo) bounding boxes are defined as

$$\tilde{R}_{ign} = d\left( \left[ \tilde{\xi}_{i,j} > \tau_{ign} \wedge \tilde{\xi}_{i,j} \le \tau_{ce} \right], \tilde{h}^{sc}_{\theta}(x^T), \ldots \right).$$

Based on $\tilde{R}_{ign}$ and $\xi'_{i,j}$, pseudo ignore areas $o'_{i,j}$ are defined. To counteract trivial solutions, bounding boxes below the minimal threshold $\tau_{ign}$ are treated as negative samples.

We empirically set the thresholds $\tau_{ign}$ and $\tau_{ce}$ to 0.1 and 0.6. The unsupervised center loss for the center predictions $\hat{\xi}_{i,j}$ of the student network for $K$ predicted pedestrians and hard pseudo targets $\xi'_{i,j}$ of an unlabeled sample from the target domain is given by

$$L^T_{ce} = -\frac{1}{K} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \bar{\mu}_{i,j} \, (1 - \tilde{o}_{i,j}) \, (1 - \bar{\nu}_{i,j})^{\gamma} \log \bar{\nu}_{i,j} \tag{8}$$

where $\bar{\mu}_{i,j}$ and $\bar{\nu}_{i,j}$ follow Eqs. (2) and (3) with the hard pseudo targets $\xi'_{i,j}$ in place of $\xi_{i,j}$. The scale and offset losses are computed accordingly with the same ignore area $\tilde{o}_{i,j}$.
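The three-way split of pseudo detections by $\tau_{ce} = 0.6$ and $\tau_{ign} = 0.1$ can be sketched as follows. The sketch assumes decoded detections (score plus integer box in output-map coordinates) are already available from a decoding function such as $d(\cdot)$, marks a single center cell per confident box, and clears the ignore map at positive centers; the function name and these rasterization details are assumptions.

```python
def pseudo_center_targets(detections, height, width, tau_ign=0.1, tau_ce=0.6):
    """Split decoded pseudo detections by confidence and rasterize targets.

    detections -- list of (score, (x1, y1, x2, y2)) with integer coordinates
                  on the H' x W' output map
    Returns (xi_prime, o_prime): hard center target and ignore map.
    Detections with score <= tau_ign are dropped, so their area stays a
    negative (background) sample.
    """
    xi_prime = [[0] * width for _ in range(height)]
    o_prime = [[0] * width for _ in range(height)]
    for score, (x1, y1, x2, y2) in detections:
        if score > tau_ce:  # confident -> positive pseudo label at the box center
            cy = min(max((y1 + y2) // 2, 0), height - 1)
            cx = min(max((x1 + x2) // 2, 0), width - 1)
            xi_prime[cy][cx] = 1
        elif score > tau_ign:  # uncertain -> ignore the whole box area
            for i in range(max(y1, 0), min(y2, height - 1) + 1):
                for j in range(max(x1, 0), min(x2, width - 1) + 1):
                    o_prime[i][j] = 1
    # ASSUMPTION: a positive pseudo center is never ignored.
    for i in range(height):
        for j in range(width):
            if xi_prime[i][j]:
                o_prime[i][j] = 0
    return xi_prime, o_prime
```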

ConDA utilizes the predicted distance-based body part segmentation to strengthen clustering, i.e., to further minimize the distance of positive latent representations to the concept vectors. Soft pseudo predictions of the pseudo network for body part segmentation are given by $\tilde{\pi}_{k,i,j}$. We apply the cluster threshold $\tau_{cl}$ to define the pseudo concept masks $\pi'_{k,i,j} = \left[ k = \arg\max_{k'} \tilde{\pi}_{k',i,j} \wedge \tilde{\pi}_{k,i,j} > \tau_{cl} \right]$. We empirically set the threshold $\tau_{cl}$ to 0.8. The unsupervised cluster loss with pseudo concept masks $\pi'_{k,i,j}$, concept vectors $c_k$ and latent representations $z_{i,j}$ of the student network is defined as

$$L^T_{cl} = \frac{1}{\tilde{M}_{cl}} \sum_{k=1}^{N_C} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \pi'_{k,i,j} \lVert c_k - z_{i,j} \rVert^{4} \tag{9}$$

where $\tilde{M}_{cl}$ is the number of pseudo-positive concept pixels.

To avoid degenerate solutions, negative latent representations have to be separated. The binary hard pseudo separation mask $\rho'_{k,i,j}$ is based on the soft pseudo targets $\tilde{\pi}_{k,i,j}$ of the pseudo network and can be formulated as $\rho'_{k,i,j} = \left[ \tilde{\pi}_{k,i,j} < \tau_{sep} \right]$. We empirically set the threshold $\tau_{sep}$ to 0.1. The final unsupervised separation loss is defined as

$$L^T_{sep} = \frac{1}{\tilde{M}_{sep}} \sum_{k=1}^{N_C} \sum_{i=1}^{H'} \sum_{j=1}^{W'} \rho'_{k,i,j} \, \psi_k \left( \delta'_k - \lVert c_k - z_{i,j} \rVert_2^2 \right) \tag{10}$$

where $\tilde{M}_{sep}$ is the number of pseudo-negative concept pixels.
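The two hard pseudo masks used in Eqs. (9) and (10) follow directly from the soft predictions $\tilde{\pi}_{k,i,j}$ and the thresholds $\tau_{cl} = 0.8$ and $\tau_{sep} = 0.1$. A minimal sketch on nested lists of shape `[N_C][H'][W']` (the function name is hypothetical):

```python
def pseudo_concept_masks(soft, tau_cl=0.8, tau_sep=0.1):
    """Hard pseudo masks from soft body-part predictions soft[k][i][j].

    pi'[k][i][j]  = 1 if k is the arg-max concept at (i, j) and its score > tau_cl
    rho'[k][i][j] = 1 if the score of concept k at (i, j) is below tau_sep
    Entries meeting neither condition contribute to neither loss.
    """
    n_c, h, w = len(soft), len(soft[0]), len(soft[0][0])
    pi_p = [[[0] * w for _ in range(h)] for _ in range(n_c)]
    rho_p = [[[0] * w for _ in range(h)] for _ in range(n_c)]
    for i in range(h):
        for j in range(w):
            scores = [soft[k][i][j] for k in range(n_c)]
            best = max(range(n_c), key=scores.__getitem__)  # arg-max concept
            if scores[best] > tau_cl:
                pi_p[best][i][j] = 1                         # confident positive
            for k in range(n_c):
                if scores[k] < tau_sep:
                    rho_p[k][i][j] = 1                       # confident negative for k
    return pi_p, rho_p
```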

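Finally, pseudo targets for the body part segmentation are defined as $\Pi'_{k,i,j} = \left[ k = \arg\max_{k'} \tilde{\Pi}_{k',i,j} \right]$. To efficiently rectify the pseudo labels for negative and positive samples, an ignore area $O'_{k,i,j} = \left[ \tau_{sep} \le \tilde{\Pi}_{k,i,j} \le \tau_{cl} \right]$ is defined. The BPS loss can be formulated as

$$L^T_{seg} = -\frac{1}{\tilde{M}_{seg}} \sum_{k=1}^{N_C} \sum_{i=1}^{H} \sum_{j=1}^{W} (1 - O'_{k,i,j}) \, (1 - S_{k,i,j})^{\gamma} \log(S_{k,i,j}) \tag{11}$$

with $S_{k,i,j}$ as in Eq. (5), using $\Pi'_{k,i,j}$ instead of $\Pi_{k,i,j}$, and where $\tilde{M}_{seg} = \sum_{k=1}^{N_C} \sum_{i=1}^{H} \sum_{j=1}^{W} (1 - O'_{k,i,j})$ gives the number of non-ignored pixels for the body part segmentation.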
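The pseudo segmentation targets $\Pi'$ and the ignore area $O'$ can be computed per pixel from the upsampled soft predictions $\tilde{\Pi}$. A minimal sketch with hypothetical function names, using the thresholds stated above ($\tau_{sep} = 0.1$, $\tau_{cl} = 0.8$):

```python
def pseudo_bps_targets(soft, tau_sep=0.1, tau_cl=0.8):
    """Pseudo BPS targets Pi' (one-hot arg-max) and ignore area O'.

    soft -- upsampled soft predictions soft[k][i][j] of the pseudo network
    O'[k][i][j] = 1 for uncertain scores tau_sep <= score <= tau_cl
    """
    n_c, h, w = len(soft), len(soft[0]), len(soft[0][0])
    target = [[[0] * w for _ in range(h)] for _ in range(n_c)]
    ignore = [[[0] * w for _ in range(h)] for _ in range(n_c)]
    for i in range(h):
        for j in range(w):
            best = max(range(n_c), key=lambda k: soft[k][i][j])
            target[best][i][j] = 1  # one-hot pseudo label
            for k in range(n_c):
                if tau_sep <= soft[k][i][j] <= tau_cl:
                    ignore[k][i][j] = 1  # neither confidently positive nor negative
    return target, ignore


def count_non_ignored(ignore):
    """M_seg (tilde): number of entries with O' = 0."""
    return sum(1 - v for plane in ignore for row in plane for v in row)
```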

It is well known that DNNs show a strong bias towards texture, while shape information is mostly neglected (Geirhos et al., 2018). Due to limited simulation and rendering resources, synthetic data tends to show less variation in shapes (pedestrian posture and perspective) and textures (pedestrian appearance and illumination). To reduce the texture bias and strengthen the shape bias on body parts, we texturize the background and body parts with 50 different textures randomly sampled from the Describable Textures Dataset (DTD) (Cimpoi et al., 2014). Furthermore, we use extensive natural data augmentation techniques to alter brightness, contrast, blur and other properties. Inspired by Geirhos et al. (2018) and Liu et al. (2019), we propose this shape-enforcing data augmentation strategy (SA) for pedestrian detection.
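The texturization step of SA can be sketched as replacing every labeled region with a randomly drawn texture, so that only region shapes (silhouettes and body part outlines) carry information. This is an illustration under assumptions, not the authors' pipeline: textures are assumed to be pre-resized to the image size, each region id (background and every body part) gets one texture, and the photometric augmentations mentioned above are not included.

```python
import random


def texturize_regions(labels, textures, rng=None):
    """Fill each labeled region (background = 0, body parts > 0) with a texture
    chosen at random from `textures`.

    labels   -- H x W nested list of integer region ids
    textures -- list of H x W nested lists of RGB tuples (e.g. DTD crops resized
                to the image size; ASSUMED preprocessing)
    """
    rng = rng or random.Random()
    region_ids = sorted({v for row in labels for v in row})
    choice = {rid: rng.randrange(len(textures)) for rid in region_ids}
    return [
        [textures[choice[labels[i][j]]][i][j] for j in range(len(labels[0]))]
        for i in range(len(labels))
    ]
```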

4 EXPERIMENTS

In our work, we use synthetic data from KI-A and real data from the CityPersons dataset (Zhang et al., 2017) and the Cityscapes-Panoptic-Parts dataset (Meletis et al., 2020) (CS). Results are reported on the validation set of the CityPersons dataset. The domain gap is analyzed based on the performance of oracle training (CS → CS), i.e., supervised training and validation on CS as the target domain. Furthermore, we investigate the following scenarios: source-only training (KI-A → CS), i.e., supervised training on the source domain (KI-A) and validation on the target domain (CS) to analyze generalization; and UDA, i.e., supervised training on the source domain combined with unsupervised training on the target domain, with validation of ConDA on the target domain (CS).

The most common performance metric for PD-DNNs is the log-average miss rate on the reasonable subset (LAMR_r) of the CityPersons dataset (Dollár et al., 2011). Another metric is MR@1FPPI, which describes the miss rate if we accept one false positive per image (Dollár et al., 2009). To evaluate the segmentation performance of body parts, we use the reasonable subset of CityPersons, extend it to the instance segmentation of Cityscapes and further to the body part segmentation provided by Cityscapes-Panoptic-Parts, and define the mean intersection over union (mIoU_r).
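 
Both detection metrics are read off the miss-rate versus FPPI curve. The sketch below follows the usual definition of LAMR (Dollár et al., 2011): the miss rate is sampled at nine FPPI reference points evenly spaced in log-space between $10^{-2}$ and $10^{0}$ and averaged in the log domain. The sampling rule (last operating point with FPPI at or below the reference, 1.0 if none exists) is an assumption modeled on common Caltech-style evaluation code.

```python
import math


def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """LAMR over FPPI references evenly spaced in log-space in [1e-2, 1e0].

    fppi, miss_rate -- detector operating points sorted by increasing FPPI
    """
    refs = [10 ** (-2 + 2 * n / (num_points - 1)) for n in range(num_points)]
    logs = []
    for ref in refs:
        mr = 1.0  # ASSUMED default when no operating point reaches this FPPI
        for f, m in zip(fppi, miss_rate):
            if f <= ref:
                mr = m
        logs.append(math.log(max(mr, 1e-10)))  # guard against log(0)
    return math.exp(sum(logs) / len(logs))


def miss_rate_at_fppi(fppi, miss_rate, target=1.0):
    """MR@1FPPI: miss rate of the last operating point with FPPI <= target."""
    mr = 1.0
    for f, m in zip(fppi, miss_rate):
        if f <= target:
            mr = m
    return mr
```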

4.1 Implementation Details

The following feature extractions are used: MDLA-

UP-34 (Yu et al., 2018; Dai et al., 2017), CSP-

ResNet-50 (Liu et al., 2019) and FPN-ResNet-50

(Zhang et al., 2020). The Adam optimizer with a

learning rate of 1e-4 and a linear warm-up strategy

for 2k iterations is applied. With ConDA, the learn-

ing rate subsequently decreases linearly. We train for

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

656

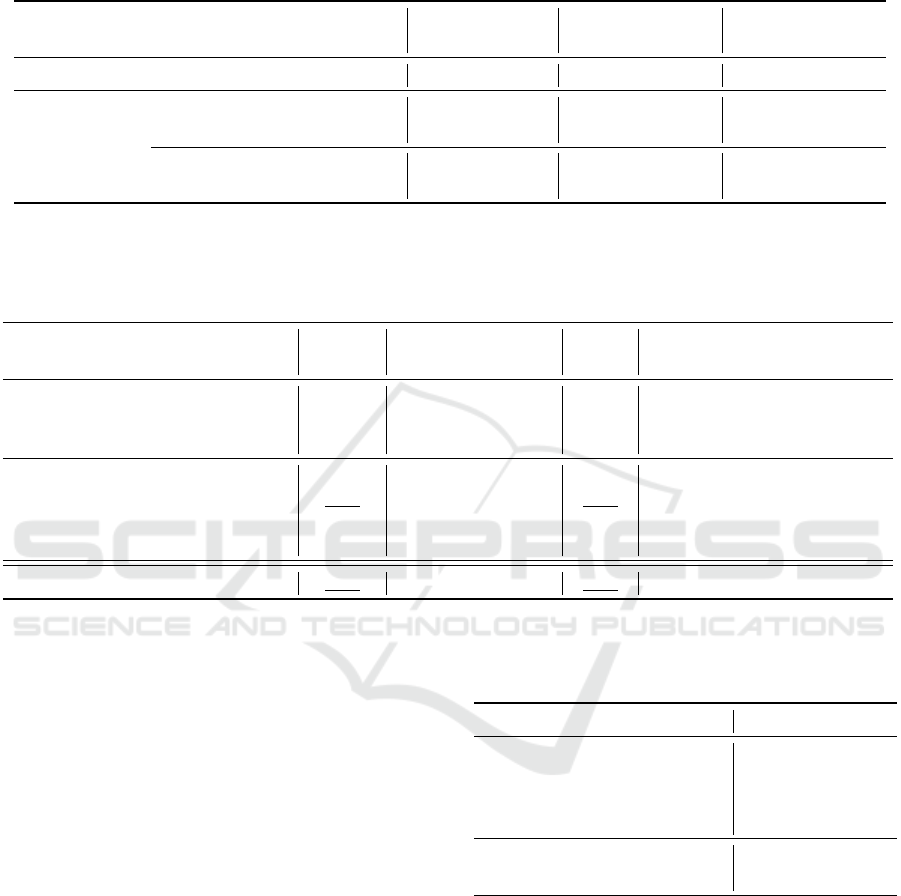

Table 1: Performance of different PD-DNNs and domain adaptation scenarios. The oracle training (CS → CS) of CSO and

CSO+BPS shows competitive performance to state-of-the-art PD-DNNs. Compared to CSO and CSO+BPS, CPD shows

superior generalization for source-only training (KI-A → CS). MDLA-UP-34 is used as feature extractor for all methods.

Prediction Method Transformation Hourglass (HG)

CS → CS KI-A → CS

LAMR

r

mIoU

r

LAMR

r

mIoU

r

2D BB CSO - - 9.6 - 39.6 -

2D BB + BPS

CSO+BPS CM

- 9.0 73.4 36.4 44.9

✓ 10.8 70.8 35.6 48.5

CPD IRP

- 14.2 59.8 41.0 58.5

✓ 13.3 46.1 34.0 62.1

Table 2: Performance for the CityPersons validation dataset considering different domain adaptation scenarios. We can see

that ConDA substantially exceeds the baseline performance (comparing underlined values) by benefiting from CPD. The

MR@1FPPI is shown for different subsets (i.e., reasonable (R), bare (B) and large (L)). MDLA-UP-34 is used as feature

extractor for all methods.

Method Scenario LAMR

r

MR@1FPPI

mIoU

r

IoU

r

R B L Head Torso Arm Leg

CSO

CS → CS

9.6 3.9 5.5 5.5 - - - - -

CSO+BPS 9.0 3.9 2.2 2.3 73.4 75.2 64.6 53.0 74.7

CPD 14.2 4.9 3.3 3.4 59.8 0.0 66.6 63.5 70.0

CSO

KI-A → CS

39.6 23.7 18.5 14.6 - - - - -

CSO+BPS 35.6 21.7 14.3 12.3 48.5 46.4 19.1 20.3 57.9

CPD 34.0 21.5 14.2 12.5 62.1 54.6 51.6 43.8 60.9

CPD (w/ SA+WU) 28.7 13.1 7.5 6.8 62.5 55.2 44.9 48.5 64.2

ConDA UDA 23.0 10.0 5.1 6.0 65.8 57.9 56.0 51.2 64.0

a maximum of 50k iterations on 2 GPUs with a batch

size of 8. The parameters θ

′

of the teacher network are

constantly averaged based on a student-teacher frame-

work (Tarvainen and Valpola, 2017). For inference,

only center points with a confidence score > 0.1 and

bounding boxes with height ≥ 50 pixels are consid-

ered. For post-processing, we apply a Greedy-NMS

with a threshold of 0.5.
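The inference filters and the Greedy-NMS post-processing from the implementation details can be sketched as follows. The sketch assumes that each box carries the confidence of its center point as its score and that boxes are given as continuous `(x1, y1, x2, y2)` coordinates; the function names are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thr=0.5, min_score=0.1, min_height=50.0):
    """Filter by score and box height, then apply greedy NMS.

    Returns indices of the kept boxes, highest score first.
    """
    order = [
        k
        for k in sorted(range(len(boxes)), key=lambda k: scores[k], reverse=True)
        if scores[k] > min_score and boxes[k][3] - boxes[k][1] >= min_height
    ]
    keep = []
    for k in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[k], boxes[m]) <= iou_thr for m in keep):
            keep.append(k)
    return keep
```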

4.2 Results

Since ConDA is a two-stage approach, we first analyze the generalization capabilities of source-only training (KI-A → CS) in terms of LAMR_r and mIoU_r for different PD-DNNs in Table 1. Naive source-only training serves as our baseline for all domain adaptation strategies of ConDA. In the oracle scenario, CSO+BPS (w/o HG) improves LAMR_r by 0.6% absolute compared to CSO. For CSO+BPS (w/o HG), source-only training degrades performance by 27.4% LAMR_r and 28.5% mIoU_r (absolute) compared to oracle training. CPD (w/ HG) shows the best generalization with 34.0% LAMR_r and 62.1% mIoU_r. It outperforms CSO+BPS (w/ HG) by an absolute margin of 1.6% LAMR_r and a large absolute margin of 13.6% mIoU_r. The additional stacked hourglass (HG) is particularly useful for CPD. Due to its better generalization compared to CSO and CSO+BPS, CPD is used for our proposed ConDA approach.

Table 3: Ablation study to validate the generalization of source-only training for CPD (KI-A → CS) with different training strategies and feature extractors.

| Feat. Extr. | SA | WU | LAMR_r | mIoU_r |
|---|---|---|---|---|
| MDLA-UP-34 | - | - | 34.0 | 62.1 |
| MDLA-UP-34 | ✓ | - | 32.0 | 54.8 |
| MDLA-UP-34 | - | ✓ | 39.4 | 57.5 |
| MDLA-UP-34 | ✓ | ✓ | 28.7 | 62.5 |
| FPN-ResNet-50 | ✓ | ✓ | 35.7 | 56.3 |
| CSP-ResNet-50 | ✓ | ✓ | 33.0 | 57.4 |

Table 3 shows the results of the ablation study for different training strategies, complemented by the performance of two comparable feature extractors. Improving the initial performance of CPD is important, since it serves as the starting point for ConDA and has a strong impact on the final performance. SA in combination with a linear warm-up strategy for the learning rate (WU) offers the best performance in terms of LAMR_r and mIoU_r for MDLA-UP-34. However, WU alone increases LAMR_r by 5.4% absolute, indicating strong overfitting to the source domain. WU only contributes in combination with SA, i.e., with extensive data augmentation. With SA and WU, CSP-ResNet-50 and FPN-ResNet-50 reach only 33.0% and 35.7% LAMR_r compared to 28.7% for MDLA-UP-34; thus, the following experiments are performed only with MDLA-UP-34. SA and WU are also applied in the second training stage of ConDA. Figure 2 shows exemplary inference results of ConDA on the CityPersons validation dataset.

Figure 2: Inference results: original image (top row), ground truth bounding boxes in blue, ignored bounding boxes in red and ground truth body part segmentation (middle row), and ConDA predictions (bottom row).

Compared to naive source-only training, Table 2 shows that ConDA achieves an absolute improvement of 16.6% LAMR_r over CSO (39.6% vs. 23.0%), demonstrating successful UDA towards the real CityPersons dataset. ConDA also improves segmentation performance for frequently overlapping and thus difficult body parts such as torso and arm. ConDA substantially reduces MR@1FPPI compared to naive source-only training and misses the oracle performance by only 6.1% absolute on the reasonable subset. As expected, the performance gap is substantially smaller for the easier subsets (i.e., bare and large), where ConDA reaches an absolute MR@1FPPI of only 5.1% and 6.0%, respectively. Hence, ConDA nearly matches the performance of state-of-the-art PD-DNNs on these subsets. Due to the applied metric learning, CPD has well-separated clusters of discriminative features for body parts, leading to better generalization.

5 CONCLUSION

In our work, we propose ConDA, a novel method for domain adaptation for pedestrian detection that complements supervised learning from synthetic data with unsupervised learning from real, unlabeled data. We show that enforcing intra-class concentration and inter-class separation of semantic concepts for pedestrians leads to better generalization. Compared to naive training on synthetic data only, ConDA substantially improves both pedestrian detection and the auxiliary body part segmentation on real data. In conclusion, ConDA is a promising step towards using low-cost synthetic data through domain adaptation for pedestrian detection based on semantic concepts.

ACKNOWLEDGEMENTS

The research leading to these results is funded by the German Federal Ministry for Economic Affairs and Energy within the project “Methoden und Maßnahmen zur Absicherung von KI basierten Wahrnehmungsfunktionen für das automatisierte Fahren (KI-Absicherung)”. The authors would like to thank the consortium for the successful cooperation.

REFERENCES

Chen, C., Li, O., Tao, D., Barnett, A., Rudin, C., and Su,

J. K. (2019). This looks like that: deep learning for

interpretable image recognition. Advances in neural

information processing systems, 32.

Cimpoi, M., Maji, S., Kokkinos, I., Mohamed, S., and

Vedaldi, A. (2014). Describing textures in the wild.

In Proceedings of the IEEE conference on computer

vision and pattern recognition, pages 3606–3613.

Dai, J., Qi, H., Xiong, Y., Li, Y., Zhang, G., Hu, H., and

Wei, Y. (2017). Deformable convolutional networks.

In Proceedings of the IEEE international conference

on computer vision, pages 764–773.

Deng, J., Li, W., Chen, Y., and Duan, L. (2021). Unbiased

mean teacher for cross-domain object detection. In

Proceedings of the IEEE/CVF Conference on Com-

puter Vision and Pattern Recognition, pages 4091–

4101.

Doll

´

ar, P., Wojek, C., Schiele, B., and Perona, P. (2009).

Pedestrian detection: A benchmark. In IEEE Con-

ference on Computer Vision and Pattern Recognition

(CVPR), pages 304–311.

Dollar, P., Wojek, C., Schiele, B., and Perona, P. (2011).

Pedestrian detection: An evaluation of the state of the

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

658

art. IEEE Transactions on Pattern Analysis and Ma-

chine Intelligence, 34(4):743–761.

Feifel, P., Bonarens, F., and K

¨

oster, F. (2021a). Leverag-

ing interpretability: Concept-based pedestrian detec-

tion with deep neural networks. In Computer Science

in Cars Symposium, CSCS ’21. Association for Com-

puting Machinery.

Feifel, P., Bonarens, F., and Koster, F. (2021b). Reevalu-

ating the safety impact of inherent interpretability on

deep neural networks for pedestrian detection. In Pro-

ceedings of the IEEE/CVF Conference on Computer

Vision and Pattern Recognition, pages 29–37.

Feifel, P., Franke, B., Raulf, A., Schwenker, F., Bonarens, F., and Köster, F. (2022). Revisiting the evaluation of deep neural networks for pedestrian detection. In Proceedings of the Workshop on Artificial Intelligence Safety 2022.

Geirhos, R., Rubisch, P., Michaelis, C., Bethge, M., Wichmann, F. A., and Brendel, W. (2018). ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv preprint arXiv:1811.12231.

Ghiasi-Shirazi, K. (2019). Generalizing the convolution operator in convolutional neural networks. Neural Processing Letters, 50(3):2627–2646.

Haselhoff, A., Kronenberger, J., Küppers, F., and Schneider, J. (2021). Towards black-box explainability with Gaussian discriminant knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 21–28.

Hoyer, L., Dai, D., and Van Gool, L. (2022). DAFormer: Improving network architectures and training strategies for domain-adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9924–9935.

Khan, A. H., Munir, M., van Elst, L., and Dengel, A. (2022). F2DNet: Fast focal detection network for pedestrian detection. arXiv preprint arXiv:2203.02331.

Khosla, P., Teterwak, P., Wang, C., Sarna, A., Tian, Y., Isola, P., Maschinot, A., Liu, C., and Krishnan, D. (2020). Supervised contrastive learning. Advances in Neural Information Processing Systems, 33:18661–18673.

Kim, B., Wattenberg, M., Gilmer, J., Cai, C., Wexler, J., Viegas, F., et al. (2018). Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (TCAV). In International Conference on Machine Learning, pages 2668–2677. PMLR.

Koh, P. W., Nguyen, T., Tang, Y. S., Mussmann, S., Pierson, E., Kim, B., and Liang, P. (2020). Concept bottleneck models. In International Conference on Machine Learning, pages 5338–5348. PMLR.

Li, J., Liao, S., Jiang, H., and Shao, L. (2020). Box guided convolution for pedestrian detection. In 28th ACM International Conference on Multimedia, pages 1615–1624.

Li, J., Zhou, P., Xiong, C., and Hoi, S. C. H. (2021). Prototypical contrastive learning of unsupervised representations. In International Conference on Learning Representations (ICLR).

Lin, T.-Y., Goyal, P., Girshick, R., He, K., and Dollár, P. (2017). Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 2980–2988.

Liu, W., Liao, S., Ren, W., Hu, W., and Yu, Y. (2019). High-level semantic feature detection: A new perspective for pedestrian detection. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 5187–5196.

Meletis, P., Wen, X., Lu, C., de Geus, D., and Dubbelman, G. (2020). Cityscapes-Panoptic-Parts and PASCAL-Panoptic-Parts datasets for scene understanding. arXiv preprint arXiv:2004.07944.

Newell, A., Yang, K., and Deng, J. (2016). Stacked hourglass networks for human pose estimation. In European Conference on Computer Vision, pages 483–499. Springer.

Peng, H. and Yu, S. (2021). Beyond softmax loss: Intra-concentration and inter-separability loss for classification. Neurocomputing, 438:155–164.

Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1(5):206–215.

Soviany, P., Ionescu, R. T., Rota, P., and Sebe, N. (2021). Curriculum self-paced learning for cross-domain object detection. Computer Vision and Image Understanding, 204:103166.

Tarvainen, A. and Valpola, H. (2017). Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Advances in Neural Information Processing Systems, 30.

Xie, B., Li, S., Li, M., Liu, C. H., Huang, G., and Wang, G. (2022). SePiCo: Semantic-guided pixel contrast for domain adaptive semantic segmentation. arXiv preprint arXiv:2204.08808.

Yu, F., Wang, D., Shelhamer, E., and Darrell, T. (2018). Deep layer aggregation. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2403–2412.

Zhang, J., Lin, L., Zhu, J., Li, Y., Chen, Y.-c., Hu, Y., and Hoi, C. S. (2020). Attribute-aware pedestrian detection in a crowd. IEEE Transactions on Multimedia.

Zhang, P., Zhang, B., Zhang, T., Chen, D., Wang, Y., and Wen, F. (2021). Prototypical pseudo label denoising and target structure learning for domain adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12414–12424.

Zhang, S., Benenson, R., and Schiele, B. (2017). CityPersons: A diverse dataset for pedestrian detection. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3213–3221.
