Banana Ripeness Level Classification Using a Simple CNN Model Trained with Real and Synthetic Datasets

Luis E. Chuquimarca (1,2,a), Boris X. Vintimilla (1,b) and Sergio A. Velastin (3,4,c)

(1) ESPOL Polytechnic University, ESPOL, CIDIS, Guayaquil, Ecuador

(2) UPSE Santa Elena Peninsula State University, UPSE, FACSISTEL, La Libertad, Ecuador

(3) Queen Mary University of London, London, U.K.

(4) University Carlos III, Madrid, Spain

Keywords:

External-Quality, Inspection, Banana, Maturity, Ripeness, CNN.

Abstract:

The level of ripeness is essential in determining the quality of bananas. To correctly estimate banana maturity, the metrics of international marketing standards need to be considered. However, banana maturity is still assessed manually at the industrial level. CNN models are an attractive tool to solve this problem, but the limited availability of data makes it difficult to train these models reliably. In the state of the art, existing CNN models trained on the available data report acceptable accuracy in identifying banana maturity. Building on this, this work presents the generation of a robust dataset that combines real and synthetic data for different levels of banana ripeness. In addition, it proposes a simple CNN architecture that is trained with synthetic data and then, using transfer learning, adapted to classify real data, thereby determining the maturity level of the banana. The proposed CNN model is compared with several architectures while varying the hyper-parameter configurations and optimizers. The results show that the proposed CNN model reaches a high accuracy of 0.917 and a fast execution time.

1 INTRODUCTION

Nowadays, most nutritionists agree that consuming fruit is essential to a nutritious daily diet, and many people consume several fruits weekly. Markets are the main vendors of fruit and need to offer their customers high-quality produce. Therefore, international markets demand that agro-industries control fruit quality based on international standards (Reid, 1985; Kader, 2002). One of the parameters for fruit quality inspection is the level of ripeness, which is related to the consumer's willingness to buy the product and to the consumption time of some fruits (Wang et al., 2018; Bhargava and Bansal, 2021).

The determination of fruit maturity is carried out manually in the agro-industries. The manual method has several weaknesses: it is time-consuming, labor-intensive, and can lead to inconsistencies in how the personnel in charge determine banana maturity. The rise of machine vision technology, together with the evolution of deep learning techniques, can overcome these problems, potentially being relatively fast, consistent, and accurate. In agriculture, innovative technologies such as artificial vision are used for various tasks, such as fruit detection, fruit classification, and fruit quality determination (apples, bananas, mangoes, strawberries, blueberries, among others) (Naranjo-Torres et al., 2020). For fruit quality inspection, international standards consider three essential aspects: colorimetry (maturity), geometry (shape and size), and defects (texture). This work focuses on colorimetry, which is directly related to maturity; that is, the maturity level can be identified from the fruit's color (Tripathi and Maktedar, 2021; Sun et al., 2021; Cao et al., 2021; Naik, 2019). For example, according to the U.S. Department of Agriculture (USDA), banana ripeness has seven levels. Bananas are one of the most consumed fruits worldwide due to their good taste and high nutrient content.

(a) https://orcid.org/0000-0003-3296-4309

(b) https://orcid.org/0000-0001-8904-0209

(c) https://orcid.org/0000-0001-6775-7137

Convolutional Neural Network (CNN) models are deep learning techniques that have been applied in computer vision to identify banana ripeness. Some works consider seven banana maturity levels, whereas others use only four because the datasets contain few images per level (Saragih and Emanuel, 2021).

Chuquimarca, L., Vintimilla, B. and Velastin, S.

Banana Ripeness Level Classification Using a Simple CNN Model Trained with Real and Synthetic Datasets.

DOI: 10.5220/0011654600003417

In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 5: VISAPP, pages 536-543

ISBN: 978-989-758-634-7; ISSN: 2184-4321

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

Below is an overview of the state of the art on CNN models applied to banana maturity:

(Zhu and Spachos, 2021) compare a machine learning technique called Support Vector Machine (SVM) with a YOLOv3 model trained on a dataset containing few banana images, which results in an inaccurate model. Moreover, the YOLOv3 model considers only two maturity levels (semi-ripe and well-ripe) and obtains an accuracy of 90.16%. In that work, the maturity level of a banana depends on the number of small black areas detected in its peel texture: the more black dots found, the riper the banana. However, the approach does not take international standard recommendations into account.

(Saragih and Emanuel, 2021) evaluate only two CNN models, trained for the same number of epochs but with a different number of initial layers, to identify four banana maturity levels (unripe/green, yellowish green, semi-ripe, and overripe). The MobileNetV2 and NASNetMobile models achieved accuracies of 96.18% and 90.84%, respectively. However, the dataset used for training and validation is small, so the models are likely to be inaccurate. Also, the work only evaluates existing models.

(Ramadhan et al.) used a deep CNN to identify four maturity levels of Cavendish bananas. Banana images are segmented using YOLO and then fed to a VGG16 model trained with two different optimizers: Stochastic Gradient Descent (SGD) and Adam. The model optimized with SGD achieves a higher accuracy (94.12%) than the one optimized with Adam (93.25%). In that study, the dataset contains few images, and more CNN models could have been evaluated.

(Zhang et al., 2018) designed their own CNN model for banana classification considering seven maturity levels. A dataset of 17,312 banana images was generated to train and test the model, which achieved an accuracy of 95.6%. However, the research focuses on a single CNN model and does not evaluate the proposed model against existing models.

The review of the state of the art shows that one of the main problems in measuring banana maturity levels is that there are no public image datasets large enough to train CNN models robustly. Dataset generation can therefore include not only real images but also synthetic images produced with available software tools, for example Unreal Engine, Unity3D, or Dall·e mini.

In this article, a simple, lightweight CNN model is proposed to classify four levels of banana maturity. Furthermore, this work generates a robust dataset for the four banana maturity levels by combining real and synthetic images. Finally, the proposed model, trained on the generated dataset, is evaluated against existing CNN models under specific hyper-parameter settings. The results of this evaluation show that the proposed CNN model achieves higher accuracy than the existing CNN models evaluated, with a much smaller model size.

This paper is organized as follows. Section 2 describes the proposed methodology. Section 3 presents the results of banana maturity inspection using the proposed model and compares it with existing state-of-the-art CNN models. Finally, Section 4 gives the conclusions.

2 PROPOSED METHODOLOGY

The contributions of this paper are:

• Since there are currently no sufficiently large public datasets covering different banana maturity levels, an extensive image dataset combining synthetic and real data is generated.

• A custom CNN model for measuring banana maturity levels is proposed; the model is simple but achieves good results.

• The proposed CNN model and the generated datasets are evaluated against existing CNN models, using various hyper-parameter configurations.

The dataset used by the CNN models is generated in two parts: first the synthetic dataset, and then the real dataset. Note that the synthetic dataset is much larger than the real dataset.

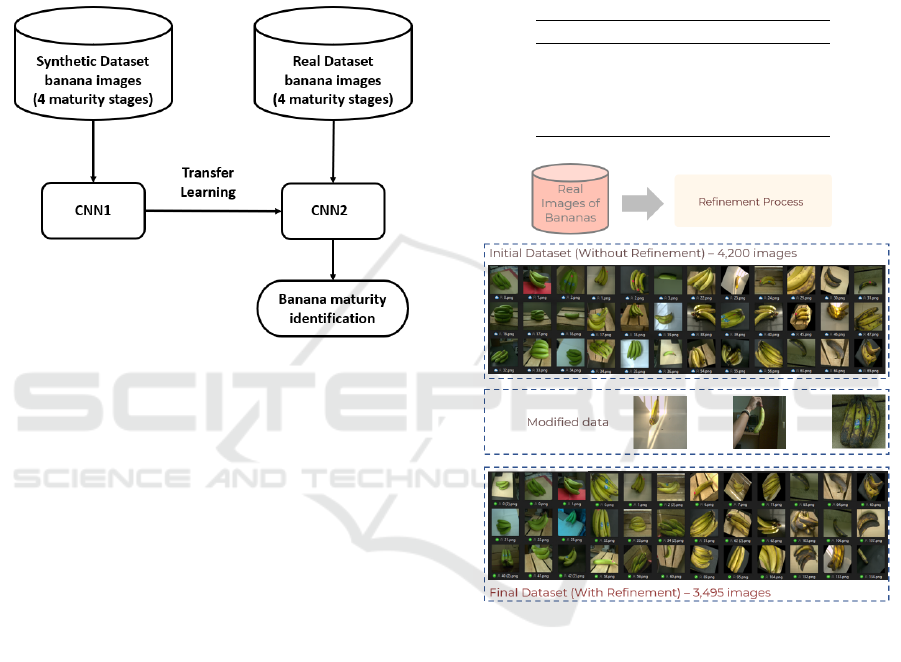

The proposed CNN model has two components. The first is the design and implementation of a simple CNN model, called CNN1, which is trained with the synthetic dataset to produce a set of weights. The second applies transfer learning to the same CNN architecture, initialized with the weights obtained from CNN1; the resulting CNN2 model is then trained with a dataset of real images, yielding a model better adjusted to measuring real banana maturity. Finally, the CNN model is evaluated by comparing it with existing CNN models: InceptionV3, ResNet50, Inception-ResNetV2, and VGG19. This evaluation considers the configuration of hyper-parameters and the choice of optimizers. Figure 1 summarizes the methodology.

Figure 1: Banana maturity identification process.

2.1 Dataset Generation

For this work, two types of datasets are generated, one real and one synthetic, because of the limited number of real images available in public datasets and the time required to build real image datasets. Both the real and the synthetic banana image datasets are publicly available at https://github.com/luischuquim/BananaRipeness.

2.1.1 Real Data

The real dataset consists of 3,495 images of Cavendish bananas, taken in a laboratory with a climate control system kept between 15°C and 18°C for 28 days (the approximate duration of the ripening period of this type of banana). Four banana maturity levels were considered for this work, so the banana passed from one maturity level to the next roughly every week, as indicated in Table 1. Bananas actually have seven maturity levels, but the number of images that can be acquired per level is low; for this reason, the levels were grouped into four to obtain more images per maturity level. Images were acquired daily throughout the ripening cycle, at 150 images per day, for a total of 4,200 images. These were then refined by removing images with noise, low quality, poor lighting, a badly positioned banana, or occlusions (see Figure 2), so the number of images per maturity level varies. In addition, some images from the last days of the last maturity level show rotting bananas and were discarded (Ramadhan et al.).

Table 1: Banana maturity levels per day.

| Duration | Level of maturity |
|---|---|
| 1 - 6 days | A |
| 7 - 14 days | B |
| 15 - 22 days | C |
| 23 - 28 days | D |
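As an illustration, the grouping of acquisition days into maturity levels in Table 1 can be written as a small labeling function. This is a minimal sketch, not the authors' code: the names `maturity_level` and `raw_images_per_level` are hypothetical, and the script only replays the stated acquisition rate of 150 images per day over 28 days.

```python
# Day ranges from Table 1 (inclusive), mapped to the four maturity labels.
LEVEL_RANGES = [
    (1, 6, "A"),
    (7, 14, "B"),
    (15, 22, "C"),
    (23, 28, "D"),
]

IMAGES_PER_DAY = 150  # daily acquisition rate stated in the text
RIPENING_DAYS = 28    # length of the ripening period


def maturity_level(day: int) -> str:
    """Return the maturity label (A-D) for an acquisition day in 1..28."""
    for first, last, label in LEVEL_RANGES:
        if first <= day <= last:
            return label
    raise ValueError(f"day {day} is outside the 1-{RIPENING_DAYS} ripening period")


def raw_images_per_level() -> dict:
    """Count raw images per level before refinement, at 150 images per day."""
    counts = {}
    for day in range(1, RIPENING_DAYS + 1):
        label = maturity_level(day)
        counts[label] = counts.get(label, 0) + IMAGES_PER_DAY
    return counts


if __name__ == "__main__":
    counts = raw_images_per_level()
    print(counts)
    print("total raw images:", sum(counts.values()))
```

Under these assumptions the raw counts come out as 900, 1,200, 1,200 and 900 images for levels A to D (4,200 in total). The real counts in Table 2 differ (for example, 1,429 for level A) because of the refinement and augmentation steps described in this section.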

Figure 2: Real Dataset Refinement.

This procedure is costly and tedious because staff must be dedicated to data acquisition for the whole time the bananas ripen. In addition, the conditions in which the bananas are kept, such as temperature, must be controlled. Care must also be taken when moving the bananas, because handling can bruise them. Thus, obtaining the large number of images that CNN models require would mean repeating the acquisition process multiple times, leading to high costs and extensive staff time.

The images were acquired under different lighting conditions and backgrounds. The real dataset was then refined, taking into account aspects such as whether the bananas are at the corresponding maturity levels, and eliminating images in which the banana is not clearly defined. Finally, data augmentation techniques such as rotation were applied to increase the amount of data. The final real refined dataset contains 3,495 images.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications
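The text names rotation as the augmentation technique but does not give the angles. The sketch below assumes rotations by multiples of 90 degrees, which keep a square 224x224 image the same size and need no interpolation; images are modeled as nested Python lists (one channel) purely for illustration, and the function names are hypothetical.

```python
from typing import List, Sequence

Image = List[List[int]]  # single-channel image as rows of pixel values (illustrative)


def rotate90(image: Sequence[Sequence[int]]) -> Image:
    """Rotate a 2D image 90 degrees clockwise."""
    # Reversing the row order and then transposing gives a clockwise rotation.
    return [list(row) for row in zip(*image[::-1])]


def rotation_augment(image: Sequence[Sequence[int]]) -> List[Image]:
    """Return the image together with its 90, 180 and 270 degree rotations."""
    variants: List[Image] = [[list(row) for row in image]]
    for _ in range(3):
        variants.append(rotate90(variants[-1]))
    return variants


if __name__ == "__main__":
    tiny = [[1, 2],
            [3, 4]]
    for variant in rotation_augment(tiny):
        print(variant)
```

Each original image yields four variants; rotating the last variant once more returns the original image.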

Technological tools currently exist that allow the generation of synthetic datasets. This work explores one such tool, Unreal Engine; other synthetic image generation tools include Unity3D, CARLA, and Dall·e mini (Ivanovs et al., 2022; Deiseroth et al., 2022).

2.1.2 Synthetic Data

Synthetic datasets are an important complement when training CNN models, because large numbers of synthetic images can be generated easily and at low cost. In addition, several CNN models in the literature use domain adaptation and transfer learning techniques with synthetic datasets (Charco et al., 2021).

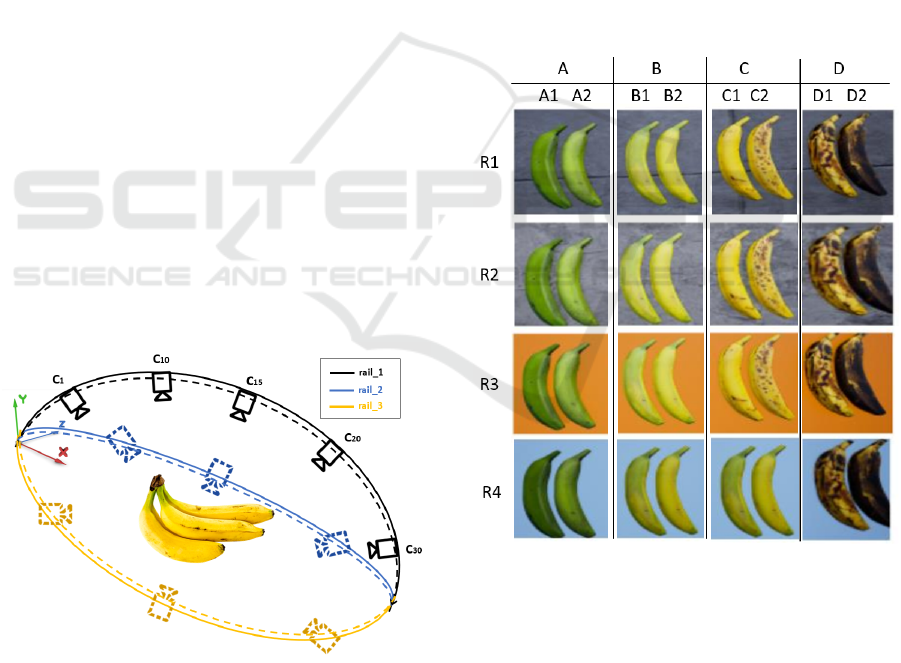

This section describes the process of generating the synthetic banana image dataset using a 3D-modeled banana. Unreal Engine is used to create a virtual scenario from which the synthetic data are generated. The virtual environment contains three rails (rail-1, rail-2, and rail-3) and cameras mounted on each rail at different positions and angles (positions C1 - C30), which allow the acquisition of synthetic 3D banana images, as shown in Figure 3. The appearance of the artificial banana at each ripeness level is created using textures taken from the real images.

Figure 3: Virtual scenario for the generation of the synthetic images using Unreal Engine.

Various Unreal Engine components are used in the synthetic dataset generation process, such as Camera Rig Rail (rails), Cine Camera Actor (camera), Level Sequence (sequence), HDRIBackdrop (sky and light), and Material (colors and textures). The camera is fixed to the rail at different positions to capture the synthetic images (see Figure 3). The synthetic images have the same size as the real images (224x224 pixels). Since the camera's "film back" is configured in millimeters, it must be set proportionally to the image size in pixels (1 px = 0.26458333 mm). For each camera sweep, 30 images are taken on each rail (positions C1 - C30 in Figure 3).
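The film back conversion above is simple arithmetic: the factor 0.26458333 mm per pixel equals 25.4/96, the size of one pixel at 96 dpi. A minimal sketch, assuming the film back is sized so that its millimetre dimensions correspond to the 224x224 output image at that factor (the helper name `film_back_mm` is hypothetical):

```python
MM_PER_PX = 0.26458333  # factor given in the text; equals 25.4 mm / 96 px (96 dpi)


def film_back_mm(width_px: int, height_px: int, mm_per_px: float = MM_PER_PX):
    """Return the (width, height) of the camera film back in millimetres."""
    return width_px * mm_per_px, height_px * mm_per_px


if __name__ == "__main__":
    width_mm, height_mm = film_back_mm(224, 224)
    print(f"film back: {width_mm:.2f} mm x {height_mm:.2f} mm")
```

For a 224x224 image this gives a film back of about 59.27 mm by 59.27 mm.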

Once the virtual scenario is ready, four banana maturity levels are considered for the acquisition of synthetic images, as indicated in Figure 4 (labels A, B, C, and D). Additionally, two sublevels are established per maturity level and labeled A1, A2, B1, B2, C1, C2, D1, and D2. In this way, eight colorations are used, as shown in Figure 4, by modifying the tonality curves and adding spots at maturity levels C and D. This required modifying the banana texture with Adobe Photoshop CS6.

Figure 4: Synthetic images of banana maturity levels using different backgrounds.

To add further variability to the virtual scenery, eight different backgrounds were used to capture the synthetic images: four plain colors (orange, purple, brown, and light blue) and four materials that come by default with Unreal Engine (Asset Platform, Basic Wall, Concrete Tiles (R1), and Rock Marble (R2)) (see Figure 4). For the last sublevel (D2), the "Concrete Tiles" background was changed to "Ceramic Tile" and "Rock Marble" to "Rock SandStone", so that the banana could still be distinguished from the background. Considering all combinations of the proposed scenarios, a total of 161,280 synthetic images were generated, approximately 46 times the 3,495 images of the real dataset. Table 2 summarizes the number of real and synthetic images per maturity level.

Table 2: Number of images in the banana dataset.

| Banana maturity level | Number of synthetic images | Number of real images |
|---|---|---|
| Level A | 40,320 | 1,429 |
| Level B | 40,320 | 815 |
| Level C | 40,320 | 559 |
| Level D | 40,320 | 692 |
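The totals quoted in the text can be checked directly from Table 2. The short script below uses only the table's values; it also prints the share of each level in the real dataset, which makes the class imbalance mentioned in the conclusions visible.

```python
# Per-level image counts copied from Table 2.
SYNTHETIC = {"A": 40_320, "B": 40_320, "C": 40_320, "D": 40_320}
REAL = {"A": 1_429, "B": 815, "C": 559, "D": 692}

total_synthetic = sum(SYNTHETIC.values())  # 161,280
total_real = sum(REAL.values())            # 3,495
ratio = total_synthetic / total_real       # about 46.1

# Share of each level in the real dataset (the synthetic set is balanced).
real_share = {level: count / total_real for level, count in REAL.items()}

print(f"synthetic={total_synthetic:,} real={total_real:,} ratio={ratio:.1f}")
for level, share in real_share.items():
    print(f"level {level}: {share:.1%} of real images")
```

Level A alone accounts for roughly 41% of the real images, while level C accounts for about 16%.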

Before any CNN is fed with an image dataset, the RGB images must be normalized to obtain good results and to speed up the computation (Sola and Sevilla, 1997). In addition, the image sizes must be standardized, a batch size defined, and the categorical labels encoded as numbers. These preprocessing steps are applied to both the real and the synthetic image datasets.
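The preprocessing steps listed above (normalization, batching, and label encoding) can be sketched in plain Python as follows. Assumptions: pixel values are scaled to [0, 1] by dividing by 255 (the text does not state which normalization was used), the synthetic sublevels (A1 to D2) are collapsed onto the four classes, and labels follow the order given in Section 3 (0: A, 1: B, 2: C, 3: D). All function names are illustrative.

```python
from typing import Iterator, List, Sequence

CLASSES = ["A", "B", "C", "D"]  # label order used in Section 3 (0-3)
CLASS_INDEX = {name: i for i, name in enumerate(CLASSES)}


def normalize_pixels(pixels: Sequence[int]) -> List[float]:
    """Scale 8-bit RGB values from [0, 255] to [0.0, 1.0]."""
    return [p / 255.0 for p in pixels]


def encode_label(label: str) -> int:
    """Map a maturity label or synthetic sublevel (e.g. 'C2') to its class index."""
    return CLASS_INDEX[label[0]]  # the sublevel digit is dropped: C1 and C2 both map to C


def one_hot(index: int, num_classes: int = len(CLASSES)) -> List[int]:
    """One-hot vector for a class index, as used with a softmax output."""
    vec = [0] * num_classes
    vec[index] = 1
    return vec


def batches(items: Sequence, batch_size: int) -> Iterator[Sequence]:
    """Yield consecutive batches; the last one may be smaller."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]
```

With a batch size of 50, as in Table 4, 120 samples would be split into batches of 50, 50 and 20.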

2.2 Description of the Proposed Model

The proposed model (CIDIS) consists of two convolution layers followed by a max pooling layer. This configuration is repeated three times, with rectified linear units (ReLU) in the hidden layers, and is followed by fully connected layers. The model receives as input images of 224x224 pixels with a depth of three (the RGB color channels) and has four outputs, one for each maturity level.
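The description above fixes the block structure (three blocks of two convolutions plus one max pooling) and the input and output sizes, but not the kernel sizes or filter counts. The sketch below traces the feature-map shapes under stated assumptions: 3x3 convolutions with "same" padding and stride 1, 2x2 max pooling with stride 2, and hypothetical filter counts of 32, 64 and 128. The final fully connected layer would then map the flattened features to the four maturity classes.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def conv_same(shape: Shape, filters: int) -> Shape:
    """Convolution with 'same' padding and stride 1: spatial size is unchanged."""
    h, w, _ = shape
    return (h, w, filters)


def max_pool(shape: Shape, pool: int = 2) -> Shape:
    """Non-overlapping max pooling (stride equal to the pool size, no padding)."""
    h, w, c = shape
    return (h // pool, w // pool, c)


def cidis_feature_shapes(input_shape: Shape = (224, 224, 3),
                         filters_per_block: Tuple[int, ...] = (32, 64, 128)) -> List[Shape]:
    """Trace shapes through three [conv, conv, max-pool] blocks.

    The filter counts are hypothetical; the paper does not state them.
    """
    shapes = [input_shape]
    shape = input_shape
    for filters in filters_per_block:
        shape = conv_same(shape, filters)  # first convolution (followed by ReLU)
        shape = conv_same(shape, filters)  # second convolution (followed by ReLU)
        shape = max_pool(shape)            # halves height and width
        shapes.append(shape)
    return shapes


if __name__ == "__main__":
    shapes = cidis_feature_shapes()
    h, w, c = shapes[-1]
    print(shapes)
    print("flattened features fed to the fully connected layers:", h * w * c)
```

Under these assumptions the spatial size halves after each block (224, 112, 56, 28), so the flattened vector has 28 x 28 x 128 = 100,352 features.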

Next, transfer learning is applied using the CIDIS model trained with the synthetic image dataset, whose architecture and layer weights have been stored. The stored model is loaded into a new instance of the same network, transferring all the weight matrices learned from the synthetic images. The last layers of the network (fully connected) are then removed so that new ones can be trained with the selected optimizer. Finally, the first layers (convolutional and pooling layers) are frozen so that the learned knowledge is not modified, and only the fully connected layers are trained.
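The freezing procedure can be illustrated without committing to a particular framework. The sketch below models layers as plain records (the `Layer` class and all parameter counts are hypothetical) and shows the three steps described above: copy the learned convolution and pooling layers, drop the old fully connected layers, and mark only the new head as trainable. In Keras, for example, the equivalent step is setting `layer.trainable = False` on the reused layers before compiling the model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Layer:
    """Framework-free stand-in for a network layer (illustrative only)."""
    name: str
    kind: str                 # "conv", "pool" or "dense"
    num_params: int
    trainable: bool = True
    weights: List[float] = field(default_factory=list)  # placeholder for learned values


def transfer(source: List[Layer], new_head: List[Layer]) -> List[Layer]:
    """Reuse and freeze the feature extractor of `source`, then attach a new trainable head."""
    # Keep the convolution and pooling layers, copying their learned weights and freezing them.
    backbone = [
        Layer(layer.name, layer.kind, layer.num_params, trainable=False,
              weights=list(layer.weights))
        for layer in source
        if layer.kind in ("conv", "pool")
    ]
    # The source's fully connected layers are dropped; the new head starts trainable.
    head = [Layer(layer.name, layer.kind, layer.num_params, trainable=True)
            for layer in new_head]
    return backbone + head


def trainable_params(model: List[Layer]) -> int:
    """Number of parameters an optimizer would update."""
    return sum(layer.num_params for layer in model if layer.trainable)


if __name__ == "__main__":
    # Hypothetical CNN1 trained on synthetic data (parameter counts are made up).
    cnn1 = [
        Layer("conv1", "conv", 896, weights=[0.1, 0.2]),
        Layer("pool1", "pool", 0),
        Layer("fc1", "dense", 1000),
        Layer("out", "dense", 40),
    ]
    cnn2 = transfer(cnn1, [Layer("fc1_new", "dense", 1000), Layer("out_new", "dense", 40)])
    print([(layer.name, layer.trainable) for layer in cnn2])
    print("trainable parameters:", trainable_params(cnn2))
```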

Once transfer learning has been applied, the CIDIS model is trained with the refined real image dataset. For this training, new fully connected layers are added, and the Adagrad optimizer is used because the real dataset is considerably smaller than the synthetic one. This yields the CNN2 model.

It is important to mention that training the CNN1 model directly on the real image dataset gave lower accuracy than the CNN2 model, which was initialized through transfer learning with the CNN1 weights learned from the synthetic dataset. The CNN2 model is optimized by changing the learning rate, adding dropout layers, changing the number of epochs, changing the batch size, and choosing between the two proposed optimizers (Nadam and Adagrad).

During the training of the CNN2 model, the Nadam and Adagrad optimizers were used. Nadam (Nesterov-accelerated adaptive moment estimation) combines ideas from Adam, a computationally efficient stochastic gradient descent method, with NAG (Nesterov accelerated gradient), both of which suit large datasets (Dozat, 2016). Adagrad, in contrast, is an adaptive algorithm that scales each parameter's learning rate by the accumulated history of its squared gradients, so the effective step size shrinks as training progresses; it is often used with small datasets. Both optimizers converged quickly and efficiently on their respective datasets.
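For reference, the textbook Adagrad rule keeps, for each parameter, a running sum of squared gradients G ← G + g² and updates w ← w − η·g / (√G + ε), so the effective step size can only shrink over time. The sketch below implements that rule on plain Python lists; it is not the framework implementation the authors used (library versions such as Keras' `Adagrad` also start the accumulator at a small nonzero value), and the default learning rate of 0.001 is simply taken from the best configuration in Table 4.

```python
import math
from typing import List


def adagrad_step(params: List[float], grads: List[float], accum: List[float],
                 lr: float = 0.001, eps: float = 1e-7) -> None:
    """Apply one Adagrad update in place.

    accum[i] holds the running sum of squared gradients for params[i]; the
    step for each parameter is lr * g / (sqrt(accum[i]) + eps), so it shrinks
    as that parameter's gradient history grows.
    """
    for i, g in enumerate(grads):
        accum[i] += g * g
        params[i] -= lr * g / (math.sqrt(accum[i]) + eps)


if __name__ == "__main__":
    # Toy problem: minimise f(w) = w**2 starting from w = 1.
    w, acc = [1.0], [0.0]
    for _ in range(100):
        adagrad_step(w, [2.0 * w[0]], acc, lr=0.1)
    print(f"w after 100 steps: {w[0]:.4f}")
```

With a constant gradient the k-th step is lr/√k, which illustrates why Adagrad's steps become smaller as training proceeds.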

Dropout layers are also added to reduce overfitting. By modifying the hyper-parameter values, it is possible to determine which ones give the best results and to build a final robust model, ready to predict banana maturity levels. Finally, this optimized model is evaluated with the real image dataset.
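Selecting the final configuration amounts to comparing the evaluated runs and keeping the best one. As a sketch, the snippet below encodes the six CIDIS configurations reported in Table 4 (the `Run` record and its field names are ours) and picks the one with the highest accuracy. In general such a choice should be made on the validation split rather than the test split, so that the reported test accuracy stays unbiased; the text does not state which split Table 4 uses.

```python
from typing import List, NamedTuple


class Run(NamedTuple):
    optimizer: str
    dropout_layers: int
    dropout_rate: float
    learning_rate: float
    batch_size: int
    accuracy: float


# Configurations and accuracies reported in Table 4 for the CIDIS (CNN2) model.
RUNS: List[Run] = [
    Run("Nadam", 2, 0.2, 0.001, 50, 0.881),
    Run("Nadam", 1, 0.2, 0.001, 50, 0.891),
    Run("Adagrad", 2, 0.2, 0.01, 50, 0.904),
    Run("Adagrad", 1, 0.2, 0.001, 50, 0.917),
    Run("Adam", 2, 0.2, 0.001, 50, 0.916),
    Run("Adam", 1, 0.2, 0.001, 50, 0.906),
]

# Keep the configuration with the highest reported accuracy.
best = max(RUNS, key=lambda run: run.accuracy)
print(best)
```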

2.3 Evaluation of the Proposed Model

To evaluate the proposed model, a literature review was carried out to select the CNN models that previously performed best in identifying fruit maturity: InceptionV3, ResNet50, Inception-ResNetV2, and VGG19, listed in ascending order of the number of trainable parameters (Faisal et al., 2020; Behera et al., 2021; Mohapatra et al., 2022).

In the reviewed literature, VGG19 shows high performance, high accuracy, and a considerably low training time (lower than that of Inception-ResNetV2) (Behera et al., 2021).

ResNet models use skip connections that bypass two or three layers, which mitigates the vanishing gradient problem. This study uses the 50-layer ResNet-50. In (Helwan et al., 2021), transfer learning and residual learning are applied to optimize the network parameters and the development of a banana sorting system.

The Inception-ResNetV2 model has 164 layers. It was selected because it obtained a lower loss than other Inception models in the state of the art (Szegedy et al., 2017). This model combines Inception modules with residual connections (He et al., 2016). In addition, Inception models allow more efficient computation and deeper networks through dimensionality reduction with stacked 1x1 convolutions, which reduces the consumption of computational resources and helps avoid overfitting (Szegedy et al., 2015). The InceptionV3 model reduces computational cost, being more efficient than the VGGNet and InceptionV1 models (Kurama, 2020).

The results of evaluating the proposed CNN model against the selected CNN models are presented in Section 3.

3 RESULTS

This section presents and analyzes the results obtained with the generated synthetic banana images and with the refined real image dataset. It also presents the results of training the selected CNN models, as well as of applying transfer learning with the final optimizations to the proposed CIDIS model. In all cases, the data are split into 60% training, 20% test, and 20% validation.
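A 60/20/20 split can be made reproducible by shuffling with a fixed seed. The sketch below is one way to do it (the function name and seed are hypothetical); it uses integer arithmetic for the subset sizes, so the 3,495 real images split into 2,097 training, 699 test and 699 validation images. Because the real dataset is imbalanced (Table 2), a per-class (stratified) version of the same split would keep the level proportions equal across subsets; the paper does not say which variant was used.

```python
import random
from typing import List, Sequence, Tuple


def split_60_20_20(items: Sequence, seed: int = 0) -> Tuple[List, List, List]:
    """Shuffle items and split them into 60% train, 20% test and 20% validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a reproducible split
    n_train = len(shuffled) * 60 // 100
    n_test = len(shuffled) * 20 // 100
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    val = shuffled[n_train + n_test:]      # remainder (absorbs any rounding) is validation
    return train, test, val


if __name__ == "__main__":
    train, test, val = split_60_20_20(range(3495))
    print(len(train), len(test), len(val))
```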

First, the selected CNN models are evaluated without transfer learning, trained only with the real dataset. Their results are compared with those of the proposed CIDIS model under the same conditions; Table 3 shows these results. The evaluation metric was accuracy, i.e., the fraction of predictions that match the true labels (0: level A, 1: level B, 2: level C, 3: level D). The time a CNN model takes to classify an image was also measured, as well as its total memory size. Comparing the accuracy values and average classification times in Table 3, CIDIS offers the best overall performance: it obtains the highest accuracy with by far the smallest model, although InceptionV3 and ResNet-50 classify an image faster. It was therefore chosen as CNN1. These results were the starting point for this project, establishing a baseline of what can be achieved with the real dataset alone, without refinement or transfer learning. They also serve to compare the accuracy of CNN1 and CNN2 and to verify whether transfer learning yields viable results.
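The two measures used in Table 3 can be sketched as follows: accuracy as the fraction of predictions equal to the true labels, and the average per-image classification time as wall-clock time divided by the number of images. The `classify` callable is a hypothetical stand-in for a trained model; the paper does not describe its timing setup (hardware, batch size, warm-up), so absolute times from this sketch are not comparable with Table 3.

```python
import time
from typing import Callable, Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions equal to the true labels (0: A, 1: B, 2: C, 3: D)."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("predictions and labels must be non-empty and equally long")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def average_time_ms(classify: Callable[[object], int], images: Sequence) -> float:
    """Mean wall-clock time, in milliseconds, to classify one image."""
    start = time.perf_counter()
    for image in images:
        classify(image)
    return (time.perf_counter() - start) * 1000.0 / len(images)


if __name__ == "__main__":
    print(accuracy([0, 1, 2, 3, 3], [0, 1, 2, 2, 3]))  # 4 of 5 correct
```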

The CIDIS model was evaluated with the real banana images, obtaining an accuracy of 0.872. Then, the CIDIS model (as CNN1) was trained on the synthetic data, reaching a perfect accuracy of 1.0. This ideal result should not be taken as a good reference for the model, because the synthetic banana images, despite their different objects, angles, and backgrounds, are very similar to each other, so the model easily predicts their maturity levels. Hence, although the CIDIS model accurately predicts synthetic banana images, it must be trained with real banana images to generalize better. Transfer learning is therefore applied to obtain the CIDIS (CNN2) model, starting from the parameters and hyper-parameters of CNN1 pre-trained on the synthetic dataset, and training it with the refined real dataset. For this training, new fully connected layers were added and the Adagrad optimizer was selected, because the real dataset is considerably smaller than the synthetic dataset.

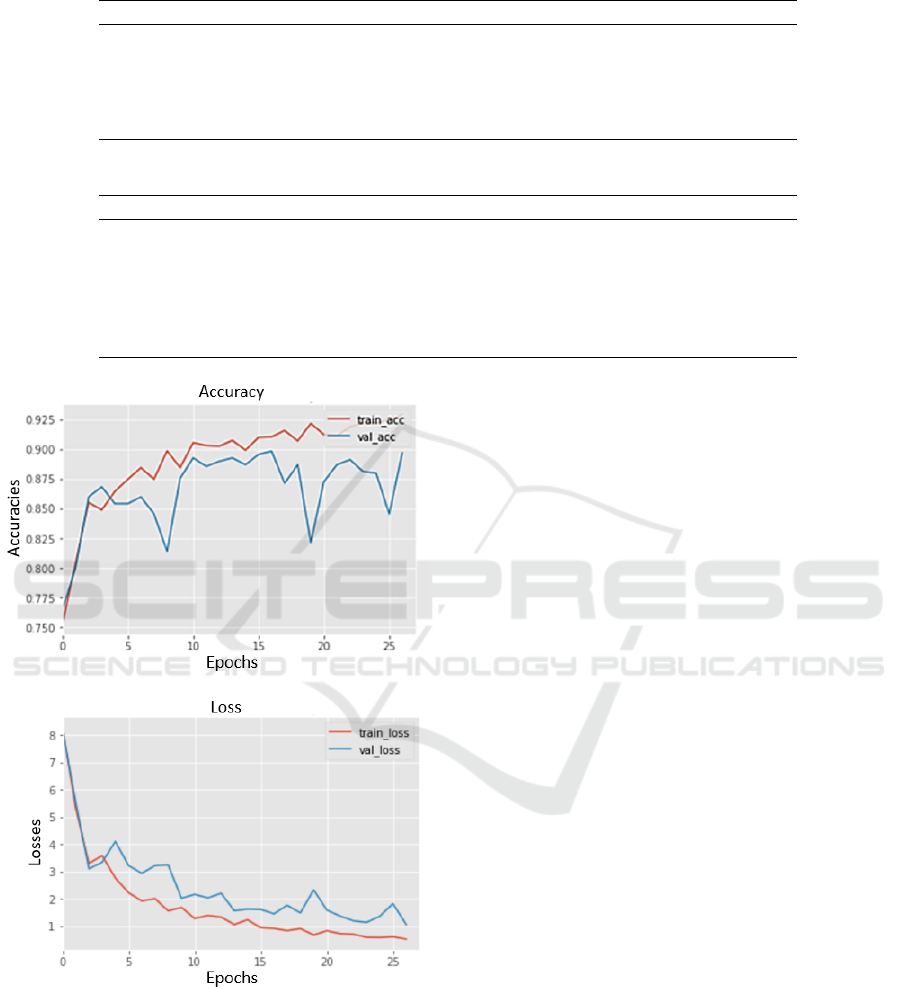

Figure 5 plots the accuracy and loss curves of the CIDIS model, trained as CNN2, on the real dataset.

In the accuracy graph, the model starts learning with an accuracy of 0.74 and keeps improving until it stabilizes, when the validation accuracy stops improving, reaching an accuracy of 0.917. The loss decreases until it stabilizes, without overfitting. Starting from the CNN2 model, further optimizations are applied: varying the hyper-parameters (batch size, learning rate, epochs), changing optimizers, and adding dropout layers. The results are shown in Table 4.
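The training behaviour described above, where learning stops once the validation accuracy no longer improves, matches patience-based early stopping, although the text does not name the mechanism or its settings. A framework-free sketch follows; the `patience` and `min_delta` values are assumptions, and Keras offers the same idea through its `EarlyStopping` callback with `monitor="val_accuracy"`.

```python
from typing import Sequence


def early_stopping_epoch(val_accuracy: Sequence[float], patience: int = 5,
                         min_delta: float = 0.0) -> int:
    """Return the index of the best epoch under patience-based early stopping.

    Training stops once `patience` consecutive epochs fail to improve the best
    validation accuracy by more than `min_delta`; the best epoch seen is returned.
    """
    best_epoch, best_value, waited = 0, float("-inf"), 0
    for epoch, value in enumerate(val_accuracy):
        if value > best_value + min_delta:
            best_epoch, best_value, waited = epoch, value, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


if __name__ == "__main__":
    # Invented validation curve for illustration (not the data of Figure 5).
    curve = [0.50, 0.60, 0.70, 0.72, 0.71, 0.715, 0.70]
    print("best epoch:", early_stopping_epoch(curve, patience=3))
```

The validation curve in the example is invented for illustration; with a patience of 3, training stops after epoch 6 and the weights from epoch 3 would be kept.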

4 CONCLUSIONS

It was possible to build a dataset of synthetic bananas with lower cost and less staff time than capturing large volumes of real banana images. In this case, generating 3,495 images of real bananas took over 30 days and required multiple people, while generating 161,280 synthetic images took almost as long but was done by a single person using Unreal Engine. In addition, a simple custom CNN model was implemented to identify banana maturity and was compared with other state-of-the-art CNN models on a dataset of real banana images. The new CNN model obtained the best results and was selected for the development of the proposed work.

Table 3: Comparison of results with CNN models using real data and without transfer learning.

| CNN Model | Accuracy | Model Weight (Mb) | Average time (ms) |
|---|---|---|---|
| VGG19 | 0.562 | 160 | 364 |
| ResNet-50 | 0.816 | 200 | 107 |
| Inception-ResNetV2 | 0.869 | 1075 | 224 |
| CIDIS (proposed CNN) | 0.872 | 21 | 132 |
| InceptionV3 | 0.849 | 187 | 79 |

Table 4: Results of the proposed CIDIS model using real/synthetic dataset and transfer learning.

| CNN Model | Optimizer | Dropout layers (rate) | Learning Rate | Batch Size | Accuracy |
|---|---|---|---|---|---|
| CIDIS | Nadam | 2 (0.2) | 0.001 | 50 | 0.881 |
| CIDIS | Nadam | 1 (0.2) | 0.001 | 50 | 0.891 |
| CIDIS | Adagrad | 2 (0.2) | 0.01 | 50 | 0.904 |
| CIDIS | Adagrad | 1 (0.2) | 0.001 | 50 | 0.917 |
| CIDIS | Adam | 2 (0.2) | 0.001 | 50 | 0.916 |
| CIDIS | Adam | 1 (0.2) | 0.001 | 50 | 0.906 |

Figure 5: Results of the accuracy and loss function of the CNN2 model using transfer learning.

In this work, the proposed CNN model (CNN1) was trained with synthetic images, and transfer learning was then used to obtain a CNN model called CNN2, which has the same simple architecture. CNN2 was trained and evaluated with the real dataset, reaching an accuracy of 0.917, higher than the 0.872 obtained by the proposed CNN model without transfer learning. Therefore, the proposed methodology yields better results.

However, the number of real images was not balanced across the banana maturity levels; as future work, we intend to balance the amount of data per level to obtain better results.

ACKNOWLEDGEMENTS

This work has been partially supported by the ESPOL-CIDIS-11-2022 project.

REFERENCES

Behera, S. K., Rath, A. K., and Sethy, P. K. (2021). Maturity status classification of papaya fruits based on machine learning and transfer learning approach. Information Processing in Agriculture, 8(2):244–250.

Bhargava, A. and Bansal, A. (2021). Fruits and vegetables quality evaluation using computer vision: A review. Journal of King Saud University-Computer and Information Sciences, 33(3):243–257.

Cao, J., Sun, T., Zhang, W., Zhong, M., Huang, B., Zhou, G., and Chai, X. (2021). An automated zizania quality grading method based on deep classification model. Computers and Electronics in Agriculture, 183:106004.

Charco, J. L., Sappa, A. D., Vintimilla, B. X., and Velesaca, H. O. (2021). Camera pose estimation in multi-view environments: From virtual scenarios to the real world. Image and Vision Computing, 110:104182.

Deiseroth, B., Schramowski, P., Shindo, H., Dhami, D. S., and Kersting, K. (2022). LogicRank: Logic induced reranking for generative text-to-image systems. arXiv preprint arXiv:2208.13518.

Dozat, T. (2016). Incorporating Nesterov momentum into Adam. In Proceedings of the 4th International Conference on Learning Representations, pages 1–4.

Faisal, M., Albogamy, F., Elgibreen, H., Algabri, M., and Alqershi, F. A. (2020). Deep learning and computer vision for estimating date fruits type, maturity level, and weight. IEEE Access, 8:206770–206782.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778.

Helwan, A., Sallam Ma'aitah, M. K., Abiyev, R. H., Uzelaltinbulat, S., and Sonyel, B. (2021). Deep learning based on residual networks for automatic sorting of bananas. Journal of Food Quality, 2021.

Ivanovs, M., Ozols, K., Dobrajs, A., and Kadikis, R. (2022). Improving semantic segmentation of urban scenes for self-driving cars with synthetic images. Sensors, 22(6):2252.

Kader, A. A. (2002). US grade standards. Postharvest technology of horticultural crops, 3311(287):287–300.

Kurama, V. (2020). A review of popular deep learning architectures: ResNet, InceptionV3, and SqueezeNet. Consult. August, 30.

Mohapatra, D., Das, N., and Mohanty, K. K. (2022). Deep neural network based fruit identification and grading system for precision agriculture. Proceedings of the Indian National Science Academy, pages 1–12.

Naik, S. (2019). Non-destructive mango (Mangifera indica L., cv. Kesar) grading using convolutional neural network and support vector machine. In Proceedings of International Conference on Sustainable Computing in Science, Technology and Management (SUSCOM), Amity University Rajasthan, Jaipur-India.

Naranjo-Torres, J., Mora, M., Hernández-García, R., Barrientos, R. J., Fredes, C., and Valenzuela, A. (2020). A review of convolutional neural network applied to fruit image processing. Applied Sciences, 10(10):3443.

Ramadhan, Y. A., Djamal, E. C., Kasyidi, F., and Bon, A. T. Identification of Cavendish banana maturity using convolutional neural networks.

Reid, M. S. (1985). Product maturation and maturity indices. Postharvest technology of horticultural crops, pages 8–11.

Saragih, R. E. and Emanuel, A. W. (2021). Banana ripeness classification based on deep learning using convolutional neural network. In 2021 3rd East Indonesia Conference on Computer and Information Technology (EIConCIT), pages 85–89. IEEE.

Sola, J. and Sevilla, J. (1997). Importance of input data normalization for the application of neural networks to complex industrial problems. IEEE Transactions on Nuclear Science, 44(3):1464–1468.

Sun, L., Liang, K., Song, Y., and Wang, Y. (2021). An improved CNN-based apple appearance quality classification method with small samples. IEEE Access, 9:68054–68065.

Szegedy, C., Ioffe, S., Vanhoucke, V., and Alemi, A. A. (2017). Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Thirty-first AAAI Conference on Artificial Intelligence.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., and Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1–9.

Tripathi, M. K. and Maktedar, D. D. (2021). Optimized deep learning model for mango grading: Hybridizing lion plus firefly algorithm. IET Image Processing.

Wang, F., Zheng, J., Tian, X., Wang, J., Niu, L., and Feng, W. (2018). An automatic sorting system for fresh white button mushrooms based on image processing. Computers and Electronics in Agriculture, 151:416–425.

Zhang, Y., Lian, J., Fan, M., and Zheng, Y. (2018). Deep indicator for fine-grained classification of banana's ripening stages. EURASIP Journal on Image and Video Processing, 2018(1):1–10.

Zhu, L. and Spachos, P. (2021). Support vector machine and YOLO for a mobile food grading system. Internet of Things, 13:100359.