Persistent Homology Based Generative Adversarial Network

Jinri Bao (1,2), Zicong Wang (1,2), Junli Wang (1,2) and Chungang Yan (1,2)

(1) Key Laboratory of Embedded System and Service Computing (Tongji University), Ministry of Education, Shanghai, China

(2) National (Province-Ministry Joint) Collaborative Innovation Center for Financial Network Security, Tongji University, Shanghai, China

Keywords:

Generative Adversarial Network, Persistent Homology, Topological Feature.

Abstract:

In recent years, image generation has become one of the most popular research areas in the field of computer

vision. Significant progress has been made in image generation based on generative adversarial network

(GAN). However, the existing generative models fail to capture enough global structural information, which

makes it difficult to coordinate the global structural features and local detail features during image generation.

This paper proposes the Persistent Homology based Generative Adversarial Network (PHGAN). A topological

feature transformation algorithm is designed based on the persistent homology method and then the topological

features are integrated into the discriminator of GAN through the fully connected layer module and the self-

attention module, so that the PHGAN has an excellent ability to capture global structural information and

improves the generation performance of the model. We conduct an experimental evaluation of the PHGAN

on the CIFAR10 dataset and the STL10 dataset, and compare it with several classic generative adversarial

network models. The better results achieved by our proposed PHGAN show that the model has better image

generation ability.

1 INTRODUCTION

Image generation has long been a key problem in the field of computer research, and how to make computers automatically generate realistic images has long challenged researchers. In 2014, the Generative Adversarial Network (GAN) (Goodfellow et al., 2014) brought significant progress in computer-generated images. A GAN consists of a generator and a discriminator. The generator is trained to generate images that are as similar as possible to real images, and the discriminator is trained to determine whether the underlying distribution of the generated images is consistent with that of the real images. Through the contest between the generator and the discriminator, the generator learns to produce increasingly realistic images.

After GAN was proposed, Radford et al. (Radford et al., 2015) further studied the underlying architecture of GAN and used the convolutional neural network as the architecture of both the generator and the discriminator. This greatly improved generation performance and led to the common use of convolutional neural networks as backbones in subsequent GAN-based models (Zhu et al., 2017; Wang et al., 2022).

Although GANs based on the convolutional neural network structure have achieved success in image generation, some problems remain: the model performs well in generating local details but poorly in generating overall structure. Zhang et al. (Zhang et al., 2019) found that this is because such GANs rely on the convolution operation for feature extraction, and the convolution kernel has a limited size. Its receptive field is therefore limited and some long-distance dependencies cannot be captured, so the model does not perform well on overall structure.

In recent years, persistent homology (PH) (Zomorodian and Carlsson, 2004) has attracted attention as a tool for data feature extraction. Compared with existing feature extraction methods, persistent homology connects algebra and topology and provides measurable global information. This quantitative description of topological features has opened up new research directions in computer science. For example, Kindelan et al. (Kindelan et al., 2021) and Khramtsova et al. (Khramtsova et al., 2022) studied classification based on persistent homology. Byrne et al. (Byrne et al., 2022) and Li et al. (Li et al., 2022) studied image segmentation based on persistent homology. Carrière et al. (Carrière et al., 2020) and Kim et al. (Kim et al., 2020) proposed topological feature layers, based on persistent homology, that can be embedded in neural networks. Moor et al. (Moor et al., 2020) studied the optimization of machine learning models using the topological features of the data as a new loss term.

Bao, J., Wang, Z., Wang, J. and Yan, C. Persistent Homology Based Generative Adversarial Network. DOI: 10.5220/0011648200003417. In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 4: VISAPP, pages 196-203. ISBN: 978-989-758-634-7; ISSN: 2184-4321. Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

This paper proposes the Persistent Homology based Generative Adversarial Network (PHGAN). Building on the original convolutional neural network architecture of GAN, the topological features obtained by persistent homology are integrated into GAN. The topological features of the data compensate for the original model's limited ability to capture long-distance dependencies, so that PHGAN can coordinate global structural features and local detail features when generating images.

2 RELATED WORK

2.1 Persistent Homology

The topological features obtained by persistent homology are generally represented by a persistent diagram or a persistent barcode. However, these data formats are not suitable for subsequent machine learning tasks, so several studies have addressed topological feature transformation. Adams et al. (Adams et al., 2017) and Cang et al. (Cang et al., 2018) studied how to transform topological features into two-dimensional matrices or three-dimensional tensors. After transformation, such data can be treated as images in machine learning tasks. Mileyko et al. (Mileyko et al., 2011) proposed using the Wasserstein distance to measure the proximity between the topological features of two data items. Merelli et al. (Merelli et al., 2015) proposed using entropy to characterize the distribution of topological features, assigning an entropy value to the distribution of topological features of the data. Hofer et al. (Hofer et al., 2017) proposed using a neural network for topological feature transformation, so that the network learns the transformation parameters that are most suitable for the machine learning task.

In this paper, we propose to transform the topological features obtained by persistent homology into a one-dimensional vector, which can then be input into a neural network for processing.

2.2 Persistent Homology Based

Generative Model

Recently, some researchers have explored the application of persistent homology in the field of image generation. For example, Khrulkov et al. (Khrulkov and Oseledets, 2018) used the approximate topological features of the generated images and the real images as an indicator to measure the generation performance of GANs. Similarly, Horak et al. (Horak et al., 2021) proposed a different evaluation index for GAN generation performance based on the persistent homology method. However, these works only used the topological features of the generated and real images to measure the generation performance of GANs, and did not use the topological features of the real images to guide the generator.

Brüel-Gabrielsson et al. (Gabrielsson et al., 2020) proposed using the topological features obtained by persistent homology to guide GAN to generate images, but the authors only performed explicit topological feature optimization on the noise input to the generator, and the generator did not learn the topological feature distribution of real images.

In addition, there are also some studies on the application of persistent homology in other generative models. For example, Schiff et al. (Schiff et al., 2022) proposed a variational autoencoder model based on persistent homology, using the topological features as a new reconstruction loss term to optimize the generation performance of the variational autoencoder model.

Our proposed PHGAN uses the topological features of real images to guide the generator of GAN, so that the generator can learn the topological feature distribution of real images.

3 METHOD

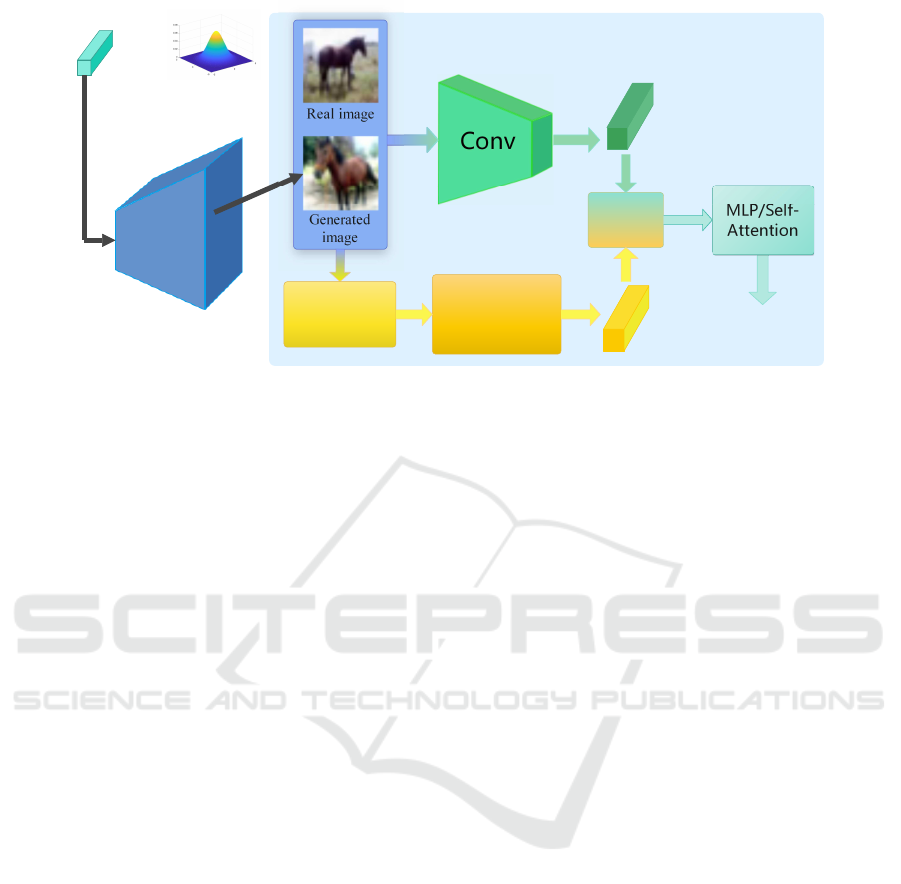

The overall model architecture of our proposed PHGAN is shown in Figure 1. We sample random noise from a Gaussian distribution and feed this noise into the generator to generate an image. The generated image and the real image are input into the discriminator, which distinguishes real from fake. In the discriminator, the input image is processed not only by the convolution module, to obtain convolutional neural network features, but also by the persistent homology module and the topological feature transformation module, to obtain topological features. These two kinds of features are concatenated and used to discriminate between real and fake images.


Figure 1: PHGAN Architecture. (Diagram labels: Noise z, Generator, Discriminator, Persistence Homology, Topological Feature Transformation, Topology feature, CNN feature, Concat, Real/Fake.)

In this way, we incorporate the topological features, which reflect the global structure of the image, into GAN. On the one hand, the topological features enable the model to discriminate between real and fake images based on global structure. On the other hand, the adversarial training of the generator and the discriminator improves the generator's ability to generate images. The specific implementation details are described below.

3.1 Persistent Homology: From Image

to Topological Features

Persistent homology is a method for extracting the topological features of data. The basic process is to construct a complex and a complex filtering based on the original data, and then extract the topological features of the data. In this paper, the data sets consist only of two-dimensional images, and for image data the cubical complex (Ziou and Allili, 2002) is the most suitable choice for complex construction. We represent a two-dimensional image as a two-dimensional array $X$ of size $N_i \times N_j$, where the value of each entry $X_{ij}$ is the corresponding pixel value. We then construct a subset of the array $X$, namely the set of pixels in $X$ whose values are at most the threshold $t$, as shown in Eq. (1). We use $S$ to denote this subset of the array $X$.

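$$S(t) = \bigcup_{i,j} \{\, X_{ij} : X_{ij} \le t \,\} \tag{1}$$

where $\bigcup$ denotes the set of pixel points collected over all indices $(i, j)$.

When the threshold $t$ changes from small to large, we get a series of nested sets:

$$\emptyset \subseteq S(0) \subseteq S(t_1) \subseteq S(t_2) \subseteq \ldots \subseteq S(1) \subseteq X \tag{2}$$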

Each such set of pixel points can be used to construct a cubical complex, and the sequence of cubical complexes formed by this series of sets is called a complex filtering (a filtration).
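The nested sets of Eq. (2) can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of rows with values in [0, 1]; the function names `sublevel_set` and `filtration` and the toy image are illustrative and not taken from the paper, and the sketch only builds the pixel sets, not the cubical complexes themselves.

```python
def sublevel_set(image, t):
    """Return S(t): the set of (i, j) positions whose pixel value is <= t."""
    return {
        (i, j)
        for i, row in enumerate(image)
        for j, value in enumerate(row)
        if value <= t
    }


def filtration(image, thresholds):
    """Return the sequence S(t_1), S(t_2), ... for increasing thresholds."""
    return [sublevel_set(image, t) for t in sorted(thresholds)]


# Toy 3x3 grayscale image with values in [0, 1] (illustrative data only).
image = [
    [0.1, 0.9, 0.2],
    [0.8, 0.5, 0.7],
    [0.3, 0.6, 0.0],
]

thresholds = [0.0, 0.25, 0.5, 1.0]
sets = filtration(image, thresholds)

# Each set contains the previous one, which is the nesting stated in Eq. (2).
nested = all(a <= b for a, b in zip(sets, sets[1:]))
```

Because a pixel that satisfies `value <= t` also satisfies it for any larger threshold, the nesting holds by construction.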

When the value of $t$ is relatively small, according to Eq. (1), the set $S$ consists of only a few pixels. As the threshold $t$ increases, new pixels are added to the set $S$ to form a new cubical complex, and topological features appear and disappear in the transition from the old cubical complex to the new one. The persistent homology method calculates the number of topological features of the cubical complexes formed under different thresholds. We use $\beta_k$ to denote the number of $k$-dimensional topological features: $\beta_0$ is the number of 0-dimensional topological features (connected components), and $\beta_1$ is the number of 1-dimensional topological features (rings/holes). Because we study two-dimensional image data, only 0-dimensional and 1-dimensional topological features are involved here.

The final result of the persistent homology method records the appearance and disappearance of each topological feature (it appears at the threshold $t_{birth}$ and disappears at the threshold $t_{death}$). We generally use a persistent diagram or a persistent barcode to represent the result of persistent homology analysis, as shown in Figure 2.

For topological features, the longer the persistent time (the disappearance time minus the appearance time), the more important and meaningful the feature is. Topological features with a short persistent time are usually treated as noise.
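To make birth, death and persistent time concrete, the following is a minimal sketch of 0-dimensional persistence (connected components only) on a tiny image, using a union-find structure and the usual elder rule. It is not the paper's implementation: 8-connectivity between pixels, the dropping of zero-persistence pairs, and the cutoff of 0.5 are assumptions made here for illustration, and 1-dimensional features (holes) would need a full cubical complex library.

```python
import math


def zero_dim_persistence(image):
    """Return (birth, death) pairs of connected components of the sublevel sets."""
    h, w = len(image), len(image[0])
    # Visit pixels from darkest to brightest, i.e. in order of increasing t.
    order = sorted((image[i][j], i, j) for i in range(h) for j in range(w))
    parent = {}  # union-find forest over pixels already in S(t)
    birth = {}   # birth threshold stored at each component root

    def find(p):
        while parent[p] != p:
            parent[p] = parent[parent[p]]  # path halving
            p = parent[p]
        return p

    pairs = []
    for value, i, j in order:
        p = (i, j)
        parent[p] = p
        birth[p] = value
        for di in (-1, 0, 1):
            for dj in (-1, 0, 1):
                q = (i + di, j + dj)
                if q == p or q not in parent:
                    continue  # skip self, out-of-range and not-yet-added pixels
                rp, rq = find(p), find(q)
                if rp == rq:
                    continue
                # Elder rule: the younger component (larger birth) dies now.
                young, old = (rp, rq) if birth[rp] > birth[rq] else (rq, rp)
                if birth[young] < value:  # drop zero-persistence pairs
                    pairs.append((birth[young], value))
                parent[young] = old
    # The component born at the global minimum never dies.
    pairs.append((order[0][0], math.inf))
    return sorted(pairs)


# Two dark regions (left and right columns) separated by a bright ridge.
image = [
    [0.0, 0.9, 0.2],
    [0.1, 0.9, 0.3],
    [0.1, 0.9, 0.2],
]
pairs = zero_dim_persistence(image)

# Keep only long-lived features; the 0.5 cutoff is an arbitrary illustration.
significant = [(b, d) for b, d in pairs if d - b > 0.5]
```

In this toy image the right column first appears as two separate components that merge quickly, giving one short-lived pair, while the right region as a whole persists until the bright ridge joins it to the left region.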

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications


Figure 2: Persistent diagram and persistent barcode. The leftmost panel is a digit 0 from the MNIST dataset. After persistent homology analysis, 0-dimensional topological features (connected components, red) and 1-dimensional topological features (rings/holes, blue) are obtained.

3.2 Topological Feature Transformation

After the image is processed by the persistent homology module, the obtained topological features are expressed as a persistent diagram or a persistent barcode. However, these data formats are not suitable as input to the subsequent discriminator. Therefore, we need to transform the topological features.

We use the persistent time of each topological feature as a measure of this topological feature:

$$\tau_k^i = d_k^i - b_k^i \tag{3}$$

where $b_k^i$, $d_k^i$ and $\tau_k^i$ denote the appearance time, the disappearance time and the persistent time of the $i$-th $k$-dimensional topological feature, respectively.

For our image data, the topological features obtained by persistent homology analysis have two dimensions: the 0-dimensional connected components and the 1-dimensional holes. We combine the persistent times of the 0-dimensional and 1-dimensional topological features contained in an image to form a vector. In this way, the topological features of the image are transformed into a vector data format.

The specific process of topological feature transformation is shown in Algorithm 1 (see footnote 1).

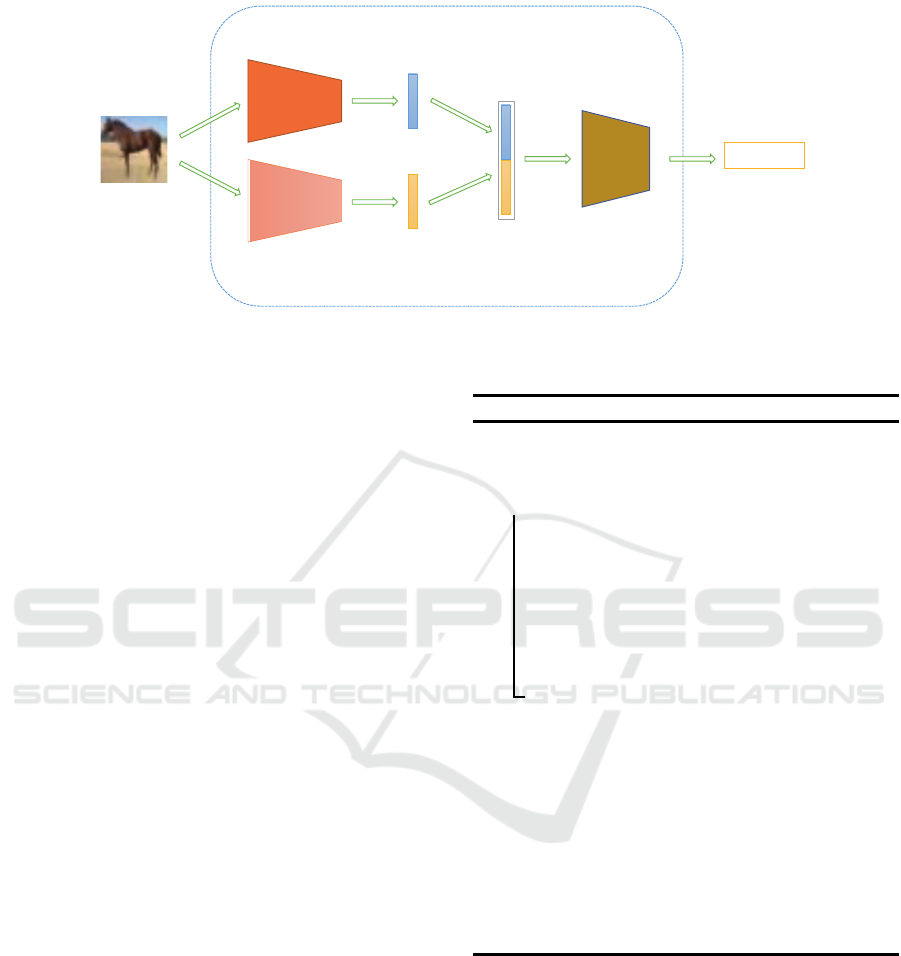

3.3 Discriminator Network

The overall network structure of the discriminator is shown in Figure 3.

After the image is processed by the persistent homology module and the topological feature transformation, the vector representation $\nu_{topo}$ of the topological features is obtained. In addition, we transform the features extracted by the original convolutional neural network into a vector $\nu_{conv}$, and then concatenate the two vectors $\nu_{topo}$ and $\nu_{conv}$ to form a vector $\nu$.

We process the vector $\nu$ using two different network structures: one uses a fully connected layer network and the other uses a self-attention network.

Footnote 1: We use the Python module Gudhi to produce the persistent diagrams.

Algorithm 1: The algorithm of topological feature transformation.

Input: image $X$ of size $H \times W$ with $C$ channels.

Output: vector $\nu_{topo}$ representing the topological features of the image.

1. Apply persistent homology to $X$, obtaining a 0-dimensional persistent diagram and a 1-dimensional persistent diagram.
2. Obtain the persistent time $\tau_k^i$ using Eq. (3) for each topological feature in dimension 0 and dimension 1.
3. Obtain $\nu_{topo} = (\tau_0^1, \tau_0^2, \tau_0^3, \ldots, \tau_0^n, \tau_1^1, \tau_1^2, \tau_1^3, \ldots, \tau_1^m)$.
4. Return $\nu_{topo}$.
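Steps 2 to 4 of Algorithm 1 can be sketched in a few lines, assuming the two persistent diagrams are already available as lists of (birth, death) pairs. Two choices below are assumptions that the paper does not specify: pairs with an infinite death time are skipped, and an optional `length` argument truncates or zero-pads the vector so that every image yields the same size. The function names are hypothetical.

```python
import math


def persistent_times(diagram):
    """Eq. (3): persistent time d - b for each finite (birth, death) pair."""
    # Assumption: features that never die (death = inf) are skipped.
    return [death - birth for birth, death in diagram if math.isfinite(death)]


def topo_vector(diagram_0, diagram_1, length=None):
    """Concatenate 0- and 1-dimensional persistent times into one vector."""
    vec = persistent_times(diagram_0) + persistent_times(diagram_1)
    if length is not None:
        # Assumption: fixed length via truncation or zero padding
        # (the paper does not say how variable lengths are handled).
        vec = vec[:length] + [0.0] * max(0, length - len(vec))
    return vec


# Illustrative diagrams, not data from the paper.
d0 = [(0.0, math.inf), (0.25, 0.75)]  # connected components
d1 = [(0.5, 1.0)]                     # holes

nu_topo = topo_vector(d0, d1)
nu_topo_fixed = topo_vector(d0, d1, length=4)
```

A fixed length matters in practice because the number of topological features differs from image to image, while a fully connected layer expects inputs of constant size.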

3.3.1 Fully Connected Layer

We use a fully connected layer network to process the concatenated input vector $\nu$. The fully connected layer combines topological features with convolutional neural network features to discriminate between real and fake images. During training, the fully connected layer learns the most appropriate weighting between these two kinds of features and balances the influence of topological features and convolutional neural network features on the discrimination result.

3.3.2 Self-Attention Network

Unlike the fully connected layer network, the self-attention (Vaswani et al., 2017) network learns the correlation between the convolutional neural network features and the topological features during training, and then discriminates the authenticity of the image. We input the vector $\nu$ into the self-attention network and obtain the vector $\nu_{sa}$, in which the convolutional neural network features have interacted with the topological features. We then use a residual connection to add the vector $\nu_{sa}$ to the original vector $\nu$, as shown in Eq. (4), to obtain the vector $\nu'$. Finally, the vector $\nu'$ is input to the fully connected layer to judge the authenticity of the image.

$$\nu' = \gamma \cdot \nu_{sa} + \nu \tag{4}$$

where $\gamma$ denotes a learnable parameter.
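The residual form of Eq. (4) can be sketched with a toy scaled dot-product self-attention in plain Python. This is only an illustration under strong assumptions: the paper does not state how $\nu$ is arranged into tokens, so `tokens` is a hypothetical list of equal-length chunks, and identity query/key/value projections stand in for the learned ones. In SAGAN (Zhang et al., 2019), $\gamma$ is initialised to 0; whether PHGAN does the same is not stated.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # Each output token is a weighted average of all input tokens.
        out.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(d)])
    return out


def residual_attention(tokens, gamma):
    """Eq. (4): nu' = gamma * nu_sa + nu, applied element-wise."""
    attended = self_attention(tokens)
    return [
        [gamma * a + x for a, x in zip(att_row, row)]
        for att_row, row in zip(attended, tokens)
    ]


# Hypothetical split of nu into two 2-dimensional tokens.
tokens = [[1.0, 0.0], [0.0, 1.0]]
unchanged = residual_attention(tokens, gamma=0.0)  # gamma = 0 leaves nu as is
mixed = residual_attention(tokens, gamma=1.0)
```

With `gamma=0.0` the block is an identity map, so the attention branch can be phased in gradually as the learnable scale moves away from zero.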

3.4 Loss Function

Figure 3: Schematic diagram of the structure of the PHGAN discriminator based on the fully connected layer and the self-attention network. (Diagram labels: Input image, Convolutional Network, Persistence Homology, Convolutional vector $\nu_{conv}$, Topological vector $\nu_{topo}$, Concatenated vector $\nu$, MLP/Self-Attention, Image prediction.)

After we incorporate topological features into GAN, in addition to the original convolutional neural network-based loss, a new topological feature loss term is added to the discriminator. In the PHGAN, the generator and the discriminator are trained alternately. When training the discriminator, the topological feature loss term guides the discriminator to distinguish real images from generated images in terms of global structure. Then, when training the generator, the discriminator can guide the generator to generate an image that is more similar in global structure to the real image. The total loss functions of the discriminator and the generator are given in Eqs. (5) and (6):

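$$\arg\max_{D} \Big[ \mathbb{E}_{x \sim P_{data}} \log\big(D_{conv}(x) \oplus D_{topo}(x)\big) + \mathbb{E}_{z \sim P_{z}} \log\big(1 - (D_{conv}(G(z)) \oplus D_{topo}(G(z)))\big) \Big] \tag{5}$$

$$\arg\max_{G} \Big[ \mathbb{E}_{z \sim P_{z}} \log\big(D_{conv}(G(z)) \oplus D_{topo}(G(z))\big) \Big] \tag{6}$$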

where $D_{topo}$ represents discrimination based on topological features, $D_{conv}$ represents discrimination based on convolutional neural network features, and $\oplus$ represents the combination of convolutional neural network features and topological features through the fully connected layer and self-attention network structure for discrimination.
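As a numerical illustration of Eqs. (5) and (6), the sketch below evaluates both objectives on a batch of discriminator outputs. It assumes that the combined discriminator $D_{conv} \oplus D_{topo}$ has already produced a probability in (0, 1) for each image; the function names and the sample values are hypothetical.

```python
import math


def discriminator_objective(d_real, d_fake):
    """Eq. (5): mean log D(x) + mean log(1 - D(G(z))), maximised over D."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_objective(d_fake):
    """Eq. (6): mean log D(G(z)), maximised over G."""
    return sum(math.log(p) for p in d_fake) / len(d_fake)


# An undecided discriminator versus one that separates real from fake.
undecided = discriminator_objective(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
confident = discriminator_objective(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])

# The generator objective grows as the discriminator is fooled more often.
weak_generator = generator_objective([0.1, 0.2])
strong_generator = generator_objective([0.8, 0.9])
```

In a gradient-based framework these quantities would typically be negated and minimised, which is equivalent to the maximisation written in the equations.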

See Algorithm 2 for the training process of the PHGAN.

4 EXPERIMENT

4.1 Experimental Environment and

Preparation

Algorithm 2: The algorithm of training PHGAN.

Input: image $X$ of size $H \times W$ with $C$ channels.

Input: epoch: number of training iterations.

1. for epoch do
2. Obtain noise $z$ by random sampling.
3. Generate fake image $Y$ using noise $z$.
4. For fake image $Y$, run steps 7-10, obtaining the discriminant result.
5. For real image $X$, run steps 7-10, obtaining the discriminant result.
6. Update generator and discriminator parameters using Eqs. (5) and (6).
7. For the input image, run Algorithm 1, obtaining the topological feature vector representation $\nu_{topo}$.
8. For the input image, obtain the convolutional feature vector representation $\nu_{conv}$ from the convolutional neural network in the discriminator.
9. Obtain the image feature vector representation $\nu$ by concatenating $\nu_{topo}$ and $\nu_{conv}$.
10. Input $\nu$ into MLP/Self-Attention to get the discriminant result.

We use the CIFAR10 dataset (Krizhevsky, 2012) and the STL10 dataset (Coates et al., 2011) for experimental evaluation and comparative analysis with DCGAN (Radford et al., 2015), WGAN-GP (Gulrajani et al., 2017), and WGAN (Arjovsky et al., 2017). The CIFAR10 dataset consists of 10 categories of 32x32 color images. Each category contains 6000 images, of which 5000 are used for training and 1000 for testing. The STL10 dataset consists of 10 categories of 96x96 color images; each category has 1300 images, 500 for training and 800 for testing. In our experiments, each original image is first center-cropped to 32x32, and the training set is then used to train the generative model. In addition to using the FID (Fréchet Inception Distance) (Heusel et al., 2017) and IS (Inception Score) (Salimans et al., 2016) to evaluate generative model performance, we also use the GS (Geometry Score) (Khrulkov and Oseledets, 2018) evaluation index, which evaluates the generation performance of a generative adversarial network based on the similarity of topological features.

Table 1: Experimental results on the CIFAR10 dataset.

| Metric | DCGAN | WGAN-GP | WGAN | $\text{PHGAN}_{mlp}$ | $\text{PHGAN}_{sa}$ |
|---|---|---|---|---|---|
| IS ↑ | 5.01 | 5.05 | 4.43 | 5.24 | 5.37 |
| FID ↓ | 66.61 | 65.00 | 70.02 | 64.57 | 62.50 |
| GS ($10^{-4}$) ↓ | 10.20 | 14.50 | 20.04 | 9.48 | 6.97 |

Figure 4: Experimentally generated images based on the CIFAR10 dataset. (Panels: DCGAN, WGAN-GP, WGAN, $\text{PHGAN}_{mlp}$, $\text{PHGAN}_{sa}$.)

Experiments are conducted on a Linux server running Ubuntu 18.04 with a single Nvidia Tesla P40 24 GB graphics card. The Adam optimizer (Kingma and Ba, 2014) with $\beta_1 = 0.5$ and $\beta_2 = 0.999$ is used, the batch size is set to 64, and the learning rate during training is 0.0002.
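To show what these optimizer settings control, here is a sketch of a single Adam update for one scalar parameter, following the standard update rule of Kingma and Ba with the hyperparameters reported above as defaults. The `eps` value and the function name `adam_step` are assumptions for illustration; the paper does not describe its optimizer code.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.0002, beta1=0.5, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1.0 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)               # bias correction, step t >= 1
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# First update from zero-initialised moments with an illustrative gradient.
param, m, v = 1.0, 0.0, 0.0
param, m, v = adam_step(param, grad=3.0, m=m, v=v, t=1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly the learning rate regardless of the gradient's magnitude. A lower $\beta_1$ such as 0.5 makes the first-moment estimate follow recent gradients more closely than the common default of 0.9.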

4.2 Experimental Results and Analysis

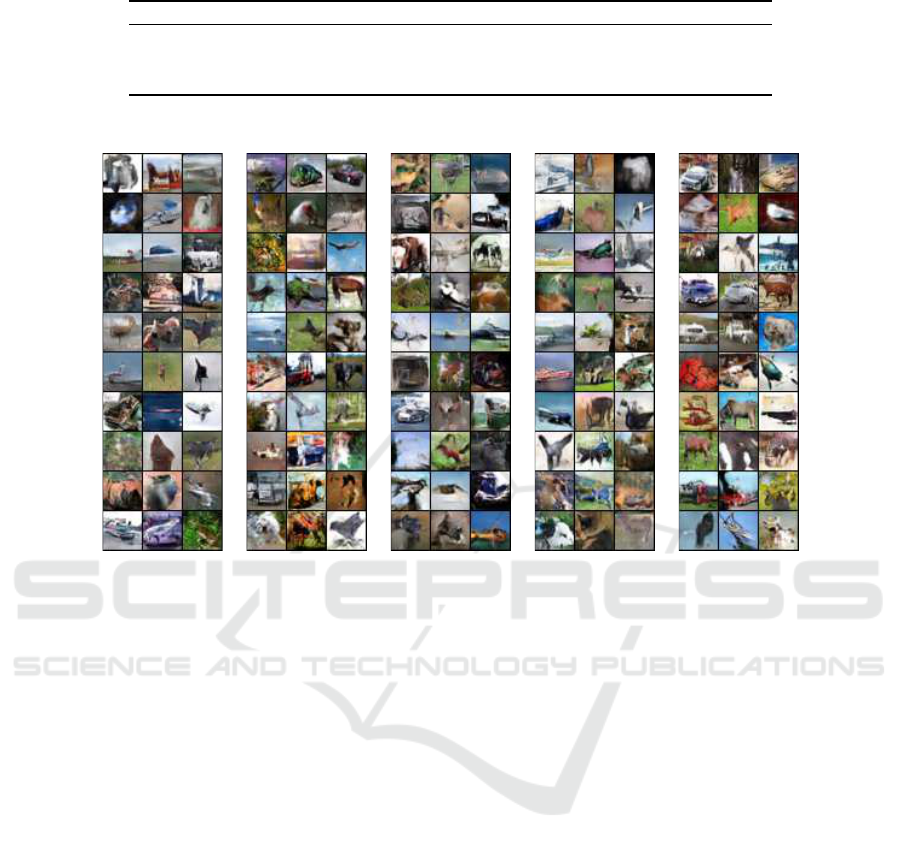

Table 1 shows the results of our proposed PHGAN on the three image generation metrics FID, IS, and GS on the CIFAR10 dataset, and compares it with three classic generative adversarial network models: DCGAN, WGAN, and WGAN-GP.

The table shows that our proposed PHGAN outperforms the three comparison GANs on the FID and IS evaluation indicators. The PHGAN using the self-attention network ($\text{PHGAN}_{sa}$) achieves better FID and IS than the PHGAN using the fully connected layer ($\text{PHGAN}_{mlp}$), so its performance is the best among the five GANs. For example, $\text{PHGAN}_{sa}$ reaches an FID of 62.50 and an IS of 5.37, compared with 65.00 and 5.05 for the strongest baseline, WGAN-GP. These results suggest that integrating topological features into the generative adversarial network model can enhance image generation performance.

In addition, we also use the GS evaluation index to evaluate the experimental results. The GS evaluation index is based on the similarity of the topological features of the generated images and the real images. The results show that when we use the topological features of the real images to guide the generative adversarial network model, the generated images better match the topological feature distribution of the real images. Figure 4 shows the images generated by the five generative adversarial network models on the CIFAR10 dataset. We observe that the images generated by the PHGAN have a clearer overall structure, making it easier to recognize the category of each image.

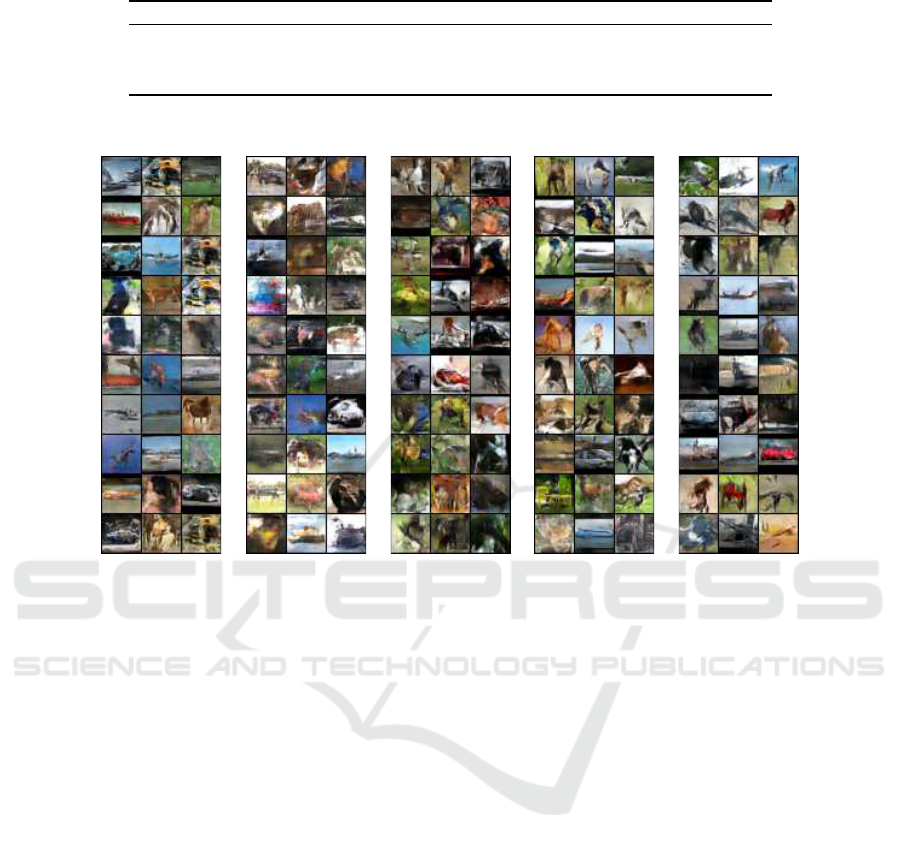

Table 2 shows the experimental results on the STL10 dataset. Similarly, PHGAN performs best on the FID and IS evaluation indicators. Unlike the results on the CIFAR10 dataset, $\text{PHGAN}_{mlp}$ is slightly better than $\text{PHGAN}_{sa}$ on the FID evaluation index (71.07 versus 72.84). This might be because the STL10 dataset is relatively small: using the self-attention network to process the images may cause slight overfitting, which leaves the FID indicator worse than that of the PHGAN that directly uses the fully connected layer.

Table 2: Experimental results on the STL10 dataset.

| Metric | DCGAN | WGAN-GP | WGAN | $\text{PHGAN}_{mlp}$ | $\text{PHGAN}_{sa}$ |
|---|---|---|---|---|---|
| IS ↑ | 2.97 | 2.67 | 2.81 | 3.04 | 3.12 |
| FID ↓ | 74.14 | 79.41 | 73.96 | 71.07 | 72.84 |
| GS ($10^{-4}$) ↓ | 17.19 | 39.95 | 22.32 | 14.47 | 11.68 |

Figure 5: Experimentally generated images based on the STL10 dataset. (Panels: DCGAN, WGAN-GP, WGAN, $\text{PHGAN}_{mlp}$, $\text{PHGAN}_{sa}$.)

Similarly, on the GS indicator, PHGAN learns the topological feature distribution of the real images relatively well on this dataset. Figure 5 shows the images generated by the five generative adversarial network models on the STL10 dataset. The images generated by PHGAN have sharper boundaries and a clearer overall structure.

5 CONCLUSION

This paper proposes the PHGAN, which integrates the topological features obtained by persistent homology into the generative adversarial network model. Experiments verify that the PHGAN has a good ability to capture global information. Compared with several classic generative adversarial network models, PHGAN achieves better results on the evaluation metrics for image generation, and the generated images are more realistic. This paper explores the application of the persistent homology method in image generation. Its application in other fields, such as image editing and image style transfer, is a direction for future study.

ACKNOWLEDGEMENTS

This work was supported in part by the Strategic Research and Consulting Project of the Chinese Academy of Engineering under Grant 2022-XY-107 and in part by the Shanghai Science and Technology Innovation Action Plan Project under Grant 22511100700.

REFERENCES

Adams, H., Emerson, T., Kirby, M., Neville, R., Peter-

son, C., Shipman, P., Chepushtanova, S., Hanson, E.,

Motta, F., and Ziegelmeier, L. (2017). Persistence im-

ages: A stable vector representation of persistent ho-

mology. Journal of Machine Learning Research, 18.


Arjovsky, M., Chintala, S., and Bottou, L. (2017). Wasser-

stein generative adversarial networks. In Interna-

tional conference on machine learning, pages 214–

223. PMLR.

Byrne, N., Clough, J. R., Valverde, I., Montana, G., and

King, A. P. (2022). A persistent homology-based

topological loss for cnn-based multi-class segmenta-

tion of cmr. IEEE Transactions on Medical Imaging.

Cang, Z., Mu, L., and Wei, G.-W. (2018). Representability of algebraic topology for biomolecules in machine learning based scoring and virtual screening. PLoS computational biology, 14(1):e1005929.

Carrière, M., Chazal, F., Ike, Y., Lacombe, T., Royer, M., and Umeda, Y. (2020). Perslay: A neural network layer for persistence diagrams and new graph topological signatures. In International Conference on Artificial Intelligence and Statistics, pages 2786–2796. PMLR.

Coates, A., Ng, A., and Lee, H. (2011). An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, pages 215–223. JMLR Workshop and Conference Proceedings.

Gabrielsson, R. B., Nelson, B. J., Dwaraknath, A., and

Skraba, P. (2020). A topology layer for machine learn-

ing. In International Conference on Artificial Intelli-

gence and Statistics, pages 1553–1563. PMLR.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B.,

Warde-Farley, D., Ozair, S., Courville, A., and Ben-

gio, Y. (2014). Generative adversarial networks. Ad-

vances in neural information processing systems, 27.

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., and

Courville, A. C. (2017). Improved training of wasser-

stein gans. Advances in neural information processing

systems, 30.

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B., and

Hochreiter, S. (2017). Gans trained by a two time-

scale update rule converge to a local nash equilibrium.

Advances in neural information processing systems,

30.

Hofer, C., Kwitt, R., Niethammer, M., and Uhl, A. (2017).

Deep learning with topological signatures. Advances

in neural information processing systems, 30.

Horak, D., Yu, S., and Salimi-Khorshidi, G. (2021). Topol-

ogy distance: A topology-based approach for evaluat-

ing generative adversarial networks. In Proceedings

of the AAAI Conference on Artificial Intelligence, vol-

ume 35, pages 7721–7728.

Khramtsova, E., Zuccon, G., Wang, X., and Baktashmot-

lagh, M. (2022). Rethinking persistent homology for

visual recognition. arXiv preprint arXiv:2207.04220.

Khrulkov, V. and Oseledets, I. (2018). Geometry score: A method for comparing generative adversarial networks. In International conference on machine learning, pages 2621–2629. PMLR.

Kim, K., Kim, J., Zaheer, M., Kim, J., Chazal, F., and Wasserman, L. (2020). Pllay: Efficient topological layer based on persistent landscapes. Advances in Neural Information Processing Systems, 33:15965–15977.

Kindelan, R., Frías, J., Cerda, M., and Hitschfeld, N. (2021). Classification based on topological data analysis. arXiv preprint arXiv:2102.03709.

Kingma, D. P. and Ba, J. (2014). Adam: A

method for stochastic optimization. arXiv preprint

arXiv:1412.6980.

Krizhevsky, A. (2012). Learning multiple layers of features from tiny images. university of toronto (2012). URL: http://www.cs.toronto.edu/kriz/cifar.html, last accessed, 5:13.

Li, Y., Xuan, Y., and Zhao, Q. (2022). Manifold projection

and persistent homology. Measurement, page 111414.

Merelli, E., Rucco, M., Sloot, P., and Tesei, L. (2015).

Topological characterization of complex systems: Us-

ing persistent entropy. Entropy, 17(10):6872–6892.

Mileyko, Y., Mukherjee, S., and Harer, J. (2011). Proba-

bility measures on the space of persistence diagrams.

Inverse Problems, 27(12):124007.

Moor, M., Horn, M., Rieck, B., and Borgwardt, K. (2020). Topological autoencoders. In International conference on machine learning, pages 7045–7054. PMLR.

Radford, A., Metz, L., and Chintala, S. (2015). Unsu-

pervised representation learning with deep convolu-

tional generative adversarial networks. arXiv preprint

arXiv:1511.06434.

Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V.,

Radford, A., and Chen, X. (2016). Improved tech-

niques for training gans. Advances in neural informa-

tion processing systems, 29.

Schiff, Y., Chenthamarakshan, V., Hoffman, S. C., Ra-

mamurthy, K. N., and Das, P. (2022). Augmenting

molecular deep generative models with topological

data analysis representations. In ICASSP 2022-2022

IEEE International Conference on Acoustics, Speech

and Signal Processing (ICASSP), pages 3783–3787.

IEEE.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones,

L., Gomez, A. N., Kaiser, Ł., and Polosukhin, I.

(2017). Attention is all you need. Advances in neural

information processing systems, 30.

Wang, Z., Ren, Q., Wang, J., Yan, C., and Jiang, C. (2022). Mush: Multi-scale hierarchical feature extraction for semantic image synthesis. In Proceedings of the Asian Conference on Computer Vision, pages 4126–4142.

Zhang, H., Goodfellow, I., Metaxas, D., and Odena, A. (2019). Self-attention generative adversarial networks. In International conference on machine learning, pages 7354–7363. PMLR.

Zhu, J.-Y., Park, T., Isola, P., and Efros, A. A. (2017). Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision, pages 2223–2232.

Ziou, D. and Allili, M. (2002). Generating cubical complexes from image data and computation of the euler number. Pattern Recognition, 35(12):2833–2839.

Zomorodian, A. and Carlsson, G. (2004). Computing persistent homology. In Proceedings of the twentieth annual symposium on Computational geometry, pages 347–356.
