Combining Two Adversarial Attacks Against Person Re-Identification Systems

Eduardo de O. Andrade (1, a), Igor Garcia Ballhausen Sampaio (1, b), Joris Guérin (2, c) and José Viterbo (1, d)

(1) Computing Institute, Fluminense Federal University, Niterói, Brazil

(2) LAAS-CNRS, Toulouse University, Midi-Pyrénées, France

Keywords:

Person Re-Identification, Adversarial Attacks, Deep Learning.

Abstract:

The field of Person Re-Identification (Re-ID) has received much attention recently, driven by the progress of

deep neural networks, especially for image classification. The problem of Re-ID consists in identifying indi-

viduals through images captured by surveillance cameras in different scenarios. Governments and companies

are investing a lot of time and money in Re-ID systems for use in public safety and identifying missing per-

sons. However, several challenges remain for successfully implementing Re-ID, such as occlusions and light

reflections in people’s images. In this work, we focus on adversarial attacks on Re-ID systems, which can be a critical threat to the performance of these systems. In particular, we explore the combination of adversarial attacks against Re-ID models, aiming to degrade the classification results further than a single attack does. We conduct our experiments on three datasets: DukeMTMC-ReID, Market-1501, and CUHK03. We combine two types of adversarial attacks, P-FGSM and Deep Mis-Ranking, applied to two popular Re-ID models: IDE (ResNet-50) and AlignedReID. In the best case, the combined attack lowers the Rank-10 metric to 3.36% for AlignedReID on CUHK03. We also evaluate Dropout at inference time as a defense method.

1 INTRODUCTION

The number of surveillance cameras is rising fast, and the associated market could reach 19.5 billion euros in 2023 (Khan et al., 2020). This market is related to

the concept of smart cities, which aim to address sus-

tainability themes, seeking to improve the manage-

ment of risks in urban environments. As a result, the

number of systems developed to re-identify people

has increased rapidly in recent years, driven by the

progress of deep neural networks (Luo et al., 2019;

Kurnianggoro and Jo, 2017). These systems are in

high demand by companies and governments to ad-

dress problems such as public safety, tracking peo-

ple in universities and streets, behavior analysis, and

even surveillance (Islam, 2020). For example, this approach could help counter a terrorist offensive (Shah et al., 2016), such as the 9/11 attack (footnote 1). However, all this technological insertion ends up creating a scenario prone to software errors, hacks, malware, and other criminal activities (Kitchin and Dodge, 2019).

(a) https://orcid.org/0000-0002-5978-9718

(b) https://orcid.org/0000-0002-1890-1451

(c) https://orcid.org/0000-0002-8048-8960

(d) https://orcid.org/0000-0002-0339-6624

Footnote 1: https://www.mprnews.org/story/2021/09/10/npr-911-travel-timeline-tsa/

Even with the many hours of video generated by

an immense number of cameras, we still need many

human operators responsible for verifying incidents

through observation on many screens. Automatic

analysis of this data can considerably help human

operators and improve the efficiency of these sys-

tems (Sumari et al., 2020). The research field study-

ing this problem is called Person Re-Identification

(Re-ID). It aims to distinguish specific individu-

als through images captured by surveillance cam-

eras in different scenarios in the same environ-

ment (Galanakis et al., 2019), such as an airport.

Thanks to the large amount of data generated for Re-

ID in recent years, there has been an exponential in-

crease in publications about Re-ID systems, mostly

considering deep learning solutions. For an overview

of popular approaches for Re-ID, we refer the reader

to the following survey (Yaghoubi et al., 2021).

Despite the increased performance of Re-ID mod-

els in the last decade, they are vulnerable to attacks

called adversarial examples (Bouniot et al., 2020).

This attack can confuse deep neural networks, making the classification models return erroneous predictions with high confidence (Goodfellow et al., 2014). An adversarial example attack on a Re-ID model can be a severe risk, such as a strike against an object detection system (footnote 2). Finding efficient attacks and countermeasures to mitigate them are active fields of research (Chen et al., 2020). We present a literature review about adversarial attacks in Section 2.

Andrade, E., Sampaio, I., Guérin, J. and Viterbo, J.

Combining Two Adversarial Attacks Against Person Re-Identification Systems.

DOI: 10.5220/0011623800003417

In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 5: VISAPP, pages 437-444

ISBN: 978-989-758-634-7; ISSN: 2184-4321

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

The main objective of our work is to strengthen

the degeneration of the classification accuracy of a

Re-ID model by combining two different types of ad-

versarial attacks. In addition, this paper also uses a

defense method for Re-ID’s hardening. The attacks

implemented and combined are 1. a modification of

the Fast Gradient Signed Method (Goodfellow et al.,

2014), known as Private Fast Gradient Signed Method

(P-FGSM) (Li et al., 2019), and 2. a state-of-the-art

method for Re-ID, called Deep Mis-Ranking (Wang

et al., 2020). For the defense, we try to apply the

method from (Sheikholeslami et al., 2019) to Re-ID,

which consists in using the Dropout layers during the

inference phase. As far as we know, the defense

method and one of the attacks have never been used

for Re-ID before.

The experiments are run using three known

datasets: Duke Multi-Tracking Multi-Camera Re-

Identification (DukeMTMC-ReID) (Ristani et al.,

2016), Market-1501 (Zheng et al., 2015) and Chinese

University of Hong Kong 03 (CUHK03) (Li et al.,

2014). For this work, we have the implementation of

two models of Re-ID systems: AlignedReID (Zhang

et al., 2017) and another system with Identification-

discriminative Embedding (IDE) (Zheng et al., 2016)

based on the known deep Residual Neural Network,

ResNet-50 (He et al., 2016).

This paper is organized in five sections. Section 2 discusses the different adversarial attack approaches for Re-ID present in the literature. Section 3 presents the details of the two attacks used in this work. Next, Section 4 presents the experiments performed on the implemented models and discusses the results obtained. Finally, Section 5 concludes this paper, describing some limitations and possible future work.

2 RELATED WORK

In 2014, there was an extensive study about adversarial examples and their effects (Goodfellow et al., 2014). The authors observed that more linear models are prone to fail under attacks. The direction of perturbations was the most crucial feature in drastically altering neural network predictions. The authors also showed that adversarial examples could generalize across different models. Generalization is facilitated when the perturbations are closely aligned with the weight vectors of the models and when the models learn similar functions while training for the same tasks. Furthermore, the neural network models that are easy to optimize were easy to confuse. In 2018, another paper reviewed attack and defense approaches for deep learning models (Yuan et al., 2019), applied to tasks such as image classification, image segmentation, and object detection.

Footnote 2: https://www.biometricupdate.com/201904/novel-techniques-that-can-trick-object-detection-systems-sounds-familiar-alarm

The Fast Gradient Signed Method (FGSM) approach emerged in 2014 and demonstrated how effective a simple, low-computation attack could be. It consists in adding imperceptible perturbations whose direction follows the sign of the gradient of the cost function with respect to the input data. In 2019, a variation of FGSM called Private FGSM (P-FGSM) achieved an excellent trade-off between the drop in classification accuracy and distortion of private classes (Li et al., 2019). The purpose of class privacy is to protect sensitive information in images when a classifier performs inference on them. This information may include the presence of people, faces, and other content that must not be exposed. Using a ResNet-50 model and the Places365-Standard (Zhou et al., 2017) dataset, the P-FGSM authors were able to fool the classifier 94.40% of the time in the top-5 classes with only a slight average reduction across three image quality measures. As far as we know, no other work in the literature has used P-FGSM for Re-ID.

The Opposite-Direction Feature Attack (ODFA)

paper (Zheng et al., 2018), implemented in 2018, used

a Dense Convolution Network (DenseNet) with a

depth of 121 as the victim model and another ResNet-

50 model for the generation of adversarial queries.

Three datasets were part of the experiments: Market-

1501, Caltech University Birds-200-2011 (CUB-200-

2011) (Wah et al., 2011) and CIFAR-10 (Krizhevsky

et al., 2009). The Market-1501 and CUB-200-2011

had better results than CIFAR-10 as ODFA handled

the recovery task better. For Market-1501, the mean

Average Precision (mAP) metric without the attack

in a specific victim model reached an accuracy of

77.14% (Sun et al., 2018), while the attack decreased

the accuracy to 21.52% using the same model.

Another attack from 2019 has two different proposals for dealing with adversarial patterns (AdvPattern): EvdAttack and ImpAttack (Wang et al., 2019). The authors used Market-1501 and a proprietary dataset, Person Re-Identification in Campus Streets (PRCS), to craft transformable patterns into adversarial clothing. Two models were part of the experiments: a Siamese Network (A) (Zheng et al., 2017) and a ResNet-50 capable of learning the discriminative embeddings of identities (B) (Zheng et al., 2016). For Market-1501, the mAP values before the application of AdvPattern are 62.7% (model A) and 57.3% (model B). Considering the dataset generated with EvdAttack, the authors achieved 4.4% in model A and 4.5% in model B. Using ImpAttack, the mAP decreased to 9.20% in model A and 10.9% in model B. The adoption of PRCS with the AdvPattern approach differs from the attacks addressed in our work.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

In 2020, there was an opposite approach to ODFA

with the implementation of Self Metric Attack (SMA)

and Furthest-Negative Attack (FNA) (Bouniot et al.,

2020). The authors performed both attacks on

Market-1501 and DukeMTMC-ReID. They adopted

ResNet-50 architectures using two distinct types of

loss minimization: the cross-entropy (C) (Xiong

et al., 2019) and the triplet loss (T) (Hermans et al.,

2017; Schroff et al., 2015). The accuracy results

achieved with the mAP metric for Market-1501 with-

out the attacks were 67.22% for T and 77.53% for C.

Using the SMA attack, there was a decrease in ac-

curacy to 0.05% for T and 0.26% for C. The FNA

obtained 0.05% for T and 0.07% for C. For the

DukeMTMC-ReID dataset, the mAP results achieved

without the attacks were 60.33% for T and 67.64% for

C. Again, with the SMA attack, there was a decrease

to 0.05% for T and 0.32% for C. The FNA obtained

0.04% for T and 0.06% for C.

The most important paper regarding adversarial

attack approaches for this work appeared in mid-

2020 (Wang et al., 2020). The Deep Mis-Ranking

attack is responsible for most state-of-the-art results

compared to our work. It is presented in detail in

Section 3.1. However, some results obtained in our

work are close to but not the same as those described

in the paper. Some of the problems in implementing

Deep Mis-Ranking included code errors to be cor-

rected. The experiments were not perfectly repro-

ducible, and results differ slightly from those initially

presented in the paper, even after corrections and us-

ing models with pre-trained weights.

3 COMBINED ATTACK METHODS

There is little attention to the security risks and the

impact of the attacks on Re-ID systems. This section

explains the approaches used in this work: Deep Mis-

Ranking, P-FGSM, and their combination.

3.1 Deep Mis-Ranking

Deep Mis-Ranking is a formulation to disrupt the ranking prediction of Re-ID models. Its main characteristic is high transferability, i.e., an attack implemented for dataset A can generalize to another dataset B. Other characteristics of Deep Mis-Ranking include the controllability and imperceptibility of the attack (Wang et al., 2020).

Figure 1 shows the visual representation of the framework. The generator $G$ produces the preliminary perturbations $P'$ that, multiplied with the mask $M$, originate the disturbances $P$ for each input image $I$. The generator $G$ is a ResNet-50 architecture, and it is trained jointly with the discriminator $D$ to form the general Generative Adversarial Network (GAN) structure of the framework. We commonly use this unsupervised neural network for image generation (Konidaris et al., 2019). $L_{GAN}$ represents the GAN loss, whereas $L_{adv\_etri}$, $L_{adv\_xent}$, and $L_{VP}$ correspond to mis-ranking, misclassification, and perception losses, respectively. $T$ represents the attacked Re-ID system and receives the adversarial image $\hat{I}$ as input.

Figure 1: The framework structure of the Deep Mis-

Ranking attack. The main objective of the attack is to max-

imize the distance between the samples from the same cat-

egory (pull) and minimize the distance between the sam-

ples from different categories (push). Source: (Wang et al.,

2020).

Looking more closely at $T$, the inputs and outputs follow the scheme illustrated in Figure 2. We aim to minimize the distance of each pair of samples from different categories (e.g., $(\hat{I}_c^k, I), \forall I \in \{I_{cd}\}$) while maximizing the distance of each pair of samples from the same category (e.g., $(\hat{I}_c^k, I), \forall I \in \{I_{cs}\}$) to achieve a successful attack.

Figure 2: The scheme of how the Deep Mis-Ranking attack

occurs in a Re-ID system T concerning pairs of samples

and their distances. Source: (Wang et al., 2020).

Equation 1 corresponds to $L_{GAN}$. While the discriminator $D$ tries to differentiate the real images from the adversarial ones, the generator $G$ tries to produce the perturbations in the input images. The expected value $E_{(I_{cd}, I_{cs})}$ represents the conditional expectation of $\log D_{1,2,3}(I_{cd}, I_{cs})$ given $I_{cd}$ and $I_{cs}$, in the form $E_{X,Y}[Y] = E_X[E_Y[Y \mid X]]$.

$$L_{GAN} = E_{(I_{cd}, I_{cs})}[\log D_{1,2,3}(I_{cd}, I_{cs})] + E_{I}[\log(1 - D_{1,2,3}(I, \hat{I}))] \tag{1}$$

The first loss related to a Re-ID system $T$ is $L_{adv\_etri}$, represented by Equation 2, where the expression $[x]_+$ is equal to $\max(0, x)$. This mis-ranking loss function follows the form of a triplet loss (Ding et al., 2015), aiming to minimize the distance of the mismatched pair while maximizing the distance of the matched pair. The letter $K$ represents the set of people's identities. Meanwhile, $C_k$ is the number of samples taken from the $k$-th identity and $I_c^k$ is the $c$-th image of identity $k$ in a mini-batch. The L2 norm used as a distance metric is represented by $\|\cdot\|_2$ and $\Delta$ is a margin threshold.

$$L_{adv\_etri} = \sum_{k=1}^{K} \sum_{c=1}^{C_k} \Big[ \max_{\substack{j \neq k \\ j = 1 \ldots K \\ c_d = 1 \ldots C_j}} \big\| T(\hat{I}_c^k) - T(\hat{I}_{c_d}^j) \big\|_2^2 - \min_{c_s = 1 \ldots C_k} \big\| T(\hat{I}_c^k) - T(\hat{I}_{c_s}^k) \big\|_2^2 + \Delta \Big]_+ \tag{2}$$
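To make the hinge in Equation 2 concrete, the following minimal sketch evaluates it on a toy mini-batch in plain Python. The names `squared_l2` and `mis_ranking_loss` and the dictionary layout are illustrative assumptions, not taken from the Deep Mis-Ranking code base. The sketch also assumes that the matched-pair minimum skips the anchor itself, since that distance is trivially zero; the text does not state how this case is handled.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def squared_l2(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mis_ranking_loss(features: Dict[int, List[Vector]], margin: float) -> float:
    """Evaluate the hinge of Equation 2 on one mini-batch.

    features[k] holds the embeddings T(I_hat) of the adversarial images of
    identity k (hypothetical layout chosen for this sketch).
    """
    total = 0.0
    for k, anchors in features.items():
        for c, anchor in enumerate(anchors):
            # Largest distance to any sample of a different identity (j != k).
            mismatched = max(
                (squared_l2(anchor, other)
                 for j, others in features.items() if j != k
                 for other in others),
                default=0.0,
            )
            # Smallest distance to another sample of the same identity.
            # Assumption: the anchor itself (distance 0) is excluded.
            matched = min(
                (squared_l2(anchor, same)
                 for s, same in enumerate(anchors) if s != c),
                default=0.0,
            )
            total += max(0.0, mismatched - matched + margin)
    return total


toy_batch = {0: [[0, 0], [1, 0]], 1: [[3, 0], [5, 0]]}
loss = mis_ranking_loss(toy_batch, margin=1.0)
```

Minimizing this quantity with respect to the adversarial images drives mismatched pairs together and matched pairs apart, which is the opposite of what a standard triplet loss encourages.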

Another loss present in the framework is $L_{adv\_xent}$ for the non-targeted attack (Equation 3), where $S$ denotes the softmax function and $\delta$ is the Dirac delta. The term $\upsilon$ is the smoothing regularization, $\upsilon = [\frac{1}{K-1}, \ldots, 0, \ldots, \frac{1}{K-1}]$, where $\upsilon_k$ is always equal to $\frac{1}{K-1}$ except in the case where $k$ is the ground-truth ID ($K$ is the set including each $k$-th person ID). The $\arg\min$ preceded by $\mathbb{1}$ represents the case in which we have the return of the indices of the minimum values of an output probability vector, indicating the least likely class (similar to the numpy.argmin function present in the NumPy library of Python).

$$L_{adv\_xent} = -\sum_{k=1}^{K} S(T(\hat{I}))_k \big( (1 - \delta)\, \mathbb{1}_{\arg\min T(I)_k} + \delta\, \upsilon_k \big) \tag{3}$$

In order to improve the visual quality for $T$ and prevent the attack from being detected by humans, we have Equation 4 corresponding to the perception loss $L_{VP}$. The formulation of this loss function originates from an approach to the structural similarity image quality paradigm (Wang et al., 2003). The comparison measures of contrast ($c_j$) and structure ($s_j$) on the $j$-th scale are calculated by

$$c_j(I, \hat{I}) = \frac{2\sigma_I \sigma_{\hat{I}} + C_2}{\sigma_I^2 + \sigma_{\hat{I}}^2 + C_2}, \qquad s_j(I, \hat{I}) = \frac{\sigma_{I\hat{I}} + C_3}{\sigma_I \sigma_{\hat{I}} + C_3},$$

where $\sigma_x$ is the standard deviation, $\sigma_x^2$ is the variance, and $\sigma_{xy}$ is the covariance. The level of the scales is represented by $L$, where $\alpha_L$, $\beta_j$ and $\gamma_j$ are the factors that help to re-weight the contribution of each component. Finally, we have the luminosity measure ($l$) calculated by

$$l_L(I, \hat{I}) = \frac{2\mu_I \mu_{\hat{I}} + C_1}{\mu_I^2 + \mu_{\hat{I}}^2 + C_1},$$

where $\mu_x$ is the mean.

$$L_{VP} = [l_L(I, \hat{I})]^{\alpha_L} \cdot \prod_{j=1}^{L} [c_j(I, \hat{I})]^{\beta_j} [s_j(I, \hat{I})]^{\gamma_j} \tag{4}$$
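As a rough illustration of the three comparison measures in Equation 4, the sketch below computes them for a single scale ($L = 1$) with all exponents set to 1, treating each image as a flat list of grayscale intensities. The function names and the values of the constants $C_1$, $C_2$, $C_3$ are assumptions: the constants follow the values commonly used for 8-bit images in the structural similarity literature, and the text above does not specify them. The multi-scale downsampling is omitted.

```python
from math import sqrt
from typing import Sequence, Tuple

# Assumed stabilising constants for 8-bit images (not given in the text).
C1 = (0.01 * 255) ** 2
C2 = (0.03 * 255) ** 2
C3 = C2 / 2


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def ssim_terms(image: Sequence[float],
               adversarial: Sequence[float]) -> Tuple[float, float, float]:
    """Return (luminance, contrast, structure) for two equally sized images."""
    mu_i, mu_a = _mean(image), _mean(adversarial)
    var_i = _mean([(p - mu_i) ** 2 for p in image])
    var_a = _mean([(q - mu_a) ** 2 for q in adversarial])
    cov = _mean([(p - mu_i) * (q - mu_a) for p, q in zip(image, adversarial)])
    sd_i, sd_a = sqrt(var_i), sqrt(var_a)

    luminance = (2 * mu_i * mu_a + C1) / (mu_i ** 2 + mu_a ** 2 + C1)
    contrast = (2 * sd_i * sd_a + C2) / (var_i + var_a + C2)
    structure = (cov + C3) / (sd_i * sd_a + C3)
    return luminance, contrast, structure


def perception_score(image: Sequence[float],
                     adversarial: Sequence[float]) -> float:
    """Single-scale product l * c * s (Equation 4 with L = 1, exponents 1)."""
    luminance, contrast, structure = ssim_terms(image, adversarial)
    return luminance * contrast * structure


clean = [10.0, 20.0, 30.0, 40.0]
perturbed = [12.0, 18.0, 33.0, 41.0]
score = perception_score(clean, perturbed)
```

A score close to 1 means the adversarial image is nearly indistinguishable from the original under this measure, which is the property the perception loss is meant to preserve.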

The mask $M$ determines the number of target pixels to attack. After multiplying the preliminary perturbation $P'$ with the mask $M$, we have the final perturbation $P$ with a controlled number of pixels enabled

to maintain discretion from the attack. The function

Gumbel softmax (Jang et al., 2016) is responsible for

choosing pixels from all possibilities. The general-

ization capacity of Deep Mis-Ranking is its main ad-

vantage. It is possible to use it with different Re-ID

systems and efficiently in black-box scenarios.

3.2 Private Fast Gradient Signed Method

The design of P-FGSM aims to “protect” the data of an image through directed distortions that make it difficult for a classifier to infer the image's class. At the same time, the approach aims to keep the image useful for social media users. P-FGSM is based on the FGSM attack already used in Re-ID and includes a limitation on the probability that automatic inference can expose the true class of a distorted image. This limitation may include even more disturbances that mislead models (Li et al., 2019).


Figure 3: The two images on the left side represent the true

class. On the right side, we have the distortions that make

them human-imperceptible and give a high misclassification

rate to the models. Source: (Li et al., 2019).

Figure 3 shows an example of two images after executing P-FGSM. The most significant difference between P-FGSM and other variants of the FGSM attack is irreversibility, obtained through the random selection of the target class among the subset of classes that do not contain the protected class. The target class and other classes can denote people’s different identities in a Re-ID dataset.

The class adapted as an adversarial example, $\tilde{y}$, works as a function of the classification probability vector $\mathbf{p}$. So, $\mathbf{p}$ being equal to the vector of features for classification, we have $\mathbf{p}'$, which contains the elements of $\mathbf{p}$ in descending order, $\mathbf{p}' = (p'_1, \ldots, p'_D)$, where $D$ represents the scene classes. Equation 5 corresponds to the random choice of $\tilde{y}$ from the subset of classes whose cumulative probability exceeds a threshold $\sigma$ in the interval $[0, 1]$, in which $R$ is the function that randomly picks one class label $y_j$ from the input set.

$$\tilde{y} = R\Big(\Big\{ y_j : \sum_{i=1}^{j-1} p'_i > \sigma \Big\}\Big) \tag{5}$$

Lastly, in Equation 6, we have the generation of the protected image $\dot{x} = \dot{x}_N$ for $N$ iterations, starting from $\dot{x}_0 = x$ up to a maximum number of iterations, aiming to increase the probability of predicting $\tilde{y}$. We can represent the cost function by $J_M$, and it is used in training to estimate the parameters $\theta$ of the classifier $M$. The $\varepsilon$ represents the maximum magnitude of the adversarial perturbation and $\nabla_x$ is, in this case, the gradient with respect to the image $x$.

$$\dot{x}_N = \dot{x}_{N-1} - \varepsilon \, \mathrm{sign}(\nabla_x J_M(\theta, \dot{x}_{N-1}, \tilde{y})) \tag{6}$$
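Equations 5 and 6 can be illustrated with a small, self-contained sketch. It uses a toy linear softmax classifier so that the input gradient of the cross-entropy cost has a closed form; this classifier, the function names, and the fallback used when the candidate set of Equation 5 is empty are all assumptions made for illustration and are not the Re-ID models or the implementation used in this work.

```python
import math
import random
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits(weights: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Toy linear classifier: one weight row per class (an assumption)."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def pick_target(probs: Sequence[float], sigma: float, rng: random.Random) -> int:
    """Equation 5: draw a class whose preceding cumulative probability
    (classes sorted in descending order) exceeds sigma."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    candidates, cumulative = [], 0.0
    for idx in order:
        if cumulative > sigma:
            candidates.append(idx)
        cumulative += probs[idx]
    if not candidates:  # assumed fallback: least likely class
        candidates = [order[-1]]
    return rng.choice(candidates)


def input_gradient(weights, x, target: int) -> List[float]:
    """Gradient of the cross-entropy towards `target` with respect to x."""
    probs = softmax(logits(weights, x))
    residual = [p - (1.0 if k == target else 0.0) for k, p in enumerate(probs)]
    return [sum(residual[k] * weights[k][d] for k in range(len(weights)))
            for d in range(len(x))]


def p_fgsm(weights, x, sigma: float, eps: float, n_iter: int,
           rng: random.Random) -> Tuple[List[float], int]:
    """Equation 6: repeated signed-gradient steps towards the drawn target."""
    target = pick_target(softmax(logits(weights, x)), sigma, rng)
    adv = list(x)
    for _ in range(n_iter):
        grad = input_gradient(weights, adv, target)
        adv = [a - eps * ((g > 0) - (g < 0)) for a, g in zip(adv, grad)]
    return adv, target


W = [[2.0, 0.0], [0.0, 2.0], [-2.0, -2.0]]
x0 = [1.0, 0.2]
x_adv, y_tilde = p_fgsm(W, x0, sigma=0.5, eps=0.05, n_iter=20,
                        rng=random.Random(0))
```

Because the target is drawn at random from the candidate set rather than fixed, an observer cannot tell from the distorted image alone which class was chosen, and each coordinate of the input moves by at most `eps * n_iter`.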

4 EXPERIMENTS

We ran the evaluation on a computer with a 2.9 GHz Intel Xeon processor, 16 GB of 2400 MHz DDR4 RAM, and an Nvidia Quadro P5000 GPU. The datasets used were DukeMTMC-ReID (Ristani

et al., 2016), Market-1501 (Zheng et al., 2015), and

CUHK03 (Li et al., 2014). DukeMTMC-ReID had

16,522 images (bounding boxes) with 702 identities

for training and 19,889 images with 702 other identi-

ties for testing. We used 2228 bounding boxes to cor-

rectly identify the test identities considering the query

set. For Market-1501, the composition was 12,936

images of 751 identities for training and 19,281 im-

ages of 750 identities for testing. We selected 3368

bounding boxes for the query set. Finally, CUHK03

comprised 7365 images of 767 identities for train-

ing and 6732 images of 700 identities for testing,

and the query set contained 1400 images. It is im-

portant to mention that we neglected some “junk im-

ages” from Market-1501 in our testing set. These im-

ages were neither good nor bad considering the De-

formable Part Model (DPM) bounding boxes; they

could hinder more than help, making no difference in

the re-identification process and accuracy. The DPM

is a pedestrian detector employed instead of the hand-

cropped boxes. We also did not use some images from

CUHK03 that we could not read from the MATLAB

file that composes the dataset.

The implemented models were IDE (ResNet-

50) (He et al., 2016) and AlignedReID (Zhang et al.,

2017). In addition to the Deep Mis-Ranking (Wang

et al., 2020) and P-FGSM (Li et al., 2019) that we use

as a combined attack against the models that charac-

terize the Re-ID systems, we also implemented the

Dropout at inference as a defense method. As far as we know, this defense method has not yet been implemented for Re-ID systems.

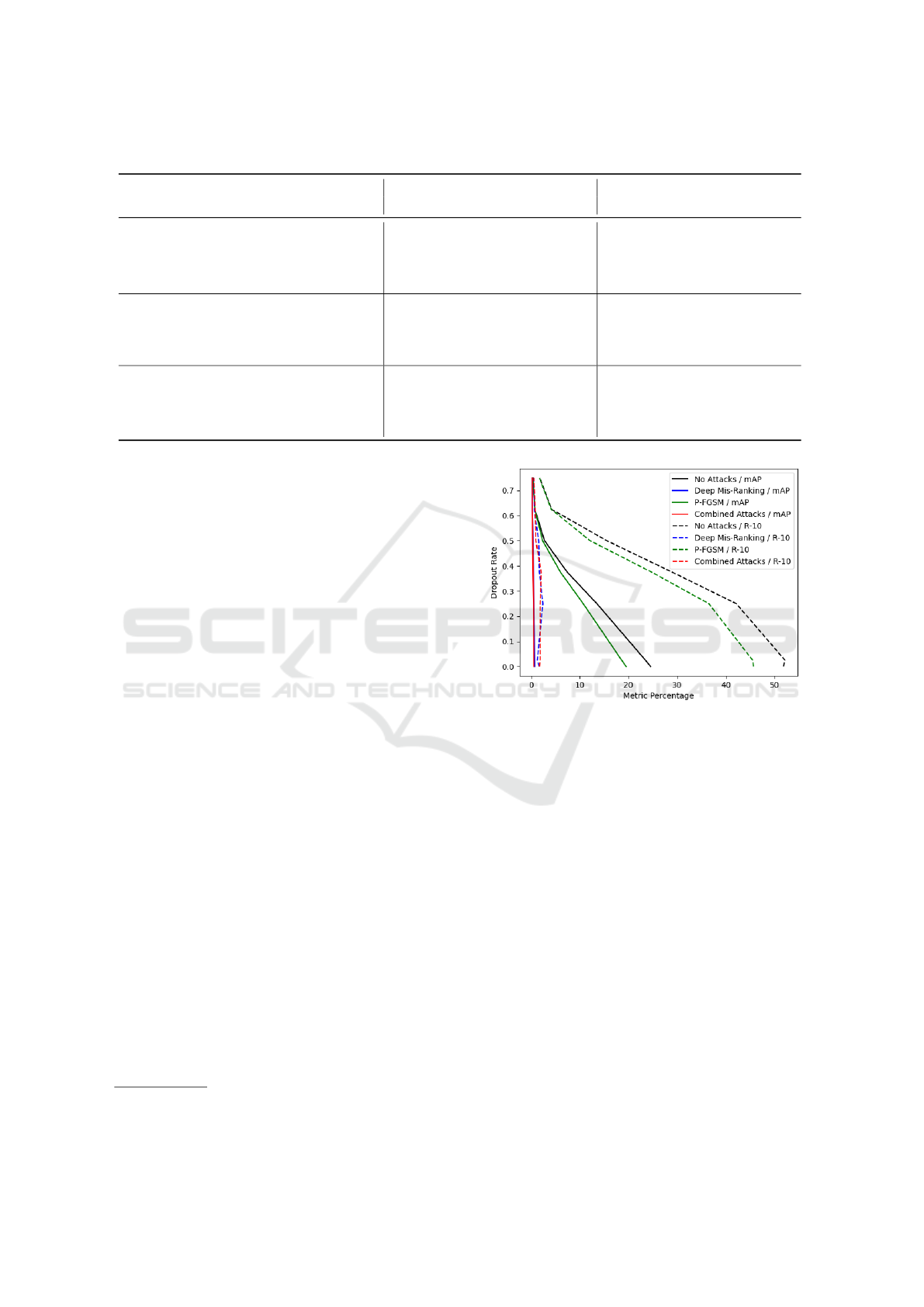

Table 1 shows the results using the metrics mean Average Precision (mAP), Rank-1 (R-1), Rank-5 (R-5), and Rank-10 (R-10) for the experiments with and without the combined attacks. Considering the combined attacks, we implemented one attack after the other, using P-FGSM first. There was no significant difference in changing the order of the attacks, and we used the same pre-trained weights from the Deep Mis-Ranking work (footnote 3).

Table 1: The results (in percent) with and without combined attacks for the chosen models and datasets.

| Dataset | Method | IDE mAP | IDE R-1 | IDE R-5 | IDE R-10 | AlignedReID mAP | AlignedReID R-1 | AlignedReID R-5 | AlignedReID R-10 |
|---|---|---|---|---|---|---|---|---|---|
| DukeMTMC-ReID | No Attacks | 58.14 | 76.53 | 86.76 | 89.99 | 69.75 | 82.14 | 91.65 | 94.43 |
| DukeMTMC-ReID | Deep Mis-Ranking | 4.68 | 5.16 | 8.71 | 11.00 | 3.12 | 3.23 | 6.01 | 7.99 |
| DukeMTMC-ReID | P-FGSM | 56.06 | 75.45 | 86.54 | 90.08 | 67.05 | 81.82 | 91.16 | 93.85 |
| DukeMTMC-ReID | Combined Attacks | 4.71 | 5.25 | 9.87 | 11.98 | 3.09 | 3.77 | 7.00 | 8.75 |
| Market-1501 | No Attacks | 61.13 | 80.85 | 91.89 | 94.83 | 79.10 | 91.83 | 96.97 | 98.13 |
| Market-1501 | Deep Mis-Ranking | 4.30 | 3.98 | 8.88 | 12.23 | 2.58 | 1.84 | 4.22 | 6.29 |
| Market-1501 | P-FGSM | 58.08 | 79.33 | 91.27 | 94.12 | 76.84 | 91.12 | 96.82 | 98.25 |
| Market-1501 | Combined Attacks | 4.24 | 3.95 | 9.38 | 12.83 | 2.44 | 1.96 | 4.54 | 6.71 |
| CUHK03 | No Attacks | 24.54 | 24.93 | 43.29 | 51.79 | 59.65 | 61.50 | 79.43 | 85.79 |
| CUHK03 | Deep Mis-Ranking | 0.77 | 0.29 | 1.00 | 1.71 | 2.19 | 1.36 | 2.50 | 4.36 |
| CUHK03 | P-FGSM | 19.53 | 21.14 | 35.79 | 45.64 | 50.05 | 53.50 | 75.07 | 82.21 |
| CUHK03 | Combined Attacks | 0.57 | 0.07 | 0.79 | 1.71 | 1.76 | 1.14 | 1.93 | 3.36 |
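For readers less familiar with the metrics reported in Table 1, the sketch below shows one common way to compute mAP and Rank-k from a query-to-gallery distance matrix. It is a simplified illustration: the function name `evaluate` and its arguments are hypothetical, and it omits the camera-based filtering of gallery samples that standard Re-ID evaluation protocols usually apply, so it is not the exact evaluation code behind the reported numbers.

```python
from typing import Dict, List, Sequence, Tuple


def evaluate(dist: Sequence[Sequence[float]],
             query_ids: Sequence[int],
             gallery_ids: Sequence[int],
             ranks: Tuple[int, ...] = (1, 5, 10)) -> Tuple[float, Dict[int, float]]:
    """Return (mAP, {k: Rank-k}) as fractions in [0, 1].

    dist[q][g] is the distance between query q and gallery image g.
    """
    hits = {k: 0 for k in ranks}
    average_precisions: List[float] = []
    for q, row in enumerate(dist):
        # Gallery indices sorted from the closest to the farthest sample.
        order = sorted(range(len(row)), key=row.__getitem__)
        matches = [gallery_ids[g] == query_ids[q] for g in order]
        if not any(matches):
            continue  # queries without a gallery match are skipped
        first_hit = matches.index(True)
        for k in ranks:
            hits[k] += first_hit < k  # correct identity within the top k
        found, precisions = 0, []
        for position, is_match in enumerate(matches, start=1):
            if is_match:
                found += 1
                precisions.append(found / position)
        average_precisions.append(sum(precisions) / len(precisions))
    valid = len(average_precisions)
    if valid == 0:
        raise ValueError("no query has a matching gallery sample")
    return sum(average_precisions) / valid, {k: hits[k] / valid for k in ranks}


toy_dist = [[0.1, 0.5, 0.9, 0.3],
            [0.4, 0.6, 0.2, 0.1]]
mean_ap, cmc = evaluate(toy_dist, query_ids=[1, 2],
                        gallery_ids=[1, 2, 1, 3], ranks=(1, 5))
```

An attack that succeeds pushes correct matches down the ranking, which lowers both the average precision of each query and the fraction of queries with a correct match among the first k results.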

Looking again at Table 1, if we compare the results without attacks and with Deep Mis-Ranking only, there are differences with respect to the original paper. For IDE (ResNet-50), for instance, the results without attacks match the original paper when using the exact implementation, while the results with Deep Mis-Ranking differ. We used the same split for training and test sets. So, this difference could stem from the dataset and its samples, because it is no longer available on the official repository site, or from the available pre-trained weights.

We aimed for the combined attacks to decrease the classification results further than a single attack. This additional decrease occurred most often with the CUHK03 dataset, as shown in Table 1. However, across all the datasets and models, the combined attacks more often produced a slight increase in the considered metrics compared to the Deep Mis-Ranking attack alone, although this rise seems less important than the cases in which the results decreased even further.

Furthermore, we used Dropout during inference as a defense method. We expected a good trade-off for the Re-ID system against adversarial examples: accepting a small loss in identification results without attacks in exchange for a considerable reduction of the loss in identification results under attack. Nonetheless, unlike in other cases, we did not get significant results using that method for the Re-ID systems. We can see the results of this trial in Figure 4 for the mAP and Rank-10 metrics with the IDE model and CUHK03 dataset.

The Dropout behavior in Figure 4 illustrates the insignificant gain as a defense method. We used Dropout rates from 0.025 to 0.75, and the best increase was in the R-10 metric against the Deep Mis-Ranking attack, with a rate of 0.25, improving from 1.71% to 2.73%. Meanwhile, the mAP metric at the same rate decreased from 0.77% to 0.60%, which does not pay off. For the other model and datasets, the results were not good enough either.

Figure 4: The Dropout rate & metric percentage with and without attacks for the mAP and Rank-10 (R-10) metrics with the IDE model and CUHK03 dataset.

Footnote 3: https://github.com/whj363636/Adversarial-attack-on-Person-ReID-With-Deep-Mis-Ranking

Finally, the Dropout during the inference consid-

ered all the hidden layers of the two models. The time

for running the experiments on the testing set for IDE

(ResNet-50) model and DukeMTMC-ReID dataset

was approximately 4 minutes. For the Market-1501

dataset, 4 minutes and 30 seconds. The CUHK03

dataset spent nearly 1 minute and 30 seconds of the

execution time. Considering the AlignedReID model

and DukeMTMC-ReID dataset, we finished in ap-

proximately 8 minutes. For the Market-1501 dataset,

it was 11 minutes. Lastly, the CUHK03 dataset spent

2 minutes and 30 seconds.
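The idea of keeping Dropout active at inference, as evaluated in this section, can be sketched on a toy two-layer network in plain Python. Everything here is an illustrative assumption: the function names, the tiny network, and the use of inverted Dropout (rescaling kept units by 1 / (1 - rate)) are not taken from the implementation used in the experiments.

```python
import random
from typing import List, Sequence


def dropout(activations: Sequence[float], rate: float,
            rng: random.Random) -> List[float]:
    """Zero each unit with probability `rate` and rescale the kept ones
    (inverted Dropout, an assumption of this sketch)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


def dense(weights: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Fully connected layer without bias: one weight row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def embed_with_dropout(x: Sequence[float],
                       w_hidden: Sequence[Sequence[float]],
                       w_out: Sequence[Sequence[float]],
                       rate: float, rng: random.Random) -> List[float]:
    """Forward pass in which Dropout stays enabled at inference time."""
    hidden = [max(0.0, h) for h in dense(w_hidden, x)]  # ReLU hidden layer
    hidden = dropout(hidden, rate, rng)                 # not disabled for testing
    return dense(w_out, hidden)


rng = random.Random(0)
w_hidden = [[1.0, 0.0], [0.0, 1.0]]
w_out = [[1.0, 1.0]]
deterministic = embed_with_dropout([2.0, 3.0], w_hidden, w_out, 0.0, rng)
randomized = embed_with_dropout([2.0, 3.0], w_hidden, w_out, 0.25, rng)
```

With a non-zero rate, repeated forward passes of the same image produce different embeddings, which is the source of randomness the defense relies on; the rates explored in the experiments range from 0.025 to 0.75.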


5 CONCLUSION

In this work, we proposed the combination of two ad-

versarial attacks against Re-ID systems. As far as we

know, one of the attacks, the P-FGSM, was never im-

plemented before for this kind of system. More than

that, we used Dropout during the inference as a coun-

termeasure for the considered attacks.

For the experiments, we used three datasets and two models that achieve some of the best results and are among the most widely used. Our tests aimed to increase the obstacles even further for Re-ID with the combination of the attack methods. These tests strengthened the decrease in the classification results in some cases. However, the proposed countermeasure did not perform well against the attacks.

There were limitations related to the accessible data and unexpected results considering the already available attack implementations. However, we intend to continue exploring this problem concerning adversarial attacks and Re-ID systems. We also hope that combining different attack and defense methods can be an approach for our future work and for other works.

ACKNOWLEDGEMENT

The Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES), Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq), and PrimeUp Soluções de TI LTDA financed part of this work.

REFERENCES

Bouniot, Q., Audigier, R., and Loesch, A. (2020). Vul-

nerability of person re-identification models to metric

adversarial attacks. In Proceedings of the IEEE/CVF

Conference on Computer Vision and Pattern Recogni-

tion Workshops, pages 794–795.

Chen, K., Zhu, H., Yan, L., and Wang, J. (2020). A survey

on adversarial examples in deep learning. Journal on

Big Data, 2(2):71.

Ding, S., Lin, L., Wang, G., and Chao, H. (2015). Deep

feature learning with relative distance comparison

for person re-identification. Pattern Recognition,

48(10):2993–3003.

Galanakis, G., Zabulis, X., and Argyros, A. A. (2019). Nov-

elty detection for person re-identification in an open

world. In VISIGRAPP (5: VISAPP), pages 401–411.

Goodfellow, I. J., Shlens, J., and Szegedy, C. (2014). Ex-

plaining and harnessing adversarial examples. arXiv

preprint arXiv:1412.6572.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep resid-

ual learning for image recognition. In Proceedings of

the IEEE conference on computer vision and pattern

recognition, pages 770–778.

Hermans, A., Beyer, L., and Leibe, B. (2017). In defense

of the triplet loss for person re-identification. arXiv

preprint arXiv:1703.07737.

Islam, K. (2020). Person search: New paradigm of per-

son re-identification: A survey and outlook of recent

works. Image and Vision Computing, 101:103970.

Jang, E., Gu, S., and Poole, B. (2016). Categorical repa-

rameterization with gumbel-softmax. arXiv preprint

arXiv:1611.01144.

Khan, P. W., Byun, Y.-C., and Park, N. (2020). A data ver-

ification system for cctv surveillance cameras using

blockchain technology in smart cities. Electronics,

9(3):484.

Kitchin, R. and Dodge, M. (2019). The (in) security of

smart cities: Vulnerabilities, risks, mitigation, and

prevention. Journal of Urban Technology, 26(2):47–

65.

Konidaris, F., Tagaris, T., Sdraka, M., and Stafylopatis, A.

(2019). Generative adversarial networks as an ad-

vanced data augmentation technique for mri data. In

VISIGRAPP (5: VISAPP), pages 48–59.

Krizhevsky, A., Hinton, G., et al. (2009). Learning mul-

tiple layers of features from tiny images. Master’s

thesis, Department of Computer Science, University

of Toronto.

Kurnianggoro, L. and Jo, K.-H. (2017). Identification of

pedestrian attributes using deep network. In IECON

2017-43rd Annual Conference of the IEEE Industrial

Electronics Society, pages 8503–8507. IEEE.

Li, C. Y., Shamsabadi, A. S., Sanchez-Matilla, R., Mazzon,

R., and Cavallaro, A. (2019). Scene privacy protec-

tion. In ICASSP 2019-2019 IEEE International Con-

ference on Acoustics, Speech and Signal Processing

(ICASSP), pages 2502–2506. IEEE.

Li, W., Zhao, R., Xiao, T., and Wang, X. (2014). Deep-

reid: Deep filter pairing neural network for person

re-identification. In Proceedings of the IEEE con-

ference on computer vision and pattern recognition,

pages 152–159.

Luo, H., Gu, Y., Liao, X., Lai, S., and Jiang, W. (2019).

Bag of tricks and a strong baseline for deep person re-

identification. In Proceedings of the IEEE/CVF Con-

ference on Computer Vision and Pattern Recognition

Workshops, pages 0–0.

Ristani, E., Solera, F., Zou, R., Cucchiara, R., and Tomasi, C. (2016). Performance measures and a data set for multi-target, multi-camera tracking. In European conference on computer vision, pages 17–35. Springer.

Schroff, F., Kalenichenko, D., and Philbin, J. (2015). FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 815–823.

Shah, J. H., Lin, M., and Chen, Z. (2016). Multi-camera handoff for person re-identification. Neurocomputing, 191:238–248.


Sheikholeslami, F., Jain, S., and Giannakis, G. B. (2019). Efficient randomized defense against adversarial attacks in deep convolutional neural networks. In ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 3277–3281. IEEE.

Sumari, F. O., Machaca, L., Huaman, J., Clua, E. W., and Guérin, J. (2020). Towards practical implementations of person re-identification from full video frames. Pattern Recognition Letters, 138:513–519.

Sun, Y., Zheng, L., Yang, Y., Tian, Q., and Wang, S. (2018). Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European conference on computer vision (ECCV), pages 480–496.

Wah, C., Branson, S., Welinder, P., Perona, P., and Belongie, S. (2011). The Caltech-UCSD Birds-200-2011 Dataset. Technical Report CNS-TR-2011-001, California Institute of Technology.

Wang, H., Wang, G., Li, Y., Zhang, D., and Lin, L. (2020). Transferable, controllable, and inconspicuous adversarial attacks on person re-identification with deep mis-ranking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 342–351.

Wang, Z., Simoncelli, E. P., and Bovik, A. C. (2003). Multiscale structural similarity for image quality assessment. In The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, volume 2, pages 1398–1402. IEEE.

Wang, Z., Zheng, S., Song, M., Wang, Q., Rahimpour, A., and Qi, H. (2019). advPattern: Physical-world attacks on deep person re-identification via adversarially transformable patterns. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8341–8350.

Xiong, F., Xiao, Y., Cao, Z., Gong, K., Fang, Z., and Zhou, J. T. (2019). Good practices on building effective CNN baseline model for person re-identification. In Tenth International Conference on Graphics and Image Processing (ICGIP 2018), volume 11069, page 110690I. International Society for Optics and Photonics.

Yaghoubi, E., Kumar, A., and Proença, H. (2021). SSS-PR: A short survey of surveys in person re-identification. Pattern Recognition Letters, 143:50–57.

Yuan, X., He, P., Zhu, Q., and Li, X. (2019). Adversarial examples: Attacks and defenses for deep learning. IEEE transactions on neural networks and learning systems, 30(9):2805–2824.

Zhang, X., Luo, H., Fan, X., Xiang, W., Sun, Y., Xiao, Q., Jiang, W., Zhang, C., and Sun, J. (2017). AlignedReID: Surpassing human-level performance in person re-identification. arXiv preprint arXiv:1711.08184.

Zheng, L., Shen, L., Tian, L., Wang, S., Wang, J., and Tian, Q. (2015). Scalable person re-identification: A benchmark. In Proceedings of the IEEE international conference on computer vision, pages 1116–1124.

Zheng, L., Yang, Y., and Hauptmann, A. G. (2016). Person re-identification: Past, present and future. arXiv preprint arXiv:1610.02984.

Zheng, Z., Zheng, L., Hu, Z., and Yang, Y. (2018). Open set adversarial examples. arXiv preprint arXiv:1809.02681, 3.

Zheng, Z., Zheng, L., and Yang, Y. (2017). A discriminatively learned CNN embedding for person reidentification. ACM transactions on multimedia computing, communications, and applications (TOMM), 14(1):1–20.

Zhou, B., Lapedriza, A., Khosla, A., Oliva, A., and Torralba, A. (2017). Places: A 10 million image database for scene recognition. IEEE transactions on pattern analysis and machine intelligence, 40(6):1452–1464.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications
