A New Collision Avoidance System for Smart Wheelchairs Using Deep Learning

Malik Haddad¹, David Sanders², Giles Tewkesbury², Martin Langner³ and Will Keeble²

¹ Northeastern University – London, St. Katharine’s Way, London, U.K.

² Faculty of Technology, University of Portsmouth, Anglesea Road, Portsmouth, U.K.

³ Chailey Heritage Foundation, North Chailey, Lewes, U.K.

Keywords: Collision Avoidance, Smart Wheelchair, Steering, Deep Learning.

Abstract: The work presented describes a new collision avoidance system for smart wheelchair steering using Deep Learning. The system used an Artificial Neural Network (ANN) and applied a rule-based method to create testing and training sets. Three ultrasonic sensors were used to create an array. The sensors measured the distance to the closest object to the left, to the right and in front of the wheelchair. Readings from the array were used as inputs to the ANN. The driving directions considered were spin left, turn left, forward, spin right, turn right and stop. The new system achieved 99.17% training accuracy and 97.53% testing accuracy, and testing confirmed that it successfully drove a smart wheelchair away from obstacles. The system can be overridden if required. Clinical tests will be carried out at Chailey Heritage Foundation.

1 INTRODUCTION

A new collision avoidance system for smart wheelchairs using Deep Learning is presented. The work is part of wider research carried out at the University of Portsmouth and Chailey Heritage Foundation, supported by the Engineering and Physical Sciences Research Council (EPSRC) (Sanders and Gegov, 2018). The aim of the research is to apply AI to powered mobility problems to improve movement and enhance quality of life for users with impairments.

The number of people diagnosed with disability worldwide is on the rise. The type of disability is shifting from mostly physical to a more complex mix of physical and cognitive disabilities. New systems to address that shift in disability are required. Smart mobility is becoming more acceptable and useful to support individuals with a disability (Haddad and Sanders, 2020). Smart mobility is expected to revolutionize the quality of life of people with disabilities in the next two decades. Many researchers have presented novel approaches for navigating powered mobility (Sanders et al., 2010; Haddad and Sanders, 2019; Haddad et al., 2019; Haddad et al., 2020a; Haddad et al., 2020b) by creating Human Machine Interfaces (Nguyen et al., 2013; Haddad et al., 2020c; Haddad et al., 2020d; Haddad et al., 2021a), intelligent collision avoidance systems and intelligent controllers (Sanders and Stott, 2009; Langner, 2012; Sanders et al., 2021), sensors and sensor fusion (Larsson et al., 2008; Milanes et al., 2008; Sanders and Bausch, 2015; Sanders, 2016; Sanders et al., 2019), Deep Learning (Haddad and Sanders, 2020), expert systems (Sanders et al., 2018; Sanders et al., 2020), and image processing (Sanders, 2009; Tewkesbury et al., 2021; Haddad et al., 2021b). They have also analysed the behaviour of powered wheelchair drivers to improve mobility (Sanders, 2009; Sanders et al., 2016; Sanders et al., 2017; Sanders et al., 2019b; Haddad et al., 2020e; Haddad et al., 2020f; Sanders et al., 2020b; Sanders et al., 2020c; Sanders et al., 2021b).

This paper presents a collision avoidance system used to safely steer smart wheelchairs away from obstacles by employing Deep Learning. Section 2 presents the Deep Learning method. Section 3 presents the training and testing of the Deep Learning architecture. Section 4 presents some testing of the collision avoidance system. Section 5 presents a discussion and some results. Section 6 presents conclusions and future work.

Haddad, M., Sanders, D., Tewkesbury, G., Langner, M. and Keeble, W.

A New Collision Avoidance System for Smart Wheelchairs Using Deep Learning.

DOI: 10.5220/0011903000003612

In Proceedings of the 3rd International Symposium on Automation, Information and Computing (ISAIC 2022), pages 75-79

ISBN: 978-989-758-622-4; ISSN: 2975-9463

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 THE NEW SYSTEM

A new Deep Learning architecture was created to deliver a collision-free driving route for smart wheelchairs. An array of three ultrasonic sensors was created. The sensors detected objects in the wheelchair’s surroundings and calculated the distances to the nearest object to the right ($D_{\mathrm{right}}$), in front ($D_{\mathrm{centre}}$), and to the left ($D_{\mathrm{left}}$) of the wheelchair. These three distances were the inputs to the Deep Learning approach. The new system conducted Deep Learning and provided a safe driving direction for the smart wheelchair based on these distances. The directions considered were turn left, turn right, spin left, spin right, forward and stop.

A new Artificial Neural Network (ANN) was

created and used in this paper. The structure of the

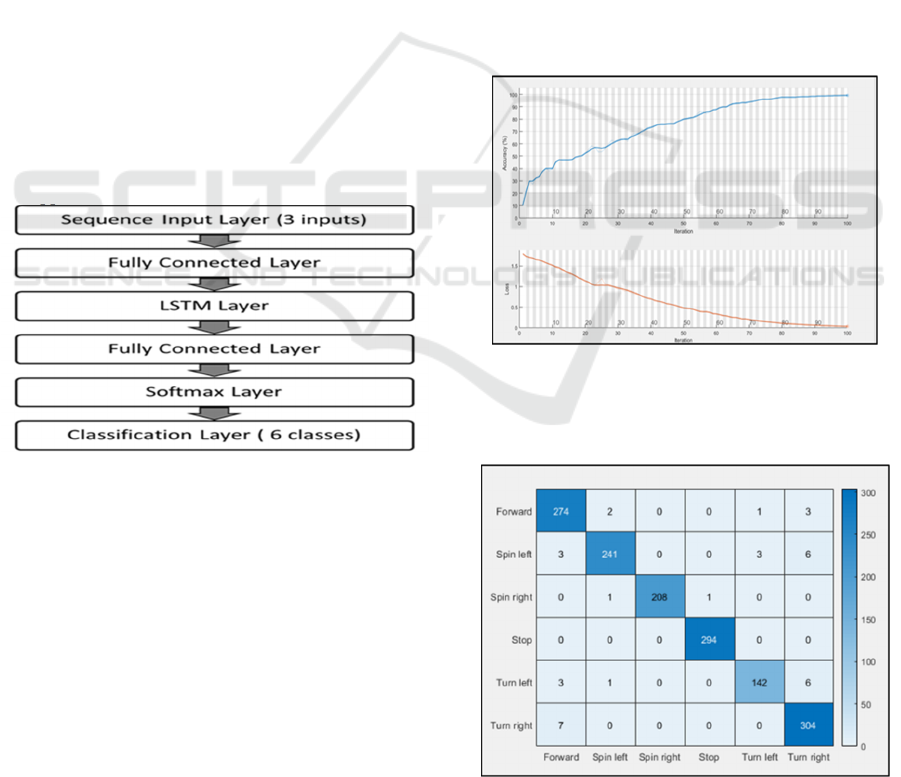

ANN is shown in figure 1.

The rule-based method was considered to create

testing and training sets for the architecture similar to

the approach used in Haddad and Sanders (2020).

Figure 1: Structure of the ANN.
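The rule-based labelling used to build the training and testing sets can be pictured with a minimal sketch. The actual rules are those of Haddad and Sanders (2020) and are not reproduced in this paper, so the threshold `NEAR`, the rule ordering and the function name `label_direction` below are illustrative assumptions only, as is the assumption that readings are scaled so that larger values mean more free space.

```python
# Illustrative only: the real rule set is defined in Haddad and Sanders (2020).
# NEAR and the rule ordering are assumptions made for this sketch.
NEAR = 0.10  # hypothetical threshold on the scaled sensor reading


def label_direction(d_right: float, d_centre: float, d_left: float) -> str:
    """Map three scaled distance readings to one of six driving directions."""
    right_blocked = d_right < NEAR
    centre_blocked = d_centre < NEAR
    left_blocked = d_left < NEAR

    if centre_blocked:
        if right_blocked and left_blocked:
            return "stop"          # boxed in on all three sides
        if right_blocked:
            return "spin left"     # front and right blocked
        if left_blocked:
            return "spin right"    # front and left blocked
        # Only the front is blocked: spin towards the side with more space.
        return "spin left" if d_left >= d_right else "spin right"
    if right_blocked and not left_blocked:
        return "turn left"         # veer away from an obstacle on the right
    if left_blocked and not right_blocked:
        return "turn right"        # veer away from an obstacle on the left
    return "forward"               # path ahead is clear
```

Applying a function of this kind to every row of sensor readings would yield one direction label per row, which is the form of data a classifier needs for supervised training.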

The ANN consisted of six layers:

1. Input Sequence Layer (3 inputs).

2. Fully Connected Layer.

3. LSTM Layer (100 hidden nodes).

4. Fully Connected Layer.

5. Soft-Max Layer.

6. Classification Layer.

MATLAB was used to create the ANN with 3 input units, 100 hidden units in the LSTM Layer and 6 outputs. The Adam (adaptive moment estimation) optimiser was used with an initial learning rate of 0.01 and 100 epochs.
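The last two layers turn the six scores from the second fully connected layer into a single driving direction. The following is a minimal sketch of that step in Python (the paper used MATLAB); the score values and the class ordering in `DIRECTIONS` are made up for illustration and do not come from the trained network.

```python
import math

# Class ordering is an assumption; the paper does not state it.
DIRECTIONS = ["spin left", "turn left", "forward", "turn right", "spin right", "stop"]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores):
    """Return the most probable direction and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return DIRECTIONS[best], probs[best]


# Hypothetical scores from the final fully connected layer.
direction, confidence = classify([0.2, 0.1, 3.5, 0.0, -0.4, -1.0])
```

The soft-max output can be read as the network's confidence in each of the six directions, and the classification layer simply reports the largest one.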

3 TRAINING AND TESTING THE DEEP LEARNING ARCHITECTURE

MATLAB was used to train and test the Deep Learning architecture. A 5000 × 4 matrix from Haddad and Sanders (2020) was used for testing and training. The matrix was divided in a 3:7 ratio to create the testing and training sets respectively.
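A 3:7 split of 5000 rows gives 1500 testing rows and 3500 training rows. The sketch below shows one way to make such a split. It assumes each row holds three sensor readings followed by a direction label (a natural reading of the four columns, but not stated in the paper), uses random placeholder data rather than the actual matrix, and shuffles with a fixed seed, which is also an assumption.

```python
import random


def split_rows(rows, test_fraction=0.3, seed=0):
    """Shuffle the rows and split them into (training, testing) lists."""
    shuffled = list(rows)                    # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder data standing in for the 5000 x 4 matrix: three readings and a label.
rng = random.Random(1)
matrix = [[rng.random(), rng.random(), rng.random(), rng.randrange(6)] for _ in range(5000)]

train_rows, test_rows = split_rows(matrix)
print(len(train_rows), len(test_rows))
```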

Figure 2 shows the training of the ANN using 0.001 as a learning rate and 100 epochs. As training proceeded, training accuracy improved (shown as a blue curve in the top half of figure 2) and training loss reduced (shown as an orange curve in the bottom half of figure 2). After completing 100 epochs, the training accuracy increased to more than 99%. The testing accuracy reached 97.53% when tested against the same testing set considered in Haddad and Sanders (2020). Figure 3 shows the confusion matrix generated from testing the architecture against the testing set.

Figure 2: Screenshot of training progress. Training accuracy is shown as a blue curve (top) increasing and training loss is shown as an orange curve (bottom) decreasing.

Figure 3: Confusion matrix used to assess the architecture accuracy.
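Overall accuracy is read off a confusion matrix as the sum of the diagonal (correct predictions) divided by the total number of samples. The sketch below shows that calculation on a small made-up three-class matrix; the counts are illustrative and are not the values in figure 3, and the function names are hypothetical.

```python
def accuracy_from_confusion(matrix):
    """Overall accuracy: correctly classified samples / all samples."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_recall(matrix):
    """Fraction of each true class (row) that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Made-up counts: rows are true classes, columns are predicted classes.
example = [
    [48, 2, 0],
    [1, 45, 4],
    [0, 3, 47],
]
print(f"accuracy = {accuracy_from_confusion(example):.4f}")
```

The per-class values are worth checking alongside the overall figure, because a high overall accuracy can hide a weak class such as stop if that class is rare in the testing set.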


The architecture required 1 minute and 15 seconds to finish 100 epochs with a 0.001 initial learning rate. The training accuracy reached 99.17% and the testing accuracy reached 97.53%.

4 TESTING

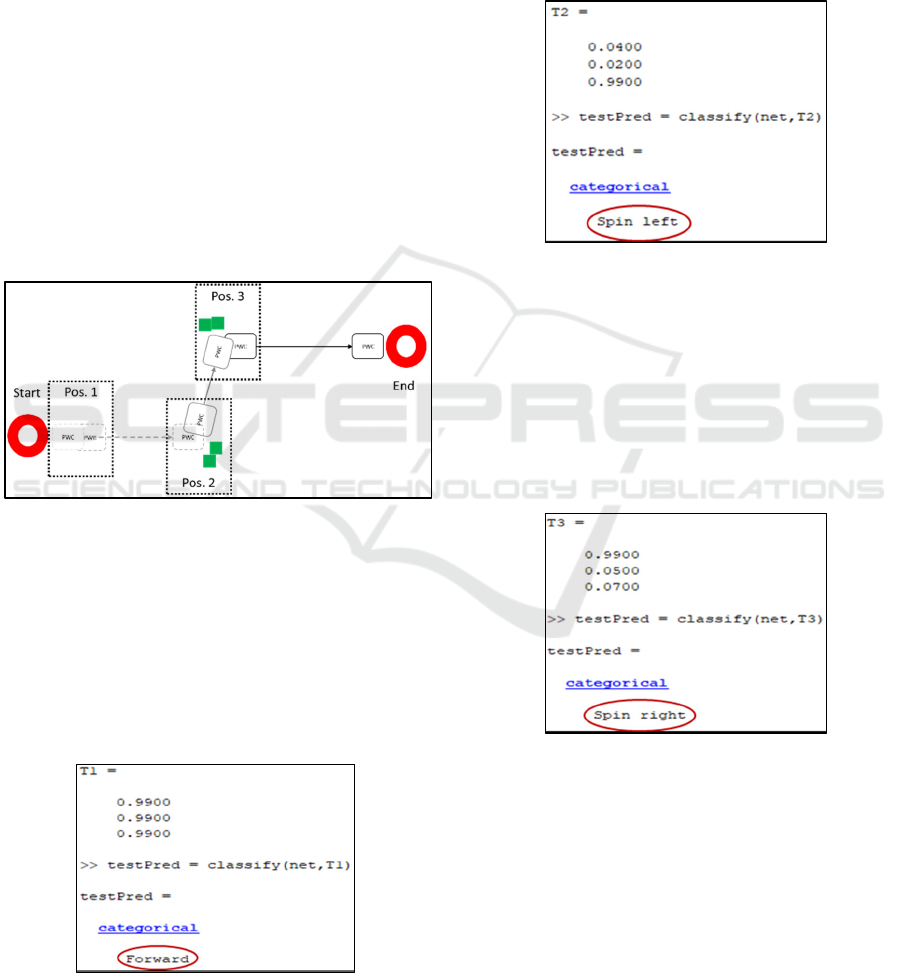

The trained and tested system was used to deliver a safe driving route for smart wheelchairs using ultrasonic sensor array readings. Three scenarios were examined as the wheelchair drove through an environment with obstacles, as shown in figure 4:

Scenario 1: Nothing detected (Pos. 1 in figure 4).

Scenario 2: Obstacles detected in front and to the right (Pos. 2 in figure 4).

Scenario 3: Obstacles detected in front and to the left (Pos. 3 in figure 4).

Six outcomes were considered as possible directions for a smart wheelchair: turn left, turn right, spin left, spin right, forward and stop.

Figure 4: Smart wheelchair moving in an environment containing obstacles.

4.1 Scenario 1: No Obstacles Detected

At the start of the driving session, no obstacles were detected by the sensors, as shown at Pos. 1 in figure 4. The values were $D_{\mathrm{right}} = 0.99$, $D_{\mathrm{centre}} = 0.99$ and $D_{\mathrm{left}} = 0.99$.

The collision avoidance system output was “Forward”, shown by a red oval in figure 5.

Figure 5: Output of the collision avoidance system for Scenario 1.

4.2 Scenario 2: Obstacles Detected to the Right and in Front

As the wheelchair drove forward, obstacles were detected in front and to the right, as shown at Pos. 2 in figure 4. The values were $D_{\mathrm{right}} = 0.04$, $D_{\mathrm{centre}} = 0.02$ and $D_{\mathrm{left}} = 0.99$.

The collision avoidance system output was “spin left”, shown by a red oval in figure 6.

Figure 6: Output of the collision avoidance system for Scenario 2.

4.3 Scenario 3: Obstacles Detected in Front and to the Left

As the wheelchair moved, obstacles were detected in front and to the left, as shown at Pos. 3 in figure 4. The values were $D_{\mathrm{right}} = 0.99$, $D_{\mathrm{centre}} = 0.05$ and $D_{\mathrm{left}} = 0.07$.

The collision avoidance system output was “spin right”, shown by a red oval in figure 7.

Figure 7: Output of the collision avoidance system for Scenario 3.

5 DISCUSSION AND RESULTS

A new collision avoidance system for smart wheelchairs was created using a Deep Learning approach. Readings from a sensor array were used as inputs to the Deep Learning approach. A rule-based method similar to the approach used by Haddad and Sanders (2020) was used to create the sets used to train and test a new ANN. A 6-layer ANN was created, trained and tested using MATLAB.

The new Deep Learning architecture achieved 99.17% and 97.53% training and testing accuracies respectively when tested against the same training and testing sets used by Haddad and Sanders (2020). The new architecture achieved higher accuracy than the architecture used in Haddad and Sanders (2020). Moreover, the new architecture required 42.75% less time to train the new ANN using the same training set, training platform and CPU used in Haddad and Sanders (2020).
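The 42.75% figure can be related to the training time reported in Section 3. The sketch below assumes that the reduction is measured against the earlier architecture's training time and that the 1 minute 15 seconds reported in Section 3 is the time being compared; the paper does not state the earlier time directly, so the result is an implied value rather than a reported one.

```python
NEW_TIME_S = 75.0        # 1 minute 15 seconds, from Section 3
REDUCTION = 0.4275       # 42.75% less training time


def implied_baseline(new_time_s: float, reduction: float) -> float:
    """Baseline time t0 such that new_time = t0 * (1 - reduction)."""
    return new_time_s / (1.0 - reduction)


baseline_s = implied_baseline(NEW_TIME_S, REDUCTION)
print(f"implied earlier training time: about {baseline_s:.0f} s")
```

Under those assumptions the earlier architecture would have needed roughly 131 seconds for the same training run.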

The new collision avoidance system was tested in three scenarios. Readings from the sensor array were used as inputs to the Deep Learning architecture. The collision avoidance system successfully drove a smart wheelchair away from obstacles and provided a safe route.

6 CONCLUSIONS AND FUTURE WORK

The new collision avoidance system provided successful results when tested and steered smart wheelchairs away from obstructions. The ultrasonic sensor array measured the distance to the closest object to the left, centre, and right of the wheelchair. Sensor readings were used as inputs for the ANN.

The Deep Learning approach used in this paper provided more accurate results during training and testing than the approach presented in Haddad and Sanders (2020). Moreover, the new system required 42.75% less time to train the ANN against the same training set and using the same platform and CPU used in Haddad and Sanders (2020).

The new system could continuously learn to drive smart wheelchairs in new environments. By using computationally inexpensive and simple Deep Learning architectures, it could introduce some independence and reduce the need for helpers.

The user could override the new system if required by holding a joystick or any other input device still in position. User input was combined with the system output so that the system could be overridden by the user. If no obstacles were detected, then the smart wheelchair would drive as instructed by the user.
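The override behaviour described above can be sketched as a small arbitration function. The names, the hold-time threshold `HOLD_SECONDS`, the obstacle threshold `NEAR` and the choice to stop when there is no user input are all hypothetical; the paper does not give these details, so this is one plausible reading rather than the implemented logic.

```python
from typing import Optional

HOLD_SECONDS = 1.0   # hypothetical: how long the input must be held to override
NEAR = 0.10          # hypothetical: readings below this count as an obstacle


def choose_command(user_cmd: Optional[str], held_for_s: float,
                   readings: tuple, system_cmd: str) -> str:
    """Pick the command sent to the wheelchair motors.

    user_cmd    direction requested by the user, or None if no input
    held_for_s  time the input device has been held still in position
    readings    (d_right, d_centre, d_left) from the sensor array
    system_cmd  direction proposed by the collision avoidance system
    """
    if user_cmd is None:
        return "stop"                       # no user input: do not drive (assumption)
    if held_for_s >= HOLD_SECONDS:
        return user_cmd                     # user holds the input: override the system
    if all(d >= NEAR for d in readings):
        return user_cmd                     # no obstacles detected: drive as instructed
    return system_cmd                       # obstacle nearby: follow the avoidance system
```

Keeping the arbitration in one place makes it straightforward to review how user intent and sensor-based avoidance are prioritised before clinical trials.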

Testing showed that the system performed well. The new system will be tested to meet safety standards before being trialled at Chailey Heritage Foundation.

The system presented in this paper used simple and dynamic yet effective AI algorithms for smart wheelchair navigation and can be expected to improve self-reliance and self-confidence, enhance mobility, increase autonomy, and provide a safe route for smart wheelchair users.

Future work will investigate general shifts in impairment from purely physical to more complex mixes of cognitive and physical impairment. That will be addressed by considering levels of functionality rather than disability. New transducers and controllers that use dynamic inputs rather than static or fixed inputs will be investigated. Different AI techniques will be investigated and combined with the new types of controllers and transducers to interpret what users want to do. Smart inputs that detect sounds and dynamic movement of body parts using new contactless transducers and/or brain activity using EEG caps will be considered. The use of mixes of EEG, pre-processing, Fourier transforms, and wavelet transforms will be investigated to determine user intentions.

REFERENCES

Haddad, M., Sanders, D., 2019. Selecting a best compromise direction for a powered wheelchair using PROMETHEE, IEEE Trans. Neur. Sys. Rehab. 27 2 pp 228-235.

Haddad, M., Sanders, D., 2020. Deep Learning architecture to assist with steering a powered wheelchair, IEEE Trans. Neur. Sys. Rehab. 28 12 pp 2987-2994.

Haddad, M., Sanders, D., Gegov, A., Hassan, M., Huang, Y., Al-Mosawi, M., 2019. Combining multiple criteria decision making with vector manipulation to decide on the direction for a powered wheelchair. In SAI Intelligent Systems Conference. Springer.

Haddad, M., Sanders, D., Ikwan, F., Thabet, M., Langner, M., Gegov, A., 2020c. Intelligent HMI and control for steering a powered wheelchair using a Raspberry Pi microcomputer. In 2020 IEEE 10th International Conference on Intelligent Systems-IS. IEEE.

Haddad, M., Sanders, D., Langner, M., Bausch, N., Thabet, M., Gegov, A., Tewkesbury, G., Ikwan, F., 2020d. Intelligent control of the steering for a powered wheelchair using a microcomputer. In SAI Intelligent Systems Conference. Springer.

Haddad, M., Sanders, D., Langner, M., Ikwan, F., Tewkesbury, G., Gegov, A., 2020a. Steering direction for a powered-wheelchair using the Analytical Hierarchy Process. In 2020 IEEE 10th International Conference on Intelligent Systems-IS. IEEE.


Haddad, M., Sanders, D., Langner, M., Omoarebun, P., Thabet, M., Gegov, A., 2020e. Initial results from using an intelligent system to analyse powered wheelchair users’ data. In the 2020 IEEE 10th International Conference on Intelligent Systems-IS. IEEE.

Haddad, M., Sanders, D., Langner, M., Tewkesbury, G., 2021b. One Shot Learning Approach to Identify Drivers. In SAI Intelligent Systems Conference. Springer.

Haddad, M., Sanders, D., Langner, M., Thabet, M., Omoarebun, P., Gegov, A., Bausch, N., Giasin, K., 2020f. Intelligent system to analyze data about powered wheelchair drivers. In SAI Intelligent Systems Conference. Springer.

Haddad, M., Sanders, D., Tewkesbury, G., Langner, M., 2021a. Intelligent User Interface to Control a Powered Wheelchair using Infrared Sensors. In SAI Intelligent Systems Conference. Springer.

Haddad, M., Sanders, D., Thabet, M., Gegov, A., Ikwan, F., Omoarebun, P., Tewkesbury, G., Hassan, M., 2020b. Use of the Analytical Hierarchy Process to Determine the Steering Direction for a Powered Wheelchair. In SAI Intelligent Systems Conference. Springer.

Langner, M., 2012. Effort Reduction and Collision Avoidance for Powered Wheelchairs: SCAD Assistive Mobility System, Ph.D. dissertation, University of Portsmouth.

Larsson, J., Broxvall, M., Saffiotti, A., 2008. Laser-based corridor detection for reactive Navigation, Ind Rob: An int' jnl 35 1 pp 69-79.

Milanes, V., Naranjo, J., Gonzalez, C., 2008. Autonomous vehicle based in cooperative GPS and inertial systems, Robotica, 26 pp 627-633.

Nguyen, V., et al. 2013. Strategies for Human - Machine Interface in an Intelligent Wheelchair. In 35th Annual Int Conf of IEEE-EMBC. IEEE.

Sanders, D., Sanders, D., Gegov, A., Ndzi, D., 2017. Results from investigating powered-wheelchair users learning to drive with varying levels of sensor support. In Proc. of SAI Intelligent System (London) pp 241-245.

Sanders, D., 2009. Comparing speed to complete progressively more difficult mobile robot paths between human tele-operators and humans with sensor-systems to assist, Assem. Autom. 29 3 pp 230-248.

Sanders, D., 2010. Comparing ability to complete simple tele-operated rescue or maintenance mobile-robot tasks with and without a sensor system, Sensor Rev 30 1 pp 40-50.

Sanders, D., 2016. Using self-reliance factors to decide how to share control between human powered wheelchair drivers and ultrasonic sensors, IEEE Trans. Neur. Sys. Rehab. 25 8 pp 1221-1229.

Sanders, D., Bausch, N., 2015. Improving steering of a powered wheelchair using an expert system to interpret hand tremor. In the International Conference on Intelligent Robotics and Applications. University of Portsmouth.

Sanders, D., Gegov, A., 2018. Using artificial intelligence to share control of a powered-wheelchair between a wheelchair user and an intelligent sensor system, EPSRC Project, 2019.

Sanders, D., Gegov, A., Haddad, M., Ikwan, F., Wiltshire, D., Tan, Y. C., 2018. A rule-based expert system to decide on direction and speed of a powered wheelchair. In SAI Intelligent Systems Conference. Springer.

Sanders, D., Gegov, A., Tewkesbury, G., Khusainov, R., 2019. Sharing driving between a vehicle driver and a sensor system using trust-factors to set control gains. In Adv. Intell. Syst. Comput. Springer.

Sanders, D., Haddad, M., Langner, M., Omoarebun, P., Chiverton, J., Hassan, M., Zhou, S., Vatchova, B., 2020c. Introducing time-delays to analyze driver reaction times when using a powered wheelchair. In SAI Intelligent Systems Conference. Springer.

Sanders, D., Haddad, M., Tewkesbury, G., 2021. Intelligent control of a semi-autonomous Assistive Vehicle. In SAI Intelligent Systems Conference. Springer.

Sanders, D., Haddad, M., Tewkesbury, G., Bausch, N., Rogers, I., Huang, Y., 2020b. Analysis of reaction times and time-delays introduced into an intelligent HCI for a smart wheelchair. In the 2020 IEEE 10th International Conference on Intelligent Systems-IS. IEEE.

Sanders, D., Haddad, M., Tewkesbury, G., Gegov, A., Adda, M., 2021b. Are human drivers a liability or an asset?. In SAI Intelligent Systems Conference. Springer.

Sanders, D., Haddad, M., Tewkesbury, G., Thabet, G., Omoarebun, P., Barker, T., 2020. Simple expert system for intelligent control and HCI for a wheelchair fitted with ultrasonic sensors. In the 2020 IEEE 10th International Conference on Intelligent Systems-IS. IEEE.

Sanders, D., Langner, M., Gegov, A., Ndzi, D., Sanders, H., Tewkesbury, G., 2016. Tele-operator performance and their perception of system time lags when completing mobile robot tasks. In 9th Int Conf on Human Systems Interaction. University of Portsmouth.

Sanders, D., Langner, M., Tewkesbury, G., 2010. Improving wheelchair-driving using a sensor system to control wheelchair-veer and variable-switches as an alternative to digital switches or joysticks, Ind Rob: An int' jnl. 32 2 pp 157-167.

Sanders, D., Stott, I., 2009. A new prototype intelligent mobility system to assist powered-wheelchair users, Ind Rob: An int' jnl. 26 6 pp 466-475.

Sanders, D., Tewkesbury, G., Parchizadeh, H., Robertson, J., Omoarebun, P., Malik, M., 2019b. Learning to drive with and without intelligent computer systems and sensors to assist. In Adv. Intell. Syst. Comput. Springer.

Tewkesbury, G., Lifton, S., Haddad, M., Sanders, D., 2021. Facial recognition software for identification of powered wheelchair users. In SAI Intelligent Systems Conference. Springer.
