An Artificial Intelligence Application for a Network of LPI-FMCW Mini-radar to Recognize Killer-drones

Alberto Lupidi¹, Alessandro Cantelli-Forti¹, Edmond Jajaga²,* and Walter Matta³

¹ CNIT, National Laboratory of Radar and Surveillance Systems, Pisa, Italy
² Mother Teresa University, Skopje, North Macedonia
³ Link Campus University, Roma, Italy

Keywords: Artificial Intelligence, Miniradar, UAS, ATS, Data Assurance.

Abstract: The foundations of Internet information systems were initially inspired by military applications. Means of air attack are pervasive in all modern armed conflicts and terrorist actions. Building web-enabled, real-time, rapid and intelligent distributed decision-making systems is therefore of immense importance. We present the intermediate results of the NATO SPS project “Anti-Drones”, which aims to fuse data from low-probability-of-intercept mini-radars and a network of optical sensors that communicate through web interfaces. This paper focuses on the architecture of the system and on the low-cost mini-radar sensor, which exploits the micro-Doppler effect to detect, track and recognize threats. Recognizing the target with an artificial intelligence system is the foundation for assessing these threats reliably.

1 INTRODUCTION

Killer drones are a real threat. They have proven to be a surprisingly lethal weapon, for example in the Russian invasion of Ukraine so far. Unmanned Aerial Systems (UAS) carrying lightweight laser-guided bombs normally excel in low-tech conflicts. In the early stages of the conflict in Ukraine, however, they carried out unexpectedly successful attacks before the Russians were able to set up their air defenses on the battlefield. UAS derived from commercial products or built by hobbyists have long been used for terrorist attacks on civilians and institutions, and for other crimes such as smuggling weapons into prisons.

The NATO SPS Anti-Drones project¹ aims to make killer drones easier to counter and to minimize the risk to people and assets. To this end, it focuses on a new concept of Threat Evaluation (TE) subsystem for an anti-drone system able to detect, recognize and track killer drones. The project seeks to advance the state of the art through mini-radar technology and signal processing, together with web data processing and fusion. The goals are to improve the real-time intelligence of the TE subsystem and to dramatically reduce its environmental impact (e.g., ECM pollution) in urban environments.

The core of the system architecture is a network of low-probability-of-intercept (LPI) mini-radars with FMCW or noise-like waveforms. The radars are web-interfaced and fully digital, and they provide on-demand imaging integrated with optical cameras. They work in all weather conditions and are deployed on the ground at suitable positions in the area of the asset to be protected.

¹ https://antidrones-project.org/, last access 22.08.2022

Detecting, tracking and recognizing UAS with mini-radars through micro-Doppler features has become increasingly popular in recent years, as noted in Guo et al. (2019), Harman (2016) and Huizing et al. (2019).

The optical part is essential for correct classification and tracking of the threat, and therefore for minimizing false alarms. This paper, however, mostly considers the proposed system from the radar point of view.

The rest of the paper is organized as follows:
• Section 2 describes the conceptual design of the system.
• Section 3 describes the system requirements.
• Section 4 describes the sensor prototypes developed (radar and camera).
• Section 5 details the system implementation.
• Section 6 shows and discusses results from a preliminary measurement campaign.
• Section 7 describes the feature extraction process.
• Section 8 concludes the paper.

Lupidi, A., Cantelli-Forti, A., Jajaga, E. and Matta, W.
An Artificial Intelligence Application for a Network of LPI-FMCW Mini-radar to Recognize Killer-drones.
DOI: 10.5220/0011590300003318
In Proceedings of the 18th International Conference on Web Information Systems and Technologies (WEBIST 2022), pages 320-326
ISBN: 978-989-758-613-2; ISSN: 2184-3252
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 THE CONCEPTUAL MODEL

The solution proposed in the Anti-Drones project is based on the following subsystems:
• A network of polarimetric LPI mini-radars with FMCW or noise-like waveforms, low environmental impact and on-demand camera imaging. The radars are fully digital, easily reconfigurable, highly flexible and versatile, and able to work in all weather conditions. They are deployed and suitably positioned on the ground in the area of the asset to be protected.
• A data processing and fusion subsystem that generates situational awareness and enables fast detection, recognition and tracking of threats within the maximum time available to activate the neutralization action.

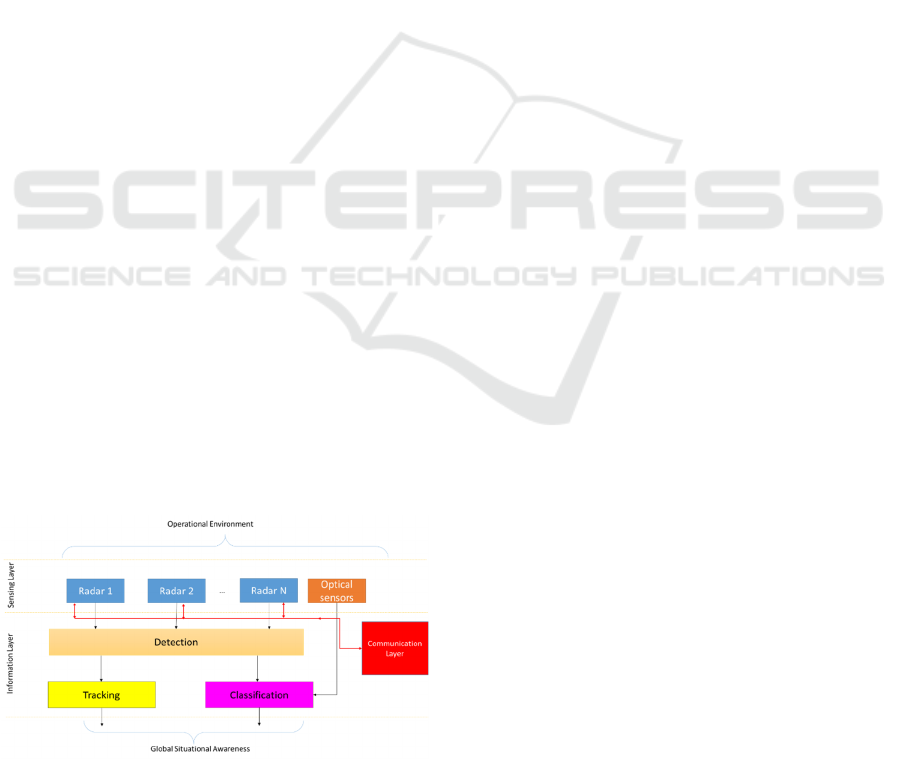

Figure 1 gives a high-level representation of the system.

The sensing layer gathers information from the operational environment and includes all the sensors involved in the proposed solution. This information is passed to the Information Layer, which processes and transforms it to obtain global situational awareness of the observed scene. More specifically, this layer hosts the detection process and the tracking and classification process. The algorithms used for these tasks are beyond the scope of this paper and are not described here.

The architecture also includes a communication layer, which sends the gathered data to the processing unit over Internet protocols. Each sensor module will use well-defined APIs and web services to support data fusion in the Information Layer.

Figure 1: Anti-Drones high-level architecture.
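Section 2 only says that sensor modules expose "well-defined APIs and web services". The sketch below shows one way a sensor module might serialize each detection as JSON for the Information Layer. It assumes a JSON payload sent over HTTP. The `DetectionReport` class and all of its field names are hypothetical and do not represent the project's actual API.

```python
import json
from dataclasses import dataclass, asdict, field
from typing import Optional


@dataclass
class DetectionReport:
    """Hypothetical payload a sensor module could send to the Information Layer."""
    sensor_id: str                       # illustrative identifier, e.g. "radar-01"
    sensor_type: str                     # "radar" or "camera"
    timestamp_utc: float                 # seconds since the Unix epoch
    target_class: Optional[str] = None   # filled in once recognition has run
    confidence: Optional[float] = None   # in [0, 1]
    attributes: dict = field(default_factory=dict)  # sensor-specific measurements

    def to_json(self) -> str:
        # Sorted keys give a stable wire format, convenient for logging and hashing
        return json.dumps(asdict(self), sort_keys=True)

    @staticmethod
    def from_json(text: str) -> "DetectionReport":
        return DetectionReport(**json.loads(text))


radar_msg = DetectionReport(
    sensor_id="radar-01", sensor_type="radar", timestamp_utc=1661155200.0,
    target_class="quadcopter", confidence=0.8,
    attributes={"range_m": 420.0, "azimuth_deg": 31.5,
                "elevation_deg": 4.2, "rcs_dbm2": -18.0},
)
wire = radar_msg.to_json()   # body of, e.g., an HTTP POST to a fusion web service
```

The radar and camera modules would share the envelope fields and differ only in `attributes`. The fusion layer could therefore ingest both through the same endpoint.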

3 AIM, REQUIREMENTS AND OUTLINE

Air surveillance is divided into four phases:
1) Detection
2) Recognition
3) Identification
4) Tracking.

Radar will remain the primary source of information for the foreseeable future, both for airspace surveillance in general and for UAS detection in particular. Nevertheless, optical, acoustic and laser sensors are quickly gaining importance as technology develops. Interconnecting these sensors, and making them interoperable and modular, should produce synergies that at least increase the detection probability and reduce false alarms, as noted in Krátký & Fuxa (2015).

In this project, we foresee cooperation among sensors in order to evaluate potential threats better.

Several requirements can be stated in terms of the required range:
• General requirements (clutter-free environment, hovering drones): detection of the drones at 3 km; recognition at 2.5 km.
• The selected scenario may limit the radar visibility range and therefore constrain the range requirements. In that case, the following values are considered a proper trade-off between the desired requirements and the concept demonstration (clutter-free environment, hovering drones): detection of the drones at 1.5 km; recognition at 1 km.
• For moving drones, the following values are considered a proper trade-off between the desired requirements and the concept demonstration: detection of the drones between 500 m and 1 km, depending on flight trajectory, drone type and speed; recognition at 300 m.


4 SENSOR PROTOTYPES

4.1 Radar

Figure 2 shows the radar sensor developed in this project in collaboration with the Italian company Echoes S.r.l. It is a multichannel linear frequency modulated continuous wave (FMCW) radar system for detecting and recognizing moving targets.

Figure 2: (a) radar structure; (b) case with antenna connectors.

The radar has one transmitting channel and three receiving channels. A fourth receiving channel can be installed if needed for further research. This option extends the use of the hardware and reduces costs for future follow-ons of the ANTI-DRONES project. Such a follow-on is currently under review by the NATO SPS offices for the three-year period 2023-2025.

The antennas are external to the main cabinet so that different acquisition geometries can be created. Each geometry in turn allows different radar processing techniques to be applied. For example, with correctly positioned antennas, three-dimensional interferometric inverse synthetic aperture radar (3D InISAR) algorithms or monopulse processing can be applied. Several radar fusion techniques can also be exploited to increase the detection and recognition capabilities of the system.

The sensor works in X band at 9.6 GHz, with a selectable bandwidth from 300 to 500 MHz. The transmit power is up to 33 dBm, and the noise figure is 6 dB.
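The waveform figures above translate into resolution cells through standard FMCW relations. The range resolution c/(2B) is about 0.5 m at 300 MHz and 0.3 m at 500 MHz. A 0.5 s coherent integration, the value used for Table 1, gives a Doppler resolution of 1/T = 2 Hz, i.e. a velocity resolution λ/(2T) of about 3 cm/s. The sketch below computes these quantities. The helper names are illustrative.

```python
C = 299_792_458.0  # speed of light [m/s]


def wavelength_m(fc_hz):
    return C / fc_hz


def range_resolution_m(bandwidth_hz):
    """FMCW range resolution: delta_R = c / (2 B)."""
    return C / (2.0 * bandwidth_hz)


def velocity_resolution_mps(t_int_s, fc_hz):
    """Doppler resolution 1 / T_int expressed as radial velocity: lambda / (2 T_int)."""
    return wavelength_m(fc_hz) / (2.0 * t_int_s)


def dbm_to_w(p_dbm):
    return 10.0 ** ((p_dbm - 30.0) / 10.0)


FC = 9.6e9  # carrier frequency from the text
for b_hz in (300e6, 500e6):
    print(f"B = {b_hz / 1e6:.0f} MHz -> range resolution {range_resolution_m(b_hz):.2f} m")
print(f"lambda = {wavelength_m(FC) * 100:.2f} cm, Pt = {dbm_to_w(33):.2f} W, "
      f"velocity resolution (0.5 s) = {velocity_resolution_mps(0.5, FC) * 100:.1f} cm/s")
```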

Table 1 shows the maximum range for each RCS value. In our case, the target RCS is expected to be between -20 and -10 dBm².

Table 1: Maximum range vs. RCS with 20 dBi antenna gain and 0.5 s integration time.

| RCS [dBm²] | Max range [m] |
|---:|---:|
| -30 | 890.89 |
| -20 | 1591.59 |
| -10 | 2822.82 |
| -5 | 3763.76 |
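The values in Table 1 follow the fourth-root dependence of the radar equation on RCS to within about half a percent. Each +10 dB of RCS multiplies the maximum range by roughly 1.78. The sketch below does two things:
• It writes out the monostatic radar equation with coherent integration.
• It checks the table against σ^(1/4) scaling from the -30 dBm² row.

The lumped `snr_and_losses_db` term (required SNR plus system losses) is not given in the paper. It is a hypothetical input, so `max_range_m` is not claimed to reproduce the table's absolute values.

```python
import math

C = 299_792_458.0    # speed of light [m/s]
K_B = 1.380649e-23   # Boltzmann constant [J/K]
T0 = 290.0           # reference noise temperature [K]


def db_to_lin(x_db):
    return 10.0 ** (x_db / 10.0)


def max_range_m(pt_dbm, gain_dbi, fc_hz, rcs_dbm2, t_int_s, nf_db, snr_and_losses_db):
    """Monostatic radar equation with coherent integration over t_int_s.

    snr_and_losses_db lumps the required detection SNR and all losses; it is a
    hypothetical user-supplied parameter, not a value from the paper.
    """
    pt_w = db_to_lin(pt_dbm - 30.0)
    lam = C / fc_hz
    num = pt_w * db_to_lin(gain_dbi) ** 2 * lam ** 2 * db_to_lin(rcs_dbm2) * t_int_s
    den = (4 * math.pi) ** 3 * K_B * T0 * db_to_lin(nf_db) * db_to_lin(snr_and_losses_db)
    return (num / den) ** 0.25


def scale_max_range(r_ref_m, rcs_ref_dbm2, rcs_dbm2):
    """With all other terms fixed, R_max grows as sigma ** (1/4)."""
    return r_ref_m * db_to_lin(rcs_dbm2 - rcs_ref_dbm2) ** 0.25


table1 = {-30: 890.89, -20: 1591.59, -10: 2822.82, -5: 3763.76}
for rcs, r_tab in table1.items():
    r_scaled = scale_max_range(table1[-30], -30, rcs)
    print(f"{rcs:>4} dBm^2: Table 1 {r_tab:8.2f} m, sigma^(1/4) scaling {r_scaled:8.2f} m")
```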

4.2 Camera

Optical detection, recognition and tracking rely on real-time camera images and image sequences. This process uses deep learning (DL) methods that provide the target class, the corresponding bounding box and a confidence score, as described in Jajaga et al. (2022). The camera detection and recognition components are implemented with the popular DL object detector YOLOv4, as explained in Bochkovskiy et al. (2020). The model was trained with a fine-grained methodology for refining a dataset built from several open drone datasets.

5 IMPLEMENTATION

This section describes the processing chain of the TE system in more detail. The flowchart in Figure 3 highlights the dual path needed for reliable detection and recognition. In general, the optical and radar systems will operate together to increase the probability of success in the recognition chain. An information fusion system will then propose a decision to the end user, who takes the final operative decision.

The processing flow is as follows:
1) Noise mitigation with a 2D Wiener filter
2) Moving Target Indicator (MTI) filtering to remove clutter and radar artefacts
3) Detection of the drone body with a constant false alarm rate (CFAR) detector
4) Kalman filter tracking (useful only if multiple tracks are present), performed on subsequent data batches
5) Recognition via feature extraction (number of blades, micro-Doppler spectrum shift)
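The paper does not say which CFAR variant is used in step 3. The sketch below therefore uses the common cell-averaging CFAR (CA-CFAR) on a power range-Doppler map, under these assumptions:
• Noise is exponentially distributed (square-law detection), which gives the threshold factor α = N(P_fa^(-1/N) − 1).
• The guard/training sizes and the false alarm probability are hypothetical defaults, not project settings.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ca_cfar_2d(power, guard=(1, 1), train=(4, 4), pfa=1e-4):
    """2D cell-averaging CFAR on a power range-Doppler map; returns a boolean mask."""
    gr, gd = guard
    tr, td = train
    hr, hd = gr + tr, gd + td                              # half-extent of the full window
    mask = np.ones((2 * hr + 1, 2 * hd + 1), dtype=bool)
    mask[tr:tr + 2 * gr + 1, td:td + 2 * gd + 1] = False   # drop guard cells and cell under test
    n_train = int(mask.sum())
    # Threshold factor for exponentially distributed (square-law) noise
    alpha = n_train * (pfa ** (-1.0 / n_train) - 1.0)
    # Circular padding at the map edges is a simplification
    padded = np.pad(power, ((hr, hr), (hd, hd)), mode="wrap")
    windows = sliding_window_view(padded, mask.shape)      # (n_range, n_doppler, h, w)
    noise = (windows * mask).sum(axis=(-2, -1)) / n_train  # mean of training cells only
    return power > alpha * noise


rng = np.random.default_rng(0)
rd_power = rng.exponential(1.0, size=(64, 64))  # synthetic noise-only power map, mean 1
rd_power[20, 30] += 1000.0                      # one strong synthetic "drone body" cell
detections = ca_cfar_2d(rd_power)
```

A Kalman tracker (step 4) would then take the centroids of the detected cells, batch by batch, as its measurements.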

The camera module will use the detection details from the radar module to start recognizing and tracking the target. For each radar-detected target, the camera will be pointed quickly and accurately in the direction of the moving target. The radar data supporting camera recognition and tracking include the target angle, its height and its speed. The two branches then work separately to achieve their objectives.
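Pointing the camera from radar data is a short geometry step. The sketch below assumes the following:
• A flat local east-north-up frame centred on the radar's ground position.
• Radar azimuth measured clockwise from north.
• The target height measured above ground.
• A camera whose position is known in that frame.

The `RadarTrack` type and both function names are hypothetical. Using the radar speed to lead the target is left out.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarTrack:
    slant_range_m: float   # radar-to-target range
    azimuth_deg: float     # clockwise from north (assumed convention)
    height_m: float        # target height above ground


def target_enu(track, radar_height_m=0.0):
    """Target position (east, north, up) with the radar's ground point as origin."""
    dz = track.height_m - radar_height_m
    ground = math.sqrt(max(track.slant_range_m ** 2 - dz ** 2, 0.0))  # horizontal distance
    az = math.radians(track.azimuth_deg)
    return ground * math.sin(az), ground * math.cos(az), track.height_m


def camera_pan_tilt_deg(track, camera_enu, radar_height_m=0.0):
    """Pan (clockwise from north) and tilt (above horizontal) for a camera at camera_enu."""
    e, n, u = target_enu(track, radar_height_m)
    de, dn, du = e - camera_enu[0], n - camera_enu[1], u - camera_enu[2]
    pan = math.degrees(math.atan2(de, dn)) % 360.0
    tilt = math.degrees(math.atan2(du, math.hypot(de, dn)))
    return pan, tilt


# Camera co-located with the radar on a 2 m mast; target at 500 m, bearing 30 deg
pan, tilt = camera_pan_tilt_deg(RadarTrack(500.0, 30.0, 2.0), (0.0, 0.0, 2.0), radar_height_m=2.0)
```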

However, the success of a single system cannot be taken for granted. System performance depends on several operating conditions, such as:
• day vs. night;
• rain vs. sun;
• target distance;
• target colour;
• intrinsic resolution of the sensor.

For these reasons, a final step is needed to fuse the decisions of the two sensors. This step also takes into account their confidence levels and any available knowledge of false alarms.

Typically, this step serves to confirm the decisions of both branches synergistically, or to compensate for the shortcomings of one branch when conditions are particularly adverse for that sensor. Our approach therefore performs data fusion in both a heterogeneous and a homogeneous manner.

The system must fuse heterogeneous radar and camera data, while also supporting the fusion of homogeneous data from sources of the same type. Specifically, our solution will fuse the following target attributes from the two sensor sources:
1) Radar data: direction of arrival, range, elevation angle and radar cross section.
2) Optical camera data: photo and video images.

Figure 3: Processing Flowchart.
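The paper does not specify the decision-fusion rule. As one possible sketch, weighted log-linear pooling combines per-sensor class posteriors, p(c) ∝ ∏ₛ pₛ(c)^wₛ. The reliability weight wₛ can be lowered when a sensor is known to struggle, e.g. the camera at night, which matches the "confidence level" idea in the text. The class set, the probabilities and the weights below are illustrative values only.

```python
import math

CLASSES = ("quadcopter", "hexacopter", "bird", "clutter")   # illustrative label set


def fuse_decisions(posteriors, weights, eps=1e-9):
    """Weighted log-linear pooling: p(c) is proportional to prod_s p_s(c) ** w_s.

    posteriors: {sensor_name: {class: probability}}
    weights:    {sensor_name: reliability weight >= 0}; weight 0 ignores a sensor.
    """
    log_score = {c: 0.0 for c in CLASSES}
    for sensor, probs in posteriors.items():
        w = weights.get(sensor, 0.0)
        for c in CLASSES:
            log_score[c] += w * math.log(probs.get(c, 0.0) + eps)
    top = max(log_score.values())                 # subtract the max for numerical stability
    unnorm = {c: math.exp(s - top) for c, s in log_score.items()}
    total = sum(unnorm.values())
    return {c: v / total for c, v in unnorm.items()}


radar = {"quadcopter": 0.3, "hexacopter": 0.6, "bird": 0.05, "clutter": 0.05}
camera = {"quadcopter": 0.7, "hexacopter": 0.2, "bird": 0.05, "clutter": 0.05}
day = fuse_decisions({"radar": radar, "camera": camera}, {"radar": 1.0, "camera": 1.0})
night = fuse_decisions({"radar": radar, "camera": camera}, {"radar": 1.0, "camera": 0.2})
```

With equal weights the camera's confident "quadcopter" wins. When the camera is down-weighted, the radar's "hexacopter" dominates.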

Data assurance techniques are also applied to maximize confidence in data quality. Sensor data are saved in RAW format. Bit rot (the decay of electromagnetic charge in a computer's storage) or bit flips in these files could therefore alter present or future analyses of the data collected during the trials.

For this reason, we chose a highly resilient long-term storage solution so that data integrity never slows down the research. The solution draws on the so-called next-generation "black boxes" (i.e., Event Data Recorders) and is based on cryptographic filesystems and Merkle trees, as described in Cantelli-Forti & Colajanni (2019). It provides periodic and on-the-fly self-diagnosis and self-healing. The maximum throughput currently achieved is 2 GB/s, which is adequate for the next stages of the SPS Anti-Drones research.
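The sketch below shows why a Merkle tree exposes bit rot or a bit flip in a RAW capture. The file is split into chunks, each chunk is hashed, and hashes are combined pairwise up to a single root. Any changed bit changes the root, and comparing leaf hashes localizes the damaged chunk. It uses SHA-256 from Python's `hashlib`. The chunk size, the one-byte leaf/node prefixes and the function names are illustrative choices, not the project's storage format.

```python
import hashlib


def leaf_hashes(chunks):
    # 0x00 prefix separates leaf hashes from internal-node hashes
    return [hashlib.sha256(b"\x00" + c).digest() for c in chunks]


def merkle_root(chunks):
    level = leaf_hashes(chunks)
    if not level:
        return hashlib.sha256(b"").digest()
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])               # duplicate the last node on odd levels
        level = [hashlib.sha256(b"\x01" + level[i] + level[i + 1]).digest()
                 for i in range(0, len(level), 2)]
    return level[0]


def split_chunks(data, size=4096):
    return [data[i:i + size] for i in range(0, len(data), size)]


raw = bytes(range(256)) * 100                     # stand-in for a RAW radar capture
root = merkle_root(split_chunks(raw))             # store alongside the data
corrupted = bytearray(raw)
corrupted[12345] ^= 0x01                          # simulate a single bit flip
bad_chunks = [i for i, (a, b) in enumerate(zip(leaf_hashes(split_chunks(raw)),
                                               leaf_hashes(split_chunks(bytes(corrupted)))))
              if a != b]
```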

6 EXPERIMENT DISCUSSION

The campaign had three objectives:
• primarily, to assess whether small and medium-sized drones can be recognized;
• second, to assess whether such UAS can be tracked in a noisy environment;
• finally, to assess whether different types of UAS can be discriminated.

Figure 4: Photos of the hexacopter (left) and quadcopter (right).

[Figure 3 flowchart blocks: RCS Model; Optical Model; Coherent Imaging; Radar Detection and Tracking; Camera Detection and Tracking; Recognition (two blocks); Decision Fusion; Assessment & Action; Dynamic/Payload Model; UAV plus payload image database.]


The observation campaign was performed in an area mostly free of buildings and trees. The main source of clutter was short grass, seen mainly through the radar sidelobes, with the radar pointed slightly toward the sky.

Two UAS, a hexacopter and a quadcopter, were used as test targets (Figure 4).

For a generic UAS, the first processing step is noise reduction, performed with a 7x7 Wiener filter. The drawback is a slight loss in resolution. If the target is very small, only a single point may be detected, which can be detrimental for recognition.
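The 7x7 Wiener step can be sketched with the classic local-statistics (adaptive) Wiener filter. In each window, the output moves from the pixel value toward the local mean according to how much the local variance exceeds the noise variance. When the noise variance is not given, it is estimated as the average local variance. This follows the same formula as SciPy's `scipy.signal.wiener`, reimplemented here in NumPy with reflective padding. The synthetic map and its parameters are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def wiener2d(img, size=7, noise=None):
    """Adaptive (local-statistics) Wiener filter over size x size windows."""
    pad = size // 2
    win = sliding_window_view(np.pad(img, pad, mode="reflect"), (size, size))
    local_mean = win.mean(axis=(-2, -1))
    local_var = win.var(axis=(-2, -1))
    if noise is None:
        noise = local_var.mean()                  # noise power estimated from the data
    # Where local variance does not exceed the noise, output the local mean (gain 0)
    gain = np.where(local_var > noise, 1.0 - noise / np.maximum(local_var, 1e-12), 0.0)
    return local_mean + gain * (img - local_mean)


rng = np.random.default_rng(0)
clean = np.zeros((64, 64))
clean[30:34, 30:34] = 10.0                        # small synthetic "target" blob
noisy = clean + rng.normal(0.0, 1.0, clean.shape)
smoothed = wiener2d(noisy, size=7)
```

Around the blob the window averages in background cells, which is the small resolution loss mentioned above.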

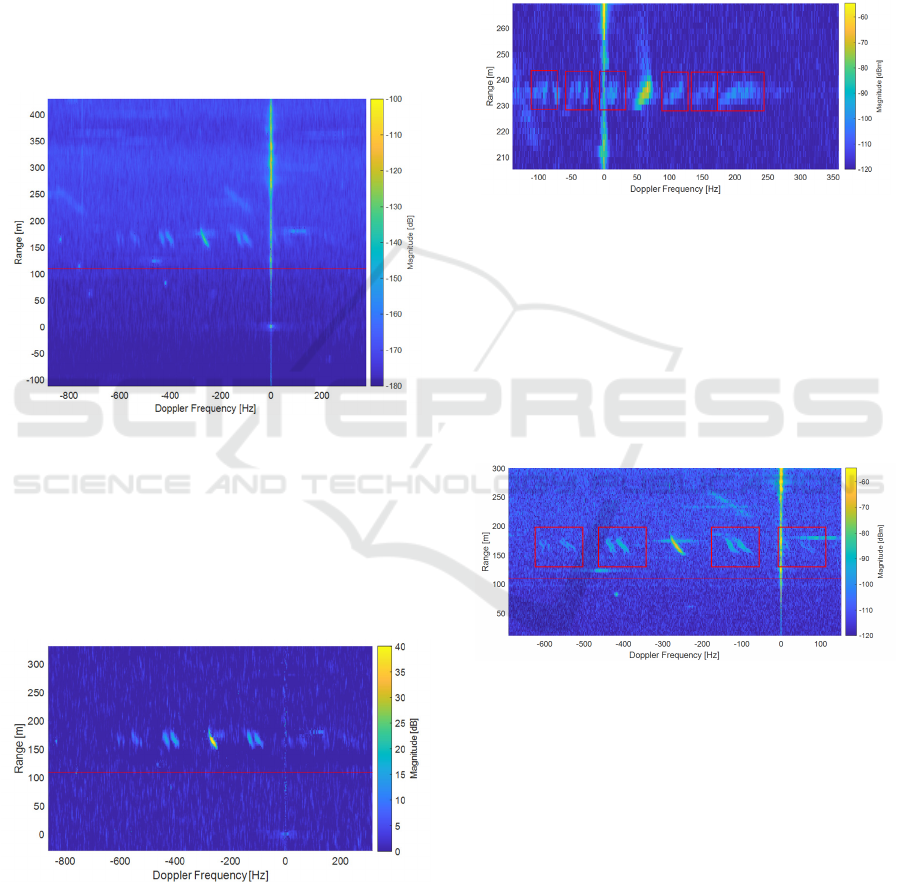

Figure 5: Range-Doppler map after smoothing.

Figure 5 shows the result of the smoothing operation; several artefacts and the clutter are still visible. Figure 6 shows the result of MTI filtering, which enhances the target and the blades (moving parts) while suppressing clutter and artefacts. Red lines mark the minimum and maximum limits for correct detection.

Figure 6: Detail of the range-Doppler map after MTI.
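The MTI step can be sketched with a binomial pulse canceller applied along slow time, i.e. across successive chirps of the same range bin. Static clutter returns the same complex value on every chirp and cancels exactly. A mover's phase rotates from chirp to chirp, so it survives with gain |1 − e^(−j2πf_D·T)|. The frame geometry (128 chirps, 64 range bins, 0.1 ms chirp repetition interval) and the 500 Hz mover are hypothetical values. The paper does not state which MTI filter order is used.

```python
import numpy as np


def mti_canceller(slow_time, order=1):
    """Binomial MTI canceller along slow time (axis 0 = chirp index).

    order=1: two-pulse canceller   y[n] = x[n] - x[n-1]
    order=2: three-pulse canceller y[n] = x[n] - 2 x[n-1] + x[n-2]
    """
    return np.diff(slow_time, n=order, axis=0)


n_chirps, n_range, pri_s = 128, 64, 1e-4          # hypothetical frame geometry
n = np.arange(n_chirps)
x = np.zeros((n_chirps, n_range), dtype=complex)
x[:, 10] = 5.0                                    # static clutter: identical on every chirp
x[:, 40] = np.exp(2j * np.pi * 500.0 * n * pri_s) # mover with 500 Hz Doppler
y = mti_canceller(x, order=1)
rd_map = np.abs(np.fft.fftshift(np.fft.fft(y, axis=0), axes=0))  # range-Doppler after MTI
```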

Figure 7 shows the results for the hexacopter and, in particular, the contribution of the blades to recognition. Six contributions from the six blades are visible, three on the left and three on the right (highlighted in red boxes). In a multirotor drone, for symmetry reasons, half of the rotors are usually in front of the body (signatures on the left) and half behind it (signatures on the right) with respect to the line of sight. This image lacks the detail needed for further identification, but more detail is visible in the following images. The Doppler spacing between blade signatures is about 50 Hz.

Figure 7: Detail of drone body and blades for the hexacopter.

Since the second target is a quadcopter, Figure 8 shows four blade signatures. Interestingly, each blade signature has two separate contributions. As a blade rotates, one part of it moves away from the radar while another moves toward it, inducing two different micro-Doppler modulations.

Figure 8: Detail of drone body and blades for the quadcopter.

Moreover, each blade group consists of two blades. Each blade signature group therefore shows four lines, divided into two pairs, reflecting the movement of each half of a blade. The Doppler spacing is about 150 Hz.
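The standard monostatic relation f_D = 2v_r/λ puts these Doppler spacings on a velocity scale. With λ ≈ 3.12 cm at 9.6 GHz, 50 Hz corresponds to about 0.78 m/s and 150 Hz to about 2.34 m/s of radial velocity. The same relation gives the largest micro-Doppler a blade tip can produce, ±2v_tip/λ with v_tip = 2πr·f_rot. Points on opposite sides of the hub (approaching and receding) give the + and − contributions around the body line. The rotor radius and speed in the example are hypothetical, not measurements of the test drones.

```python
import math

C = 299_792_458.0
WAVELENGTH_M = C / 9.6e9          # X-band carrier from Section 4.1


def doppler_to_radial_velocity(f_d_hz, lam=WAVELENGTH_M):
    """Monostatic Doppler relation f_D = 2 v_r / lambda, solved for v_r."""
    return f_d_hz * lam / 2.0


def blade_tip_doppler_hz(rotor_radius_m, rpm, lam=WAVELENGTH_M):
    """Peak blade-tip micro-Doppler (tip velocity aligned with the line of sight).

    Approaching and receding points of the rotor give +f and -f around the body line.
    """
    v_tip = 2.0 * math.pi * rotor_radius_m * rpm / 60.0
    return 2.0 * v_tip / lam


for spacing_hz in (50.0, 150.0):
    print(f"{spacing_hz:.0f} Hz -> {doppler_to_radial_velocity(spacing_hz):.2f} m/s")
# Hypothetical rotor: 0.12 m blade radius spinning at 6000 rpm
print(f"blade-tip micro-Doppler: +/-{blade_tip_doppler_hz(0.12, 6000):.0f} Hz")
```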

7 FEATURE EXTRACTION WITH AI

UAS blades travel at high speed, and their micro-Doppler signature is an effective means of recognition. It can discriminate UAS from natural objects with similar characteristics, such as birds, and can also differentiate between types of UAS.

The spectrogram is the most popular technique for micro-Doppler analysis, because it is simple and reveals how the spectral content varies over time. It is the squared magnitude of the short-time Fourier transform (STFT). The STFT is computed by segmenting the raw data into a series of overlapping time frames and performing an FFT on each frame.
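The STFT described above can be written directly in NumPy: window overlapping frames, take an FFT of each, and keep the squared magnitude. The FFT is two-sided and shifted so that approaching and receding Doppler appear on opposite sides of zero. The test signal is synthetic and its parameters are hypothetical: a body line at +200 Hz plus one rotating scatterer whose Doppler swings ±1500 Hz at 20 Hz. SciPy's `scipy.signal.stft` and `scipy.signal.spectrogram` offer equivalent functionality.

```python
import numpy as np


def spectrogram(x, fs, nperseg=128, noverlap=96):
    """Squared magnitude of a Hann-windowed STFT of complex baseband samples x."""
    hop = nperseg - noverlap
    window = np.hanning(nperseg)
    n_frames = 1 + (len(x) - nperseg) // hop
    frames = np.stack([x[k * hop:k * hop + nperseg] * window for k in range(n_frames)])
    stft = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)  # negative..positive Doppler
    freqs = np.fft.fftshift(np.fft.fftfreq(nperseg, d=1.0 / fs))
    times = (np.arange(n_frames) * hop + nperseg / 2) / fs      # frame centres [s]
    return freqs, times, np.abs(stft.T) ** 2                     # S[freq, time]


fs = 10_000.0                                     # hypothetical slow-time sampling rate [Hz]
t = np.arange(int(0.5 * fs)) / fs
f_body, f_md, f_rot = 200.0, 1500.0, 20.0
body = np.exp(2j * np.pi * f_body * t)
# Sinusoidal phase modulation -> instantaneous Doppler f_body + f_md * cos(2 pi f_rot t)
blade = 0.3 * np.exp(1j * (2 * np.pi * f_body * t
                           + (f_md / f_rot) * np.sin(2 * np.pi * f_rot * t)))
freqs, times, S = spectrogram(body + blade, fs)
```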

Clustering is performed on the spectrograms, and then the following steps are applied:
1) For each cluster, the within-cluster scatter matrix $\mathbf{S}_w$ and the between-class scatter matrix $\mathbf{S}_b$ are computed.
2) Fisher Discriminant Analysis is performed again (further subspace reliability analysis). Using the between-class matrix can greatly improve the discriminability of different classes.
3) The Mahalanobis distances of all training samples to the centre of each cluster of the two classes are computed.
4) These distances undergo min-max normalization and are used as the training features for the classifier.

A Support Vector Machine (SVM) is used as the classifier.
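A NumPy sketch of the feature pipeline just described follows. It computes S_w and S_b, projects onto the Fisher directions (leading eigenvectors of S_w⁻¹S_b), measures each sample's Mahalanobis distance to each class centre in that subspace, and applies min-max normalization. It assumes one cluster per class, a simplification of the clustering step. The two-class data are synthetic. SVM training is not shown; the normalized features could be passed to, e.g., scikit-learn's `sklearn.svm.SVC`.

```python
import numpy as np


def scatter_matrices(X, y):
    """Within-class (Sw) and between-class (Sb) scatter matrices."""
    mu = X.mean(axis=0)
    d = X.shape[1]
    Sw, Sb = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        Sw += (Xc - mc).T @ (Xc - mc)
        diff = (mc - mu)[:, None]
        Sb += len(Xc) * diff @ diff.T
    return Sw, Sb


def fisher_projection(X, y, n_components=1):
    Sw, Sb = scatter_matrices(X, y)
    evals, evecs = np.linalg.eig(np.linalg.pinv(Sw) @ Sb)
    order = np.argsort(evals.real)[::-1]              # most discriminative directions first
    return evecs[:, order[:n_components]].real


def mahalanobis_features(Z, y, Z_query):
    """Distance of each query sample to each class centre in the projected space."""
    feats = []
    for c in np.unique(y):
        Zc = Z[y == c]
        diff = Z_query - Zc.mean(axis=0)
        inv_cov = np.linalg.pinv(np.atleast_2d(np.cov(Zc, rowvar=False)))
        feats.append(np.sqrt(np.einsum("ij,jk,ik->i", diff, inv_cov, diff)))
    return np.stack(feats, axis=1)


def min_max(F, lo=None, hi=None):
    """Min-max normalization; reuse the training lo/hi on test data."""
    lo = F.min(axis=0) if lo is None else lo
    hi = F.max(axis=0) if hi is None else hi
    return (F - lo) / np.where(hi > lo, hi - lo, 1.0), lo, hi


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (100, 6)),
               rng.normal(0.0, 1.0, (100, 6)) + np.array([2.0, 0.0, 1.0, 0.0, 0.0, 0.0])])
y = np.repeat([0, 1], 100)
W = fisher_projection(X, y)                  # (6, 1) for two classes
Z = X @ W
F = mahalanobis_features(Z, y, Z)            # (200, 2): distance to each class centre
F_norm, f_lo, f_hi = min_max(F)              # SVM training features
```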

The features extracted from the data are:
• Base velocity (body radial velocity).
• Total bandwidth (BW) of the Doppler signal.
• Offset of the total Doppler.
• BW without micro-Doppler.
• Normalized standard deviation of the Doppler signal strength.
• Cadence (cycle) frequency.

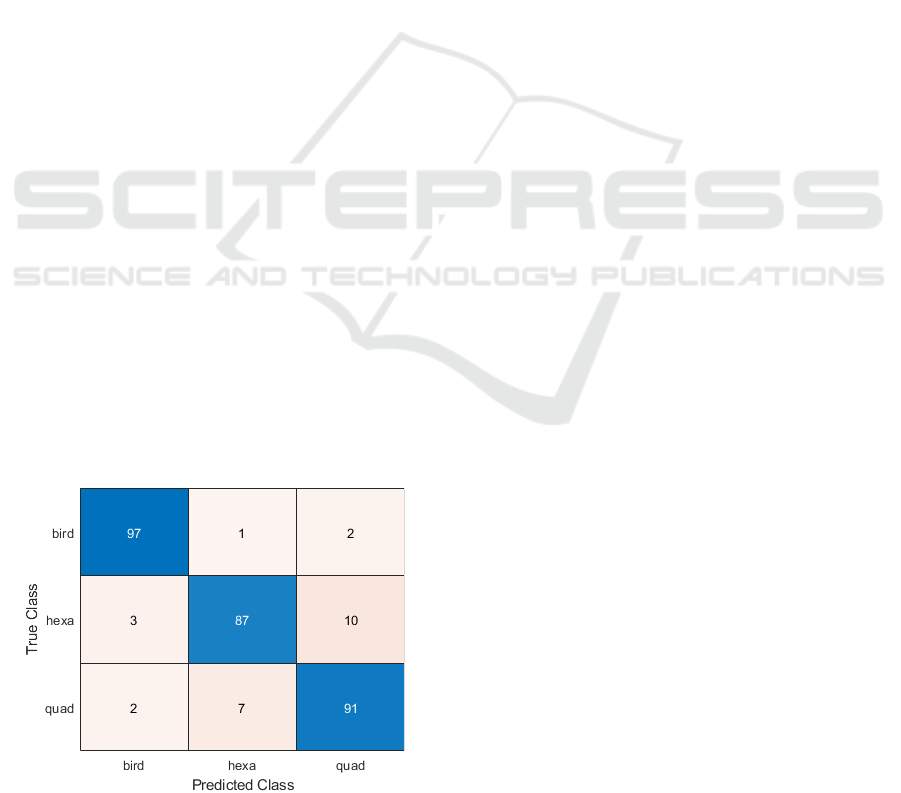

The SVM correctly classifies most of the targets. As expected, misclassifications are higher only between the quadcopter and the hexacopter, as shown in the confusion matrix in Figure 9.

Figure 9: Confusion matrix from SVM.

At the current stage of the project, a thorough comparative evaluation against the optical system is still in progress. The final aim of the project is to merge the two systems so that each compensates for the other's shortcomings. A more extensive database is also needed to train the classification system better, so that it is more effective in different non-cooperative scenarios.

8 CONCLUSIONS

This paper proposed a solution for monitoring scenarios with potential threats from armed drones. The solution combines a network of low-power, low-cost FMCW radars with optical sensors. The work focused mainly on the radar solution: it gave an overview of the system and then presented a preliminary measurement campaign showing that the solution is feasible.

The campaign showed two things. First, even low-RCS targets can be detected at a reasonable range. Second, the acquired data reveal distinctive features of different drones through micro-Doppler effects, including the number of rotors, the number of blades and the rotation speed.

Future work should show how an AI framework (i.e., algorithms, methodologies and techniques) could improve the performance of the TE subsystem. This framework would process sensor signals, such as radar signals and EO/IR images, and target trajectories to enable multi-target detection, classification and tracking.

ACKNOWLEDGEMENTS

This work was funded by the NATO SPS Programme, approved by Dr. A. Missiroli on 12 June 2019, ESC(2019)0178, Grant Number SPS.MYP G5633. NATO country Project Director Dr. A. Cantelli-Forti, co-director Dr. O. Petrovska, and Dr. I. Kurmashev.

We would like to sincerely thank:
• Dr. Claudio Palestini, the NATO officer who oversees the project;
• Prof. Walter Matta, member of the external advisory board, for tutoring the authors.

REFERENCES

Guo et al. (2019). ‘Micro-Doppler Based Mini-UAV Detection with Low-Cost Distributed Radar in Dense Urban Environment’, in 2019 16th European Radar Conference (EuRAD), Oct. 2019, pp. 189–192.


Harman (2016). ‘Characteristics of the Radar Signature of Multi-rotor UAVs’, in 2016 European Radar Conference (EuRAD), Oct. 2016, pp. 93–96.

Huizing et al. (2019). ‘Deep Learning for Classification of Mini-UAVs Using Micro-Doppler Spectrograms in Cognitive Radar’, IEEE Aerospace and Electronic Systems Magazine, vol. 34, no. 11, pp. 46–56, Nov. 2019, doi: 10.1109/MAES.2019.2933972.

Krátký & Fuxa (2015). ‘Mini UAVs Detection by Radar’, in International Conference on Military Technologies (ICMT) 2015, May 2015, pp. 1–5.

Jajaga et al. (2022). ‘An Image-based Classification Module for Data Fusion Anti-Drone System’, in 5th International Workshop on Small-Drone Surveillance, Detection and Counteraction Techniques, in conjunction with the 21st International Conference on Image Analysis and Processing (ICIAP), 23–27 May 2022, Lecce, Italy [unpublished, accepted for publication].

Bochkovskiy et al. (2020). ‘YOLOv4: Optimal Speed and Accuracy of Object Detection’, arXiv preprint arXiv:2004.10934, 2020.

Cantelli-Forti & Colajanni (2019). ‘Digital Forensics in Vessel Transportation Systems’, in International Symposium on Foundations and Practice of Security, Springer, Cham, pp. 354–362.
