Towards an Ontology-based Recommender System for Assessment in a Collaborative Elearning Environment

Asma Hadyaoui (a) and Lilia Cheniti-Belcadhi (b)

Sousse University, ISITC, PRINCE Research Laboratory, Hammam Sousse, Tunisia

Keywords: Recommender System, Personalization, ePortfolio, Collaborative Learning, Elearning Standard, Assessment, Ontology.

Abstract: Personalized recommendations can help learners overcome the information-overload problem by recommending learning resources that match their preferences and level of knowledge. In this context, we propose a Recommender System for personalized formative assessment in an online collaborative learning environment, built on an assessment ePortfolio. The proposed Recommender System recommends the next assessment activity and the most suitable peer to receive feedback from, and give feedback to. It does so by connecting the learner's ePortfolio with the ePortfolios of other learners on the same assessment platform. The recommendation process must match the learners' progress, levels, and preferences. These are stored and managed in the assessment ePortfolio models: the learner model, the pre-test model, the assessment activity model, and the peer-feedback model. To build each model, we propose a semantic web approach that uses ontologies and eLearning standards to support the reusability and interoperability of data. Specifically, we use the CMI5 specification for the assessment activity model and IEEE PAPI Learner to describe learners and their relationships. We use the IMS/QTI specifications to formalize the peer-feedback and pre-test models. Our ontology for the assessment ePortfolio is the fundamental layer of our personalized Recommender System.

1 INTRODUCTION

Learning assessment has always been an important phase of any learning process. Designing assessment methods is particularly challenging when they must show whether learning activities succeed and how their effectiveness can be improved. This research addresses peer assessment in an Online Collaborative Learning Environment (OCLE). In such an environment, students learn in groups by asking questions, supporting their viewpoints, explaining their reasoning, and presenting their knowledge to one another.

Collaboration among students has become increasingly important over time. Collaborative learning refers to instructional strategies that aim to increase learning through the joint efforts of students working on a particular learning activity. Through various online Information and Communication Technology (ICT) tools, students can share their experiences and knowledge with one another. In previous work, we focused on improving both individual and group performance on a given assessment activity, or set of activities, by adapting the assessment process to the learner's level (Asma Hadyaoui, Lilia Cheniti-Belcadhi, 2022). Collaborative learning is increasingly valued in higher education because it lets students practice a variety of team-based tasks they will encounter in the workplace. It therefore lends itself to real-world assessment and provides a direct opportunity for formative peer assessment (Tillema, 2010). Peer assessment is a practice in which students provide feedback to each other (Lilia Cheniti Belcadhi and Serge Garlatti, 2015). The goal of feedback is to help students improve their learning. Learners benefit both from receiving feedback from peers and from offering it to them, because each is a form of learning.

(a) https://orcid.org/0000-0002-7006-8735

(b) https://orcid.org/0000-0001-8142-6457

Hadyaoui, A. and Cheniti-Belcadhi, L.

Towards an Ontology-based Recommender System for Assessment in a Collaborative Elearning Environment.

DOI: 10.5220/0011543500003318

In Proceedings of the 18th International Conference on Web Information Systems and Technologies (WEBIST 2022), pages 294-301

ISBN: 978-989-758-613-2; ISSN: 2184-3252

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

By commenting on peers' work, learners deepen their grasp of the learning objectives and success criteria. They must apply these objectives and criteria to someone else's work, which is less emotionally charged than their own. As a result, the person giving feedback benefits just as much as the person receiving it (Wiliam, 2006). Peer assessment in a collaborative learning environment is framed by a few questions about each learner's assessment process: Who would be my peer now? Where to go next? What is my peer's next target? Recommendation answers these questions because it makes it possible to monitor learners' behavior while they perform assessment activities. Recommender Systems draw on different sources of information to provide users with predictions and recommendations of items. In our setting, the recommendation process must match the learners' progress, levels, and preferences. This raises several questions:

- How can we recommend learning and assessment resources adapted to the profile and context of each learner?
- How can we find the most appropriate peer for a learner to receive feedback from and give feedback to?
- How can we collect and manage traces of assessment activities so that they serve as the first layer of the recommendation process?
- How can we ensure the exchange and interoperability of assessment resources between different tools and learning environments?

Recommendations are based on learner characteristics such as knowledge, affective activity statements, pre-test achievement, and previous assessment results.

Our system must therefore collect and store the traces learners leave during assessment activities so that these traces can be reused. It must also manage these data in the learners' best interest. A learner's performance in assessment activities is useful as assessment data. It also serves as evidence of the learner's mastery of a given skill. All these data are stored in what we call an "ePortfolio". In this paper, we propose an assessment ePortfolio that structures the content of assessment traces so that they can serve as evidence of the learner's competencies (Amira Ghedir, Lilia Cheniti-Belcadhi, Bilal Said, Ghada El Khayat, 2018). We model this ePortfolio with semantic web technologies and ontologies. The main challenges for such a model are interoperability, information sharing, scalability, and dynamic integration of heterogeneous data. To address them, we build on eLearning standards, in particular the CMI5, IMS/QTI, and IEEE PAPI Learner specifications. The assessment ePortfolio is the fundamental layer of our Recommender System, which produces two recommendations. The first is the next assessment activity to perform. The second is the most suitable peer to receive feedback from and give feedback to.

The rest of the paper is structured as follows. Section 2 reviews the use and application of Recommender Systems. Sections 3 and 4 present our contribution. Section 3 gives a general architecture for the proposed Recommender System, and Section 4 gives an ontological model for the assessment ePortfolio. Section 5 discusses the approach. Section 6 concludes the paper and outlines future research directions.

2 LITERATURE REVIEW

eLearning users are frequently confronted with a vast number of products and learning materials. Personalization is therefore required to provide an exceptional user experience. Recommender tools that provide such personalization are essential in numerous Web domains, including eLearning sites (Dhavale, 2020).

2.1 Recommender System (RS)

RSs are systems designed to provide recommendations and suggestions to users based on many different factors. They are useful for recommending items related to those that users have already selected (Lilia Cheniti Belcadhi and Serge Garlatti, 2015). Several initiatives in the RS field aim to offer new and better recommendations in order to improve performance. Recommendations aim to help users make various decisions, such as what to buy, what music to listen to, or what news to read. RSs have proven to be an effective way for online users to manage information overload. They have become one of the most efficient and widely used tools in e-commerce, producing meaningful item or product recommendations that match each user's preferences. Recommendations of books on Amazon, movies on Netflix, or songs on Spotify are real-world examples of RSs (Sushma Malik, Mamta Bansal, 2019). RSs have traditionally been used in a business context, but over time their use has spread to other sectors such as health, education, and government. In (Herlocker, Jonathan L. and Konstan, Joseph A. and Riedl, John, 2000), an RS was presented as predicting a person's affinity for articles or information. It does so by connecting that person's interests with the registered interests of other people and by sharing skills among peers. In education, finding educational resources that meet specific learning needs has become increasingly difficult. According to (Maria-Iuliana Dascalu, Constanta-Nicoleta Bodea, Monica Nastasia Mihailescu, Elena Alice Tanase, Patricia Ordoñez de Pablos, 2016), RSs are gaining utility and popularity in various fields. For instance, (Maritza Bustos López, Giner Alor-Hernández, José Luis Sánchez-Cervantes, Mario Andrés Paredes-Valverde, María del Pilar Salas-Zárate, 2020) proposed EduRecomSys, an educational RS. It suggests educational resources to users based on the preferences and interests of other users and on the user's previously detected emotion.

3 RECOMMENDER SYSTEM DESCRIPTION

The RS comprises users, user interfaces, datasets, and recommendation algorithms. The data used to produce recommendations are drawn from the learners' ePortfolios. The recommendation algorithm is applied according to the user requirements deduced from each learner's ePortfolio.

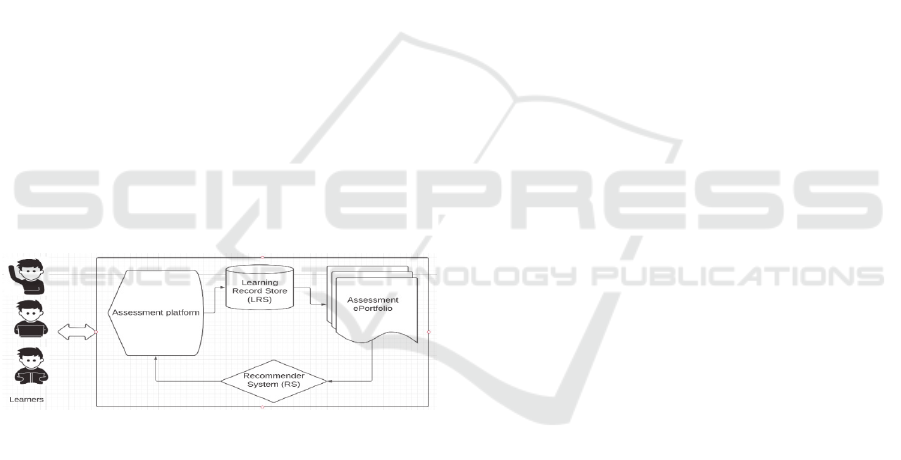

Figure 1: System design schema for the RS.

3.1 The Learning Record Store (LRS)

Adding an LRS to our system is essential to fully support the CMI5 specification (Rustici-Software, 2022). CMI5 content reports learner activity as xAPI statements, and an LRS is where those statements are stored. In addition, our assessment ePortfolio is primarily built from collected and stored traces. Learner profiles and assessment information are computed and stored from the traces tracked while learners perform assessment activities. An indicator is a significant element, identified from a set of data, that makes it possible to evaluate a situation, a process, a product, etc. Other variables are used to calibrate its value. Many works on indicators have been published, most of them adhering to these criteria. For example, (Olga C. Santos, Antonio R. Anaya, Elena Gaudioso, Jesus G. Boticario, 2003) provides a tool that calculates each learner's degree of involvement during a learning unit from their interactions.
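The sketch below illustrates the kind of traces an LRS holds. It represents a few xAPI-style statements, the data format that CMI5 builds on, as plain Python dictionaries, and derives two simple indicators from them: statements per learner (a crude involvement measure) and mean scaled score. This is a minimal sketch under stated assumptions. The in-memory list stands in for a real LRS, and the learner addresses, activity IRIs, and indicator definitions are hypothetical. It is not the authors' implementation or any LRS API.

```python
from collections import Counter

# ADL verb IRIs that cmi5 uses for the "passed" and "failed" verbs.
VERBS = {
    "passed": "http://adlnet.gov/expapi/verbs/passed",
    "failed": "http://adlnet.gov/expapi/verbs/failed",
}


def make_statement(mbox, verb, activity_id, scaled):
    """Build a minimal xAPI-style statement: actor, verb, object and result."""
    return {
        "actor": {"objectType": "Agent", "mbox": mbox},
        "verb": {"id": VERBS[verb], "display": {"en-US": verb}},
        "object": {"objectType": "Activity", "id": activity_id},
        "result": {"score": {"scaled": scaled}, "success": verb == "passed"},
    }


def involvement(statements):
    """Indicator 1 (hypothetical): number of recorded statements per learner."""
    return Counter(s["actor"]["mbox"] for s in statements)


def mean_score(statements, mbox):
    """Indicator 2 (hypothetical): mean scaled score of one learner, or None."""
    scores = [s["result"]["score"]["scaled"]
              for s in statements if s["actor"]["mbox"] == mbox]
    return sum(scores) / len(scores) if scores else None


# Hypothetical traces standing in for the content of an LRS.
lrs = [
    make_statement("mailto:alice@example.org", "passed", "https://example.org/act/quiz-1", 0.9),
    make_statement("mailto:alice@example.org", "failed", "https://example.org/act/quiz-2", 0.4),
    make_statement("mailto:bob@example.org", "passed", "https://example.org/act/quiz-1", 0.7),
]

print(involvement(lrs))
print(mean_score(lrs, "mailto:alice@example.org"))
```

With these data, alice has two statements and bob one, and alice's mean scaled score is 0.65. In a deployed system, the statements would be read from the LRS instead of being built in memory.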

3.2 The Assessment Platform

The collaborative online assessment platform includes a range of situations in which students interact. Several parts of the application support collaborative learning. Students can perform remote collaborative tasks, interact in real time, and discuss collaborative topics in teams. Learners must take a pre-test before they can access the different activities. The assessment activity is the platform's basic abstract concept. It is executed by an actor, which can be a single learner or a group of learners. Activities also have resources, i.e., elements created or manipulated by the activity. These resources are used to perform an assessment activity that is associated with learning outcomes or learning objectives.
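The platform concepts above are an assessment activity executed by an actor (a learner or a group), resources attached to the activity, and the pre-test requirement. The hypothetical sketch below renders them as Python data classes. The class and function names are ours. The rule that every member of a group must have taken the pre-test is an assumption made for illustration, not the platform's documented behavior.

```python
from dataclasses import dataclass, field
from typing import List, Set, Union


@dataclass
class Learner:
    learner_id: str


@dataclass
class Group:
    group_id: str
    members: List[Learner]


Actor = Union[Learner, Group]  # an activity is executed by a learner or a group


@dataclass
class Resource:
    """An element created or manipulated by an assessment activity."""
    uri: str
    kind: str  # illustrative values: "document", "discussion"


@dataclass
class AssessmentActivity:
    activity_id: str
    objectives: List[str]  # learning outcomes/objectives of the activity
    resources: List[Resource] = field(default_factory=list)


@dataclass
class Execution:
    """One execution of an assessment activity by an actor."""
    activity: AssessmentActivity
    actor: Actor


def participants(actor: Actor) -> List[Learner]:
    """Flatten an actor into the learners it stands for."""
    return list(actor.members) if isinstance(actor, Group) else [actor]


def start_activity(activity: AssessmentActivity, actor: Actor,
                   pretest_done: Set[str]) -> Execution:
    """Start an activity only if every participant has taken the pre-test."""
    missing = [m.learner_id for m in participants(actor)
               if m.learner_id not in pretest_done]
    if missing:
        raise PermissionError("pre-test required for: " + ", ".join(missing))
    return Execution(activity, actor)


alice, bob = Learner("alice"), Learner("bob")
team = Group("team-1", [alice, bob])
review = AssessmentActivity("peer-review-1", ["critique an argument"],
                            [Resource("https://example.org/res/draft-1", "document")])
run = start_activity(review, team, pretest_done={"alice", "bob"})
```

Treating `Actor` as a union lets the same activity type serve individual and group work, which matches the text's definition of an actor.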

3.3 The Recommender Engine

We use the RS to enhance the learner's experience of the assessment process. It serves two purposes. First, it recommends the most appropriate peer learner with whom to exchange feedback. Then, it recommends the next assessment activity. To this end, personalized recommendations are generated from various data sources. All these data are managed in the learners' ePortfolios, which are described in Section 4 as the learner model of our proposed intelligent system for assessment in a collaborative e-learning environment.

A determining factor in the design of an RS is the filtering model, which depends on the type of system. Recommendations are traditionally based on three approaches (Keyvan Vahidy Rodpysh, Seyed Javad Mirabedini, Touraj Banirostam, 2021):

- Content-based filtering recommends items similar to those the user preferred in the past.
- Collaborative filtering identifies users whose tastes match those of a given user and recommends to that user the items those similar users liked.
- Hybrid approaches combine the two.

Each category has its own characteristics and suitable application scenarios (Daniel Valcarce, Alfonso Landin, Javier Parapar, Alvaro Barreiro, 2019). In our case, we apply a collaborative filtering approach.

Collaborative filtering (CF) filters the items an RS can recommend to a target user according to that user's tastes and preferences (Fkih, 2021). CF is extremely sensitive to the similarity measure used to quantify the strength of the dependency between two users. Following the memory-based CF approach, our RS computes the similarity between learners from the data previously collected and managed in their assessment ePortfolios. Our primary goal is to measure the degree of similarity among learners and to discover homogeneous learner ePortfolios. Homogeneity is judged on assessment activity achievements, scores, results, and pre-test performance. Our recommendation engine is a pipelined invocation of two RSs, whose recommendation techniques are executed sequentially. The recommendations of the successor (the assessment activity RS) are constrained by the predecessor (the peer learner RS). In other words, one RS pre-processes the input of the next.
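The sketch below shows why CF is sensitive to the choice of similarity measure. It compares two common memory-based CF measures, cosine similarity and Pearson correlation, on hypothetical learner vectors of scaled scores for the same four assessment activities. The paper does not state which measure its RS uses, so both the vectors and the measures are illustrative assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length score vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pearson(u, v):
    """Pearson correlation, i.e. the cosine similarity of mean-centred vectors."""
    mean_u, mean_v = sum(u) / len(u), sum(v) / len(v)
    return cosine([a - mean_u for a in u], [b - mean_v for b in v])


# Hypothetical scaled scores of three learners on the same four activities.
target = [0.9, 0.8, 0.4, 0.3]
peer_a = [0.5, 0.4, 0.1, 0.0]  # lower level, same strengths and weaknesses
peer_b = [0.8, 0.9, 0.9, 0.8]  # similar level, nearly flat profile

for name, peer in (("peer_a", peer_a), ("peer_b", peer_b)):
    print(name, round(cosine(target, peer), 3), round(pearson(target, peer), 3))
```

Both measures rank peer_a first, but they disagree sharply about peer_b. Cosine similarity still gives it about 0.92, whereas Pearson correlation, which discounts each learner's average level, gives about 0. Which behavior is preferable depends on whether similar learners should share score levels or score patterns.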

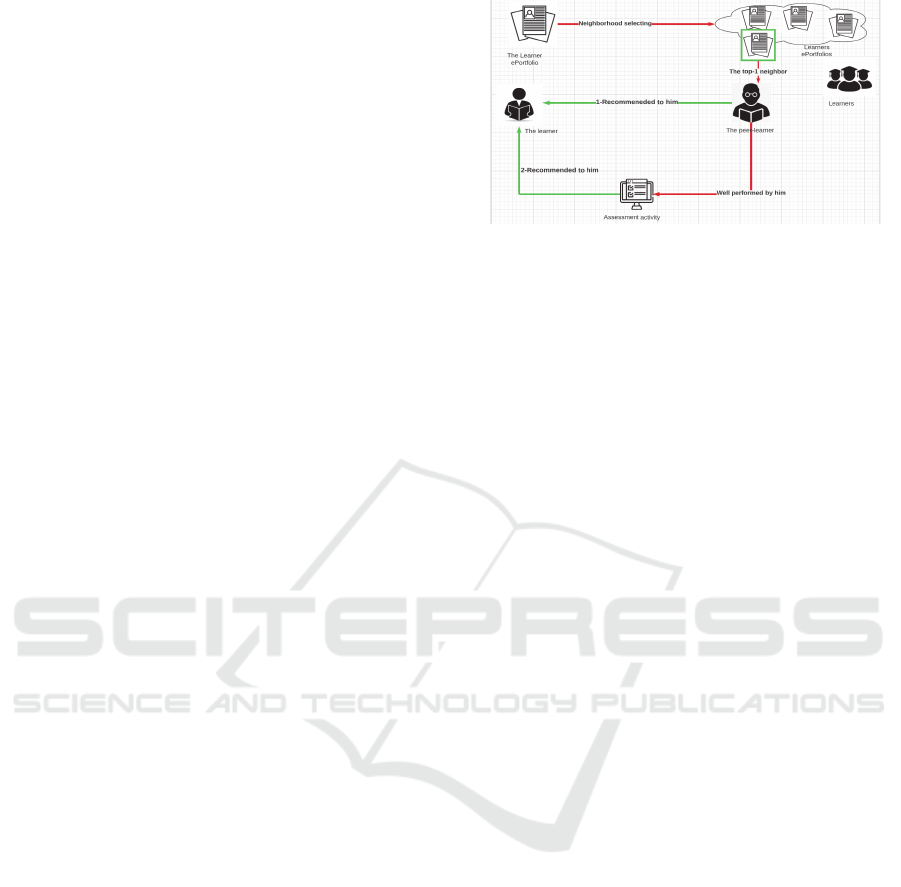

The Peer Learner RS. In this phase, we select the nearest learners for each assessment activity performed, using the top-k technique, which selects the k most similar users. Here, k = 1, because the RS recommends the single most appropriate peer for the active learner. The active learner receives feedback from and gives feedback to this peer on the current assessment activity, in a peer-assessment context.

The Assessment Activity RS. This method gives recommendations based on correlations between the assessment activity statements of the system's learners. The assessment activity RS takes the pre-selected learner who is most similar to the target learner. It recommends to the target learner only the assessment activities on which that peer performed well. The entire recommendation process is depicted in Figure 2.

Figure 2: Description of the recommendation process.
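The sketch below outlines the two-stage pipeline. It assumes each learner's ePortfolio has been reduced to a dictionary mapping activity identifiers to scaled scores. Stage one is top-k neighbor selection with k = 1 (the peer learner RS). Stage two recommends the activities on which the selected peer performed well and which the target has not yet done (the assessment activity RS). The similarity function (one minus the mean absolute score difference over shared activities), the 0.7 "performed well" threshold, and all names and data are hypothetical choices for illustration.

```python
from typing import Dict, List

Portfolio = Dict[str, float]  # hypothetical: activity id -> scaled score in [0, 1]


def similarity(a: Portfolio, b: Portfolio) -> float:
    """1 - mean absolute score difference over the activities both performed."""
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0  # no common evidence: treat as dissimilar
    return 1.0 - sum(abs(a[k] - b[k]) for k in shared) / len(shared)


def top_k_peers(target: str, portfolios: Dict[str, Portfolio], k: int = 1) -> List[str]:
    """Stage 1, peer learner RS: the k learners most similar to the target."""
    others = [name for name in portfolios if name != target]
    others.sort(key=lambda name: similarity(portfolios[target], portfolios[name]),
                reverse=True)
    return others[:k]


def recommend_activities(target: str, peer: str, portfolios: Dict[str, Portfolio],
                         threshold: float = 0.7) -> List[str]:
    """Stage 2, assessment activity RS: activities the peer did well on
    (score >= threshold) that the target has not performed yet, best first."""
    done = portfolios[target]
    good = [(score, act) for act, score in portfolios[peer].items()
            if score >= threshold and act not in done]
    return [act for _, act in sorted(good, reverse=True)]


portfolios = {
    "alice": {"quiz-1": 0.9, "quiz-2": 0.4},
    "bob": {"quiz-1": 0.85, "quiz-2": 0.5, "essay-1": 0.8, "case-1": 0.6},
    "carol": {"quiz-1": 0.3, "quiz-2": 0.9, "essay-2": 0.95},
}
peer = top_k_peers("alice", portfolios, k=1)[0]  # k = 1: a single peer
print(peer, recommend_activities("alice", peer, portfolios))
```

With these data, bob is selected as alice's peer (similarity 0.925, versus 0.45 for carol). Only essay-1 is recommended: case-1 falls below the threshold, and quiz-1 and quiz-2 are already in alice's ePortfolio.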

4 ASSESSMENT EPORTFOLIO

The proposed assessment ePortfolio is split into four models: the pre-test model, the assessment activities model, the peer-feedback model, and the learner model. They manage pre-tests, assessment activities, peer feedback, and learner profiles, respectively. To this end, we take the IMS/QTI, CMI5, and IEEE PAPI standards into account and incorporate their characteristics into our ontological model so that it conforms to international standards. Moreover, the assessment ePortfolio takes advantage of semantic web technologies. These offer better data organization, indexing, and management, and they ensure the model's reusability, interoperability, and extensibility. The objective of the semantic web is to equip the current Web with metadata, transforming it into a Web of Data that machines can easily consume. Semantic-web ontologies are the artifacts of this Web of Data; each consists of a data model and data that must comply with it. They rely on semantic-web technologies: RDF, RDF Schema, and OWL for modeling structure and data, and SPARQL for querying them (Rivero et al., 2013).
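The sketch below gives a flavor of the RDF data model. It stores a few ePortfolio facts as subject-predicate-object triples and answers a basic graph pattern, the core of a SPARQL `SELECT` query, by simple matching. It deliberately uses plain Python tuples rather than an RDF library or a SPARQL engine. The `ex:` vocabulary is hypothetical and is not the authors' ontology.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical ePortfolio facts; "ex:" is a made-up namespace prefix.
GRAPH: List[Triple] = [
    ("ex:alice", "rdf:type", "ex:Learner"),
    ("ex:bob", "rdf:type", "ex:Learner"),
    ("ex:quiz1", "rdf:type", "ex:AssessmentActivity"),
    ("ex:essay1", "rdf:type", "ex:AssessmentActivity"),
    ("ex:alice", "ex:performed", "ex:quiz1"),
    ("ex:bob", "ex:performed", "ex:quiz1"),
    ("ex:bob", "ex:performed", "ex:essay1"),
]


def match(pattern: Triple, triple: Triple,
          binding: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Extend `binding` so that `pattern` matches `triple`, or return None.
    Terms starting with '?' are variables, as in SPARQL."""
    result = dict(binding)
    for p, t in zip(pattern, triple):
        if p.startswith("?"):
            if result.setdefault(p, t) != t:  # variable already bound elsewhere
                return None
        elif p != t:
            return None
    return result


def query(patterns: List[Triple], graph: List[Triple]) -> List[Dict[str, str]]:
    """Evaluate a basic graph pattern: every triple pattern must match."""
    bindings: List[Dict[str, str]] = [{}]
    for pattern in patterns:
        extended = []
        for binding in bindings:
            for triple in graph:
                candidate = match(pattern, triple, binding)
                if candidate is not None:
                    extended.append(candidate)
        bindings = extended
    return bindings


# Roughly: SELECT ?l WHERE { ?l rdf:type ex:Learner . ?l ex:performed ex:essay1 }
rows = query([("?l", "rdf:type", "ex:Learner"), ("?l", "ex:performed", "ex:essay1")], GRAPH)
print([row["?l"] for row in rows])
```

A real deployment would load the OWL ontology into an RDF store and issue actual SPARQL. The join-by-shared-variable logic shown here is the part that carries over.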

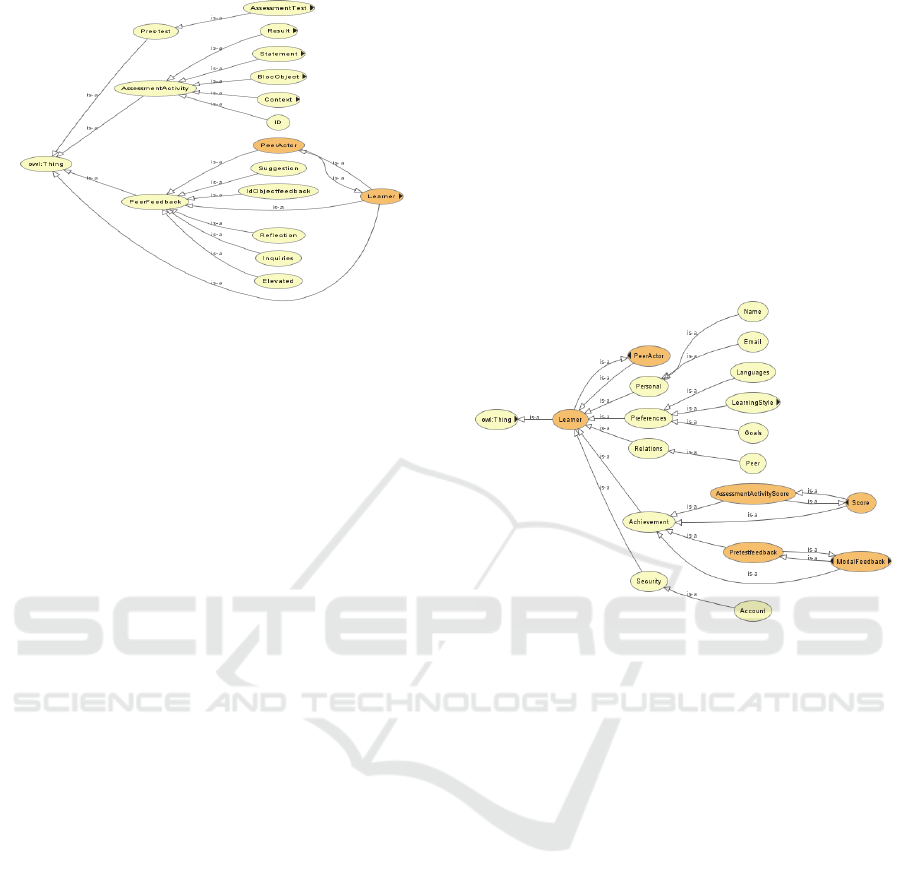

4.1 Assessment ePortfolio Model

Our proposed assessment ePortfolio brings together the four models described above. It therefore describes:

- the assessment activities in which the learner has participated, is participating, or plans to participate;
- the learner's competencies and skills, preferences, goals, and plans;
- pre-test results;
- assessment activity achievements;
- peer feedback.
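The ePortfolio described above can be pictured as one record that aggregates the four models. The sketch below is a hypothetical Python rendering with field names of our choosing. It also shows one way such a record could be flattened into the identifier-to-score mapping that memory-based CF needs. This link between the two layers is an assumption, not a description of the authors' code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PreTestResult:            # pre-test model
    pretest_id: str
    score: float                # scaled to [0, 1]


@dataclass
class ActivityRecord:           # assessment activities model
    activity_id: str
    status: str                 # "planned", "in progress" or "completed"
    score: Optional[float] = None


@dataclass
class FeedbackRecord:           # peer-feedback model
    activity_id: str
    from_learner: str
    text: str


@dataclass
class LearnerProfile:           # learner model
    learner_id: str
    competencies: List[str] = field(default_factory=list)
    preferences: Dict[str, str] = field(default_factory=dict)
    goals: List[str] = field(default_factory=list)


@dataclass
class EPortfolio:
    learner: LearnerProfile
    pretests: List[PreTestResult] = field(default_factory=list)
    activities: List[ActivityRecord] = field(default_factory=list)
    feedback: List[FeedbackRecord] = field(default_factory=list)

    def score_vector(self) -> Dict[str, float]:
        """Pre-test and completed-activity scores as id -> score (input for CF)."""
        vector = {p.pretest_id: p.score for p in self.pretests}
        vector.update({a.activity_id: a.score for a in self.activities
                       if a.status == "completed" and a.score is not None})
        return vector


ep = EPortfolio(
    LearnerProfile("alice", competencies=["argumentation"]),
    pretests=[PreTestResult("pretest-1", 0.6)],
    activities=[ActivityRecord("quiz-1", "completed", 0.9),
                ActivityRecord("essay-1", "planned")],
)
print(ep.score_vector())  # {'pretest-1': 0.6, 'quiz-1': 0.9}
```

Including pre-test scores in the vector means that a learner who has not yet completed any activity still has comparable data. This is one way a pre-test can soften the cold-start problem.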


Figure 3: The ontological model of ePortfolio for peer assessment in an online collaborative platform.

4.2 The Learner Model

Learners play a critical role in evaluating learning activities, given that they are the ones who use the activities to learn. Knowing how they engage with an activity and, importantly, their attitudes towards it reveals how effective the activity is and how it can be improved. The learner model represents the learner profile. This profile is deduced from the learner's knowledge, goals, experiences, interests, background, learning styles, learning activities, and assessment results. The learner model is responsible for discovering the individual learning behavior of the learner (Abdalla Alameen, Bhawna Dhupia, 2019). The learner profile can be conceived at the epistemic and behavioral levels (Kirsti Lonka, Elina Ketonen & Jan D. Vermunt, 2020). It aims to identify each learner's individual strengths, preferences, and motivations (Hongchao Peng, Shanshan Ma & Jonathan Michael Spector, 2019). At the epistemic level, the data collected in the learning environment are used to infer the learner's knowledge status. This status covers theoretical and declarative knowledge as well as procedural knowledge (Katrin Saks, Helen Ilves and Airi Noppel, 2021). Updating the learner profile means updating the values associated with the learner's activity progression, pre-test achievements, scores, and received peer feedback. These updates are based on the learner's assessment ePortfolio. They are necessary to keep track of the learner's evolving competencies. They also affect the accuracy of the formative assessment activities proposed to the learner, which in turn helps improve the learner's performance.

Standardization addresses not only learning objects but also learner information. Learner characteristics should therefore be well defined, both to facilitate their use across e-learning platforms and to allow more accurate personalization. In this context, we modeled the learner data formally, in a way that promotes reuse and interoperability, using the IEEE PAPI Learner (Public and Private Information for Learners) standard. We chose PAPI Learner because it considers performance information to be the most important information about a learner (Othmane Zine, Derouich Aziz, Talbi Abdennebi, 2019).
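IEEE PAPI Learner groups learner information into six categories: personal, relations, security, preference, performance, and portfolio. The sketch below shows a learner record organized along these categories. It also illustrates one possible profile update: an exponentially weighted average of scores per competency, refreshed as new assessment results arrive, with the trace identifier kept as portfolio evidence. The field layout and the update rule (including the weight `alpha`) are hypothetical. Neither is prescribed by PAPI or stated in the paper.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PapiLearner:
    """Hypothetical learner record grouped by the six PAPI Learner categories."""
    personal: Dict[str, str] = field(default_factory=dict)       # e.g. name
    relations: List[str] = field(default_factory=list)           # peers, groups
    security: Dict[str, str] = field(default_factory=dict)       # access rights
    preference: Dict[str, str] = field(default_factory=dict)     # e.g. language
    performance: Dict[str, float] = field(default_factory=dict)  # competency -> level
    portfolio: List[str] = field(default_factory=list)           # evidence ids

    def update_performance(self, competency: str, score: float,
                           evidence_id: str, alpha: float = 0.3) -> float:
        """Blend a new score into a competency level with an exponential moving
        average, record the trace id as evidence, and return the new level."""
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be scaled to [0, 1]")
        old = self.performance.get(competency)
        new = score if old is None else (1 - alpha) * old + alpha * score
        self.performance[competency] = new
        self.portfolio.append(evidence_id)
        return new


alice = PapiLearner(personal={"name": "Alice"}, relations=["team-1"])
alice.update_performance("argumentation", 0.5, "stmt-001")  # first evidence: 0.5
alice.update_performance("argumentation", 0.9, "stmt-002")  # 0.7*0.5 + 0.3*0.9, about 0.62
```

A moving average keeps the profile responsive to recent evidence without discarding earlier results. A smaller `alpha` would make the profile change more slowly.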

Figure 4: The ontological model of the learner model.

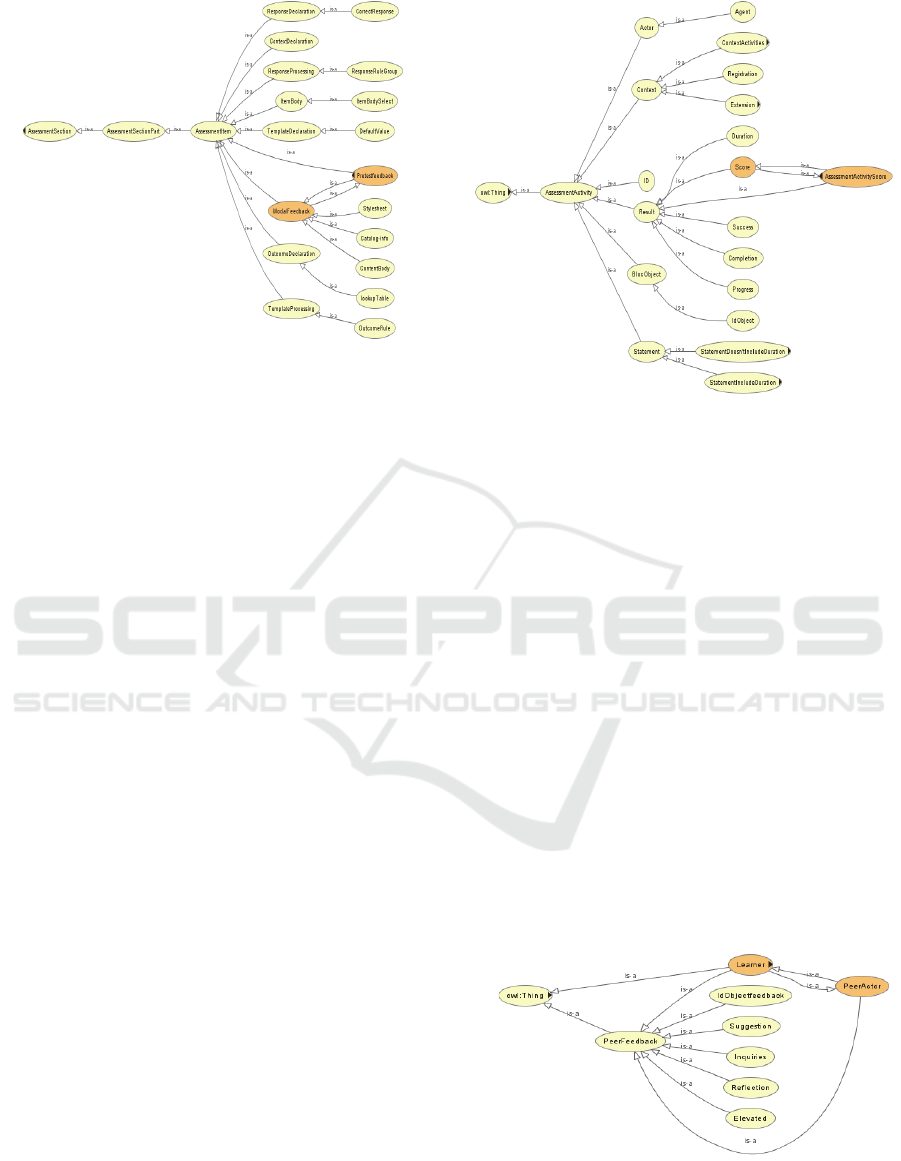

4.3 The Pre-test Model

Pre-tests are non-graded assessment tools used to determine pre-existing subject knowledge. Typically, they are administered before a course to establish a knowledge baseline. Here, they are administered before assessment activities and may allow learners to skip some of them. The pre-test is an important stage in the design of our RS because it allows us to handle the cold-start problem of RSs. A new learner's pre-test results provide initial data before any assessment activity has been performed. Question & Test Interoperability (QTI) provides interoperability specifications for the whole test creation process, from construction to evaluation. It is therefore a good starting point for modeling and designing the pre-test model. The QTI standard (Consortium, 2022) specifies how to represent tests and the corresponding result reports. Figure 5 illustrates the ontological model of the pre-test.


Figure 5: The ontological model of the Pre-test model.

An assessment item comprises the question, instructions on how the question should be presented, and the response processing to be applied to the candidate's response. Answers can have different structures depending on the question. The response processing determines how the response is assessed. Test results can be recorded and saved for later use by other systems (Consortium, 2022).
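The item structure described above consists of a question, its declared correct response, and response processing that turns the candidate's response into a score. The sketch below mimics this structure in a few lines. It loosely follows the idea of QTI's "match correct" response-processing template: full marks when the response equals the declared correct response, zero otherwise. It then uses the pre-test result to decide which activities a learner might skip. This plain-Python sketch is not a QTI parser, and the skip rule and the item-to-activity mapping are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class ChoiceItem:
    """A single-choice item: prompt, choices and the declared correct response."""
    identifier: str
    prompt: str
    choices: Dict[str, str]
    correct: str

    def score(self, response: str) -> float:
        """'Match correct' style response processing: 1.0 if correct, else 0.0."""
        return 1.0 if response == self.correct else 0.0


@dataclass
class PreTest:
    items: List[ChoiceItem]
    covers: Dict[str, List[str]]  # hypothetical: activity id -> ids of items probing it

    def result(self, responses: Dict[str, str]) -> Dict[str, float]:
        """Per-item scores; an unanswered item scores 0.0."""
        return {i.identifier: i.score(responses.get(i.identifier, ""))
                for i in self.items}

    def skippable(self, responses: Dict[str, str]) -> Set[str]:
        """Activities whose probing items were all answered correctly."""
        scores = self.result(responses)
        return {activity for activity, item_ids in self.covers.items()
                if item_ids and all(scores.get(i, 0.0) == 1.0 for i in item_ids)}


q1 = ChoiceItem("q1", "What is 2 + 3?", {"A": "5", "B": "6"}, correct="A")
q2 = ChoiceItem("q2", "What is 2 * 3?", {"A": "5", "B": "6"}, correct="B")
pretest = PreTest([q1, q2], covers={"addition-practice": ["q1"],
                                    "arithmetic-review": ["q1", "q2"]})
answers = {"q1": "A", "q2": "A"}
print(pretest.result(answers))     # {'q1': 1.0, 'q2': 0.0}
print(pretest.skippable(answers))  # {'addition-practice'}
```

The per-item scores are exactly the kind of result report that can be stored in the ePortfolio and reused by other systems.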

4.4 The Assessment Activities Model

The CMI5 specification enables the packaging and delivery of distributed learning resources. These include traditional courseware and content that cannot be accessed via a web browser (e.g., mobile apps and offline content). CMI5 was designed to replace SCORM for computer-based training. It reproduces SCORM features on top of the Experience API (xAPI), which allows it to collect data reliably (Brian Miller, Tammy Rutherford, Alicia Pack, George Vilches, Jim Ingram, 2021). We therefore used the CMI5 specification, through its statement class, to handle the assessment activities in which the learner has taken part, is taking part, or plans to take part. CMI5 is a recent eLearning standard. It specifies how learning content, packaged as learning objects and imported into a learning platform, interacts with that platform to track learner progress. It is therefore well suited to modeling formative assessment. The ontological model is shown in Figure 6.

Figure 6: The ontological model of the assessment activities model.
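Through the statement class, the model can distinguish activities the learner has taken part in, is taking part in, or plans to take part in. The sketch below shows a hypothetical way to derive these three states, plus completion and success, from the chronological list of cmi5 verbs recorded for one learner and one activity. The verb names are the ones cmi5 defines. The state labels and two rules are our assumptions: a session whose last verb is `terminated` or `abandoned` counts as "has taken part", and the latest passed/failed judgment wins.

```python
from typing import Dict, List, Optional

# The nine verbs defined by cmi5, referred to here by their display names.
CMI5_VERBS = {"launched", "initialized", "completed", "passed", "failed",
              "abandoned", "waived", "terminated", "satisfied"}


def summarize(verbs: List[str]) -> Dict[str, object]:
    """Summarise the chronological cmi5 verbs of one learner on one activity."""
    unknown = set(verbs) - CMI5_VERBS
    if unknown:
        raise ValueError(f"not a cmi5 defined verb: {sorted(unknown)}")
    if not verbs:
        participation = "plans to take part"   # registered, nothing recorded yet
    elif verbs[-1] in {"terminated", "abandoned"}:
        participation = "has taken part"       # the last session is closed
    else:
        participation = "is taking part"       # a session is still open
    success: Optional[bool] = None
    for verb in verbs:                         # the latest judgement wins
        if verb in {"passed", "failed"}:
            success = verb == "passed"
    return {"participation": participation,
            "completed": "completed" in verbs,
            "success": success}


print(summarize([]))
print(summarize(["launched", "initialized", "completed", "passed", "terminated"]))
```

Keeping completion and success as separate fields mirrors cmi5 itself, which reports them with different verbs.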

4.5 The Peer-feedback Model

Our assessment ePortfolio focuses on peer feedback about the assessment process because such feedback emphasizes the value of assessment and thereby improves student learning. To formalize learners' peer feedback, we require a response template that follows the RISE model (Wray, 2022). When writing their feedback, learners consider the following prompts:

a) Reflect: recall, ponder, and share what you have learned;
b) Inquire: ask questions to gather information and/or generate ideas;
c) Suggest: offer suggestions on how to improve the present iteration;
d) Elevate: raise the degree of intent in subsequent iterations.

While writing feedback, the peer learner is guided by questions related to each prompt. As with the pre-test, the feedback questions conform to the IMS/QTI specifications. The ontological model is shown in Figure 7.

Figure 7: The ontological model of the peer-feedback model.
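The sketch below shows how a peer-feedback record structured by the four RISE prompts could be checked before it is stored in the ePortfolio. The guiding questions, field names, and completeness rule (every prompt must receive a non-blank answer) are hypothetical. In the paper, the questions are expressed as IMS/QTI items, which this plain-Python sketch does not reproduce.

```python
from dataclasses import dataclass
from typing import Dict, List

# The four RISE prompts with hypothetical guiding questions.
RISE_PROMPTS: Dict[str, str] = {
    "reflect": "What did you learn from your peer's work?",
    "inquire": "Which question would clarify their reasoning?",
    "suggest": "Which concrete change would improve this iteration?",
    "elevate": "How could the next iteration aim higher?",
}


@dataclass
class RiseFeedback:
    giver: str
    receiver: str
    activity_id: str
    answers: Dict[str, str]  # prompt key -> free-text answer

    def missing_prompts(self) -> List[str]:
        """RISE prompts with no (or only blank) answer, in RISE order."""
        return [key for key in RISE_PROMPTS
                if not self.answers.get(key, "").strip()]

    def is_complete(self) -> bool:
        return not self.missing_prompts()


fb = RiseFeedback("alice", "bob", "essay-1", {
    "reflect": "The structure made the argument easy to follow.",
    "inquire": "Why did you choose this source?",
    "suggest": "   ",
    "elevate": "Address a counter-argument next time.",
})
print(fb.missing_prompts())  # ['suggest']
```

Rejecting incomplete feedback ensures that every stored record covers all four RISE dimensions. This keeps feedback records comparable across peers.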


5 DISCUSSION

The following questions were posed at the beginning of this paper: Who would be my peer now? Where to go next? To address them, we proposed guiding learners through the assessment process with personalized recommendations in a peer-assessment context. We proposed a pipelined invocation of two RSs with two sequential recommendation techniques. In this pipeline, the recommendation of the next assessment activity is constrained by the selection of the peer learner, so one RS pre-processes the input of the next. Several RSs have been used in the educational field, for example to recommend course material to Computer Science students. In general, an RS in an eLearning context tries to recommend actions to a learner intelligently, based on the actions of previous learners. Such systems play an important role in providing accurate and relevant information to the user (Pradnya Vaibhav Kulkarni, Sunil Rai, Rohini Kale, 2020). Most of these recommendation techniques concentrate on a single educational subject. The authors of (Soulef Benhamdi, Abdesselam Babouri & Raja Chiky, 2017) developed a personalization approach that provides students with the best learning materials. Their selection is based on the students' preferences, interests, background knowledge, and memory capacity. Barria-Pineda et al. (Jordan Barria-Pineda, Peter Brusilovsky, 2019) helped students reduce misunderstandings about programming problems and suggested the most appropriate content. The authors of (Safat Siddiqui, Mary Lou Maher, Nadia Najjar, Maryam Mohseni and Kazjon Grace, 2022) proposed a solution to help students select papers. Most of these recommendation techniques suffer from the cold-start problem in one form or another.

Because we consider the learner's ePortfolio as the first layer of the recommendation process, our proposed approach avoids this issue. The assessment ePortfolio is an effective method for providing learner-centered assessment as well as a vehicle for peer assessment. We collected and managed data on each learner with the assessment ePortfolio. We then matched that learner's ePortfolio to the ePortfolios of a community of learners. The assessment ePortfolio also allowed us to structure the content of assessment traces so that they can serve as evidence of the learner's competencies. Our work builds on e-learning standards to address interoperability, information sharing, scalability, and dynamic integration of heterogeneous information. The Web Ontology Language (OWL), an open standard for semantic knowledge representation, was adopted to implement the ontology, which was modeled and built with Protégé 5.2. We used the Semantic Web Rule Language (SWRL) to express the ontology's inference rules. To evaluate the ontology, we presented several use cases based on a real collaborative assessment scenario involving a group of learners. For this evaluation, we created instances of the proposed ontology.

6 CONCLUSION AND FUTURE WORK

While several RSs exist in learning systems, personalized recommendation has not yet been addressed in an online collaborative peer-assessment context. This paper aims to recommend the next assessment activity to perform and a peer to receive feedback from and give feedback to. Beyond the recommendation process itself, our aim is to personalize these recommendations. To this end, we proposed an assessment ePortfolio as the base layer of the RS. It is split into four models: a pre-test model, an assessment activities model, a peer-feedback model, and a learner model, which we formalized and described with ontologies. In our scenario, interoperability between assessment ePortfolios is a challenge. It is therefore desirable to use common standards to unify how information is described. We used the CMI5 specification to formalize the assessment activities process and IMS/QTI for the pre-test and peer-feedback models. IEEE PAPI Learner is used to describe learners and their relationships. Future work will integrate other types of assessment, such as group assessment, into our ePortfolio model. The goal is to better design the assessment process and adapt it further to learners' context.

REFERENCES

Abdalla Alameen, Bhawna Dhupia. (2019). Implementing Adaptive e-Learning Conceptual Model: A Survey and Comparison with Open Source LMS. International Journal of Emerging Technologies in Learning (iJET).

Amira Ghedir, Lilia Cheniti-Belcadhi, Bilal Said, Ghada El Khayat. (2018). Modèles Ontologiques Pour un Eportfolio D'évaluation des Compétences à Travers Les Jeux Sérieux [Ontological Models for an ePortfolio for Assessing Competencies through Serious Games]. International Conference on ICT in Our Lives. Alexandria, Egypt.

Asma Hadyaoui, Lilia Cheniti-Belcadhi. (2022). Towards an Adaptive Intelligent Assessment Framework for Collaborative Learning. International Conference on Computer Supported Education (CSEDU) (pp. 601-608). Online streaming: SCITEPRESS.


Brian Miller, Tammy Rutherford, Alicia Pack, George Vilches, Jim Ingram. (2021). cmi5 Best Practices Guide: From Conception to Conformance. Orlando, Florida: Defense Support Services Center.

Consortium, I. G. (2022, July 01). IMS Question & Test Interoperability (QTI) Specification. Retrieved from imsglobal: https://www.imsglobal.org/

Daniel Valcarce, Alfonso Landin, Javier Parapar, Alvaro Barreiro. (2019). Collaborative Filtering Embeddings for Memory-based Recommender Systems. Engineering Applications of Artificial Intelligence (pp. 347-356).

Fkih, F. (2021). Similarity measures for Collaborative Filtering-based Recommender Systems: Review and Experimental Comparison. Journal of King Saud University - Computer and Information Sciences.

Herlocker, Jonathan L. and Konstan, Joseph A. and Riedl, John. (2000). Explaining Collaborative Filtering Recommendations. ACM Conference on Computer Supported Cooperative Work (pp. 241-250). Philadelphia, Pennsylvania, USA: ACM.

Hongchao Peng, Shanshan Ma & Jonathan Michael Spector. (2019). Personalized adaptive learning: an emerging pedagogical approach enabled by a smart learning environment. Smart Learning Environments.

Jordan Barria-Pineda, Peter Brusilovsky. (2019). Making Educational Recommendations Transparent through a Fine-Grained Open Learner Model. 1st International Workshop on Intelligent User Interfaces for Algorithmic Transparency in Emerging Technologies. Los Angeles, USA.

Katrin Saks, Helen Ilves and Airi Noppel. (2021). The Impact of Procedural Knowledge on the Formation of Declarative Knowledge: How Accomplishing Activities Designed for Developing Learning Skills Impacts Teachers' Knowledge of Learning Skills. Education Sciences.

Keyvan Vahidy Rodpysh, Seyed Javad Mirabedini, Touraj Banirostam. (2021). Resolving cold start and sparse data challenges in recommender systems using multi-level singular value decomposition. Computers & Electrical Engineering, 94.

Kirsti Lonka, Elina Ketonen & Jan D. Vermunt. (2020). University students' epistemic profiles, conceptions of learning, and academic performance. Higher Education.

Lilia Cheniti Belcadhi and Serge Garlatti. (2015). Ontology-based support for peer assessment in inquiry-based learning. International Journal of Technology Enhanced Learning, 6(4), 297-320.

Maria-Iuliana Dascalu, Constanta-Nicoleta Bodea, Monica Nastasia Mihailescu, Elena Alice Tanase, Patricia Ordoñez de Pablos. (2016). Educational recommender systems and their application in lifelong learning. Behaviour & Information Technology, 290-297.

Maritza Bustos López, Giner Alor-Hernández, José Luis Sánchez-Cervantes, Mario Andrés Paredes-Valverde, María del Pilar Salas-Zárate. (2020). EduRecomSys: An Educational Resource Recommender System Based on Collaborative Filtering and Emotion Detection. Interacting with Computers, 32(4), 407–432.

Nixon, À. E. (2020). Building a memory-based collaborative filtering recommender. Towards Data Science.

Othmane Zine, Derouich Aziz, Talbi Abdennebi. (2019). IMS Compliant Ontological Learner Model for Adaptive E-Learning Environments. International Journal of Emerging Technologies in Learning (iJET).

Pradnya Vaibhav Kulkarni, Sunil Rai, Rohini Kale. (2020). Recommender System in eLearning: A Survey. International Conference on Computational Science and Applications (pp. 119–126). First Online: Springer.

Rustici-Software. (2022, March 30). learning-record-store. Retrieved from xapi.com: https://xapi.com/learning-record-store

Safat Siddiqui, Mary Lou Maher, Nadia Najjar, Maryam Mohseni and Kazjon Grace. (2022). Personalized Curiosity Engine (Pique): A Curiosity Inspiring Cognitive System for Student-Directed Learning. CSEDU. Online stream.

Soulef Benhamdi, Abdesselam Babouri & Raja Chiky. (2017). Personalized recommender system for an e-Learning environment. Education and Information Technologies, 22(4), 1455–1477.

Sushma Malik, Mamta Bansal. (2019). Recommendation System: Techniques and Issues. International Journal of Recent Technology and Engineering (IJRTE).

Tillema, H. (2010). Formative Assessment in Teacher Education and Teacher Professional Development. In International Encyclopedia of Education (Third Edition) (pp. 563-571). Elsevier.

Wiliam, D. (2006). Does assessment hinder learning? Paper presented at the ETS Invitational Seminar. London.

Wray, E. (2022, March 29). peer-to-peer. Retrieved from risemodel: https://www.risemodel.com/peer-to-peer
