Term-based Website Evaluation Applying Word Vectors and Readability Measures

Kiyoshi Nagata

Faculty of Business Administration, Daito Bunka University, Tokyo, Japan

Keywords:

Website Evaluation, Similarity Measure, Word Vector, Readability Measures.

Abstract:

The homepage is now an important means of transmitting information, not only for companies but for any type of organization. However, the page structure of a website is not always in an appropriate state. Research on websites has been actively conducted from both academic and practical perspectives, and is sometimes divided into three major categories: web content mining, web structure mining, and web usage mining. In this paper, we mainly focus on term-based properties and propose a system that evaluates the appropriateness of link relationships in a given site, taking content and link structure properties into consideration. We also consider the readability of the text in each webpage by applying several readability measures to evaluate their uniformity across all pages. We implement these systems in our previously developed multilingual support application, and show some results of applying it to several websites.

1 INTRODUCTION

In the roughly 30 years since commercial use of the Internet began in the early 1990s, information dissemination centered on websites has developed in many fields, using various techniques for Internet-based environments. As a result, a large amount of information is now accumulated on the Internet and used all over the world.

On the other hand, the webpages of an organization are sometimes expanded in an ad hoc way, with the result that many pages contain unnecessary or outdated information. Network administrators focus on the smooth functioning of networks within an organization and are often not responsible for overall integrity or consistency. Especially in a large organization, the content on the homepage is created independently, and each department decides how links are set within the organization. Such a method is consistent with the initial concept of the Internet, such as "no administrator required", and may be supported by the idea that the Internet is a collection of miscellaneous information. However, compared with general information dissemination such as on SNS, the purpose of a website operated by an organization should be clear, and to that end overall coherence and efficiency should be emphasized.

From this point of view, it is desirable that the entire structure be constructed in consideration of the link structure between webpages and the content relations of documents. Although it is unrealistic to completely rebuild a website that currently has hundreds, thousands, or possibly tens of thousands of pages and links according to an overall policy, it is necessary to analyze the pages of an organization's website by link structure and document content structure, and to identify problems by grasping the whole. We previously proposed a system incorporating several website evaluation indicators, including existing ones, and applied it to actual websites, but its term-based evaluations were inadequate (Nagata, 2019).

In this paper, we mainly propose a clustering system improved by the following three types of values. The first is a method of finding a spectrum for a directed graph using Kleinberg's hub and authority weights (Kleinberg, 1999), the second is a similarity evaluation method using an extended keyword search index based on word vector representation (Mikolov et al., 2013; Mikolov et al., 2015), and the third is the readability index of the document.

The rest of the paper is organized as follows. The next section introduces the application program already constructed by implementing some of the link- and term-related indexes, showing its execution results and pointing out its problems. The following sections describe the improvement methods for these problems one by one. We also describe how to implement some text readability indexes. The last part presents the conclusion and future work.

Nagata, K.

Term-based Website Evaluation Applying Word Vectors and Readability Measures.

DOI: 10.5220/0011383900003318

In Proceedings of the 18th International Conference on Web Information Systems and Technologies (WEBIST 2022), pages 241-248

ISBN: 978-989-758-613-2; ISSN: 2184-3252

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 EXISTING SYSTEM AND PROBLEMS

In this section, the outline of the already developed

system is described, and the execution results of

the application program and some problems will be

pointed out.

2.1 Overview of Website Evaluation and Our System

HyPursuit, proposed by Ron Weiss et al. (Weiss et al., 1996), is a well-known and pioneering hierarchical network search engine. HyPursuit exploits content-link hypertext clustering, which organizes documents into clusters of related documents based on a hybrid function of hyperlink- and term-related structures, to obtain effective search results. To describe the hyperlink structure and the term structure, it applies, respectively, a link similarity function for each pair of nodes and a term-based similarity function using term frequency, document size, and term attributes.

Chaomei Chen tried to describe a network or its sub-networks as a graphical image using Pathfinder Network models (Schvaneveldt et al., 1988) based on pairwise integrated similarity, applying the vector space model (Salton et al., 1975). He also published a paper analyzing the structure of a large hypermedia information space based on three types of similarity measures: hypertext linkage, content similarity, and usage patterns (Chen, 1998). Aiming at a website evaluation system that combines website indexes with user evaluation indexes based on information quality measures from the user's perspective (Lee et al., 2002; Cherl and Locsin, 2018; Noorzad and Sato, 2017), we tried to construct a formula expressing some information quality measures in terms of link- or term-based indexes (Liang and Nagata, 2011). In (Nagata, 2019), a system for analyzing a website from various perspectives was developed, which helps website managers or designers improve the whole website by removing or adding proper links and pages. The application program does not involve evaluation from the user's perspective, but it implements the collection of data from real websites and the analysis of link- and term-related properties (Nagata, 2019). The development language is Java, and the JavaFX library is used to construct the graphical interface. Here we briefly describe some of the core parts of the previous system.

2.1.1 Retrieving Webpages

Given a specific website URL, the system starts retrieving almost all the webpages inside the website.

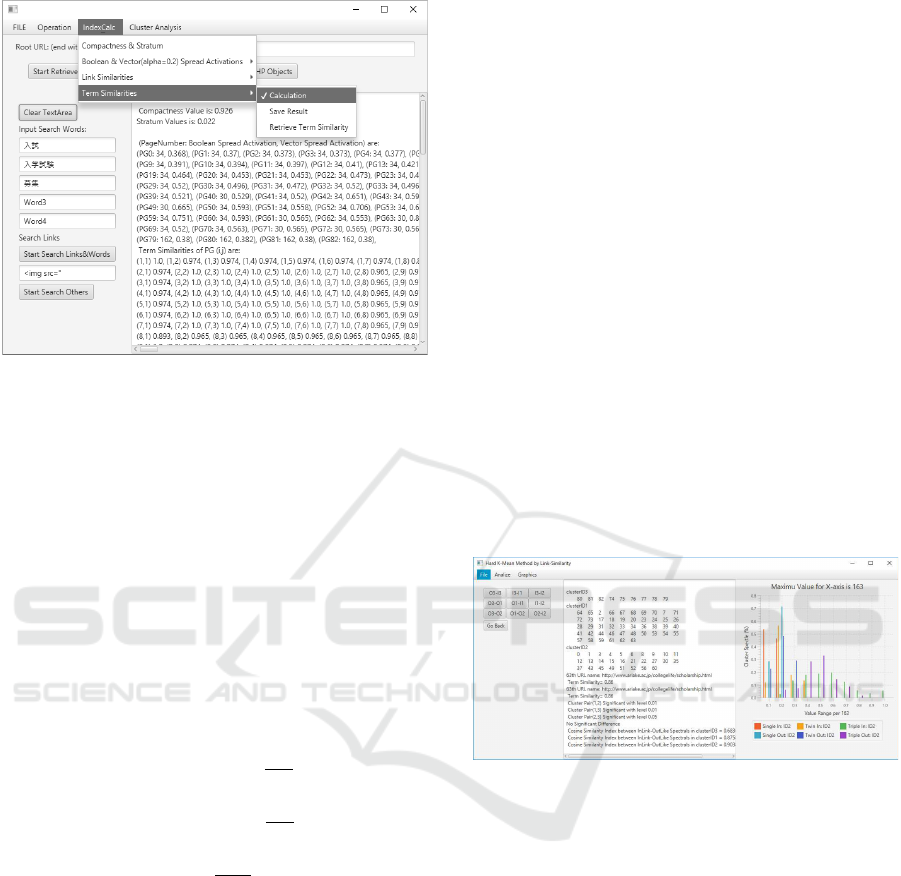

Figure 1 shows the initial page of the system.

Figure 1: Initial Window.

For its implementation, we simply obtained the HTML or XML text using "URL" and "URLConnection" class objects, then analyzed the text to retrieve all the proper link tags. However, we needed to create an exception list of link types to be excluded, and the program sometimes failed during iterative retrieval, especially on overseas websites.

2.1.2 Link-based Index

The basic indicators for the overall link relationship are Compactness (Cp) and Stratum (St), which should be complementary indexes. However, as shown in (1), it is difficult to see a clear relationship from their defining expressions.

$$Cp = \frac{K}{K-1} - \frac{\sum_{i,j} c_{ij}}{(N^2 - N)(K-1)}, \qquad St = \frac{\sum_{i} |OD_i - ID_i|}{LAP}, \tag{1}$$

where $K$ is a certain big number, $c_{ij}$ represents the shortest distance from the page $P_i$ to the page $P_j$, and

$$OD_i = \sum_{j=1}^{N} c_{ij}, \qquad ID_i = \sum_{j=1}^{N} c_{ji}, \qquad LAP = \begin{cases} \dfrac{N^3}{4} & (\text{if } N \text{ is even}), \\[1ex] \dfrac{N^3 - N}{4} & (\text{if } N \text{ is odd}). \end{cases}$$
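As a hedged illustration of equation (1), the following Java sketch computes Cp and St from a precomputed distance matrix. The class and method names are hypothetical. The sketch assumes that the diagonal entries are zero and that the caller has already decided how unreachable pairs are encoded, which the text above does not spell out.

```java
final class LinkIndexSketch {
    /** Compactness Cp of equation (1) for distance matrix c and constant k (the K of the text). */
    static double compactness(double[][] c, double k) {
        int n = c.length;
        double sum = 0.0;
        for (double[] row : c) {
            for (double d : row) {
                sum += d; // sum of c_ij over all i, j (diagonal assumed 0)
            }
        }
        return k / (k - 1) - sum / (((double) n * n - n) * (k - 1));
    }

    /** Stratum St of equation (1): sum of |OD_i - ID_i| divided by LAP. */
    static double stratum(double[][] c) {
        int n = c.length;
        double total = 0.0;
        for (int i = 0; i < n; i++) {
            double od = 0.0;
            double id = 0.0;
            for (int j = 0; j < n; j++) {
                od += c[i][j]; // distances from P_i
                id += c[j][i]; // distances to P_i
            }
            total += Math.abs(od - id);
        }
        double cube = (double) n * n * n;
        double lap = (n % 2 == 0) ? cube / 4 : (cube - n) / 4;
        return total / lap;
    }

    public static void main(String[] args) {
        // Hypothetical three-page chain P0 -> P1 -> P2, unreachable pairs encoded as 0.
        double[][] chain = {{0, 1, 2}, {0, 0, 1}, {0, 0, 0}};
        System.out.println("St = " + stratum(chain));
    }
}
```

Keeping both indexes as pure functions of the distance matrix means the all-pairs shortest distances only need to be computed once.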

The Complete Hypertext Link Similarity (CHLS) index was proposed by Weiss et al. to express the similarity between two given nodes through their link relations with other nodes. The CHLS index is obtained by taking the weighted average of the following three values (Weiss et al., 1996):

$$S^{spl}_{ij} = \frac{1}{2^{c_{ij}}} + \frac{1}{2^{c_{ji}}}, \qquad S^{anc}_{ij} = \sum_{x \in A_{ij}} \frac{1}{2^{(\bar{c}^{\,j}_{xi} + \bar{c}^{\,i}_{xj})}}, \qquad S^{dsc}_{ij} = \sum_{x \in D_{ij}} \frac{1}{2^{(\bar{c}^{\,j}_{ix} + \bar{c}^{\,i}_{jx})}}.$$

Here, $A_{ij}$ is the set of nodes from which there is at least one path to both $P_i$ and $P_j$, $D_{ij}$ is the set of nodes to which there is at least one path from both $P_i$ and $P_j$, and $\bar{c}^{\,j}_{xi}$ is the shortest distance from $P_x$ to $P_i$ not passing through $P_j$. From these values and given weights $w_s$, $w_a$, $w_d$, the CHLS index $S^{link}_{ij}$ of each node pair $(P_i, P_j)$ is defined as follows.

$$S^{link}_{ij} = w_s S^{spl}_{ij} + w_a S^{anc}_{ij} + w_d S^{dsc}_{ij}. \tag{2}$$

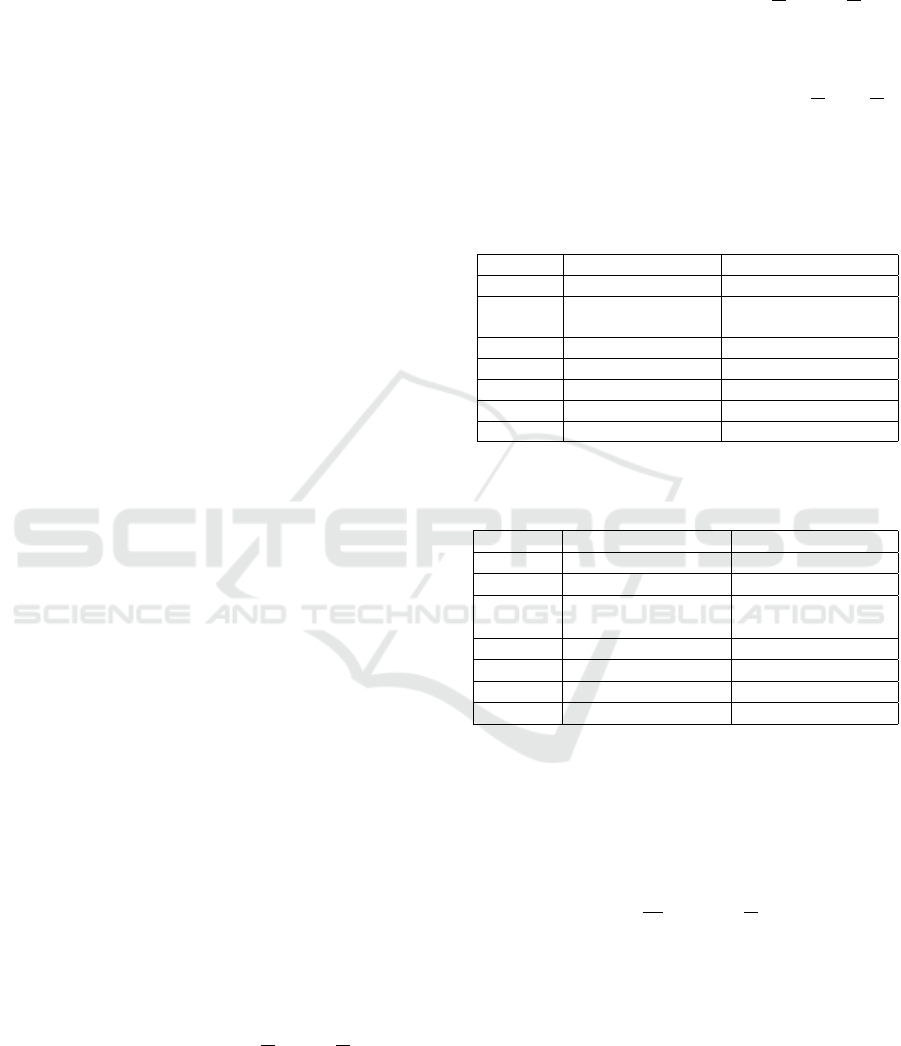

Figure 2 shows the calculation results of the link sim-

ilarities.

Figure 2: Link Similarity Calculation.

In the formula above, the shortest distance $c_{ij}$ from page $P_i$ to page $P_j$ plays an important role. The shortest distance calculation problem is classified into two types: the Single Source Shortest Path (SSSP) problem and the All Pairs Shortest Path (APSP) problem. For the SSSP problem, the Dijkstra method (Dijkstra, 1959) is a well-known algorithm whose execution time has been improved to $O(e + n \log\log n)$, where $e$ is the number of edges and $n$ is the number of nodes (Thorup, 2004). For the APSP problem, the Floyd-Warshall method (Floyd, 1962; Warshall, 1962) is known as an algorithm that requires $\Theta(n^3)$ time and a memory area proportional to $n^2$. We implemented this with the following procedure.

1. Let $C^{(0)}$ $(= A)$ be a matrix representing (weighted) direct link relationships.

2. Node $P_k$ $(k = 1, \ldots, N)$ is sequentially added as a transit node, and the (weighted) shortest distance updated for each node pair $(P_i, P_j)$ becomes a component of the matrix $C^{(k)}$. When the shortest distance changes, $P_k$ is stored as a predecessor node from $P_i$ to $P_j$.
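The two steps above can be sketched in Java as follows. This is a minimal sketch rather than the code of our application: the class name and the field names `dist` and `via` are hypothetical, missing links are assumed to be encoded as positive infinity, and edge weights are assumed to be non-negative.

```java
import java.util.Arrays;

final class FloydWarshallSketch {
    static final double INF = Double.POSITIVE_INFINITY;

    final double[][] dist; // dist[i][j]: shortest distance from P_i to P_j
    final int[][] via;     // via[i][j]: last transit node that shortened i -> j, or -1

    FloydWarshallSketch(double[][] a) {
        int n = a.length;
        dist = new double[n][];
        via = new int[n][n];
        for (int i = 0; i < n; i++) {
            dist[i] = a[i].clone(); // step 1: C^(0) = A
            Arrays.fill(via[i], -1);
        }
        for (int k = 0; k < n; k++) { // step 2: add P_k as a transit node
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    double through = dist[i][k] + dist[k][j];
                    if (through < dist[i][j]) {
                        dist[i][j] = through;
                        via[i][j] = k; // remember P_k for path reconstruction
                    }
                }
            }
        }
    }

    public static void main(String[] args) {
        double[][] a = {{0, 1, INF}, {INF, 0, 1}, {INF, INF, 0}};
        FloydWarshallSketch fw = new FloydWarshallSketch(a);
        System.out.println(Arrays.deepToString(fw.dist));
    }
}
```

Updating a single matrix in place keeps the memory use proportional to $n^2$, since $C^{(k)}$ only depends on $C^{(k-1)}$.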

2.1.3 Term-based Index

As a term-related property of a page $P$, we implemented the vector spread activation defined by

$$RV_{P,q} = S_{P,q} + \sum_{P' \neq P} \alpha\, a_{PP'}\, S_{P',q}, \tag{3}$$

where the reduced version of TFxIDF ($S_{P',q}$) is defined as follows,

$$S_{P,q} = \sum_{j=1}^{M} w^{TF}_{P,Q_j} \times IDF_{Q_j}.$$

In the formula, $w^{TF}_{P,Q_j}$ and the "inverse document frequency" $IDF_{Q_j}$ are defined by

$$w^{TF}_{P,Q_j} = \frac{1}{2}\left(1 + \frac{TF_{P,Q_j}}{TF_{P,\max}}\right), \qquad IDF_{Q_j} = \log\frac{N}{\sum_{P'} X_{P',Q_j}}, \tag{4}$$

where $X_{P,Q_j}$ (= 0 or 1) denotes the occurrence of $Q_j$ in a page $P$, $TF_{P,Q_j}$ denotes the term frequency, that is, the number of times $Q_j$ appears in a page $P$, and $TF_{P,\max}$ denotes the maximum of $TF_{P,Q_j}$ over all terms $Q_j$ $(j = 1, \ldots, M)$.
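To make the reduced TFxIDF score concrete, the sketch below evaluates $w^{TF}$, $IDF$, and $S_{P,q}$ for pages given as term-count maps. All names are hypothetical, and the handling of the two degenerate cases (no query term on the page, and a term that occurs on no page) is our assumption, since the formulas leave them undefined.

```java
import java.util.List;
import java.util.Map;

final class TfIdfSketch {
    /** w^TF = (1 + TF / TF_max) / 2, with TF_max taken over the query terms. */
    static double tfWeight(int tf, int tfMax) {
        // Assumption: if no query term occurs on the page, the ratio is taken as 0.
        double ratio = tfMax == 0 ? 0.0 : (double) tf / tfMax;
        return 0.5 * (1.0 + ratio);
    }

    /** IDF = log(N / number of pages containing the term). */
    static double idf(List<Map<String, Integer>> pages, String term) {
        long containing = pages.stream().filter(p -> p.getOrDefault(term, 0) > 0).count();
        // Assumption: a term found on no page gets IDF 0 instead of dividing by zero.
        return containing == 0 ? 0.0 : Math.log((double) pages.size() / containing);
    }

    /** S_{P,q}: sum over the query terms of w^TF times IDF. */
    static double score(Map<String, Integer> page, List<Map<String, Integer>> pages, List<String> query) {
        int tfMax = 0;
        for (String q : query) {
            tfMax = Math.max(tfMax, page.getOrDefault(q, 0));
        }
        double s = 0.0;
        for (String q : query) {
            s += tfWeight(page.getOrDefault(q, 0), tfMax) * idf(pages, q);
        }
        return s;
    }

    public static void main(String[] args) {
        Map<String, Integer> p0 = Map.of("web", 2, "link", 1);
        List<Map<String, Integer>> pages = List.of(p0, Map.of("web", 1), Map.of("page", 3));
        System.out.println(score(p0, pages, List.of("web", "link")));
    }
}
```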

In order to measure the similarity of two pages $P_i$ and $P_j$ for a fixed set of query terms $q = \{Q_1, \cdots, Q_M\}$, the following index is defined as the term-based similarity index,

$$S^{terms}_{P_i,P_j} = \frac{\sum_{l=1}^{M} w_{P_i,Q_l}\, w_{P_j,Q_l}}{\sqrt{\sum_{l=1}^{M} w^{2}_{P_i,Q_l} \sum_{l=1}^{M} w^{2}_{P_j,Q_l}}} \tag{5}$$

where $w_{P_i,Q_l} = w^{TF}_{P_i,Q_l}\, w^{at}_{P_i,Q_l}$ with

$$w^{at}_{P,Q_j} = \begin{cases} 10 & \text{if } Q_j \text{ is in the title of } P, \\ 5 & \text{if } Q_j \text{ is in headers or keywords or address in } P, \\ 1 & \text{otherwise.} \end{cases} \tag{6}$$

Since we did not implement a method to extract tags other than link tags from the text, we set all the $w^{at}_{P,Q_j} = 1$. Figure 3 shows the calculation results of the term similarities.


Figure 3: Term Similarity Calculation.
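The term-based similarity index (5) is a cosine similarity between the weight vectors of two pages. A minimal Java sketch follows, assuming the weights $w_{P,Q_l}$ have already been computed into arrays of equal length. The names are hypothetical, and returning 0 for an all-zero vector is our assumption.

```java
final class TermSimilaritySketch {
    /** Cosine similarity of two weight vectors, as in equation (5). */
    static double similarity(double[] wi, double[] wj) {
        if (wi.length != wj.length) {
            throw new IllegalArgumentException("weight vectors must have the same length M");
        }
        double dot = 0.0;
        double normI = 0.0;
        double normJ = 0.0;
        for (int l = 0; l < wi.length; l++) {
            dot += wi[l] * wj[l];
            normI += wi[l] * wi[l];
            normJ += wj[l] * wj[l];
        }
        double denominator = Math.sqrt(normI * normJ);
        // Assumption: a page with no weight on any query term is similar to nothing.
        return denominator == 0.0 ? 0.0 : dot / denominator;
    }

    public static void main(String[] args) {
        System.out.println(similarity(new double[] {1.0, 0.5}, new double[] {1.0, 0.0}));
    }
}
```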

2.1.4 Clustering Method

We implemented two clustering algorithms: the kernel k-means algorithm and the "Structural Clustering Algorithm for Networks (SCAN)".

For a fixed number of clusters $k$ and a kernel, also denoted by $k$, with feature map $\phi$ from a set of nodes $G$ to a Hilbert space $\mathcal{H}$, the kernel k-means algorithm tries to find a set of clusters $\{C_1, \ldots, C_k\}$ minimizing the following value,

$$\sum_{i=1}^{k} \sum_{x \in C_i} \| \phi(x) - \mu_i \|^2, \tag{7}$$

where

$$\begin{aligned} \| \phi(x) - \mu_i \|^2 &= \Big\| \phi(x) - \frac{1}{|C_i|} \sum_{x' \in C_i} \phi(x') \Big\|^2 \\ &= k(x, x) - \frac{2}{|C_i|} \sum_{x' \in C_i} k(x, x') + \frac{1}{|C_i|^2} \sum_{x'' \in C_i} \sum_{x' \in C_i} k(x'', x'). \end{aligned}$$

SCAN, proposed by Xiaowei Xu et al. (Xu et al., 2007), outputs three types of clusters, namely "hub", "outlier", and ordinary clusters, by using structural similarity and two parameters $0 \le \varepsilon \le 1$ and $\mu \in \mathbb{Z}^{+}$.
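The kernel expansion of $\| \phi(x) - \mu_i \|^2$ means that the distance to a cluster mean can be evaluated from the kernel matrix alone, without forming $\phi$. The Java sketch below illustrates this for a precomputed kernel matrix. The class and parameter names are hypothetical, and the cluster is assumed to be non-empty.

```java
final class KernelDistanceSketch {
    /**
     * Squared feature-space distance between node x and the mean of a cluster,
     * where kernel[a][b] = k(a, b) and cluster lists the member node indices.
     */
    static double squaredDistance(double[][] kernel, int x, int[] cluster) {
        int m = cluster.length; // |C_i|, assumed > 0
        double cross = 0.0;
        for (int member : cluster) {
            cross += kernel[x][member];
        }
        double within = 0.0;
        for (int a : cluster) {
            for (int b : cluster) {
                within += kernel[a][b];
            }
        }
        return kernel[x][x] - 2.0 * cross / m + within / ((double) m * m);
    }

    public static void main(String[] args) {
        // Linear kernel k(a, b) = a * b on the hypothetical 1-D points 0, 2, 4.
        double[][] kernel = {{0, 0, 0}, {0, 4, 8}, {0, 8, 16}};
        System.out.println(squaredDistance(kernel, 2, new int[] {0, 1}));
    }
}
```

The double sum over the cluster does not depend on $x$, so an implementation that assigns many nodes per iteration would normally cache it per cluster.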

2.1.5 Spectrum Analysis

Shoda et al. considered all connected subgraphs and calculated the total weight of each of them, then proposed visually evaluating similarity by graphing their frequency of appearance as a spectrum (Shoda et al., 2003).

For a weighted undirected graph $G$ and the set of weights $\{w(P)\}$, they consider all the connected subgraphs $\{SG \in 2^{G} ; SG \text{ is connected}\}$ and calculate the total weight of all nodes in $SG$ as $w(SG) = \sum_{P \in SG} w(P)$ for each $SG$. The graph spectrum is then defined as the vector whose component at each index is the number of subgraphs $SG$ whose weight equals that index. In their paper, the graph spectrum is used to calculate the structural similarity of clusters, and the k-means method is applied to find a good cluster decomposition.

For a directed graph, we proposed the in-weight of a connected subgraph $SG$ as follows. Given fixed weights $(w_1, w_2)$ with $w_1 < w_2$, the in-weight of a subgraph of only two nodes, $\{P_1, P_2\}$, is calculated as the weighted value $w_{P_1 \to P_2} = w_1 w(P_1) + w_2 w(P_2)$ if there is a direct path from $P_1$ to $P_2$. We then define the in-weight for an $SG$ of two nodes, called the twin in-weight, $w_{direct}(\{P_1, P_2\})$, to be the average of $w_{P_1 \to P_2}$ and $w_{P_2 \to P_1}$. The out-weight is defined in a symmetrical way.

When $SG$ has more than two nodes, the in-weight can be defined as the average of all the twin in-weights for connected twin subsets. However, the calculation effort increases exponentially with the number of nodes in $SG$.

In the right pane of Figure 4, the spectrum charts

corresponding to each cluster are shown.

Figure 4: Spectrum Chart in the right Pane.
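For the undirected graph spectrum described in this subsection, a brute-force Java sketch is given below. It is only an illustration under our own assumptions: node weights are non-negative integers, the graph has fewer than 31 nodes so that node subsets fit in an `int` bitmask, and all names are hypothetical. It enumerates every node subset, which makes the exponential cost visible.

```java
import java.util.Map;
import java.util.TreeMap;

final class GraphSpectrumSketch {
    /**
     * adj[i] is a bitmask of the neighbours of node i (undirected graph);
     * weight[i] is the integer weight w(P_i). Returns weight -> number of
     * connected subgraphs having that total weight.
     */
    static Map<Integer, Integer> spectrum(int[] adj, int[] weight) {
        int n = adj.length;
        Map<Integer, Integer> counts = new TreeMap<>();
        for (int subset = 1; subset < (1 << n); subset++) {
            if (!isConnected(subset, adj)) {
                continue;
            }
            int total = 0;
            for (int i = 0; i < n; i++) {
                if ((subset >> i & 1) != 0) {
                    total += weight[i];
                }
            }
            counts.merge(total, 1, Integer::sum);
        }
        return counts;
    }

    /** Flood fill restricted to the nodes of subset, starting from its lowest node. */
    static boolean isConnected(int subset, int[] adj) {
        int reached = Integer.lowestOneBit(subset);
        int frontier = reached;
        while (frontier != 0) {
            int i = Integer.numberOfTrailingZeros(frontier);
            frontier &= frontier - 1; // pop node i
            int next = adj[i] & subset & ~reached;
            reached |= next;
            frontier |= next;
        }
        return reached == subset;
    }

    public static void main(String[] args) {
        // Path graph 0 - 1 - 2 with unit weights.
        System.out.println(spectrum(new int[] {0b010, 0b101, 0b010}, new int[] {1, 1, 1}));
    }
}
```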

3 IMPROVEMENTS

While the existing system has shown some performance, it leaves room for improvement. In this section, we propose improvements in webpage retrieval within the website, link spectral evaluation for each of the in- and out-weights, and page similarity by keyword. We also describe a sentence-related evaluation method that uses text readability on pages.

3.1 Webpage Retrieving

Instead of the "URL" and "URLConnection" classes, we use the "Jsoup" and "Elements" classes for retrieval and process the obtained page as a "Document" class object. The program below is an outline of the whole retrieval program, where "TAGS" is a set of HTML tags such as "<h1>", "<h2>", "<p>", etc.:

```java
import java.util.List;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.select.Elements;

// Fetch and parse the page at url (get() throws IOException on failure).
Document document = Jsoup.connect(url).get();
System.out.println(document.text());
for (String tag : TAGS) {
    // eachText() returns a List<String>, so no cast to ArrayList is needed.
    List<String> strs = document.getElementsByTag(tag).eachText();
    System.out.println("<" + tag + ">");
    for (String str : strs) {
        System.out.println(str);
    }
}
// Collect every anchor element that carries an href attribute.
Elements links = document.select("a[href]");
```

By using these classes, we can improve the retrieval execution time and correct unexpected page access.

3.2 Link Spectral Evaluation

The graph structure obtained from the links between webpages is a directed graph, so it was necessary to define input link weights and output link weights for each node. Therefore, we defined the in-weight of a subgraph of only two nodes, $\{P_1, P_2\}$, as the weighted value $w_{P_1 \to P_2} = w_1 w(P_1) + w_2 w(P_2)$ with fixed weights $(w_1, w_2)$ such that $w_1 < w_2$, if there is a direct path from $P_1$ to $P_2$. We then defined the in-weight for an $SG$ of two nodes, called the twin in-weight, $w_{direct}(\{P_1, P_2\})$, to be the average of $w_{P_1 \to P_2}$ and $w_{P_2 \to P_1}$.

In this definition, the weight value $w(P)$ is the number of links to or from any node of $SG$. Here we try to improve the index by changing this to the hub weight or to the authority weight, according to whether the out-link or the in-link weight is calculated. These weights, proposed by Kleinberg (Kleinberg, 1999), are importance values of each node as one referring to, or referred to by, other nodes in a directed graph. They are given as the components of the principal eigenvectors (the eigenvector for the greatest positive real eigenvalue) of the matrices $AA^{t}$ and $A^{t}A$, where $A$ is the adjacency matrix.

In a website, the number of nodes can range from several hundred to several thousand, and sometimes exceeds 10,000, so it is not easy, or even impossible, to implement code that obtains the principal eigenvector within our system. Thus, we tried using the "Process" class to call an external computer algebra program such as SageMath¹ or PARI/GP². Even if a computer algebra system is used, problems occur in the output of the eigenvectors when the number of nodes is large. Thus, we calculate for each cluster with about 50 nodes at most, then apply the system.

¹ https://www.sagemath.org/

² https://pari.math.u-bordeaux.fr/
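For clusters of around 50 nodes, the hub and authority weights could also be approximated inside a Java program by power iteration, which is the iterative scheme Kleinberg describes. The sketch below is this swapped-in alternative, not the external computer algebra call used in our system. The class and method names are hypothetical, and a fixed iteration count stands in for a proper convergence test.

```java
import java.util.Arrays;

final class HitsSketch {
    /**
     * Power iteration for adjacency matrix a (a[i][j] = 1 if P_i links to P_j).
     * Returns {hub, authority}, each normalized to unit Euclidean length.
     */
    static double[][] hubsAndAuthorities(double[][] a, int iterations) {
        int n = a.length;
        double[] hub = new double[n];
        double[] auth = new double[n];
        Arrays.fill(hub, 1.0);
        for (int it = 0; it < iterations; it++) {
            for (int j = 0; j < n; j++) { // auth = A^t * hub
                double s = 0.0;
                for (int i = 0; i < n; i++) {
                    s += a[i][j] * hub[i];
                }
                auth[j] = s;
            }
            normalize(auth);
            for (int i = 0; i < n; i++) { // hub = A * auth
                double s = 0.0;
                for (int j = 0; j < n; j++) {
                    s += a[i][j] * auth[j];
                }
                hub[i] = s;
            }
            normalize(hub);
        }
        return new double[][] {hub, auth};
    }

    static void normalize(double[] v) {
        double norm = 0.0;
        for (double x : v) {
            norm += x * x;
        }
        norm = Math.sqrt(norm);
        if (norm > 0.0) {
            for (int i = 0; i < v.length; i++) {
                v[i] /= norm;
            }
        }
    }

    public static void main(String[] args) {
        // Hypothetical cluster: P0 -> P2 and P1 -> P2.
        double[][] a = {{0, 0, 1}, {0, 0, 1}, {0, 0, 0}};
        System.out.println(Arrays.deepToString(hubsAndAuthorities(a, 20)));
    }
}
```

Composing the two updates gives hub ← $AA^{t}$ hub and authority ← $A^{t}A$ authority, so the iteration targets the same principal eigenvectors as the definition above.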

3.3 Term-based Index

In order to calculate and evaluate term-based indexes,

we need to consider the differences in languages. Al-

though almost sentences in webpages are written in

English, there are many websites where some impor-

tant information are represented only in language of

each country. Thus, we add a language selection box

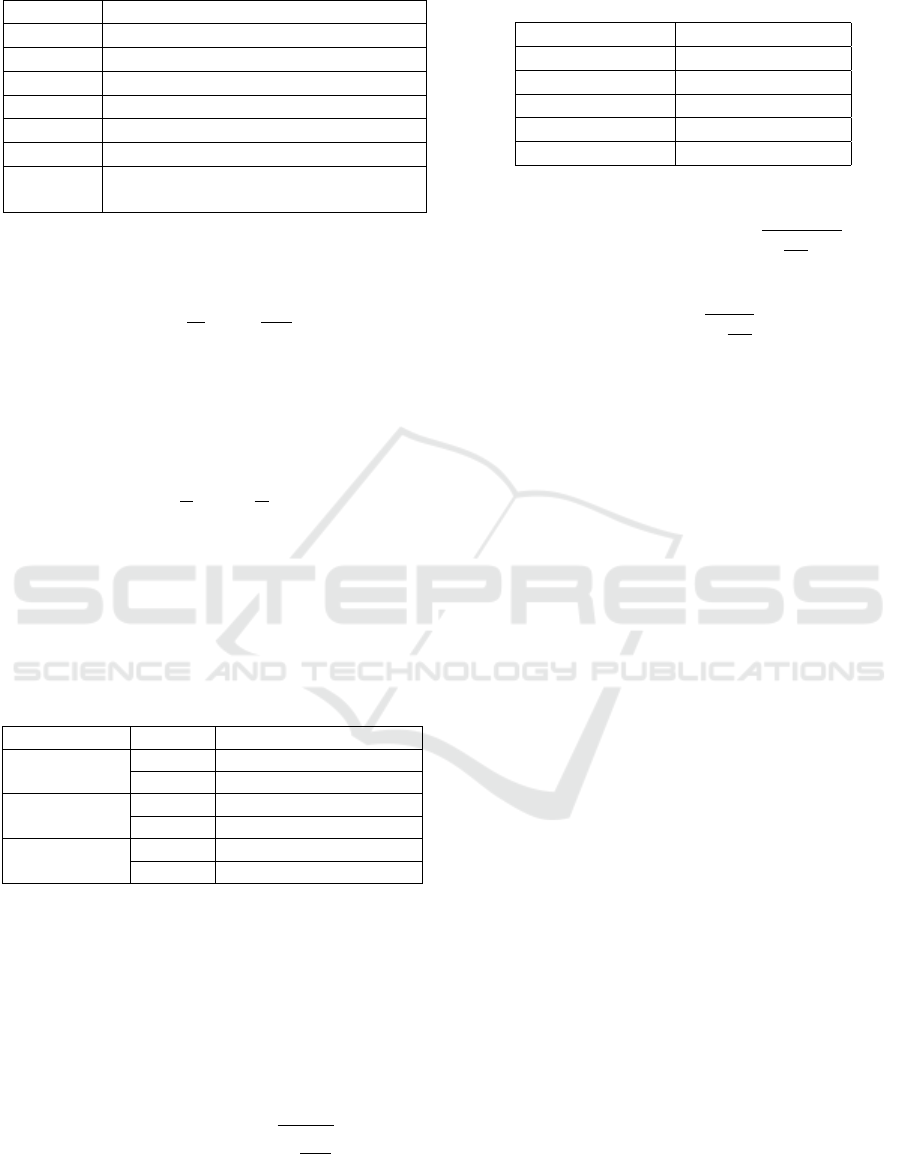

and also add the menu item “Text Analysis” shown in

the Figure 5.

Figure 5: Language Selection Box and Text Analysis menu.

When this menu item is chosen, a new window for text analysis in the chosen language appears.

Figure 6: English Text Analysis Window.

The term frequency $TF_{P,Q_j}$ defined in equation (4) appears in the formulae of both the vector spread activation $RV_{P,q}$ and the term-based similarity index $S^{terms}_{P_i,P_j}$. This value originally represented the number of times a word $Q_j$ appeared on a webpage $P$, but some extended versions have been used in vector space models (Wong et al., 1985; Tsatsaronis and Panagiotopoulou, 2009; Waitelonis et al., 2015).

For a query word $Q_j$ and a webpage $P$, we propose to extend $TF_{P,Q_j}$ to be the sum, over the words $W$ appearing in $P$, of the number of appearances of $W$ multiplied by the cosine similarity $\cos(W, Q_j)$. Considering the calculation cost, words are limited to specific parts of speech such as nouns, and only those with at least a certain degree of similarity are added. We also modify the attribute weights in (5) according to tag type in the following way.

$$w^{at}_{P,Q_j} = \begin{cases} 10 & \text{if } Q_j \text{ in } \texttt{<title>}, \\ 7 & \text{if } Q_j \text{ in } \texttt{<h1>}, \\ 5 & \text{in } \texttt{<h2>}, \\ 3 & \text{in } \texttt{<b>}, \texttt{<i>}, \texttt{<u>}, \\ 1 & \text{otherwise.} \end{cases}$$

Then we extend the equation (6) by modifying the definitions of $TF_{P,Q_j}$ and $\sum_{P'} X_{P',Q_j}$ in (4) as follows.

$$TF_{P,Q} = \sum_{Q' \in Sim_{r_0}(P,Q)} \cos(v_Q, v_{Q'}), \qquad X_{P',Q} = \max\{\cos(v_Q, v_{Q'}) ; Q' \in Sim_{r_0}(P, Q)\}, \tag{8}$$

where $v_Q$ denotes the word vector corresponding to $Q$, and $Sim_{r_0}(P, Q)$ is the set of all nouns $Q'$ appearing in the page $P$ that satisfy $\cos(v_Q, v_{Q'}) \ge r_0$.
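The extended term frequency and occurrence value of (8) can be sketched in Java as follows. This is an illustration under stated assumptions, not the implemented system: the nouns of a page are assumed to be given as a list with one entry per occurrence (so repeated nouns contribute repeatedly), word vectors are assumed to be available in a map, words without a vector are skipped, and all names are hypothetical.

```java
import java.util.List;
import java.util.Map;

final class ExtendedTfSketch {
    static double cosine(double[] u, double[] v) {
        double dot = 0.0;
        double nu = 0.0;
        double nv = 0.0;
        for (int i = 0; i < u.length; i++) {
            dot += u[i] * v[i];
            nu += u[i] * u[i];
            nv += v[i] * v[i];
        }
        double d = Math.sqrt(nu * nv);
        return d == 0.0 ? 0.0 : dot / d;
    }

    /** Extended TF: sum of cos(v_Q, v_Q') over noun occurrences Q' with similarity >= r0. */
    static double extendedTf(List<String> pageNouns, String query, Map<String, double[]> vectors, double r0) {
        double[] vq = vectors.get(query);
        double tf = 0.0;
        if (vq == null) {
            return tf; // assumption: an unknown query word matches nothing
        }
        for (String noun : pageNouns) {
            double[] vn = vectors.get(noun);
            if (vn == null) {
                continue;
            }
            double c = cosine(vq, vn);
            if (c >= r0) {
                tf += c;
            }
        }
        return tf;
    }

    /** Extended occurrence: the largest similarity among the qualifying nouns, or 0 if none. */
    static double extendedOccurrence(List<String> pageNouns, String query, Map<String, double[]> vectors, double r0) {
        double[] vq = vectors.get(query);
        double best = 0.0;
        if (vq == null) {
            return best;
        }
        for (String noun : pageNouns) {
            double[] vn = vectors.get(noun);
            if (vn != null) {
                double c = cosine(vq, vn);
                if (c >= r0) {
                    best = Math.max(best, c);
                }
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Toy two-dimensional vectors, purely for illustration.
        Map<String, double[]> vectors = Map.of(
                "site", new double[] {1, 0}, "page", new double[] {1, 1}, "cat", new double[] {0, 1});
        List<String> nouns = List.of("site", "page", "cat", "site");
        System.out.println(extendedTf(nouns, "site", vectors, 0.5));
    }
}
```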

In order to implement the system above, some steps of natural language processing are required. First, we need to extract words from the text while decomposing them into parts of speech, and then examine their relationships. For this task, we use TreeTagger as the morphological analysis application. The output of TreeTagger is a triplet of the word itself, the part-of-speech name, and the base form, and the symbols used to represent them differ for each language.

For the calculation of the word vector $v_Q$, we apply fastText, created by Tomas Mikolov, which outputs the distributed representation vector of a word (the word vector) obtained by machine learning from a large corpus. Files pre-trained on the Wikipedia dump files are available for many languages (157 languages) in a format that can be used by the fastText program.

3.4 Readability Index

As with the term-based indexes, we introduce readability indexes that correspond to each language.

To evaluate the text in each webpage by its readability, we incorporate some of the following readability indexes, with $S$, $W$, $C$, and $Sy$ denoting the number of sentences, the number of words, the number of characters, and the number of syllables of the whole text, respectively.

• Flesch Reading Ease Score: A measure of text readability, proposed by Rudolf Flesch in 1946 (Flesch, 1946), (DuBay, 2004, p.21).

$$206.835 - 1.015\,\frac{W}{S} - 84.6\,\frac{Sy}{W}$$

– Table 1 shows the correspondence between the score value, the difficulty level, and the genre of a typical book. Since it is an index showing readability, the lower the evaluation score, the harder the text is to read.

– In his 1978 dissertation "Wie verständlich sind unsere Zeitungen?", Toni Amstad of the University of Zürich gives the formula for this index in German as follows.

$$180 - \frac{W}{S} - 58.5\,\frac{Sy}{W}$$

– The following formula (Huerta, 1959) by Fernández Huerta seems to be often used as the corresponding evaluation index calculation formula in Spanish.

$$206.84 - 1.02\,\frac{W}{S} - 60\,\frac{Sy}{W}$$

– Table 2 shows the correspondence between the index values and the educational systems in the USA and Spain.

Table 1: Flesch Reading Ease Score and Readability Comparison.

| Score | Difficulty | Representative Books |
|---|---|---|
| ∼ 30 | Very Difficult | Special Dissertation |
| 30 ∼ 50 | Difficult | General Academic Journals |
| 50 ∼ 60 | Somewhat Difficult | High Quality Magazine |
| 60 ∼ 70 | Standard | Article Summary |
| 70 ∼ 80 | Slightly Easy | Science Fiction |
| 80 ∼ 90 | Easy | Popular Novel |
| 90 ∼ 100 | Very Easy | Comic |

Table 2: Country-specific Flesch Reading Ease Score and Grade Comparison.

| Score | USA | Spain |
|---|---|---|
| ∼ 30 | University Graduate | University Specialty |
| 30 ∼ 50 | Grades 13-16 | Elective Course |
| 50 ∼ 60 | Grades 10-11 | Before Entering University |
| 60 ∼ 70 | Grades 8-9 | Grades 7-8 |
| 70 ∼ 80 | Grade 7 | Grade 6 |
| 80 ∼ 90 | Grade 6 | Grade 5 |
| 90 ∼ 100 | Grade 5 | Grade 4 |

• Dale-Chall Readability Score: In 1948, Edgar Dale and Jeanne Sternlicht Chall revised the Flesch Reading Ease Score and defined a readability index using an easy-to-read English word list of about 3,000 words called "Dale-Chall's long list" (Dale and Chall, 1948), (DuBay, 2004, p.23).

$$0.1579\,\frac{Dw}{W} + 0.0496\,\frac{W}{S} + 3.6365$$

– $Dw$ is the number of words not found in "Dale-Chall's long list".

– Table 3 gives the correspondence between the evaluation values and grades in the USA.

Table 3: Dale-Chall Grade Evaluation.

| Score | Corresponding Grade Level (in USA) |
|---|---|
| ∼ 4.9 | 4th Grade or Younger |
| 5.0 ∼ 5.9 | 5th and 6th Grade |
| 6.0 ∼ 6.9 | 7th and 8th Grade |
| 7.0 ∼ 7.9 | 9th and 10th Grade |
| 8.0 ∼ 8.9 | 11th and 12th Grade |
| 9.0 ∼ 9.9 | 13th, 14th and 15th Grade |
| 10.0 ∼ | 16th Grade or Above (University graduation) |

• Fog Index: Robert Gunning states that "many of the reading problems are writing problems, and newspapers and corporate documents are covered in Fog and filled with unnecessary complexity" (DuBay, 2004, p.25). In the book "The Technique of Clear Writing" (Gunning, 1952), he proposed the readability index for adults called the "Fog Index".

$$0.4\left(\frac{W}{S} + 100\,\frac{Sy^{+}}{W}\right),$$

where $Sy^{+}$ represents the number of words with 3 or more syllables.

• Flesch-Kincaid Readability Grade Level: An index to measure the difficulty of reading a text (DuBay, 2004, p. 50).

$$0.39\,\frac{W}{S} + 11.8\,\frac{Sy}{W} - 15.59$$

– There is no linear dependency between this index and the aforementioned Flesch Reading Ease Score.

– Table 4 relates the index value to the education level in the United States.

Table 4: Flesch-Kincaid Readability Grade Level Evaluation.

| Reading level | Rating | Book example |
|---|---|---|
| Basic | 0 ∼ 3 | Preparation for Reading |
| | 3 ∼ 6 | Picture Book |
| Average | 6 ∼ 9 | Harry Potter |
| | 9 ∼ 12 | Jurassic Park |
| Mastery | 12 ∼ 15 | Historical Novel |
| | 15 ∼ 18 | Academic Papers |

• SMOG (Simple Measure Of Gobbledygook) Grading: While many indicators are given as a linear form of the number of characters, the number of syllables, etc., G. Harry McLaughlin proposed a non-linear formula for evaluating the level of education needed to understand a text in the UK (McLaughlin, 1969), (DuBay, 2004, p.47).

$$3.1291 + 1.0430\sqrt{30\,\frac{Sy^{+}}{S}}$$

– Table 5 gives the correspondence with the education level in the UK.

Table 5: Correspondence between SMOG Grading Index Value and Education Level.

| Evaluation Value | Education Level |
|---|---|
| ∼ 4.9 | Elementary School |
| 5.0 ∼ 8.9 | Secondary School |
| 9.0 ∼ 12.9 | High School |
| 13.0 ∼ 16.9 | Undergraduate |
| 17.0 and above | College Graduate |

– G-SMOG by Bamberger-Vanecek is the following equation for German (Bamberger and Vanecek, 1984).

$$\sqrt{30\,\frac{Sy^{+}}{S}} - 2$$

– SOL is the following formula for Spanish and French (Contreras et al., 1999).

$$\left(3.1291 + 1.0430\sqrt{30\,\frac{Sy^{+}}{S}}\right) \times 0.74 - 2.51$$

4 CONCLUSIONS

We have proposed the following improvements to the existing website evaluation system we have developed.

• By using the Java Jsoup class, the stability of retrieving all the webpages in a website has increased.

• By making good use of computer algebra programs, it is possible to perform more precise spectral analysis of directed graphs created by link structures.

• By incorporating morphological analysis and distributed word representation vectors as natural language processing components, more flexible keyword search can be performed.

• By implementing sentence readability indexes, it is possible to evaluate the readability bias of text expressions on webpages.

• The entire process has been made compatible with several languages.

Our future task is to apply the improved program and evaluate some websites to verify its effectiveness.

REFERENCES

Bamberger, R. and Vanecek, E. (1984). Lesen-Verstehen-Lernen-Schreiben: Die Schwierigkeitsstufen von Texten in deutscher Sprache. Diesterweg-Sauerländer.

Chen, C. (1998). Generalised similarity analysis

and pathfinder network scaling. Interacting with

Computers, 10(2):107–128. DOI: 10.1016/s0953-

5438(98)00015-0.


Cherl, N. M. and Locsin, R. J. F. (2018). Neural networks

application for water distribution demand-driven de-

cision support system. Journal of Advances in Tech-

nology and Engineering Studies, 4(4):162–175. DOI:

10.20474/jater-4.4.3.

Contreras, A., G-A., R., E., M., and D-C., F. (1999). The sol

formulas for converting smog readability scores be-

tween health education materials written in spanish,

english, and french. Journal of Health Communica-

tions, 4:21–29.

Dale, E. and Chall, S. (1948). A formula for predicting

readability. Educational Research Bulletin, 27(1):1–

20.

Dijkstra, E. W. (1959). A note on two problems in connex-

ion with graphs. Numerische Mathematik, 1(1):269–

271. DOI: 10.1007/bf01386390.

DuBay, W. H. (2004). The principles of readability. In

Impact Information, pages –, Cost Mesa California.

Flesch, R. (1946). The art of plain talk. Harper, New York.

Floyd, R. W. (1962). Algorithm 97: Shortest path.

Communications of the ACM, 5(6):345–350. DOI:

10.1145/367766.368168.

Gunning, R. (1952). The technique of clear writing.

McGraw-Hill.

Huerta, F. J. (1959). Medidas sencillas de lecturabilidad. In Revista pedagógica de la sección femenina de Falange ET y de las JONS, pages 29–32.

Kleinberg, J. M. (1999). Authoritative sources in a hy-

perlinked environment. Journal of the ACM (JACM),

46(5):604–632. DOI: 10.1145/324133.324140.

Lee, Y. W., Strong, D. M., Kahn, B. K., and Wang, R. Y.

(2002). Aimq: A methodology for information quality

assessment. Information & Management, 40(2):133–

146. DOI: 10.1016/s0378-7206(02)00043-5.

Liang, G. and Nagata, K. (2011). A study on e-business

website evaluation formula with variables of informa-

tion quality score. In Proceedings of the 12th Asia

Pacific Industrial Engineering and Management Sys-

tems Conference, pages –.

McLaughlin, G. H. (1969). Smog grading-a new readability

formula. Journal of Reading, 12(8):639–646.

Mikolov, T., Chen, K., Corrado, G., and Dean, J. (2013).

Efficient estimation of word representations in vector

space. In ICLR Workshop Paper, pages –.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S., and

Dean, J. (2015). Distributed representations of words

and phrases and their compositionality. In Advances in

Neural Information Processing Systems, pages 3111–

3119.

Nagata, K. (2019). Website evaluation using cluster struc-

tures. Journal of Advances in Technology and Engi-

neering Research, 5(1):25–36. DOI: 10.20474/jater-

5.1.3.

Noorzad, A. N. and Sato, T. (2017). Multi-criteria fuzzy-

based handover decision system for heterogeneous

wireless networks. International Journal of Technol-

ogy and Engineering Studies, 3(4):159–168. DOI:

10.20469/ijtes.3.40004-4.

Salton, G., Wong, A., and Yang, C.-S. (1975). A vec-

tor space model for automatic indexing. Com-

munications of the ACM, 18(11):613–620. DOI:

10.1109/icectech.2011.5941988.

Schvaneveldt, R. W., Dearholt, D., and Durso, F. (1988). Graph theoretic foundations of pathfinder networks. Computers & Mathematics with Applications, 15(4):337–345. DOI: 10.1016/0898-1221(88)90221-0.

Shoda, R., Matsuda, T., Yoshida, T., Motoda, H., and

Washio, T. (2003). Graph clustering with structure

similarity. In Proceedings of the 17th Annual Confer-

ence of the Japanese Society for Artificial Intelligence,

pages –, New York, NY.

Thorup, M. (2004). Integer priority queues with

decrease key in constant time and the single

source shortest paths problem. Journal of Com-

puter and System Sciences, 69(3):330–353. DOI:

10.1016/j.jcss.2004.04.003.

Tsatsaronis, G. and Panagiotopoulou, V. (2009). A gener-

alized vector space model for text retrieval based on

semantic relatedness. In Proceeding of EACL 2009

Student Research Workshop, pages 70–78, Athens,

Greece. Association for Computational Linguistics.

Waitelonis, J., Exeler, C., and Sack, H. (2015). Linked data

enabled generalized vector space model to improve

document retrieval. In CEUR Workshop Proceedings,

pages –. CEUR-WS.org.

Warshall, S. (1962). A theorem on boolean matrices. In

Proceedings of the ACM, pages –, Berlin, Germany.

ISSN 1613-0073.

Weiss, R., Velez, B., Sheldon, M., Namprempre, C., Szi-

lagyi, P., Duda, A., and Gifford, A. (1996). Hypur-

suit: A hierarchical network search engine that ex-

ploits content-link hypertext clustering. In Proceed-

ings of the 7th ACM Conference on Hypertext, pages

180–193.

Wong, S. K. M., Ziarko, W., and Wong, P. C. N. (1985).

Generalized vector spaces model in information re-

trieval. In Proceeding of the 8th SIGIR Conference on

Research and Development in Information Retrieval,

pages 18–25. ACM.

Xu, X., Yuruk, N., Feng, Z., and Schweiger, T. A. (2007).

Scan: A structural clustering algorithm for networks.

In Proceedings of the 13th ACM SIGKDD Interna-

tional Conference on Knowledge Discovery and Data

Mining, pages –, Jakarta, Indonesia. ISSN:2414-.
