Evolutional Normal Maps: 3D Face Representations for 2D-3D Face

Recognition, Face Modelling and Data Augmentation

Michael Danner

1,2

, Thomas Weber

2

, Patrik Huber

1,3

, Muhammad Awais

1

, Matthias Raetsch

2

and Josef Kittler

1

1

Centre for Vision, Speech & Signal Processing, University of Surrey, Guildford, U.K.

2

ViSiR, Reutlingen University, Reutlingen, Germany

3

Department of Computer Science, University of York, York, U.K.

patrik.huber@york.ac.uk

Keywords:

Deep Learning, Visual Understanding, Machine Vision, Pattern Recognition, 2D/3D Face Recognition, Local

Describers, Normal-Vector-Map Representation.

Abstract:

We address the problem of 3D face recognition based on either 3D sensor data, or on a 3D face reconstructed

from a 2D face image. We focus on 3D shape representation in terms of a mesh of surface normal vectors.

The first contribution of this work is an evaluation of eight different 3D face representations and their mul-

tiple combinations. An important contribution of the study is the proposed implementation, which allows

these representations to be computed directly from 3D meshes, instead of point clouds. This enhances their

computational efficiency. Motivated by the results of the comparative evaluation, we propose a 3D face shape

descriptor, named Evolutional Normal Maps, that assimilates and optimises a subset of six of these approaches.

The proposed shape descriptor can be modified and tuned to suit different tasks. It is used as input for a deep

convolutional network for 3D face recognition. An extensive experimental evaluation using the Bosphorus 3D

Face, CASIA 3D Face and JNU-3D Face datasets shows that, compared to the state of the art methods, the

proposed approach is better in terms of both computational cost and recognition accuracy.

1 INTRODUCTION

Face recognition and matching are important tech-

nologies for many application scenarios, including

identity verification, public security, human-computer

interactions, person tracking and re-identification for

process monitoring such as passenger progression

through airports, secondary authentication for mo-

bile devices, and for indexing into large multimedia

archives of media and entertainment companies. Dur-

ing the last decade, face biometrics research has been

dominated by 2D face recognition. This is primar-

ily the consequence of the recent advent of deep neu-

ral networks, which can learn face image representa-

tions that are largely invariant to nuisance factors such

as illumination and pose changes. However, since

the first computer assisted face recognition system of

Kanade (Kanade, 1977) half a century ago, there has

always been interest in 3D face recognition as the ul-

timate target technology, that has the capacity to dis-

entangle face skin texture from the 3D shape of this

intrinsically 3D object, and use these two sources of

biometric information in the most productive way.

The interest in 3D face recognition is evident not

only from the continuing research on this topic in the

literature (Kittler et al., 2005), but also from the ad-

vances in the development of 3D capture systems. 3D

capable cameras based on binocular stereo vision and

time of flight (ToF) technology are becoming more

and more affordable. For example, Apple have been

shipping user-facing depth cameras in their consumer

mobile phones for a number of years now, and Sony

have started developing next-generation 3D sensors

with ToF technology. Clearly, the quality of images

captured by such user devices is not comparable to the

output of high-resolution 3D scanners and decreases

rapidly with increasing distance, so that the working

distance is typically just a few meters. However, if

the lighting conditions are difficult, and if the subject

is unconstrained in terms of facial expression and oc-

clusions, then the recognition can be more effective

than using purely 2D RGB images. Moreover, 3D

sensing is also more robust to spoofing attacks.

Danner, M., Weber, T., Huber, P., Awais, M., Raetsch, M. and Kittler, J.

Evolutional Normal Maps: 3D Face Representations for 2D-3D Face Recognition, Face Modelling and Data Augmentation.

DOI: 10.5220/0010912000003124

In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 5: VISAPP, pages

267-274

ISBN: 978-989-758-555-5; ISSN: 2184-4321

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

267

Our own interest in 3D face recognition is from

the point of view of 3D assisted 2D face recogni-

tion. Sinha et al.(Sinha et al., 2006) showed that both

photo-metric and shape cues are equally used by hu-

mans to recognise faces. The motivation for the 3D

assisted 2D face recognition approach is the disentan-

glement of shape and texture, achieved by 3D face

model fitting. The fitted 3D face should offer a bet-

ter control and aggregation of the different sources of

biometric information (shape and texture), as well as

suppression of the effect of illumination, expression

and pose. The 3D assisted 2D face recognition ap-

proach can also benefit from the availability of large

2D face databases, which are essential for effective

machine learning.

In comparison with 2D, the progress in 3D face

recognition has been hampered not only by expensive

sensor hardware and lack of data for training, but also

by the incongruence of 3D face representations in the

form of 3D meshes with data structures enabling ef-

ficient processing by convolutional neural networks.

However, this problem has recently been overcome

by means of graph neural networks (Scarselli et al.,

2009), and 3D face image remapping onto a 2D mage

structure such as isomap or Laplacian map (Feng

et al., 2018; Kittler et al., 2018). The additional prob-

lem of errors caused by reconstructing a 3D from its

2D projection has also been recently mitigated (Dan-

ner et al., 2019). Thus we are reaching the point when

the vision of 3D assisted 2D face recognition is be-

coming realistic.

This paper is concerned with 3D face recognition

in the context of 3D face biometrics per se, or as the

ultimate step in 3D assisted 2D face recognition. Our

approach involves mapping a 3D face mesh into 2D

for CNN based matching. We confine our interest to

the 3D shape information only, and investigate, how

the face shape should be represented and in what form

it should be provided to the neural network. We shall

explore a number of alternatives to the raw 3D mea-

surement information, and propose a novel represen-

tation, called evolutional normal map, which is shown

to be very effective from the 3D face shape recog-

nition point of view. We compare it with a number

of existing representation methods, and demonstrate

on several 3D databases, that it delivers impressive

recognition accuracy.

The rest of the paper is organised as follows: Sec-

tion 2 reviews the related work and recent approaches

to 3D face representations and 3D assisted 2D face

recognition. Section 3 discusses normal vector maps

as an alternative to raw 3D measurement informa-

tion and introduces our proposed Evolutional Normal

Maps. Section 4 presents the results of an extensive

evaluation of the proposed system and its compari-

son with the state of the art methods. We conduct

experiments with Bosphorus 3D Face (Aly

¨

uz et al.,

2008), CASIA 3D Face Database (Zhong et al., 2008)

and JNU-3D dataset (Koppen et al., 2018) datasets to

benchmark the 3D representations compared. Con-

clusions and future work are presented in section 5.

2 RELATED WORK

3D Face Recognition. A conventional 3D Face

recognition approach comprises methods like 3D face

landmarking, 3D face registration and facial feature

extraction. The 3D face landmarking locates the geo-

metric positions of reference points for the face. The

3D face registration aims to register 3D face scans in

a coordinate system so that the adjustment of facial

features can be carried out in a consistent manner.

Extracting facial features means creating a distinctive

face representation that should fully describe each 3D

face scan. Kakadiaris et al. (Kakadiaris et al., 2017)

already shows the effectiveness of a 3D-2D frame-

work for face recognition. The main advantage is the

more practical use than 3D-3D and higher accuracy

for 2D-2D face recognition.

Face Reconstruction. Dou et al.proposed a way for

Monocular 3D facial shape reconstruction from 2D

facial images (Dou et al., 2017) in a more effective

way than other approaches. Huber et al. (Huber et al.,

2016) also showed the power of 3D Morphable Face

Models in computer vision and the widely utilisable

reconstruction of a 3D face from a single 2D image.

These 3D Morphable Face Models can be used for

pose estimation, analysis and recognition and also for

facial landmark detection and tracking.

Depth Images and Point Clouds. Depth images,

depth point clouds, or 3D meshes have emerged as

an important tool and principle in biometrics and

face recognition research. According to Kakadi-

aris et al. (Kakadiaris et al., 2017) the existing frame-

works for face recognition vary across approaches

(e.g. model-based, data-driven and perceptual) or fa-

cial data domains (e.g. images, point clouds, depth

maps). The main benefits for the use of 3D facial

scans such as depth maps are the insensitivity to ambi-

ent influences and the colour of the skin which could

lead to missing details in 2D face images.

ToF- and Stereo-cameras. Structured-light RGB-

D and Time-of-Flight (ToF) cameras are used in 3D

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications

268

perception tasks at close distances. While RGB-D

cameras benefit from high resolution and frame rate,

ToF-cameras have the capability to operate outdoors

and perceive details (Aleny

`

a et al., 2014).

Local Descriptors. For 3D face recognition tasks,

local descriptors have been established due to their

robustness in variations of illumination and facial ex-

pressions and achieved remarkable recognition rates.

Much work is inspired by local descriptors like

Radon transform (Jafari-Khouzani and Soltanian-

Zadeh, 2005), Textons (Lazebnik et al., 2005) and

Local Binary Patterns (LBP) (Ahonen et al., 2004).

Thanks to the high performance and computation-

ally low complexity and its flexibility to adapt, LBP

has a huge number of improved and extended succes-

sors (Pietik

¨

ainen et al., 2011; Huang et al., 2006; Oua-

mane et al., 2017). High-order local pattern descrip-

tors, like local derivative pattern on normal maps cap-

ture more detailed information by encoding various

distinctive spatial relationships (Soltanpour and Wu,

2017; Soltanpour and Wu, 2019; Zhang et al., 2010).

LBPs and other local descriptors will not be discussed

in this work due to the huge number of variations and

to the fact that this is a lossy conversion of 3D shape

information. Further use in face modeling based on

descriptors is therefore difficult. However, they can

easily be adapted on the evolutional normal maps to

further improve face recognition performance.

Normal-vector Map Representations. 3D polygo-

nal surfaces are represented through their correspond-

ing normal map, a bidimensional array which stores

mesh normal vectors as the pixel’s RGB components

of a colour image. Abate et al.calculate the differ-

ence of two normal maps (Abate et al., 2005) and

achieved remarkable results in face recognition based

on these difference maps. In 3D face recognition, nor-

mal maps having x,y,z mapped to RGB for visualisa-

tion is a common task (Kakadiaris et al., 2006). Gi-

lani et al. (Gilani and Mian, 2018; Gilani et al., 2018)

used depth images and normal vectors with azimuth

and elevation in the form of RGB images for training

a deep convolutional neural network.

3 METHODOLOGY

The values of the normal map matrix are determined

by a partial binary operation that maps the x and y co-

ordinates into unit normal vectors. The horizontal and

vertical coordinates define the resolution of the nor-

mal map and the range of this operation. In addition,

the normal map matrix elements can be represented

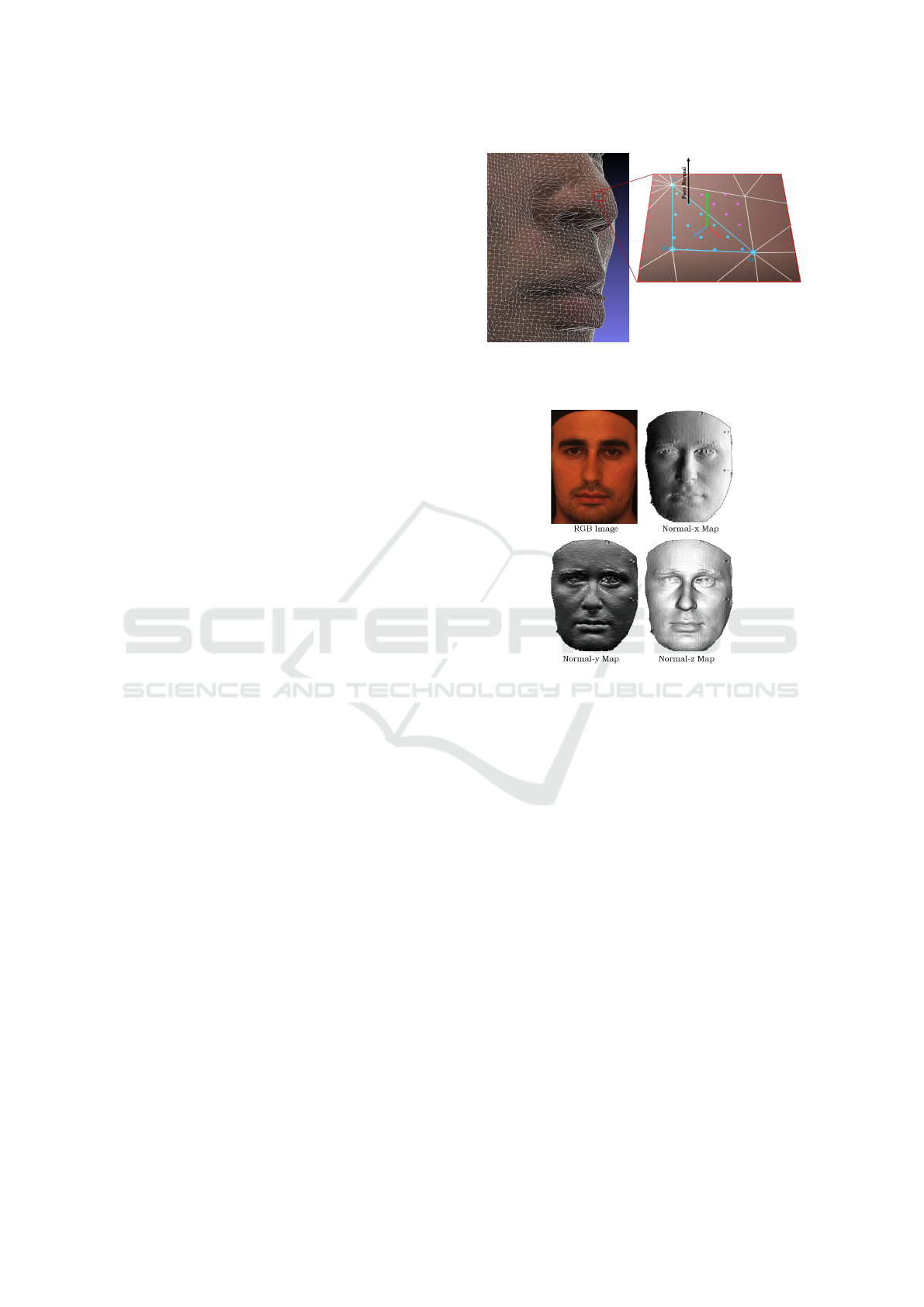

Figure 1: Each triangle is projected into the x,y-plane. the

enclosed pixels are determined and the normal vector com-

ponents are assigned to these pixels.

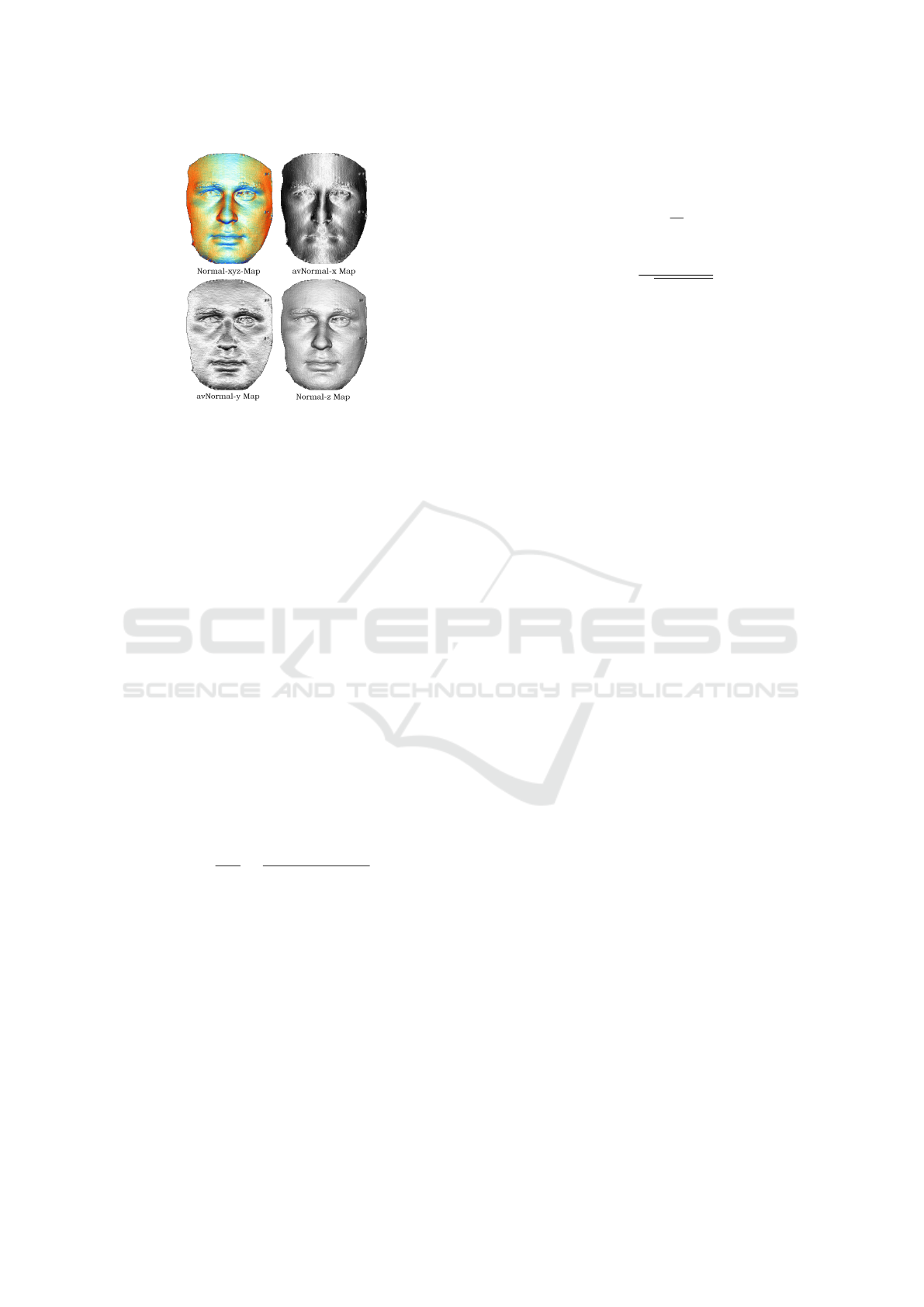

Figure 2: Portrait picture and normal maps created by con-

ventional methods on Bosphorus 3D Face Database.

by other 2D parameterisations, such as the spherical,

angle-based domain. The normal vectors can be com-

puted from the positions of the 3D points and their

neighbours while the surface is in a point cloud, a 3D

mesh or a range image representation (Daoudi et al.,

2013). The representation of the 3D face shape on

a uniform square matrix that is needed for convolu-

tional neural network, leads to the fact that all normal

maps have the same resolution.

The surface normals contain more detailed and

robust information compared to depth image for 3D

data (Li et al., 2014). Our objective is to explore dis-

criminative facial 3D representations in order to apply

them to 3D facial machine vision tasks. We propose

a refined feature extraction using the surface normals

that provides richer and distinct information, as com-

pared to the other 2D mesh representations.

3.1 Surface Normal Map

This work is inspired by recent algorithms (Mo-

hammadzade and Hatzinakos, 2013; Li et al., 2014;

Evolutional Normal Maps: 3D Face Representations for 2D-3D Face Recognition, Face Modelling and Data Augmentation

269

Figure 3: Evolutional normal maps on Bosphorus 3D Face

Database.

Emambakhsh and Evans, 2017) in which surface

normals have been applied for 3D face recognition.

These works follow normal surface estimation meth-

ods presented by Klasing et al. (Klasing et al., 2009).

Although the use of surface normals is a good prac-

tice for point clouds, it is not necessary in popular 3D

Face Databases and registered scan scenarios. A 3D

mesh consists of vertices and faces which can be used

to calculate the normal vectors, instead of estimating.

At first, we have to ensure the mesh is registered

to the x,y-plane and define the space where the ver-

tices are located. The vertices x,y-coordinates will

be mapped to the resulting normal map determining a

transformation. This transformation assigns the coor-

dinates from the domain of the mesh to the domain of

the normal matrix.

Then, for each polygon in the mesh, we calculate

triangles, if necessary. Each triangle consists of three

vertices points P = {p

i

, p

j

, p

k

} where p

n

∈ R

3

. Two

vectors are determined with v

0

= p

j

− p

i

and v

1

=

p

k

− p

i

. Then, a unit normal vector ˆn can be calculated

by

ˆn =

n

||n||

=

v

0

× v

1

||v

0

|| ||v

1

|| | sin θ|

(1)

where ˆn = [ ˆn

x

, ˆn

y

, ˆn

z

]

T

.

Furthermore, each triangle is projected into the

x,y-plane and the enclosed pixels are determined by

giving consideration to the targeted image resolution.

The process in described in Figure 1.

Azimuth and Elevation. Given the surface unit

normal vector ˆn = ( ˆn

x

, ˆn

y

, ˆn

z

) at a point, the azimuth

angle α is defined as the angle between the positive x-

axis and the projection of n to the x-y plane. The ele-

vation angle φ is the angle between n and the vector’s

orthogonal projection onto the xy plane. The eleva-

tion angle is positive when going toward the positive

z-axis from the xy plane.

α = tan

−1

ˆn

z

ˆn

x

(2)

φ = tan

−1

ˆn

y

q

ˆn

2

x

+ ˆn

2

z

(3)

For each pixel the normal vector component is

stored in a 2D-matrix, resulting in five images for x, y,

z, azimuth and elevation values. Optionally, the mini-

mum and maximum of each matrix can be determined

and the histogram values can be stretched to the full

range.

Pixels in these matrices with no assigned values

are considered as background and the background

value is assigned to them, which is 0 by default. Fi-

nally, the matrices N

x

, N

y

, N

z

are surface normal maps

having respectively x, y and z dimensions.

3.2 Evolutional Normal Maps

We now have several methods for calculation and es-

timation of normals and the corresponding algorithms

for depth map and multiple normal maps. The ques-

tion is, can the normal maps and representations based

on them still be improved without loss of informa-

tion? Applying local descriptors lead to increased his-

togram information which is beneficial to face recog-

nition but 3D shape information is lost. Indeed, based

on the fact that increasing histogram information is

helpful, the minimum and maximum values of the

normal component matrices are used to stretch them

to the full range. Additionally, on the matrices with

normal component x and y, the absolute value is

used which doubles the gradients from bright to dark.

These techniques are loss-free and can be facilely re-

versed.

Another method to manipulate histogram values

is used in inverting the values of the matrix to swap

bright and dark grey tones. In the experimental eval-

uation, diverse operations are applied to prove this as

a valuable operation on normal-maps.

It should be emphasised that the methods men-

tioned only represent part of the possibilities with

improving normal maps. That is why we call

the set of 3D representations ‘evolutional’ normal

maps because there are lots of parameters to ad-

just the outcome and they can be flexibly adapted

to many facial 3D machine vision tasks: (a) calcu-

late N

x

, N

y

, N

z

, N

a

, N

e

, Depth map or any combination

of these. (b) Invert greyscale of the normal map.

(c) Darken or lighten normal maps. (d) Calculate real

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications

270

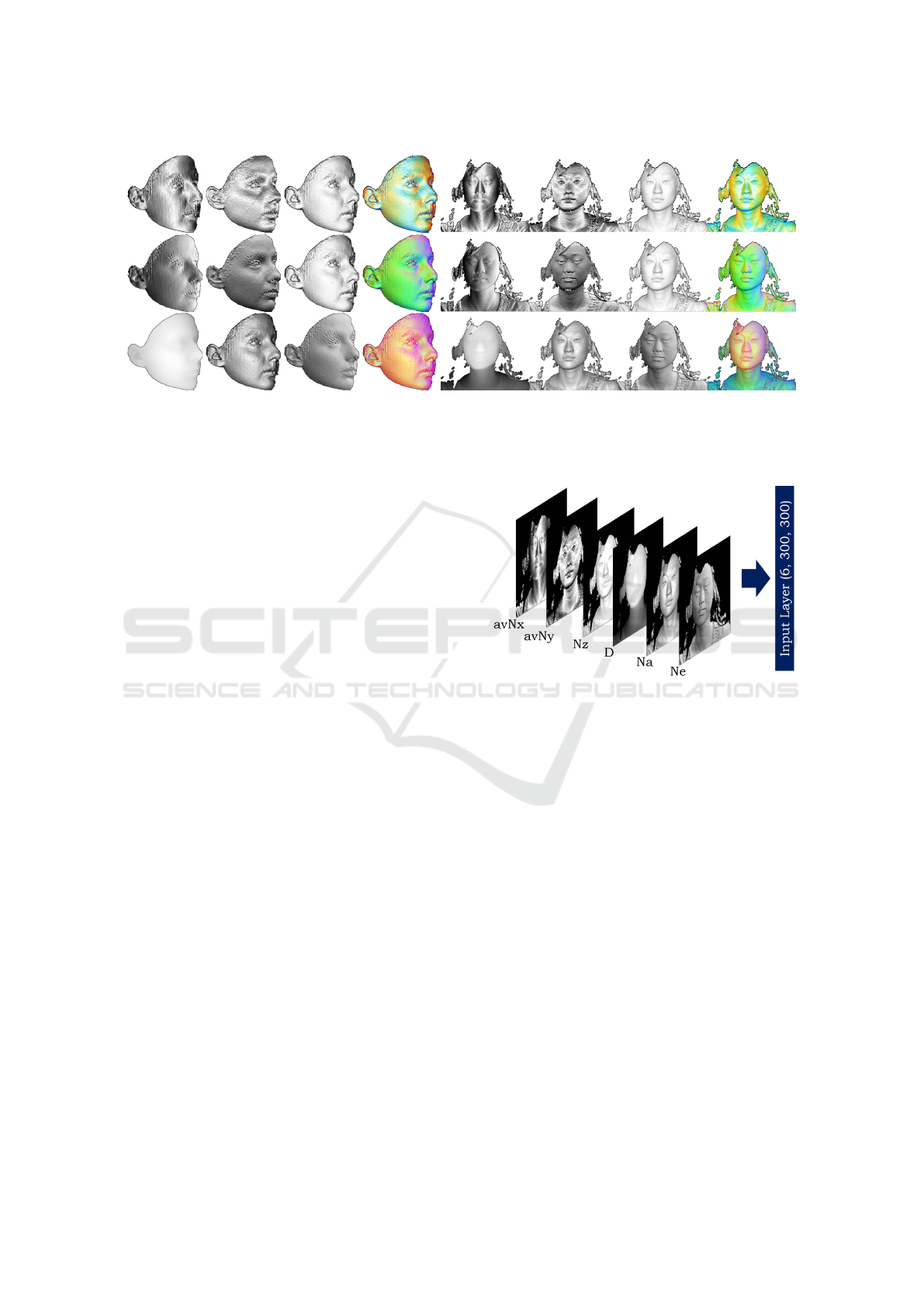

Figure 4: Example ENM set of images from Bosphorus 3D (left) and CASIA 3D (right): 1

st

row from left to right: Absolute-

Values-Normal-X-map, Absolute-Values-Normal-Y-map, Normal-Z-map, Combination of avN

x

, avN

y

, N

z

; 2

nd

row from left

to right: Normal-X-map, Normal-Y-map, Normal-Z-map, Combination of N

x

, N

y

, N

z

; 3

rd

row from left to right: depth map,

Azimuth-Normal-map, Elevation-Normal-map, Combination of depth map, N

a

, N

e

.

or absolute normal values. (e) Adjust image resolu-

tion. (f) Horizontal and vertical rotation on the mesh

for data augmentation. (g) Accessible for local de-

scribers.

4 EXPERIMENTAL EVALUATION

The datasets used in this work are CASIA, Bosphorus

and JNU-3D. The CASIA 3D FaceV1 Database con-

tains 4,674 scans of 123 subjects, where each subject

is captured with more than 35 different expressions

and poses. Bosphorus 3D Face Database comprises

105 identities and 4,666 scans and is popular because

of its rich repertoire of expressions. Beside 3D face

recognition, this database is often used for expression

recognition and facial action unit detection. The JNU-

3D data set consists of 774 3D faces and is used for

augmenting the 3D database and for accuracy tests.

Evaluation Protocol. We evaluate the depth image

and the normal maps on face recognition accuracy.

For the benchmark training the normal map size is

fixed to 300x300 since previous experiments showed

this as an efficient resolution on recognition rate and

training duration.

In total, we evaluate the accuracy with 16 different

experiments shown in fig. 4 and fig. 5 . Therefore, as a

run of the experiment we defined the basis, the ”pure”

depth map D. Followed up by the 8 different nor-

mal maps in x-dimension N

x

and avN

x

, y-dimension

N

y

and avN

y

, z-dimension N

z

and avN

z

, azimuth an-

gle N

a

and the elevation angle N

e

. Accordingly the

dimension of the network input layer is (1, 300, 300).

Figure 5: Multiple combinations of input images for the

face recogniser from single channel grey scale images up to

six channel D + avN

xy

+ N

zae

In the second run we evaluate the combination of

avN

x

, avN

y

, avN

z

, called avN

xyz

, the combination of

N

x

, N

y

, N

z

, called N

xyz

and the combination of D, N

a

, N

e

, called D + N

ae

also shown in the fig. 4. Accord-

ingly the dimension of the network input layer is (3,

300, 300).

The final run includes the combination of the

depth map and the five normal maps, called D +

N

xyzae

. Accordingly the dimension of the network

input layer is (6, 300, 300). This input for the face

recognition network is shown in fig. 5.

Each representation is trained on our face recog-

nition network for 60 epochs on Bosphorus 3D

Database, CASIA 3D and JNU-3D, respectively. On

Bosphorus, all images are used, including facial ex-

pression and partial occlusion. The first neutral sam-

ple of each subject is used as the gallery and the re-

maining scans as the probe (neutral vs. all).

Since we want to evaluate the pure 3D represen-

tations we neither apply any data augmentation meth-

ods for the training data nor use local binary pattern

Evolutional Normal Maps: 3D Face Representations for 2D-3D Face Recognition, Face Modelling and Data Augmentation

271

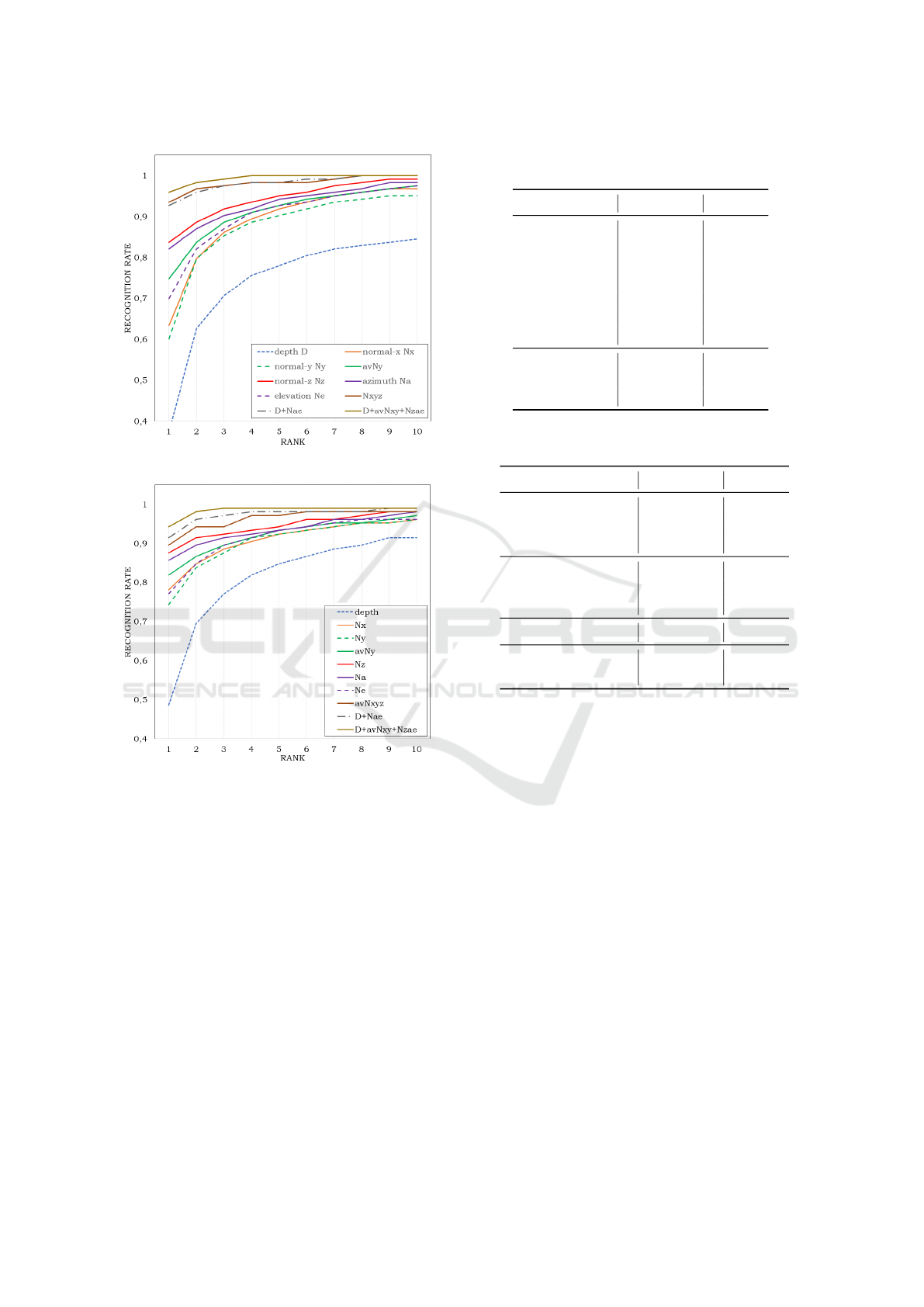

Figure 6: Recognition rate on CASIA 3D data set.

Figure 7: Recognition rate on Bosphorus 3D Face.

layers. Actually, the experiments show that with com-

bining normal maps, we are able to achieve recogni-

tion rates equal to or better than previous work with

lots of augmentation and adapted layers.

Secondary evaluation, using CASIA 3D Database,

is used under same conditions. The first scan of each

subject is considered as gallery and finally the JNU-

3D data set is used for accuracy tests on small net-

works.

Results. To illustrate recognition efficiency, the Cu-

mulative Match Characteristics (CMC) curves on CA-

SIA 3D are presented in fig. 6. Results from table 1

show our ENM achieves the best results. High detec-

tion rates in CASIA and Bosphorus are already scored

without data augmentation or the use of local binary

patterns or similar descriptors. Table 2 presents the

Table 1: Rank 1 recognition rate [%] in results on Bospho-

rus and CASIA 3D.

Bosphorus CASIA

depth map 48.6 37.4

N

x

78.1 63.4

N

y

74.3 60.2

N

z

87.6 83.7

N

a

85.7 82.1

N

e

77.1 69.9

D + N

ae

91.4 92.7

ENM avN

y

81.9 74.8

ENM avN

xyz

89.5 93.5

ENM 6-layer 94.3 95.9

Table 2: Rank 1 recognition rate [%] results on Bosphorus

and CASIA 3D with augmented data sets.

Bosphorus CASIA

GoogleNet

1

RGB 63.4 85.9

Resnet152

2

RGB 7.1 52.9

VGG-Face

3

RGB 96.4 94.1

GoogleNet 3D 26.8 50.8

Resnet152 3D 3.8 25.3

VGG-Face 3D 48.1 72.0

D + N

ae

FR3DNet

4

100.0 99.7

ENM avN

xyz

100.0 97.3

ENM 6-layer 100.0 99.8

performance of the proposed method in recognising

the 1

st

rank of the 3D faces by training with aug-

mented data. It achieves the best performance for CA-

SIA 3D faces and measures up to FR3DNet on Bosh-

porus 3D with 100% rank 1 recognition rate. The su-

periority of proposed ENM is also reflected in fig. 7.

5 CONCLUSIONS AND FUTURE

WORK

We addressed the problem of 3D face shape represen-

tation with a focus on 2D normal maps. These maps

are various functions of the 3D surface normals, that

are defined on a 3D face mesh and mapped systemat-

ically onto a 2D image. We showed that these normal

maps can be computed very efficiently from the 3D

shape mesh.

An extensive comparative evaluation of multiple

variants of these normal maps and their combinations

has been carried out using face recognition accuracy

as a measure of their effectiveness. Motivated by the

results of the comparative study, we proposed a 3D

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications

272

face shape descriptor, referred to as Evolutional Nor-

mal Maps, that assimilates, modifies and optimises a

subset composed of six of these normal map represen-

tations. The proposed descriptor is extensively eval-

uated on three benchmarking 3D face datasets with

very promising results. The descriptor is computa-

tionally efficient and most importantly, it outperforms

the state of the art methods in 3D face recognition.

The proposed Evolutional Normal Maps have

many potential applications, apart from 3D face

recognition. For instance, can can be used for data

augmentation and in generative adversarial networks

to render and develop synthetic 3D data. The future

plans also include their use for 3D face reconstruc-

tion and 3D face analysis for emotion, pose and age

estimation.

ACKNOWLEDGEMENTS

Part of the research in this paper uses the CASIA-3D

FaceV1 collected by the Chinese Academy of Sci-

ences Institute of Automation (CASIA).

This work is partially supported by a grant of the

BMWi ZIM program, no. ZF4029424HB9.

REFERENCES

Abate, A. F., Nappi, M., Ricciardi, S., and Sabatino, G.

(2005). Fast 3d face recognition based on normal

map. In Proceedings of the 2005 International Con-

ference on Image Processing, ICIP 2005, Genoa,

Italy, September 11-14, 2005, pages 946–949. IEEE.

Ahonen, T., Hadid, A., and Pietik

¨

ainen, M. (2004). Face

recognition with local binary patterns. In Pajdla,

T. and Matas, J., editors, Computer Vision - ECCV

2004, 8th European Conference on Computer Vision,

Prague, Czech Republic, May 11-14, 2004. Proceed-

ings, Part I, volume 3021 of Lecture Notes in Com-

puter Science, pages 469–481. Springer.

Aleny

`

a, G., Foix, S., and Torras, C. (2014). Using tof and

RGBD cameras for 3d robot perception and manip-

ulation in human environments. Intelligent Service

Robotics, 7(4):211–220.

Aly

¨

uz, N., G

¨

okberk, B., Dibeklioglu, H., Savran, A., Salah,

A. A., Akarun, L., and Sankur, B. (2008). 3d face

recognition benchmarks on the bosphorus database

with focus on facial expressions. In Schouten, B.

A. M., Juul, N. C., Drygajlo, A., and Tistarelli, M., ed-

itors, Biometrics and Identity Management, First Eu-

ropean Workshop, BIOID 2008, Roskilde, Denmark,

May 7-9, 2008. Revised Selected Papers, volume 5372

of Lecture Notes in Computer Science, pages 57–66.

Springer.

Danner, M., Raetsch, M., Huber, P., Awais, M., Feng, Z.,

and Kittler, J. (2019). Texture-based 3D face recog-

nition using deep neural networks for unconstrained

human-machine interaction. In Proceedings of the

15th International Joint Conference on Computer Vi-

sion, Imaging and Computer Graphics Theory and

Applications VISAPP 2020. SCITEPRESS.

Daoudi, M., Srivastava, A., and Veltkamp, R. (2013). 3D

Face Modeling, Analysis and Recognition. Wiley.

Dou, P., Shah, S. K., and Kakadiaris, I. A. (2017). End-to-

end 3d face reconstruction with deep neural networks.

In 2017 IEEE Conference on Computer Vision and

Pattern Recognition, CVPR 2017, Honolulu, HI, USA,

July 21-26, 2017, pages 1503–1512. IEEE Computer

Society.

Emambakhsh, M. and Evans, A. N. (2017). Nasal patches

and curves for expression-robust 3d face recognition.

IEEE Trans. Pattern Anal. Mach. Intell., 39(5):995–

1007.

Feng, Z.-H., Huber, P., Kittler, J., Hancock, P., Wu, X.-J.,

Zhao, Q., Koppen, P., and R

¨

atsch, M. (2018). Evalua-

tion of dense 3D reconstruction from 2D face images

in the wild. In 2018 13th IEEE International Confer-

ence on Automatic Face & Gesture Recognition (FG

2018), pages 780–786. IEEE.

Gilani, S. Z. and Mian, A. (2018). Learning from mil-

lions of 3d scans for large-scale 3d face recogni-

tion. In 2018 IEEE Conference on Computer Vision

and Pattern Recognition, CVPR 2018, Salt Lake City,

UT, USA, June 18-22, 2018, pages 1896–1905. IEEE

Computer Society.

Gilani, S. Z., Mian, A. S., Shafait, F., and Reid, I. (2018).

Dense 3d face correspondence. IEEE Trans. Pattern

Anal. Mach. Intell., 40(7):1584–1598.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep resid-

ual learning for image recognition. In 2016 IEEE Con-

ference on Computer Vision and Pattern Recognition,

CVPR 2016, Las Vegas, NV, USA, June 27-30, 2016,

pages 770–778. IEEE Computer Society.

Huang, Y., Wang, Y., and Tan, T. (2006). Combining

statistics of geometrical and correlative features for 3d

face recognition. In Chantler, M. J., Fisher, R. B.,

and Trucco, E., editors, Proceedings of the British

Machine Vision Conference 2006, Edinburgh, UK,

September 4-7, 2006, pages 879–888. British Machine

Vision Association.

Huber, P., Hu, G., Tena, J. R., Mortazavian, P., Koppen,

W. P., Christmas, W. J., R

¨

atsch, M., and Kittler, J.

(2016). A multiresolution 3D morphable face model

and fitting framework. In Magnenat-Thalmann, N.,

Richard, P., Linsen, L., Telea, A., Battiato, S., Imai,

F. H., and Braz, J., editors, Proceedings of the 11th

Joint Conference on Computer Vision, Imaging and

Computer Graphics Theory and Applications (VISI-

GRAPP 2016) - Volume 4: VISAPP, Rome, Italy,

February 27-29, 2016, pages 79–86. SciTePress.

Jafari-Khouzani, K. and Soltanian-Zadeh, H. (2005). Radon

transform orientation estimation for rotation invariant

texture analysis. IEEE Trans. Pattern Anal. Mach. In-

tell., 27(6):1004–1008.

Kakadiaris, I. A., Passalis, G., Toderici, G., Murtuza, M. N.,

and Theoharis, T. (2006). 3d face recognition. In

Evolutional Normal Maps: 3D Face Representations for 2D-3D Face Recognition, Face Modelling and Data Augmentation

273

Chantler, M. J., Fisher, R. B., and Trucco, E., edi-

tors, Proceedings of the British Machine Vision Con-

ference 2006, Edinburgh, UK, September 4-7, 2006,

pages 869–878. British Machine Vision Association.

Kakadiaris, I. A., Toderici, G., Evangelopoulos, G., Pas-

salis, G., Chu, D., Zhao, X., Shah, S. K., and Theo-

haris, T. (2017). 3d-2d face recognition with pose and

illumination normalization. Comput. Vis. Image Un-

derst., 154:137–151.

Kanade, T. (1977). Computer recognition of human faces.

Interdisciplinary Systems Research, 47.

Kittler, J., Hilton, A., Hamouz, M., and Illingworth, J.

(2005). 3d assisted face recognition: A survey of

3d imaging, modelling and recognition approachest.

In IEEE Conference on Computer Vision and Pattern

Recognition, CVPR Workshops 2005, San Diego, CA,

USA, 21-23 September, 2005, page 114. IEEE Com-

puter Society.

Kittler, J., Koppen, P., Kopp, P., Huber, P., and R

¨

atsch, M.

(2018). Conformal mapping of a 3D face representa-

tion onto a 2D image for CNN based face recognition.

In 2018 International Conference on Biometrics, ICB

2018, Gold Coast, Australia, February 20-23, 2018,

pages 124–131. IEEE.

Klasing, K., Althoff, D., Wollherr, D., and Buss, M. (2009).

Comparison of surface normal estimation methods for

range sensing applications. In 2009 IEEE Interna-

tional Conference on Robotics and Automation, ICRA

2009, Kobe, Japan, May 12-17, 2009, pages 3206–

3211. IEEE.

Koppen, W. P., Feng, Z., Kittler, J., Awais, M., Christmas,

W. J., Wu, X., and Yin, H. (2018). Gaussian mixture

3d morphable face model. Pattern Recognit., 74:617–

628.

Lazebnik, S., Schmid, C., and Ponce, J. (2005). A sparse

texture representation using local affine regions. IEEE

Trans. Pattern Anal. Mach. Intell., 27(8):1265–1278.

Li, H., Huang, D., Morvan, J., Chen, L., and Wang,

Y. (2014). Expression-robust 3d face recognition

via weighted sparse representation of multi-scale and

multi-component local normal patterns. Neurocom-

puting, 133:179–193.

Mohammadzade, H. and Hatzinakos, D. (2013). Iterative

closest normal point for 3d face recognition. IEEE

Trans. Pattern Anal. Mach. Intell., 35(2):381–397.

Ouamane, A., Boutellaa, E., Bengherabi, M., Taleb-Ahmed,

A., and Hadid, A. (2017). A novel statistical and mul-

tiscale local binary feature for 2d and 3d face verifica-

tion. Comput. Electr. Eng., 62:68–80.

Parkhi, O. M., Vedaldi, A., and Zisserman, A. (2015).

Deep face recognition. In Xie, X., Jones, M. W., and

Tam, G. K. L., editors, Proceedings of the British Ma-

chine Vision Conference 2015, BMVC 2015, Swansea,

UK, September 7-10, 2015, pages 41.1–41.12. BMVA

Press.

Pietik

¨

ainen, M., Hadid, A., Zhao, G., and Ahonen, T.

(2011). Computer Vision Using Local Binary Pat-

terns, volume 40 of Computational Imaging and Vi-

sion. Springer.

Scarselli, F., Gori, M., Tsoi, A. C., Hagenbuchner, M., and

Monfardini, G. (2009). The graph neural network

model. IEEE Trans. Neural Networks, 20(1):61–80.

Sinha, P., Balas, B. J., Ostrovsky, Y., and Russell, R.

(2006). Face recognition by humans: Nineteen results

all computer vision researchers should know about.

Proceedings of the IEEE, 94(11):1948–1962.

Soltanpour, S. and Wu, Q. J. (2019). Weighted ex-

treme sparse classifier and local derivative pattern for

3d face recognition. IEEE Trans. Image Process.,

28(6):3020–3033.

Soltanpour, S. and Wu, Q. M. J. (2017). High-order local

normal derivative pattern (LNDP) for 3d face recogni-

tion. In 2017 IEEE International Conference on Im-

age Processing, ICIP 2017, Beijing, China, September

17-20, 2017, pages 2811–2815. IEEE.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S. E.,

Anguelov, D., Erhan, D., Vanhoucke, V., and Rabi-

novich, A. (2015). Going deeper with convolutions.

In IEEE Conference on Computer Vision and Pattern

Recognition, CVPR 2015, Boston, MA, USA, June 7-

12, 2015, pages 1–9. IEEE Computer Society.

Zhang, B., Gao, Y., Zhao, S., and Liu, J. (2010). Local

derivative pattern versus local binary pattern: Face

recognition with high-order local pattern descriptor.

IEEE Trans. Image Process., 19(2):533–544.

Zhong, C., Sun, Z., and Tan, T. (2008). Learning efficient

codes for 3d face recognition. In Proceedings of the

International Conference on Image Processing, ICIP

2008, October 12-15, 2008, San Diego, California,

USA, pages 1928–1931. IEEE.

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications

274