Enriching the Visit to an Historical Botanic Garden with Augmented Reality

Rafael Torresᵃ, Stefan Postolacheᵇ, Maria Beatriz Carmoᶜ, Ana Paula Cláudioᵈ, Ana Paula Afonsoᵉ, António Ferreiraᶠ and Dulce Domingosᵍ

LASIGE, Departamento de Informática, Faculdade de Ciências, Universidade de Lisboa, Lisboa, Portugal

Keywords:

Augmented Reality, Mobile Applications, Botanical Gardens.

Abstract:

The use of Augmented Reality (AR) in mobile guide applications for natural parks and gardens enables compelling and memorable experiences that enrich visits. However, the creation of these experiences still faces several challenges concerning technology and content production. This paper presents guidelines for the development of AR experiences in mobile applications that support visits to gardens or natural parks, providing a list of technological and multimedia content elements that should be considered. We applied these guidelines in the development of a mobile application for a Botanical Garden, implemented for Android and iOS. We conducted a study with volunteers during visits to the garden, and the results revealed high levels of perceived app usability and strong agreement about app features, which indicates that the app was evaluated positively.

1 INTRODUCTION

The JBT (Jardim Botânico Tropical) Garden is located in a monumental area of our city, Lisbon, next to the Mosteiro dos Jerónimos and occupies seven hectares. The garden provides a rich plant collection consisting mainly of species from tropical and subtropical regions. Besides the remarkable natural heritage and botanic vocation of this Garden, visitors can also explore the historical buildings and statuary from the 17th to the 20th centuries, and observe several bird species and a diversity of bryophytes and lichens. The JBT organizes scientific, educational, cultural and leisure activities for a varied audience, such as tourists, families with children, students, and botanical experts. These activities are fundamental to disseminate specialized knowledge on tropical science and to highlight and preserve heritage and collective memory concerning a remarkable period in the History of our country.

Nowadays, it is important to complement the existing guided tours and explore the available technological developments to offer visitors new interactive and engaging experiences. The use of Augmented Reality (AR) in mobile guides to enrich visits, namely by adding information to the real world, has been applied in several projects and domains (Santos et al., 2020; Andrade and Dias, 2020; Siang et al., 2019; Pombo and Marques, 2019). Although AR mobile applications are now widely used, there are still several challenges related to technology and multimedia content creation, for instance, how to select the appropriate tracking method according to the characteristics of the environment and of visitors’ smartphones, and how to decide which experiences should be created and with which Points of Interest (PoIs) they should be associated.

ᵃ https://orcid.org/0000-0001-6886-8541

ᵇ https://orcid.org/0000-0001-5244-5704

ᶜ https://orcid.org/0000-0002-4768-9517

ᵈ https://orcid.org/0000-0002-4594-8087

ᵉ https://orcid.org/0000-0002-0687-5540

ᶠ https://orcid.org/0000-0002-7428-2421

ᵍ https://orcid.org/0000-0002-5829-2742

Our main contribution is to organize the challenges and requirements associated with the development of AR experiences in mobile applications (apps) that support visits to gardens or natural parks and to apply them in an actual app, the JBT app, implemented for Android and iOS.

2 BACKGROUND AND RELATED WORK

AR makes it possible to interact with the surrounding real world, enriching it with a virtual world in which real and virtual objects are combined (Feiner et al., 1993). Within this context, it is mandatory to track the user’s viewpoint to superimpose virtual contents over real environment views (Bekele et al., 2018).

Torres, R., Postolache, S., Carmo, M., Cláudio, A., Afonso, A., Ferreira, A. and Domingos, D. Enriching the Visit to an Historical Botanic Garden with Augmented Reality. DOI: 10.5220/0010897900003124. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 1: GRAPP, pages 91-102. ISBN: 978-989-758-555-5; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

AR tracking techniques are used to determine the current pose (position and orientation) of users in the environment, enabling the alignment of virtual content with the physical objects. Tracking techniques have been categorized as sensor-based, vision-based, and hybrid (Zhou et al., 2008; Rabbi and Ullah, 2013; Kim et al., 2017; Siriwardhana et al., 2021).

Sensor-based tracking techniques use sensors that are placed in the environment or integrated in the mobile device. Different kinds of sensors can be used, such as optical, acoustic, mechanical, magnetic, inertial, or radio (RFID, Infrared, Beacons, NFC, Wi-Fi, and GPS) (Jain et al., 2013). Inertial-based tracking uses accelerometers and gyroscopes to estimate the user pose based on previously estimated or known poses. The accelerometer measures the motion to calculate the position of the user relative to some initial point. The gyroscope measures the rotation to calculate the orientation of the user relative to some initial one (Bekele et al., 2018). The compass uses magnetic sensors for orientation, identifying which cardinal point the device is pointing to. An overview of each kind of sensor-based tracking can be found, for instance, in Rabbi and Ullah (2013).
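As a rough illustration of the inertial and compass ideas described above, the following sketch integrates accelerometer and gyroscope samples to estimate a pose relative to a starting point, and maps a compass heading to a cardinal point. It is a simplified one-axis model written for this text: the function names, the sample format and the eight-point compass are assumptions, and a real device would also need gravity compensation and drift correction.

```python
def integrate_inertial(samples, dt, position=0.0, velocity=0.0, heading_deg=0.0):
    """Dead reckoning along a single axis (illustrative only).

    samples: iterable of (acceleration in m/s^2, angular rate in deg/s).
    dt: time between samples, in seconds.
    Returns (position in m, heading in degrees) relative to the initial pose.
    """
    for accel, gyro_rate in samples:
        velocity += accel * dt                                  # acceleration -> velocity
        position += velocity * dt                               # velocity -> position
        heading_deg = (heading_deg + gyro_rate * dt) % 360.0    # angular rate -> orientation
    return position, heading_deg


# Hypothetical eight-point compass rose, clockwise from north.
CARDINALS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def cardinal_point(heading_deg):
    """Map a compass heading (0 = north, clockwise) to the nearest compass point."""
    index = int((heading_deg % 360.0) / 45.0 + 0.5) % 8
    return CARDINALS[index]
```

Because each new pose is computed from the previous one, small measurement errors accumulate over time, which is one reason why inertial tracking is usually combined with other techniques.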

Vision-based tracking techniques rely on the camera and can use image processing techniques to determine the current pose. This type of tracking is further divided into marker-based and markerless (also called natural feature tracking). Early vision-based tracking was marker-based and used fiducial markers (artificial landmarks) added to the environment, such as QR codes. More recent vision-based tracking proposals follow a markerless approach, which allows natural landmarks to be used for tracking instead of artificial ones. Moreover, Simultaneous Localization and Mapping (SLAM) algorithms are used to create maps of unknown environments and, simultaneously, to localize users inside them. Virtual objects are added to the map and shown considering their position and the user location (Tan et al., 2013; Klein and Murray, 2007).

Following a different approach, image-assisted

alignment (also named Instant Tracking in (Andrade

and Dias, 2020)) transfers to users the responsibility

for the recognition and the initial alignment. In this

case, a virtual element is shown on the device screen

and the user has to align it with the corresponding real

object which is being captured by the camera of the

device.

Hybrid tracking, also called fusion tracking, combines different tracking techniques to increase tracking robustness.

The use of AR in mobile applications for gardens has been widely evaluated from the educational and the visitor experience perspectives (Huang et al., 2016; Pombo et al., 2017; Harrington et al., 2019; Pombo and Marques, 2019; Siang et al., 2019; Santos et al., 2020; Andrade and Dias, 2020; Bettelli et al., 2020; Harrington, 2020).

Bekele et al. (2018) identify areas in cultural heritage for AR mobile applications and suggest the most appropriate technology for each case, not covering the particular case of botanical gardens. Chong et al. (2021) focus on usability and accessibility challenges in using virtual reality in cultural heritage.

More aligned with our work, Santos et al. (2020) define a framework with a set of guidelines for mobile applications of natural parks. Postolache et al. (2021) systematize a set of requirements for this type of application, organizing them in four main categories, namely, objectives, contents (including their organization and presentation), enabling technology, and other non-functional requirements. While these proposals present a broader approach, we focus on the AR experiences, covering both the technology perspective and the contents perspective.

3 AUGMENTED REALITY FOR BOTANICAL GARDENS’ VISITS

Developing AR experiences for a botanical garden brings together people from different backgrounds and raises several challenges involving technological issues and content creation. After defining the objectives of AR experiences, we present our strategy for conceiving these experiences.

3.1 Objectives

The goal of introducing AR experiences is to enrich the visit, providing both entertainment and educational contents in an immersive and interactive way. In a botanical garden, flora and fauna change throughout the seasons and the garden may have collections that evolve over time. Therefore, during a visit, the visitor gets only a glimpse of the richness of the garden. With AR, digital information can be added to the image of the real world, thus allowing visitors to observe elements that might not be visible in the garden at the time of the visit.

3.2 Conceiving Experiences

To idealize and conceive the AR experiences, collaboration between the experts, who know the flora, fauna and history of the garden, and the technological team responsible for their implementation is crucial. To motivate the experts, raise ideas and awaken their imagination to the requirements of the AR experiences for the JBT app, our strategy was to show them examples of what can be done with the available technology. In the following, we explain the guidelines established to choose the technology and the guiding principles to create the contents.

GRAPP 2022 - 17th International Conference on Computer Graphics Theory and Applications

3.2.1 Conceiving Experiences - Technology

When choosing the technology to create the AR experiences, we have to consider different aspects, such as the tracking techniques suitable for the experiences, the type of contents that we want to show and the tools to develop the AR experiences. Considering the “where”, “what” and “how” questions (Prabhu, 2017) may help in this process. “Where” is the place of use of the experiences, which is decisive for choosing the methods for recognition and tracking. “What” is the type of content to be shown in the AR experiences. “How” involves the means to implement the AR experiences, namely, the software to be used in the development.

In a botanical garden the experiences are used outdoors, which answers the “where” question. Therefore, we have to deal with variations in luminosity over the day and also in the orientation of the shadows cast on objects, which may impose restrictions on the use of marker recognition. The location and orientation of the markers need to be carefully studied to minimize appearance variations throughout the day. Moreover, these markers should be elements already present in the garden, like tile panels, to avoid adding physical artifacts foreign to the place.

In outdoor environments, location can be obtained by a GPS receiver, which provides tracking of user location. However, we need to be aware of the lack of signal in some places caused by the treetops. Accuracy problems may also occur when the orientation is provided by sensors integrated in the smartphone, such as the gyroscope, accelerometer or magnetometer. Therefore, using GPS and other sensors may compromise the correct alignment between virtual contents and real objects. This type of tracking may be used when the precision of the alignment between the real and the virtual objects is not critical.
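To make the GPS limitation concrete, the sketch below shows one way a guide app could decide whether the visitor is near a PoI while refusing to decide when the reported GPS accuracy is too poor, for example under dense treetops. It is only an illustration under stated assumptions: the function names, the 15 m trigger radius and the 20 m accuracy limit are hypothetical and do not come from the JBT app.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (latitude, longitude) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def near_poi(fix, poi, trigger_radius_m=15.0, max_accuracy_m=20.0):
    """Decide whether a GPS fix is close enough to a PoI.

    fix: (latitude, longitude, reported accuracy in metres).
    poi: (latitude, longitude).
    Returns True or False, or None when the fix is too imprecise to decide.
    """
    lat, lon, accuracy_m = fix
    if accuracy_m > max_accuracy_m:
        return None  # weak signal: fall back to another technique
    return haversine_m(lat, lon, poi[0], poi[1]) <= trigger_radius_m
```

Returning `None` instead of a guess lets the caller switch to a technique that does not depend on GPS, in line with the caution expressed above.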

A more precise alignment may involve the user, as in the image-assisted alignment, where the user is asked to perform an initial precise alignment (Andrade and Dias, 2020).

The set of tracking techniques may be even more limited. If we want to reach a diverse audience, the experiences cannot require high-end equipment. This excludes the use of tracking techniques that require large amounts of processing power, like SLAM. In addition, to limit maintenance requirements, we should avoid resorting to locally placed equipment such as RFID.

The answer to the “what” question is not straightforward when there is no predefined content associated with a PoI (detailed ahead). So, ideally, the best tool is the one that supports the widest variety of content types (text, image, video, audio and 2D/3D objects) to allow greater freedom for content creation.

There are several commercial and free AR development tools (Bekele et al., 2018). Consequently, the answer to “how” will depend on the budget, the target operating system (Android, iOS), the selected tracking methods and the type of contents supported.
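The guidelines in this subsection can be read as a small decision procedure. The sketch below is one possible reading, not a rule set stated by the authors: the function name, its three boolean inputs and the returned labels are assumptions introduced for illustration. SLAM and locally placed equipment such as RFID never appear as outcomes, reflecting the exclusions discussed above.

```python
def choose_tracking(precise_alignment, stable_appearance, good_gps_signal):
    """Suggest a tracking technique for a PoI (illustrative reading of the guidelines).

    precise_alignment: the virtual content must line up closely with a real object.
    stable_appearance: the object looks similar across the day and the seasons.
    good_gps_signal: GPS reception at the PoI is adequate (e.g. no dense treetops).
    """
    if precise_alignment:
        if stable_appearance:
            # Existing elements, such as tile panels, can act as natural markers.
            return "image recognition (natural marker)"
        # The user performs the initial alignment by hand.
        return "image-assisted alignment"
    if good_gps_signal:
        return "GPS + orientation sensors"
    return "orientation sensors only"
```

For example, a tree whose appearance changes over the year and that needs a close overlay would map to image-assisted alignment under this reading.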

3.2.2 Conceiving Experiences - Contents

Regarding the contents of AR experiences, we have to consider: the type of media used (text, image, video, audio or 2D/3D virtual models); the goal of the experience (entertainment, educational or informative); and the period of time to which they refer (a snapshot in time or the evolution over the year).

In any case, the development of the AR experiences needs the contribution and validation of experts, based either on their scientific knowledge or on their daily activities. The garden curators witness year-round variations in the appearance of plants and the behaviour of animals in the garden. Nowadays, with the widespread availability of mobile phones with good-resolution cameras, it is easy to capture images and videos at a moment’s notice that reveal the life in the garden. These snapshots may unveil to visitors what they may not be able to observe during their visit. Besides these live moments, more structured contents may be created, for instance, to show the evolution of specific trees over the year.

Animations with 2D/3D models may support scientific explanations of occurrences in the garden or may be used for entertainment purposes.

4 AUGMENTED REALITY AND MULTIMEDIA EXPERIENCES IN JBT

Considering the guidelines presented above, we describe in this section the technology adopted, the contents gathered and the experiences developed.


4.1 Conceived Experiences - Technology

For the JBT app, as no AR experiences had been defined in advance, we chose the tools that offered the most room for manoeuvre and that would neither set back the development of the solution nor jeopardize the completion of the project.

This implied choosing the tools that offer the most functionality for creating the experiences, a range of tracking techniques for alignment, and support for any type of content, since we were confident that ideas would arise as a result.

Without a budget to spend on software tools, we decided to use augmented reality tools that are open source or have a free license. EasyAR¹ was chosen for the Android operating system, as it has a free license and offers recognition and tracking of marks and images. It addresses the “where”, by being able to recognize any candidate object in the JBT when the GPS component cannot be used properly, and the “what”, by being able to render text, image, video and 3D object contents over those objects. DroidAR² turned out to be the second choice, complementing EasyAR. It is an open-source framework for AR on Android, which we had to modify and extend with new code to fit our needs in the creation of the AR experiences. It addresses the “where”, by allowing the tracking of the user’s GPS location and the rendering of text, image and 3D object contents, as well as the playing of audio contents, at specific GPS coordinates; and the “what”, by using the inertial and orientation sensors to render the same types of content at specific points in space around the user when there is neither an adequate GPS signal nor recognizable, well-illuminated planes that would make the recognition capabilities of EasyAR effective.

For the iOS operating system, we decided to use its native frameworks: ARKit³ for recognition and tracking of marks and images, to render text, image, video and 3D object contents over the candidate objects; ARKit, SceneKit⁴ and CoreMotion⁵ to use the inertial and orientation sensors to render the same types of content at specific points in space around the user; and, finally, AVKit⁶ and AVFoundation⁷ to play video or audio content.

There are cases where the capabilities of EasyAR and ARKit cannot be effective, with no recognizable or well-illuminated planes, and where a more precise alignment is crucial at a specific PoI. So, we created an image-assisted alignment tool for Android and iOS with their native frameworks and libraries, capable of rendering, on top of the intended objects, the same types of content described before, according to the needs of each platform.

¹ https://www.easyar.com/

² https://github.com/rafaisen/droidar

³ https://developer.apple.com/augmented-reality/arkit/

⁴ https://developer.apple.com/scenekit/

⁵ https://developer.apple.com/documentation/coremotion

⁶ https://developer.apple.com/documentation/avkit/

⁷ https://developer.apple.com/documentation/avfoundation/

4.2 Conceived Experiences - Content

The development of the JBT app involved a large

group of experts from distinct areas of knowledge to

ensure that all the contents are correct and accurate

from the scientific point of view.

The creation of AR and multimedia experiences

integrated contents from different sources:

• Videos and images collected by the experts who work or have interest in the thematic areas of the garden;

• Videos provided by Centro de Ciência do Café that is an institutional partner of the garden;

• Animations and 2D/3D models produced by students;

• Scheduled image collection throughout the year of a set of iconic trees.

In their daily activities, the curators of the garden capture images and videos that show the living nature in the garden, for instance, videos showing specimens that are not easy for the visitor to see in the garden, like woodpeckers in a tree hole or a squirrel walking. Moreover, the experts also purposely prepared videos for the application, like a close-up of the beautiful pink flower of the Bauhinia. The experts also provided photos of 36 different birds that inhabit the garden. To match the birds’ images, we searched for a recording of each bird’s sound, ranging from public domain recordings to copyrighted ones, and asked each author for permission to use them in the experiences.

To go beyond what can be seen in the garden, the visit to the coffee greenhouse can be complemented with a video that describes the history and the complete process of obtaining a coffee drink, from the plantation to the user’s cup. This video combines 3 videos provided by Centro de Ciência do Café and, resorting to Kdenlive⁸, it includes new frames, which add the title and the provider’s credits and logo.

Some of the contents of the experiences were produced by students of master’s degrees in Informatics Engineering and Informatics Teaching.

The method that was applied to involve students derives from previous experiences that also involved students in the production of virtual models in digital heritage (Redweik et al., 2017; Cláudio et al., 2017).

⁸ https://kdenlive.org/

A way of rewarding the work of students is to pose the challenge of producing contents as part of the evaluation elements of a curricular unit, so that the result of the work is rewarded with a grade. So, the themes/proposals were presented in a curricular unit in the area of modelling and animation (Animation and Virtual Environments). Additionally, a factor that has an impact on the enthusiasm of the students is that the garden is part of their own University.

The students created the videos using Blender⁹, learning the three-dimensional features of the tool in the process. Videos should have two versions, with and without a green background, so that we could choose the best option when creating the experience. The AR component we created for Android and iOS and the AR tools use this green background to make it invisible when the target is recognized and tracked. EasyAR can only achieve this through the alpha channel technique. Thus, using the video editing software Kdenlive, the video was converted to an alpha video so that EasyAR could make the video background invisible. This way, the immersion of the video in the environment was guaranteed.
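The idea of turning a green background into transparency can be sketched per pixel as follows. This is a generic chroma-key illustration written for this text, not the filter used by Kdenlive nor the mechanism inside EasyAR; the function name and both thresholds are assumptions.

```python
def chroma_key(pixels, min_green=150, dominance=1.4):
    """Convert RGB pixels to RGBA, making strongly green pixels transparent.

    pixels: iterable of (r, g, b) tuples with components in 0..255.
    min_green: minimum green value for a pixel to count as background.
    dominance: how many times green must exceed the larger of red and blue.
    Returns a list of (r, g, b, a) tuples, where a = 0 means fully transparent.
    """
    result = []
    for r, g, b in pixels:
        is_background = g >= min_green and g > dominance * max(r, b)
        result.append((r, g, b, 0 if is_background else 255))
    return result
```

Applied to every frame, a rule of this kind yields a video with an alpha channel, so that only the animated subject is drawn over the camera image.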

Tile panels in the JBT inspired the creation of playful videos. Animals depicted in those tiles were reproduced, animated and associated with their sound in nature to bring them to life. The vegetation is also animated, simulating its movement in the wind. Another video created by the students brings a seemingly disorganised tile panel to life by reordering its tiles and solving the puzzle.

To observe the evolution of trees throughout the year, a set of trees that show the most changes in colour and in the fall of leaves, fruit and flowers over the year was selected. Then, every week during a year, a photo session was held with these trees using the same camera on a tripod, keeping the same position, direction and inclination of the camera. In each session, at least three photos were taken of each tree with three types of focus and brightness, so that there would be a wide variety of choices for the ideal photo when creating the sequence.

4.3 Conceived Experiences

The JBT app includes AR and multimedia experiences, each one available at a particular PoI in the garden where it really enriches the visit. All these places were suggested and validated by the experts. The set of AR and multimedia experiences includes:

• Time-lapses composed of sequences of images that show the evolution of a tree over the year;

• Animated 2D and 3D models superimposed over tile panels;

• Virtual images of birds which, through touch, trigger the emission of the respective call or song of the species;

• Informative videos and videos of the garden’s natural life.

⁹ https://www.blender.org/

A set of trees whose appearance varies significantly throughout the year was selected. At these PoIs, a sequence of images, 1 to 5 per month depending on the tree changes, shows the evolution of the tree over the year. The photographs of trees taken throughout the year went through a stage of selection and editing using the GIMP¹⁰ graphical editor. This way, the experience provides the visitor with a smooth sequence of images that feels like a time-lapse (Figure 1a).

The user interface allows the visitor to know the month of the photograph and to control the sequence of images of the chosen tree. Thus, the user always has the temporal perception of the sequence. In addition, three buttons were included, one for play and pause and two for changing the direction of the sequence or manually changing the image. Finally, we created an interactive seek bar with open-source libraries, so the visitor can interact with the sequence of images in different ways. The sequence starts in the month in which the user is visiting the garden and ends in the previous month. The result can be seen in Figure 1a. Since the appearance of trees varies over time, alignment based on image recognition is not recommended. In addition, sensor-based tracking can also bring inaccuracies, which is why we decided to use image-assisted alignment to ensure the user gets the best time-lapse experience when pointing at the selected trees.
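The ordering rule for the time-lapse (start in the month of the visit, end in the previous month) can be sketched as below. The function name and the dictionary layout are assumptions made for this example; the app's actual data structures are not described in the text.

```python
def timelapse_order(images_by_month, visit_month):
    """Order time-lapse images so the sequence starts in the visit month.

    images_by_month: dict mapping month number (1..12) to a list of image names,
                     already sorted within each month.
    visit_month: month of the visit (1..12).
    Returns one flat list that wraps around the year and ends in the month
    preceding the visit.
    """
    ordered = []
    for offset in range(12):
        month = (visit_month - 1 + offset) % 12 + 1  # wrap from December to January
        ordered.extend(images_by_month.get(month, []))
    return ordered
```

For a visit in March, the sequence would run from the March images through to those of February, so the first image the visitor sees is the one closest to the current state of the tree.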

The tiles in the Garden were used as natural marks to trigger the playback of videos inspired by these tiles. A target image was created from the tile. This way, the video can be played exactly on top of the real object. When the tile appears in the field of view of the device’s camera, EasyAR and ARKit recognize it and play the video. The result can be seen in Figure 1b.

The AR tools would hardly recognize some tiles and places in the Garden, whose illumination, shadows or appearance interfere with their recognition. The image-assisted alignment tool we created was used in all these situations.

Figure 1: AR experiences. (a) Silk Floss Tree time-lapse experience. (b) Tile panel AR experience.

¹⁰ https://www.gimp.org/

Birds may not be the first thing we notice when visiting a Botanical Garden, but within JBT it is possible to observe more than 50 bird species. To highlight the birds that can be observed in different areas of JBT, we designed an AR experience that associates birds’ images with the respective call or song. This experience may be played in different observation areas and, in each one, with the species that are most likely to be found there. The user is invited to look around for silhouettes of birds with a question mark in the centre of the caption (Figure 2a). When the user taps a silhouette, it turns into a coloured image, the name of the species is displayed and the respective sound is played. An informative text box is presented at the top of the screen with the number of birds already discovered and the total number of birds to be discovered, ending with a success message to congratulate the visitor after all birds have been found (Figure 2b).

These experiences rely on the sensors of the device: magnetometer and gyroscope. The silhouette of the bird is placed where a real one is more likely to be found, for instance, at the top of a tree, on the ground or near the lake. To prepare these experiences, 36 photographs of the most often observed species were edited with GIMP to cut around each bird and remove the background. Then, they were resized so that each bird is as close as possible to its actual size relative to all the other birds’ images.
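A sensor-based placement of this kind can be pictured as follows: each silhouette is given a fixed direction (azimuth and pitch), and it is drawn only when the device, as reported by its orientation sensors, points close enough to that direction. The sketch uses a simple linear mapping from angles to pixels; the function names, field-of-view values and screen size are assumptions, and the frameworks used in the app handle this projection internally.

```python
def angular_offset(target_deg, current_deg):
    """Signed smallest angle from current to target, in the range (-180, 180]."""
    diff = (target_deg - current_deg + 180.0) % 360.0 - 180.0
    return 180.0 if diff == -180.0 else diff


def screen_position(bird_azimuth, bird_pitch, device_azimuth, device_pitch,
                    h_fov=50.0, v_fov=70.0, width=1080, height=1920):
    """Place a silhouette on screen from device orientation (linear approximation).

    Angles are in degrees; azimuth is measured clockwise from north.
    Returns (x, y) in pixels, or None when the bird is outside the field of view.
    """
    dx = angular_offset(bird_azimuth, device_azimuth)
    dy = bird_pitch - device_pitch
    if abs(dx) > h_fov / 2 or abs(dy) > v_fov / 2:
        return None
    x = (0.5 + dx / h_fov) * width    # further right as the bird lies clockwise
    y = (0.5 - dy / v_fov) * height   # screen y grows downwards
    return x, y
```

Since only orientation is used, the silhouette stays at a fixed direction around the user, which is acceptable here because a precise overlay on a real object is not required.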

The audio files obtained for each species were also prepared using Audacity¹¹: the sound was cut to a maximum of 20 seconds and the background noise existing in the various recordings was attenuated. Additionally, all recordings were normalized to a maximum peak of -6 dB, leaving approximately the same volume levels and amplitude.
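The trimming and peak-normalization steps can be expressed numerically as in the sketch below. It is a stand-alone illustration of the arithmetic, not a description of Audacity's implementation, and it leaves out the noise attenuation step; the function name and the sample representation (floats between -1 and 1) are assumptions.

```python
def prepare_clip(samples, sample_rate, max_seconds=20.0, peak_db=-6.0):
    """Trim a recording and scale it so that its peak sits at peak_db.

    samples: sequence of floats in [-1.0, 1.0] (1.0 corresponds to 0 dB full scale).
    sample_rate: samples per second.
    Returns a new list with at most max_seconds of audio.
    """
    clip = list(samples[: int(max_seconds * sample_rate)])
    peak = max((abs(s) for s in clip), default=0.0)
    if peak == 0.0:
        return clip  # silence: nothing to scale
    target = 10.0 ** (peak_db / 20.0)  # -6 dB is roughly 0.501 of full scale
    gain = target / peak
    return [s * gain for s in clip]
```

Scaling every recording to the same peak is what gives the bird calls approximately the same perceived loudness when the visitor taps different silhouettes.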

Figure 2: Birds experience. (a) Bird silhouette with question mark. (b) Mallard image with its labelled name.

¹¹ https://www.audacityteam.org/

Besides the core AR experiences, some of the collected videos already mentioned were associated with PoIs to enrich the visit. It did not make sense to align these videos with any kind of AR alignment, as they were not recorded for that purpose. The videos with longer duration, like the explanation of coffee bean processing, are presented with an interface that gives the user greater control over the experience: the user can see the duration of the content, pause, go back to a part of the video they want to review, or seek the part that interests them most.

5 JBT APPLICATION

This section describes the development of the JBT app, including the AR and multimedia experiences previously described, following the set of requirements associated with the development of mobile applications to support visits to Botanical Gardens (Postolache et al., 2021).

5.1 Objectives

The JBT app was developed as part of a project by the Rectory of the University of Lisbon aiming to complement the cultural and educational guided tours and provide an interactive and engaging experience between the visitors and the Botanical Garden. The app offers a guide for visitors providing information about the main PoIs and provides AR and multimedia contents to enrich the experience of the visitors.


5.2 Methodology and Contents

The project followed a User-Centred Design (UCD) approach (Abras et al., 2004) and involved a multidisciplinary team of researchers.

The JBT app development comprised several iterations in which the app was progressively refined according to the feedback of domain experts and users. The first cycles involved the design, creation, and validation of low- and medium-fidelity prototypes, and the last ones included the implementation, testing, and validation of the application with end-users.

The application is freely available for Android and iOS in four languages (English, French, Portuguese, and Spanish) and can be downloaded without requiring mobile data consumption in certain locations in the Garden. The application collects authorized demographic data of the visitors and location data during the visit to understand visitor use patterns and to improve the offered services.

The JBT app was developed for three main groups of users (tourists; students and experts; and families with children) and offers four different thematic tours:

• “Trees you must see”: includes the 20 most important and emblematic plants, among the hundreds present in the Garden. For each, it presents the species name and family, taxonomic notes, etymology, common names, common uses, geographic distribution, ecological preferences, flowering and fruiting period, and interesting morphological or reproductive characteristics of the species, mainly those that can be seen by the visitor;

• “Garden with history”: shows the different historical layers of the JBT and presents information about historical buildings and statuary, dating from the 17th to the 20th century;

• “Birds”: includes information about 36 bird species that can be observed in the Garden, ranging from passerines to aquatic species. For each species, it presents a description, images, species habitats, curiosities, and details on the probability of being seen and when it occurs (e.g., if it is a resident, summer or winter visitor);

• “Biosensors”: presents a large number and diversity of bryophytes and lichens, and their importance as environmental bio-indicators. For each species, it shows the description, an image and a pollution sensitivity scale for each taxon. It includes the 24 most common species: 14 species of mosses, 2 species of liverworts and 8 species of lichens.

The rationale for the selection of these tours, the associated PoIs and respective contents can be found in Postolache et al. (2021). In addition to these contents, the first three tours also include AR and multimedia contents (already mentioned in Section 4).

5.3 Application Overview

This project involved the development of a mobile application for the Botanical Garden with a list of tours tailored to the various visitor profiles and a back-end component of the system to manage the contents and data that are collected and used in the mobile application.

As such, the proposed system follows a client-server architecture. The client component comprises the various instances of the mobile application running on the Android and iOS operating systems, which communicate with the server to synchronize the visitor trajectory (the sequence of timestamped location points followed by the visitor) and demographic data, and to retrieve data regarding tours and associated PoIs. The NGINX¹² server software dispatches requests to a web application implemented using the Lumen Framework¹³. The web application provides a REST API, enabling client applications to safely access the data stored in a PostgreSQL database, which uses the PostGIS extension to store geographic data.
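To make the data exchange more concrete, the sketch below shows one possible shape for a visitor trajectory before it is sent to the server. The class and field names (`LocationPoint`, `Trajectory`, `visitor_id`, `tour_id`) and the sample coordinates are illustrative assumptions, not the schema used by the JBT app; the WKT string is simply one text format that PostGIS is able to parse.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class LocationPoint:
    """One timestamped GPS fix (field names are illustrative assumptions)."""
    timestamp: str    # ISO 8601 string, e.g. "2021-06-01T10:15:00Z"
    latitude: float   # decimal degrees
    longitude: float  # decimal degrees


@dataclass
class Trajectory:
    """Sequence of timestamped location points followed by one visitor."""
    visitor_id: str   # hypothetical anonymous identifier
    tour_id: int      # hypothetical identifier of the selected tour
    points: List[LocationPoint]


def to_json(trajectory: Trajectory) -> str:
    """Serialize the trajectory as a JSON body for a hypothetical REST call."""
    return json.dumps(asdict(trajectory), sort_keys=True)


def to_wkt_linestring(trajectory: Trajectory) -> str:
    """Render the path as a WKT LINESTRING (longitude first, then latitude)."""
    coords = ", ".join(f"{p.longitude} {p.latitude}" for p in trajectory.points)
    return f"LINESTRING({coords})"


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    sample = Trajectory(
        visitor_id="anon-1",
        tour_id=2,
        points=[
            LocationPoint("2021-06-01T10:15:00Z", 38.6975, -9.2045),
            LocationPoint("2021-06-01T10:15:05Z", 38.698, -9.204),
        ],
    )
    print(to_json(sample))
    print(to_wkt_linestring(sample))
```

Keeping the timestamp on each point, rather than on the trajectory as a whole, is what later allows dwell times at specific locations to be derived from the stored data.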

The JBT app starts with an initial screen listing the available tours and indicating, for each one, the estimated duration and the number of PoIs. Next, the user can choose a specific tour and access its description. All the contents associated with the tour are then downloaded, and the next screen displays a map with an orange dashed line indicating the recommended route and the icons of the PoIs (Figure 3a). The design of the routes took into account several criteria, namely the inclusion of the most important PoIs, the accessibility and safety of the visitors, the passage through strategic points (e.g., souvenir shop and cafeteria), and the GPS signal strength.

The PoIs are represented by coloured icons, similar to Google Maps markers, with a white circle and a number in the middle to indicate their order in the tour. Markers of PoIs already visited are drawn without the white circle, so that the visitor can recognize them. On the map screen, visitors can tap the menu in the upper right corner, which gives access to the list of PoIs, or select a marker to go directly to the PoI description screen (Figure 3b). PoIs with AR and multimedia experiences are identified by a green icon drawn at the top of the marker, as well as in the list of PoIs and in the description screen (Figure 3b). The colour palette used for all application contents, including AR and UI contents (e.g., PoI markers and tour icons), was chosen to be accessible to people with colour blindness. The “Garden with history” map screen also has filters to select only the PoIs associated with a specific historical period. In the “Birds” and “Biosensors” tours, the PoI icon represents an observation area, where there is a high probability of encountering several species of birds, bryophytes and lichens.

Figure 3: Information about tours and PoIs. (a) Map of a tour. (b) PoI with AR.

¹² https://nginx.org/

¹³ https://lumen.laravel.com/
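The marker states and the period filter described in this section can be summarized in a few lines of code. The sketch below is only an illustration under stated assumptions: the `PoI` fields, the use of a century number to encode the historical period, and the keys returned by `marker_style` are all hypothetical, and the paper does not say whether the order number remains visible on visited markers.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PoI:
    """A point of interest on a tour (all field names are assumptions)."""
    order: int                     # position in the tour, shown in the marker
    name: str
    century: Optional[int] = None  # hypothetical encoding of the period
    has_ar: bool = False           # True when AR/multimedia is attached
    visited: bool = False


def filter_by_century(pois: List[PoI], century: int) -> List[PoI]:
    """Keep only the PoIs associated with the given historical period."""
    return [poi for poi in pois if poi.century == century]


def marker_style(poi: PoI) -> Dict[str, object]:
    """Describe how a marker could be drawn for this PoI."""
    return {
        "label": str(poi.order),           # assumed to stay visible
        "white_circle": not poi.visited,   # circle removed once visited
        "ar_badge": poi.has_ar,            # green icon on top of the marker
    }


if __name__ == "__main__":
    # Placeholder PoIs, not actual entries of the JBT tours.
    tour = [
        PoI(1, "PoI A", century=17),
        PoI(2, "PoI B", century=20, has_ar=True),
        PoI(3, "PoI C", century=17, visited=True),
    ]
    for poi in filter_by_century(tour, 17):
        print(poi.name, marker_style(poi))
```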

5.4 Maps and Positioning Systems

The map view of the JBT app shows, for each tour, the PoIs, the recommended route and the user’s current location. To obtain a map that includes all important pedestrian paths, buildings, lakes and other remarkable geographic features in their correct positions, we analyzed several interactive map SDKs, such as Google Maps and OpenStreetMap (OSM). We found that some paths of interest were either not represented or were wrongly represented in shape or position, so it would be necessary to correct and add many regions and paths. Since this could be a time-consuming and, in some cases, paid process (e.g., Google Maps), we decided to build our own map of the Garden based on three main sources.

The first source was a map in the form of a shapefile containing accurate data for all the paths, buildings, lakes and other built objects that could serve as references for the visitor. This map also has many features, such as trees, elevation and buildings outside the garden, that are not relevant to the representation of the Garden. The information was converted from shapefile to SVG using the open-source software QGIS¹⁴, and all the irrelevant details of the map were removed using GIMP.

Additionally, as some regions and paths were changed during the rehabilitation of the Garden in 2019, we considered another SVG version of the Garden map. Based on this information, we incorporated the missing elements, such as paths in the oriental garden and other paths that lead to the greenhouses. In the end, all this information was validated in loco. Finally, the resulting version was coloured using the colour palette of the official map available at the entrance of the Garden and exported to PNG format. This format was used instead of SVG to support zooming, panning and the overlaying of objects.
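Overlaying markers on a raster map requires converting geographic coordinates into pixel coordinates of the image. The sketch below shows one simple way to do this, assuming a north-up PNG whose geographic bounding box is known; the function name `geo_to_pixel`, the bounding box and the image size are hypothetical. Over an area of a few hectares, linear interpolation between the image corners is usually an acceptable approximation, although it is not necessarily the method used in the JBT app.

```python
from typing import Tuple

Bounds = Tuple[float, float, float, float]  # (south, west, north, east)


def geo_to_pixel(lat: float, lon: float, bounds: Bounds,
                 size: Tuple[int, int]) -> Tuple[float, float]:
    """Map a latitude/longitude pair to (x, y) pixels on a north-up image.

    The origin is the top-left corner of the image, with x growing to the
    east and y growing to the south.
    """
    south, west, north, east = bounds
    width, height = size
    if north <= south or east <= west:
        raise ValueError("bounds must satisfy south < north and west < east")
    x = (lon - west) / (east - west) * width
    y = (north - lat) / (north - south) * height
    return x, y


if __name__ == "__main__":
    # Hypothetical bounding box and image size, for illustration only.
    garden_bounds = (38.0, -9.0, 39.0, -8.0)
    image_size = (1000, 800)
    print(geo_to_pixel(38.5, -8.5, garden_bounds, image_size))
```

The same mapping can be reused for PoI markers, the recommended route and the symbol that shows the visitor's position.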

The map also served as the georeferenced base for positioning the visitor relative to the path and to the PoIs in the garden. The visitor’s position and orientation are represented on the map by a blue circle with a white outline and by an arrow in the middle of the circle, respectively (Figure 3a). The position is calculated using the GPS receiver, and the orientation is obtained using a sensor fusion algorithm that combines data from the accelerometer, magnetometer and gyroscope to compensate for the inaccuracy of the magnetic field sensor and for gyro drift¹⁵.
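The paper does not detail the fusion algorithm, so the following is only a sketch of one common approach, a complementary filter, and should not be read as the implementation used in the JBT app. It assumes that a tilt-compensated magnetometer heading (which is where the accelerometer would contribute) is already available in degrees, and it blends that heading with the integrated gyroscope rate. The function names and the weight `alpha` are assumptions.

```python
def wrap_degrees(angle: float) -> float:
    """Wrap an angle to the interval [0, 360)."""
    return angle % 360.0


def angle_diff(a: float, b: float) -> float:
    """Smallest signed difference a - b in degrees, in [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def fuse_heading(prev_heading: float, gyro_rate_dps: float, dt: float,
                 mag_heading: float, alpha: float = 0.98) -> float:
    """One complementary-filter step for the heading, in degrees.

    The gyroscope is trusted over short intervals (smooth, but it drifts),
    while the magnetometer slowly corrects the estimate (noisy, but free
    of drift). An alpha close to 1 gives more weight to the gyroscope.
    """
    gyro_heading = prev_heading + gyro_rate_dps * dt
    correction = angle_diff(mag_heading, gyro_heading)
    return wrap_degrees(gyro_heading + (1.0 - alpha) * correction)


if __name__ == "__main__":
    heading = 350.0
    # Made-up samples: (gyro rate in deg/s, magnetometer heading in deg).
    samples = [(10.0, 352.0), (10.0, 355.0), (10.0, 1.0), (0.0, 3.0)]
    for rate, mag in samples:
        heading = fuse_heading(heading, rate, dt=0.5, mag_heading=mag)
        print(round(heading, 2))
```

Computing the correction as a wrapped difference avoids a jump when the two headings lie on either side of north (for example, 350° and 10°).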

5.5 Integration of AR and Multimedia

The AR and multimedia experiences are associated with PoIs of the tours “Trees you must see”, “Garden with history” and “Birds”.

To start any experience, the user must tap the green button, already mentioned, on the corresponding PoI interface, which makes the connection between the JBT app and the AR Component. A PoI can be associated with one or two experiences, each with its own button (Figures 3b and 4a). So that the user can tell which experience each button starts, a small cropped image related to the experience was added to the upper left corner of the corresponding button.

After the experience is selected, instruction dialogues guide the user (Figure 4b). When tracking is performed with image-assisted alignment, the image of the real-world object is displayed with a button below showing the text “Lined up” (Figure 5a). The user is invited to align the image with the real-world object and then tap the button to start the experience.

For tracking with natural markers, a dialogue window is shown while the target image is not detected. In this case, the target image is displayed at the centre of a transparent PNG image, delimited by 4 corners marked in white and with an informative text box at the centre of the screen (Figure 5b). This way, the user is always aware that the camera has not yet captured the correct alignment and that it still needs to be pointed at the target image. When the target image is recognized, the dialogue window disappears.

Figure 4: AR integration. (a) PoI with 2 AR experiences. (b) An instruction dialogue.

¹⁴ https://www.qgis.org/

¹⁵ http://plaw.info/articles/sensorfusion

GRAPP 2022 - 17th International Conference on Computer Graphics Theory and Applications

Figure 5: Dialogues for alignment techniques. (a) Image-assisted alignment. (b) Image recognition alignment.

6 PRELIMINARY EVALUATION

The evaluation of the JBT app was concerned with

usability, given its intended general user base. To in-

crease the validity of the evaluation, we asked volun-

teers if they would like to visit the garden and try the

app there. In this way, the evaluation was carried out

in a realistic environment and the positioning on the

map and AR features were exercised.

6.1 Participants, Tasks, and Procedure

A total of 39 participants volunteered for the study, 20 female and 19 male. The median age was 23 years, ranging from 11 to 50 years. The most common age band was 20–30 years, corresponding to 82% of the participants. About 74% had an Android mobile phone and 33% had experience with digital guides. Convenience sampling was used to select the participants, who were recruited from social contacts. No monetary reward was offered, but admittance to the garden was free.

During an evaluation trial, participants carried out

tasks covering most features of the JBT app, namely:

selecting a tour; interacting with the map and its PoIs;

using the list of PoIs; reading information about a PoI;

following a tour; applying filters to the PoIs shown on

the map; and interacting with at least one AR experi-

ence of each type.

A trial started when a participant arrived at the garden, after which a researcher gave a brief presentation about the JBT app. The participant was informed about the rights to privacy and to leave at any time, and then filled out an entrance questionnaire about gender, age, mobile phone model, and experience with digital guides. A training phase came next, during which the participant was asked to install the JBT app on the mobile phone and explore it freely, asking questions if needed. The testing phase comprised the tasks mentioned in the previous paragraph and lasted between 40 and 90 minutes, with a mean time of 66 minutes. At the end of a trial, the participant was given a link to an online System Usability Scale (SUS) questionnaire (Brooke, 2013).
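For readers unfamiliar with the SUS, the sketch below shows the standard scoring rule for the ten-item questionnaire: each odd-numbered item contributes its response minus 1, each even-numbered item contributes 5 minus its response, and the sum is multiplied by 2.5 to give a score between 0 and 100. The function name `sus_score` and the sample responses are illustrative and are not data from this study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute the SUS score (0 to 100) from ten responses on a 1-5 scale.

    Items 1, 3, 5, 7 and 9 are positively worded; items 2, 4, 6, 8 and 10
    are negatively worded, so their contribution is reversed.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:          # items 1, 3, 5, 7, 9
            total += response - 1
        else:                       # items 2, 4, 6, 8, 10
            total += 5 - response
    return total * 2.5


if __name__ == "__main__":
    # Made-up answers from one hypothetical participant.
    print(sus_score([5, 1, 4, 2, 5, 1, 5, 1, 4, 2]))
```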

At the end of the SUS questionnaire, we added a block of statements about several features of the JBT app, which participants rated using a five-point Likert scale. The statements were: 1) it was easy to choose a tour; 2) the current position and orientation symbol on the map helped me do the tour; 3) it was clear on the map which PoIs I had consulted information about; 4) it was easy to find the list with the names and images of the PoIs of the tour; 5) the list with the PoIs of the tour was useful; 6) the organization and presentation of information about each PoI was adequate; 7) it was easy to see on the map which PoIs have augmented reality experiences; 8) I understood the instructions for performing the augmented reality experiences; 9) in the “Garden with history” tour, I found the functionality to filter the PoIs pertinent; and 10) in the “Birds” tour, in the augmented reality experience, I understood the instructions to look for the birds. Besides the Likert scale, each statement had an optional comments field.

6.2 Results and Discussion

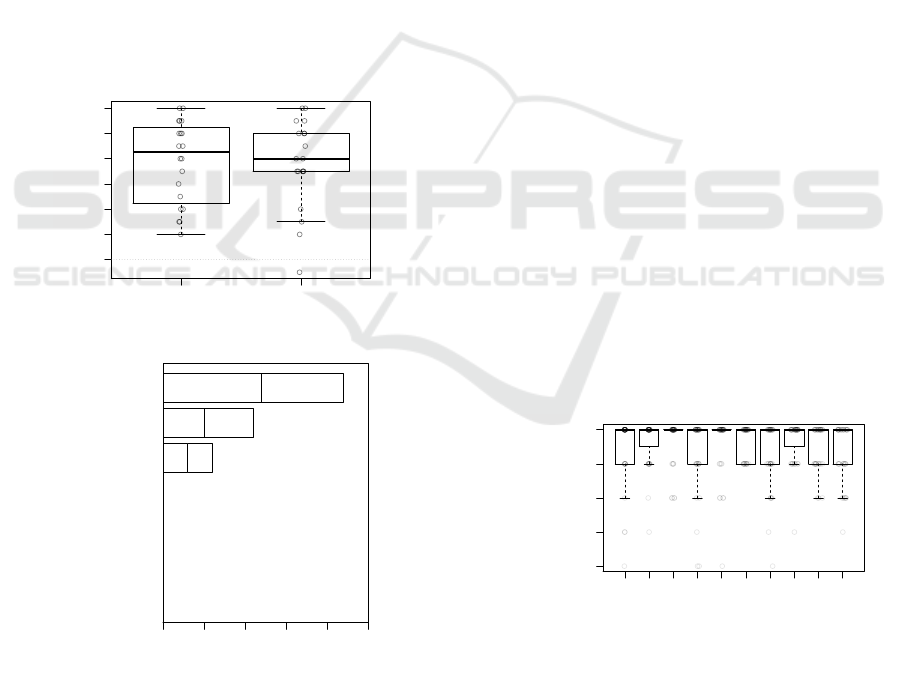

Results from the SUS questionnaire revealed high lev-

els of perceived usability for the JBT app. The median

score was 90.0, which is well above the average SUS

score of about 70 reported in the literature (Brooke,

2013). The boxplots in Figure 6a show a larger vari-

ability of scores around the median for the female par-

ticipants and also that the lowest scores were given by

male participants. Even so, the median scores were

essentially the same for both genders.

Figure 6: Results of SUS questionnaire. (a) SUS scores by gender (female and male participants; SUS score axis from 70 to 100, with the average SUS score from the literature marked). (b) Ranking of SUS scores (count of participants per adjective rating: worst imaginable 0, awful 0, poor 0, OK 0, good 6, excellent 11, best imaginable 22).

Another way of interpreting the SUS scores is to convert them using a qualitative rating scale (Bangor et al., 2009), as shown in Figure 6b. The majority of scores were at the “best imaginable” and “excellent” usability levels, with 56% and 28%, respectively. However, in 16% of the cases, the usability was “good”, which prompted us to analyse the responses to the individual SUS questions. We found that the most important causes of the more modest scores were as follows: lower intention to use the app frequently; higher need for a technical person to be able to use the app; and a less favourable opinion about the integration of the app functions. The two latter causes could be related to reported difficulties in locating some PoIs, perhaps due to GPS inaccuracy, and in finding app functions, namely the filters in the “Garden with history” tour.

Regarding the evaluation of the JBT app features via the ten statements mentioned at the end of the previous section, Figure 7 shows a strong agreement with all those statements. Since we always expressed the statements in positive terms, reflecting our desire that the app be successful, we can accept that the features were mainly approved by the participants. To understand why there were disagreements, we analysed the comments and highlight the following: about statement 2, three participants argued that the map itself should rotate, not the symbol representing the user; concerning statement 3, there was one opinion that a distinction should be made between signalling that the information pages of PoIs were accessed (which can be done at home) and indicating that PoIs were actually visited in the garden; on statement 4, three participants did not notice or could not find the list of PoIs; and, finally, about statement 7, the meaning of the AR experiences symbol (an eye) was not clear for two participants. We note that each of these less positive details was pointed out by less than 8% of the users (at most three of the 39 participants), and that they will help us to improve future versions of the app.

Figure 7: Results of the app features evaluation (responses to statements 1 to 10 about app features, on a scale from “strongly disagree” to “strongly agree”).

The comments provided by users in the optional text field reinforce the positive overall opinion revealed by the quantitative questions. Some positive observations are quite general, but others are more specific, for instance, appreciating the dynamism of the experiences, namely the evolution of specific trees over the year. The added value of this app compared with a traditional paper guide was also pointed out. Moreover, some adults who visited the garden with their children observed that the children were highly enthusiastic about the AR experience to find the birds, repeating it over and over again.

7 CONCLUSIONS

This paper proposes contributions for the creation of AR experiences in mobile applications for Botanical Gardens. Conceiving AR experiences for these natural spaces presents specific challenges, namely the selection of appropriate tracking methods for the experiences, of the type of multimedia content to be shown, and of appropriate development tools. We have applied these guidelines to the development of the JBT app, which is already available to visitors. To evaluate the JBT app, we conducted a user study in loco with 39 participants, and the results pointed to excellent usability and ease of use. Additionally, the participants expressed overall enthusiasm and positive opinions about the AR experiences and the app content. The feedback received will help improve future versions of the app.

Although the JBT app has been available for over a year, we still do not have data from a significant number of visitors, due to the pandemic and the difficulty of obtaining consent for data collection. However, with the data already collected, we are analysing the trajectories in order to understand the movement patterns of visitors. We intend to analyse how much time visitors spend in specific locations, the most visited PoIs or tours, and the distribution of visitors, among other aspects. This information will help the JBT managers to improve the services offered, namely by designing personalized tours based on the profile of the visitor or new tours to raise engagement in guided visits.

Finally, we intend to use the JBT app in a learning

context, aiming to validate the educational value of

the experiences and content, for example, with high

school biology students.

ACKNOWLEDGEMENTS

This work was supported by Universidade de Lisboa and by FCT through the LASIGE Research Unit, ref. UIDB/00408/2020 and ref. UIDP/00408/2020. We also want to thank the researchers and JBT collaborators who participated in this project, Ana Godinho Coelho, Ana Isabel Leal, César Garcia, Maria Cristina Duarte, Paula Redweik, Palmira Carvalho and Raquel Barata; Tiago Ribeiro and Sofia Cruz for their collaboration in the design of icons; Professor Pinto Paixão for mentoring this project; Dra. Marta Lourenço for her enthusiastic support; and all the students who worked on producing AR contents.

REFERENCES

Abras, C., Maloney-Krichmar, D., Preece, J., et al. (2004).

User-centered design. Bainbridge, W. Encyclopedia of

Human-Computer Interaction. Thousand Oaks: Sage

Publications, 37(4):445–456.

Andrade, J. G. and Dias, P. (2020). A phygital approach to

cultural heritage: augmented reality at regaleira. Vir-

tual Archaeology Review, 11(22):15–25.

Bangor, A., Kortum, P., and Miller, J. (2009). Determin-

ing what individual SUS scores mean: Adding an

adjective rating scale. Journal of Usability Studies,

4(3):114–123.

Bekele, M. K., Pierdicca, R., Frontoni, E., Malinverni, E. S.,

and Gain, J. (2018). A survey of augmented, virtual,

and mixed reality for cultural heritage. Journal on

Computing and Cultural Heritage (JOCCH), 11(2):1–

36.

Bettelli, A., Buson, R., Orso, V., Benvegnù, G., Pluchino, P., and Gamberini, L. (2020). Using virtual reality to enrich the visit at the botanical garden. Annual Review of Cybertherapy and Telemedicine 2020, page 57.

Brooke, J. (2013). SUS: a retrospective. Journal of Usabil-

ity Studies, 8(2):29–40.

Chong, H. T., Lim, C. K., Ahmed, M. F., Tan, K. L.,

and Mokhtar, M. B. (2021). Virtual reality usability

and accessibility for cultural heritage practices: Chal-

lenges mapping and recommendations. Electronics,

10(12):1430.

Cláudio, A. P., Carmo, M. B., de Carvalho, A. A., Xavier, W., and Antunes, R. F. (2017). Recreating a medieval urban scene with virtual intelligent characters: steps to create the complete scenario. Virtual Archaeology Review, 8(17):31–41.

Feiner, S., MacIntyre, B., and Seligmann, D. (1993).

Knowledge-based augmented reality. Communica-

tions of the ACM, 36(7):53–62.

Harrington, M. C. (2020). Connecting user experience to

learning in an evaluation of an immersive, interac-

tive, multimodal augmented reality virtual diorama in

a natural history museum & the importance of story. In

6th international conference of the Immersive Learn-

ing Research Network (ILRN), pages 70–78.

Harrington, M. C., Tatzgern, M., Langer, T., and Wenzel,

J. W. (2019). Augmented reality brings the real world

into natural history dioramas with data visualizations

and bioacoustics at the Carnegie Museum of Natural

History. Curator: The Museum Journal, 62(2):177–

193.

Huang, T.-C., Chen, C.-C., and Chou, Y.-W. (2016). Animating eco-education: To see, feel, and discover in an augmented reality-based experiential learning environment. Computers & Education, 96:72–82.

Jain, M., Rahul, R. C., and Tolety, S. (2013). A study on

indoor navigation techniques using smartphones. In

2nd international conference on Advances in Com-

puting, Communications and Informatics (ICACCI),

pages 1113–1118. IEEE.

Kim, S. K., Kang, S.-J., Choi, Y.-J., Choi, M.-H., and Hong,

M. (2017). Augmented-reality survey: from concept

to application. KSII Transactions on Internet and In-

formation Systems (TIIS), 11(2):982–1004.

Klein, G. and Murray, D. (2007). Parallel tracking and

mapping for small ar workspaces. In 2007 6th IEEE

and ACM international symposium on mixed and aug-

mented reality, pages 225–234. IEEE.

Pombo, L. and Marques, M. M. (2019). Improving

students’ learning with a mobile augmented reality

approach–the edupark game. Interactive Technology

and Smart Education.

Pombo, L., Marques, M. M., Lucas, M., Carlos, V.,

Loureiro, M. J., and Guerra, C. (2017). Moving learn-

ing into a smart urban park: students’ perceptions of

the augmented reality edupark mobile game. IxD&A,

35:117–134.

Postolache, S., Torres, R., Afonso, A. P., Carmo, M. B., Cláudio, A. P., Domingos, D., Ferreira, A., Barata, R., Carvalho, P., Coelho, A. G., Duarte, M. C., Garcia, C., Leal, A. I., and Redweik, P. (2021). Contributions to the design of mobile applications for visitors of botanical gardens. Accepted for publication in Procedia Computer Science.

Prabhu, S. (2017). Augmented reality sdks in 2018: Which

are the best for development.

Rabbi, I. and Ullah, S. (2013). A survey on augmented reality challenges and tracking. Acta graphica: znanstveni časopis za tiskarstvo i grafičke komunikacije, 24(1-2):29–46.

Redweik, P., Cláudio, A. P., Carmo, M. B., Naranjo, J. M., and Sanjosé, J. J. (2017). Digital preservation of cultural and scientific heritage: involving university students to raise awareness of its importance. Virtual Archaeology Review, 8(16):22–34.

Santos, L., Silva, N., Nóbrega, R., Almeida, R., and Coelho, A. (2020). An interactive application framework for natural parks using serious location-based games with augmented reality. In 15th international conference on Computer Graphics Theory and Applications (GRAPP), pages 247–254.

Siang, T. G., Ab Aziz, K. B., Ahmad, Z. B., and Suhaifi,

S. B. (2019). Augmented reality mobile application

for museum: A technology acceptance study. In 6th

international conference on Research and Innovation

in Information Systems (ICRIIS), pages 1–6. IEEE.

Siriwardhana, Y., Porambage, P., Liyanage, M., and Yliant-

tila, M. (2021). A survey on mobile augmented reality

with 5g mobile edge computing: Architectures, appli-

cations, and technical aspects. IEEE Communications

Surveys & Tutorials, 23(2):1160–1192.

Tan, W., Liu, H., Dong, Z., Zhang, G., and Bao, H. (2013).

Robust monocular slam in dynamic environments. In

2013 IEEE International Symposium on Mixed and

Augmented Reality (ISMAR), pages 209–218. IEEE.

Zhou, F., Duh, H. B.-L., and Billinghurst, M. (2008).

Trends in augmented reality tracking, interaction and

display: A review of ten years of ISMAR. In 2008 7th

IEEE/ACM International Symposium on Mixed and

Augmented Reality, pages 193–202. IEEE.
