Effects of Global Illumination of Virtual Objects in 360° Mixed Reality

Jingxin Zhang, Jannis Volz and Frank Steinicke

Human-Computer Interaction, University of Hamburg, Germany

Keywords:

Global Illumination, 360° Mixed Reality, Spatial Localization, User Experience.

Abstract:

Consistent rendering of virtual objects in 360° videos is challenging and crucial for spatial perception and the overall user experience in so-called 360° mixed reality (MR) environments. In particular, global illumination can provide important depth cues for localizing spatial objects in MR and, moreover, improve the overall user experience. Previous works have introduced MR algorithms that allow for the seamless composition of 3D virtual objects into a 360° video-based virtual environment (VE). For instance, for consistent global illumination, the light source in the 360° video can be detected and used to illuminate virtual objects and compute their shadows, or the 360° video can be reflected on specular virtual objects, a technique known as environment mapping. Until now, it has not been evaluated if and to what extent global illumination of virtual objects in 360° MR environments improves spatial localization and user experience. To address this research question, we performed a user study in which we compared object localization with and without global illumination in 360° MR environments. The results show that the global illumination of virtual objects significantly reduces the localization error and improves the overall user experience in 360° MR environments.

1 INTRODUCTION

Head-mounted displays (HMDs) provide natural and immersive virtual reality (VR) experiences by supporting motion parallax, a wide field of view, and stereoscopic display (Steinicke, 2016). Although HMDs are often used with computer-generated VR content, VR technology also allows viewing of immersive videos. Typically, such immersive videos are captured with 360° panoramic cameras, which support omnidirectional views of the surrounding environment. It thus becomes possible to visit a remote real-world scenario in an immersive way without physically traveling to the remote place. Traditionally, a remote scenario is displayed in VR by attaching the input video onto a spherical surface as a movie texture (Zhang et al., 2018), or as wrapped textures of a virtual skybox in the virtual environment (VE). Since the videos are recorded from fixed locations, 360° videos do not support motion parallax and, moreover, stereoscopic cues are limited because the virtual scenes show VEs at larger distances. As a result, depth cues for spatial presence are limited in 360° VR (Bertel et al., 2019).
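To make the sphere-texture display concrete, the following minimal sketch maps a viewing direction to texture coordinates in an equirectangular 360° frame. It uses the standard equirectangular (longitude/latitude) parameterization; the axis convention (y up, -z forward) and the function names `direction_to_equirect_uv` and `uv_to_pixel` are assumptions for illustration and are not taken from the cited systems.

```python
import math


def direction_to_equirect_uv(x, y, z):
    """Map a 3D view direction to (u, v) in [0, 1] on an equirectangular frame.

    Assumed convention (illustrative only): y is up, -z is forward,
    u grows to the right, and v grows downward from the zenith.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    longitude = math.atan2(x, -z)                 # in [-pi, pi], 0 = forward
    latitude = math.asin(max(-1.0, min(1.0, y)))  # in [-pi/2, pi/2]
    u = longitude / (2.0 * math.pi) + 0.5
    v = 0.5 - latitude / math.pi
    return u, v


def uv_to_pixel(u, v, width, height):
    """Convert normalized (u, v) to integer pixel indices (column, row)."""
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


# Looking straight ahead hits the center of the frame.
print(direction_to_equirect_uv(0.0, 0.0, -1.0))  # (0.5, 0.5)
print(uv_to_pixel(0.5, 0.5, 8192, 4096))         # (4096, 2048)
```

Because the lookup depends only on the viewing direction and not on the viewer's position, this mapping also illustrates why head translation produces no motion parallax in a 360° video.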

In mixed reality (MR) environments, these immersive videos can be augmented by virtual objects such as buildings, cars, or avatars (Milgram and Kishino, 1994) to blend real content (from the immersive video) with computer-generated virtual objects. The additional display of familiar objects inside immersive videos also has the potential to improve spatial perception (Müller et al., 2016). Such combinations of 360° video-based VEs and virtual objects are referred to as 360° MR environments (Rhee et al., 2017). However, the combination of real content and virtual objects in 360° video-based VEs raises the challenge of consistent global illumination (Noh and Sunar, 2009; Pessoa et al., 2010). Global illumination allows for consistent lighting of virtual objects, reflections on specular surfaces, and shadows on the ground or other virtual objects (Steinicke et al., 2005). All of these effects provide users with important depth cues for visual perception and spatial localization (Zeil, 2000) and, furthermore, improve the overall user experience (Steinicke et al., 2005).

Previous research (Rhee et al., 2017) has implemented seamless composition of 3D virtual objects into a 360° video-based VE by detecting the light source from the panoramic video and adjusting the virtual light source accordingly to create a consistent illumination of virtual objects. So far, it is not known if and to what extent this global illumination of virtual objects could improve spatial localization and user experience in 360° MR environments. To address this open research question, we first implemented a 360° MR environment with consistent global illumination. Second, we conducted a user study in which we compared users’ performance in an object localization task with and without global illumination in 360° MR environments and, furthermore, evaluated the effect of global illumination on user experience.

Zhang, J., Volz, J. and Steinicke, F.

Effects of Global Illumination of Virtual Objects in 360° Mixed Reality.

DOI: 10.5220/0010861100003124

In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 2: HUCAPP, pages 184-189

ISBN: 978-989-758-555-5; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The remainder of this paper is structured as follows: Section 2 reviews related work. Section 3 introduces the design and procedure of the user study. Section 4 summarizes the results, and Section 5 concludes the paper and discusses future work.

2 RELATED WORK

Spatial perception and localization in VEs are important research topics (Alatta and Freewan, 2017), and a vast body of literature has considered depth and size perception in virtual (Interrante et al., 2006; Bruder et al., 2012; Knapp and Loomis, 2004; Steinicke et al., 2009) and augmented reality (Hertel and Steinicke, 2021; Jones et al., 2011). Most previous work has shown that distances to virtual objects are often underestimated, in particular when the objects are displayed at larger distances (Plumert et al., 2005). It has also been shown that global illumination of virtual objects can improve depth perception in computer-generated VEs as well as in AR scenarios, in which users see the real world through an optical see-through HMD such as the Microsoft HoloLens (Hertel and Steinicke, 2021).

Global illumination of virtual objects in 360° MR environments can provide important depth cues for visual perception and spatial localization, and add an additional level of realism to virtual objects (Haller et al., 2003), which could help to smoothly integrate virtual objects into the rendered VE based on 360° immersive videos and improve the overall user experience. For example, Steinicke et al. (Steinicke et al., 2005) introduced an MR setup in which a web camera was used to capture parts of the surroundings, which were in turn used to render lights, shadows, and reflections on specular virtual objects in a semi-immersive projection-based VR setup. Furthermore, Rhee et al. (Rhee et al., 2017) proposed an algorithm that achieved seamless composition of 3D virtual objects into a 360° video-based VE by exploiting the input panoramic video as a lighting source to illuminate virtual objects.

Although there is a large body of literature regarding global illumination of virtual objects in typical VR and AR environments, as well as relevant algorithms to display virtual objects in 360° video-based VEs (Kruijff et al., 2010), the effect of global illumination of virtual objects on spatial localization and user experience in 360° MR environments still remains poorly understood.

3 USER STUDY

In the user study, we evaluated the effects of global illumination of virtual objects on spatial localization and user experience in 360° MR environments. The experiment was approved by our local ethics committee.

3.1 Hypotheses

We formulated the following hypotheses based on the previous findings for computer-generated VEs as described in Section 2:

• H1: Participants will have higher localization accuracy with global illumination of virtual objects than without.

• H2: Participants will have better localization ability at closer distances compared to larger distances.

• H3: Participants will have better user experience with global illumination of virtual objects than without.

3.2 Participants

Fifteen volunteers (8 male, 7 female, average age 23.73 years, STD = 2.67) participated in the user study. All participants were students of computer science or human-computer interaction from our university. All participants had previous experience with VR and HMDs.

3.3 Experimental Setups

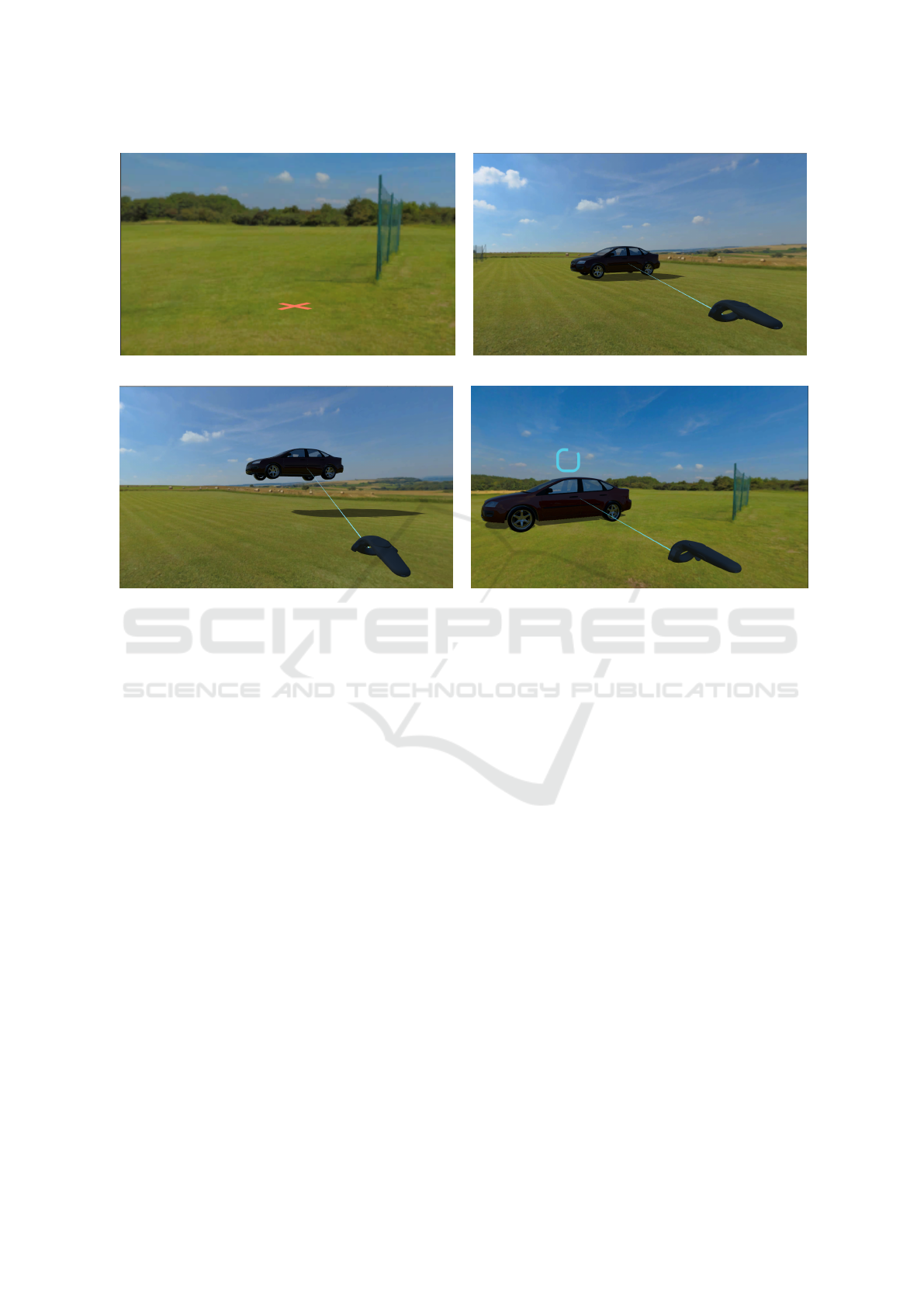

As illustrated in Figure 1, we generated a 360

◦

video-

based VE showing a wide grass field with a resolu-

tion of 8192x4096 pixels. The 360

◦

video-based VE

was rendered with the Unity Engine on a worksta-

tion, which has an Intel Core i7-4930K CPU with

3.40 GHz, an NVidia GeForce GTX 1080 Ti GPU

and a 16GB main memory. The rendered scenario

was displayed on a HTC Vive HMD with a resolu-

tion of 1080×1200 pixels per eye. The diagonal field

of view is approximately 110

◦

and the refresh rate

is 90Hz. Participants used the HTC Vive controllers

for interaction during the tasks. The global illumi-

nation of virtual objects in the 360

◦

video-based VE

Effects of Global Illumination of Virtual Objects in 360

o

Mixed Reality

185

(a) (b)

(c) (d)

Figure 1: Screenshots of the task procedure with global illumination of virtual objects (images captured through HTC Vive

Pro HMD): (a) the target position was marked with a red cross on the ground; (b) the virtual car was picked up from its default

position using VR controllers; (c) the virtual car was being moved to the target position; (d) the placing operation was being

confirmed.

was implemented based on the algorithm proposed by

Rhee et al. (Rhee et al., 2017; Rhee et al., 2018),

which supported consistent lighting (extracted from

the 360

◦

videos), shadows (dropped on the manually

defined grass ground), and virtual reflections (on the

car’s specular surface). The results are illustrated in

Figure 1
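As a rough illustration of how a dominant light direction might be extracted from a 360° frame, the sketch below picks the brightest pixel of an equirectangular luminance image and converts it to a unit direction vector. This is a deliberately simplified stand-in and not the algorithm of Rhee et al.; the brightest-pixel heuristic, the axis convention (y up, -z forward), and the names `equirect_pixel_to_direction` and `estimate_light_direction` are all assumptions made for this example.

```python
import math


def equirect_pixel_to_direction(col, row, width, height):
    """Convert an equirectangular pixel (center) to a unit direction vector.

    Assumed convention (illustrative only): y is up, -z is forward,
    column 0 is the left edge and row 0 is the zenith.
    """
    u = (col + 0.5) / width
    v = (row + 0.5) / height
    longitude = (u - 0.5) * 2.0 * math.pi
    latitude = (0.5 - v) * math.pi
    x = math.cos(latitude) * math.sin(longitude)
    y = math.sin(latitude)
    z = -math.cos(latitude) * math.cos(longitude)
    return x, y, z


def estimate_light_direction(luminance):
    """Return the direction of the brightest pixel.

    `luminance` is a non-empty list of equally long rows of floats.
    A real system would threshold and average bright regions instead
    of trusting a single pixel; this is only a minimal heuristic.
    """
    height = len(luminance)
    width = len(luminance[0])
    best_row, best_col = max(
        ((r, c) for r in range(height) for c in range(width)),
        key=lambda rc: luminance[rc[0]][rc[1]],
    )
    return equirect_pixel_to_direction(best_col, best_row, width, height)


# Tiny synthetic frame with one bright spot high in the sky.
frame = [[0.0] * 8 for _ in range(4)]
frame[0][4] = 1.0
light_dir = estimate_light_direction(frame)
```

The resulting vector could then orient a directional light in the rendering engine, so that shading and shadows of the virtual car agree with the sun position visible in the video.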

3.4 Methods

During the user study, participants were required to stand in the center of the 360° video-based VE and move a virtual car from its default position (8 m away from participants on the right side) to the target position (marked as a red cross on the ground) using VR controllers (see Figure 1). The task was designed with three factors in a 2 × 5 × 2 within-subject design: (i) distance (2 levels: 12 m, 18 m), (ii) angle (5 levels: 0°, 45°, 90°, 135°, and 180° about the yaw axis of the human body), and (iii) global illumination effects (2 levels: with and without).

Prior to the user study, participants filled out a demographic questionnaire as well as Kennedy’s simulator sickness questionnaire (SSQ) (Kennedy et al., 1993), and performed some training trials to become familiar with the operation and process. Afterwards, participants registered with the experimental system using their IDs and continued with the formal part of the user study. Participants stood in the center of the 360° video-based VE and used VR controllers to move a virtual car from its default position to the target position as described above. The target position was determined by the corresponding combination of distance and angle, and was marked on the ground with a red cross from the beginning of each trial until the participant started the moving operation. Participants were allowed to rotate their body during the user study (if required) to finish the localization task. The different stages of this task are presented in Figure 1.

As explained above, the user study was a within-

subject design with 2 distances × 5 angles × 2 global

illumination effects = 20 conditions, and each con-

dition was repeated 6 times. Thus, every participant

needed to finish 20 conditions × 6 repetitions = 120

trials during the task. All the trials were mixed and

appeared in a randomized order to reduce the influ-

ence of participants’ ability to memorize the target lo-

cation on the localization results. For the entire user

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications

186

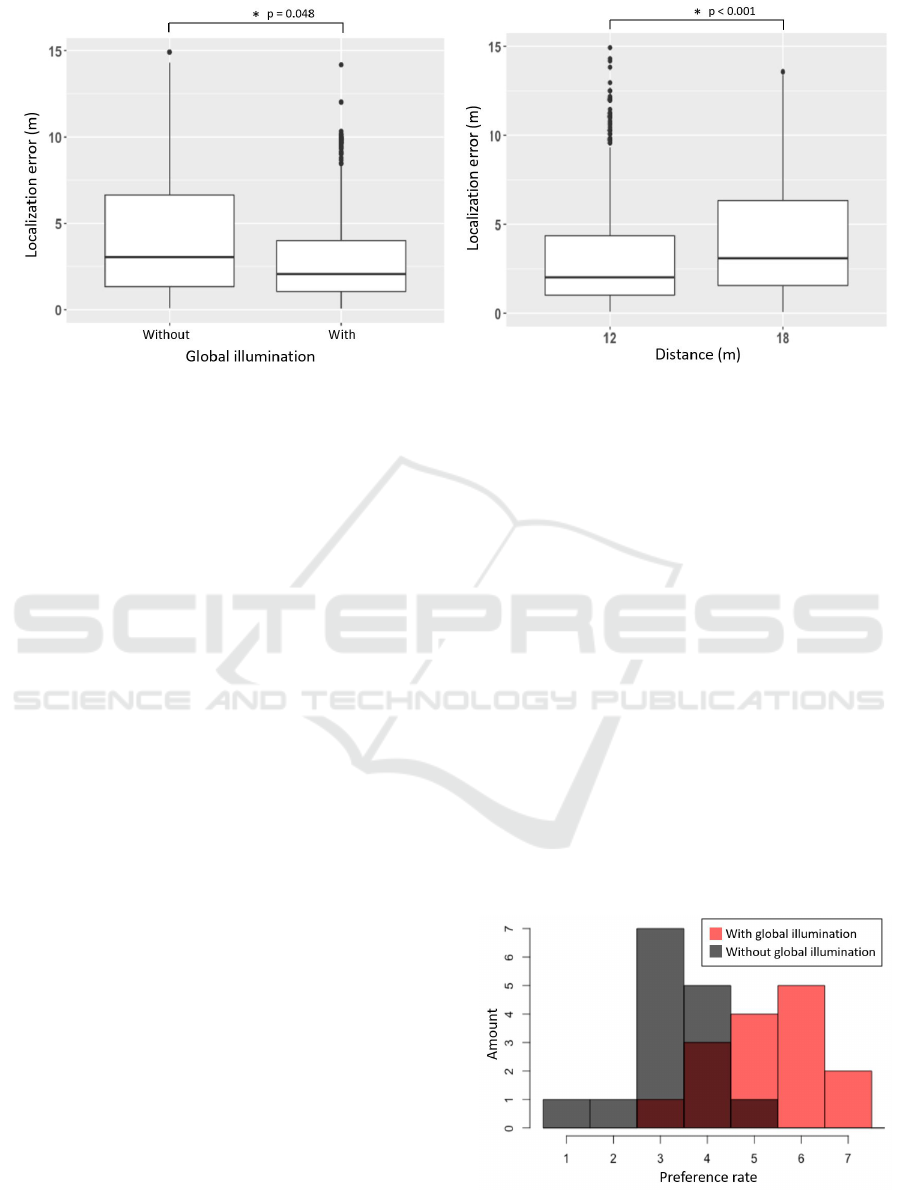

(a) (b)

Figure 2: Results of the localization task: (a) global illumination effects on the localization error; (b) distance on the localiza-

tion error.

study, 15 participants × 120 trials per participant =

1800 total trials were collected.
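The trial schedule described above can be sketched as follows. This is a minimal illustration of a fully crossed, repeated, and shuffled design, assuming a simple uniform shuffle per participant; the constant names and the `build_trials` helper are hypothetical and do not reflect the actual experiment software.

```python
import itertools
import random

# Factor levels as reported in the study design.
DISTANCES_M = (12, 18)
ANGLES_DEG = (0, 45, 90, 135, 180)
ILLUMINATION = (True, False)  # with / without global illumination
REPETITIONS = 6


def build_trials(seed=None):
    """Return one participant's trial list in randomized order.

    Each trial is a (distance_m, angle_deg, illumination) tuple.
    The uniform shuffle is an assumption; the paper only states
    that trials appeared in a randomized order.
    """
    conditions = list(itertools.product(DISTANCES_M, ANGLES_DEG, ILLUMINATION))
    trials = conditions * REPETITIONS
    rng = random.Random(seed)
    rng.shuffle(trials)
    return trials


trials = build_trials(seed=1)
print(len(set(trials)), len(trials))  # 20 120
```

Seeding the generator per participant (for example with the participant ID) would make each schedule reproducible while still differing between participants.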

We measured the localization error in every trial after the participants confirmed their placing operations. The localization error is the deviation between the target position and the location where the participant actually placed the car, i.e., the geometric center point of the placed virtual car on the horizontal plane. To guarantee that participants had a fixed duration to perceive and localize the virtual car for each tested target position, each trial was limited to 10 seconds.
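One plausible way to compute this measure is sketched below: the target is placed on the ground plane from its distance and yaw angle, and the error is taken as the Euclidean distance to the car's center on that plane. Both the Euclidean metric and the coordinate convention (participant at the origin, 0° along +z, angles increasing toward +x) are assumptions for illustration, as are the function names.

```python
import math


def target_position(distance_m, angle_deg):
    """Ground-plane (x, z) position of a target around the participant.

    Assumed convention (illustrative only): the participant stands at
    the origin, 0 degrees points along +z, and the yaw angle increases
    toward +x.
    """
    yaw = math.radians(angle_deg)
    return distance_m * math.sin(yaw), distance_m * math.cos(yaw)


def localization_error(target_xz, placed_xz):
    """Horizontal Euclidean distance between target and placed car center."""
    return math.hypot(placed_xz[0] - target_xz[0], placed_xz[1] - target_xz[1])


# Hypothetical trial: target at 12 m straight ahead, car placed 3 m to the
# side and 4 m too far.
target = target_position(12, 0)
print(localization_error(target, (3.0, 16.0)))  # 5.0
```

Ignoring the vertical axis matches the description of the error as being measured on the horizontal plane.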

Furthermore, after the user study, participants were asked to compare the global illumination effects (with vs. without) and rate their preference on Likert-type scales from 1 (do not like it at all) to 7 (like it very much). Moreover, participants completed the post-SSQ questionnaire as well as the igroup presence questionnaire (IPQ) (Schubert et al., 2001).

4 RESULTS

The experimental data was analyzed with an analysis of variance (ANOVA). Before the analysis, we checked the normality assumption for all factor levels using the Shapiro-Wilk test (Royston, 1982), which did not strongly indicate a normal distribution in some cases. However, as shown in previous research (Glass et al., 1972; Harwell et al., 1992; Lix et al., 1996), the ANOVA tolerates moderate deviations from normality.

Figure 2a shows the effect of global illumination of virtual objects on the localization error. The average localization error is 4.296 m (STD = 3.582) without global illumination, and 3.094 m (STD = 3.05) with global illumination. Thus, the average localization error with global illumination of virtual objects was 27.98% lower than without global illumination. The ANOVA results show a significant influence of global illumination of virtual objects on the localization error (F(1, 14) = 4.688, p = 0.048, η² = 0.130). These results show that the global illumination of virtual objects significantly helps users to improve their localization accuracy in 360° MR environments, which confirms hypothesis H1.
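The reported percentage follows directly from the two mean errors. The short check below recomputes it as a relative reduction with respect to the condition without global illumination; the helper name `relative_reduction` is introduced here only for illustration.

```python
def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (baseline - value) / baseline * 100.0


# Mean localization errors in meters, as reported in the text.
without_gi = 4.296
with_gi = 3.094
print(round(relative_reduction(without_gi, with_gi), 2))  # 27.98
```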

Figure 2b presents the effect of distance on the localization error. The average localization error is 3.179 m (STD = 3.156) when the distance to the target position is 12 m, and 4.21 m (STD = 3.516) when the target distance is 18 m. Thus, the average localization error at the distance of 12 m is 24.49% lower than at the distance of 18 m. The ANOVA result shows that the distance has a significant influence on the localization error (F(1, 14) = 38.58, p < 0.001, η² = 0.099). These results demonstrate that when localizing a virtual object in 360° MR environments, users usually have better localization ability at closer distances. Thus, hypothesis H2 was confirmed as well. However, no significant interaction effects between factors were found.

Figure 3: Results of preference rate on global illumination effects.

Table 1: Results of the IPQ.

| Subscale | Score (with global illumination) | STD (with global illumination) | Score (without global illumination) | STD (without global illumination) | Significance (p < .05) |
|---|---|---|---|---|---|
| Spatial presence | 3.57 | 1.07 | 3.05 | 1.05 | |
| Involvement | 3.32 | 1.47 | 2.93 | 1.53 | |
| Experienced realism | 2.58 | 0.85 | 1.56 | 0.91 | ∗ |

Figure 3 shows participants’ preference ratings for the global illumination effects. For conditions with global illumination, the average preference rating is 5.27 out of 7, which is significantly higher than the 3.27 for the conditions without global illumination (confirmed by a one-sided Wilcoxon test with p < 0.001). This result shows that participants have a better user experience when interacting with a 360° MR environment with global illumination of virtual objects than without. Thus, hypothesis H3 can be confirmed.

Furthermore, participants’ sense of presence in 360° MR environments was evaluated with the data from the IPQ. As presented in Table 1, the scores for spatial presence, involvement, and experienced realism are all higher for conditions with global illumination than for conditions without. We found a significant influence of global illumination on experienced realism (p = 0.002), but no significant influence was found for spatial presence and involvement. These results suggest that participants have a better user experience when interacting with a 360° MR environment with global illumination than without, especially with regard to experienced realism.

When participants were asked whether they had used any cognitive strategies during the task, four of them specifically reported that the global illumination and corresponding shadows of virtual objects were really helpful for estimating the placing location in 360° MR environments.

5 CONCLUSION

In this paper, we reported a user study in which we evaluated the effect of global illumination of virtual objects on spatial localization and user experience in 360° MR environments. The results indicate that the global illumination of virtual objects can significantly reduce the localization error and improve the user experience, especially with regard to experienced realism. Participants also preferred the experience with global illumination of virtual objects in 360° MR environments and rated it more highly. These results highlight the importance of using global illumination in 360° MR environments, in particular if correct spatial perception is important.

In future work, the extent to which each of the global illumination aspects (i.e., reflections, shadows, lighting) contributes to the overall spatial perception, sense of presence, and overall user experience will be evaluated in more detail. Furthermore, the effect of global illumination of virtual objects on other interactive operations (such as 3D object selection, 3D hand gesture interaction, etc.) in 360° MR environments will also be explored.

REFERENCES

Alatta, R. A. and Freewan, A. (2017). Investigating the ef-

fect of employing immersive virtual environment on

enhancing spatial perception within design process.

ArchNet-IJAR: International Journal of Architectural

Research, 11(2):219.

Bertel, T., Campbell, N. D., and Richardt, C. (2019). Mega-

parallax: Casual 360° panoramas with motion paral-

lax. IEEE transactions on visualization and computer

graphics, 25(5):1828–1835.

Bruder, G., Pusch, A., and Steinicke, F. (2012). Analyz-

ing effects of geometric rendering parameters on size

and distance estimation in on-axis stereographics. In

Proceedings of the ACM Symposium on Applied Per-

ception, pages 111–118.

Glass, G. V., Peckham, P. D., and Sanders, J. R. (1972).

Consequences of failure to meet assumptions under-

lying the fixed effects analyses of variance and covari-

ance. Review of educational research, 42(3):237–288.

Haller, M., Drab, S., and Hartmann, W. (2003). A real-time

shadow approach for an augmented reality application

using shadow volumes. In Proceedings of the ACM

symposium on Virtual reality software and technology,

pages 56–65.

Harwell, M. R., Rubinstein, E. N., Hayes, W. S., and Olds, C. C. (1992). Summarizing Monte Carlo results in methodological research: The one- and two-factor fixed effects ANOVA cases. Journal of educational statistics, 17(4):315–339.

Hertel, J. and Steinicke, F. (2021). Augmented reality for

maritime navigation assistance-egocentric depth per-

ception in large distance outdoor environments. In

2021 IEEE Virtual Reality and 3D User Interfaces

(VR), pages 122–130. IEEE.

Interrante, V., Ries, B., and Anderson, L. (2006). Distance

perception in immersive virtual environments, revis-

ited. In IEEE virtual reality conference (VR 2006),

pages 3–10. IEEE.

Jones, J. A., Swan, J. E., Singh, G., and Ellis, S. R. (2011).

Peripheral visual information and its effect on dis-

tance judgments in virtual and augmented environ-

ments. In Proceedings of the ACM SIGGRAPH Sym-

posium on Applied Perception in Graphics and Visu-

alization, pages 29–36.

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilien-

thal, M. G. (1993). Simulator sickness questionnaire:

An enhanced method for quantifying simulator sick-

ness. The international journal of aviation psychol-

ogy, 3(3):203–220.

Knapp, J. M. and Loomis, J. M. (2004). Limited field

of view of head-mounted displays is not the cause

of distance underestimation in virtual environments.

Presence: Teleoperators & Virtual Environments,

13(5):572–577.

Kruijff, E., Swan, J. E., and Feiner, S. (2010). Perceptual is-

sues in augmented reality revisited. In 2010 IEEE In-

ternational Symposium on Mixed and Augmented Re-

ality, pages 3–12. IEEE.

Lix, L. M., Keselman, J. C., and Keselman, H. J. (1996).

Consequences of assumption violations revisited: A

quantitative review of alternatives to the one-way

analysis of variance f test. Review of educational re-

search, 66(4):579–619.

Milgram, P. and Kishino, F. (1994). A taxonomy of mixed

reality visual displays. IEICE TRANSACTIONS on In-

formation and Systems, 77(12):1321–1329.

Müller, J., Rädle, R., and Reiterer, H. (2016). Virtual objects as spatial cues in collaborative mixed reality environments: How they shape communication behavior and user task load. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pages 1245–1249.

Noh, Z. and Sunar, M. S. (2009). A review of shadow tech-

niques in augmented reality. In 2009 Second Interna-

tional Conference on Machine Vision, pages 320–324.

IEEE.

Pessoa, S., Moura, G., Lima, J., Teichrieb, V., and Kelner,

J. (2010). Photorealistic rendering for augmented re-

ality: A global illumination and brdf solution. In 2010

IEEE Virtual Reality Conference (VR), pages 3–10.

IEEE.

Plumert, J. M., Kearney, J. K., Cremer, J. F., and Recker, K.

(2005). Distance perception in real and virtual envi-

ronments. ACM Transactions on Applied Perception

(TAP), 2(3):216–233.

Rhee, T., Chalmers, A., Hicks, M., Kumagai, K., Allen, B.,

Loh, I., Petikam, L., and Anjyo, K. (2018). Mr360

interactive: playing with digital creatures in 360°

videos. In SIGGRAPH Asia 2018 Virtual & Aug-

mented Reality, pages 1–2.

Rhee, T., Petikam, L., Allen, B., and Chalmers, A. (2017).

Mr360: Mixed reality rendering for 360 panoramic

videos. IEEE transactions on visualization and com-

puter graphics, 23(4):1379–1388.

Royston, J. P. (1982). An extension of shapiro and wilk’s

w test for normality to large samples. Journal of the

Royal Statistical Society: Series C (Applied Statis-

tics), 31(2):115–124.

Schubert, T., Friedmann, F., and Regenbrecht, H. (2001).

The experience of presence: Factor analytic insights.

Presence: Teleoperators & Virtual Environments,

10(3):266–281.

Steinicke, F. (2016). Being really virtual. Springer.

Steinicke, F., Bruder, G., Hinrichs, K., Lappe, M., Ries, B.,

and Interrante, V. (2009). Transitional environments

enhance distance perception in immersive virtual re-

ality systems. In Proceedings of the 6th Symposium

on Applied Perception in Graphics and Visualization,

pages 19–26.

Steinicke, F., Hinrichs, K., and Ropinski, T. (2005). Virtual

reflections and virtual shadows in mixed reality envi-

ronments. In IFIP Conference on Human-Computer

Interaction, pages 1018–1021. Springer.

Zeil, J. (2000). Depth cues, behavioural context, and nat-

ural illumination: some potential limitations of video

playback techniques. Acta Ethologica, 3(1):39–48.

Zhang, J., Langbehn, E., Krupke, D., Katzakis, N., and

Steinicke, F. (2018). A 360 video-based robot plat-

form for telepresent redirected walking. In Proceed-

ings of the 1st International Workshop on Virtual,

Augmented, and Mixed Reality for Human-Robot In-

teractions (VAM-HRI), pages 58–62.
