Improving Semantic Image Segmentation via Label Fusion in Semantically Textured Meshes

Florian Fervers, Timo Breuer, Gregor Stachowiak, Sebastian Bullinger, Christoph Bodensteiner and Michael Arens

Fraunhofer IOSB, 76275 Ettlingen, Germany

Keywords:

Semantic Segmentation, Mesh Reconstruction, Label Fusion.

Abstract:

Models for semantic segmentation require a large amount of hand-labeled training data, which is costly and time-consuming to produce. To address this, we present a label fusion framework that is capable of improving semantic pixel labels of video sequences in an unsupervised manner. We make use of a 3D mesh representation of the environment and fuse the predictions of different frames into a consistent representation using semantic mesh textures. Rendering the semantic mesh using the original intrinsic and extrinsic camera parameters yields a set of improved semantic segmentation images. Due to our optimized CUDA implementation, we are able to exploit the entire c-dimensional probability distribution of annotations over c classes in an uncertainty-aware manner. We evaluate our method on the Scannet dataset, where we improve annotations produced by the state-of-the-art segmentation network ESANet from 52.05% to 58.25% pixel accuracy. We publish the source code of our framework online to foster future research in this area (https://github.com/fferflo/semantic-meshes). To the best of our knowledge, this is the first publicly available label fusion framework for semantic image segmentation based on meshes with semantic textures.

1 INTRODUCTION

Semantic image segmentation plays an important role in computer vision tasks by providing a high-level understanding of observed scenes. However, segmentation quality is limited by the quality and quantity of the available training data, which requires a lot of time-consuming manual annotation work. For example, the popular Cityscapes dataset of traffic scenes reports upwards of 90 minutes per image for pixel-wise annotations (Cordts et al., 2016).

Since labeled datasets are rare and often cover only narrow use cases, we consider the unsupervised enhancement of predicted image segmentations of video sequences by defining consistency constraints that reflect temporal and spatial structure properties of the captured scenes.

The majority of recent works in this area have focused on methods that establish short-term pixel correspondences, for example via optical flow (Gadde et al., 2017; Mustikovela et al., 2016; Nilsson and Sminchisescu, 2018), patch match (Badrinarayanan et al., 2010; Budvytis et al., 2017), learned correspondences (Zhu et al., 2019) or depth and relative camera pose of subsequent frames (Ma et al., 2017; Stekovic et al., 2020). In this work, we explore a different approach by explicitly modeling the environment as a 3D semantically textured mesh, which serves as a long-term temporal and spatial consistency constraint.

Figure 1: Semantically textured meshes of indoor and outdoor scenes produced by our label fusion framework and visualized with MeshLab (Cignoni et al., 2008).

The main contributions of this work are as follows:

Fervers, F., Breuer, T., Stachowiak, G., Bullinger, S., Bodensteiner, C. and Arens, M.

Improving Semantic Image Segmentation via Label Fusion in Semantically Textured Meshes.

DOI: 10.5220/0010841800003124

In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 5: VISAPP, pages 509-516

ISBN: 978-989-758-555-5; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


(a) Color (b) Ground truth (c) Network prediction (d) Fused annotation

Figure 2: Results of the label fusion on the Scannet dataset. The fusion yields the largest improvement when an object is seen from many different perspectives. Errors in the reconstructed mesh result in artifacts in the rendered annotations. The figure is best viewed in color.

(1) We present a label fusion framework based on environment mesh reconstructions that is capable of improving the quality of semantic pixel-level labels in an unsupervised manner. In contrast to previous works, we show that the proposed method improves segmentation results even without requiring depth sensors for the mesh reconstruction.

(2) We introduce a novel pixel-weighting scheme that dynamically adjusts the contribution of individual frames towards the final annotations. This yields a larger improvement in pixel accuracy than the equal per-pixel weighting of previous label fusion works.

(3) Our framework uses a custom renderer and texture parametrization to optimize GPU memory utilization during the label fusion process. This allows us to exploit the entire c-dimensional probability distribution of annotations over c classes in an uncertainty-aware manner. We implement the entire framework in CUDA (Nickolls et al., 2008), since the texture mapping techniques of classical rendering pipelines such as OpenGL (Woo et al., 1999) that are used in previous works are not suited for this task.

(4) We make the code of our framework publicly available, including all evaluation scripts used for this paper.

2 RELATED WORK

Short-term correspondences between pixels of subsequent video frames have been widely used to improve semantic segmentation (Gadde et al., 2017; Mustikovela et al., 2016; Nilsson and Sminchisescu, 2018; Badrinarayanan et al., 2010; Budvytis et al., 2017; Zhu et al., 2019; Ma et al., 2017; Stekovic et al., 2020). For example, Zhu et al. (2019) and Mustikovela et al. (2016) propagate labels from hand-annotated video frames to adjacent (unlabeled) frames as data augmentation for training a segmentation model. However, these methods are limited to short-term correspondences, since label accuracy decreases with each propagation step (Mustikovela et al., 2016).

To establish long-term correspondences, most works explicitly represent the environment as a three-dimensional model and find corresponding pixels via their model projections. Voxel maps (Kundu et al., 2014; Stückler et al., 2015; Li et al., 2017; Grinvald et al., 2019; Rosinol et al., 2020; Jeon et al., 2018; Pham et al., 2019) have been used for this purpose, but suffer from discretization and high memory requirements. Point clouds (Floros and Leibe, 2012; Hermans et al., 2014; Li and Belaroussi, 2016; Tateno et al., 2017), on the other hand, accurately represent temporal but not spatial correspondences and only model the environment in a sparse or semi-dense way. McCormac et al. (2017) use surfels as a dense representation of the environment and model the class probability distribution of each surfel to fuse the two-dimensional network predictions from different viewpoints. Similar to our work, Rosu et al. (2020) use a semantically enriched mesh representation of the environment to fuse the predictions of individual frames. In contrast to their work, we show that our framework is able to improve segmentations even with an off-the-shelf image-based reconstruction pipeline. Additionally, Rosu et al. (2020) use OpenGL for rendering the semantic mesh and therefore have to resort to a classical texture mapping technique that maps the entire mesh onto a single texture. We implement our framework in CUDA, which enables the use of a custom texture parametrization and avoids expensive paging operations when interacting with OpenGL textures. While Rosu et al. (2020) only use the maximum of the probability distribution, we are able to exploit the entire c-dimensional probability distribution of pixel labels over c classes.

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications

3 METHOD

Our method is designed to fuse temporally and spatially inconsistent pixel predictions, e.g. of a segmentation network, into a consistent representation in an uncertainty-aware manner. For this purpose, we first establish correspondences between image pixels that are projections of the same three-dimensional environment primitive by using the intrinsic and extrinsic camera parameters. The predicted class probability distributions of corresponding pixels are then aggregated, resulting in a single probability distribution for the primitive. Finally, the primitive's fused annotation is rendered onto all corresponding pixels to produce consistent 2D annotation images.

3.1 Environment Mesh

To determine the three-dimensional environment primitives, a mesh of the scene captured by a set of images is reconstructed using off-the-shelf reconstruction frameworks such as BundleFusion (Dai et al., 2017b) if depth information is available, or Colmap (Schönberger and Frahm, 2016; Schönberger et al., 2016b) when only RGB input is available. This recovers the static parts of the environment as well as the intrinsic and extrinsic camera parameters of individual frames. Pixels are then defined as correspondences if they stem from projections of the same primitive element in the environment mesh.

Since the reconstruction step does not explicitly account for semantics, the geometric borders of mesh triangles and the semantic borders of real-world objects are not guaranteed to coincide. Individual triangles might therefore span multiple semantic objects and lead to incorrect pixel correspondences. This problem can be alleviated by increasing the resolution of the mesh to include sub-triangle primitive elements with a smaller spatial extent. This reduces the total number of pixel correspondences during the label fusion, but at the same time decreases the proportion of incorrect correspondences. To increase the resolution while preserving the geometry of the mesh, we introduce semantic textures that further subdivide a triangle into smaller texture elements called texels (Glassner, 1989).

Let $t \in T$ be a triangle in mesh $T$ and $X_t$ the set of texels on the triangle. We choose a uv representation for texture coordinates on the triangle (Heckbert, 1989) with the uv coordinates $(0, 0)$, $(0, 1)$ and $(1, 0)$ for the three vertices, as well as $u \geq 0$, $v \geq 0$ and $u + v < 1$ for any point on the triangle. The uv space is discretized into $s \in \mathbb{N}$ steps per dimension, yielding a total of $|X_t| = \frac{s^2 + s}{2}$ texels (cf. Figure 3). A texel $x \in X_t$ covers the subspace $[u_x, u_x + \frac{1}{s}) \times [v_x, v_x + \frac{1}{s})$ of the triangle's uv space. To reduce the skew of texel shapes, we also choose the vertex located at the triangle's interior angle closest to a right angle as the origin of the uv space.
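The texel layout can be made concrete with a short Python sketch that enumerates the texels of one triangle. It assumes, following the definitions above, that texel $(i, j)$ is the cell $[i/s, (i+1)/s) \times [j/s, (j+1)/s)$ and that a cell belongs to the triangle when it overlaps $u + v < 1$; the function names are hypothetical and not part of the released framework.

```python
from __future__ import annotations


def triangle_texels(s: int) -> list[tuple[int, int]]:
    """Cell indices (i, j) of all texels on one triangle for s uv steps.

    Texel (i, j) covers [i/s, (i+1)/s) x [j/s, (j+1)/s). It overlaps the
    triangle u + v < 1 exactly when its lower-left corner (i/s, j/s) does,
    i.e. when i + j <= s - 1.
    """
    if s < 1:
        raise ValueError("s must be a positive integer")
    return [(i, j) for i in range(s) for j in range(s - i)]


def texel_count(s: int) -> int:
    """Closed form |X_t| = (s^2 + s) / 2."""
    return (s * s + s) // 2


# Figure 3 uses s = 6: row i = 0 holds 6 texels, row i = 5 holds a single one.
texels = triangle_texels(6)
```

Summing the row lengths $s, s - 1, \dots, 1$ gives $\frac{s(s+1)}{2}$, which is where the closed form for $|X_t|$ comes from.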

We choose $s = \max(1, \lceil \gamma \sqrt{a_t} \rceil)$ based on the worst-case frame, i.e. the frame in which the triangle occupies the largest number of pixels $a_t$. We take the square root of the area $a_t$ so that $|X_t| \in O(a_t)$. The variable $\gamma$ serves as a tunable parameter defining the resolution of the mesh, such that for a given triangle larger values of $\gamma$ lead to more texels and smaller values to fewer texels. At $\gamma = 0$, triangles are not subdivided into texels. This formulation of texture resolution aims to be agnostic w.r.t. the granularity of the input mesh: for a given $\gamma > 0$, texels will roughly encompass the same number of pixels on the worst-case frame regardless of the triangle size.
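A minimal sketch of the resolution rule, assuming the per-frame pixel counts of a triangle are already known (e.g. from rendering it into every frame); the input format and the function names are illustrative assumptions.

```python
from __future__ import annotations

import math


def texel_steps(gamma: float, pixel_areas: list[int]) -> int:
    """Number of uv steps s = max(1, ceil(gamma * sqrt(a_t))) for one triangle.

    gamma       -- texel resolution; gamma = 0 disables subdivision (s = 1)
    pixel_areas -- pixels covered by the triangle in each frame (assumed input)
    """
    a_t = max(pixel_areas, default=0)  # worst-case frame: most covered pixels
    return max(1, math.ceil(gamma * math.sqrt(a_t)))


def texel_count(s: int) -> int:
    """|X_t| = (s^2 + s) / 2 texels for s steps."""
    return (s * s + s) // 2


# A triangle covering at most 1000 pixels in any frame, at gamma = 0.2:
s = texel_steps(0.2, [120, 1000, 640])  # ceil(0.2 * 31.62...) = 7
n = texel_count(s)                      # 28 texels, about 36 pixels each
```

For large $\gamma \sqrt{a_t}$, a texel covers about $a_t / (\gamma^2 a_t / 2) = 2 / \gamma^2$ pixels, roughly 50 at $\gamma = 0.2$, independent of the triangle size; the ceiling makes small triangles deviate from this estimate.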

Let $K$ further denote the set of all pixels in all images. For a pixel $k \in K$, let $t_k$ denote the projected triangle and $(u_k, v_k)$ the corresponding uv coordinates. We define $K_x \subset K$ as the set of pixels that are projected onto a given texel $x$ according to (1), and $x_k$ as the texel that pixel $k$ is projected onto, such that $k \in K_{x_k}$.


Figure 3: Example texture mapping of a single triangle. The uv dimensions are discretized into $s = 6$ steps each, yielding $\frac{s^2 + s}{2} = 21$ texels.

$$K_x = \left\{ k \mid t_k = t_x \wedge (u_k, v_k) \in \left[u_x, u_x + \tfrac{1}{s}\right) \times \left[v_x, v_x + \tfrac{1}{s}\right) \right\} \tag{1}$$
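A minimal sketch of (1): given pixels that have already been rendered to a triangle $t_k$ with uv coordinates $(u_k, v_k)$, each pixel is assigned to the texel $x_k$ whose cell contains its uv coordinates, and pixels sharing a texel form $K_x$. The tuple input format and the texel key $(t, i, j)$ with $i = \lfloor s \cdot u_k \rfloor$ and $j = \lfloor s \cdot v_k \rfloor$ are illustrative assumptions, not the framework's data structures.

```python
from __future__ import annotations

import math
from collections import defaultdict


def group_pixels_by_texel(pixels, steps):
    """Build the sets K_x of Eq. (1).

    pixels -- iterable of (pixel_id, t_k, u_k, v_k) with u_k, v_k >= 0 and u_k + v_k < 1
    steps  -- dict mapping each triangle id t to its number of uv steps s
    Returns a dict mapping the texel key x = (t, i, j) to the list of pixel ids in K_x,
    where texel (i, j) covers [i/s, (i+1)/s) x [j/s, (j+1)/s).
    """
    groups = defaultdict(list)
    for pixel_id, t, u, v in pixels:
        s = steps[t]
        x_k = (t, math.floor(s * u), math.floor(s * v))  # texel hit by this pixel
        groups[x_k].append(pixel_id)
    return dict(groups)


pixels = [("a", 0, 0.10, 0.10), ("b", 0, 0.20, 0.05), ("c", 0, 0.60, 0.10), ("d", 1, 0.90, 0.05)]
K = group_pixels_by_texel(pixels, steps={0: 2, 1: 1})
# K == {(0, 0, 0): ["a", "b"], (0, 1, 0): ["c"], (1, 0, 0): ["d"]}
```

Triangle 1 uses a single step ($s = 1$), so every pixel on it falls into its only texel, which corresponds to the case $\gamma = 0$.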

3.2 Label Fusion

In general, a single texel is projected onto a set of pixels in multiple images, where each pixel $k$ is annotated with a probability distribution $p_k \in \mathbb{R}^c$ over $c$ possible classes. Each pixel prediction represents an observation of the underlying texel class. Potentially conflicting pixel predictions are fused in an uncertainty-aware manner into a single probability distribution $p_x \in \mathbb{R}^c$ that represents the label of the texel $x$.

3.2.1 Aggregation

Let $f : \mathcal{P}(K) \to \mathbb{R}^c$ represent an aggregation function that maps a set of pixels onto a fused probability distribution. The annotation $p_x$ of a texel $x$ is then defined as

$$p_x = f(K_x) \tag{2}$$

and its argmax defines the texel's class.

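Previous work on mesh-based label fusion (Rosu et al., 2020) uses only the maximum of the probability distribution in the aggregator function for performance reasons, as shown in (3).

$$f_{\text{maxsum}}(K) = \operatorname{norm}\left( \sum_{k \in K} \begin{pmatrix} g_1(p_k) \\ \vdots \\ g_c(p_k) \end{pmatrix} \right) \tag{3}$$

with

$$g_i(p) = \begin{cases} p_i, & \text{if } p_i = \max p \\ 0, & \text{if } p_i < \max p \end{cases} \qquad \text{and} \qquad \operatorname{norm}(p) = \frac{p}{\lVert p \rVert_1}$$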
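A plain-Python sketch of (3), assuming each pixel distribution is a list of $c$ non-negative floats. It shows how $g$ discards everything but the maximum of each pixel's distribution before the sum; ties for the maximum keep all tied entries, as the definition of $g_i$ implies. The function names are illustrative.

```python
from __future__ import annotations


def norm(p: list[float]) -> list[float]:
    """Divide by the L1 norm so that the entries sum to 1."""
    total = sum(p)
    if total == 0.0:
        raise ValueError("cannot normalize an all-zero vector")
    return [x / total for x in p]


def g(p: list[float]) -> list[float]:
    """g_1..g_c: keep the entries equal to max(p), zero out all others."""
    m = max(p)
    return [x if x == m else 0.0 for x in p]


def f_maxsum(dists: list[list[float]]) -> list[float]:
    """Eq. (3): sum the max-only vectors g(p_k) over all pixels, then normalize."""
    acc = [0.0] * len(dists[0])
    for p in dists:
        for i, x in enumerate(g(p)):
            acc[i] += x
    return norm(acc)


# g keeps [0.5, 0, 0], [0, 0.6, 0], [0.7, 0, 0]; the sum [1.2, 0.6, 0] normalizes
# to about [0.667, 0.333, 0.0].
fused = f_maxsum([[0.5, 0.3, 0.2], [0.4, 0.6, 0.0], [0.7, 0.2, 0.1]])
```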

Due to our optimized CUDA implementation and custom texture parametrization, we are able to exploit the entire c-dimensional probability distribution in the label fusion. This allows us to utilize Bayesian updating (McCormac et al., 2017; Rosinol et al., 2020) in our aggregation function as shown in (4), which is defined by an element-wise multiplication of probabilities.

$$f_{\text{mul}}(K) = \operatorname{norm}\left( \prod_{k \in K} p_k \right) = \operatorname{norm}\left( \prod_{k \in K} \begin{pmatrix} p_{k,1} \\ \vdots \\ p_{k,c} \end{pmatrix} \right) \tag{4}$$

We also evaluate the average pooling aggregator (Tateno et al., 2017) shown in (5), which uses summation of probabilities similar to Rosu et al. (2020), but does not discard parts of the probability distribution.

$$f_{\text{sum}}(K) = \operatorname{norm}\left( \sum_{k \in K} p_k \right) = \operatorname{norm}\left( \sum_{k \in K} \begin{pmatrix} p_{k,1} \\ \vdots \\ p_{k,c} \end{pmatrix} \right) \tag{5}$$

All aggregation results are normalized such that $\lVert p \rVert_1 = 1$ to represent a proper probability distribution.
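To illustrate how the two full-distribution aggregators can disagree, the sketch below implements (5) and, for comparison, a direct version of (4) on a made-up texel observed by six pixels: five mildly favour class 0 and one strongly favours class 1. Averaging follows the majority, while the product is dominated by the single confident observation. The numbers are invented for illustration only.

```python
from __future__ import annotations

import math


def norm(p: list[float]) -> list[float]:
    """Normalize non-negative entries to sum to 1."""
    total = sum(p)
    return [x / total for x in p]


def f_sum(dists: list[list[float]]) -> list[float]:
    """Eq. (5): average pooling, i.e. the normalized sum of the distributions."""
    return norm([sum(col) for col in zip(*dists)])


def f_mul_direct(dists: list[list[float]]) -> list[float]:
    """Eq. (4) without log-space evaluation; adequate for a handful of pixels."""
    return norm([math.prod(col) for col in zip(*dists)])


def argmax(p: list[float]) -> int:
    return max(range(len(p)), key=p.__getitem__)


# Hypothetical texel: five pixels mildly prefer class 0, one strongly prefers class 1.
observations = [[0.6, 0.4]] * 5 + [[0.01, 0.99]]
fused_sum = f_sum(observations)         # roughly [0.502, 0.498] -> class 0
fused_mul = f_mul_direct(observations)  # roughly [0.071, 0.929] -> class 1
```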

Once the pixels of all frames have been aggregated, the texels of the mesh store a consistent representation of the semantics of the environment. The mesh can then be rendered from the reconstructed camera poses to produce new consistent 2D annotation images corresponding to the original video frames.

Assuming non-erroneous pixel correspondences, the rendered annotation images are expected to have more accurate pixel labels on average than the original network prediction due to the probabilistic fusion. In practice, this benefit is offset by the negative impact of erroneous pixel correspondences, which stem from inaccurate mesh reconstructions and overly coarse mesh primitives.

3.2.2 Pixel Weighting

Previous works (McCormac et al., 2017; Rosu et al., 2020) perform label fusion under the assumption that individual pixel predictions of the segmentation network are independent and identically distributed (i.i.d.) observations of the underlying texel class. Each pixel is therefore given an equal weight towards the final label of its corresponding texel. This implicitly gives higher weight to frames in which the texel occupies more pixels.

To compensate for this effect, we propose a novel weighting scheme based on the assumption that individual images rather than pixels are i.i.d. observations of the underlying texel class. Each image is therefore given an equal weight w.r.t. the final label of a texel. This reduces the implicit overweighting of highly correlated pixel predictions and thereby improves the fused annotation. Our evaluation in Section 4.3 supports this decision.

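The distinction between i.i.d. pixels and i.i.d. images can be realized by extending (3)-(5) with a weight factor $w_k$ per pixel $k$, as shown in (6)-(8), where the power in (7) is applied element-wise.

$$f_{\text{w-maxsum}}(K) = \operatorname{norm}\left( \sum_{k \in K} w_k \begin{pmatrix} g_1(p_k) \\ \vdots \\ g_c(p_k) \end{pmatrix} \right) \tag{6}$$

$$f_{\text{w-mul}}(K) = \operatorname{norm}\left( \prod_{k \in K} p_k^{w_k} \right) \tag{7}$$

$$f_{\text{w-sum}}(K) = \operatorname{norm}\left( \sum_{k \in K} w_k \, p_k \right) \tag{8}$$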

The aggregator function thus interprets pixel $k$ as having been observed $w_k$ times. Weighting pixels equally is achieved with

$$w_k = w_k^{(P)} = 1 \tag{9}$$

and weighting images equally is achieved with

$$w_k = w_k^{(I)} = \frac{1}{|K_i \cap K_{x_k}|} \tag{10}$$

where $K_i \subset K$ is the set of pixels in image $i$ with $k \in K_i$.
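The sketch below computes the image-equal weights $w^{(I)}$ of (10) by counting, per image, how many pixels fall on the same texel, and plugs them into the weighted product (7) in log space. Observations are given as hypothetical `(image_id, texel_key, p_k)` tuples; this input format and the function names are assumptions for illustration.

```python
from __future__ import annotations

import math
from collections import Counter


def image_weights(observations):
    """w_k^(I) = 1 / |K_i ∩ K_{x_k}|, Eq. (10).

    observations -- list of (image_id, texel_key, p_k) tuples, one per pixel k
    """
    pixels_per_image_texel = Counter((img, x) for img, x, _ in observations)
    return [1.0 / pixels_per_image_texel[(img, x)] for img, x, _ in observations]


def f_w_mul(dists, weights):
    """Eq. (7): normalized element-wise product of p_k ** w_k, as a weighted log-sum.

    Assumes strictly positive probabilities (math.log(0) raises an error).
    """
    c = len(dists[0])
    log_acc = [0.0] * c
    for p, w in zip(dists, weights):
        for i in range(c):
            log_acc[i] += w * math.log(p[i])
    m = max(log_acc)
    exps = [math.exp(x - m) for x in log_acc]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical texel "x": image A sees it with three pixels, image B with one.
obs = [("A", "x", [0.8, 0.2])] * 3 + [("B", "x", [0.1, 0.9])]
w_I = image_weights(obs)                    # [1/3, 1/3, 1/3, 1.0]
dists = [p for _, _, p in obs]
fused_I = f_w_mul(dists, w_I)               # roughly [0.308, 0.692]
fused_P = f_w_mul(dists, [1.0] * len(obs))  # roughly [0.877, 0.123]
```

With $w^{(P)}$ the three correlated pixels of image A outvote image B; with $w^{(I)}$ each image contributes a total weight of 1, so the fused label flips to the class favoured by B.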

3.3 Implementation

Our framework is divided into a module for rendering the environment mesh and a module for the texel-wise aggregation of probability distributions.

3.3.1 Renderer

The renderer takes as input a triangle mesh and the extrinsic and intrinsic camera parameters of a given frame and projects all triangles onto the camera plane. To handle occlusion, we maintain depth information in a z-buffer.

For each pixel $k$ and its texture coordinates $(u_k, v_k)$ on the projected triangle $t$, we compute an identifier $id_x \in \{0, \dots, |X_t| - 1\}$ for the corresponding texel $x$ as shown in (11).

$$id_x = \frac{\lfloor s \cdot u_k \rfloor^2 + \lfloor s \cdot u_k \rfloor}{2} + \lfloor s \cdot v_k \rfloor \tag{11}$$

We store both a triangle identifier $id_t$ and the texel identifier $id_x$ per pixel. This rendered image of identifiers is passed to the aggregator module.

We employ CUDA's data parallelism over the set of triangles to speed up the rendering process.
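Read literally with the uv convention of Section 3.1 ($u + v < 1$), the row-major form of (11) can assign the same identifier to two texels: for $s = 2$, the texels with cell indices $(0, 1)$ and $(1, 0)$ both map to 1. The sketch below therefore uses a diagonal-major variant, $d = \lfloor s \cdot u \rfloor + \lfloor s \cdot v \rfloor$ and $id = d(d + 1)/2 + \lfloor s \cdot v \rfloor$, which is one collision-free enumeration of the $\frac{s^2 + s}{2}$ texels. This variant is an assumption for illustration and may differ from what the released CUDA renderer does.

```python
from __future__ import annotations

import math


def texel_id(u: float, v: float, s: int) -> int:
    """Collision-free texel identifier in {0, ..., (s*s + s)//2 - 1}.

    Assumes u >= 0, v >= 0 and u + v < 1 (the uv convention of Section 3.1).
    Texels are enumerated along anti-diagonals d = i + j, with i = floor(s*u)
    and j = floor(s*v); diagonal d holds d + 1 texels.
    """
    i, j = math.floor(s * u), math.floor(s * v)
    d = i + j  # 0 <= d <= s - 1 because u + v < 1
    return d * (d + 1) // 2 + j


def texel_id_row_major(u: float, v: float, s: int) -> int:
    """Literal reading of Eq. (11), kept for comparison."""
    i, j = math.floor(s * u), math.floor(s * v)
    return (i * i + i) // 2 + j
```

Because every anti-diagonal $d \leq s - 1$ contributes exactly $d + 1$ consecutive identifiers, the largest identifier is $\frac{s^2 + s}{2} - 1$, matching the range stated above.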

3.3.2 Aggregator

The aggregator fuses the pixel predictions of all frames into a consistent representation on texel level. We store the probability distributions of all texels in an array $P \in \mathbb{R}^{n_x \times c}$, where $n_x = \sum_{t \in T} |X_t|$ is the total number of texels in the mesh. The texels of all triangles are stacked along the first dimension of the array. Since triangles can have different numbers of texels, we define the triangle identifier $id_t$ as its offset into the array along the first dimension for quick access. The pair of triangle and texel identifiers produced by the renderer module can therefore be used to find the corresponding row in $P$, as shown in (12).

$$p_x = P_l \quad \text{with} \quad l = id_{t_x} + id_x \tag{12}$$
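A sketch of this flat layout, using plain Python lists in place of the GPU array: the triangle offsets $id_t$ are the exclusive prefix sums of the per-triangle texel counts $|X_t|$, so (12) reduces to one addition. The helper names are hypothetical.

```python
from __future__ import annotations

from itertools import accumulate


def triangle_offsets(texel_counts: list[int]) -> list[int]:
    """id_t for every triangle: exclusive prefix sum of the texel counts |X_t|."""
    return list(accumulate(texel_counts, initial=0))[:-1]


def allocate_texel_array(texel_counts: list[int], c: int) -> list[list[float]]:
    """Zero-initialized P with n_x = sum_t |X_t| rows and c columns."""
    n_x = sum(texel_counts)
    return [[0.0] * c for _ in range(n_x)]


def row_index(offsets: list[int], triangle: int, texel_id: int) -> int:
    """Row l = id_t + id_x of P that holds the given texel, Eq. (12)."""
    return offsets[triangle] + texel_id


# Three triangles with s = 1, 2 and 3 steps, i.e. 1, 3 and 6 texels.
counts = [1, 3, 6]
offsets = triangle_offsets(counts)      # [0, 1, 4]
P = allocate_texel_array(counts, c=40)  # 10 rows, 40 classes
last = row_index(offsets, 2, 5)         # 9: last texel of the last triangle
```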

For each frame, we first render the mesh and predict semantic pixel-wise labels using the 2D segmentation model. For every pixel in the frame, the corresponding row in $P$ is then updated as defined by the aggregator function $f$ using the pixel's predicted probability distribution. The aggregator function $f$ is defined to be permutation-invariant to achieve deterministic results up to floating-point inaccuracies.
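The per-frame loop can be sketched as follows for the weighted Bayesian aggregator (7) with weights $w^{(I)}$: each frame adds its weighted log-probabilities to the texel rows of $P$, and normalization happens once at the end. Because the update is a sum, processing the frames in another order gives the same result up to floating-point rounding, mirroring the permutation invariance described above. The frame format (a list of `(l, p_k)` pairs per frame, i.e. already rendered and predicted) is an assumption.

```python
from __future__ import annotations

import math
from collections import Counter


def fuse_frames(frames, n_x: int, c: int):
    """Accumulate weighted log-domain evidence per texel row over all frames.

    frames -- iterable of frames; each frame is a list of (l, p_k) pairs, where
              l is the row of P hit by pixel k (Eq. (12)) and p_k its prediction
              (strictly positive probabilities assumed).
    Returns the normalized fused distribution per row (None for unobserved texels).
    """
    log_p = [[0.0] * c for _ in range(n_x)]
    seen = [False] * n_x
    for frame in frames:
        pixels_per_texel = Counter(l for l, _ in frame)  # |K_i ∩ K_x| in this frame
        for l, p in frame:
            w = 1.0 / pixels_per_texel[l]  # w_k^(I), Eq. (10)
            seen[l] = True
            for i in range(c):
                log_p[l][i] += w * math.log(p[i])
    fused = []
    for row, observed in zip(log_p, seen):
        if not observed:
            fused.append(None)
            continue
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        fused.append([e / total for e in exps])
    return fused


frames = [[(0, [0.8, 0.2]), (0, [0.8, 0.2]), (1, [0.3, 0.7])], [(0, [0.1, 0.9])]]
forward = fuse_frames(frames, n_x=3, c=2)
backward = fuse_frames(list(reversed(frames)), n_x=3, c=2)
```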

4 EVALUATION

4.1 Data and Architecture

Evaluating our method requires densely labeled video sequences, which only few publicly available datasets contain. This is because densely labeling subsequent frames, whose labels are largely redundant, has a low cost-benefit ratio for other tasks such as single-image segmentation. We therefore evaluate our method on the Scannet v2 dataset (Dai et al., 2017a) of indoor scenes, which contains densely labeled video data and corresponding meshes reconstructed with depth sensor data. We use the training split to tune the hyper-parameters of our method and report the final results on the validation split. We also create dense reconstructions of the first 20 scenes using Colmap and Delaunay triangulation (Schönberger et al., 2016a; Labatut et al., 2009) to evaluate the performance on meshes created using a multi-view stereo approach.

For the semantic segmentation, we choose a set of 40 classes following the definition of the NYU Depth v2 dataset (Nathan Silberman and Fergus, 2012). As the segmentation model, we use ESANet (Seichter et al., 2020) trained on NYU Depth v2.


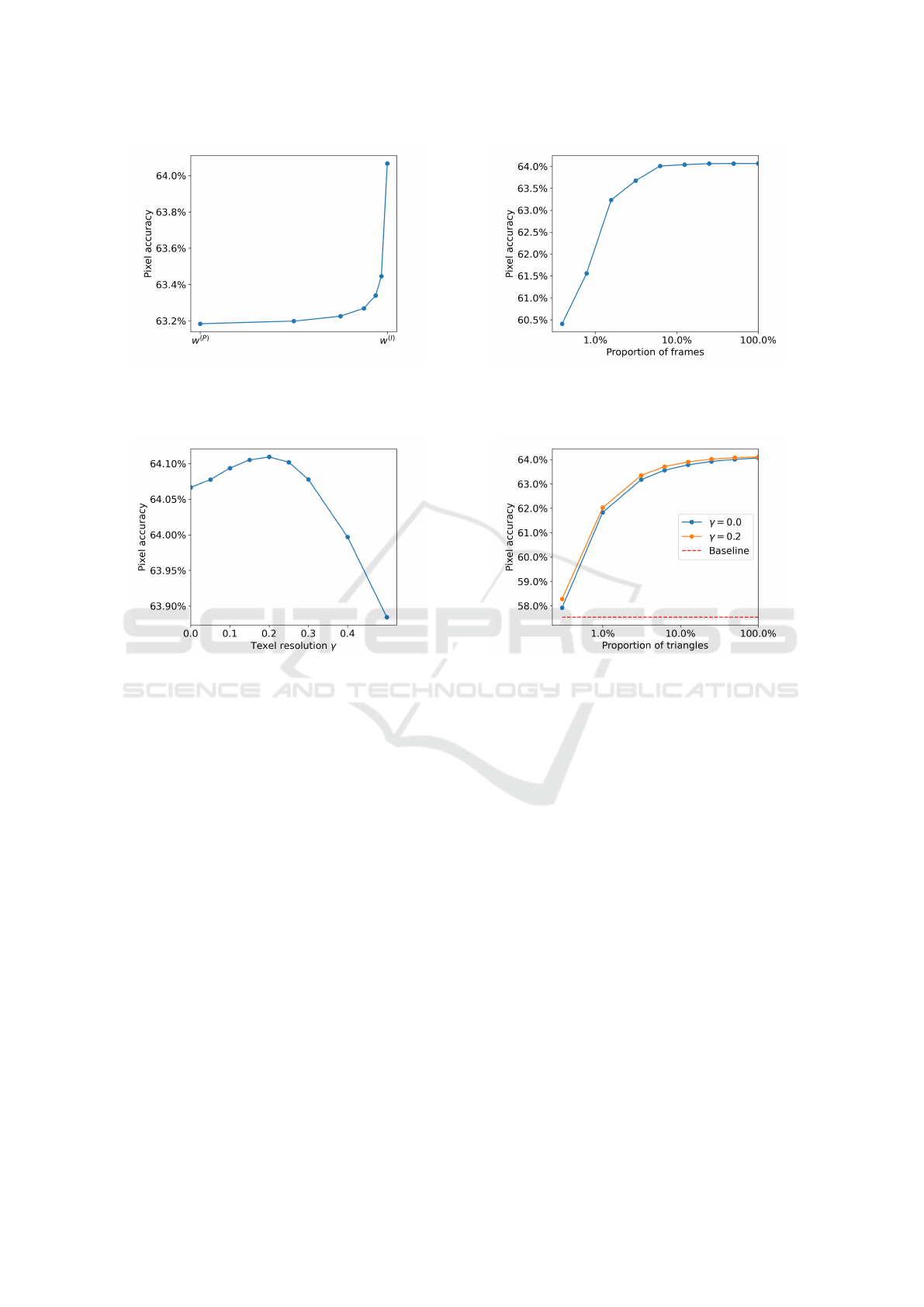

(a) Pixel accuracy without sub-division of triangles (i.e. using a texel resolution γ = 0) for different pixel weights $w \in [w^{(P)}, w^{(I)}]$. Values between $w^{(P)}$ and $w^{(I)}$ represent a weighted combination of both interpretations.

(b) Pixel accuracy for different subsets of the available frames without sub-division of triangles (i.e. using a texel resolution γ = 0) and with pixel weights $w^{(I)}$. Frames are chosen at uniform intervals.

(c) Pixel accuracy for different texel resolutions γ with pixel weights $w^{(I)}$.

(d) Pixel accuracy for meshes simplified to a proportion of triangles with pixel weights $w^{(I)}$. The value γ = 0.2 is chosen based on the results shown in Figure 4c.

Figure 4: Analysis of factors that impact the label fusion using the first 100 scenes of the training split of Scannet. The graphs show the pixel accuracy after applying the label fusion step. The pixel weights $w^{(P)}$ and $w^{(I)}$ represent the assumptions of independent and identically distributed pixels and images, respectively. The aggregator function $f_{\text{mul}}$ is used in all experiments. Values between individual measurements are interpolated.

4.2 Test Results

We define our baseline as the original predictions of the segmentation network and use the validation split of the Scannet dataset for evaluation. The baseline achieves a pixel accuracy of 52.05%.

Based on the results of the extended evaluation in Section 4.3, we choose $f_{\text{mul}}$ as the aggregator function, define the pixel weights as $w^{(I)}$ and set the texture resolution to γ = 0.2. With this setup, our label fusion method improves the pixel accuracy on the validation split to 58.25%.

4.3 Extended Evaluation

In the following, we examine the impact of several factors on the relative improvement of the label fusion over the original network prediction on the first 100 scenes of the training split of Scannet. The network achieves 57.53% pixel accuracy on this split. We use this evaluation to determine optimal hyper-parameters for the test results.

Aggregator Function. Table 1 shows the resulting pixel accuracy of different aggregator functions used in the label fusion. Bayesian fusion with $f_{\text{mul}}$ achieves the largest improvement under both the i.i.d. images and the i.i.d. pixels assumption.

Pixel Weighting. Choosing $w^{(I)}$ as pixel weight yields improvements over $w^{(P)}$ for each aggregator function. Our measurements suggest that pixel accuracy increases monotonically for $w \in [w^{(P)}, w^{(I)}]$ (cf. Figure 4a), which supports our decision to interpret the network predictions as i.i.d. images rather than i.i.d. pixels.

Table 1: Pixel accuracy (%) of different aggregators without sub-division of triangles (i.e. using the texel resolution γ = 0). To the best of our knowledge, this is the first work to fuse full probability distributions (such as in $f_{\text{mul}}$ and $f_{\text{sum}}$) on semantic mesh textures.

Aggregator function             w^(P)    w^(I)
f_maxsum (Rosu et al., 2020)    62.85    64.00
f_sum (ours)                    62.89    64.04
f_mul (ours)                    63.18    64.07

Frame Selection. Figure 4b shows the resulting pixel accuracy when performing the label fusion with only a subset of the available frames chosen at uniform intervals. Using fewer frames provides less information per texel for the probabilistic fusion and thus decreases pixel accuracy. Beyond 20% of the frames, additional images provide no significant improvement in pixel accuracy due to the high redundancy of information in adjacent frames.
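Selecting a fraction of the frames at uniform intervals, as in Figure 4b, can be sketched as below. The paper does not state how the indices were rounded, so the stride rule and the function name are assumptions.

```python
from __future__ import annotations


def uniform_frame_subset(num_frames: int, fraction: float) -> list[int]:
    """Indices of about fraction * num_frames frames spread at uniform intervals."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    if num_frames <= 0:
        return []
    count = max(1, round(fraction * num_frames))
    step = num_frames / count  # spacing between selected frames, always >= 1
    return [int(k * step) for k in range(count)]


subset = uniform_frame_subset(100, 0.2)  # [0, 5, 10, ..., 95]
```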

Texel Resolution. Figure 4c shows the fused pixel accuracy over different texel resolutions. Results improve slightly from γ = 0.0 to γ = 0.2 due to a finer texture resolution. For larger γ values, accuracy starts to decrease due to the smaller benefit of spatial consistency and stronger aliasing effects.

Using sub-triangle texture primitives with γ > 0 improves accuracy by at most 0.03% compared to the original triangles as primitives with γ = 0. This indicates that the Scannet meshes already have a sufficient granularity for the label fusion task. To test the performance on coarser meshes, we simplify the Scannet meshes using Quadric Edge Collapse Decimation (Cignoni et al., 2008). This results in a smaller set of larger triangles that represent a suitable approximation of the original mesh geometry. We choose the best texel resolution (i.e. γ = 0.2) based on the results shown in Figure 4c and compare with the original triangles as primitives (i.e. γ = 0). For a fixed γ > 0, the number of texels is roughly constant over different levels of simplification, while at γ = 0 the number of primitive elements decreases with the granularity of the mesh.
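As a rough plausibility check of the last statement (assuming $\gamma \sqrt{a_t} \gg 1$, so that the ceiling and the lower bound of 1 in $s_t = \max(1, \lceil \gamma \sqrt{a_t} \rceil)$ can be ignored), the total texel count depends on the summed worst-case pixel areas rather than on the number of triangles:

$$n_x = \sum_{t \in T} |X_t| = \sum_{t \in T} \frac{s_t^2 + s_t}{2} \approx \sum_{t \in T} \frac{\gamma^2 a_t}{2} = \frac{\gamma^2}{2} \sum_{t \in T} a_t$$

Merging small triangles into fewer large ones leaves the image area they cover roughly unchanged, so $\sum_{t} a_t$ and hence $n_x$ stay approximately constant, whereas at γ = 0 each triangle is a single texel and $n_x = |T|$. This is only an approximation, since the worst-case frames of different triangles generally differ.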

Figure 4d shows the pixel accuracy on meshes with different levels of simplification. The relative advantage of using sub-triangle textures increases for coarser meshes. When the number of triangles is reduced to 0.3% of the input mesh, this advantage amounts to a 0.3% absolute difference in pixel accuracy. For stronger simplifications, the geometric errors outweigh the benefits of the probabilistic fusion, which results in a lower pixel accuracy than that of the baseline annotations produced by the segmentation network.

Input Mesh. Applying the label fusion to meshes of the first 20 scenes reconstructed with Colmap improves pixel accuracy from 57.46% to 59.67%. This demonstrates that our method can also be applied to meshes created via a multi-view stereo approach.

5 CONCLUSIONS

We have presented a label fusion framework that is capable of producing consistent semantic annotations for environment meshes using a set of annotated frames. In contrast to previous works, our method allows us to exploit the complete probability distributions of semantic image labels during the fusion process. We utilize the mesh representation as a long-term consistency constraint to also improve label accuracy in the original frames. We performed an extensive evaluation of the proposed approach on the Scannet dataset, including the impact of factors such as aggregation functions, pixel weighting, frame selection and texel resolution. Our experiments demonstrate that the proposed method yields a significant improvement in pixel label accuracy and can be used even with purely image-based multi-view stereo approaches. We make the code of our framework publicly available, including a CUDA implementation for efficient label fusion and a Python wrapper for easy integration with machine learning frameworks.

REFERENCES

Badrinarayanan, V., Galasso, F., and Cipolla, R. (2010). Label propagation in video sequences. In Conference on Computer Vision and Pattern Recognition.

Budvytis, I., Sauer, P., Roddick, T., Breen, K., and Cipolla, R. (2017). Large scale labelled video data augmentation for semantic segmentation in driving scenarios. In International Conference on Computer Vision Workshops.

Cignoni, P., Callieri, M., Corsini, M., Dellepiane, M., Ganovelli, F., and Ranzuglia, G. (2008). MeshLab: an Open-Source Mesh Processing Tool. In Scarano, V., Chiara, R. D., and Erra, U., editors, Eurographics Italian Chapter Conference. The Eurographics Association.

Cordts, M., Omran, M., Ramos, S., Rehfeld, T., Enzweiler, M., Benenson, R., Franke, U., Roth, S., and Schiele, B. (2016). The cityscapes dataset for semantic urban scene understanding. In Conference on Computer Vision and Pattern Recognition.

Dai, A., Chang, A. X., Savva, M., Halber, M., Funkhouser, T., and Nießner, M. (2017a). Scannet: Richly-annotated 3d reconstructions of indoor scenes. In Conference on Computer Vision and Pattern Recognition.


Dai, A., Nießner, M., Zollhöfer, M., Izadi, S., and Theobalt, C. (2017b). Bundlefusion: Real-time globally consistent 3d reconstruction using on-the-fly surface reintegration. ACM Transactions on Graphics, 36(4).

Floros, G. and Leibe, B. (2012). Joint 2d-3d temporally consistent semantic segmentation of street scenes. In Conference on Computer Vision and Pattern Recognition.

Gadde, R., Jampani, V., and Gehler, P. V. (2017). Semantic video cnns through representation warping. In International Conference on Computer Vision.

Glassner, A. S. (1989). An introduction to ray tracing. Morgan Kaufmann.

Grinvald, M., Furrer, F., Novkovic, T., Chung, J. J., Cadena, C., Siegwart, R., and Nieto, J. (2019). Volumetric instance-aware semantic mapping and 3d object discovery. Robotics and Automation Letters, 4(3).

Heckbert, P. S. (1989). Fundamentals of texture mapping and image warping.

Hermans, A., Floros, G., and Leibe, B. (2014). Dense 3d semantic mapping of indoor scenes from rgb-d images. In International Conference on Robotics and Automation.

Jeon, J., Jung, J., Kim, J., and Lee, S. (2018). Semantic reconstruction: Reconstruction of semantically segmented 3d meshes via volumetric semantic fusion. In Computer Graphics Forum, volume 37.

Kundu, A., Li, Y., Dellaert, F., Li, F., and Rehg, J. M. (2014). Joint semantic segmentation and 3d reconstruction from monocular video. In European Conference on Computer Vision.

Labatut, P., Pons, J.-P., and Keriven, R. (2009). Robust and efficient surface reconstruction from range data. In Computer Graphics Forum, volume 28.

Li, X., Ao, H., Belaroussi, R., and Gruyer, D. (2017). Fast semi-dense 3d semantic mapping with monocular visual slam. In International Conference on Intelligent Transportation Systems.

Li, X. and Belaroussi, R. (2016). Semi-dense 3d semantic mapping from monocular slam. arXiv preprint arXiv:1611.04144.

Ma, L., Stückler, J., Kerl, C., and Cremers, D. (2017). Multi-view deep learning for consistent semantic mapping with rgb-d cameras. In International Conference on Intelligent Robots and Systems.

McCormac, J., Handa, A., Davison, A., and Leutenegger, S. (2017). Semanticfusion: Dense 3d semantic mapping with convolutional neural networks. In International Conference on Robotics and Automation.

Mustikovela, S. K., Yang, M. Y., and Rother, C. (2016). Can ground truth label propagation from video help semantic segmentation? In European Conference on Computer Vision.

Nathan Silberman, Derek Hoiem, P. K. and Fergus, R. (2012). Indoor segmentation and support inference from rgbd images. In European Conference on Computer Vision.

Nickolls, J., Buck, I., Garland, M., and Skadron, K. (2008). Scalable parallel programming with cuda: Is cuda the parallel programming model that application developers have been waiting for? Queue, 6(2).

Nilsson, D. and Sminchisescu, C. (2018). Semantic video segmentation by gated recurrent flow propagation. In Conference on Computer Vision and Pattern Recognition.

Pham, Q.-H., Hua, B.-S., Nguyen, T., and Yeung, S.-K. (2019). Real-time progressive 3d semantic segmentation for indoor scenes. In Winter Conference on Applications of Computer Vision.

Rosinol, A., Abate, M., Chang, Y., and Carlone, L. (2020). Kimera: an open-source library for real-time metric-semantic localization and mapping. In International Conference on Robotics and Automation.

Rosu, R. A., Quenzel, J., and Behnke, S. (2020). Semi-supervised semantic mapping through label propagation with semantic texture meshes. International Journal of Computer Vision, 128(5).

Schönberger, J. L. and Frahm, J.-M. (2016). Structure-from-motion revisited. In Conference on Computer Vision and Pattern Recognition.

Schönberger, J. L., Zheng, E., Frahm, J.-M., and Pollefeys, M. (2016a). Pixelwise view selection for unstructured multi-view stereo. In European Conference on Computer Vision.

Schönberger, J. L., Zheng, E., Pollefeys, M., and Frahm, J.-M. (2016b). Pixelwise view selection for unstructured multi-view stereo. In European Conference on Computer Vision.

Seichter, D., Köhler, M., Lewandowski, B., Wengefeld, T., and Gross, H. (2020). Efficient RGB-D semantic segmentation for indoor scene analysis. Computing Research Repository, abs/2011.06961.

Stekovic, S., Fraundorfer, F., and Lepetit, V. (2020). Casting geometric constraints in semantic segmentation as semi-supervised learning. In Winter Conference on Applications of Computer Vision.

Stückler, J., Waldvogel, B., Schulz, H., and Behnke, S. (2015). Dense real-time mapping of object-class semantics from rgb-d video. Journal of Real-Time Image Processing, 10(4).

Tateno, K., Tombari, F., Laina, I., and Navab, N. (2017). Cnn-slam: Real-time dense monocular slam with learned depth prediction. In Conference on Computer Vision and Pattern Recognition.

Woo, M., Neider, J., Davis, T., and Shreiner, D. (1999). OpenGL programming guide: the official guide to learning OpenGL, version 1.2. Addison-Wesley Longman Publishing Co., Inc.

Zhu, Y., Sapra, K., Reda, F. A., Shih, K. J., Newsam, S., Tao, A., and Catanzaro, B. (2019). Improving semantic segmentation via video propagation and label relaxation. In Conference on Computer Vision and Pattern Recognition.
