A VR Application for the Analysis of Human Responses to Collaborative Robots∗

Ricardo Matias and Paulo Menezes

University of Coimbra, Department of Electrical and Computer Engineering, Institute of Systems and Robotics, Rua Sílvio Lima, Coimbra, Portugal

Keywords:

Virtual Reality, Human-robot Collaboration, Baxter Robot.

Abstract:

The increasing number of robots performing tasks in our society, especially in industrial environments, introduces more scenarios where a human must collaborate with a robot to achieve a common goal. This, in turn, raises the need to study how safe and natural this interaction is and how it can be improved. Virtual reality is an excellent tool to simulate these interactions, as it allows the user to be fully immersed in the world while being safe from a possible robot malfunction. In this work, a simulation was created to study how effective virtual reality is in studies of human-robot interaction. It is then used in an experiment where the participants must collaborate with a simulated Baxter robot to place objects delivered by the robot in the correct place, within a time limit. During the experiment, the electrodermal activity and heart rate of the participants are measured, allowing for the analysis of reactions to events occurring within the simulation. At the end of each experiment, participants fill in a User Experience Questionnaire (UEQ) and a Flow Short Scale questionnaire to evaluate their sense of presence and the interaction with the robot.

1 INTRODUCTION

Robots are gradually moving from highly controlled environments, where they work autonomously and alone, to new ones where they operate in the presence of humans and act jointly with them on the same task. These new types of robots are known as collaborative robots (Knudsen and Kaivo-oja, 2020).

Industrial environments are good examples of this situation: many tasks are automated, leading to large areas where robots operate alone. However, as noted by Liu et al. (2019), in many cases this still needs to be complemented by manual human work. The assembly lines in the automotive and electronic equipment industries are good examples of these situations. Moreover, the repetitive nature of these tasks can cause fatigue and frequently leads to lower back pain or spine injuries (Krüger et al., 2009). Introducing a robot collaborator could be an effective measure to help fight these problems.

Human-robot collaboration is still far from being as effective and safe as collaboration between humans. The International Federation of Robotics only allows robots and humans to work together if they do not share the same workspace or if they share it but do not act simultaneously. This is due to a major flaw in current robots: the lack of awareness of their surroundings and, in particular, of their partners’ actions and feelings, which in turn leads to an inability to adapt accordingly. As noted by Çürüklü et al. (2010), robots in the near future will need to react and adapt to the working partner’s presence and actions.

∗ This work was supported by Fundação para a Ciência e a Tecnologia (FCT) through project UID/EEA/00048/2020.

As in the case of operators controlling large machines, awareness is a key issue for robots, in particular those that need a lot of strength and speed, since one wrong move can seriously injure or even kill someone in their vicinity. We may say that safety implies strong awareness, but even a careful car driver cannot always predict the next move of a distracted pedestrian. Speed limits in urban areas aim to reduce the number of (fatal) accidents due to unpredictable human behaviour, and the same principle has been applied to collaborative robots.

The designation collaborative robots refers in most cases to robots whose joint torque or force is reduced to a level where a collision with a human will make the robot stop without causing any harm. Other cases are based on turn-taking, frequently enforced by two lateral buttons that the human must press simultaneously to ensure that his/her hands are not inside the robot’s operating volume while it is moving.

Matias, R. and Menezes, P. A VR Application for the Analysis of Human Responses to Collaborative Robots. DOI: 10.5220/0010829600003124. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 1: GRAPP, pages 68-78. ISBN: 978-989-758-555-5; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

Although this is a new and interesting trend, bringing robots and humans together may affect the performance of both. Robots have to operate more slowly and be less powerful to avoid fatal accidents, while humans may need to adapt to these new situations. This adaptation may require unexpected cognitive and/or emotional effort that eventually results in a progressive degradation of their performance and/or physical or mental health. It is therefore important to understand what influence a specific collaborative setup may have on humans, in particular over the course of a workday.

1.1 Related Works

Human-robot interaction (HRI) emerged as a multidisciplinary field in the mid-1990s and early 2000s.

It focuses on understanding the interactions between

robots and people and how they can be shaped and

improved. Over the years, robots have seen great im-

provement, with new models constantly making the

old ones obsolete with new hardware, cognitive capa-

bilities and more. The vision of this field is to make

robots exist in our everyday lives, from helping in the

industrial environment and household chores to social

robots that can entertain or care for humans. As such,

studies in this field focus not just on short-term interactions in the laboratory but also on long-term interactions with systems such as a robotic weight loss coach (Kidd and Breazeal, 2008) that helps track calorie consumption to combat obesity. The introduction of this robot in the participants’ lives had them tracking their calorie consumption and exercising almost twice as long, demonstrating the benefits that robots could have if correctly introduced in our lives. This

introduction also raises the importance of the robot’s

appearance. Facial expressions are a big part of how

the user perceives the robot. However, realistic fa-

cial expressions can be tough to achieve as humans

can intuitively understand when something looks un-

usual. Leaning more towards the cartoonish and sim-

plistic facial expressions could solve this issue. Sys-

tems such as KASPER (Blow et al., 2006) use this

idea in the design to approximate the facial expres-

sions without ultra-realism.

As mentioned previously, the growth of the HRI field leads to an increase in the number of collaborative robots being introduced in our society, which in turn raises the need to study and improve the safety and efficiency of human-robot interactions. Several works validate the use of VR to reach this goal for different types of interactions, be it handing over and receiving objects from a robot partner (Duguleana et al., 2011) or controlling a robot arm (de Giorgio et al., 2017). Regarding the improvement of HRI safety, different solutions have been proposed. Applications such as “beWare of the Robot” (Matsas and Vosniakos, 2017) tackle the safety issues through “emergencies” or warning signals in the form of visual and auditory stimuli.

Instead of warning the user, another way to tackle the safety issues is to adapt the robot’s movements. This can be achieved through a “digital twin” environment, where the physical environment is directly transcribed to a digital one, allowing part or all of the logic to reside in the digital environment. The robot can then use this system to avoid the human or even stop entirely (Maragkos et al., 2019).

The introduction of biological signals in HRI

can improve the quality of the interaction. For in-

stance, it can allow the user to control a robot using

a brain-machine interface (Bi et al., 2013) or allow

the robot to recognise the human’s mental state and

physical activities through sensors monitoring heart-

rate, muscle activity, brainwaves through EEG, nose

temperature and head movement (Al-Yacoub et al.,

2020).

In particular, electrodermal activity (EDA) is one of the leading indicators of stress. There are various techniques and open-source software packages that can be used to analyse such signals (Lutin et al., 2021). More recently, deep learning algorithms have been used alongside the “classical” statistical algorithms to perform more complex analyses (Aqajari et al., 2020).

1.2 Contribution and Article Structure

The presented work proposes a solution to study a

human-robot collaboration scenario in Virtual Real-

ity. The presented solution consists of a simulated

environment that the user can visualize and interact

with via a head-mounted display (HMD). It uses MoveIt, a ROS (Stanford Artificial Intelligence Laboratory et al., 2020) motion planning, manipulation and control tool, to animate a model of the Baxter robot with which the user interacts. The created system is then used in a pilot study to evaluate how the participants react to distractions in their simulated workplace, and how they feel about the interaction with the robot.

This paper is organized as follows: section 2 de-

scribes the main goal of this work and the considera-

tions taken. The architecture and implementation of

the created system are presented in section 3. Sec-

tion 4 introduces the pilot study performed with the

system created and provides an assessment and discussion of the outcome. Finally, section 5 concludes

the article.

2 VR FOR COLLABORATIVE ROBOTS INTERACTION ANALYSIS

The goal of this work is to create a system to study

human-robot interaction and use it to analyse how dis-

tractors may affect a human performing a collabora-

tive task with a robot. The system consists of a vir-

tual environment where the user interacts with a sim-

ulated Baxter robot, cooperating to complete simple

tasks. During these interactions, the user’s reactions to the robot’s actions, such as posture and physiological signals (namely electrodermal activity and electrocardiogram), are recorded to support the evaluation of the user’s reactions to the disturbing events purposely added.

The use of Virtual Reality to study human-robot

collaboration has the clear advantage of providing

clean, repeatable and safe support to create counter-

parts of live experiences (Duguleana et al., 2011).

This is well suited to analysing how adequate an environment or an interactive system is for human use. Besides safety, these approaches may have economic advantages, as they enable the validation of hypotheses without risking material losses due to inadequate usage, and allow predictive analyses of the outcome of introducing a change, such as a collaborative robot, at a specific point of the production process.

There is however the question of knowing if the

user behaviours in a VR-based environment are dif-

ferent from the ones in a real situation.

Although other factors may contribute to the mis-

match of the above, the immersion level and sense of

presence are two crucial aspects since the results ob-

tained in a VR-based test will not be accurate if a high

sense of immersion and presence is not achieved.

For this reason, it is necessary to evaluate these

factors as perceived by each user, along with the in-

teractive task under analysis, to enable the prediction

of the accuracy of the task-related parameters under

estimation. This is commonly done through the ap-

plication of self-response questionnaires such as UEQ

and flow short scale questionnaires and by evaluating

the user’s reactions, as will be analysed later.

Another important aspect to take into account is

the realism of the robots’ movements. To obtain

results comparable to real situations, the simulated

robot has to behave like a real one in a real environment. Whenever possible, using a controller that mimics or matches as closely as possible that of the real robot gives some guarantee that the user’s perception of the robot’s motions will be similar to the real case.

3 ARCHITECTURE, IMPLEMENTATION AND SCENARIO

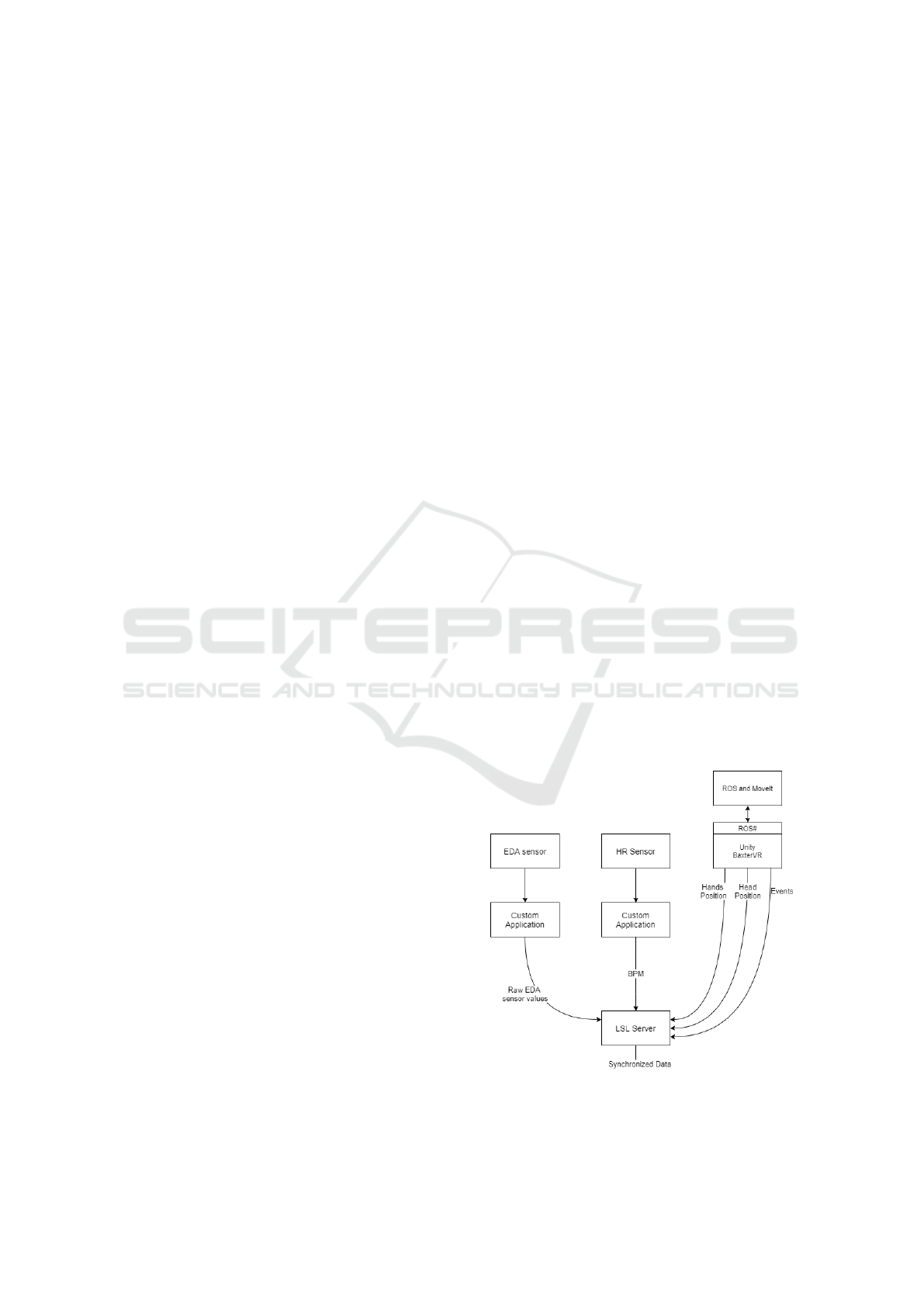

The implementation of the above resulted in a sce-

nario around the Baxter robot supported by a modular

architecture (figure 1) composed of three main ele-

ments:

• The BaxterVR application developed on Unity™.

• MoveIt, a ROS-based motion planner and controller for real robots.

• A data acquisition system developed over the Lab Streaming Layer (LSL) (Swartz Center for Computational Neuroscience, 2021) framework.

The BaxterVR application allows the users to ex-

plore a collaborative robot scenario and interact with

it via a handheld controller, as will be described

later. This application communicates with MoveIt,

the robot planner and controller supported by a ROS

(Robot Operating System) connection. BaxterVR

also uses an LSL connection for enabling the syn-

chronous registration of the generated events and

biosignals such as GSR (galvanic skin response) and

heart rate, which are captured by two other applica-

tions that use the same protocol.

Figure 1: System architecture.

GRAPP 2022 - 17th International Conference on Computer Graphics Theory and Applications


3.1 Implementation

To handle the communications between BaxterVR and MoveIt, a total of three ROS nodes were created. Their purpose is to send the robot joint angles to BaxterVR, transmit information about the VR objects, such as their positions and orientations, to MoveIt, and handle requests to move the robot using an action server.

BaxterVR publishes the necessary information

about the objects in the environment, such as position,

orientation and size to the corresponding ROS node,

which uses the Planning Scene ROS API to add them

to the planning scene. The scene objects that are rel-

evant for the movement calculations are represented

in MoveIt as boxes. Using Rviz it is possible to vi-

sualize them as green boxes (figure 2). In this case,

the objects are the two conveyor belts standing next

to Baxter that will be presented in section 3.2. To re-

duce the communication overload, objects’ positions

are updated only if their changes are above a given

threshold.
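The threshold-based update described above can be sketched as follows. This is a minimal illustration, not the BaxterVR implementation: the class name, the 1 cm default threshold and the use of Euclidean distance are assumptions, since the paper does not state the threshold value or the metric used.

```python
import math


class PoseUpdateFilter:
    """Decide whether an object's position changed enough to be re-sent."""

    def __init__(self, threshold_m=0.01):
        # threshold_m is a hypothetical value; the one used in the paper is not given.
        self.threshold_m = threshold_m
        self._last_sent = {}  # object name -> last published (x, y, z)

    def should_publish(self, name, position):
        """Return True (and remember the position) if an update should be sent."""
        last = self._last_sent.get(name)
        if last is None or math.dist(last, position) > self.threshold_m:
            self._last_sent[name] = tuple(position)
            return True
        return False
```

Comparing against the last published position, rather than the previous frame, means that a slow drift is still reported once it accumulates beyond the threshold.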

Figure 2: Rviz Visualization.

To publish the robot arms joint angles to Bax-

terVR, two separate topics are used, one for each arm,

with a custom message containing seven values, cor-

responding to each of the seven joints, obtained using

a MoveIt interface.

The BaxterVR simulation needs to be able to

make requests to MoveIt to control the robot. It also

requires the ability to preempt or cancel these re-

quests, for example when the object being picked up

moves. Periodic feedback is also necessary to synchronize the actions on both sides, such as attaching a picked-up object to Baxter’s end effector on the Unity side at the same time it is attached in MoveIt. A

ROS action is the perfect fit for the previous descrip-

tion and as such, a ROS action server was created to

control the simulated Baxter robot. When it receives

a request, it sends the necessary information to the

motion planner using the Motion Planning API. Bax-

terVR from its side can issue the following requests:

• Move the end-effector to a specific position.

• Move the end-effector to a specific position using

a Cartesian path.

• Pick up an object, given its position.

• Detach an object from a robot end-effector.

• Change the speed of the arms.

As mentioned previously, the robot needs to react

to changes in the environment, such as movements of

the object being picked up. With the Motion Planning API, it is possible to make asynchronous requests to the motion planner and to cancel requests that are executing; however, it does not provide a simple way to know the status of a request being executed. A solution to this problem is to monitor the result topic of the action that MoveIt uses to communicate with the motion planner, as this topic is only published when the motion has concluded and also gives information about its success.

3.1.1 Rosbridge and ROS#

Unity, the platform on which the BaxterVR application was developed, does not directly support connections to ROS. However, considerable effort has been made over the last few years toward this goal (Hussein et al., 2018), and it is now possible to use Rosbridge on the ROS side and ROS# on the Unity side to make this connection.

Rosbridge (or rosbridge_suite) is a standard ROS library that provides a JSON interface so that non-ROS programs can send commands to ROS. ROS# makes use of this interface through a Unity Asset Package to give Unity-based applications the ability to communicate with ROS. This includes publishing and subscribing to topics, communicating through services and actions, and even using custom messages. ROS# also introduces some important robotics functionalities, such as importing a robot through a Unified Robot Description Format (URDF) file. In this project, this functionality was used to import Baxter’s model.
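To make the JSON interface more concrete, the sketch below builds the kind of text frames a non-ROS client sends to Rosbridge, using the `advertise`, `publish` and `subscribe` operations of the rosbridge protocol. The topic name, message type and message fields are hypothetical and chosen only for illustration; the actual BaxterVR messages are not given in the paper, and in the real system ROS# generates these frames.

```python
import json

# Hypothetical topic and custom message type, for illustration only.
SCENE_TOPIC = "/baxter_vr/scene_object"
SCENE_TYPE = "baxter_vr_msgs/SceneObject"


def advertise(topic, msg_type):
    """Frame announcing that this client will publish on a topic."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish(topic, msg):
    """Frame carrying one message for a topic."""
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


def subscribe(topic):
    """Frame asking Rosbridge to forward messages from a topic."""
    return json.dumps({"op": "subscribe", "topic": topic})


def scene_object_frames(name, position, orientation, size):
    """Frames that would describe one VR object to the ROS side."""
    msg = {
        "name": name,
        "position": list(position),        # x, y, z
        "orientation": list(orientation),  # quaternion x, y, z, w
        "size": list(size),                # box dimensions
    }
    return [advertise(SCENE_TOPIC, SCENE_TYPE), publish(SCENE_TOPIC, msg)]
```

Each returned string would be sent as one message over the Rosbridge connection; the joint-angle topics could be received in the same way with a `subscribe` frame per arm.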

3.1.2 Synchronous Biosignals Acquisition

To identify reactions to the distracting events in the BaxterVR application, both the user’s electrodermal activity (EDA) and heart rate (HR) are acquired and stored. EDA is acquired using a Bitalino board (da Silva et al., 2014) with a sampling rate of 1 kHz, whereas HR is obtained using a Polar H10 heart rate sensor. Both use in-house developed applications that establish the LSL bridge with these Bluetooth-enabled devices.


The development of the Unity-based application (BaxterVR) included support for Lab Streaming Layer (LSL) to enable the capture of the evolution of hand and head coordinates and the registration of the events described in the next section (3.2). LSL support was also included in the development of both the Polar H10 and the Bitalino applications.

The synchronous signals acquisition is handled by

LabRecorder, the LSL recording application, which

receives the timestamped streams and stores them.

One of the major advantages of this framework is that

all the recorded data streams can then be related as

they share the same clock reference. LSL has another

big advantage, which is the easy integration of new

data sources in data collecting experiments.
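Because all streams share one clock reference, relating an event marker to a biosignal reduces to a lookup on sorted timestamps. The sketch below illustrates this under the assumption that a recording has already been loaded into plain Python lists of timestamps and samples; the function name and the windowing choice are illustrative and no LSL API is used.

```python
from bisect import bisect_left


def window_after_event(sample_times, samples, event_time, duration_s):
    """Return the samples with event_time <= t < event_time + duration_s.

    sample_times must be sorted in ascending order and expressed in the
    same clock as event_time, which is what the shared reference provides.
    """
    start = bisect_left(sample_times, event_time)
    stop = bisect_left(sample_times, event_time + duration_s)
    return samples[start:stop]
```

For example, the EDA samples that follow the appearance of the transporter robot could be extracted with the marker timestamp as `event_time` and a window of a few seconds as `duration_s`.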

3.2 A Collaborative Robot Scenario

The designed scenario mimics an industrial environ-

ment where the human executes a task in collabora-

tion with a robot. As with any real environment, unre-

lated events will happen that may lead to distractions

of the user contributing to errors and/or fatigue.

The basis of the current scenario consists of the

robot picking objects from a conveyor belt and hand-

ing them to the human partner. The human, in turn,

has to grab these objects and place them in boxes following a given protocol. To grab an object, the human has to reach for it with the controller and press a button that closes the virtual hand and attaches the object to it. This method of interacting with the object was considered the most natural option, since to press the button the user has to close their hand around the controller as if grasping a real object.

To move around the simulation the human has two options: move in real life or use a joystick. The intention is for users to move mainly in real life and use the joystick to make small adjustments so that the virtual room better matches the real one. In the simulation, users always start at the centre of the room, regardless of their position in the real room. As such, in an extreme example, if the simulation is started while the user is standing next to a wall in real life, he/she can no longer move in the direction blocked by the wall without the joystick.

For exploring different situations, the Baxter robot

has two different approaches for interacting with the

human: it can either hand the object to the human

and wait for him/her to grab it or drop the object on

the ground as soon as it reaches the delivery position

(figure 3). Dropped objects break into pieces once

they touch the ground, so the human has to wait until

Baxter picks and hands another one.

Figure 3: Baxter dropping an object.

The scenario is organized in increasing difficulty

levels aiming at analysing their effect on the user per-

formance. The task of delivering an object was made

purposely complex so that it requires the user to focus

on it to successfully complete a level.

As previously mentioned, one of the objectives of

this experiment is to understand how distractions in

the surrounding environment affect the user. With this

in mind, three types of events were created to redirect

the attention of the participant during the experiment.

Event Type 1: Thirty seconds into the experiment,

and every minute after that, a door opens at one side

of the room, with the appropriate sound, and a robot

cart appears, transporting a box (figure 11). It moves

across the room until it reaches another door, exiting

the room.

Event Type 2: Another event is a box that appears in

a conveyor belt on the corner of the room in random

time intervals between 15 and 35 seconds (figure 4).

A loud alarm can be heard when it appears as well as

the sound of the conveyor belts moving.

Event Type 3: Lastly, when there are only thirty sec-

onds left to complete the level, the clock on the wall

starts ticking and flashing red (figure 8).
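The timing of the three event types can be summarised in a short scheduling sketch. It makes a simplifying assumption that is not in the paper: a single run of fixed duration (by default one two-minute level) that starts together with the experiment. The function and event names are hypothetical.

```python
import random


def schedule_events(duration_s=120.0, seed=None):
    """Return a time-sorted list of (time_s, event_name) for one run."""
    rng = random.Random(seed)
    events = []

    # Event type 1: transporter robot at 30 s, then every 60 s.
    t = 30.0
    while t < duration_s:
        events.append((t, "transporter_robot"))
        t += 60.0

    # Event type 2: distracting box at random intervals of 15 to 35 s.
    t = rng.uniform(15.0, 35.0)
    while t < duration_s:
        events.append((t, "distracting_box"))
        t += rng.uniform(15.0, 35.0)

    # Event type 3: the wall clock starts ticking when 30 s remain.
    if duration_s > 30.0:
        events.append((duration_s - 30.0, "clock_warning"))

    return sorted(events)
```

Passing a fixed `seed` makes the random box intervals repeatable, which can be convenient when the same distraction sequence should be shown to several participants.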

Failing a level because the timer runs out does not mean failing the entire test. Each level is evaluated individually and, even after failing, the user must deliver all the objects to pass to the next level.

Figure 4: Distracting box.


This scenario was designed to be simple (figure 6)

so that it is processed fast enough to not cause any

discomfort to the user while at the same time contain-

ing elements similar to the ones found in an indus-

trial environment such as conveyor belts or transporter

robots. As already mentioned, the main objective is

to grab the objects handed by Baxter and place them

inside a box (figure 5). The objects are simple rectan-

gular bars and can be of four different colours, blue,

orange, green or indigo and have to be placed inside

a box with the corresponding colour. To obtain a box,

the user must press a button with the corresponding

colour. Once a box has one or two objects inside, to

score, the user has to deliver it by placing it on top of

an elevator and pressing a button (figure 7). On one

of the walls, there is a whiteboard that displays infor-

mation about the current level, more specifically, how

much time is left, the score, objects missed and the

difficulty level (figure 8).

Figure 5: User placing an object inside the box.

Figure 6: Surrounding environment.

The simulation has a total of five levels. The robot speed increases with each level, starting at 50% of the maximum speed and rising by 10% per level. To successfully complete a level, the user must deliver a preset number of objects within two minutes. The number of objects to deliver starts at one and increases by one per level.
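The level progression can be written down as a small function. This sketch assumes that the 10% increase is additive, i.e. expressed in percentage points of the maximum speed (50%, 60%, up to 90% at level five); the paper does not say whether the increase is additive or relative, and the function and key names are illustrative.

```python
def level_parameters(level):
    """Return the assumed settings for a level between 1 and 5."""
    if not 1 <= level <= 5:
        raise ValueError("the simulation has five levels")
    return {
        # Fraction of the maximum robot speed (additive 10% steps assumed).
        "speed_fraction": (50 + 10 * (level - 1)) / 100,
        # One object at level 1, one more for each following level.
        "objects_to_deliver": level,
        # Every level has the same two-minute limit.
        "time_limit_s": 120,
    }
```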

Figure 7: User delivering a box with one object.

Figure 8: Score Board.

The objects handed over by the robot arrive periodically on a conveyor belt next to Baxter, with their colours in completely random order (figure 9). When an object gets to the pre-defined position, close to Baxter, the conveyor belt stops for a moment, waiting for the robot to grab it (figure 10). If Baxter starts the grabbing movement, the conveyor belt does not move until the object is grabbed; otherwise, two seconds later, it starts moving again and the object is lost. If the robot picks the object and hands it to the user, it must be grabbed so that Baxter can pick up another. At level three or above, the human must grab the object before the robot reaches the delivery position; otherwise it will fall to the ground and break into pieces.

Figure 9: Conveyor belt that delivers the objects to Baxter.


Figure 10: Baxter picking up an object.

Figure 11: Transporter robot.

4 A PILOT EXPERIMENT

Using the system described previously, a pilot exper-

iment was conducted to understand how the users re-

act to the distractions while interacting with a robot in

an industrial environment and how they feel about the

interaction.

Since this experiment focuses on VR interaction,

an important aspect to take into account is the equip-

ment to use to allow the user to experience the appli-

cation created.

There is currently a vast number of HMDs on the

market. The technical evolution of processors and

displays for the smartphones industry has created the

necessary support for new all-in-one headsets, such

as Oculus Quest or Vive Focus. These products have recently seen a dramatic improvement in terms of processing power, battery life, and display quality. Nevertheless, the existing tethered versions still enable the use of high-end graphics-enabled computers both to process and display content, without requiring extensive optimisation efforts or sacrificing any aspect of graphical quality. Another important advantage of these PC-connected devices is the possibility of establishing simple communications with other software packages running on the same machine. These reasons led to the development of the BaxterVR application targeting Oculus Rift™, exploiting the compatibility mode of Oculus Quest devices through a USB-C (Oculus Link) connection with the computer running the application.

This is particularly convenient as it enables ROS-

MoveIt, LSL-data collectors, and the BaxterVR ap-

plication to share the same powerful machine and ex-

ploit simplified and reliable communications between

them.

In this study, the participants interact with the sim-

ulated robot described previously, while their biolog-

ical signals are recorded. In the end, they answer a

UEQ questionnaire (Laugwitz et al., 2008), a Flow

Short Scale Questionnaire (Rheinberg et al., 2006)

and a few open-answer questions that allow the participants to give any opinion that cannot be captured by the questionnaires, such as whether they had motion sickness and what caused it.

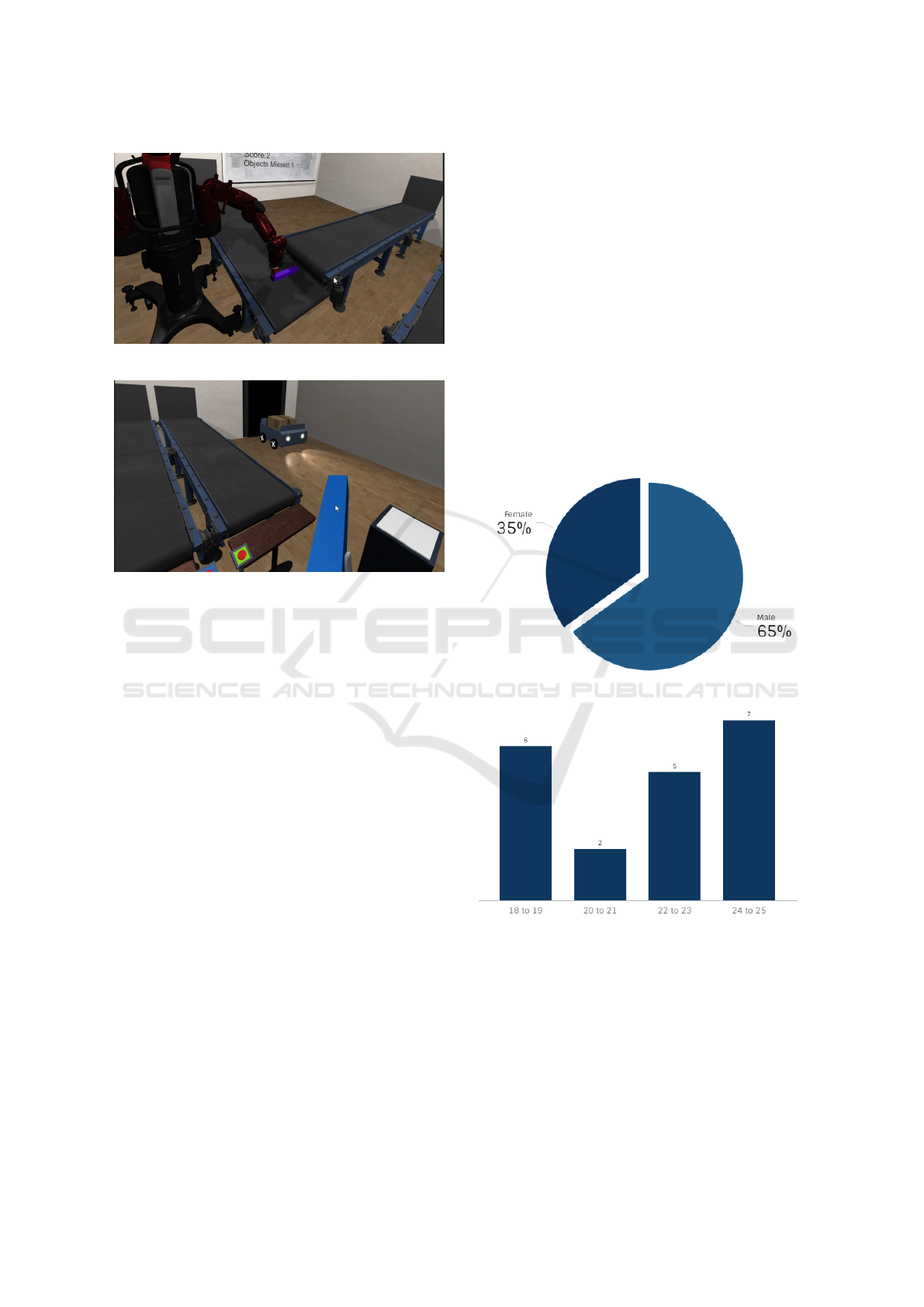

Figure 12: Gender distribution of the participants.

Figure 13: Age distribution of the participants.

This pilot experiment had the participation of 20

volunteer students of MSc and PhD courses with a

gender distribution of 35% female and 65% male (fig-

ure 12), and ages between 18 and 25 years old (figure

13).

The protocol followed in the experiment was the following:

1. All the equipment used is disinfected.

2. The participant is introduced to the experiment and told what he/she has to do to successfully conclude each level, what data is going to be collected, and how.

3. The participant reads and signs a consent form.

4. The EDA and HR sensors are placed on the participant.

5. The participant puts on a hygienic mask for the VR system and then the VR system itself.

6. The simulation is started and the participant plays it following the given rules, trying to complete each level.

7. If at any time the participant starts feeling uncomfortable with the experiment, the procedure should immediately stop. Otherwise, he/she should finish the simulation without any kind of interruption.

8. When the simulation ends, either because the participant concluded the final level or because it was stopped early, the participant is asked whether he/she wants to play again and for his/her opinion on the simulation.

9. The participant fills in a User Experience Questionnaire and a Flow Short Scale Questionnaire to evaluate the simulation and answers four open-answer questions.

Figure 14: UEQ Questionnaire results.

4.1 Analysis of the Questionnaires

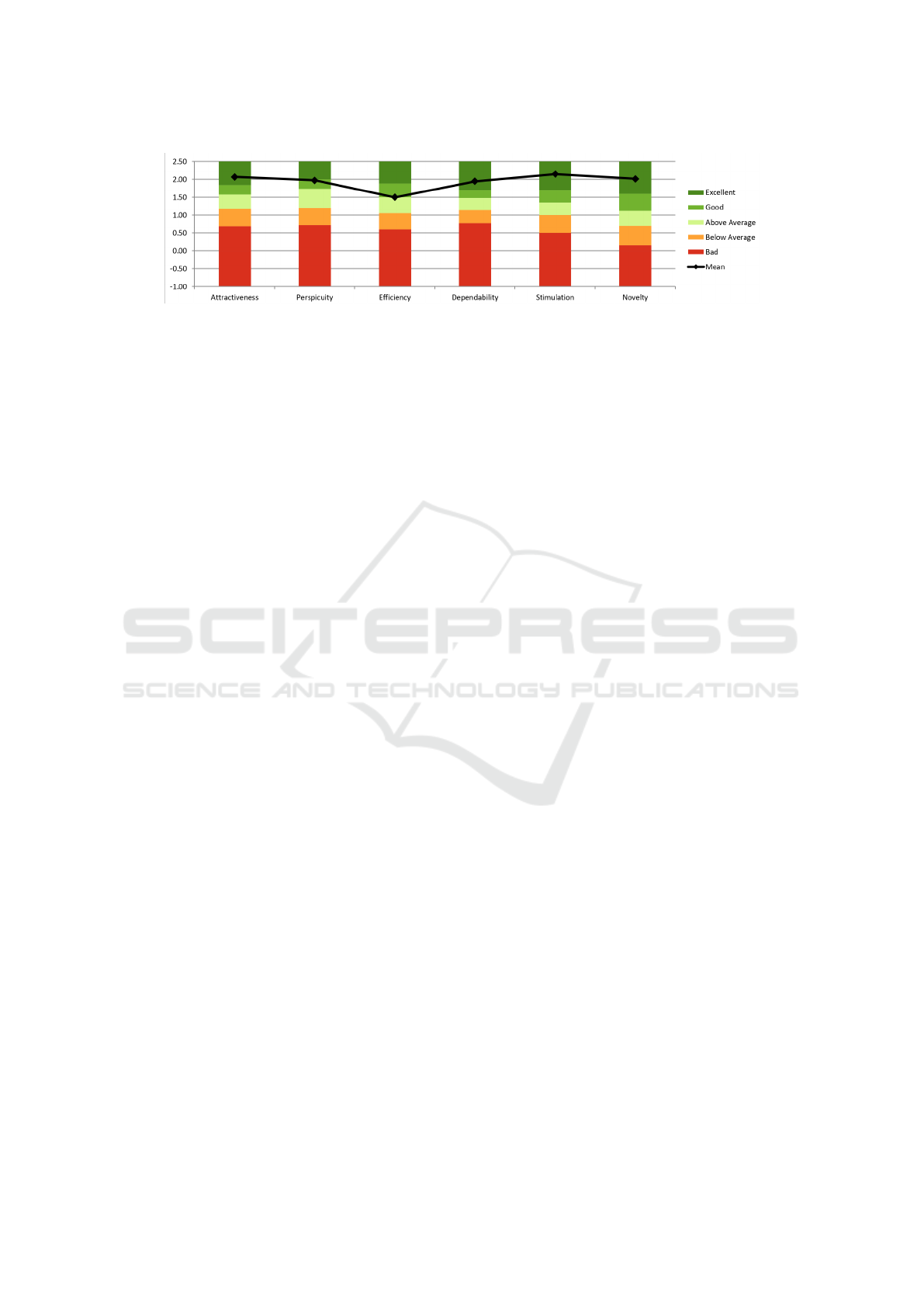

From the analysis of the UEQ questionnaire, the highest-rated categories were dependability, stimulation and novelty with “Excellent”, followed by attractiveness and perspicuity with “Good” and, finally, efficiency with “Above Average” (figure 14).

One of the most interesting results is the low rating of the efficiency category compared to the others. When asked for their opinion about the simulation, most people said that the robot seemed slow on the later levels. In reality, the robot was moving faster than in the first levels; however, the participants seemed to get used to the task they had to perform and ended up finishing it before the robot grabbed another piece. In the case of this simulation, the efficiency category can also be thought of as the efficiency of the interaction, since the perception of the robot being slower than it should be translates into a low efficiency rating.

The Flow Short Scale questionnaire evaluates

three aspects of the simulation on a scale of 1 to 7:

flow, anxiety level and challenge level. Flow level indicates whether the user feels engaged and focused while performing the activity. If the flow level is high,

the participants find the activity intrinsically interest-

ing and take pleasure and enjoyment when involved

in it. Anxiety level translates to how much anxiety

the users felt and challenge level indicates if the chal-

lenges presented were too difficult (7) or too easy (1).

Table 1 presents the average and the standard deviation of the flow, anxiety and challenge levels. According to the results, the challenge level seems to be adequate, with a score of 4, the midpoint of the scale, and a relatively low standard deviation of 0.458. The anxiety level

has the lowest value with 3.85, meaning that the par-

ticipants were not in high levels of anxiety during the

simulation. However, it presents a significant stan-

dard deviation of 1.512. This is partially due to some

of the anxiety scores being a lot higher than the av-

erage, with values reaching 5, 6 or even 7. Most of

these high scores are from the participants that were

using the joystick for moving in the VR environment,

and consequently, reported motion sickness. On the

other hand, most of the lowest scores on anxiety were

reported by the participants that felt that the robot was

too slow. Lastly, a flow score of 5.525 out of 7 means

that the participants liked the activity and the simu-

lation and felt engaged and focused, although some

aspects could be improved.
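For reference, the averages and standard deviations reported for each dimension can be computed from the per-participant scores as sketched below. The input shown in the usage note is made-up illustration data, not the study's responses, and the use of the sample standard deviation is an assumption, as the paper does not state which estimator was used.

```python
from statistics import mean, stdev


def summarise(scores):
    """Return (average, sample standard deviation), rounded to 3 decimals."""
    return round(mean(scores), 3), round(stdev(scores), 3)
```

With four hypothetical participants scoring 4, 4, 5 and 3 on the 1 to 7 scale, `summarise([4, 4, 5, 3])` gives an average of 4 and a standard deviation of about 0.816.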

In the open answer questions, the users were asked

to describe the interaction with the robot; what were

the main difficulties; what caused the most distrac-

tion; and, at last, suggestions to improve the simula-

tion.

When asked about the surrounding distractions, participants identified the ticking clock and some of the surrounding noises as the most distracting elements. Some participants even completely ignored the existence of the transporter robot. The reason for this is that they were focused on the interaction and

the task they had, which involved a lot of visual anal-

ysis.

Concerning the interaction with the robot, as mentioned previously, most people felt that it was too slow, especially in the later levels. Some people also reported that it felt like something was wrong when the robot stopped waiting for them to grab the object and dropped it on the ground instead. This behaviour is intentional and is meant to test whether it feels more natural for the robot to behave as if it is aware of the human, waiting for them to grab the object, or to behave like a traditional robot that just performs its task without considering the collaborating partner. The results match expectations: even when interacting with a non-sentient robot, the human feels that the interaction is more natural if the robot reacts to their actions instead of executing a pre-planned task.

One of the main complaints about this experiment was related to movement inside VR. The participants had two ways to move inside VR, either by using a joystick or by moving in real life. The real space where the experiment took place was roughly the same size as the working space inside the simulation. This led to two kinds of behaviour: some participants moved in real life, concluding the experiment without touching the joystick, while others moved almost exclusively with the joystick because they were afraid of bumping into something in real life.

Table 1: Results from the Flow Short Scale Questionnaire.

| | Average | Standard Deviation |
|---|---|---|
| Flow | 5.525 | 0.667 |
| Anxiety | 3.85 | 1.512 |
| Challenge | 4 | 0.459 |

4.2 Biological Signals

As mentioned previously, this experiment includes

the recording of electrodermal activity and heart rate

of the participants. Later the acquired data is to be

processed for further analysis. As an example, the

raw data collected from Bitalino’s EDA sensor is con-

verted to microsiemens using a formula provided in

Bitalino’s Electrodermal Activity (EDA) Sensor User

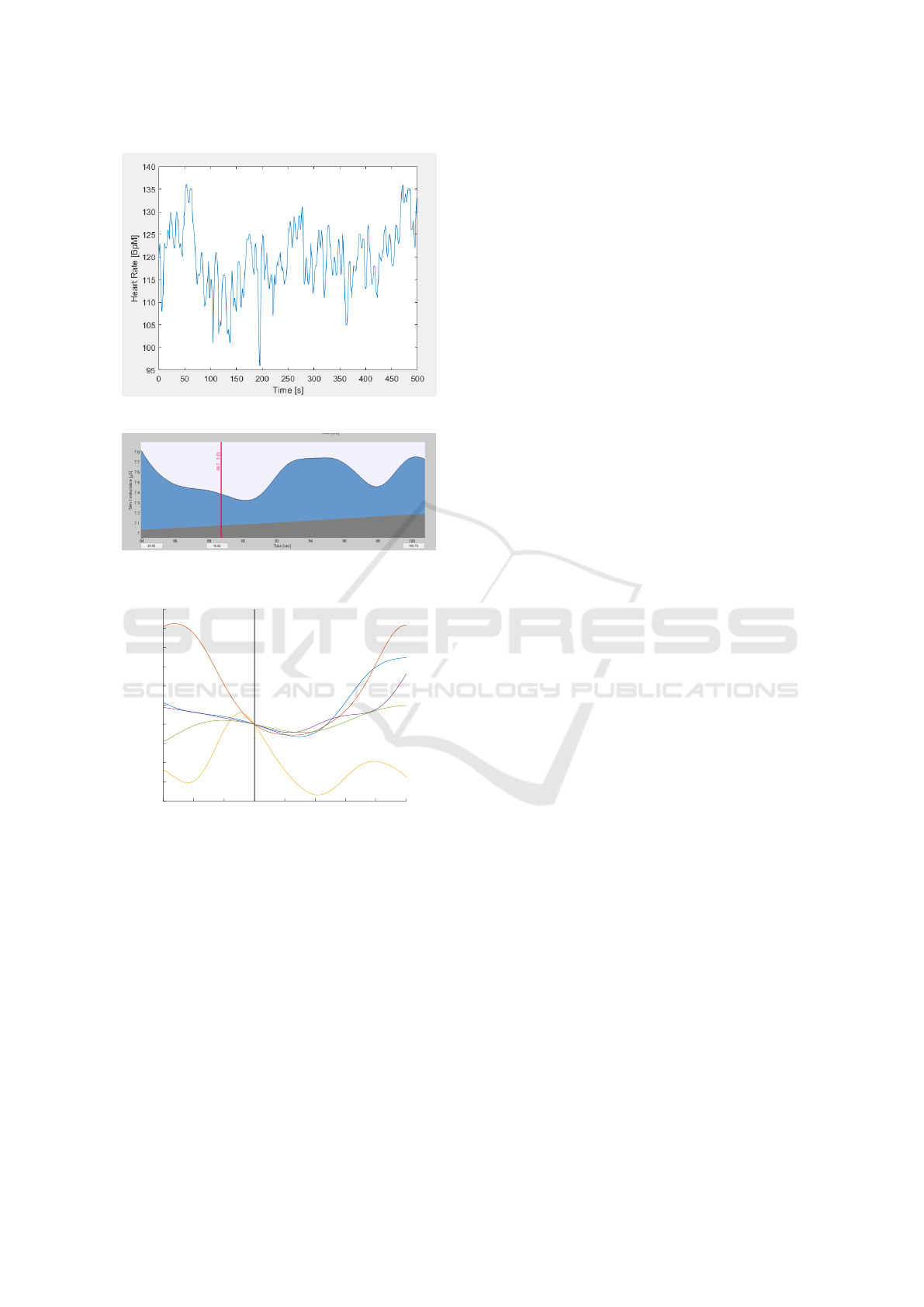

Manual (PLUX - Wireless Biosignals, 2020). Figure

15 (top) shows an example of a recorded EDA sig-

nal and figure 16 shows the HR of the same participant. The

blue vertical lines in figure 15 mark the occurrences of a spe-

cific event: the clock starting to tick.
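The conversion from raw samples to microsiemens can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes a transfer function of the form EDA = ((ADC / 2^n) × VCC) / gain, and the default values (`n_bits=10`, `vcc=3.3`, `gain=0.132`) are assumptions that should be checked against the sensor manual cited above. The function name `eda_raw_to_microsiemens` is hypothetical.

```python
def eda_raw_to_microsiemens(adc_value, n_bits=10, vcc=3.3, gain=0.132):
    """Convert one raw ADC sample to skin conductance in microsiemens.

    Assumed transfer function: EDA = ((ADC / 2**n_bits) * VCC) / gain.
    The default constants are assumptions; verify them in the sensor manual.
    """
    if not 0 <= adc_value < 2 ** n_bits:
        raise ValueError("ADC value outside the range of the channel resolution")
    # Scale the sample to a voltage, then divide by the sensor gain.
    return (adc_value / 2 ** n_bits) * vcc / gain


if __name__ == "__main__":
    # A mid-scale sample on a 10-bit channel (illustrative value only).
    print(eda_raw_to_microsiemens(512))
```

Keeping the constants as parameters makes it easy to adapt the sketch to a different channel resolution or sensor revision.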

As one of the objectives of this work is to try to

identify the influence of the simulation events on the

subject’s anxiety and stress, we expect to observe the

corresponding response in the acquired bio-signals.

Figure 15: Top: EDA signal of a participant. Bottom: Con-

tinuous Decomposition Analysis of the EDA signal.

Nevertheless, these signals do not have any kind of

absolute scale, as their baselines and responses vary

from person to person. EDA signals, for example,

can be divided into two parts: the

tonic component, or Skin Conductance Level (SCL)

which changes only slightly within tens of seconds,

and the phasic component or Skin Conductance Re-

sponse (SCR). The SCR represents a rapid change

that happens shortly after the onset of a stimulus, usu-

ally 1 to 5 seconds, and thus, it can be used to detect a

possible reaction to an event. When a response occurs

in the absence of a stimulus, it is called a non-specific

SCR.

To perform analysis on this signal, the free open-

source software Ledalab was used (Benedek and

Kaernbach, 2010a; Benedek and Kaernbach, 2010b).

The recorded signal is noisy and is recorded at a high

sample rate, so before the actual processing, it is nec-

essary to first downsample it and apply a moving av-

erage smoothing.
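The two preprocessing steps mentioned above can be sketched as follows. This is an illustrative sketch using NumPy, not Ledalab's implementation: the function names, the block-averaging approach to downsampling and the edge padding are assumptions, and the sample rates and window length in the usage example are hypothetical.

```python
import numpy as np


def downsample(signal, factor):
    """Reduce the sample rate by averaging consecutive blocks of `factor` samples."""
    signal = np.asarray(signal, dtype=float)
    usable = (len(signal) // factor) * factor  # drop the incomplete trailing block
    return signal[:usable].reshape(-1, factor).mean(axis=1)


def moving_average(signal, window):
    """Smooth with a centred moving average, padding both ends with edge values."""
    signal = np.asarray(signal, dtype=float)
    pad_left = window // 2
    pad_right = window - 1 - pad_left
    padded = np.pad(signal, (pad_left, pad_right), mode="edge")
    kernel = np.ones(window) / window
    # "valid" mode on the padded signal returns as many samples as the input.
    return np.convolve(padded, kernel, mode="valid")


if __name__ == "__main__":
    # Hypothetical recording: 10 s at 1000 Hz, reduced to 10 Hz and smoothed.
    rng = np.random.default_rng(0)
    raw = 5.0 + 0.05 * rng.standard_normal(10_000)
    filtered = moving_average(downsample(raw, factor=100), window=8)
    print(len(filtered))
```

Averaging each block before discarding samples acts as a simple low-pass filter, which limits aliasing when the rate is reduced.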

The filtered signal can then be analysed using a

Continuous Decomposition Analysis, extracting the

tonic and phasic activities (figure 15 (bottom)). In the

case of the example given (figure 15), event-related

activations are detected in the first, second and fourth

events. We can confirm these results through visual

analysis. After the first event (figure 17), a sudden

change in the skin conductance is observed with a la-

tency between 2 and 3 seconds. This is in accordance

with the results obtained, which indicate an SCR af-

ter the first event, with a latency of 2.7 seconds. In

figure 18 it is possible to recognise the reaction of

five participants the first time the clock started tick-

ing. The event is represented by a vertical black line

and the conductivity values of each participant are relative to the conductivity measured when the event occurred.

Figure 16: HR of a participant.

Figure 17: EDA response decomposition after the first event: Gray: tonic component, Blue: phasic component.

Figure 18: EDA responses to the clock ticking event, for 5 participants. [Plot: EDA [uS], from -0.4 to 0.6, versus Time [ms], from 0 to 8000.]

At the time of the event, the EDA of all partici-

pants was decreasing and in a window of 1 to 4 sec-

onds after the event it changed its behaviour, present-

ing a positive slope variation. These correspond to

SCRs, which indicate a reaction to the ticking clock.
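The visual check described above can be approximated in code. The following is a rough sketch, not the Continuous Decomposition Analysis performed by Ledalab: it expresses the signal relative to its value at the event and tests whether a non-positive slope turns positive inside a 1 to 4 second window. The function names, the slope criterion and the synthetic signal in the usage example are assumptions made for illustration.

```python
import numpy as np


def event_relative(eda, fs, event_time, duration):
    """Return the EDA segment after an event, relative to the value at the event."""
    eda = np.asarray(eda, dtype=float)
    start = int(round(event_time * fs))
    stop = start + int(round(duration * fs)) + 1
    segment = eda[start:stop]
    return segment - segment[0]


def slope_turns_positive(segment, fs, window=(1.0, 4.0)):
    """Check whether a non-positive slope becomes positive inside the window."""
    slope = np.diff(segment) * fs  # microsiemens per second
    lo, hi = int(round(window[0] * fs)), int(round(window[1] * fs))
    before = slope[:lo]   # slope between the event and the start of the window
    inside = slope[lo:hi]  # slope inside the response window
    return bool(before.size and inside.size
                and before.mean() <= 0 and inside.max() > 0)


if __name__ == "__main__":
    fs = 10  # Hz, hypothetical rate after downsampling
    # Synthetic signal: decreasing for 4 s, then rising (illustrative only).
    eda = np.concatenate([np.linspace(5, 4, 40, endpoint=False),
                          np.linspace(4, 5, 61)])
    rel = event_relative(eda, fs, event_time=2.0, duration=8.0)
    print(slope_turns_positive(rel, fs))
```

A slope test of this kind is sensitive to noise, so it would normally be applied only to the smoothed signal and confirmed by inspection.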

5 CONCLUSIONS

From this pilot experiment, we can extract some valu-

able information, even if only the questionnaires were

processed completely for the whole group of partici-

pants. First, from the UEQ, we con-

clude that the overall look of the simulation and the interaction

within it are satisfactory. This is an important aspect to

take into account because the more people test a prod-

uct, the more reliable the results are, and having them

enjoy the time spent with that product is a means to

attract more people into testing it.

Analysing the answers, the high results of the at-

tractiveness, perspicuity and stimulation categories,

together with the opinions of the participants, lead

us to believe that the objective of providing an ad-

equate VR-based alternative to real scenario exper-

iments was achieved. The lower score on the effi-

ciency category reveals that the interaction is less ef-

ficient than desired, as mentioned previously. Given

that the robot arm is moving at the maximum rec-

ommended speed in the last difficulty level, one way

to speed up the interaction and improve its efficiency

would be to use both of Baxter’s arms. In an ideal

situation, the robot would be waiting for the human

to complete his task and not the other way around.

This way the human would not feel like the robot was

holding him back, but instead, feel like it was helping

him.

The fact that most participants almost ignored the

existence of the transporter robot was not expected,

but it reveals that their focus was towards the interac-

tion and the task at hand, which ultimately is a good

thing. On the other hand, the fact that the ticking clock and sur-

rounding sounds caused the most distraction suggests

that the most effective distractions in these types of ex-

periments rely heavily on auditory cues.

Another aspect to take into account when dealing with virtual reality applications is the surrounding space. Even with enough physical space to move around, some users still prefer to move with a joystick for fear of bumping into an object. Moving with the joystick causes motion sickness in most cases, especially if the user relies exclusively on this type of motion. The option of using the joystick was left available so that users could make small adjustments to their position in VR to better match it to the real-world space. The fact that some users chose to rely only on this type of movement was unexpected and, since it can influence the results, this possibility should be removed in future work.

One detail that also induced stress in some users,

mainly the same users that used only the joystick

movement, was the wire that connects the Oculus

Quest to the computer and the fear they would be-

come entangled. To have the best possible results, the

space that the users have to move around should be

bigger than the one in the simulation and, ideally, the

simulation should be able to run using only a wire-

free headset so that the users can move around freely

without worrying about cables.

Future work will include a deeper analysis of the acquired biosignals, relating them to the user movements and event responses recorded for the whole group of participants. It would also be interesting to use additional signals, such as eye movements, to detect anxiety.

REFERENCES

Al-Yacoub, A., Buerkle, A., Flanagan, M., Ferreira, P.,

Hubbard, E.-M., and Lohse, N. (2020). Effec-

tive human-robot collaboration through wearable sen-

sors. In 2020 25th IEEE International Conference

on Emerging Technologies and Factory Automation

(ETFA), volume 1, pages 651–658.

Aqajari, S. A. H., Naeini, E. K., Mehrabadi, M. A., Labbaf,

S., Rahmani, A. M., and Dutt, N. (2020). GSR analy-

sis for stress: Development and validation of an open

source tool for noisy naturalistic GSR data.

Benedek, M. and Kaernbach, C. (2010a). A continuous

measure of phasic electrodermal activity. Journal of

Neuroscience Methods, 190(1):80–91.

Benedek, M. and Kaernbach, C. (2010b). Decomposition

of skin conductance data by means of nonnegative de-

convolution. Psychophysiology, 47(4):647–658.

Bi, L., Fan, X.-A., and Liu, Y. (2013). EEG-based brain-

controlled mobile robots: A survey. IEEE Transactions on

Human-Machine Systems, 43:161–176.

Blow, M., Dautenhahn, K., Appleby, A., Nehaniv, C. L.,

and Lee, D. (2006). The art of designing robot faces:

Dimensions for human-robot interaction. In Proceed-

ings of the 1st ACM SIGCHI/SIGART Conference on

Human-Robot Interaction, HRI ’06, pages 331–332,

New York, NY, USA. Association for Computing Ma-

chinery.

da Silva, H. P., Guerreiro, J., Lourenço, A., Fred, A., and

Martins, R. (2014). Bitalino: A novel hardware frame-

work for physiological computing. In Proceedings of

the International Conference on Physiological Com-

puting Systems - Volume 1: PhyCS, pages 246–253.

INSTICC, SciTePress.

de Giorgio, A., Romero, M., Onori, M., and Wang, L.

(2017). Human-machine collaboration in virtual re-

ality for adaptive production engineering. Procedia

Manufacturing, 11:1279 – 1287. 27th International

Conference on Flexible Automation and Intelligent

Manufacturing, FAIM2017, 27-30 June 2017, Mod-

ena, Italy.

Duguleana, M., Barbuceanu, F. G., and Mogan, G. (2011).

Evaluating human-robot interaction during a manipu-

lation experiment conducted in immersive virtual re-

ality. In Shumaker, R., editor, Virtual and Mixed Real-

ity - New Trends, pages 164–173, Berlin, Heidelberg.

Springer Berlin Heidelberg.

Hussein, A., Garcia, F., and Olaverri Monreal, C. (2018).

ROS and Unity based framework for intelligent vehi-

cles control and simulation.

Kidd, C. D. and Breazeal, C. (2008). Robots at home:

Understanding long-term human-robot interaction. In

2008 IEEE/RSJ International Conference on Intelli-

gent Robots and Systems, pages 3230–3235.

Knudsen, M. and Kaivo-oja, J. (2020). Collaborative

robots: Frontiers of current literature. Journal of In-

telligent Systems: Theory and Applications, 3:13–20.

Krüger, J., Lien, T., and Verl, A. (2009). Cooperation of

human and machines in assembly lines. CIRP Annals,

58(2):628–646.

Laugwitz, B., Held, T., and Schrepp, M. (2008). Construc-

tion and evaluation of a user experience questionnaire.

volume 5298, pages 63–76.

Liu, H., Qu, D., Xu, F., Zou, F., Song, J., and Jia, K. (2019).

A human-robot collaboration framework based on hu-

man motion prediction and task model in virtual envi-

ronment. In 2019 IEEE 9th Annual International Con-

ference on CYBER Technology in Automation, Con-

trol, and Intelligent Systems (CYBER), pages 1044–

1049.

Lutin, E., Hashimoto, R., De Raedt, W., and Van Hoof, C.

(2021). Feature extraction for stress detection in elec-

trodermal activity. In BIOSIGNALS, pages 177–185.

Maragkos, C., Vosniakos, G.-C., and Matsas, E. (2019).

Virtual reality assisted robot programming for hu-

man collaboration. Procedia Manufacturing, 38:1697

– 1704. 29th International Conference on Flexible

Automation and Intelligent Manufacturing ( FAIM

2019), June 24-28, 2019, Limerick, Ireland, Beyond

Industry 4.0: Industrial Advances, Engineering Edu-

cation and Intelligent Manufacturing.

Matsas, E. and Vosniakos, G.-C. (2017). Design of a vir-

tual reality training system for human–robot collabo-

ration in manufacturing tasks. International Journal

on Interactive Design and Manufacturing (IJIDeM),

11(2):139–153.

PLUX - Wireless Biosignals (2020). Electro-

dermal activity (eda) sensor user manual.

https://bitalino.com/storage/uploads/media/

electrodermal-activity-eda-user-manual.pdf. Ac-

cessed: 2021-09-27.

Rheinberg, F., Vollmeyer, R., and Engeser, S. (2006). Die

Erfassung des Flow-Erlebens.

Çürüklü, B., Dodig-Crnkovic, G., and Akan, B. (2010).

Towards industrial robots with human-like moral re-

sponsibilities. In 2010 5th ACM/IEEE International

Conference on Human-Robot Interaction (HRI), pages

85–86.

Stanford Artificial Intelligence Laboratory et al. (2020).

Robotic operating system.

Swartz Center for Computational Neuroscience (2021).

Labstreaminglayer. https://github.com/sccn/

labstreaminglayer. Accessed: 2021-09-27.

GRAPP 2022 - 17th International Conference on Computer Graphics Theory and Applications
