Brainy Home: A Virtual Smart Home and Wheelchair Control Application Powered by Brain Computer Interface

Cihan Uyanik (1,a), Muhammad Ahmed Khan (1,b), Rig Das (1,c), John Paulin Hansen (2,d) and Sadasivan Puthusserypady (1,e)

1 Department of Health Technology, Technical University of Denmark, 2800 Kgs. Lyngby, Denmark

2 Department of Technology, Management and Economics, Technical University of Denmark, 2800 Kgs. Lyngby, Denmark

Keywords:

Brain Computer Interface, Virtual Smart Home, Wheelchair Control, Steady State Visual Evoked Potential.

Abstract:

In recent years, smart home applications have become increasingly important for improving quality of life, especially for people with motor disabilities. Smart home applications are typically controlled with interaction tools such as mobile phones, voice commands, and hand gestures, but these may not be appropriate for people with severe conditions that impact their motor functions, for instance locked-in syndrome (LIS), amyotrophic lateral sclerosis (ALS), cerebral palsy, or stroke. In this research, we have developed a smart home and wheelchair control application in a virtual environment, which is controlled solely by a steady-state visual evoked potential (SSVEP) based brain computer interface (BCI) system. The system is relatively low cost, wireless, and easy to set up, and it offers high accuracy. It has been tested on 15 healthy subjects, and the preliminary results show that all subjects completed the device interaction tasks with approximately 100% accuracy and the wheelchair navigation tasks with an average accuracy above 90%. These results indicate that the developed system could in future be used for real-time interfacing with assistive devices and smart home appliances. The proposed system may thus play a vital role in empowering people with disabilities to perform daily-life activities independently.

1 INTRODUCTION

Neural disorders, such as stroke, can cause long-term or even permanent disability, with detrimental social and economic effects. The approximately 25.7 million stroke survivors worldwide (Johnson et al., 2019) need assistance because their physical limitations restrict their ability to interact with their surroundings. This makes them highly dependent on caregivers, even for routine daily activities. The development of portable and easy-to-use assistive systems is therefore crucial, as such systems may empower people with disabilities to live independently.

Advancements in internet-enabled devices (the Internet of Things, IoT) have facilitated smart home systems (Del Rio et al., 2020). Although there are numerous applications within this field, almost all of them depend heavily on mobile phones or other interaction systems such as voice commands and hand gestures. However, people who have serious motor control challenges (as a consequence of, for example, locked-in syndrome (LIS), amyotrophic lateral sclerosis (ALS), cerebral palsy, stroke, or spinal cord injuries) are restricted from taking full advantage of the available home automation technologies.

a https://orcid.org/0000-0003-3852-9164

b https://orcid.org/0000-0002-8696-4227

c https://orcid.org/0000-0001-9463-4298

d https://orcid.org/0000-0001-5594-3645

e https://orcid.org/0000-0001-7564-2612

Brain computer interface (BCI) based control methodologies, which exploit the subject's electroencephalogram (EEG) signals to control external devices, have made significant progress in recent years. In such systems, the target brain responses/activities are measured and a decision-making system is implemented to interact with the external devices (Mistry et al., 2018). This supports people who have difficulty completing tasks physically (Zhuang et al., 2020). The development of BCI-assisted devices has attracted great interest from the scientific community, motivated by the goal of helping people who need assistance in daily tasks because of their health limitations (Belkacem et al., 2020).


Uyanik, C., Khan, M., Das, R., Hansen, J. and Puthusserypady, S.

Brainy Home: A Virtual Smart Home and Wheelchair Control Application Powered by Brain Computer Interface.

DOI: 10.5220/0010785800003123

In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2022) - Volume 1: BIODEVICES, pages 134-141

ISBN: 978-989-758-552-4; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

EEG-BCI systems are based mainly on three paradigms: P300, motor imagery (MI), and steady-state visual evoked potential (SSVEP). Of these three, SSVEP-based systems tend to dominate real-world applications due to their high bandwidth, minimal training time, and fast calibration (Faller et al., 2017). Additionally, the use of an external stimulus source allows integration with other techniques such as augmented reality (AR) and eye tracking (Putze et al., 2019). Saboor et al. (2017) developed an SSVEP-BCI system stimulated through AR glasses and achieved over 85% accuracy on the designed control tasks, which included an elevator, a coffee machine, and light switches. Similarly, Park et al. (2020) developed an SSVEP-BCI system with an AR headset; they reported a high information transfer rate (ITR) of 37.4 bits/min and a fast activation response (2.6 seconds) for turning devices on and off. In another study, Lin and Hsieh (2014) developed a television control system and attained almost 99% accuracy with a command activation time of 4.8 seconds. Adams et al. (2019) created an SSVEP-BCI smart home application that controls six devices through multiple screens placed at different locations inside the environment, achieving an average accuracy of 81%.

Although the aforementioned systems have produced significant results, mobility remains a vital concern because their setups, especially the signal acquisition tools, are wired, bulky, effort-demanding, and expensive. In this paper, we demonstrate a novel, cost-effective, user-friendly, and easy-to-set-up wireless SSVEP-BCI for controlling a smart home and wheelchair application in a virtual environment.

2 MATERIALS AND METHODS

2.1 SSVEP-BCI and Data Acquisition Setup

In SSVEP-BCI systems, a visual stimuli is responsi-

ble to present visual triggers, and each of them has

unique frequencies. When a user concentrate on one

of these visual triggers (named as flickering patterns),

a neural activation uniquely matching the focused pat-

tern occurs in the visual cortex of the user. These

neural activation is measurable via EEG acquisition

device, and the acquired signal could be processed to

decode the generated unique signature. If any visual

pattern is dedicated to perform an action based on the

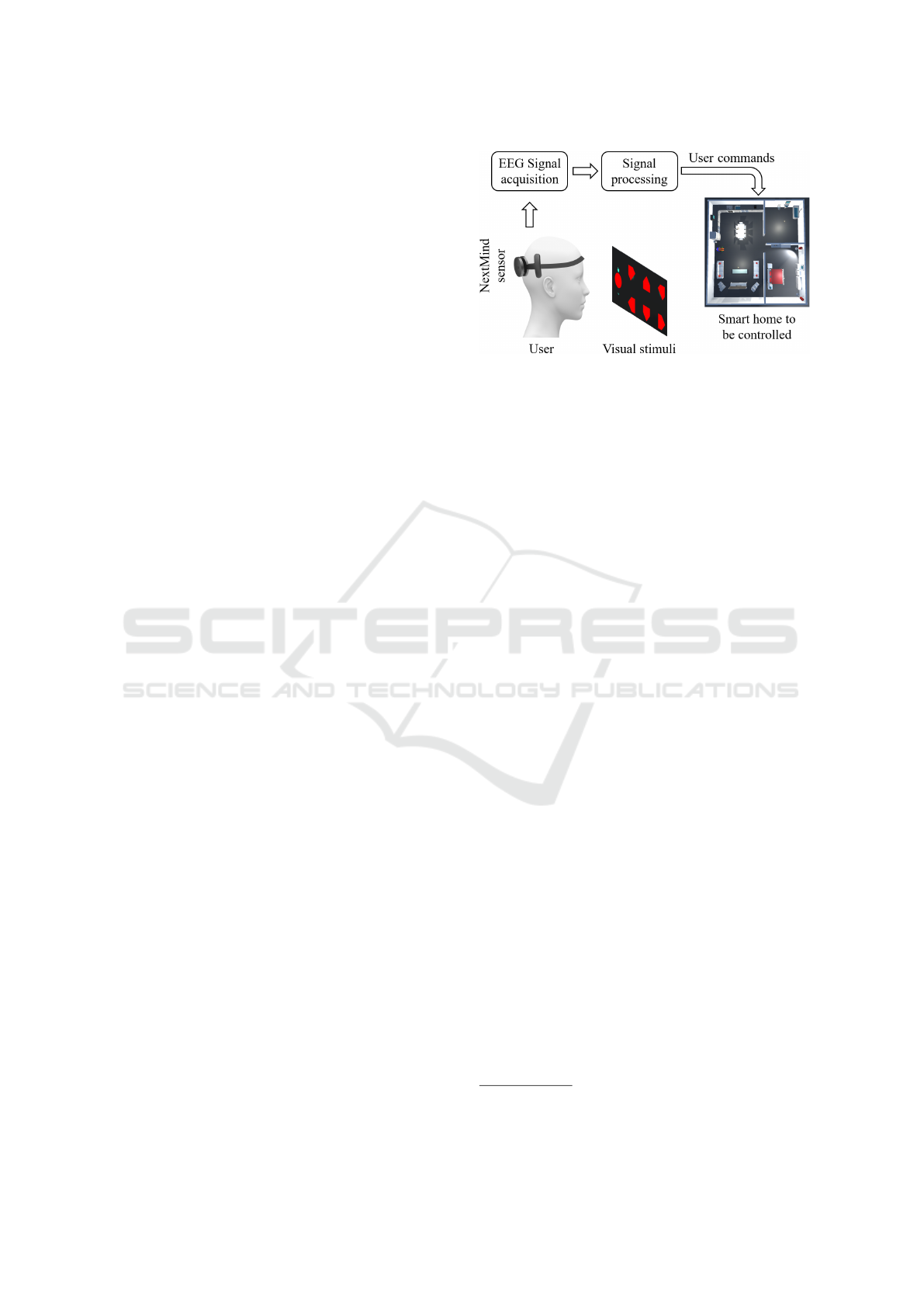

Figure 1: Schematic of SSVEP-BCI system.

current state of the controlled system/environment, an

accurate signal analysis scheme produces the correct

user commands. The described procedure is depicted

in Figure 1.
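The decoding step described above can be illustrated with a minimal sketch. It is not the NextMind device's decoder, which the paper does not describe; it only shows the generic idea of picking the flicker frequency with the strongest spectral power in a recorded trace. The sampling rate, the candidate frequencies, and the synthetic signal are all assumptions made for illustration.

```python
import math

def band_power(signal, fs, freq):
    """Power of `signal` at `freq` (Hz), via correlation with a cosine/sine pair."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal) ** 2

def detect_target(signal, fs, candidate_freqs):
    """Return the candidate flicker frequency with the largest spectral power."""
    return max(candidate_freqs, key=lambda f: band_power(signal, fs, f))

# Synthetic one-second trace (assumed data): a 12 Hz response plus a weaker
# 50 Hz interference term standing in for mains noise.
FS = 250  # sampling rate in Hz (assumed, not taken from the paper)
eeg = [
    math.sin(2 * math.pi * 12 * n / FS) + 0.3 * math.sin(2 * math.pi * 50 * n / FS)
    for n in range(FS)
]

# Hypothetical flicker frequencies, one per control pattern.
detected = detect_target(eeg, FS, [8.0, 10.0, 12.0, 15.0])
print(detected)
```

In practice, SSVEP decoders usually combine several electrodes and harmonics; the single-channel power comparison here is only the simplest instance of the principle.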

For the development of a user-friendly and portable BCI system for the smart home and wheelchair control application, the selection of a simple and robust EEG acquisition system is of utmost importance. For this, we used the NextMind SSVEP-BCI device, which is non-invasive and compact, and has nine high-quality dry electrodes covering the visual cortex area of the brain. It contains a Bluetooth communication module that wirelessly transmits the acquired EEG signals to the computing unit. The computer acts as the main processing unit, which is responsible for running the 3D simulation environment, receiving and analyzing the EEG signals, determining the focused control pattern, and recording experimental data.

2.2 3D Environment for Control Application

Smart home and wheelchair control environment are

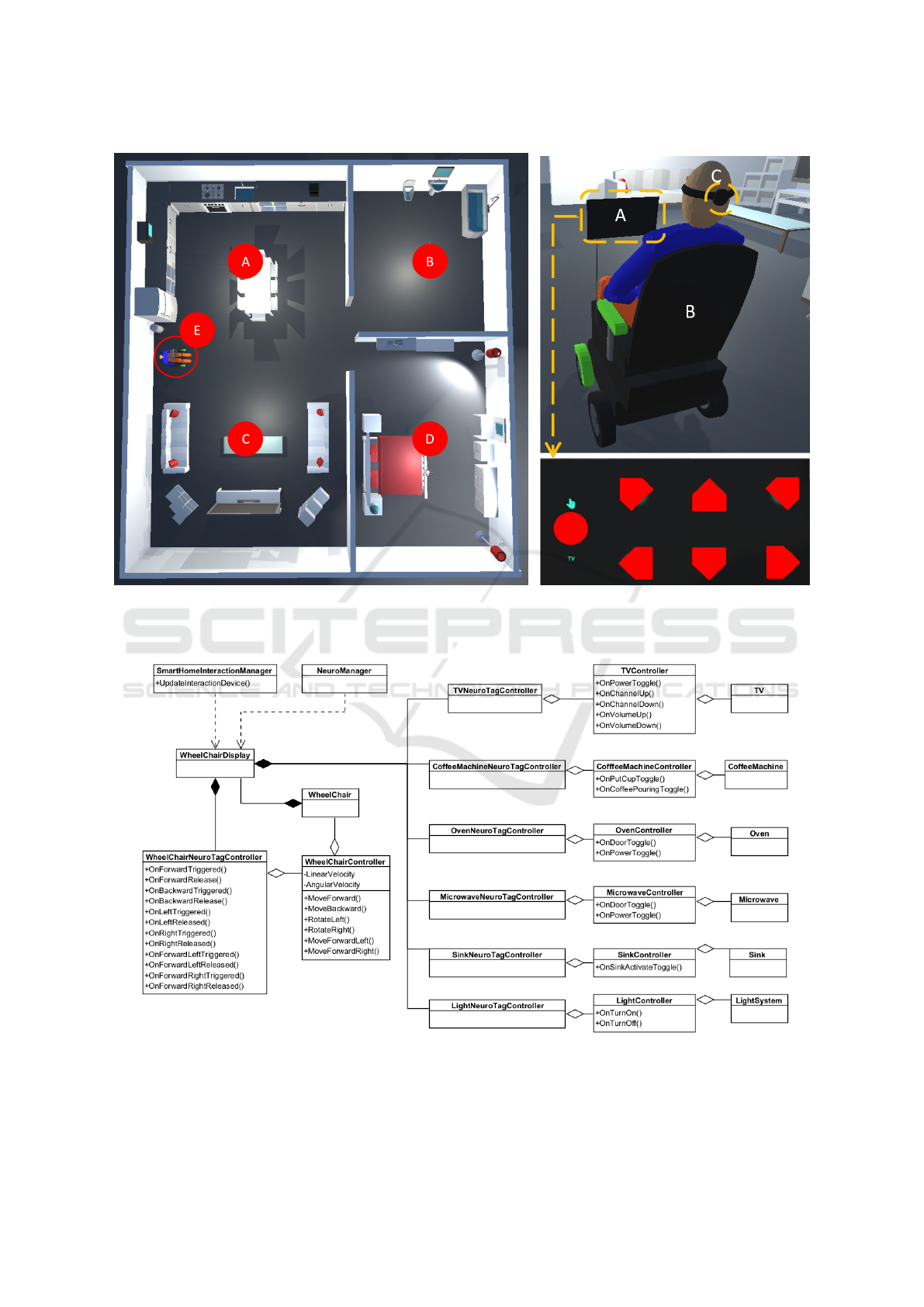

developed (Figure 2a) in Unity 3D Game Engine

1

.

The environment contains a wheelchair and various

types of daily life home appliances which are con-

trolled by the user with the BCI setup. This BCI setup

contains the NextMind device and SSVEP based in-

put screen installed on the wheelchair (Figure 2b).

More details on the developed smart home environ-

ment and wheelchair can be found at the provided

video link

2

.

The system software for the wheelchair and smart home appliances is implemented in the C# programming language, which is one of the officially supported development languages of the Unity Engine. The system software architecture is depicted in Figure 3.

1 https://unity.com/

2 https://youtu.be/PqelTCLJ77U


Figure 2: (a) Smart home environment (A: Kitchen, B: Bathroom, C: Living room, D: Bedroom, E: Subject on the wheelchair with NextMind device). (b) Wheelchair and subject (A: Control display, B: Wheelchair, C: NextMind device).

Figure 3: Software architecture of the smart home and wheelchair control system.

BIODEVICES 2022 - 15th International Conference on Biomedical Electronics and Devices


To keep the diagram simple, only the most important classes and their crucial functionalities are shown in the class blocks.

As seen from the class diagram, all smart home appliances and the wheelchair are controlled through WheelChairDisplay, which is the primary interaction device of the user, who wears the NextMind SSVEP-BCI acquisition device. SmartHomeInteractionManager is responsible for keeping the control display valid in terms of available interaction patterns. When the wheelchair is close to a BCI-controllable appliance (television, coffee machine, etc.), it forces the display to show a sub-control menu selection pattern, as shown in Figure 2b.
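The proximity rule described above can be sketched as follows. The paper's implementation is in C# inside Unity and is not shown, so this Python fragment is only an illustration: the function name, the 1.5 m trigger radius, and the appliance coordinates are hypothetical.

```python
import math

def active_menu(wheelchair_xy, appliances, radius=1.5):
    """Return the nearest appliance within `radius` metres, else 'navigation'.

    `appliances` maps an appliance name to its (x, y) position.
    The radius is an assumed value, not one reported in the paper.
    """
    best_name, best_dist = "navigation", radius
    for name, xy in appliances.items():
        d = math.dist(wheelchair_xy, xy)
        if d <= best_dist:
            best_name, best_dist = name, d
    return best_name

# Hypothetical appliance layout in metres.
appliances = {"television": (4.0, 1.0), "coffee_machine": (0.5, 6.0)}

menu_far = active_menu((2.0, 3.0), appliances)   # no appliance in range
menu_near = active_menu((3.5, 1.5), appliances)  # about 0.7 m from the television
print(menu_far, menu_near)
```

Choosing the nearest appliance, rather than the first one found in range, avoids ambiguity when two appliances are close to each other.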

When the subject focuses on a control pattern (red patterns on the display) to start an action, NeuroManager triggers the corresponding NeuroTagController, such as the WheelChairNeuroTagController or the TVNeuroTagController. Finally, the input signal is delivered to the relevant device controller (WheelChairController, TVController), and the action demanded by the user (via brain signals) is performed by the device.
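The dispatch chain described above (focused pattern, then NeuroManager, then a NeuroTagController, then a device controller) can be sketched in a few lines. The class names echo those in the paper, but the methods, the pattern identifiers, and the action names are assumptions made for illustration; the actual system is written in C# and its interfaces are not given in the text.

```python
class TVController:
    """Hypothetical device controller: holds device state and applies actions."""

    def __init__(self):
        self.powered = False

    def apply(self, action):
        if action == "toggle_power":
            self.powered = not self.powered


class NeuroTagController:
    """Maps a focused pattern ID to an action on one device controller."""

    def __init__(self, device, pattern_to_action):
        self.device = device
        self.pattern_to_action = pattern_to_action

    def on_focus(self, pattern_id):
        self.device.apply(self.pattern_to_action[pattern_id])


class NeuroManager:
    """Routes each decoded pattern to the tag controller registered for it."""

    def __init__(self):
        self.routes = {}

    def register(self, controller):
        for pattern_id in controller.pattern_to_action:
            self.routes[pattern_id] = controller

    def trigger(self, pattern_id):
        controller = self.routes.get(pattern_id)
        if controller is None:
            return False  # unknown pattern: ignore it
        controller.on_focus(pattern_id)
        return True


tv = TVController()
manager = NeuroManager()
manager.register(NeuroTagController(tv, {"tv_B": "toggle_power"}))

handled = manager.trigger("tv_B")     # pattern B on the TV menu (assumed ID)
ignored = manager.trigger("unknown")  # no controller registered for this ID
print(handled, tv.powered, ignored)
```

Keeping the pattern-to-action mapping in the tag controller means a new appliance can be added by registering one more controller, without changing the manager.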

2.3 Participants

Fifteen healthy control subjects (three females and twelve males, with a mean age of 30 ± 8 years) voluntarily participated in the experiments. Two of them had prior experience with BCI systems, and the rest were naïve.

2.4 Experimental Design and Procedures

Three sets of experiments are designed to test the subjects' performance in controlling smart home appliances and navigating the wheelchair in the virtual environment via the BCI system. In each trial of an experiment, the participant is expected to complete the active trial as fast as possible. All experiments consist of a wheelchair navigation task combined with a device control task. While subjects are conducting the experiments, their focus states on navigation patterns, the corresponding wheelchair motions, the focus commands for external device control, and the relevant timing information are recorded for further analysis.

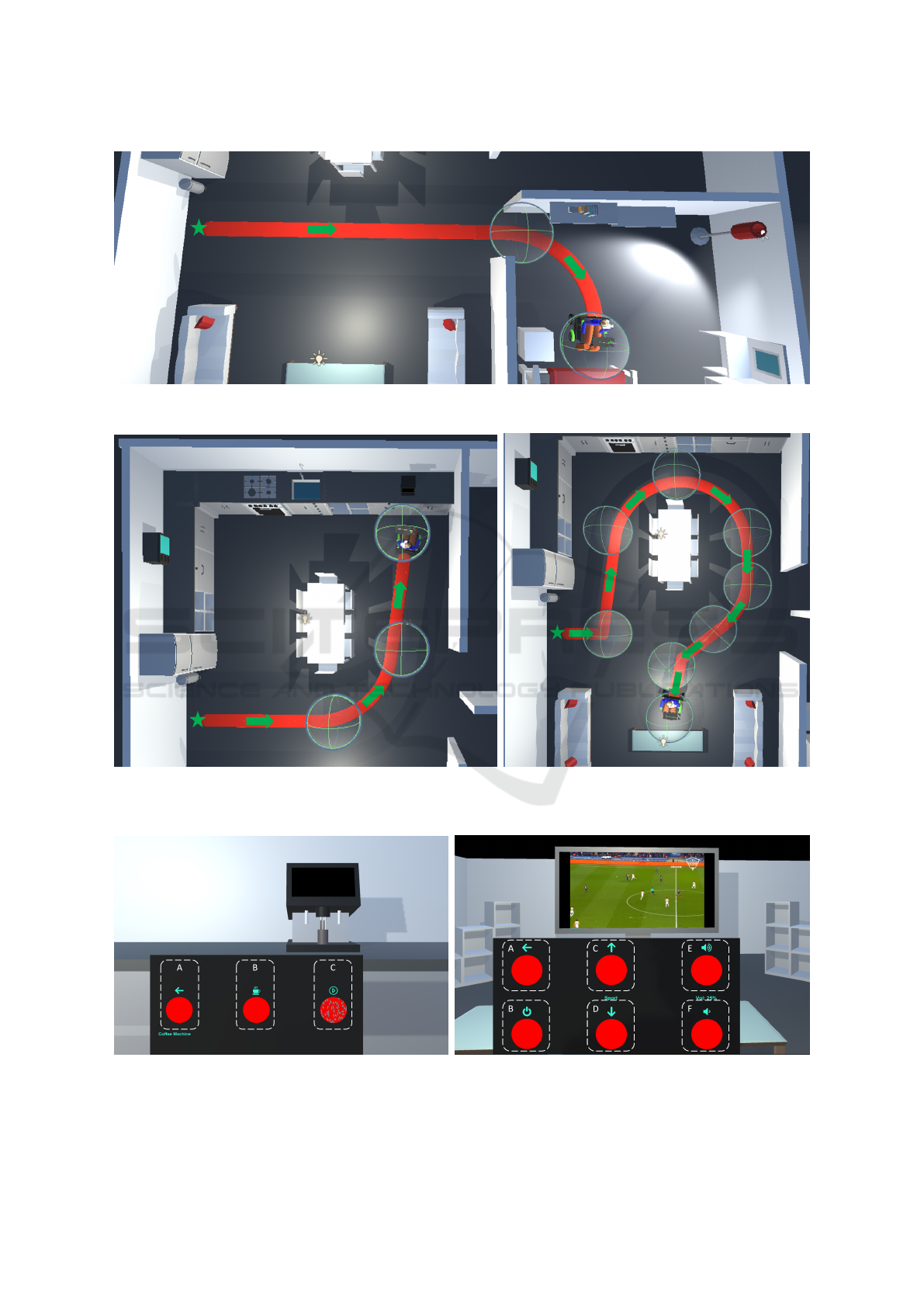

2.4.1 Experiment 1

This is the simplest of the three experiments. The objective is to move the wheelchair into the bedroom by focusing on the navigation control patterns located on the display (see Figure 2b). Figure 4 shows a completed trial and the wheelchair's path. The transparent spheres shown in the figure indicate the subsection waypoints of the trial. In addition to the navigation task, the subject must turn on the bedroom light when the related control pattern appears on the control display. In a real-life smart home, this would be implemented by position-based proximity interaction (Ballendat et al., 2010). This extra interaction task acts as an added distraction and increases the complexity of the overall trial. A video demonstration of this experiment is provided at the link in footnote 3.

2.4.2 Experiment 2

In experiment 2, the user has to complete a relatively complex navigation task, which has three waypoints, as shown in Figure 5a. At the end of the navigation task, the subject must perform a coffee machine interaction task, as shown in Figure 6a. To complete this task, the subject must focus on the patterns in the order B-C-C-B-A. This experiment is demonstrated in the video linked in footnote 4.

2.4.3 Experiment 3

Experiment 3 is the most complicated one, involving a series of navigation control actions. The path starts from the same location as in the first and second experiments and ends in front of the television. In this task (Figure 5b), the path contains eight waypoints, all of which must be visited. Upon completing the navigation task, a television interaction task (shown in Figure 6b) is presented to the subject. The expected control order is B-D-F-E-C-B-A. A detailed demonstration of this experiment can be found in the video linked in footnote 5.

3 RESULTS AND DISCUSSION

Each experiment is repeated three times by each subject, and the trials are recorded to analyze the overall system performance for each subject. Task completion time (TCT, in seconds) and task commanding success (TCS, in %) constitute the evaluation basis of the experiments.

3.1 Task Completion Time and Closeness

For a subject carrying out one of the experiments, the

average of trials’ duration is the TCT, which are pro-

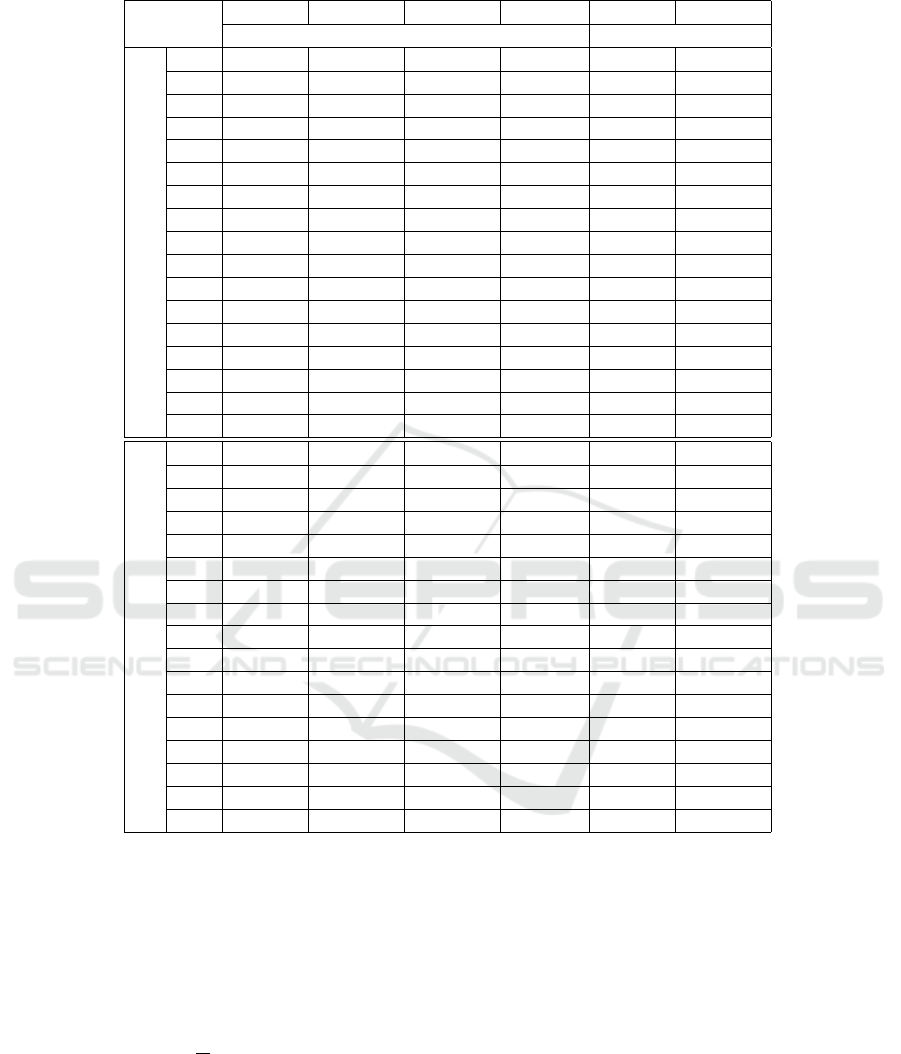

vided in Tables 1 and 2.

3 https://youtu.be/C3He41Whems

4 https://youtu.be/lmdQogPShT8

5 https://youtu.be/I2jeTJEY8eo


Figure 4: Experiment 1 navigation task (Spheres: Internal waypoints, Red lines: Motion trajectory, Green arrows: Movement direction).

Figure 5: (a) and (b): Experiments 2 and 3 navigation tasks (Spheres: Internal waypoints, Red lines: Motion trajectory, Green arrows: Movement direction).

Figure 6: (a) Experiment 2 interaction task, coffee machine (A: Return to wheelchair navigation control, B: Put/Remove coffee mug onto/from the tray, C: Start/Stop filling coffee and milk). (b) Experiment 3 interaction task, television (A: Return to wheelchair navigation control, B: Power On/Off Television, C/D: Next/Previous channel, E/F: Volume Up/Down).


Table 1: Results for Experiment 1.

| Subject | TCT (s) | CLS (%) | TCS (%) | E_traj (m) |
|---|---|---|---|---|
| R | 33.4 | - | - | - |
| S1 | 34.5 | 96.6 | 97.8 | 0.15 |
| S2 | 35.5 | 93.4 | 97.9 | 0.15 |
| S3 | 35.8 | 92.8 | 98.6 | 0.19 |
| S4 | 36.8 | 89.7 | 97.8 | 0.15 |
| S5 | 39.0 | 83.1 | 99.7 | 0.09 |
| S6 | 37.7 | 87.0 | 97.8 | 0.15 |
| S7 | 35.0 | 95.0 | 97.4 | 0.20 |
| S8 | 38.7 | 83.8 | 99.3 | 0.20 |
| S9 | 37.0 | 88.9 | 97.8 | 0.16 |
| S10 | 36.7 | 89.8 | 97.8 | 0.15 |
| S11 | 33.9 | 98.3 | 99.3 | 0.24 |
| S12 | 43.9 | 68.4 | 97.1 | 0.29 |
| S13 | 33.6 | 99.2 | 98.5 | 0.20 |
| S14 | 36.2 | 91.6 | 98.8 | 0.06 |
| S15 | 37.9 | 86.5 | 99.3 | 0.19 |
| Avg | 36.8 | 89.6 | 98.3 | 0.17 |

To obtain a reference baseline, the experiments are also conducted using a computer mouse for control instead of the BCI. In these trials, the user-related delay is minimized owing to the low latency of the mouse, which yields the lowest possible TCT. These trials serve as the comparison reference (R) for a subject's performance in terms of TCT and are given in the first row of each result table.

Closeness (CLS(%)) is the measurement of how a

subject’s timing is close to the reference (R) timing

in a given experiment. It is calculated using Eq.(1),

where TCT

s,e

is the TCT for a given subject, s for the

experiment, e. TCT

R,e

is the TCT for the reference R

for the corresponding experiment, e.

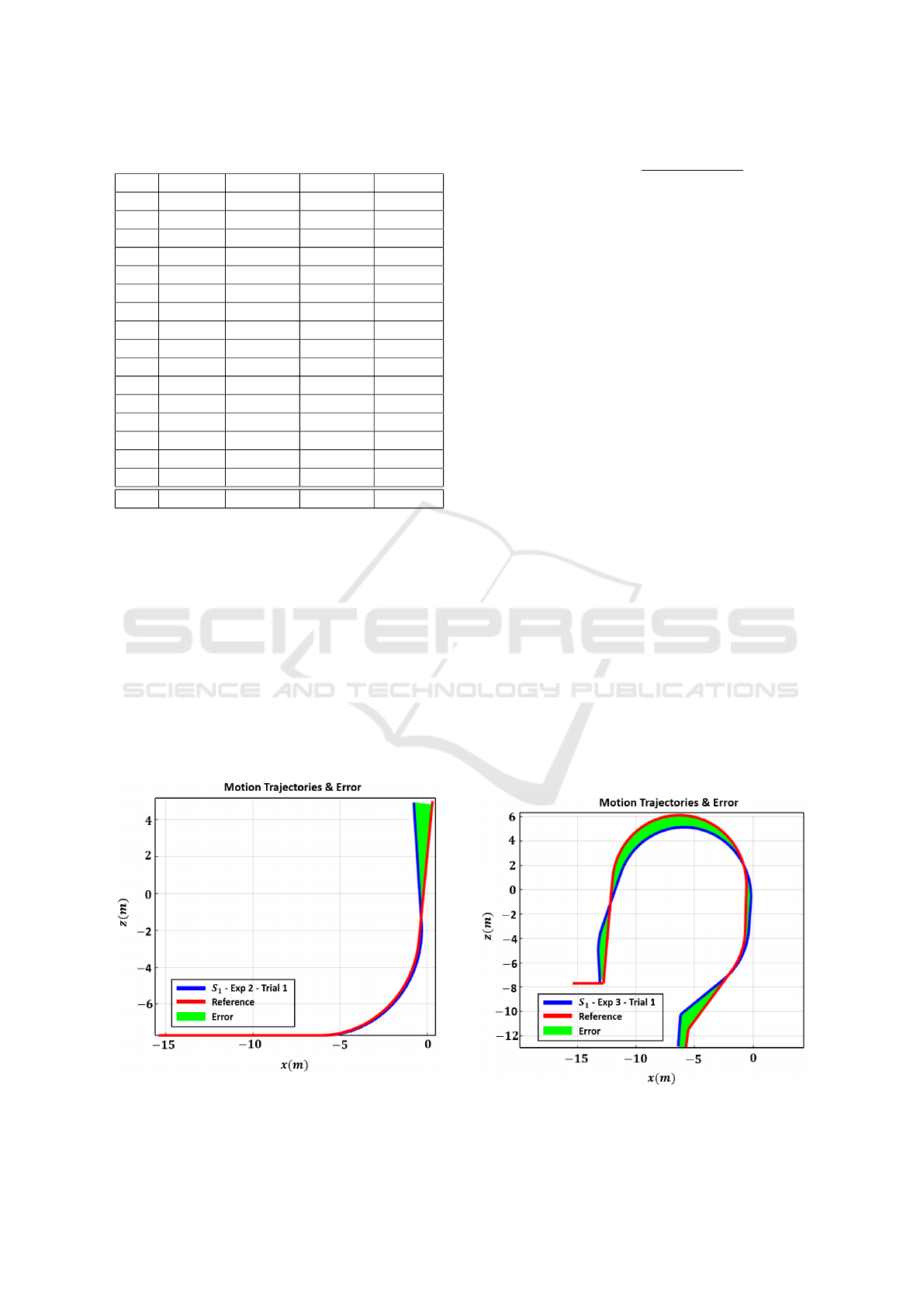

Figure 7: Motion trajectories and corresponding trajectory

errors for S

1

in experiment 2.

CLS(s, e) = 1 −

TCT

s,e

− TCT

R,e

TCT

R,e

. (1)
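As a worked check of Eq. (1), the short sketch below computes the closeness for S1 in experiment 1 from the rounded values in Table 1 (TCT of 34.5 s against a reference of 33.4 s). The function name is ours. The result is about 96.7%, slightly different from the tabulated 96.6%, presumably because the table was computed from unrounded times.

```python
def closeness(tct_subject, tct_reference):
    """Closeness per Eq. (1), as a fraction; multiply by 100 for a percentage."""
    return 1.0 - (tct_subject - tct_reference) / tct_reference

# S1 in experiment 1, using the rounded times reported in Table 1.
cls_s1 = closeness(34.5, 33.4)
print(f"{100 * cls_s1:.1f}%")
```

A subject who matches the reference time exactly gets a closeness of 1, and the value falls linearly as the completion time grows beyond the reference.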

The results show that the majority of the subjects completed the wheelchair navigation tasks with over 80% closeness; the exceptions are S12 in the first experiment (Table 1), S5 and S8 in the second experiment (Table 2, top block), and S4, S8, and S9 in the third experiment (Table 2, bottom block). S1 and S6 consistently achieve high closeness ratios with small fluctuations across experiments. This is likely because both of them have experience in using the NextMind device, so environmental factors (distractions) influence them less than the other subjects. Another important observation concerns the device interaction tasks for inexperienced users (in the second and third experiments). A marked improvement in closeness is observed from the second interaction task (coffee machine) to the third interaction task (television): the average interaction CLS rises from 85.2% to 96.1%, even though the television control task is more complicated than the coffee machine control task. This can be attributed to the subjects becoming more familiar with the smart home environment by the time they perform the television control task. These observations suggest that an inexperienced subject can complete the given task with a high closeness value after a very limited amount of training in the presented environment.

3.2 Task Commanding Success

Figure 8: Motion trajectories and corresponding trajectory errors for S1 in experiment 3.

Table 2: Experimental results for experiment 2 and 3 navigation and interaction tasks.

| Subject | Navigation TCT (s) | Navigation CLS (%) | Navigation TCS (%) | Navigation E_traj (m) | Interaction TCT (s) | Interaction CLS (%) |
|---|---|---|---|---|---|---|
| Experiment 2 | | | | | | |
| R | 28.1 | - | - | - | 15.9 | - |
| S1 | 30.0 | 93.3 | 97.6 | 0.17 | 16.1 | 98.5 |
| S2 | 30.9 | 89.8 | 93.0 | 0.16 | 17.7 | 88.5 |
| S3 | 28.6 | 98.1 | 98.3 | 0.15 | 16.8 | 94.3 |
| S4 | 28.9 | 97.2 | 94.1 | 0.17 | 19.9 | 74.3 |
| S5 | 36.9 | 68.8 | 96.8 | 0.17 | 20.8 | 68.5 |
| S6 | 32.0 | 86.0 | 97.7 | 0.18 | 17.9 | 86.8 |
| S7 | 32.3 | 85.0 | 92.1 | 0.15 | 18.9 | 81.0 |
| S8 | 35.5 | 73.6 | 95.2 | 0.18 | 19.2 | 78.9 |
| S9 | 29.3 | 95.6 | 97.7 | 0.18 | 16.9 | 93.1 |
| S10 | 28.8 | 97.6 | 98.1 | 0.19 | 18.2 | 85.0 |
| S11 | 28.6 | 98.3 | 97.6 | 0.17 | 18.6 | 83.0 |
| S12 | 30.5 | 91.5 | 98.5 | 0.22 | 19.1 | 79.3 |
| S13 | 29.5 | 95.0 | 97.7 | 0.17 | 18.0 | 86.3 |
| S14 | 28.1 | 99.8 | 92.4 | 0.22 | 17.4 | 90.2 |
| S15 | 30.8 | 90.4 | 98.5 | 0.14 | 17.3 | 90.7 |
| Avg | 30.7 | 90.7 | 96.4 | 0.17 | 18.2 | 85.2 |
| Experiment 3 | | | | | | |
| R | 67.5 | - | - | - | 20.9 | - |
| S1 | 72.2 | 93.0 | 94.2 | 0.49 | 21.6 | 96.8 |
| S2 | 72.3 | 92.7 | 91.2 | 0.87 | 23.2 | 89.3 |
| S3 | 78.1 | 84.2 | 92.7 | 1.12 | 22.6 | 92.1 |
| S4 | 82.5 | 77.8 | 95.2 | 0.69 | 21.0 | 99.5 |
| S5 | 77.7 | 84.8 | 95.7 | 1.04 | 21.0 | 99.7 |
| S6 | 73.5 | 91.0 | 95.4 | 1.26 | 21.1 | 99.1 |
| S7 | 77.1 | 85.6 | 89.5 | 0.74 | 22.5 | 92.4 |
| S8 | 88.0 | 69.5 | 90.5 | 0.78 | 22.6 | 91.8 |
| S9 | 85.5 | 73.2 | 95.0 | 1.21 | 21.2 | 98.8 |
| S10 | 74.2 | 90.0 | 94.8 | 1.17 | 21.4 | 97.8 |
| S11 | 73.4 | 91.2 | 93.4 | 1.26 | 21.3 | 98.5 |
| S12 | 80.9 | 80.0 | 96.1 | 1.13 | 21.8 | 95.8 |
| S13 | 75.3 | 88.3 | 93.5 | 1.42 | 21.6 | 97.0 |
| S14 | 77.5 | 85.2 | 94.2 | 1.20 | 21.4 | 97.8 |
| S15 | 80.1 | 81.2 | 96.9 | 1.10 | 21.9 | 95.2 |
| Avg | 77.9 | 84.5 | 93.9 | 1.03 | 21.7 | 96.1 |

While a subject is conducting a trial of one of the experiments, he/she should follow the predefined focus patterns to complete the task. If, at a given point in time, the subject focuses on a control pattern other than the required one, a commanding error occurs; these errors are quantified by the TCS (Eq. (2)). $C_{\mathrm{fail}}(t)$ in Eq. (2) is the command error function: at a given time $t$, its value is 1 if the focused pattern differs from the expected one, and 0 otherwise. $T$ is the total time during which the wheelchair is in motion for a navigation task.

$$\mathrm{TCS} = 1 - \frac{1}{T} \int_{0}^{T} C_{\mathrm{fail}}(t)\, dt \tag{2}$$
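With logged data, the integral in Eq. (2) can be approximated by a sum over uniformly spaced samples. The sketch below assumes that the focused and expected patterns are logged at a fixed interval `dt`; the command labels and the log itself are invented for illustration and are not taken from the paper's recordings.

```python
def task_commanding_success(focused, expected, dt=1.0):
    """Discrete approximation of Eq. (2) for uniformly sampled focus logs.

    `focused` and `expected` hold one pattern label per sample;
    `dt` is the (assumed constant) sampling interval in seconds.
    """
    if len(focused) != len(expected) or not focused:
        raise ValueError("logs must be non-empty and of equal length")
    total_time = dt * len(focused)
    # C_fail is 1 for every sample where the focused pattern is not the expected one.
    fail_time = dt * sum(1 for f, e in zip(focused, expected) if f != e)
    return 1.0 - fail_time / total_time

# Hypothetical 1-second log sampled every 0.1 s, with one wrong command.
focused = ["fwd", "fwd", "left", "fwd", "fwd", "right", "fwd", "fwd", "fwd", "fwd"]
expected = ["fwd", "fwd", "fwd", "fwd", "fwd", "right", "fwd", "fwd", "fwd", "fwd"]

tcs = task_commanding_success(focused, expected, dt=0.1)
print(f"{100 * tcs:.0f}%")
```

With uniform sampling, `dt` cancels out and the TCS reduces to the fraction of samples on which the subject focused on the expected pattern.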

The TCS for the navigation tasks is at least 89.5% for every subject in every experiment, and above 90% in all cases except S7 in experiment 3. As expected, the average performance decreases as the complexity level of the navigation task increases (98.3%, 96.4%, and 93.9% for experiments 1, 2, and 3, respectively): as the number of commands increases, so does the probability of performing a wrong navigation action (Tables 1 and 2). Notably, none of the subjects failed any of the interaction tasks, which yields a 100% success rate. The results thus indicate that the developed BCI-controlled smart home application could be used by users with disabilities to interact with the devices with a high level of trust.

3.3 Trajectory Error

The wheelchair navigation tasks have predefined motion paths which must be followed. While the subject navigates the wheelchair, its positions are recorded. The trajectory error ($E_{\mathrm{traj}}$) is the time-averaged distance between the wheelchair and the expected motion path. It measures the subject's performance in driving the wheelchair in terms of geometric distance (see E_traj (m) in Tables 1 and 2). It is calculated as shown in Eq. (3), where $U(t)$ is the actual position of the wheelchair over time, and $R_{cp}(U(t))$ is a function that returns the position on the reference trajectory closest to the wheelchair position (see Figures 7 and 8). In Eq. (3), $T$ is the total time the wheelchair spends performing the navigation task.

$$E_{\mathrm{traj}} = \frac{1}{T} \int_{0}^{T} \left| U(t) - R_{cp}(U(t)) \right| dt \tag{3}$$
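Eq. (3) can be approximated from recorded positions as follows. This sketch assumes that the reference trajectory is a 2D polyline through the waypoints and that positions are sampled at a constant rate, so the time average becomes a plain mean; the paper does not state how the reference path or the sampling are represented, and the coordinates below are invented.

```python
import math

def closest_point_on_segment(p, a, b):
    """Closest point to `p` on the line segment from `a` to `b` (2D tuples)."""
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return a  # degenerate segment
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = ((p[0] - ax) * dx + (p[1] - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * dx, ay + t * dy)

def closest_point_on_path(p, path):
    """Plays the role of R_cp: closest point to `p` on a polyline reference path."""
    candidates = (closest_point_on_segment(p, a, b) for a, b in zip(path, path[1:]))
    return min(candidates, key=lambda q: math.dist(p, q))

def trajectory_error(positions, path):
    """Mean distance (metres) from uniformly sampled positions to the path."""
    return sum(math.dist(p, closest_point_on_path(p, path)) for p in positions) / len(positions)

# Hypothetical L-shaped reference path and a slightly wobbly driven trajectory.
reference = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0)]
driven = [(0.0, 0.0), (1.0, 0.2), (2.0, 0.4), (3.0, 0.2), (4.2, 1.0), (4.0, 3.0)]

e_traj = trajectory_error(driven, reference)
print(f"{e_traj:.3f} m")
```

If the samples were not evenly spaced in time, each distance would need to be weighted by its time step to stay faithful to the integral.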

According to the results, the subjects' average navigation trajectory errors for experiments 1 and 2 are quite low (less than 20 cm). In the third experiment, however, the error values increase considerably (to an average of 1.03 m) due to the complexity of the navigation path. Overall, the obtained error values indicate that the subjects are able to keep the wheelchair close to the allocated motion path; in experiments 1 and 2, the average error is well below the width of a typical wheelchair.

4 CONCLUSION

In this paper, an SSVEP-BCI controlled smart home and wheelchair application developed in the Unity 3D Game and Simulation Engine is presented. The system has the advantages of being low-cost, wireless, portable, and easy to use and, most importantly, of offering high control accuracy without extensive training. Experiments conducted on 15 control subjects show that all of them could complete the presented tasks with high success rates. It is also observed that the subjects' confidence and competence in controlling the system increase with each trial, even within the limited time of the experimental sessions. Moreover, the results indicate that the system is easy for users to learn and adapt to. Preliminary testing on subjects in the virtual environment shows promising results, which support the feasibility of the system for real-time device control applications in future smart homes.

ACKNOWLEDGEMENTS

The authors would like to thank all the participants

who volunteered to participate in the experiments.

REFERENCES

Adams, M., Benda, M., Saboor, A., Krause, A. F., Rezeika, A., Gembler, F., Stawicki, P., Hesse, M., Essig, K., Ben-Salem, S., et al. (2019). Towards an SSVEP-BCI controlled smart home. In 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), pages 2737–2742. IEEE.

Ballendat, T., Marquardt, N., and Greenberg, S. (2010). Proxemic interaction: designing for a proximity and orientation-aware environment. In ACM International Conference on Interactive Tabletops and Surfaces, pages 121–130.

Belkacem, A. N., Jamil, N., Palmer, J. A., Ouhbi, S., and Chen, C. (2020). Brain computer interfaces for improving the quality of life of older adults and elderly patients. Frontiers in Neuroscience, 14.

Del Rio, D. D. F., Sovacool, B. K., Bergman, N., and Makuch, K. E. (2020). Critically reviewing smart home technology applications and business models in Europe. Energy Policy, 144:111631.

Faller, J., Allison, B. Z., Brunner, C., Scherer, R., Schmalstieg, D., Pfurtscheller, G., and Neuper, C. (2017). A feasibility study on SSVEP-based interaction with motivating and immersive virtual and augmented reality. arXiv preprint arXiv:1701.03981.

Johnson, C. O., Nguyen, M., Roth, G. A., Nichols, E., Alam, T., Abate, D., Abd-Allah, F., Abdelalim, A., Abraha, H. N., Abu-Rmeileh, N. M., et al. (2019). Global, regional, and national burden of stroke, 1990–2016: a systematic analysis for the Global Burden of Disease Study 2016. The Lancet Neurology, 18(5):439–458.

Lin, J.-S. and Hsieh, C.-H. (2014). A BCI control system for TV channels selection. International Journal of Communications, 3:71–75.

Mistry, K. S., Pelayo, P., Anil, D. G., and George, K. (2018). An SSVEP based brain computer interface system to control electric wheelchairs. In 2018 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), pages 1–6. IEEE.

Park, S., Cha, H.-S., Kwon, J., Kim, H., and Im, C.-H. (2020). Development of an online home appliance control system using augmented reality and an SSVEP-based brain-computer interface. In 2020 8th International Winter Conference on Brain-Computer Interface (BCI), pages 1–2. IEEE.

Putze, F., Weiß, D., Vortmann, L.-M., and Schultz, T. (2019). Augmented reality interface for smart home control using SSVEP-BCI and eye gaze. In 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), pages 2812–2817. IEEE.

Saboor, A., Rezeika, A., Stawicki, P., Gembler, F., Benda, M., Grunenberg, T., and Volosyak, I. (2017). SSVEP-based BCI in a smart home scenario. In International Work-Conference on Artificial Neural Networks, pages 474–485. Springer.

Zhuang, W., Shen, Y., Li, L., Gao, C., and Dai, D. (2020). A brain-computer interface system for smart home control based on single trial motor imagery EEG. International Journal of Sensor Networks, 34(4):214–225.
