Sparse Decomposition as a Denoising Images Tool

Hatim Koraichi, Chakkor Otman

National School of Applied Sciences of Tetuan Morocco

Groupe de recherche NTT (New Technology Trends)

Keywords: Sparse signals, denoising, matching pursuit, OMP, KSVD, LARS.

Abstract: Sparse representation has been widely and successfully used by the signal processing community, in particular for removing noise from images. In this work, we present its benefits in image denoising applications. The general purpose of sparse representation is to find the best approximation of a target signal using a linear combination of a few elementary signals from a fixed collection. Several sparse decomposition methods have been proposed to remove noise from images, and they raise further questions: how to decompose a signal over a dictionary, which dictionary to use, and how to learn the dictionary.

1 INTRODUCTION

The adopted approach to image denoising is based on sparse and redundant representations over trained dictionaries. Several algorithms have been proposed to build this type of dictionary. Among them, the K-SVD algorithm is used to obtain a dictionary that can effectively describe the image. In addition, greedy algorithms are used to perform the sparse coding of the signal.

Since K-SVD is limited to handling small image patches, we extend its deployment to arbitrary image sizes by defining a global image prior that forces sparsity over the patches at each location in the image. We show how these methods lead to a simple and efficient denoising algorithm, whose denoising performance is equivalent to, and sometimes better than, that of the most recent alternative denoising methods.

The problem is divided into several steps: first according to the type of imagery, then the choice of dictionary, and then the sparse coding task, i.e. which algorithm to use. Our goal is to find the most parsimonious algorithm possible, i.e. the one that gives the closest solution to the problem.

2 FORMULATION

The general objective of sparse representation is to seek an approximate representation of a given signal as a linear combination of a few elementary signals from a fixed collection. In practice, several sparse decomposition algorithms are used to solve this type of problem.

The problem is to find the exact decomposition

which minimizes the number of non-zero coefficients:

$$\min_{x} \|x\|_0 \quad \text{s.t.} \quad y = Dx \tag{1}$$

where $x \in \mathbb{R}^K$ is the sparse representation of $y$, and $\|x\|_0$ is the $\ell_0$ norm of $x$, which corresponds to the number of non-zero values of $x$.

The dictionary $D$ is made up of $K$ columns $d_k$, $k = 1, \dots, K$, called atoms, each atom being assumed normalized.

In theory, there is an infinite number of solutions to the problem, and the goal is to find the sparsest possible solution, that is to say the one with the lowest number of non-zero values in x.
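To make the non-uniqueness concrete, the following minimal sketch builds a small redundant dictionary for which the same signal has two exact decompositions with different numbers of non-zero values. The dictionary, the signal and the helper `l0_norm` are illustrative assumptions, not taken from the experiments of this paper.

```python
import numpy as np

# Hypothetical 2x3 redundant dictionary; every column (atom) has unit norm.
D = np.array([[1.0, 0.0, 1.0 / np.sqrt(2.0)],
              [0.0, 1.0, 1.0 / np.sqrt(2.0)]])
y = np.array([1.0, 1.0])

# Two exact decompositions of the same signal y.
x_dense = np.array([1.0, 1.0, 0.0])            # uses two atoms
x_sparse = np.array([0.0, 0.0, np.sqrt(2.0)])  # uses a single atom


def l0_norm(x, tol=1e-12):
    """Count the entries of x whose magnitude exceeds tol."""
    return int(np.sum(np.abs(x) > tol))


for name, x in (("dense", x_dense), ("sparse", x_sparse)):
    print(name, "exact:", np.allclose(D @ x, y), "non-zeros:", l0_norm(x))
```

Both vectors reproduce y exactly, and the sparse decomposition problem prefers the second one because it uses fewer atoms.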

In practice, we seek an approximation of the signal and the problem becomes (2):

$$\min_{x} \|y - Dx\|_2^2 \quad \text{s.t.} \quad \|x\|_0 \le L \tag{2}$$

with $L > 0$ the sparsity constraint, that is to say an integer representing the maximum number of non-zero values in $x$.

We can use a parameter $\tau > 0$ to balance the dual purpose of minimizing the error and the sparsity:

$$\min_{x} \frac{1}{2}\|y - Dx\|_2^2 + \tau\|x\|_0 \tag{3}$$

Koraichi, H. and Otman, C.

Sparse Decomposition as a Denoising Images Tool.

DOI: 10.5220/0010736100003101

In Proceedings of the 2nd International Conference on Big Data, Modelling and Machine Learning (BML 2021), pages 440-443

ISBN: 978-989-758-559-3

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Solving this problem is NP-hard, which precludes any exhaustive search for the solution. This is why sparse decomposition algorithms have emerged in order to find an approximation of the solution.
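To give an order of magnitude for why exhaustive search is ruled out, the sketch below counts the candidate supports (sets of non-zero positions) that a brute-force search would have to test, each one requiring its own least-squares fit. The function name `num_supports` and the sizes in the usage line are hypothetical.

```python
from math import comb


def num_supports(K, L):
    """Number of non-empty supports with at most L atoms chosen among K."""
    return sum(comb(K, s) for s in range(1, L + 1))


# Hypothetical sizes: a dictionary of 256 atoms and at most 5 non-zero values.
print(num_supports(256, 5))
```

The count grows combinatorially with the number of atoms and the sparsity level, which is what motivates the approximate algorithms of the next section.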

3 SPARSE DECOMPOSITION ALGORITHMS

Many approximation techniques have been proposed for this task. We consider the following algorithms, which find approximate solutions: matching pursuit (MP), orthogonal matching pursuit (OMP), the LASSO algorithm, and least angle regression (LARS).

3.1 Matching Pursuit (MP)

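Algorithm 1: Matching pursuit (MP)

$$\min_{\alpha \in \mathbb{R}^m} \frac{1}{2}\|x - D\alpha\|_2^2 \quad \text{s.t.} \quad \|\alpha\|_0 \le L$$

1. Initialization: $\alpha \leftarrow 0$; residual $r \leftarrow x$
2. while $\|\alpha\|_0 < L$
3. Select the element with maximum correlation with the residual: $\hat{\imath} \leftarrow \arg\max_{i=1,\dots,m} |d_i^T r|$
4. Update the coefficients and residual: $\alpha_{\hat{\imath}} \leftarrow \alpha_{\hat{\imath}} + d_{\hat{\imath}}^T r$, $r \leftarrow r - (d_{\hat{\imath}}^T r)\, d_{\hat{\imath}}$
5. End while.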
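The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration rather than the implementation used for the simulations: the function name, the iteration cap `max_iter` and the tolerance `tol` are assumptions added so that the loop always terminates, since MP may select the same atom several times. As in Algorithm 1, x is the signal and alpha its coefficient vector.

```python
import numpy as np


def matching_pursuit(D, x, L, max_iter=1000, tol=1e-12):
    """MP sketch: D has unit-norm columns, x is the signal, L the sparsity budget."""
    alpha = np.zeros(D.shape[1])
    r = np.array(x, dtype=float)          # residual starts as a copy of the signal
    n_iter = 0
    while np.count_nonzero(alpha) < L and n_iter < max_iter:
        corr = D.T @ r                    # correlation of every atom with the residual
        i_hat = int(np.argmax(np.abs(corr)))
        if np.abs(corr[i_hat]) < tol:     # residual is (numerically) fully explained
            break
        alpha[i_hat] += corr[i_hat]       # update one coefficient only
        r -= corr[i_hat] * D[:, i_hat]    # remove that atom's contribution
        n_iter += 1
    return alpha, r


# Usage on a hypothetical random dictionary with normalized atoms.
rng = np.random.default_rng(0)
D = rng.standard_normal((8, 12))
D /= np.linalg.norm(D, axis=0)
x = rng.standard_normal(8)
alpha, r = matching_pursuit(D, x, L=3)
print(np.count_nonzero(alpha), np.linalg.norm(r))
```

At every iteration the signal equals the current approximation plus the residual, which is a convenient sanity check when experimenting with this sketch.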

3.2 Orthogonal Matching Pursuit

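Algorithm 2: Orthogonal matching pursuit (OMP)

$$\min_{\alpha \in \mathbb{R}^m} \frac{1}{2}\|x - D\alpha\|_2^2 \quad \text{s.t.} \quad \|\alpha\|_0 \le L$$

1. Initialization: $\alpha \leftarrow 0$; residual $r \leftarrow x$; active set $\Omega \leftarrow \emptyset$
2. while $\|\alpha\|_0 < L$
3. Select the element with maximum correlation with the residual: $\hat{\imath} \leftarrow \arg\max_{i=1,\dots,m} |d_i^T r|$
4. Update the active set, coefficients and residual: $\Omega \leftarrow \Omega \cup \{\hat{\imath}\}$, $\alpha_\Omega \leftarrow (D_\Omega^T D_\Omega)^{-1} D_\Omega^T x$, $r \leftarrow x - D_\Omega \alpha_\Omega$
5. End while.

Here $D_\Omega$ denotes the sub-dictionary formed by the atoms indexed by $\Omega$. The coefficients are obtained by a least-squares fit of the signal $x$ on the active atoms, so that the residual is orthogonal to every selected atom.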
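A minimal NumPy sketch of Algorithm 2 is given below. It is an illustration under stated assumptions, not the code used for the simulations: the function name and the tolerance `tol` are hypothetical, and the coefficients on the active set are obtained with `numpy.linalg.lstsq`, i.e. by a least-squares fit of the signal on the selected atoms.

```python
import numpy as np


def orthogonal_matching_pursuit(D, x, L, tol=1e-12):
    """OMP sketch: D has unit-norm columns, x is the signal, L the sparsity budget."""
    x = np.asarray(x, dtype=float)
    alpha = np.zeros(D.shape[1])
    r = x.copy()
    omega = []                                   # active set of selected atoms
    while len(omega) < L:
        corr = D.T @ r
        i_hat = int(np.argmax(np.abs(corr)))
        if np.abs(corr[i_hat]) < tol or i_hat in omega:
            break                                # nothing left to explain
        omega.append(i_hat)
        # Least-squares fit of x on the active atoms (orthogonal projection).
        coef, *_ = np.linalg.lstsq(D[:, omega], x, rcond=None)
        alpha[:] = 0.0
        alpha[omega] = coef
        r = x - D[:, omega] @ coef
    return alpha, r


# Usage on a hypothetical random dictionary with normalized atoms.
rng = np.random.default_rng(0)
D = rng.standard_normal((8, 12))
D /= np.linalg.norm(D, axis=0)
x = rng.standard_normal(8)
alpha, r = orthogonal_matching_pursuit(D, x, L=3)
print(np.flatnonzero(alpha), np.linalg.norm(r))
```

Unlike MP, all active coefficients are recomputed at each iteration, so an atom is never selected twice and the residual stays orthogonal to the selected atoms.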

3.3 The LASSO Algorithm

This approach consists in replacing the combinatorial function $\ell_0$ in formula (1) by the norm $\ell_1$. The norm $\ell_1$ is the closest convex function to the function $\ell_0$, which gives convex optimization problems that admit tractable algorithms.

The convex relaxation of problem (1) becomes:

$$\min_{x} \|x\|_1 \quad \text{s.t.} \quad y = Dx \tag{4}$$

The mixed formulation (3) becomes

$$\min_{x} \frac{1}{2}\|y - Dx\|_2^2 + \tau\|x\|_1 \tag{5}$$

Here, $\tau > 0$ is a regularization parameter whose value determines the sparsity of the solution: high values generally produce sparser results.
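The effect of the regularization parameter can be illustrated with a simple solver for the penalized formulation (5). The sketch below uses iterative soft-thresholding (ISTA), which is not one of the algorithms studied in this paper and is chosen here only because it fits in a few lines; the function names, the step size rule and the number of iterations are assumptions.

```python
import numpy as np


def soft_threshold(v, t):
    """Shrink every entry of v towards zero by t (proximal operator of t * l1 norm)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(D, y, tau, n_iter=500):
    """Minimize 0.5 * ||y - D x||_2^2 + tau * ||x||_1 by iterative soft-thresholding."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2      # inverse of the squared spectral norm of D
    x = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ x - y)                # gradient of the quadratic term
        x = soft_threshold(x - step * grad, step * tau)
    return x


# Usage on a hypothetical random dictionary: compare two regularization levels.
rng = np.random.default_rng(0)
D = rng.standard_normal((8, 12))
D /= np.linalg.norm(D, axis=0)
y = rng.standard_normal(8)
for tau in (0.01, 0.5):
    print(tau, np.count_nonzero(ista(D, y, tau)))
```

When the dictionary is the identity, the minimizer is simply the soft-thresholded signal, which makes the role of the parameter explicit: every coefficient whose magnitude is below the threshold is set to zero.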

3.4 Least Angle Regression Algorithm (LARS)

With a small modification, the fast algorithm known as least angle regression (LARS) can solve the LASSO problem, and its computational complexity is very close to that of greedy methods. However, the LARS algorithm only allows one atom to be chosen at each step of the atom selection process, which is a strong incentive to select more atoms in each iteration in order to speed up convergence.

We note another common formulation

$$\min_{x} \|x\|_1 \quad \text{s.t.} \quad \|Dx - y\|_2 \le \varepsilon \tag{6}$$

which explicitly sets the error constraint.


4 DICTIONARY LEARNING

It is important to consider that the quality of the sparse representation of a signal depends on the space in which it is represented. Learning the dictionary is a key step in making the atoms as efficient as possible for a particular type of data. It has been shown that a learned dictionary can provide better reconstruction quality than a predefined dictionary.

This section addresses the problem of dictionary learning. Several families of algorithms exist: learning dictionaries without constraints, dictionaries that are themselves sparse, or dictionaries with a non-negativity constraint.


For dictionary learning, we choose the K-SVD

algorithm:

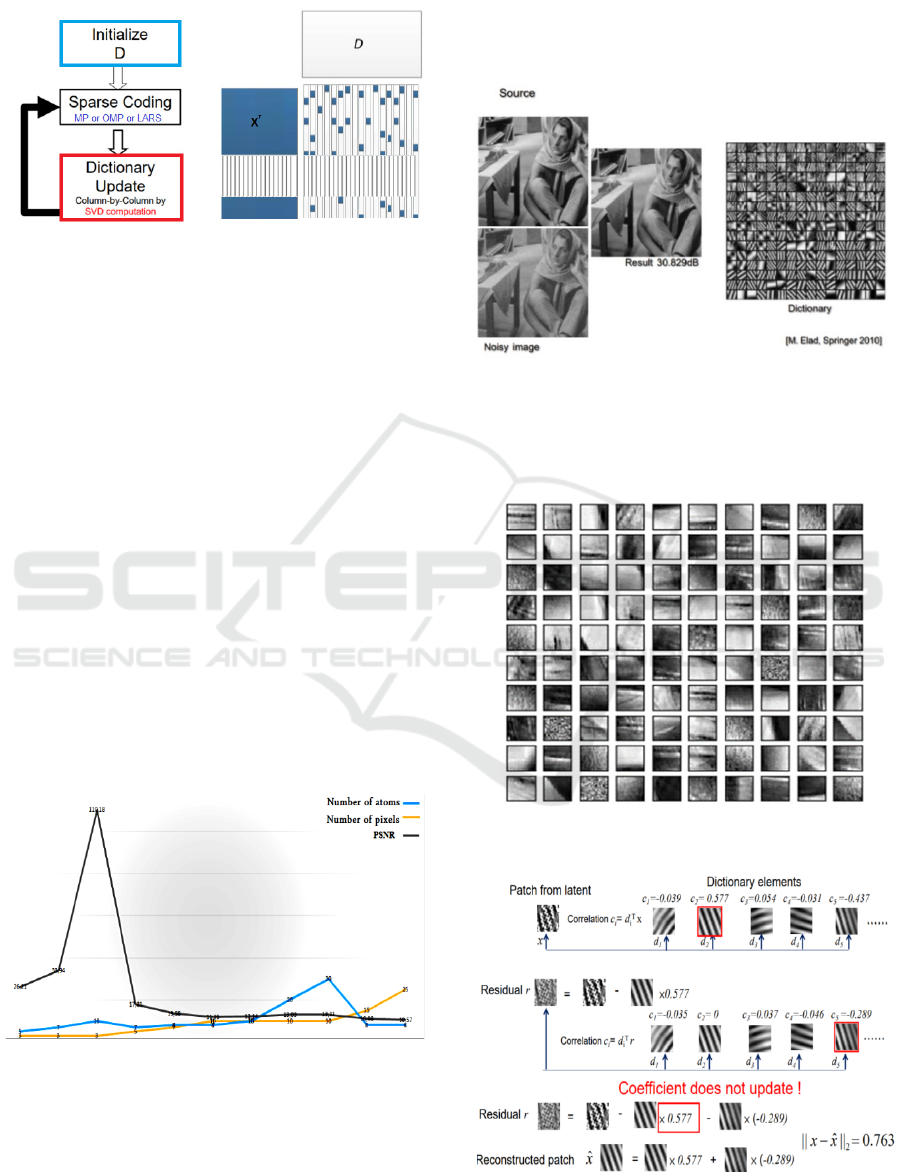

Figure 1: Principle of the K-SVD algorithm
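The principle of K-SVD can be sketched as one iteration made of two stages: a sparse coding stage with the dictionary fixed, followed by an atom-by-atom update in which each atom and its coefficients are replaced by the best rank-one approximation (obtained by SVD) of the error restricted to the signals that use this atom. The code below is a minimal sketch under stated assumptions: the function names are hypothetical, OMP is used for the coding stage, and unused atoms are simply left unchanged.

```python
import numpy as np


def omp(D, y, L):
    """Sparse-code y over D with at most L atoms (orthogonal matching pursuit)."""
    x = np.zeros(D.shape[1])
    support, r = [], y.copy()
    for _ in range(L):
        i = int(np.argmax(np.abs(D.T @ r)))
        if i in support:
            break
        support.append(i)
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        r = y - D[:, support] @ coef
    if support:
        x[support] = coef
    return x


def ksvd_step(D, Y, L):
    """One K-SVD iteration on the training signals stored as columns of Y."""
    D = D.copy()
    # Stage 1: sparse coding of every training signal with the current dictionary.
    X = np.column_stack([omp(D, y, L) for y in Y.T])
    # Stage 2: update the atoms one at a time.
    for k in range(D.shape[1]):
        users = np.flatnonzero(X[k])             # signals whose code uses atom k
        if users.size == 0:
            continue                             # unused atom kept as is (assumption)
        # Error on those signals when the contribution of atom k is put back.
        E = Y[:, users] - D @ X[:, users] + np.outer(D[:, k], X[k, users])
        U, s, Vt = np.linalg.svd(E, full_matrices=False)
        D[:, k] = U[:, 0]                        # new unit-norm atom
        X[k, users] = s[0] * Vt[0]               # matching coefficients
    return D, X


# Usage on hypothetical random training data.
rng = np.random.default_rng(0)
Y = rng.standard_normal((6, 40))
D0 = rng.standard_normal((6, 10))
D0 /= np.linalg.norm(D0, axis=0)
D1, X1 = ksvd_step(D0, Y, L=2)
print(np.linalg.norm(Y - D1 @ X1))
```

Because the update only touches the coefficients that were already non-zero, the sparsity of the codes is preserved, and the representation error cannot increase during the dictionary update stage.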

5 SIMULATION

Image denoising is a difficult and open problem. Mathematically, image denoising is an inverse problem, and its solution is not unique. Thus, additional assumptions must be made in order to obtain a practical solution. Since it is difficult to find and remove noise for all types of images, much research has been carried out and various techniques have been developed to improve the performance of denoising algorithms. In the following, we present tests that compare the methods in order to identify which gives the best approximation in the context of the image denoising problem.

We used several approaches for the simulations. For the first test, we applied the OMP algorithm to an image with the number of atoms fixed while varying the number of pixels. For the second test, we applied the OMP algorithm to the same image with the number of pixels fixed while varying the number of atoms.

Figure 2: Comparison at PSNR level
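The comparisons are expressed in terms of PSNR (peak signal-to-noise ratio), defined as 10 log10(MAX^2 / MSE), where MSE is the mean squared error between the reference image and the reconstructed image. A minimal sketch is given below; the function name and the default peak value of 255 (8-bit images) are assumptions.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)   # mean squared error
    if mse == 0:
        return float("inf")                      # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

A higher PSNR means that the reconstructed image is closer to the reference.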

5.1 The Principle of Dictionary Learning

In the denoising application, the objective is to restore an image degraded by noise (often additive white Gaussian noise). First, we convert the image to black and white; then we run a simulation in which half of the image is corrupted by white Gaussian noise. We use half of the received image to reconstruct the image using dictionary decomposition.
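The degradation used in this simulation can be sketched as follows. The function name, the choice of the right half as the corrupted region, the noise level and the fixed random seed are assumptions made for illustration; the text above does not specify them.

```python
import numpy as np


def add_noise_to_half(image, sigma, seed=0):
    """Return a copy of a grayscale image with white Gaussian noise on its right half."""
    rng = np.random.default_rng(seed)
    noisy = np.array(image, dtype=float)         # work on a float copy
    half = noisy.shape[1] // 2
    # Additive zero-mean Gaussian noise of standard deviation sigma on the right half only.
    noisy[:, half:] += rng.normal(0.0, sigma, size=noisy[:, half:].shape)
    return noisy


# Usage on a hypothetical flat gray test image.
image = np.full((8, 8), 128.0)
noisy = add_noise_to_half(image, sigma=20.0)
print(np.abs(noisy - image).max(axis=0))
```

The untouched half then serves as clean data, while the other half is the part to be restored.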

Figure 3: The principle of dictionary learning

We ran different simulations for learning the dictionary on the same image. With the number of pixels set to 20 and the number of atoms set to 2, we obtain:

Figure 4: Example of dictionary

Figure 5: Dictionary elements

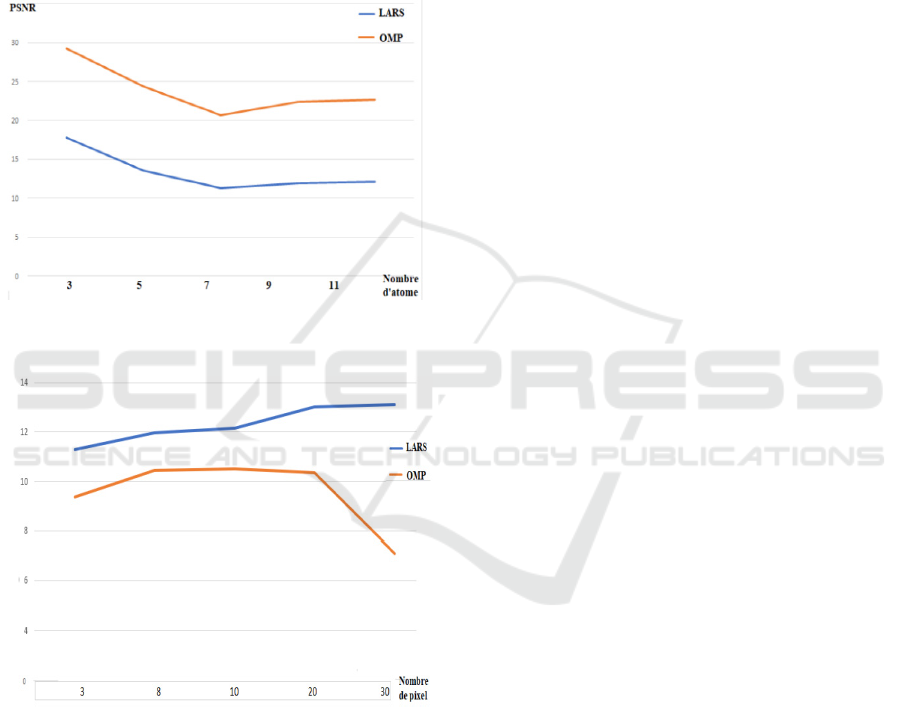

5.2 Comparison between OMP and LARS

Based on the simulations, LARS performs better than OMP in terms of the efficiency and PSNR of the results, but its drawback is a much longer computation time than OMP. The following diagrams show the comparison between the two methods in terms of PSNR and computation time:

Figure 6: Comparison between OMP and LARS as a function of the number of atoms

Figure 7: Comparison between OMP and LARS as a function of the number of pixels

6 CONCLUSION

Finally, we note that K-SVD is a fast approximation tool for updating the dictionary, which depends on the dictionary learning algorithm. The results obtained show that the proposed method performs best in terms of training. This learning algorithm is therefore well suited to certain signal processing applications.

In addition, there are several methods for reducing image noise by sparse decomposition. Greedy algorithms such as MP or OMP are capable of offering good reconstruction performance, but they are relatively costly because of the comparisons required at each iteration with each atom of the dictionary. This leaves an open question: do OMP and LARS remain the most efficient algorithms, and does K-SVD remain the best approach for learning dictionaries?

