RNN Classifier to Identify the Influence of Oud Master on the Way to Play of Oud Player

Mehdi Zhar, Omar Bouattane and Lhoussain Bahatti

SSDIA Laboratory, Ecole Normale Supérieur de l’Enseignement Technique, Morocco

Keywords: Artificial intelligence, Deep Learning, Machine learning, KNN, SVM, LSTM, RNN, Music, Univariate Feature Selection, Timbre.

Abstract: In this work, we propose a model that recognizes the influence of famous Oud masters on an Oud player's style and identifies which Oud school the player belongs to, based on extracted attributes classified with a deep learning algorithm, the recurrent neural network (RNN). The model is further refined by integrating a selection mechanism that retains the most relevant attributes. Practical test cases were also built to assess the validity and reliability of the model.

1 INTRODUCTION

The Oud is the forefather of the lute and the guitar. It is a fretless string instrument that has been used in several oriental musical styles.

Many works in the literature explain how to identify a singer without discriminating between instrumental and singing sounds (Ratanpara and Patel, 2015). Colombo et al (2016) investigate how artificial neural networks can be trained on a large corpus of melodies and turned into automatic music composers capable of producing new phrases that are consistent with the style on which they were trained. In (Bahatti et al, 2013), a series of sinusoidal descriptors is described with the aim of characterizing musical signals and capturing the maximum information contained in the signal.

Various learning techniques were developed and tested in (Herrera et al, 2003), (Kaminsky et al, 1995), (Peeters, 2003), (Dhanalakshmi et al, 2008), (Hochreiter, 1998) to accomplish audio identification tasks. Neural networks are very helpful in the learning process (Gers et al, 1999), (Graves et al, 2013), (Chung et al, 2014), (Sutskever et al, 2014), (Graves, 2013). The algorithmic composition model (Karpathy, 2015) provides a deep (multilayer) method for monophonic melodies based on RNNs with gated recurrent units (GRUs). However, RFE-SVM is a greedy method that only seeks to determine the best combination of features for classification (Lamine et al, 2012). Among many techniques, Support Vector Machines (SVM) appear to be a consensus choice because of their versatility, computational efficiency, capability of handling high-dimensional data, and usefulness for feature selection (Guo and Li, 2003), (Zhiquan et al, 2013), (Moraes et al, 2012). The model in (Bahatti and Bouattane, 2016) is an efficient audio classification system based on SVM that recognizes the composer. In (Zhar et al, 2020), the authors present an algorithm that artificially composes oriental music based on calculated features. In (Zhar et al, 2020), we find a new mechanism, based on the KNN algorithm, to classify the influence of an Oud master on the playing style of an Oud player. In (Zhar et al, 2020), we find an algorithm for the artificial composition of oriental music based on oriental grammar.

In this work, we propose a classification model to

identify the degree of influence of master styles on

oud players. To do that, we chose three famous oud

schools:

- The oriental school: ‘Farid EL ATTRACHE’.

- The modern school: ‘Naseer SHAMMA’.

- The Iraqi school: ‘Munir BASHIR’.

Our approach is to build a model from the audio of the musical pieces of the three Oud masters noted above. Differences in playing style can then be identified for each master, showing gaps and variations, and used to predict how a master's playing affects an Oud player. The resulting influence percentage helps to classify the Oud player according to playing style.

Zhar, M., Bouattane, O. and Bahatti, L. RNN Classifier to Identify the Influence of Oud Master on the Way to Play of Oud Player. DOI: 10.5220/0010735300003101. In Proceedings of the 2nd International Conference on Big Data, Modelling and Machine Learning (BML 2021), pages 399-403. ISBN: 978-989-758-559-3. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

The remainder of the article is structured as follows. Section 2 presents the classification algorithm. Section 3 describes in detail the audio segmentation, the attribute extraction techniques, the dimensionality reduction technique, and the application of the classification algorithm. Section 4 describes the practical implementation. Finally, Section 5 concludes the article and discusses future work.

2 DEEP LEARNING ALGORITHM (RNN)

A recurrent neural network (RNN) is an artificial neural network with recurrent connections. It is composed of interconnected non-linear units (neurons) whose structure contains at least one cycle. The units are connected by weighted arcs (synapses). The output of a neuron is a non-linear combination of its inputs. Recurrent neural networks are appropriate for input data of variable size and are especially suitable for analyzing time series.
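To illustrate the recurrence described above, the following is a minimal sketch of the forward pass of a simple (Elman-style) recurrent layer in plain Python. The weights, the layer sizes, and the function name `rnn_forward` are hypothetical values chosen for illustration; the paper does not specify its architecture at this level of detail.

```python
import math


def rnn_forward(inputs, w_xh, w_hh, b_h):
    """Run a single recurrent layer over a sequence and return the last hidden state.

    Each hidden unit applies tanh to a weighted sum of the current input
    and the previous hidden state (the cycle in the network).
    """
    hidden = [0.0] * len(b_h)
    for x in inputs:  # one time step per input vector
        new_hidden = []
        for j in range(len(b_h)):
            total = b_h[j]
            total += sum(w_xh[j][i] * x[i] for i in range(len(x)))
            total += sum(w_hh[j][k] * hidden[k] for k in range(len(hidden)))
            new_hidden.append(math.tanh(total))
        hidden = new_hidden
    return hidden


# Hypothetical weights: 2 input features, 3 hidden units.
W_XH = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
W_HH = [[0.1, 0.0, 0.2], [0.0, 0.1, -0.1], [0.2, -0.2, 0.1]]
B_H = [0.0, 0.0, 0.0]

# The same weights handle sequences of different lengths.
short_seq = [[1.0, 0.5], [0.2, 0.1]]
long_seq = short_seq + [[0.3, 0.9], [0.0, 1.0], [0.7, 0.7]]
h_short = rnn_forward(short_seq, W_XH, W_HH, B_H)
h_long = rnn_forward(long_seq, W_XH, W_HH, B_H)
```

Because the same weights are reused at every time step, the size of the hidden state does not depend on the sequence length, which is what makes this family of networks convenient for variable-size inputs.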

3 PROPOSED MODEL

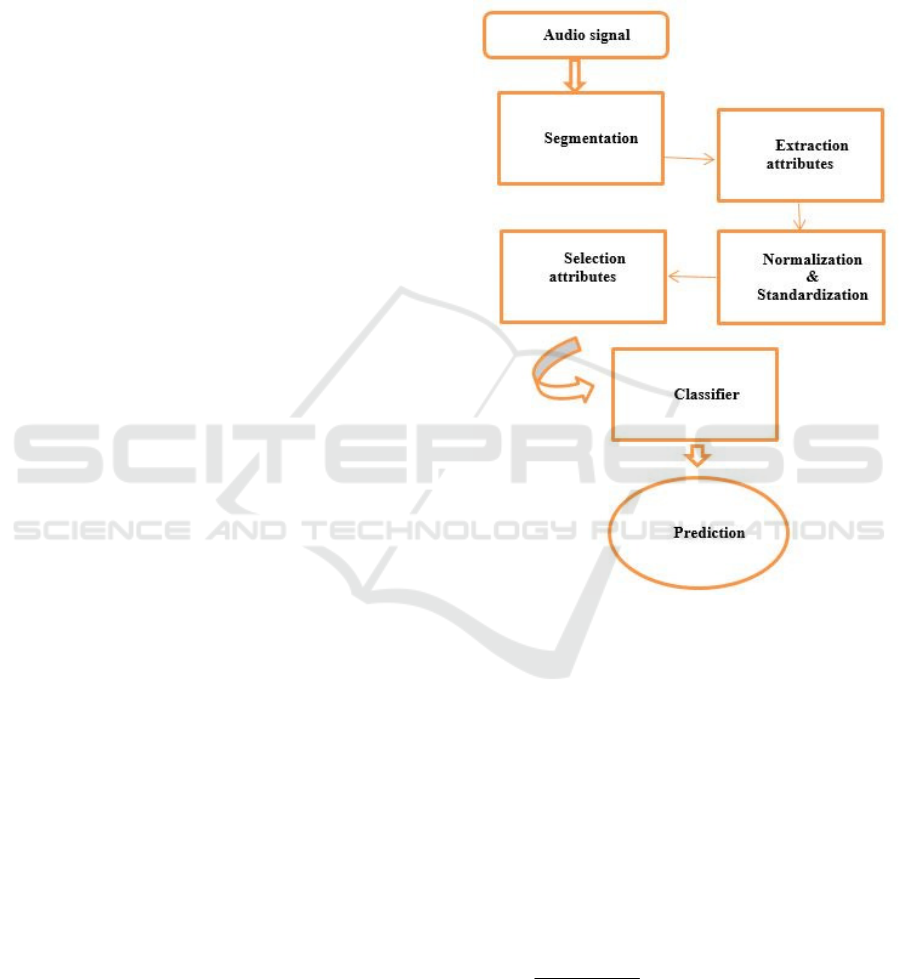

Our proposal consists of six key components: audio segmentation, extraction of mathematical attributes, normalization and standardization of the data, attribute selection, classification with the RNN (the deep learning method retained for its accuracy), and finally prediction. The diagram illustrating our process appears in Figure 1.

3.1 Audio Segmentation

The recordings are divided into segments of different durations, between 5 seconds and 100 seconds. The whole classification pipeline of the proposed model was then run in several tests, with the aim of finding the segment duration that gives the maximum accuracy. The best duration is 5 seconds.
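The segmentation step can be sketched as follows, assuming the audio is already available as a list of samples with a known sample rate. The function name `split_into_segments` and the choice to drop a trailing segment shorter than the window are assumptions made for this illustration, not details given in the paper.

```python
def split_into_segments(samples, sample_rate, segment_seconds=5):
    """Split a sample list into consecutive fixed-length segments.

    Assumption: a trailing segment shorter than the window is dropped.
    """
    segment_len = int(sample_rate * segment_seconds)
    if segment_len <= 0:
        raise ValueError("segment length must be positive")
    count = len(samples) // segment_len
    return [samples[i * segment_len:(i + 1) * segment_len] for i in range(count)]


# Toy signal: 12 seconds at a (hypothetical) sample rate of 10 Hz.
signal = list(range(120))
segments = split_into_segments(signal, sample_rate=10, segment_seconds=5)
```

Changing `segment_seconds` is enough to repeat the experiment with other durations in the tested range.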

3.2 Extraction Attributes

Our method consists of exploring the parameters of an audio signal through the mathematical equations of signal processing. Many attributes were extracted with signal processing tools, for example: Zero-Crossing Rate, Energy, Entropy of Energy, Spectral Centroid, Spectral Spread, Spectral Entropy, Spectral Flux, Spectral Rolloff, MFCCs, Chroma Vector, Chroma Deviation.
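As an illustration of three of the attributes listed above, the sketch below computes the zero-crossing rate, the energy, and the spectral centroid of a single frame in plain Python. It uses common textbook definitions and a naive discrete Fourier transform; the exact formulas and tooling used by the authors are not stated in the paper, so the function names and the toy frame are assumptions.

```python
import cmath
import math


def zero_crossing_rate(frame):
    """Fraction of consecutive sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def energy(frame):
    """Mean of the squared sample values."""
    return sum(x * x for x in frame) / len(frame)


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency, using a naive DFT (fine for short frames)."""
    n = len(frame)
    freqs, mags = [], []
    for k in range(n // 2 + 1):
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * sample_rate / n)
        mags.append(abs(coeff))
    total = sum(mags)
    return sum(f * m for f, m in zip(freqs, mags)) / total if total > 0 else 0.0


# Toy frame: a 100 Hz sine sampled at 800 Hz (hypothetical values), 64 samples.
SAMPLE_RATE = 800
frame = [math.sin(2 * math.pi * 100 * t / SAMPLE_RATE + 0.3) for t in range(64)]
features = {
    "zcr": zero_crossing_rate(frame),
    "energy": energy(frame),
    "centroid": spectral_centroid(frame, SAMPLE_RATE),
}
```

For a pure tone the spectral centroid falls on the tone's frequency, which gives a quick sanity check before applying the functions to real Oud segments.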

Figure 1: Block diagram of our classification process

3.3 Normalization & Standardization

Machine learning algorithms may not function correctly without normalization. The range of all features must therefore be normalized so that each feature contributes approximately proportionately to the final result.

We then rescaled the data to the range [0, 1] using Equation (1), in order to make better use of the extracted information and to reduce the range of values. In this formula, Xsc is the normalized value, X is an original value, and Xmin and Xmax are the minimum and maximum values of the feature:

$$X_{sc} = \frac{X - X_{min}}{X_{max} - X_{min}} \qquad (1)$$
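Equation (1) can be applied to one feature column as in the short sketch below. The function name `min_max_scale`, the sample values, and the handling of a constant column (mapped to 0.0 to avoid a division by zero) are assumptions made for this example.

```python
def min_max_scale(values):
    """Rescale a list of numbers to [0, 1] with min-max normalization."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant feature carries no information, map it to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw values of one feature over four segments.
raw = [2.0, 4.0, 3.0, 6.0]
scaled = min_max_scale(raw)  # [0.0, 0.5, 0.25, 1.0]
```

In practice the minimum and maximum would be computed on the training data only and reused for the test data, so that no information leaks from the test set.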


3.4 Selected Attributes

In this model, the Univariate Feature Selection uses

certain statistical tests, such as chi-square, F-test,

Mutual information to determine the force of the

connection among each factor and the target variable.

The characteristics of the model are classified

systematically according to their strength with the

results. All functions are deleted from the current

function space, other than a predisposed number of

markers. The other characteristics are then used for

the training, testing, and validation of machine

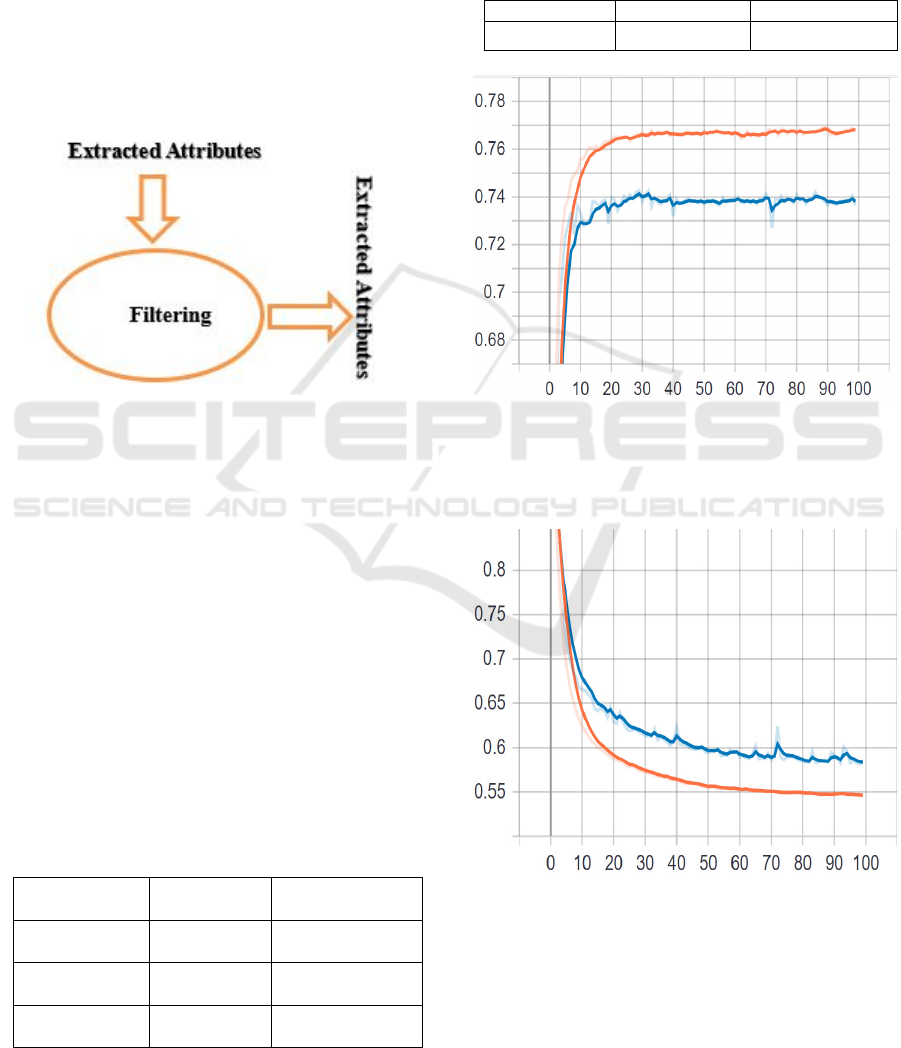

models. The figure below illustrates the filtering

mechanism used.

Figure 2: The features selection approach
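The ranking-and-filtering idea can be sketched with the F-test, one of the statistical tests mentioned above. The code below computes a one-way ANOVA F statistic per feature and keeps the best-scoring ones. The function names, the toy data, and the choice of the F-test over the other tests are assumptions for illustration only.

```python
def anova_f_score(values, labels):
    """One-way ANOVA F statistic of a single feature against class labels."""
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(label, []).append(value)
    n, k = len(values), len(groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two classes and more samples than classes")
    grand_mean = sum(values) / n
    means = {label: sum(g) / len(g) for label, g in groups.items()}
    between = sum(len(g) * (means[label] - grand_mean) ** 2 for label, g in groups.items())
    within = sum((v - means[label]) ** 2 for label, g in groups.items() for v in g)
    if within == 0:
        return float("inf") if between > 0 else 0.0
    return (between / (k - 1)) / (within / (n - k))


def select_k_best(feature_columns, labels, k):
    """Return the names of the k features with the highest F score."""
    scores = {name: anova_f_score(col, labels) for name, col in feature_columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical toy data: six segments from two classes, three candidate features.
labels = ["A", "A", "A", "B", "B", "B"]
columns = {
    "informative": [1.0, 1.1, 0.9, 3.0, 3.1, 2.9],
    "noisy": [1.0, 3.0, 2.0, 1.5, 2.5, 2.0],
    "weak": [1.0, 2.0, 1.5, 1.4, 2.4, 1.9],
}
selected = select_k_best(columns, labels, k=2)
```

A feature whose class means are far apart relative to its within-class spread receives a high score and is kept; a feature with identical class means scores zero and is dropped.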

3.5 Classification Algorithm

In this part, we designed a deep learning classifier based on an RNN, applied after the segmentation, extraction, normalization, and attribute selection steps, in order to obtain greater accuracy.

The data was split into 80% for training and 20% for testing. After testing several training proportions between 10% and 90%, this split gave the best result.

The accuracy obtained is 73%.
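A minimal sketch of an 80/20 split is shown below. The paper does not say whether the segments were shuffled before splitting, so the shuffling, the fixed seed, and the function name `train_test_split` are assumptions of this example.

```python
import random


def train_test_split(items, train_fraction=0.8, seed=0):
    """Shuffle the items with a fixed seed and split them into train and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical data set of 100 segment indices.
segment_ids = list(range(100))
train_ids, test_ids = train_test_split(segment_ids, train_fraction=0.8)
```

Repeating the call with `train_fraction` values from 0.1 to 0.9 reproduces the kind of comparison described above, with each candidate split evaluated by the accuracy of the trained classifier.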

4 PROCESS IMPLEMENTATION

The following table shows the pieces used:

Table 1: Pieces used for classification.

| Oud Player | Duration (hh:mm:ss) | Number of inputs of 5 seconds |
|---|---|---|
| Farid EL ATTRACHE | 02:15:34 | 1 629 |
| Munir BASHIR | 05:07:57 | 3 700 |
| Naseer SHAMMA | 05:03:09 | 3 645 |

Table 1 describes the WAV-format inputs of our model for the three Oud masters that we study in depth.

After testing a series of segment durations between 2 and 20 seconds, we chose a duration of 5 seconds for every segment as the best choice.

Table 2: Accuracy and Loss for 100 epochs.

| Metric | Epochs | Value |
|---|---|---|
| Accuracy | 100 | 73 % |
| Loss | 100 | 58 % |

Figure 3: Train and validation epoch accuracy.

Figure 3 illustrates the accuracy for training and testing at each epoch.

Figure 4: Train and validation epoch loss.

Figure 4 illustrates the loss for training and testing at each epoch.


5 CONCLUSIONS

In this paper, we presented a model for classifying the influence of Oud masters on Oud players. The model is based on a deep learning approach comprising an input layer, a hidden layer, and an output layer. The number of nodes in each layer was chosen on the basis of several tests. The practical results show that the system classifies with an accuracy of 73 percent and a loss of 58 percent. This result remains to be improved, which is why we plan, as future work, to design other models with the aim of reaching an accuracy above 90 percent and a loss below 20 percent.

ACKNOWLEDGEMENTS

This work is supported by the H2020 Project SybSPEED, N 777720.

REFERENCES

Bahatti, L., Rebbani, A., Bouattane, O., Zazoui, M., 2013. Sinusoidal features extraction: Application to the analysis and synthesis of a musical signal. 2013 8th International Conference on Intelligent Systems: Theories and Applications (SITA), Rabat, 2013, pp. 1-6. DOI: 10.1109/SITA.2013.6560805.

Bahatti, L., Bouattane, O., 2016. An Efficient Audio Classification Approach Based on Support Vector Machines, International Journal of Advanced Computer Science and Applications. June 2016.

Colombo, F., Muscinelli, S., Seeholzer, A., Brea, J., Gerstner, W., 2016. Algorithmic Composition of Melodies with Deep Recurrent Neural Networks. Published in ArXiv 2016. doi:10.13140/RG.2.1.2436.5683.

Chung, J., Gulcehre, C., KyungHyun, C., Bengio, Y., 2014. Empirical evaluation of gated recurrent neural networks on sequence modeling, arXiv preprint arXiv:1412.3555, 2014.

Dhanalakshmi, P., Palanivel, S., Ramalingam, V., 2008. Classification of audio signals using SVM and RBFNN, Expert Systems with Applications (2008); doi:10.1016/j.eswa.2008.06.126.

Gers, F., Schmidhuber, J., Cummins, F., 1999. Learning to forget: Continual prediction with lstm, Neural computation, 12(10):2451-2471, 1999.

Guo, G., Li, Z., 2003. Content-Based Audio Classification and Retrieval by Support Vector Machines, IEEE transactions on neural networks, vol. 14, no. 1, January 2003. 209. DOI: 10.1109/TNN.2002.806626.

Graves, A., 2013. Generating sequences with recurrent neural networks, arXiv preprint arXiv:1308.0850, 2013.

Graves, A., Mohamed, A., Hinton, G., 2013. Speech recognition with deep recurrent neural networks, In Acoustics, Speech and Signal Processing (ICASSP), 2013 IEEE International Conference on, pages 6645-6649. IEEE, 2013.

Herrera, P., Peeters, G., Shlomo, D., 2003. Automatic classification of musical sound. Journal of New Music Research, 32:1 pages 2–21, 2003. http://dx.doi.org/10.1076/jnmr.32.1.3.16798.

Hochreiter, S., 1998. The vanishing gradient problem during learning recurrent neural nets and problem solutions, International Journal of Uncertainty, Fuzziness, and Knowledge-Based Systems, 6(2):107-116, 1998. doi.org/10.1142/S0218488598000094.

Kaminsky, I., Materka, A., 1995. Automatic source identification of monophonic musical instrument sounds. Neural Networks, 1995. Proceedings, IEEE International Conference on. Vol. 1. IEEE, 1995. DOI: 10.1109/ICNN.1995.488091.

Karpathy, A., 2015. The unreasonable effectiveness of recurrent neural networks, http://karpathy.github.io/2015/05/21/rnn-effectiveness/, 2015. [Online; accessed 1-April 2016].

Lamine, M., Camara, F., Ndiaye, S., Slimani, Y., Esseghir, M., 2012. A Novel RFE-SVM-based Feature Selection Approach for Classification, International Journal of Advanced Science and Technology; Vol. 43, June 2012.

Moraes, R., and al. 2012. Document-level sentiment classification: An empirical comparison between SVM and ANN, Expert Systems with Applications (2012), http://dx.doi.org/10.1016/j.eswa.2012.07.059.

Peeters, G., 2003. Automatic classification of large musical instrument databases using hierarchical classifiers with inertia ratio maximization, In Proc. of AES 115th Convention, New York, USA, 2003.

Ratanpara, T., Patel, N., 2015. Singer identification using perceptual features and cepstral coefficients of an audio signal from Indian video songs. EURASIP Journal on Audio, Speech, and Music Processing 2015, 2015:16. doi:10.1186/s13636-015-0062-9.

Sutskever, I., Vinyals, O., Quoc, L., 2014. Sequence to sequence learning with neural networks, In Advances in neural information processing systems, pages 3104-3112, 2014.

Zhiquan, Q., Tian, Y., Shi, Y., 2013. Robust twin support vector machine for pattern classification, Pattern Recognition 46.1 (2013): 305-316. doi:10.1016/j.patcog.2012.06.019.

Zhar, M., Bahatti, L., Bouattane, O., 2020. New Algorithm for the Development of a Musical Words Descriptor for the Artificial Composition of Oriental Music. Advances in Science, Technology and Engineering Systems Journal Vol. 5, No. 5, 434-443 (2020). DOI: 10.25046/aj050554.

Zhar, M., Bahatti, L., Bouattane, O., 2020. Classification algorithms to recognize the influence of the playing style of the Oud masters on the musicians of the Oud Instrument. International Journal of Advanced Trends in Computer Science and Engineering 9(1.5):111-118 (2020). DOI: 10.30534/ijatcse/2020/1791.52020.

Zhar, M., Bahatti, L., Bouattane, O., 2020. New Algorithm for The Development of a Musical Words Descriptor for The Artificial Synthesis of Oriental Music. 2020 1st International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET). (2020) DOI: 10.1109/IRASET48871.2020.9092157.
