The Application of Recurrent Neural Networks for the Diagnosis of

Industrial Systems

Amri Omarᵃ and Belmajdoub Fouad

Laboratory of industrial technologies, Faculty of sciences and technologies, University Sidi Mohamed Ben Abdellah

Fes, Morocco

Keywords: Industrial Systems, Monitoring, Artificial Intelligence, Recurrent Neural Networks, Diagnosis.

Abstract: The complexity of industrial equipment is constantly increasing, which makes monitoring increasingly difficult. In this context, artificial intelligence techniques offer practical solutions, especially artificial neural networks, whose learning capacity makes them well suited to industrial system monitoring problems. In this paper, we focus on recurrent neural networks, a specific kind of artificial neural network with good dynamic behaviour. Several recurrent neural network architectures have been proposed and implemented in the literature, each with its own strengths and weaknesses. We therefore present the state of the art and a comparative study of the most relevant architectures that can be used for diagnosis, which is a significant phase of industrial system monitoring.

1 INTRODUCTION

Neural networks have brought remarkable progress to the field of artificial intelligence, and the consequences of this progress extend to several industries and applications (T. Cambrai, 2019). This is especially true of pattern recognition, which has been widely applied in various engineering fields, notably industrial systems monitoring (Msaaf and Belmajdoub, 2015), including dynamic and static diagnosis (Koivo, 1994), (H. Wang and P. Chen, 2011), (R. Patton, J. Chen, and T. Siew, 1994) as well as prognosis (DePold and Gass, 2014), (Tobon-Mejia et al., 2012).

According to Lefebvre (Lefebvre D., 2000), the principle of monitoring is to detect and classify failures by observing the system’s evolution, then to diagnose them by locating the faulty elements and identifying the primary causes. The application of neural networks to diagnosis can therefore be considered a kind of classification or pattern recognition (Msaaf and Belmajdoub, 2015), in which each observed failure is associated with its probable fault class. From the different sensor signals generated by the monitored system, the neural network provides an output that indicates the probable current state (Zemouri et al., 2003). In practice, the sensor outputs change while the system operates (Palluat et al., 2005), so they can be considered a time series dataset. In this context, the use of recurrent neural networks (RNNs), which are designed to process this kind of data, is of interest. This paper aims to present an overview and a comparative study of the relevant recurrent neural network architectures that can be used for industrial system diagnosis.

ᵃ https://orcid.org/0000-0001-7331-2089

The rest of this paper is organized as follows. The next section presents the general context of neural networks. The third section gives an overview of recurrent neural networks, a classification of the main RNN architectures, and a comparison between them to justify the choice of the appropriate architecture for the diagnosis function.

Omar, A. and Fouad, B.
The Application of Recurrent Neural Networks for the Diagnosis of Industrial Systems.
DOI: 10.5220/0010728400003101
In Proceedings of the 2nd International Conference on Big Data, Modelling and Machine Learning (BML 2021), pages 60-66
ISBN: 978-989-758-559-3
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 FORMAL NEURON

In 1943, McCulloch and Pitts developed the first mathematical and computational model that mimics the functioning of biological neurons (Mcculloch and Pitts, 1943): the single neuron, or formal neuron (figure 1). It is a binary neuron, i.e. its output is 0 or 1. The behaviour of a formal neuron consists of two main phases:

Phase 1, the activation phase: a weighted sum of the inputs is calculated so that:

$$a_i = \sum_j W_{ij}\, x_j + b_i \tag{1}$$

where $W_{ij}$ represents the weights of the neuron and $b_i$ the bias of the neuron.

Phase 2, the calculation of the neuron output: from the value of $a_i$, the output $Y_i$ is calculated so that:

$$Y_i = f(a_i) \tag{2}$$

where the function $f$ is a transfer function (or activation function) applied to $a_i$.

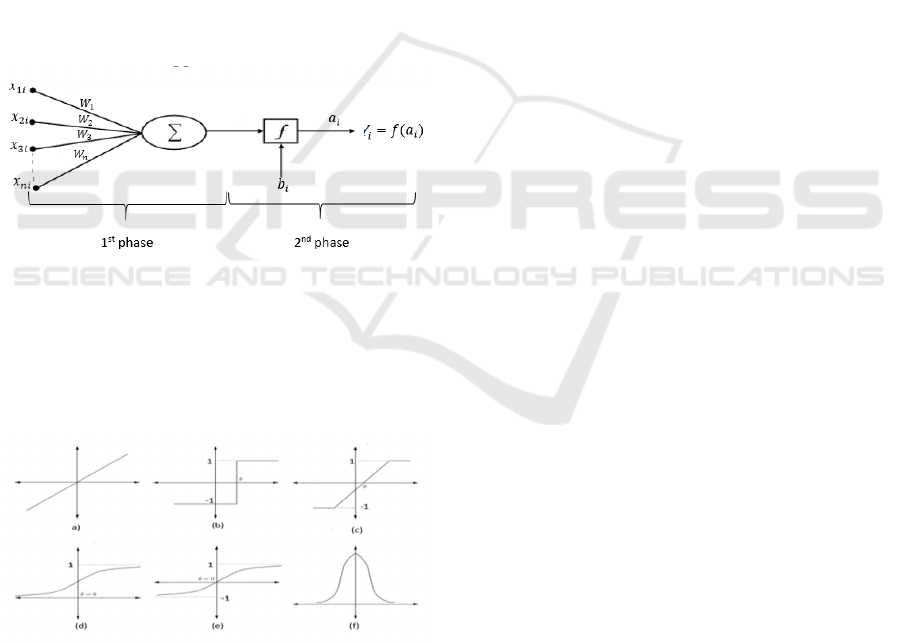

Figure 1: Formal neuron.
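As an illustration of the two phases described above, the following minimal Python sketch computes the weighted sum of equation (1) and then applies a transfer function as in equation (2). The function names, the threshold convention (output 1 when the activation is non-negative) and the AND weights are assumptions chosen for the example; they do not come from the original model description.

```python
def formal_neuron(inputs, weights, bias, activation):
    """Return Y = f(a), where a is the weighted sum of the inputs plus the bias."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Phase 1: activation, the weighted sum of the inputs plus the bias.
    a = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Phase 2: output, the transfer function applied to the activation.
    return activation(a)


def threshold(a):
    """Binary transfer function: 1 if a >= 0, else 0 (assumed convention)."""
    return 1 if a >= 0 else 0


# Hypothetical weights that make the binary neuron behave like a logical AND.
and_weights, and_bias = [1.0, 1.0], -1.5
outputs = [formal_neuron([x1, x2], and_weights, and_bias, threshold)
           for x1 in (0, 1) for x2 in (0, 1)]
print(outputs)  # [0, 0, 0, 1]
```

With these weights the neuron fires only when both inputs are 1, which shows how a binary neuron can realize a simple logical function.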

The activation function is the transfer function that

connects the weighted summation to the output

signal. There are different types of activation

functions. Figure 2 shows the most commonly used

ones (Msaaf and Belmajdoub, 2015).

Figure 2: The most frequently used activation functions: A) linear function, B) threshold function, C) piecewise linear function, D) sigmoid function, E) hyperbolic tangent function, F) Gaussian function.
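The six functions listed in Figure 2 can be written in a few lines of Python. This is only a sketch: the figure gives the shapes, not the formulas, so the exact parametrizations below (switching point at 0, saturation at -1 and +1, Gaussian width `sigma`) are assumptions.

```python
import math


def linear(a):
    """A) Linear (identity) function."""
    return a


def threshold(a):
    """B) Threshold (step) function; the switching point 0 is an assumption."""
    return 1.0 if a >= 0 else 0.0


def piecewise_linear(a):
    """C) Piecewise linear function, saturating at -1 and +1 (assumed bounds)."""
    return max(-1.0, min(1.0, a))


def sigmoid(a):
    """D) Logistic sigmoid, written to avoid overflow for large |a|."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def hyperbolic_tangent(a):
    """E) Hyperbolic tangent."""
    return math.tanh(a)


def gaussian(a, sigma=1.0):
    """F) Gaussian function centred on 0 with width sigma (assumed form)."""
    return math.exp(-(a * a) / (2.0 * sigma ** 2))


for f in (linear, threshold, piecewise_linear, sigmoid, hyperbolic_tangent, gaussian):
    print(f.__name__, [round(f(a), 3) for a in (-2.0, 0.0, 2.0)])
```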

A single formal neuron has limited computational capacity; its power appears when it is interconnected with other formal neurons. A neural network is formed by a set of formal neurons connected and organized in layers. There are three main classes of layers: the input layer, the output layer and the hidden layers. Each node is connected to the nodes of the next layer (in the case of a feedforward neural network), or can be linked to any other node or even to itself (in the case of a recurrent neural network).

3 RECURRENT NEURAL

NETWORKS

3.1 General Context

Recurrent neural networks (RNNs) are a particular class of artificial neural networks dedicated to time-series datasets, i.e. sequences of data that vary over time. The main feature of an RNN is that the network has feedback connections or internal loops (“Recurrent Neural Network - an overview | ScienceDirect Topics,” 2020), which allow information about past inputs to persist. An RNN handles a sequence by iterating through its elements and maintaining a state that contains information about everything presented to the network so far (Chollet, 2017). This gives the RNN the ability to process the current element while keeping a memory of what came before. In the literature, several RNN architectures have been proposed for different tasks. In the next section, we present the most relevant ones that can be used for industrial systems diagnosis.
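The idea of iterating through a sequence while maintaining a state can be sketched in plain Python. The update rule used here (a hyperbolic tangent of the weighted current input plus the weighted previous state) is a common generic choice and an assumption of this example, not a formula given in this paper; the weights are hypothetical.

```python
import math


def rnn_step(x, state, w_in, w_rec, bias):
    """Return the new state from the current input x and the previous state."""
    new_state = []
    for i in range(len(bias)):
        a = bias[i]
        a += sum(w * xj for w, xj in zip(w_in[i], x))       # current element
        a += sum(w * sj for w, sj in zip(w_rec[i], state))  # feedback loop
        new_state.append(math.tanh(a))
    return new_state


def run_rnn(sequence, w_in, w_rec, bias):
    """Iterate over the sequence while carrying the state from step to step."""
    state = [0.0] * len(bias)  # empty memory before the first element
    states = []
    for x in sequence:
        state = rnn_step(x, state, w_in, w_rec, bias)
        states.append(state)
    return states


# One input and one state unit, with hypothetical weights.
states = run_rnn([[1.0], [0.0], [0.0]], w_in=[[1.0]], w_rec=[[0.5]], bias=[0.0])
print([round(s[0], 3) for s in states])
```

Only the first element of the sequence is non-zero, yet the state stays positive at the following steps and fades gradually: the network keeps a trace of what came before.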

3.2 Recurrent Neural Networks

Architectures

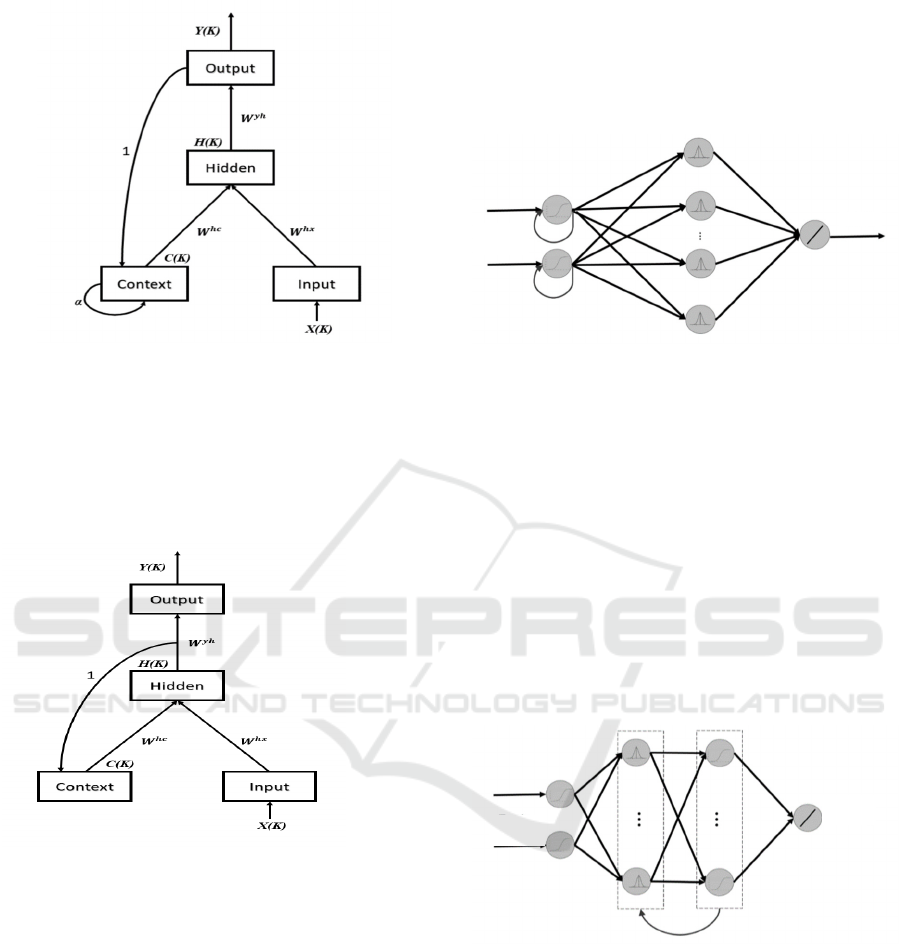

3.2.1 Jordan’s Architecture

In (Jordan M. I., 1989), the author proposed one of the first recurrent neural network architectures, the Jordan architecture. In this architecture, the units of the output layer are duplicated on a context layer. The units of this context layer also take into account their own state at the previous time step, which gives the neural network a dynamic or individual memory (Zemouri, 2003). The output of this layer is calculated according to the following equation:

$$C(k) = \alpha\, C(k-1) + Y(k-1) \tag{3}$$


Figure 3: Jordan’s architecture
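To make the role of the context layer concrete, the sketch below traces one context unit over time, reading equation (3) as C(k) = αC(k-1) + Y(k-1). The initial value C(0) = 0, the value of α and the output sequence are assumptions made for the example.

```python
def jordan_context_trace(outputs, alpha):
    """Return [C(0), C(1), ...] with C(k) = alpha * C(k-1) + Y(k-1).

    `outputs` holds Y(0), Y(1), ... for one output unit; C(0) = 0 is assumed.
    """
    context = [0.0]
    for y_prev in outputs:
        context.append(alpha * context[-1] + y_prev)
    return context


# A single output pulse followed by zeros, with a hypothetical alpha of 0.5.
trace = jordan_context_trace([1.0, 0.0, 0.0, 0.0], alpha=0.5)
print(trace)  # [0.0, 1.0, 0.5, 0.25, 0.125]
```

The trace of the pulse is halved at every step. With α below 1, old outputs fade geometrically, which is one way to picture the forgetting behaviour of the context layer on long sequences.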

3.2.2 Elman’s Architecture

Elman's architecture (Elman J. L., 1990) is largely inspired by Jordan's architecture; this time, instead of duplicating the network output, it is the hidden layer units that are copied to the context layer.

Figure 4: Elman’s architecture
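A single time step of an Elman-style network can be sketched as follows: the hidden units receive the current input together with the context layer, and the hidden values are then copied to become the next context. The layer sizes, the hyperbolic tangent activation, the linear output layer and all weights are hypothetical choices for this illustration.

```python
import math


def elman_step(x, context, w_in, w_ctx, w_out):
    """One time step; returns (output, hidden). The hidden vector is the next context."""
    hidden = []
    for h in range(len(w_in)):
        a = sum(w * xj for w, xj in zip(w_in[h], x))
        a += sum(w * cj for w, cj in zip(w_ctx[h], context))  # context layer
        hidden.append(math.tanh(a))
    output = [sum(w * hj for w, hj in zip(row, hidden)) for row in w_out]
    return output, hidden


# Hypothetical sizes and weights: 1 input, 2 hidden units, 1 output.
w_in = [[1.0], [-1.0]]
w_ctx = [[0.5, 0.0], [0.0, 0.5]]
w_out = [[1.0, -1.0]]

context = [0.0, 0.0]
y1, context = elman_step([1.0], context, w_in, w_ctx, w_out)
y2, context = elman_step([0.0], context, w_in, w_ctx, w_out)
print(y1, y2)  # y2 is non-zero although the second input is zero
```

The only structural difference from a Jordan-style step is what gets copied into the context: the hidden vector here, the output vector there.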

Remark: Elman’s and Jordan’s architectures are considered simple recurrent neural networks (Dinarelli and Tellier, 2016).

3.2.3 Recurrent Radial Basis Function

(RRBF)

Unlike Jordan’s and Elman’s architectures, the RRBF neural network (Zemouri, 2003) obtains its dynamic aspect from recurrent connections at the level of the input layer neurons, which give the input neurons the capacity to take into account the data already presented (Zemouri et al., 2003), (Zemouri et al., 2006). As a result, the RRBF has two types of memory:

- Dynamic memory, which takes into account the dynamics of the input data.
- Static memory, for prototype storage.

Figure 5: RRBF’s architecture

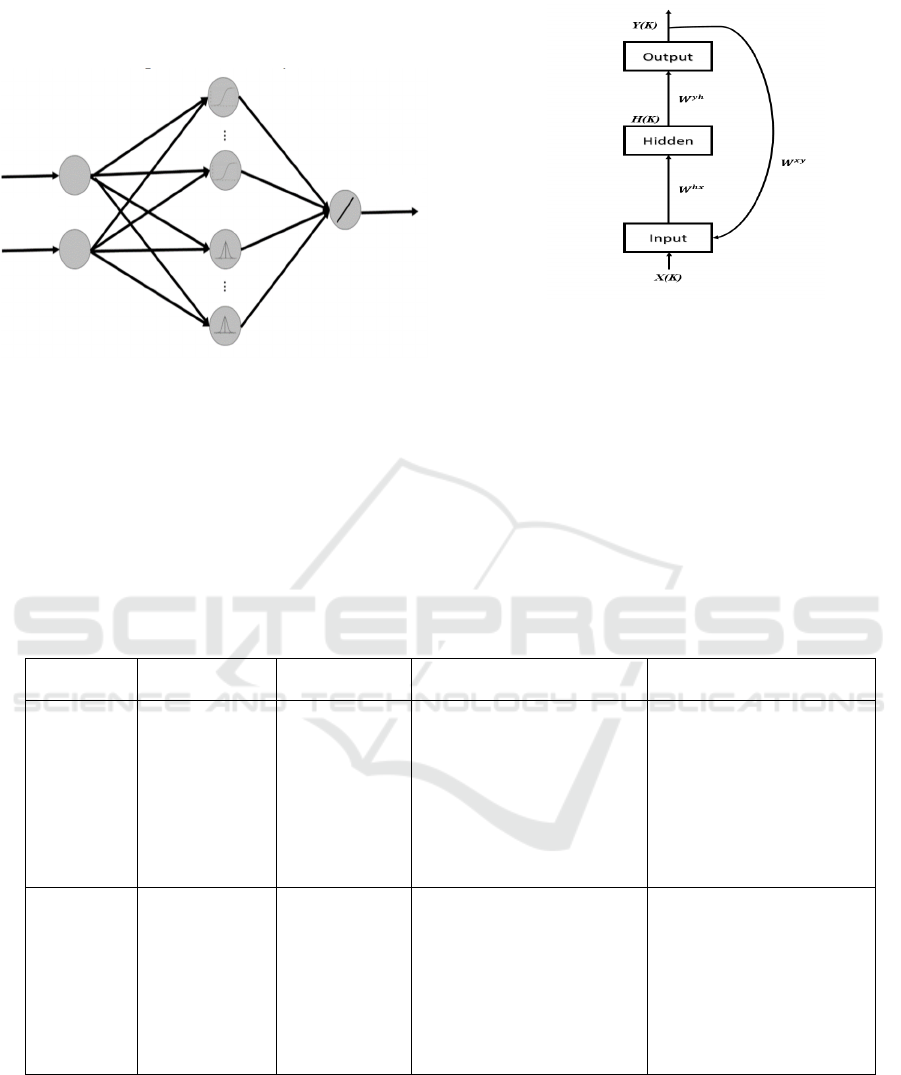
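The two kinds of memory can be illustrated with a small sketch. It assumes a self-connected ("looped") input neuron with a hyperbolic tangent activation for the dynamic memory, and Gaussian units centred on stored prototypes for the static memory. The update rule, the loop weight, the Gaussian width and the prototype labels are all assumptions of this example; the section above does not give the RRBF equations.

```python
import math


def looped_neuron(signal, w_loop, gain=1.0):
    """Dynamic memory: run one self-connected input neuron over a signal.

    Assumed update: xi(k) = tanh(gain * (w_loop * xi(k-1) + x(k))).
    """
    xi = 0.0
    for x in signal:
        xi = math.tanh(gain * (w_loop * xi + x))
    return xi


def gaussian_unit(vector, prototype, sigma):
    """Static memory: response of one radial unit to its stored prototype."""
    d2 = sum((v - p) ** 2 for v, p in zip(vector, prototype))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def rrbf_classify(signals, w_loop, prototypes, sigma=0.1):
    """Return the label of the prototype that responds most strongly."""
    features = [looped_neuron(s, w_loop) for s in signals]
    scores = {label: gaussian_unit(features, p, sigma)
              for label, p in prototypes.items()}
    return max(scores, key=scores.get)


# Two hypothetical sensor histories that end on the same value (1.0).
spike = [0.0, 0.0, 0.0, 1.0]
drift = [1.0, 1.0, 1.0, 1.0]
w_loop = 0.8
prototypes = {
    "spike": [looped_neuron(spike, w_loop)],
    "drift": [looped_neuron(drift, w_loop)],
}
print(rrbf_classify([[0.0, 0.0, 1.0]], w_loop, prototypes))
print(rrbf_classify([[0.9, 1.0, 1.1, 1.0]], w_loop, prototypes))
```

Both test signals end near 1.0, so a static classifier looking only at the last sample could not separate them. The looped neuron summarizes the history, and the prototypes then distinguish a sudden spike from a sustained level.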

3.2.4 Recurrent Radial Basis Function

(R2BF)

The R2BF model (Frasconi et al., 1996) was developed to behave like a finite state automaton. The network is composed of four layers: an input layer, an output layer, and two hidden layers. The first hidden layer has Gaussian neurons, which are fully connected to the second hidden layer, which has sigmoid neurons. The outputs of the sigmoid neurons are connected to the output layer and, at the same time, fed back to the first hidden layer. The input of the first hidden layer is therefore made of two vectors: the current input and the output of the second hidden layer at the previous time step.

Figure 6: R2BF’s architecture
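The data flow described above can be sketched as one function per time step: the Gaussian layer sees the current input concatenated with the previous sigmoid outputs, the sigmoid layer reads the Gaussian layer, and the output layer reads the sigmoid layer. The linear output layer and every parameter value below are hypothetical; they were picked so that the network flips a one-bit state each time it receives the input 1, in the spirit of a two-state automaton.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def r2bf_step(x, prev_state, centres, sigma, w_sig, b_sig, w_out):
    """One time step; returns (output, state), where state feeds the next step."""
    z = list(x) + list(prev_state)  # current input + previous sigmoid outputs
    # First hidden layer: Gaussian neurons centred on stored vectors.
    gauss = [math.exp(-sum((zi - ci) ** 2 for zi, ci in zip(z, c))
                      / (2.0 * sigma ** 2))
             for c in centres]
    # Second hidden layer: sigmoid neurons fully connected to the Gaussian ones.
    state = [sigmoid(sum(w * g for w, g in zip(row, gauss)) + b)
             for row, b in zip(w_sig, b_sig)]
    # Output layer: linear read-out of the sigmoid neurons (assumed).
    output = [sum(w * s for w, s in zip(row, state)) for row in w_out]
    return output, state


# Hypothetical parameters: one Gaussian per (input, previous state) pair.
centres = [[1.0, 0.0], [1.0, 1.0]]
w_sig, b_sig, w_out = [[4.0, -4.0]], [0.0], [[1.0]]

state, trace = [0.0], []
for x in ([1.0], [1.0], [1.0]):
    output, state = r2bf_step(x, state, centres, 0.5, w_sig, b_sig, w_out)
    trace.append(round(output[0]))
print(trace)  # [1, 0, 1]
```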

3.2.5 Dynamic General Neural Network

(DGNN)

This architecture can be considered a combination of two neural networks: the multi-layer perceptron and the radial basis function network (Palluat et al., 2005), (Ferariu, L. and T. Marcu, 2002) (for further information about these two architectures, see (Msaaf and Belmajdoub, 2015)). It is composed of three layers: an input layer, an output layer and a hidden layer (Scarselli et al., 2009), which contains two types of neurons (sigmoid neurons and Gaussian neurons). The network has internal recurrent connections in the neurons of the output and hidden layers.

Figure 7: DGNN’s architecture.

3.2.6 Jordan’s/Elman’s architecture variant

In (Dinarelli and Tellier, 2016), the authors proposed a variant of Jordan’s and Elman’s architectures in which the outputs predicted by the network at previous steps of the sequence processing are fed back to the input of the network, so that the returned data traverses the network in its entirety.

Figure 8: Jordan’s/Elman’s architecture variant.

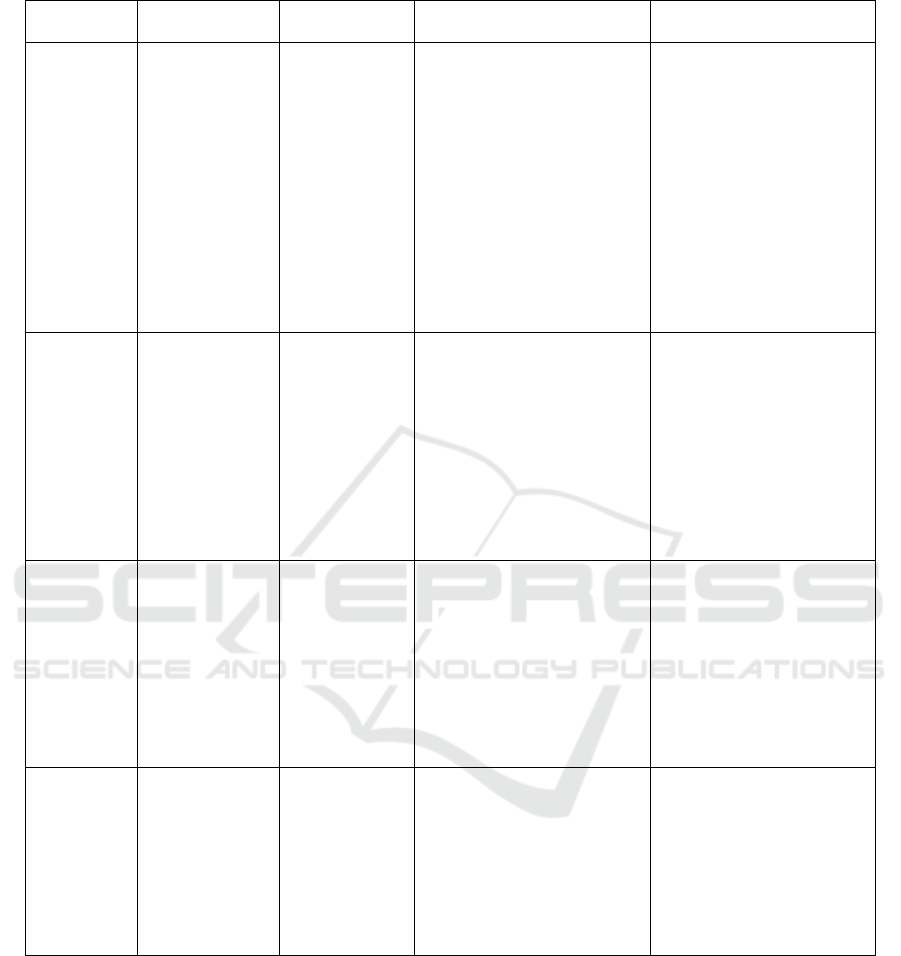
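The feedback of predicted outputs to the input can be sketched as a loop in which the network input is the current data concatenated with the previously predicted label. The one-hot encoding of that label, the constant bias term, the tiny network and its weights are simplifying assumptions made for illustration; they are not the authors' exact model.

```python
import math


def forward(augmented_input, w_hidden, w_out):
    """Small feedforward pass: tanh hidden layer, then linear label scores."""
    hidden = [math.tanh(sum(w * v for w, v in zip(row, augmented_input)))
              for row in w_hidden]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_out]


def run_with_output_feedback(sequence, w_hidden, w_out, n_labels):
    """Label each element, feeding the previous prediction back to the input."""
    prev_label = [0.0] * n_labels  # no prediction before the first element
    labels = []
    for x in sequence:
        # Input = current data + previous predicted label + constant bias term.
        scores = forward(list(x) + prev_label + [1.0], w_hidden, w_out)
        label = max(range(n_labels), key=lambda i: scores[i])
        labels.append(label)
        prev_label = [1.0 if i == label else 0.0 for i in range(n_labels)]
    return labels


# Hypothetical weights: label 1 ("fault") latches once it has been predicted.
w_hidden = [[2.0, 0.0, 2.0, -1.0]]  # for [x, was_label_0, was_label_1, bias]
w_out = [[-1.0], [1.0]]             # scores for label 0 and label 1
print(run_with_output_feedback([[0.0], [1.0], [0.0], [0.0]], w_hidden, w_out, 2))
# [0, 1, 1, 1]
```

Because the fed-back label enters at the input, it passes through every layer before influencing the next prediction. In this toy setting it keeps the "fault" label active after the input has returned to zero.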

In practice, each of the architectures presented above has advantages and disadvantages. Based on an analysis of the case studies presented in (Zemouri et al., 2003), (Palluat et al., 2005), (Dinarelli and Tellier, 2016), (Zemouri et al., 2006), (Wysocki and Ławryńczuk, 2015), and (Tong et al., 2009), we extracted the strengths and weaknesses that each architecture shows in practice (table 1).

Table 1: The advantages and disadvantages of some recurrent neural networks architectures.

| Neuronal architecture | Author(s)/Date of publication | Recurrence type | Advantages | Disadvantages |
|---|---|---|---|---|
| Jordan | Jordan M. I./1989 | Between the output layer and the context layer. | Simple architecture; fast learning; adapts to several tasks that consist of predicting sequential information; uses the label predicted in the current state. | Sometimes, the context layer exhibits forgetting behaviour; only a part of the network is affected by the recurrent information, which results in more or less inefficient learning; vulnerable to error propagation. |
| Elman | Elman J. L./1990 | Between the hidden layer and the context layer. | Simple architecture; fast learning; adapts to several tasks that consist of predicting sequential information; avoids the forgetting behaviour present in Jordan's architecture. | Only a part of the network is affected by the recurrent information, which results in more or less inefficient learning. |


Table 2: The advantages and disadvantages of some recurrent neural networks architectures (cont.).

| Neuronal architecture | Author(s)/Date of publication | Recurrence type | Advantages | Disadvantages |
|---|---|---|---|---|
| RRBF | Zemouri, R., Racoceanu, D. and Zerhouni, N./2003 | Recurrence of the connections at the input layer. | Architecture is easy to understand and to implement; the recurrent information traverses the entire network, affecting all the network layers, resulting in efficient learning; fast learning with high accuracy; architecture dedicated to industrial system diagnosis and prognosis; simple and stable learning algorithm. | Complex learning; requires a high computation capacity. |
| R2BF | Frasconi, P., Gori, M., Maggini, M., Soda, G./1996 | Between the outputs of the neurons of the second hidden layer and the first one. | R2BF has a very intriguing relationship with high-order recurrent networks (the "order" of a neural network refers to the dimensionality of the product terms in the weighted sum (Frasconi et al., 1996)); fast learning with high accuracy, but lower than the RRBF’s accuracy. | Requires a high computation capacity; only a part of the network is affected by the recurrent information, which results in more or less inefficient learning. |
| DGNN | Scarselli, F., Gori, M., Tsoi, A. C., Hagenbuchner, M., Monfardini, G./2009 | Internal recurrent connections in the neurons of the hidden layer and the output layer. | Simple architecture with good accuracy and capacity for generalization; interesting results may be obtained with a high number of hidden layers; interesting results may be obtained by using genetic algorithms or other artificial intelligence algorithms. | High response time; sometimes it is necessary to do several trainings on different bases to obtain interesting results; only a part of the network is affected by the recurrent information, which results in more or less inefficient learning. |
| Jordan/Elman variant | Dinarelli, M., Tellier, I./2016 | Recurrence between output and input layer. | The information traverses the network in its totality, which allows more effective learning; the recurrent connection between the output and input layers makes the model much more robust. | Requires a high computation capacity; significant learning time. |

This comparative study shows that:

- The Jordan/Elman variant and the RRBF feed the recurrent information back at the input of the network, which allows it to traverse the entire network and affect all the layers without exception, resulting in much more efficient learning. In the other architectures, only a part of the network is affected by the recurrence, which results in more or less inefficient learning. Besides, the forgetting behaviour of Jordan's architecture cannot be tolerated, because most industrial systems are complex systems that generate very long sequences, so forgetting directly affects the reliability and accuracy of the diagnosis result.
- RRBF, R2BF, and DGNN provide a good capacity for generalization and high accuracy. However, the RRBF is distinguished by an architecture that is dedicated to industrial system diagnosis, in addition to its higher accuracy compared with the others. Moreover, using an increased number of hidden layers in the DGNN directly affects the training and response times, which may be incompatible with online diagnosis. In some applications, the DGNN also requires several training runs with several training databases to obtain interesting results, which is not possible in some industrial diagnosis problems, where data are sometimes scarce.
- The high computing capacity required by the RRBF, R2BF, and Jordan/Elman variant architectures is no longer a real problem, thanks to the powerful processors developed in the last few years.

The outcome of this comparison shows that the RRBF provides advantages that the other architectures do not offer. Its high accuracy, its simple architecture dedicated directly to industrial systems diagnosis, and its ease of understanding and implementation make it the recurrent neural network architecture best suited to the diagnosis operation.

4 CONCLUSIONS

The implementation of a diagnosis module for an industrial system imposes different requirements that must be taken into consideration. In this paper, we highlighted the use of recurrent neural networks for the diagnosis operation. Through a comparative study of the relevant architectures presented in the literature, we found that the RRBF is the best suited to industrial system diagnosis, thanks to strengths that the other architectures do not offer. As an extension of this work, we will use the RRBF neural network to develop a diagnosis module for discrete event systems, which are considered an important class of industrial systems.

ACKNOWLEDGEMENTS

This research was financially supported by the National Center for Scientific and Technical Research of Morocco. The authors sincerely thank this organization for its valuable cooperation, and thank the editors and reviewers for their constructive comments and suggestions, which helped improve the quality of this paper.

REFERENCES

Chollet, F., 2017. Deep Learning with Python, 1st edition.

ed. Manning Publications, Shelter Island, New York.

DePold, H.R., Gass, F.D., 2014. The Application of Expert

Systems and Neural Networks to Gas Turbine

Prognostics and Diagnostics. Presented at the ASME

1998 International Gas Turbine and Aeroengine

Congress and Exhibition, American Society of

Mechanical Engineers Digital Collection.

https://doi.org/10.1115/98-GT-101

Dinarelli, M., Tellier, I., 2016. Étude de réseaux de

neurones récurrents pour l’étiquetage de séquences, in:

Traitement Automatique Des Langues Naturelles

(TALN). Paris, France.

Elman J. L., 1990. Finding structure in time. Cogn. Sci. 14, 179–211.

Ferariu, L., Marcu, T., 2002. Evolutionary Design of Dynamic Neural Networks Applied to System Identification. 15th IFAC World Congress, Barcelona, Spain.

Frasconi, P., Gori, M., Maggini, M., Soda, G., 1996.

Representation of finite state automata in Recurrent

Radial Basis Function networks. Mach. Learn. 23, 5–

32. https://doi.org/10.1007/BF00116897

H. Wang and P. Chen, 2011. Intelligent diagnosis method

for rolling element bearing faults using possibility

theory and neural network. Comput. Ind. Eng. 511–518.

Jordan M. I., 1989. Serial order : A parallel, distributed

processing approach. In J. L. ELMAN & D. E.

RUMELHART, Eds., Advances in Connectionist

Theory : Speech. Hillsdale, NJ : Erlbaum.

Koivo, H.N., 1994. Artificial neural networks in fault

diagnosis and control. Control Eng. Pract. 2, 89–101.

https://doi.org/10.1016/0967-0661(94)90577-0

Lefebvre D., 2000. Contribution à la modélisation des systèmes dynamiques à événements discrets pour la commande et la surveillance.

McCulloch, W.S., Pitts, W., 1943. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 5, 115–133.

Msaaf, M., Belmajdoub, F., 2015. L’application des réseaux de neurone de type “feedforward” dans le diagnostic statique. Xème Conférence Internationale : Conception et Production Intégrées.

Palluat, N., Racoceanu, D., Zerhouni, N., 2005. Utilisation

des réseaux de neurones temporels pour le pronostic et

la surveillance dynamique. Etude comparative de trois

réseaux de neurones récurrents. Rev. Intell. Artif. 19,

913–950. https://doi.org/10.3166/ria.19.913-950

R. Patton, J. Chen, and T. Siew, 1994. Fault diagnosis in

nonlinear dynamic systems via neural networks.

Recurrent Neural Network - an overview | ScienceDirect Topics [WWW Document], 2020. URL https://www.sciencedirect.com/topics/computer-science/recurrent-neural-network (accessed 3.25.21).

Scarselli, F., Gori, M., Ah Chung Tsoi, Hagenbuchner, M.,

Monfardini, G., 2009. The Graph Neural Network

Model. IEEE Trans. Neural Netw. 20, 61–80.

https://doi.org/10.1109/TNN.2008.2005605


T. Cambrai, 2019. Intelligence Artificielle : Comment les algorithmes et le Deep Learning dominent le monde. Independently published, 2019. [WWW Document]. Rakuten Kobo. URL https://www.kobo.com/us/en/ebook/l-intelligence-artificielle-expliquee-comment-les-algorithmes-et-le-deep-learning-dominent-le-monde-2e-edition

Tobon-Mejia, D.A., Medjaher, K., Zerhouni, N., 2012.

CNC machine tool’s wear diagnostic and prognostic by

using dynamic Bayesian networks.

https://doi.org/10.1016/J.YMSSP.2011.10.018

Tong, X., Wang, Z., Yu, H., 2009. A research using hybrid

RBF/Elman neural networks for intrusion detection

system secure model. Comput. Phys. Commun. 180,

1795–1801. https://doi.org/10.1016/j.cpc.2009.05.004

Wysocki, A., Ławryńczuk, M., 2015. Jordan neural

network for modelling and predictive control of

dynamic systems, in: 2015 20th International

Conference on Methods and Models in Automation and

Robotics (MMAR). Presented at the 2015 20th

International Conference on Methods and Models in

Automation and Robotics (MMAR), pp. 145–150.

https://doi.org/10.1109/MMAR.2015.7283862

Zemouri, R., 2003. Contribution à la surveillance des systèmes de production à l’aide des réseaux de neurones dynamiques: Application à la e-maintenance. Autom. Robot. Univ. Franche-Comté Fr. tel-00006003, 278.

Zemouri, R., Racoceanu, D., Zerhouni, N., 2006. Réseaux

de neurones récurrents à fonctions de base radiales :

RRFR. Artificial Intell. Rev. 16 (03), 33.

Zemouri, R., Racoceanu, D., Zerhouni, N., 2003. Réseaux

de neurones récurrents à fonctions de base radiales.

Application à la surveillance dynamique. J. Eur.

Systèmes Autom. 37, 49–81.

https://doi.org/10.3166/jesa.37.49-81
