From Implicit Preferences to Ratings: Video Games Recommendation based on Collaborative Filtering

Rosária Bunga¹, Fernando Batista¹,²,ᵃ and Ricardo Ribeiro¹,²,ᵇ

¹ ISCTE - Instituto Universitário de Lisboa, Av. das Forças Armadas, Portugal

² INESC-ID Lisboa, Portugal

Keywords:

Recommendation System, Collaborative Filtering, Implicit Feedback.

Abstract:

This work studies and compares the performance of collaborative filtering algorithms, with the intent of

proposing a videogame-oriented recommendation system. This system uses information from the video game

platform Steam, which contains information about the game usage, corresponding to the implicit feedback that

was later transformed into explicit feedback. These algorithms were implemented using the Surprise library, which allows the creation and evaluation of recommender systems that deal with explicit data. The algorithms are evaluated and compared with each other using metrics such as RMSE, MAE, Precision@k, Recall@k and F1@k. We conclude that computationally undemanding approaches can still obtain suitable results.

1 INTRODUCTION

There has been a rapid growth of content, products, and services provided by sites such as Google, YouTube, Amazon, Netflix, and Steam, among others. The great diversity of information available on the Internet quickly began to overwhelm users, leaving them indecisive and thus hindering the decision-making process. This large amount of information, instead of generating a benefit, became a problem for users. While choice is good, more choice is not always better (Ricci et al., 2011). As this phenomenon intensified, it became more important to help users filter the relevant items from the whole range of available alternatives, in order to facilitate the choice of the products or services that best suit them. To minimize or solve this information filtering problem, recommendation systems emerged and started to assist the decision-making process by providing personalized recommendations to users (Jannach et al., 2011).

Recommendation is something we are all familiar with: whether a friend recommends a new book to read or a movie to watch, it is all about giving good options and helping you make a choice. These recommendations are usually based on knowledge about what we like. Recommendation systems work in exactly the same way: the system tries to use the user's history or profile to predict which products or services to recommend. All of the platforms mentioned above have implemented some sort of recommendation system that caters to the needs of their users, providing personalized content with the goal of giving the user a better experience, thereby increasing user loyalty, selling more, and more diverse, products, and, ultimately, improving the revenue of these companies (Ricci et al., 2011).

ᵃ https://orcid.org/0000-0002-1075-0177

ᵇ https://orcid.org/0000-0002-2058-693X

In this work, we focus on the video games domain, mainly due to the exponential growth of this market and its particularities. As a case study, we use the video game platform Steam¹. According to Steam's website, they currently provide 30,000 different games for Windows, Mac, and Linux operating systems. In such a fast-moving market, which has a large number of classic games, new releases, and indie games, dozens of games come out every month. Given such a multitude of choices, users/players find it very difficult to find new games they might be interested in.

With a market as diverse as this, there is clearly a need to implement recommendation systems with high accuracy that provide users with relevant unknown games and/or new releases that cater to their tastes. This becomes even more evident when we look at Steam's 2014 records, where about 37% of the games purchased were never played by the users who bought them. A user plays his favorite games often, but also wants to discover new games that are relevant to him. This poses a challenge for this market: the need for video games that encourage the user to come back, and the need to help users find new games that will be consumed as much as the ones they already like. Recommendation systems can greatly benefit the video game market through their ability to suggest new games to users in a personalized way. Nevertheless, there is still much to be explored about recommendation systems in the video game domain.

¹ https://store.steampowered.com

Bunga, R., Batista, F. and Ribeiro, R.

From Implicit Preferences to Ratings: Video Games Recommendation based on Collaborative Filtering.

DOI: 10.5220/0010655900003064

In Proceedings of the 13th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2021) - Volume 1: KDIR, pages 209-216

ISBN: 978-989-758-533-3; ISSN: 2184-3228

Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The goal of this work is to develop a recommen-

dation system that provides video game suggestions

to a user based on his gaming history and the tastes

of users similar to him. We will explore several ap-

proaches, based on different collaborative filtering al-

gorithms, using data from the Steam platform. The

major challenge lies in understanding how to use implicit data to infer explicit ratings of the users.

We aim to answer the following research ques-

tions with reference to the video game domain:

RQ1: What is the performance of different collaborative filtering recommendation approaches when applied to implicit data?

RQ2: Can the playing time of the users serve as an adequate implicit representation of the users' preferences?

This paper is organized as follows. Section 2

overviews the existing literature concerning collab-

orative filtering approaches and video game recom-

mendation. Section 3 presents the dataset. Sec-

tion 4 describes the process of obtaining explicit

ratings from the existing information. Section 5

overviews the recommendation approaches used in this work. Section 6 analyses and discusses the obtained results. Finally, Section 7 presents the overall conclusions and pinpoints future work directions.

2 RELATED WORK

Collaborative filtering based recommendation ap-

proaches are still an active topic in this research

area. Pérez-Marcos et al. (Pérez-Marcos et al., 2020)

present a hybrid video game recommendation system,

through the use of collaborative filtering and content-

based filtering. The system takes as input a list of

ratings of a user, the communities of the games the

system contains, and the communities of the users

the system contains. Content-based filtering is ap-

plied first: for each item of the active user, the system

searches for the games that are in the closest com-

munity to that item. As a result, a list of the games

that are most similar to those of the active user is ob-

tained. Then, collaborative filtering is applied, re-

stricted to the items obtained in the previous step,

using ratings. In the end, a matrix with the recom-

mended games and their predicted ratings is returned.

In the case of content-based filtering, they use graph-

based techniques to find games similar to those of the

active player, reducing the computational load of the

system because recommendations are made on a sub-

set of items. Anwar et al. (Anwar et al., 2017) pro-

pose a recommendation system that uses a collabo-

rative filtering technique to suggest video games to

users. The recommendation system was implemented

using item-based collaborative filtering and Pearson

correlation to identify unrated games, finding similar-

ities between unrated and highly rated games by the

user. Next, the system employs user-based collaborative filtering. However, the system was not able to provide better accuracy in the cold-start scenario.

Many traditional collaborative filtering algorithms fail to consider that users' preferences often vary over time. Joorabloo et al. (Joorabloo et al., 2019) propose a recommendation method that predicts the similarity between

users in the future, and forecasts their similarity trends

over time. Experimental results show that the pro-

posed method significantly outperforms classical and

state-of-the-art recommendation methods.

Given their current popularity, artificial neural

network-based approaches are also being explored

as recommendation methods. STEAMer (Wang et al.,

2020), a video game recommendation system for the

Steam platform, uses Steam user data in conjunc-

tion with a Deep Autoencoders learning model (a

specific type of neural network architecture that at-

tempts to force the network to learn a compressed

representation of the original input data). Cheuque

et al. (Cheuque et al., 2019) propose recommenda-

tion models based on Factorization Machines (FM),

Deep Neural Networks (DeepNN) and a combination

of both (DeepFM), chosen for their potential to re-

ceive multiple inputs as well as different types of in-

put variables. All algorithms achieve better results

than an ALS (Alternating Least Squares Model) base-

line. Despite being a simpler model than DeepFM,

DeepNN was found to be the best performing al-

gorithm: it was able to better exploit user-item re-

lationships, achieving consistent results on different

datasets. They also analyzed the effect of sentiment

extracted directly from game reviews and concluded

that it is not as relevant for recommendation as one

might expect.

Closer to our work, also exploring implicit feed-

back, Bertens et al. (Bertens et al., 2018) propose a

KDIR 2021 - 13th International Conference on Knowledge Discovery and Information Retrieval


system that recommends video games to users based

on their experience and behavior, i.e., playing time

and frequency of activity. They explore two models:

Extremely Randomized Trees (ERTs) and DeepNNs.

The results show that the prediction performance of

DeepNNs and ERTs is similar, with the ERT model

achieving a marginally better performance and scaling

more easily in a production environment. Pathak et

al. (Pathak et al., 2017) proposed a recommendation

system based on latent factor models, in particular

Bayesian Personalized Ranking (BPR), that is trained

using implicit ranking (i.e., purchases vs. no pur-

chases), and that uses the trained features of an item

recommendation model to learn personalized rank-

ings over bundles. The authors showed that the model

is robust to cold bundles and that new bundles can be

generated effectively using a greedy algorithm. Their

main focus is on item-to-group compatibility, generat-

ing and evaluating custom package recommendation

on the Steam video game platform. Finally, Sifa et

al. (Sifa et al., 2015) present two approaches for Top-N recommendation systems: a matrix factorization-based

model and a user-based neighborhood model operat-

ing in low dimensions. Both models are based on

archetypal analysis, a method similar to cluster anal-

ysis, thus grouping users into archetypes. The data

used for this analysis is composed of implicit feed-

back, specifically information about game ownership

and play times, with the ultimate goal of recommend-

ing games that have the longest predicted play time.

The authors compare their algorithm to several base-

lines and an item-based neighborhood model, which

they were able to outperform.

3 DATASET

In this work we use the “Steam Video Game and Bundle Data”² shared by Pathak et al. (Pathak et al., 2017). The dataset consists of the purchase history of Australian users of the Steam platform, indicating for each one the list of items purchased, with a small collection of metadata related to game playing time. We expanded each user's item list or item bundle from the original dataset, so that each record in the set could be viewed as a user/item interaction.

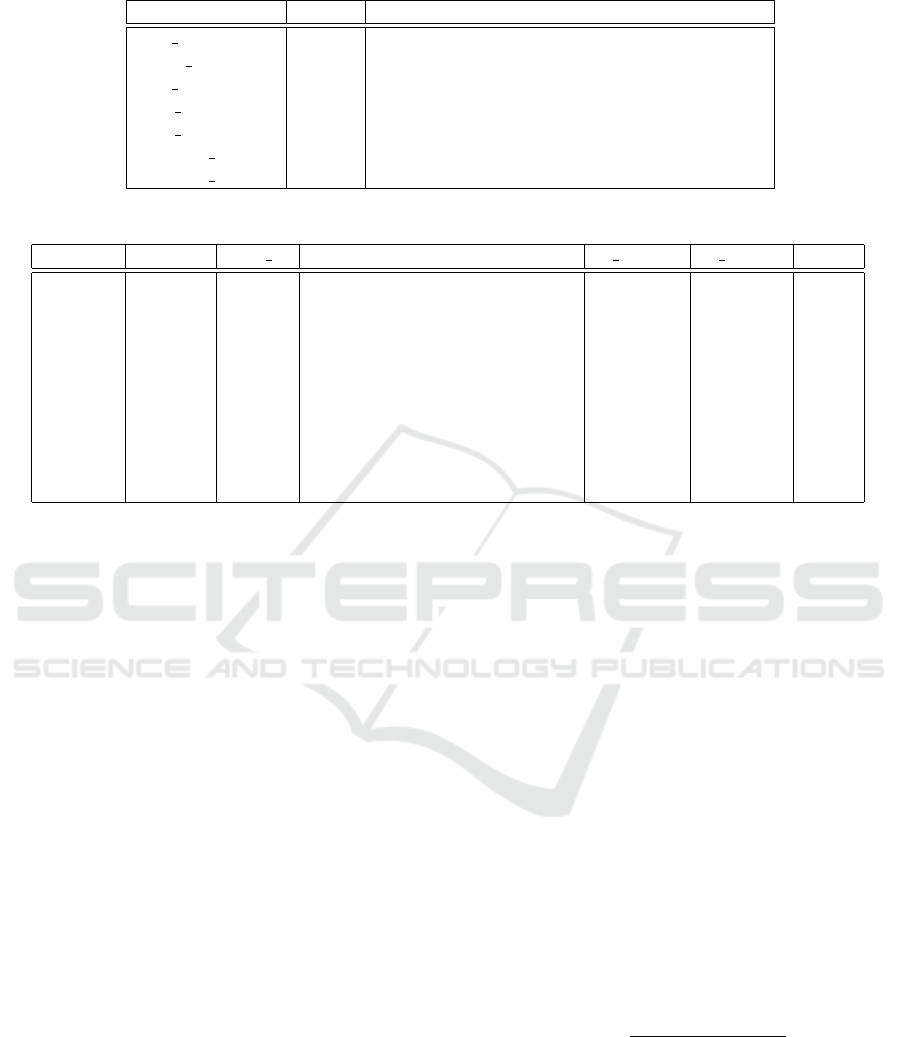

Table 1 shows the attributes of the original dataset. As can be observed, there is no rating that can be used as an explicit measure of users' preferences, i.e., our dataset has no explicit ratings. Therefore, to experiment with the recommendation algorithms, we resorted to implicit information.

² http://cseweb.ucsd.edu/~jmcauley/datasets.html

For our experiments, we filtered the dataset: we removed all players with fewer than 30 games and all games that were played by fewer than 10 players.

Only games that had a playing time of more than zero

minutes were considered. This data filtering step led

to a new dataset containing 33,307 unique users and

6,387 unique games, with about 2.8 million records.
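The filtering step described above can be sketched in plain Python. This is a minimal sketch, not the authors' code: the record layout (tuples of user, item, playing time in minutes), the function name `filter_interactions`, and the order in which the three filters are applied are all assumptions, and each filter is applied once rather than repeated until nothing changes.

```python
from collections import Counter

def filter_interactions(records, min_games=30, min_players=10):
    """Filter (user, item, playtime_minutes) records; thresholds follow the text."""
    # Keep only interactions with a positive playing time.
    played = [r for r in records if r[2] > 0]
    # Drop users with fewer than `min_games` played games.
    games_per_user = Counter(user for user, _, _ in played)
    played = [r for r in played if games_per_user[r[0]] >= min_games]
    # Drop games played by fewer than `min_players` of the remaining users.
    players_per_game = Counter(item for _, item, _ in played)
    return [r for r in played if players_per_game[r[1]] >= min_players]

# Toy usage with small thresholds (hypothetical data).
toy = [("u1", "g1", 10), ("u1", "g2", 0), ("u2", "g1", 5),
       ("u2", "g2", 7), ("u3", "g2", 3)]
kept = filter_interactions(toy, min_games=2, min_players=1)
```

Because removing games can push a user back below the user threshold, a stricter variant would repeat the last two steps until the record list stops shrinking; the text does not say which variant was used.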

4 FROM IMPLICIT PREFERENCES TO RATINGS

As previously described, the dataset only provides in-

formation related to game playing time. However,

for collaborative filtering algorithms we need user rat-

ings. Most approaches to understanding user prefer-

ences are based on having explicit user ratings. How-

ever, in many real-life situations, we need to rely on

implicit ratings, such as how many times a user has

listened to a song or played a game. Considering the

importance mentioned in the literature on the relation-

ship between explicit and implicit ratings in recom-

mendation systems (Parra and Amatriain, 2011; Yi

et al., 2014), we chose the total playing time, playtime_forever, to infer the users' explicit ratings and to understand the users' preferences. Although implicit ratings can be collected constantly and do not require additional effort from the user, when inferring the users' preferences from their behavior one cannot be sure that the behavior is interpreted correctly. Still,

Schafer et al. (Schafer et al., 2006) report that in some

domains, such as personalized online radio stations,

collecting implicit ratings can even result in more ac-

curate user profiles than what is achieved with explicit

ratings. Furthermore, it has also been discussed that

implicit preference data may actually be more objec-

tive, since there is no bias arising from users respond-

ing in a socially desirable way (Buder and Schwind,

2012). This also emphasizes that the proper interpre-

tation of implicit ratings can be highly dependent on

the domain.

We focus on the total playing time in minutes, playtime_forever, to quantify the relevance of an item to a specific user. Our assumption is that if a user plays a game for a long time, he likes that game. To convert implicit ratings into explicit ratings, we applied the cut() function (available in Python through the pandas library) to group the playing times into five intervals. Then we applied the rank() function to assign a rank, where equal values are assigned a rank that is the average of the ranks of those values. Our rating scale ranges from 1 to 5. Table 2 contains an excerpt of the final result, where pt_2weeks and pt_forever correspond to playtime_2weeks and playtime_forever, respectively.
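One possible reading of this conversion is sketched below in plain Python. The text does not state whether the intervals are computed per user or over the whole dataset, nor where the interval boundaries lie, so everything here is an assumption: the sketch ranks each user's games by playing time (ties receive the average rank, as described above) and then splits the rank range into five equal-width intervals. The function name `playtime_to_ratings` and the record layout are hypothetical.

```python
import math
from collections import defaultdict

def playtime_to_ratings(records, n_levels=5):
    """Map (user, item, playtime) records to (user, item, rating in 1..n_levels)."""
    by_user = defaultdict(list)
    for user, item, minutes in records:
        by_user[user].append((item, minutes))
    rated = []
    for user, games in by_user.items():
        times = [m for _, m in games]
        n = len(times)
        for item, minutes in games:
            below = sum(1 for t in times if t < minutes)   # strictly shorter playtimes
            ties = sum(1 for t in times if t == minutes)   # includes this game itself
            avg_rank = below + (ties + 1) / 2              # 1-based rank, ties averaged
            # Equal-width binning of the rank range (0, n] into n_levels intervals.
            rated.append((user, item, math.ceil(avg_rank * n_levels / n)))
    return rated
```

The nested counting is quadratic in the number of games per user, which is acceptable for a sketch but would be replaced by a sort on a dataset of this size.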


Table 1: Dataset attributes.

| Attribute | Type | Description |
|---|---|---|
| user_id | string | User |
| steam_id | integer | Steam user identification |
| user_url | string | User URL |
| item_id | integer | Game identification |
| item_name | string | Game title |
| playtime_2weeks | integer | Number of minutes played in the last two weeks |
| playtime_forever | integer | Total number of minutes played |

Table 2: Excerpt of the final dataset, containing only the most relevant fields.

| | steam_id | item_id | item_name | pt_2weeks | pt_forever | rating |
|---|---|---|---|---|---|---|
| 2794716 | ...5400 | 45770 | Dead Rising 2: Off the Record | 0 | 72 | 3 |
| 2794717 | ...5400 | 46510 | Syberia 2 | 0 | 40 | 2 |
| 2794718 | ...5400 | 466500 | 35MM | 0 | 19 | 1 |
| 2794719 | ...5400 | 55230 | Saints Row: The Third | 0 | 1029 | 5 |
| 2794720 | ...5400 | 6300 | Dreamfall: The Longest Journey | 57 | 68 | 2 |
| 2794721 | ...5400 | 6310 | The Longest Journey | 42 | 42 | 2 |
| 2794722 | ...5400 | 72850 | The Elder Scrolls V: Skyrim | 0 | 2842 | 5 |
| 2794723 | ...5400 | 7670 | BioShock | 0 | 219 | 4 |
| 2794724 | ...5400 | 8850 | BioShock 2 | 0 | 131 | 3 |
| 2794725 | ...5400 | 8870 | BioShock Infinite | 0 | 438 | 4 |

5 APPROACHES TO RECOMMENDATION

There are three common types of methods for rec-

ommendation systems in the literature: content-based

filtering, collaborative filtering, and hybrid filtering.

In this paper, we focus on collaborative filtering. In collaborative filtering, recommendations for each user are generated by comparing the user's preferences with those of other users who have rated items similarly (Shah et al., 2017). A common approach is to split the dataset into two sets: the training set and the test set. A recommendation model is built from the training set. The test set, in turn, is subdivided into two: the query set and the target set. Based on the query set, the model suggests items or predicts ratings for the items in the target set. To implement the various recommendation algorithms, we use Surprise (Simple Python Recommendation System Engine), a Python library for recommender systems (Hug, 2020). This library provides several recommendation algorithms and tools to evaluate, analyze, and compare algorithms.

5.1 Nearest Neighbors-based Algorithms

Neighborhood-based algorithms use user-user sim-

ilarity or item-item similarity to make recommen-

dations from a ratings matrix (Aggarwal, 2016).

KNNBasic is a neighborhood-based collaborative filtering algorithm. The concept of neighborhood implies that we need to determine similar users or similar items to make predictions. The prediction $\hat{r}_{ui}$ is defined in Equation 1, where sim(u, v) can be calculated based on the cosine similarity, Pearson's correlation coefficient, or the mean squared difference similarity. $\hat{r}_{ui}$ indicates a predicted rating, as opposed to one that has already been observed in the original rating matrix. $N_i^k(u)$ represents the set of the k users closest to the target user u who have rated item i.

$$\hat{r}_{ui} = \frac{\sum_{v \in N_i^k(u)} \mathrm{sim}(u, v) \cdot r_{vi}}{\sum_{v \in N_i^k(u)} \mathrm{sim}(u, v)} \tag{1}$$
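Equation 1 can be illustrated with a small, self-contained sketch. It is not the Surprise implementation: the data layout (a dict mapping each user to a dict of item ratings), the function names, the default value of k, and the choice to compute the cosine similarity only over co-rated items and to ignore non-positive similarities are assumptions made for the example.

```python
import math

def cosine_sim(ratings, u, v):
    """Cosine similarity between users u and v over co-rated items (0.0 if none)."""
    common = set(ratings[u]) & set(ratings[v])
    if not common:
        return 0.0
    dot = sum(ratings[u][i] * ratings[v][i] for i in common)
    norm_u = math.sqrt(sum(ratings[u][i] ** 2 for i in common))
    norm_v = math.sqrt(sum(ratings[v][i] ** 2 for i in common))
    return dot / (norm_u * norm_v)

def knn_basic_predict(ratings, u, i, k=10):
    """Equation 1: similarity-weighted mean of the k nearest neighbours' ratings of i."""
    # Candidate neighbours are the other users who rated item i.
    neighbours = [(cosine_sim(ratings, u, v), r[i])
                  for v, r in ratings.items() if v != u and i in r]
    top_k = sorted(neighbours, key=lambda pair: pair[0], reverse=True)[:k]
    top_k = [(s, r) for s, r in top_k if s > 0]
    denom = sum(s for s, _ in top_k)
    if denom == 0:
        return None  # no usable neighbour: the sketch makes no prediction
    return sum(s * r for s, r in top_k) / denom
```

With two equally similar neighbours who rated the target item 4 and 2, the prediction is their plain average, 3.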

KNNWithMeans is identical to KNNBasic, but takes into account the average ratings of each user. The weighted average of the mean-centered rating of an item in the top-k peer group of the target user u is used to provide a mean-centered prediction. The average rating of the target user is then added back to this prediction to provide a raw rating prediction $\hat{r}_{ui}$ of the target user u for item i. There is also an item-based version of this algorithm, in which we check the k closest items rated by user u and use their ratings and similarities to item i to predict the rating of item i (Mittal and Subraveti, 2017). Equation 2 is written in this item-based form, where $\mu_i$ is the mean rating of item i and $N_u^k(i)$ is the set of the k items rated by user u that are closest to item i.

$$\hat{r}_{ui} = \mu_i + \frac{\sum_{j \in N_u^k(i)} \mathrm{sim}(i, j) \cdot (r_{uj} - \mu_j)}{\sum_{j \in N_u^k(i)} \mathrm{sim}(i, j)} \tag{2}$$
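A minimal sketch of the item-based, mean-centered prediction of Equation 2 follows. It assumes that item similarities are already available in a dict `sim` keyed by (i, j) pairs, that item i has at least one rating in the data, and that non-positive similarities are ignored; these choices and the function names are illustrative, not taken from the paper or from Surprise.

```python
def item_means(ratings):
    """Mean rating of every item in a dict user -> {item: rating}."""
    totals, counts = {}, {}
    for user_ratings in ratings.values():
        for item, r in user_ratings.items():
            totals[item] = totals.get(item, 0.0) + r
            counts[item] = counts.get(item, 0) + 1
    return {item: totals[item] / counts[item] for item in totals}

def knn_with_means_predict(ratings, sim, u, i, k=10):
    """Equation 2: item mean plus the weighted mean-centered ratings of similar items."""
    mu = item_means(ratings)
    # Items rated by u (other than i), most similar to i first.
    candidates = sorted(((sim.get((i, j), 0.0), j) for j in ratings[u] if j != i),
                        key=lambda pair: pair[0], reverse=True)[:k]
    candidates = [(s, j) for s, j in candidates if s > 0]
    denom = sum(s for s, _ in candidates)
    if denom == 0:
        return mu[i]  # no similar item rated by u: fall back to the item mean
    return mu[i] + sum(s * (ratings[u][j] - mu[j]) for s, j in candidates) / denom
```

The raw value is not clipped to the 1 to 5 scale here; a complete system would typically do so before reporting a rating.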

5.2 Algorithms based on Matrix Factorization

In its basic form, matrix factorization characterizes

items and users by vectors of factors inferred from

item rating patterns. A high correspondence be-

tween item and user factors leads to a recommenda-

tion. These algorithms have become popular in recent

years, combining good scalability with predictive ac-

curacy. In addition, they offer a lot of flexibility to

model various real-life situations. Recommendation

systems rely on different types of input data, which

are usually placed in a matrix with one dimension rep-

resenting the users and the other dimension represent-

ing the items of interest. The most convenient data is

high quality explicit ratings, which includes explicit

input from users about their interest in products (Koren et al., 2009). The Singular Value Decomposition (SVD) maps users and items to a common latent factor space of dimensionality f, so that user-item interactions are modeled as inner products in that space. The latent space attempts to explain ratings by characterizing products and users in terms of factors automatically inferred from user feedback. Thus, each item i is associated with a vector $q_i \in \mathbb{R}^f$, and each user u is associated with a vector $p_u \in \mathbb{R}^f$. For a given item i, the elements of $q_i$ measure the extent to which the item has these factors, positive or negative. For a given user u, the elements of $p_u$ measure the extent of interest the user has in items that are high in the corresponding factors; again, they can be positive or negative. The resulting scalar product, $q_i^T p_u$, captures the interaction between user u and item i, i.e., the user's overall interest in the features of the item. The final rating is created by also adding baseline predictors that depend only on the user or item. Equation 3 shows how the prediction is calculated, where $\mu$ is the global average, $b_u$ is the user tendency, $b_i$ is the item tendency, and $q_i^T p_u$ is the interaction between the user and the item (Koren et al., 2009).

$$\hat{r}_{ui} = \mu + b_u + b_i + q_i^T p_u \tag{3}$$

SVD++ is an extension of SVD that takes implicit ratings into account. In cases where independent implicit feedback is absent, a significant signal can be captured by considering which items users rate, regardless of their rating value. This has led to several methods that model a user's factors by the identity of the items he rated. One of these is SVD++, which has been shown to perform better than SVD. For this purpose, a second set of item factors is added, relating each item i to a vector of factors $y_i \in \mathbb{R}^f$. These new item factors are used to characterize users based on the set of items they have rated. The prediction is given by Equation 4, where $R_u$ is the set of items rated by user u, and u is modeled as $p_u + |R_u|^{-\frac{1}{2}} \sum_{j \in R_u} y_j$. $p_u$ is learned from the provided explicit ratings.

$$\hat{r}_{ui} = \mu + b_u + b_i + q_i^T \left( p_u + |R_u|^{-\frac{1}{2}} \sum_{j \in R_u} y_j \right) \tag{4}$$
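The prediction rules of Equations 3 and 4 can be written as a few lines of plain Python. This sketch covers only the prediction step, with all parameters assumed to be already learned; the function name `svdpp_predict` and the convention of passing the vectors $y_j$ as a list are illustrative. When the list is empty, the implicit term vanishes and the function reduces to the SVD prediction of Equation 3.

```python
def svdpp_predict(mu, b_u, b_i, q_i, p_u, y_rated):
    """Equation 4; y_rated holds the implicit factor vectors y_j for j in R_u."""
    f = len(q_i)
    if y_rated:
        norm = len(y_rated) ** -0.5  # |R_u|^(-1/2)
        implicit = [norm * sum(y[d] for y in y_rated) for d in range(f)]
    else:
        implicit = [0.0] * f         # no rated items: plain SVD (Equation 3)
    user_vec = [p + z for p, z in zip(p_u, implicit)]
    return mu + b_u + b_i + sum(q * x for q, x in zip(q_i, user_vec))

# Hypothetical parameter values, chosen only to make the arithmetic easy to follow.
svd_only = svdpp_predict(3.0, 0.5, -0.25, [1.0, 2.0], [0.5, 0.25], [])
with_implicit = svdpp_predict(3.0, 0.5, -0.25, [1.0, 2.0], [0.5, 0.25],
                              [[0.5, 0.0]] * 4)
```

In the example, the four implicit vectors shift the user representation from (0.5, 0.25) to (1.5, 0.25), raising the prediction by one rating point.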

Non-Negative Matrix Factorization (NMF) is a matrix factorization algorithm in which the user-item matrix is decomposed into user and item factors that have non-negative values. The user and item factors are initialized with random values and the optimization is done by stochastic gradient descent (SGD). This algorithm is highly dependent on the initial values of the factors. User and item baselines can also be incorporated, but the model then becomes susceptible to overfitting, which can, however, be controlled by a good choice of the regularization parameter (Luo et al., 2014). The prediction is given by Equation 5.

$$\hat{r}_{ui} = q_i^T p_u \tag{5}$$

5.3 SlopeOne

SlopeOne works on the intuitive principle of a popularity differential between items for users. In a pairwise fashion, it determines how much better one item is liked than another. One way to measure this differential is to simply subtract the average ratings of the two items (Jannach et al., 2011). In turn, this difference can be used to predict another user's rating of one of these items, given the rating of the other. Many such differentials exist in a training set for each unknown rating, and an average of these differentials can be used. Thus, given a training set and any two items i and j with ratings $r_{ui}$ and $r_{uj}$, respectively, in the evaluation of some user u, the average deviation of item i from item j is given by Equation 6.

$$\mathrm{dev}(i, j) = \frac{1}{|U_{ij}|} \sum_{u \in U_{ij}} (r_{ui} - r_{uj}) \tag{6}$$

Any evaluation of a user u that does not contain both $r_{ui}$ and $r_{uj}$ is not included in the sum: $U_{ij}$ is the set of all users that rated both items i and j. The prediction is given by Equation 7 (Lemire and Maclachlan, 2005), where $\mu_u$ is the mean rating of user u and $R_i(u)$ is the set of items j rated by user u that have at least one rating user in common with item i.

$$\hat{r}_{ui} = \mu_u + \frac{1}{|R_i(u)|} \sum_{j \in R_i(u)} \mathrm{dev}(i, j) \tag{7}$$
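Equations 6 and 7 translate almost directly into code. The sketch below assumes the same dict-of-dicts rating layout used for illustration elsewhere (user to item to rating) and recomputes the deviations on every call, which a practical implementation would precompute; the function name and the fallback to the user mean when no deviation is available are assumptions.

```python
def slope_one_predict(ratings, u, i):
    """Equations 6 and 7 on a dict user -> {item: rating}."""
    user_ratings = ratings[u]
    mu_u = sum(user_ratings.values()) / len(user_ratings)
    devs = []
    for j in user_ratings:
        if j == i:
            continue
        # U_ij: users who rated both i and j.
        diffs = [r[i] - r[j] for r in ratings.values() if i in r and j in r]
        if diffs:
            devs.append(sum(diffs) / len(diffs))  # dev(i, j), Equation 6
    if not devs:
        return mu_u  # R_i(u) is empty: fall back to the user's mean rating
    return mu_u + sum(devs) / len(devs)           # Equation 7
```

For instance, if two other users each rated item x two points above item y, and the target user has rated only y with a 3, the predicted rating of x is 3 + 2 = 5.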

5.4 Co-clustering

Clustering is an unsupervised learning technique that seeks to group similar objects together. The main idea of this algorithm is to simultaneously obtain user and item neighborhoods by co-clustering and generate predictions based on the average ratings of the co-clusters (user-item neighborhoods), taking into account individual user and item trends. Users and items are assigned to clusters $C_u$ and $C_i$, and to co-clusters $C_{ui}$. The prediction is given by Equation 8, where $\overline{C_{ui}}$ is the average rating of co-cluster $C_{ui}$, $\overline{C_u}$ and $\overline{C_i}$ are the average ratings of clusters $C_u$ and $C_i$, respectively, and $\mu_u$ and $\mu_i$ are the mean ratings of user u and item i (Lemire and Maclachlan, 2005).

$$\hat{r}_{ui} = \overline{C_{ui}} + (\mu_u - \overline{C_u}) + (\mu_i - \overline{C_i}) \tag{8}$$
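The prediction step of Equation 8 is sketched below for fixed cluster assignments. Learning the assignments is the iterative part of the algorithm and is not shown; the dicts `user_cluster` and `item_cluster`, the function names, and the assumption that the relevant co-cluster contains at least one rating are all illustrative.

```python
def mean(values):
    values = list(values)
    return sum(values) / len(values)

def cocluster_predict(ratings, user_cluster, item_cluster, u, i):
    """Equation 8, given dicts user -> cluster id and item -> cluster id."""
    triples = [(v, j, r) for v, items in ratings.items() for j, r in items.items()]
    cu, ci = user_cluster[u], item_cluster[i]
    # Average rating of the co-cluster, of u's user cluster, and of i's item cluster.
    avg_cocluster = mean(r for v, j, r in triples
                         if user_cluster[v] == cu and item_cluster[j] == ci)
    avg_user_cluster = mean(r for v, j, r in triples if user_cluster[v] == cu)
    avg_item_cluster = mean(r for v, j, r in triples if item_cluster[j] == ci)
    mu_u = mean(ratings[u].values())
    mu_i = mean(r for v, j, r in triples if j == i)
    return avg_cocluster + (mu_u - avg_user_cluster) + (mu_i - avg_item_cluster)
```

The two bracketed corrections express how far the user and the item deviate from the averages of their own clusters.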

6 ANALYSIS AND DISCUSSION OF RESULTS

The algorithms were evaluated in terms of MAE, RMSE, Precision@k, Recall@k and F1@k. The dataset has a total of 2,794,726 records, each with unique information about a user-item interaction. This set was divided in an 80/20 proportion: 80% for the training set (2,235,780 records) and 20% for the test set (558,946 records). The test set was subdivided into a query set (10%) and a target set (10%).

6.1 Evaluation Metrics

Recommendation system research has used various types of metrics to evaluate the quality of a recommendation system. We use metrics that calculate the error between the actual rating and the predicted rating, such as MAE and RMSE. From these metrics it is possible to infer how close the predicted values are to the actual ratings given by users. However, these error metrics are not suitable for measuring the classification performance of recommendation systems, that is, whether the items placed in a recommendation list are actually relevant to the user. Therefore, the study also includes an evaluation based on the Precision@k, Recall@k and F1@k ranking metrics. These metrics take into account the number of true positives (TP), false negatives (FN), false positives (FP) and true negatives (TN) present in the recommendation list.

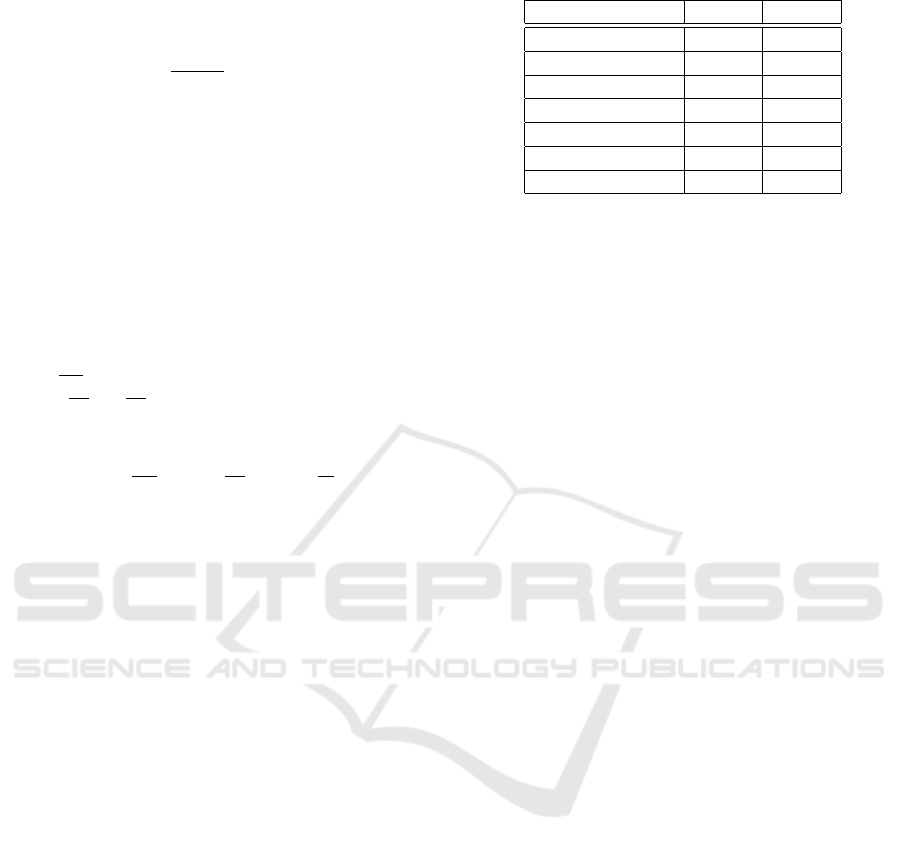

Table 3: Results achieved for each one of the methods.

| Algorithm | RMSE | MAE |
|---|---|---|
| KNNBasic | 1.2270 | 1.0159 |
| KNNWithMeans | 1.2483 | 1.0356 |
| SVD | 1.2386 | 1.0049 |
| SVD++ | 1.2179 | 0.9727 |
| NMF | 1.2229 | 1.0011 |
| SlopeOne | 1.1977 | 0.9831 |
| CoClustering | 1.2354 | 1.0212 |

MAE: The Mean Absolute Error is the average deviation of the predictions from the actual user-specified values. It is the average, over the test sample, of the absolute differences between the prediction and the actual observation, where all individual differences have equal weight. The smaller the MAE, the more accurately the recommendation engine predicts the users' ratings (Sarwar et al., 1998);

RMSE: The Root Mean Square Error is the square root of the mean of the squared differences between predicted and actual ratings (Afoudi et al., 2019);

Precision@k: the proportion of recommended items in the top-k set that are relevant. A high precision@k score indicates that most of the recommendations are relevant;

Recall@k: the fraction of all relevant items that appear among the top-k recommendations;

F1@k: a way to combine the precision@k and recall@k of the model, defined as the harmonic mean of the two.
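The five metrics defined above can be sketched as follows. The input is a list of (predicted, actual) rating pairs; for the ranking metrics, the list belongs to a single user and the per-user values would then be averaged. The relevance threshold of 3.5 is a hypothetical choice, since the text does not state which ratings count as relevant, and applying the same threshold to predicted ratings (to decide what counts as recommended) is likewise an assumption.

```python
import math

def mae(pairs):
    """Mean absolute error over (predicted, actual) pairs."""
    return sum(abs(p - a) for p, a in pairs) / len(pairs)

def rmse(pairs):
    """Root mean square error over (predicted, actual) pairs."""
    return math.sqrt(sum((p - a) ** 2 for p, a in pairs) / len(pairs))

def precision_recall_f1_at_k(user_pairs, k=10, threshold=3.5):
    """Precision@k, recall@k and F1@k for one user's (predicted, actual) pairs."""
    ranked = sorted(user_pairs, key=lambda pa: pa[0], reverse=True)
    n_relevant = sum(1 for _, a in ranked if a >= threshold)
    top_k = ranked[:k]
    n_recommended = sum(1 for p, _ in top_k if p >= threshold)
    n_hits = sum(1 for p, a in top_k if p >= threshold and a >= threshold)
    precision = n_hits / n_recommended if n_recommended else 0.0
    recall = n_hits / n_relevant if n_relevant else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1
```

As a check of the harmonic mean, the precision@5 and recall@5 reported for KNNBasic in Table 4 (0.7374 and 0.2866) give 2 · 0.7374 · 0.2866 / (0.7374 + 0.2866) ≈ 0.4128, which matches the F1@5 value in the same table.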

6.2 Results

We calculated the RMSE and MAE to evaluate the

performance, for all the collaborative filtering algo-

rithms we set out to implement, using k-fold cross-

validation with k = 5.

Table 3 shows the results for each algorithm. We find that for this dataset the algorithms have similar performances. However, the best results were achieved by SlopeOne and SVD++. Considering the RMSE, SlopeOne exhibited the best result when compared to the other algorithms, followed by SVD++, with the predicted ratings deviating from the actual ratings by ≈ 1.1977 for SlopeOne and ≈ 1.2179 for SVD++. Concerning the MAE, SVD++ showed the lowest value (≈ 0.9727), followed by SlopeOne (≈ 0.9831). KNNWithMeans had the worst results in both metrics. The distribution of the predicted ratings on the test set is noticeably different for all algorithms, not reflecting the actual distribution of implicit ratings.

Table 4: Values of precision@k, recall@k, and F1@k, with k = 5, 10, 20.

| Algorithm | precision@5 | precision@10 | precision@20 | recall@5 | recall@10 | recall@20 | F1@5 | F1@10 | F1@20 |
|---|---|---|---|---|---|---|---|---|---|
| KNNBasic | 0.7374 | 0.7144 | 0.7064 | 0.2866 | 0.3718 | 0.3954 | 0.4128 | 0.4891 | 0.5070 |
| KNNWithMeans | 0.7293 | 0.7043 | 0.6955 | 0.2767 | 0.3595 | 0.3841 | 0.4012 | 0.4760 | 0.4951 |
| SVD | 0.7619 | 0.7162 | 0.7003 | 0.3367 | 0.4609 | 0.5028 | 0.4670 | 0.5608 | 0.5854 |
| SVD++ | 0.7757 | 0.7309 | 0.7145 | 0.3397 | 0.4640 | 0.5095 | 0.4725 | 0.5677 | 0.5948 |
| NMF | 0.7525 | 0.7073 | 0.6920 | 0.3275 | 0.4470 | 0.4882 | 0.4564 | 0.5478 | 0.5725 |
| SlopeOne | 0.7840 | 0.7336 | 0.7183 | 0.3391 | 0.4535 | 0.4920 | 0.4735 | 0.5605 | 0.5840 |
| Co-Clustering | 0.7713 | 0.7288 | 0.7150 | 0.3174 | 0.4229 | 0.4558 | 0.4498 | 0.5352 | 0.5567 |

Table 4 compares precision@k, recall@k, and F1@k for different values of k on the target test set. Concerning precision, SlopeOne achieved the best result: when considering precision@5, 78% of the recommendations made by SlopeOne are relevant to the user (72% for precision@20). Concerning recall, we found that SVD++ performed better (≈ 0.5 for recall@20), although there is not much difference from SVD. Overall, we observed that SlopeOne and SVD++ achieved the best results for all metrics. However, the training and testing time may be relevant for certain applications. Concerning the average training time, SlopeOne takes about 24 seconds while SVD++ takes about 4904 seconds (≈ 200x), which is a significant difference. Concerning the testing time, SlopeOne takes about 77 seconds while SVD++ takes 110 seconds, being about 42% slower.

7 CONCLUSIONS AND RECOMMENDATIONS

In this work, we addressed the topic of recommen-

dation systems in the video game domain, based on

implicit user information. We compared recommendation algorithms based on collaborative filtering, using user data from the Steam platform. Before training the recommendation algorithms, we performed data pre-processing tasks and converted the implicit information into explicit ratings. This conversion was based on the use of total playing time as an implicit rating, transforming it into an explicit rating ranging from 1 to 5.

We explored seven recommendation algorithms, using the Surprise library, with 5-fold cross-validation. To assess the performance of the different recommendation approaches when using implicit

information, we used RMSE, MAE, precision@k,

recall@k and F1@k, common metrics used in the

evaluation of recommendation systems. The results

show that SlopeOne and SVD++ were the best per-

forming recommendation algorithms. Taking into ac-

count the computational cost of each recommendation

algorithm, SlopeOne seems to be the top contender.

RQ1: What is the performance of different collaborative filtering recommendation approaches when applied to implicit data? The results show that, for the dataset used, there is no significant difference between the explored algorithms.

RQ2: Can users' playing time serve as an adequate implicit representation of the users' preferences? The results show that it is possible to use playtime to infer explicit ratings for making recommendations in the video game domain, and specifically on the Steam platform.

The presented algorithms, besides being easy to apply and computationally cheaper than more complex algorithms, can produce good recommendations. For future work, it is important to explore other methods of transforming playing time into explicit ratings. Another possibility is to explore other types of implicit expressions of preference, assuming that they are available.

ACKNOWLEDGEMENTS

This work was supported by PT2020 project number 39703 (AppRecommender), and by national funds through FCT – Fundação para a Ciência e a Tecnologia with reference UIDB/50021/2020.

REFERENCES

Afoudi, Y., Lazaar, M., and Al Achhab, M. (2019). Collab-

orative filtering recommender system. In Advances

in Intelligent Systems and Computing, volume 915,

pages 332–345. Springer.

Aggarwal, C. C. (2016). Recommender Systems: The Textbook. Springer Publishing Company, Incorporated, 1st edition.


Anwar, S. M., Shahzad, T., Sattar, Z., Khan, R., and Majid,

M. (2017). A game recommender system using col-

laborative filtering (GAMBIT). Proceedings of 2017

14th International Bhurban Conference on Applied

Sciences and Technology, IBCAST 2017, pages 328–

332.

Bertens, P., Guitart, A., Chen, P. P., and Perianez, A. (2018).

A Machine-Learning Item Recommendation System

for Video Games. In IEEE Conference on Computational Intelligence and Games, CIG, volume 2018-Augus.

Buder, J. and Schwind, C. (2012). Learning with person-

alized recommender systems: A psychological view.

Computers in Human Behavior, 28(1):207–216.

Cheuque, G., Guzmán, J., and Parra, D. (2019). Recommender systems for online video game platforms: The

case of steam. The Web Conference 2019 - Compan-

ion of the World Wide Web Conference, WWW 2019,

2:763–771.

Hug, N. (2020). Surprise: A python library for recom-

mender systems. Journal of Open Source Software,

5(52):2174.

Jannach, D., Zanker, M., Felfernig, A., and Friedrich, G.

(2011). Recommender Systems: An Introduction,

volume 91. Cambridge University Press, New York.

Joorabloo, N., Jalili, M., and Ren, Y. (2019). A new temporal recommendation system based on users’ similarity prediction. In Proceedings of the 11th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management - KDIR, pages 555–560. INSTICC, SciTePress.

Koren, Y., Bell, R., and Volinsky, C. (2009). Matrix factor-

ization techniques for recommender systems. Com-

puter, 42(8):30–37.

Lemire, D. and Maclachlan, A. (2005). Slope one predictors

for online rating-based collaborative filtering. Pro-

ceedings of the 2005 SIAM International Conference

on Data Mining, SDM 2005, pages 471–475.

Luo, X., Zhou, M., Xia, Y., and Zhu, Q. (2014). An

efficient non-negative matrix-factorization-based ap-

proach to collaborative filtering for recommender sys-

tems. IEEE Transactions on Industrial Informatics,

10(2):1273–1284.

Mittal, A. and Subraveti, S. (2017). Comparison

of Recommendation Models On the Amazon Au-

tomotive Dataset. https://github.com/abhaymittal/

Recommendations-on-Amazon-Automotive-Dataset.

Parra, D. and Amatriain, X. (2011). Walk the Talk: Analyz-

ing the relation between implicit and explicit feedback

for preference elicitation. Proc. of the 19th international conference on User Modeling, Adaption, and

Personalization, pages 255–268.

Pathak, A., Gupta, K., and McAuley, J. (2017). Generating

and personalizing bundle recommendations on steam.

SIGIR 2017 - Proceedings of the 40th International

ACM SIGIR Conference on Research and Develop-

ment in Information Retrieval, pages 1073–1076.

Pérez-Marcos, J., Martín-Gómez, L., Jiménez-Bravo, D. M., López, V. F., and Moreno-García, M. N.

(2020). Hybrid system for video game recommen-

dation based on implicit ratings and social networks.

Journal of Ambient Intelligence and Humanized Com-

puting.

Ricci, F., Rokach, L., Shapira, B., and Kantor, P. B. (2011).

Recommender Systems Handbook. Springer.

Sarwar, B. M., Konstan, J. A., Borchers, A., Herlocker,

J., Miller, B., and Riedl, J. (1998). Using filtering

agents to improve prediction quality in the grouplens

research collaborative filtering system. In Proc. of the

1998 ACM Conf. on Computer Supported Cooperative

Work, pages 345–354. ACM.

Schafer, B. J., Frankowski, D., Herlocker, J., and Sen, S.

(2006). Collaborative filtering recommender systems.

Research Journal of Applied Sciences, Engineering

and Technology, 5(16):4168–4182.

Shah, K., Salunke, A., Dongare, S., and Antala, K. (2017).

Recommender systems: An overview of different ap-

proaches to recommendations. In Proceedings of 2017

International Conference on Innovations in Informa-

tion, Embedded and Communication Systems, ICI-

IECS 2017, pages 1–4.

Sifa, R., Drachen, A., and Bauckhage, C. (2015). Large-

scale cross-game player behavior analysis on steam.

Proceedings of the 11th AAAI Conference on Arti-

ficial Intelligence and Interactive Digital Entertain-

ment, AIIDE 2015, 2015-Novem:198–204.

Wang, D., Moh, M., and Moh, T. S. (2020). Using deep

learning and steam user data for better video game

recommendations. ACMSE 2020 - Proceedings of the

2020 ACM Southeast Conference, pages 154–159.

Yi, X., Hong, L., Zhong, E., Liu, N. N., and Rajan, S.

(2014). Beyond clicks: Dwell time for personaliza-

tion. RecSys 2014 - Proceedings of the 8th ACM Con-

ference on Recommender Systems, pages 113–120.

KDIR 2021 - 13th International Conference on Knowledge Discovery and Information Retrieval
