Developing a Machine Learning Workflow to Explain Black-box Models

for Alzheimer’s Disease Classification

Louise Bloch

1,2 a

and Christoph M. Friedrich

1,2 b

1

Department of Computer Science, University of Applied Sciences and Arts Dortmund,

Emil-Figge-Str. 42, 44227 Dortmund, Germany

2

Institute for Medical Informatics, Biometry and Epidemiology (IMIBE), University Hospital Essen, Essen, Germany

Keywords:

Interpretable Machine Learning, Alzheimer’s Disease Classification, Shapley Values, Alzheimer’s Disease

Neuroimaging Initiative, Australian Imaging and Lifestyle Flagship Study of Ageing.

Abstract:

Many research articles used difficult-to-interpret black-box Machine Learning (ML) models to classify

Alzheimer’s disease (AD) without examining their biological relevance. In this article, an ML workflow was

developed to interpret black-box models based on Shapley values. This workflow enabled the model-agnostic

visualization of complex relationships between model features and predictions and also the explanation of

individual predictions, which is important in clinical practice. To demonstrate this workflow, eXtreme Gradi-

ent Boosting (XGBoost) and Random Forest (RF) classifiers were trained for AD classification. All models

were trained on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) or Australian Imaging and Lifestyle

flagship study of Ageing (AIBL) dataset and were validated for independent test datasets of both cohorts. The

results showed improved performances for black-box models in comparison to simple Classification and Re-

gression Trees (CARTs). For the classification of Mild Cognitive Impairment (MCI) conversion and the ADNI

training dataset, the best model achieved a classification accuracy of 71.03 % for the ADNI test dataset and

67.65 % for the entire AIBL dataset. This RF used a logical long-term memory test, the count of Apolipopro-

tein E ε4 (ApoEε4) alleles and the volume of the left hippocampus as the most important features.

1 INTRODUCTION

Alzheimer’s Disease (AD) is a neurodegenerative

disease (Alzheimer’s Association, 2020), the most

frequent cause of dementia and a globally grow-

ing health problem (Patterson, 2018). Small brain

changes begin decades before clinical symptoms were

noted (Lloret et al., 2019). The early identification of

patients at risk is important to recruit and monitor sub-

jects for therapy studies as there currently is no causal

therapy (Alzheimer’s Association, 2020). Many ap-

proaches investigated the early AD diagnosis using

Machine Learning (ML). Some approaches used in-

terpretable models like Decision Trees (DTs) or logis-

tic regression to investigate general associations (Li

et al., 2020; Mofrad et al., 2018). However, black-

box models like eXtreme Gradient Boosting (XG-

Boost) (Chen and Guestrin, 2016), Random Forests

(RF) (Breiman, 2001) or Convolutional Neural Net-

works (CNNs) (LeCun et al., 2015) often achieved

a

https://orcid.org/0000-0001-7540-4980

b

https://orcid.org/0000-0001-7906-0038

improved performances (Bloch and Friedrich, 2019;

Grassi et al., 2019) but were challenging to interpret.

Moreover, most AD ML models lacked for external

validation (Samper-Gonz

´

alez et al., 2018).

Interpretable ML was developed to explain black-

box models. This is relevant in AD as it was not com-

pletely understood yet. Additionally, interpretable

ML enables the inspection of the biological relevance

of black-box ML models. There have been some ar-

ticles using interpretable ML in AD diagnosis. For

example, (Pelka et al., 2020) trained Long Short-

Term Memory (LSTM) (Hochreiter and Schmidhu-

ber, 1997) based Recurrent Neural Networks (RNN)

(Rumelhart et al., 1986) to classify Cognitive Normal

(CN) vs. Mild Cognitive Impaired (MCI) subjects.

The paper compared different techniques to fuse so-

ciodemographic and genetic data with Magnetic Res-

onance Imaging (MRI). The models were evaluated

for an AD subset (Dlugaj et al., 2010) of the Heinz

Nixdorf Risk Factors Evaluation of Coronary Calci-

fication and Lifestyle (RECALL) (HNR) (Schmer-

mund et al., 2002) (61 MCI and 59 CN) and the

Bloch, L. and Friedrich, C.

Developing a Machine Learning Workflow to Explain Black-box Models for Alzheimer’s Disease Classification.

DOI: 10.5220/0010211300870099

In Proceedings of the 14th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2021) - Volume 5: HEALTHINF, pages 87-99

ISBN: 978-989-758-490-9

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

87

Alzheimer’s Disease Neuroimaging Initiative (ADNI)

(Petersen et al., 2010) study (397 MCI and 227 CN).

Gradient-weighted Class Activation Mapping (Grad-

CAM) (Selvaraju et al., 2017) was used to visually ex-

plain individual model decisions. Initial observations

showed a focus on biologically plausible regions.

(Das et al., 2019) presented a new interpretable

model, based on distinct weighted rules. The model

was evaluated for 151 subjects of the ADNI cohort

(97 AD and 54 CN). The framework was trained in

two stages, the first stage used plasma features to train

the interpretable model. Subjects with an unclear pre-

diction were propagated to the second stage, where a

Support Vector Machine (SVM) (Cortes and Vapnik,

1995) was trained using invasive Cerebrospinal Fluid

(CSF) markers. The evaluation included both, Cross-

Validation (CV) and an independent test dataset. For

the test dataset an Area Under the Receiver Operating

characteristics Curve (AUC) of 0.81 was reached.

(Hammond et al., 2020) used RF feature im-

portance to examine the predictive influence of β-

amyloid plaques, tau tangles and neurodegeneration

during the disease progress. The experiments were

performed for 405 ADNI subjects (148 CN, 147

MCI and 110 AD). β-amyloid Positron Emission

Tomography (PET) was used to detect β-amyloid

plaques, invasive CSF features surrogated tau-tangles

and MRI and Fluorodeoxyglucose (FDG) PET scans

were used to determine neurodegeneration. Models

trained to classify the early AD stages preferred fea-

tures representing tau tangles and β-amyloid-plaques,

whereas models for later stage classification favoured

surrogates for neurodegeneration. The observations

were reproduced using SHapley Additive exPlana-

tions (SHAP) (Lundberg and Lee, 2017) and Gradient

Tree Boosting (GTB) (Friedman, 2001). The RF clas-

sifier and the entire feature set reached accuracies of

73.17 %, 71.01 %, and 90.34 % for the CN vs. MCI,

MCI vs. AD, and CN vs. AD classifications.

(Rieke et al., 2018) compared the four heatmap

visualisation methods sensitivity analysis (Simonyan

et al., 2014), guided backpropagation (Springenberg

et al., 2015), occlusion (Zeiler and Fergus, 2014) and

brain area occlusion inspired by (Yang et al., 2018) for

3D-CNNs. The CNN model was trained using 969

MRI scans of 344 ADNI subjects (151 CN and 193

AD). It is important to ensure independent training

and test datasets if multiple scans per subject were

used (Wen et al., 2020) which was unclear for this

article. Thus, the CV accuracy of 77 % ± 6 %, was

questionable. The heatmaps of all the methods were

focused on AD-related anatomical brain areas.

(Wang et al., 2019) introduced an interpretable

deep learning model, consisting of a Generative Ad-

versarial Network (GAN) (Goodfellow et al., 2014)

to extend the training dataset, a regression network

to generate feature vectors from adjacent visits and

a classification model. The regression model itera-

tively estimated the feature vector at a prospective

visit and the classification model does the final pre-

diction. Longitudinal volumetric MRI features were

used to classify 101 progressive MCI (pMCI) vs. 115

stable MCI (sMCI) ADNI subjects. The model out-

performed SVMs and artificial neural networks.

In this article, an ML workflow was developed, to

interpret black-box models based on model-agnostic

Shapley values. In comparison to classical feature im-

portance methods, the advantage was to obtain an in-

dividual explanation for each subject and to observe

complex relationships between the features and the

prediction. The workflow was evaluated by train-

ing XGBoost and RF models to inspect different AD

stages but was not limited to those models. Two

different cohorts were used for model training, the

ADNI and the Australian Imaging and Lifestyle flag-

ship study of Ageing (AIBL) (Ellis et al., 2009). Each

model was trained on one cohort and evaluated for the

independent test datasets of both cohorts. The train-

ing has been performed for two different non-invasive

feature sets. Section 2 describes the datasets and in-

terpretation methods. The ML workflow is delineated

in Section 3. The experimental results are explained

in Section 4. Section 5 finally discusses the results

and gives an outlook about future work.

2 MATERIALS AND METHODS

2.1 Dataset

Data used in the preparation of this article were ob-

tained from the ADNI (Petersen et al., 2010) and

the AIBL (Ellis et al., 2009) cohorts. The datasets

included 1700 ADNI and 612 AIBL subjects. The

ADNI dataset consisted of 512 CN, 853 MCI and

335 AD subjects. The MCI subjects were split into

two subsets. 401 Baseline (BL) MCI subjects had

no diagnostic changes in all visits and were classi-

fied as sMCI subjects. 319 BL MCI subjects con-

verted to AD at subsequent visits and were classified

as pMCI. BL MCI subjects, who reverted to CN for

at least one visit, pMCI subjects who reverted to MCI

and subjects with no follow-up diagnosis assigned to

none of these two classes. ADNI subjects from the

study phases ADNI-1 (809 subjects), ADNIGO (127

subjects) and ADNI-2 (764 subjects) were included.

The time between the BL and the final diagnosis for

sMCI and pMCI subjects ranged between 4.7 and

HEALTHINF 2021 - 14th International Conference on Health Informatics

88

Table 1: ADNI demographics at BL. The mean and standard deviation are given for all continuous variables.

n Age (in years) Gender/ females (in %) MMSE CDR ApoEε4 (0/1/2) (in %)

CN 512 74.2±5.8 51.8 29.1±1.1 0.0±0.0 (71.3 / 26.2 / 2.3)

MCI 853 73.1±7.6 40.8 27.6±1.8 0.5±0.0 (49.4 / 39.5 / 10.8)

sMCI 401 73.2±7.5 40.4 27.8±1.8 0.5±0.0 (56.9 / 33.9 / 9.2)

pMCI 319 74.0±7.1 40.1 27.0±1.7 0.5±0.0 (34.2 / 49.5 / 16.3)

AD 335 75.0±7.8 44.8 23.2±2.1 0.8±0.3 (33.1 / 47.2 / 19.1)

Σ 1700 73.8±7.2 44.9 27.2±2.7 0.4±0.3 (52.8 / 37.0 / 9.9)

156.2 months. The same inclusion criteria were ap-

plied to the AIBL cohort. This resulted in 447 CN,

94 MCI, 16 sMCI, 18 pMCI and 71 AD subjects and

the time between the BL and the final diagnosis for

sMCI and pMCI subjects ranged between 16.9 and

55.1 months. The demographic data of the ADNI and

AIBL datasets are summarized in Tables 1 and 2.

For each subject, the BL 1.5 T or 3 T T1-weighted

Magnetization-Prepared Rapid Gradient-Echo (MP-

RAGE) MRI scan was selected. In this study, fully

preprocessed ADNI scans (Jack et al., 2015) were

used. There are no preprocessed AIBL MRI scans

available, thus unprocessed scans were used. Volu-

metric features were extracted from the MRI scans

using FreeSurfer v6.0 (Fischl, 2012). Volumetric

features of 34 cortical areas per hemisphere of the

Desikan-Killiany atlas (Desikan et al., 2006), 34 sub-

cortical areas (Fischl et al., 2002) and the estimated

Total Intracranial Volume (eTIV) were extracted. The

volumetric features were normalized by eTIV as rec-

ommended for volumes in (Westman et al., 2012).

The experiments were performed using two fea-

ture sets. Feature Set 1 (FS1) included 103 MRI fea-

tures, age, gender and count of Apolipoprotein E ε4

(ApoEε4) alleles (106 features). Gender and ApoEε4

were coded as two and three binary dummy variables.

APOE4.X (X∈{0,1,2}) indicated if a subject had X

ApoEε4 alleles. PTGENDER.MALE specifies if the

subject was male and PTGENDER.FEMALE if it was

female. All dummy variables had a value of one, if

the expression is true, and zero otherwise. In Feature

Set 2 (FS2) Mini-Mental State Examination (MMSE),

Clinical Dementia Rating (CDR), and logical tests

to evaluate the long-term (Logical memory, delayed

(LDELTOTAL)) and the short-term memory (Logical

memory, immediate (LIMMTOTAL)) were added to

FS1 (110 features). The CDR score was excluded for

sMCI vs. pMCI classification due to small variance.

2.2 Interpretable ML

An overview of different methods to interpret ML

models can be found in (Molnar, 2019). Some mod-

els like DTs and logistic regression models are in-

terpretable by design. However, the interpretation

of black-box models, which often achieve better re-

sults, is more complicated. This article used model-

agnostic Shapley values. In contrast to the model-

specific approaches, it is suitable to explain any type

of ML model, and enabled comparability. Addition-

ally, Shapley values are local models, which explain

individual observations and thus achieve a high clini-

cal benefit and good performances.

2.2.1 Shapley Values

Shapley values (Shapley, 1953) are affiliated to coali-

tion game theory. The aim is to determine the effect

of a single feature of an observation on the overall

prediction. Shapley values explain the differences be-

tween the average model prediction and the prediction

of an observation by different features. Thus, Shap-

ley values are based on the additive linear explanation

model shown in equation 1. The model prediction

f (x) of an observation x is explained by the feature

effects Φ

j

and the average model prediction Φ

0

. x

0

is

a simplified representation of the observation x. For

tabular data, this is a binned binary feature represen-

tation. N is the number of simplified features.

f (x) = Φ

0

+

N

∑

j=1

Φ

j

x

0

j

(1)

Shapley values explain the overall prediction of an ob-

servation by fairly distributed feature effects. There-

fore, they are defined as the average contribution of

a feature expression for the prediction in all subsets,

described in equation 2. To calculate the Shapley

value Φ

i

of a feature i and an observation, it was re-

quired to determine all subsets S of the entire feature

set F. It was necessary to retrain and evaluate the

black-box model f

S

(S) for each subset S. The differ-

ences in model performance trained with ( f

S∪i

(S ∪ i))

and without ( f

S

(S)) the feature at interest i were cal-

culated. The Shapley value is the weighted average

difference of all subsets. The weights depend on the

total number of model features |F| and the number of

feature expressions |S| in the subset S. High weights

were assigned to both, subsets with few and with

many features, to support the estimation of the main

individual effects and the total effects.

Developing a Machine Learning Workflow to Explain Black-box Models for Alzheimer’s Disease Classification

89

Table 2: AIBL demographics at BL. The mean and standard deviation are given for all continuous variables.

n Age (in years) Gender/ females (in %) MMSE CDR ApoEε4 (0/1/2) (in %)

CN 447 72.5±6.2 57.0 28.7±1.2 0.0±0.1 (70.7 / 26.4 / 2.9)

MCI 94 75.3±7.0 46.8 27.1±2.2 0.5±0.1 (51.1 / 37.2 / 11.7)

sMCI 16 76.4±7.7 50.0 27.6±2.4 0.5±0.0 (56.2 / 37.5 / 6.2)

pMCI 18 78.0±7.1 50.0 26.8±2.0 0.5±0.0 (16.7 / 66.7 / 16.7)

AD 71 73.3±7.9 59.2 20.5±5.7 0.9±0.6 (32.4 / 49.3 / 18.3)

Σ 612 73.0±6.6 55.7 27.5±3.5 0.2±0.4 (63.2 / 30.7 / 6.0)

Φ

i

=

∑

S⊆F\{i}

|S|!(|F| − |S| −1)!

|F|!

f

S∪{i}

(S∪i)− f

S

(S)

(2)

The computational effort for the exact calculation of

Shapley values increases exponentially with the num-

ber of features. In this paper, Shapley sampling values

(

ˇ

Strumbelj and Kononenko, 2013) which are based on

Monte-Carlo sampling estimated the Shapley values

to avoid time-consuming repeated training and eval-

uation. The estimation of a Shapley value

b

Φ

i

(x) for

a feature i and an observation x is demonstrated in

equation 3. M is the number of Monte-Carlo simu-

lations and x

m

±i

is the observation x with some feature

expressions replaced by random values. In each simu-

lation, x

m

+i

and x

m

−i

are identical, except that the feature

i was randomly replaced in x

m

−i

and not in x

m

+i

. f is the

black-box model. Each Shapley sampling value is the

mean of the differences of M samples.

b

Φ

i

(x) =

1

M

M

∑

m=1

f (x

m

+i

) − f (x

m

−i

)

(3)

3 ML WORKFLOW

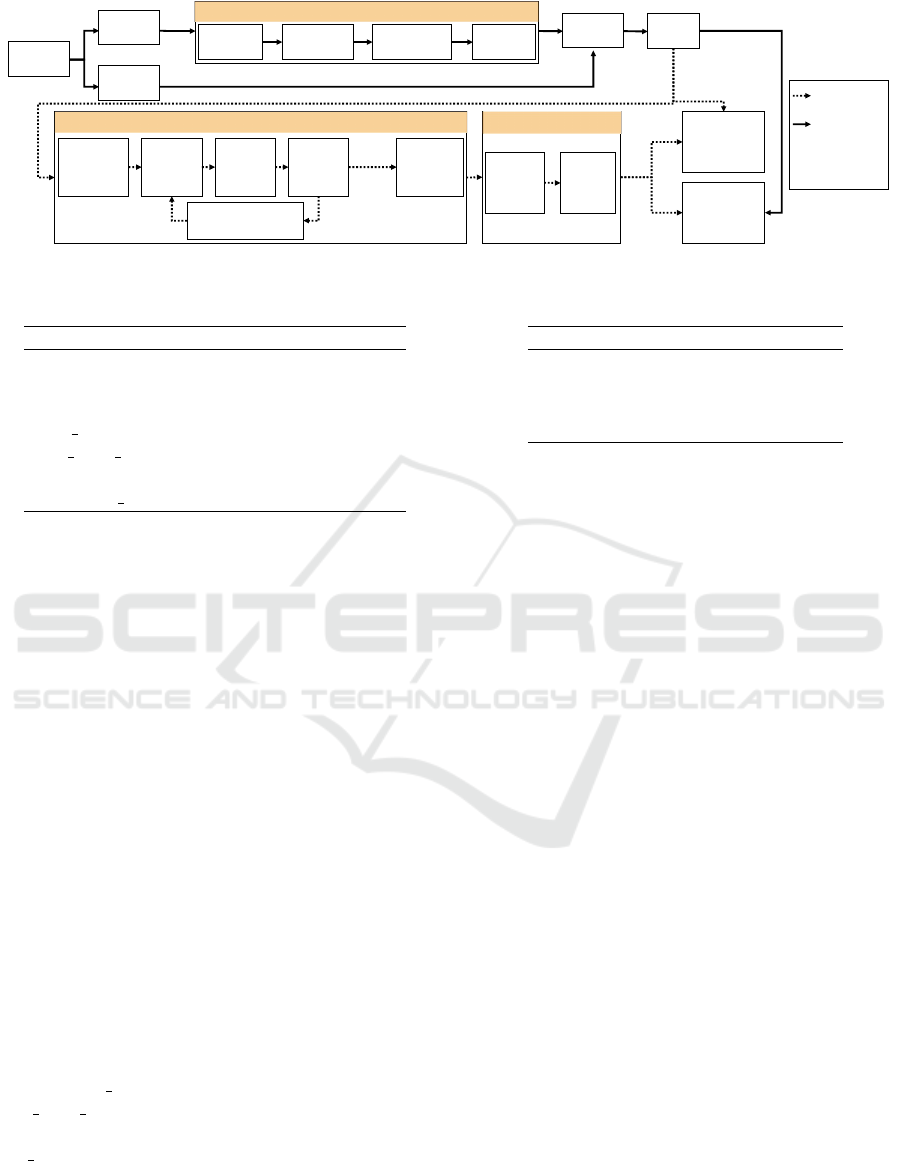

The ML workflow, shown in Figure 1 and im-

plemented using the programming language R ver-

sion 3.5.3 (R Core Team, 2019), allows the interpreta-

tion of black-box models trained to classify early AD.

Subject and feature selection and image process-

ing are described in Section 2.1. Feature fusion was

performed by a concatenation. The dataset was dis-

tinctly split into an 80 % training and a 20 % test

dataset. This split was performed within the diagnosis

groups. Model training, hyperparameter tuning and

model interpretation was carried out for the training

dataset and the performance evaluation was executed

for the independent test datasets of both cohorts.

3.1 Hyperparameter Tuning

Bayesian optimization (Mo

ˇ

ckus, 1975) was imple-

mented using the R package rBayesianOptimization

version 1.1.0 (Yan, 2016) to tune the model hyper-

parameters. The idea is, to model the dependency

of the hyperparameters and the model performance

by a Gaussian Process (GP). Ten nearly random ini-

tial parameters were defined by a Latin hypercube

design (LHD) (McKay et al., 1979), which was im-

plemented using the R package SPOT version 2.0.3

(Bartz-Beielstein et al., 2005). The range of each

parameter was split into ten intervals to randomly

choose one sample per interval. The resulting ten

samples per parameter were randomly matched across

the parameters.

The ML model was evaluated for these combi-

nations using 10 × 10-fold CV (Refaeilzadeh et al.,

2009) to estimate the model performance for the test

dataset using the hyperparameters. 10 × 10-fold CV

was implemented by splitting the training dataset

into ten distinct folds using the R package caret ver-

sion 6.0-82 (Kuhn, 2019). The splits were executed

separately for each diagnosis. Ten iterations were per-

formed, each with a different fold used as validation

dataset (10 %). The training dataset included the re-

maining nine folds (90 %). This procedure was re-

peated for ten times, with shuffled data in each run.

The GP was fitted to predict the average CV ac-

curacy for the initial parameter combinations. After-

wards, the model was optimized to choose the next

parameter combination. Here, the Upper Confidence

Bounds (UCB) acquisition function (equation 4) was

used. ˆµ

Θ

is the performance estimation of the GP and

ˆ

Σ

Θ

is the covariance at parameter combination Θ. κ

weights the influence of exploitation and exploration

during the optimization. A high κ-value preferred ex-

ploration and small values favour exploitation. All

experiments used the default value of 2.576.

UCB(Θ) = ˆµ

Θ

+ κ ·

ˆ

Σ

Θ

(4)

Iteratively, the new hyperparameter combination was

evaluated using CV and the results were added to re-

fine the GP and to determine a new combination. This

procedure was repeated 25 times. The best combina-

tion was chosen to train the final model.

HEALTHINF 2021 - 14th International Conference on Health Informatics

90

Final model generation

Volume

calculation

SegmentationRegistration

Pre-

processing

Hyperparameter tuning

Evaluation

Image processing

Data

splitting

Subject

selection

Model

training

Resampling

Pre-

processing

Model

training

Pre-

processing

Test dataset

Training

dataset

Initialize

parameter

combinations

Determine promising

parameter combination

Determine

best

parameter

combination

Met exit

condition

Continue

parameter tuning

Feature

selection

MRI scan

selection

Feature

fusion

Generate

interpretation

model

Training cohort

Training and

External

Validation

cohort

Figure 1: ML workflow.

Table 3: XGBoost hyperparameters and intervals.

Name Minimum Maximum

nrounds 1 500

eta 0 1

gamma 0 20

max depth 1 20

min child weights 1 30

subsample 0 1

colsample bytree 0 1

3.2 Model Training

The preprocessing included centering, scaling, me-

dian imputation and Synthetic Minority Over-

sampling Technique (SMOTE) (Chawla et al., 2002)

to compensate class imbalances. It was implemented

using the R package caret version 6.0-82 (Kuhn,

2019). Afterwards, the ML models were trained.

XGBoost (Chen and Guestrin, 2016) is an im-

plementation of gradient boosting (Friedman, 2001),

distributed as an open-source software library. The

idea of boosting algorithms is, to iteratively combine

multiple weak classifiers to get a strong joint clas-

sifier. Gradient boosting meets this assumption by

learning the gradients of the previous classifier. The

sum of the weak classifier results is the final predic-

tion. The main advantages of XGBoost are scalability,

parallelization and distributed execution. The XG-

Boost hyperparameters are summarized in Table 3.

nrounds sets the number of boosting iterations and

eta was the learning rate, which controls the prefer-

ence of classifiers at early iterations. The minimum

loss reduction required to split a node is defined by

gamma, max depth sets the maximum tree depth and

min child weights was the minimum number of ob-

servations in a child node. subsample and colsam-

ple bytree set the proportion of randomly subsampled

training instances and features per iteration. An XG-

Boost tree classifier was implemented using the R

package xgboost version 0.82.1 (Chen et al., 2019).

RF (Breiman, 2001) is an ensemble black-box

Table 4: RF hyperparameters and optimization intervals.

Name Minimum Maximum

mtry 2 # features

ntree 250 1250

nodesize 1 30

maxnodes 50 100

model based on multiple DTs and was implemented

using the R package randomForest version 4.6-14

(Liaw and Wiener, 2002). Each DT was trained us-

ing randomly chosen features and bootstrap sampling

(Efron and Tibshirani, 1986) of the training dataset.

The results of the DTs were finally summarized using

a majority voting. The hyperparameter ntree sets the

number of DTs. Each split considered a random sub-

set of mtry features. The minimum size of leaf nodes

was defined by nodesize and maxnodes determines the

maximum number of leaf nodes per DT. Table 4 sum-

marizes the RF hyperparameters.

Classification and Regression Trees (CARTs)

(Breiman et al., 1984) were chosen as interpretable

models and implemented using the R package rpart

version 4.1-13 (Therneau and Atkinson, 2018). Dur-

ing the training of CARTs, successively decision rules

of the form x ≤ t for numerical or x ∈ t for categorical

features were learned with t as a threshold or a subset.

Each possible split was ranked by the Gini-coefficient

(equation 5) to find the best rule. In this equation, c is

the total number of classes and p(i), is the proportion

of observations of class i in a node.

f (p) =

c

∑

i=1

p(i) ·

1 − p(i)

(5)

This method was iteratively repeated as long as no

split with a minimum improvement of the complexity

parameter cp can be performed. This hyperparameter

was tuned in a range between 0 and 1.

Developing a Machine Learning Workflow to Explain Black-box Models for Alzheimer’s Disease Classification

91

3.3 Interpretation Model

Shapley sampling values were implemented using the

R package fastshap version 0.0.5 (Greenwell, 2020)

to explain the black-box models. All experiments per-

formed 5000 Monte-Carlo simulations to estimate the

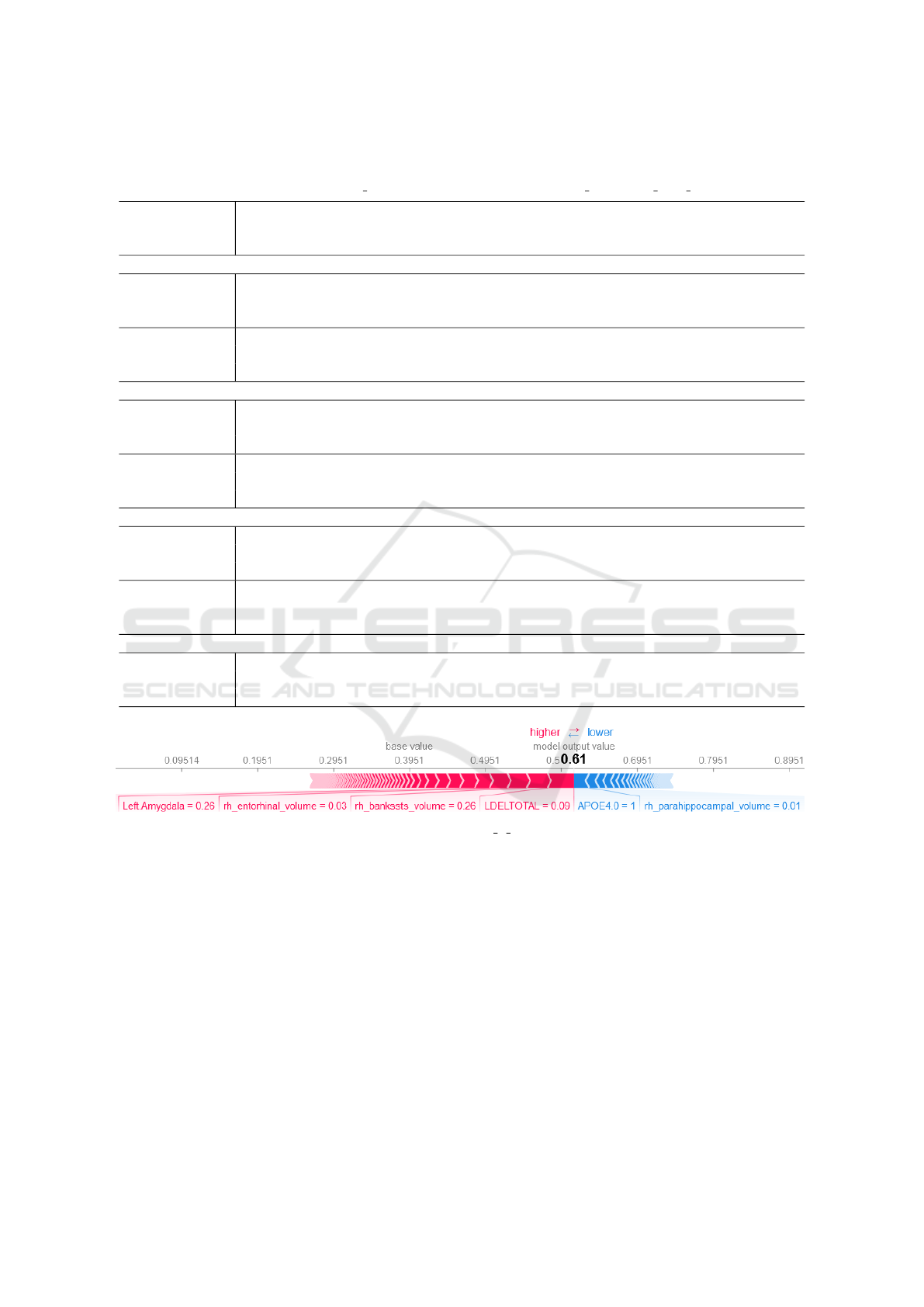

Shapley values. The SHAP force plot in Figure 3 ex-

plains the model prediction of an individual observa-

tion. The plot shows, that the individual prediction

consists of the sum of all feature Shapley values and

the average model prediction. Feature expressions

with high positive Shapley values had a strong pos-

itive effect on the prediction and small negative Shap-

ley values represent small negative effects.

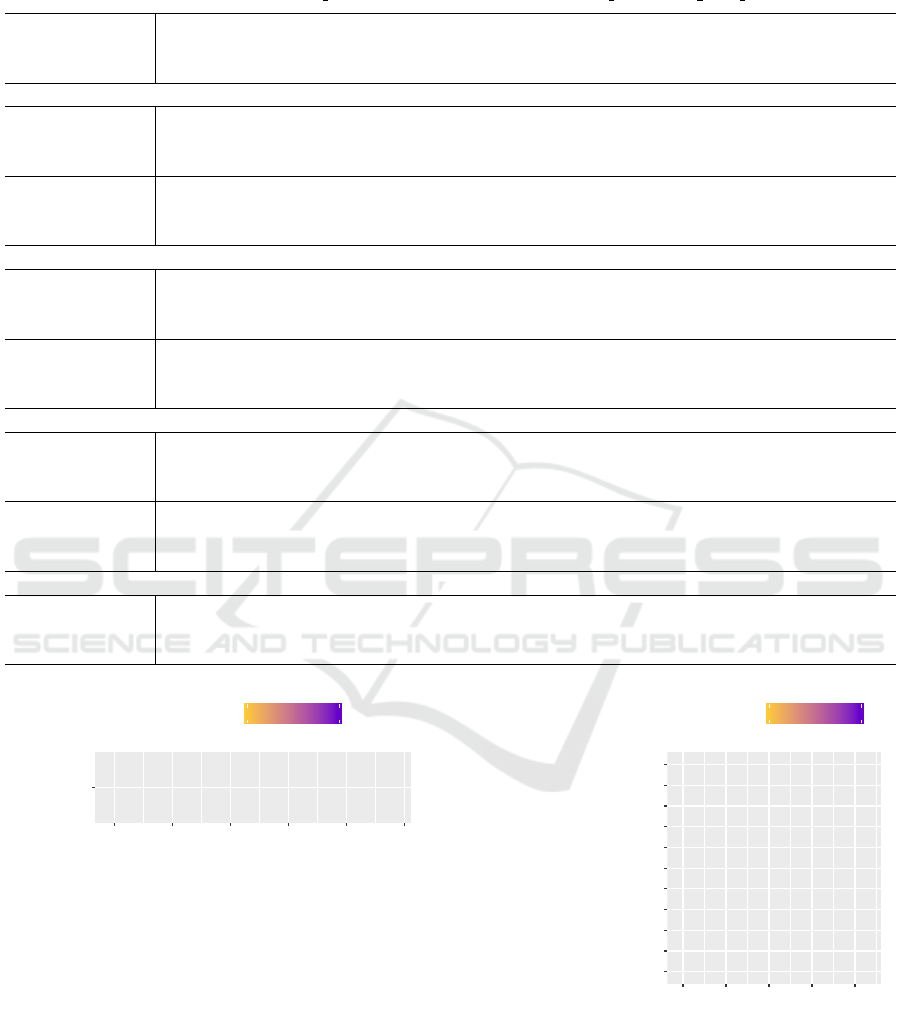

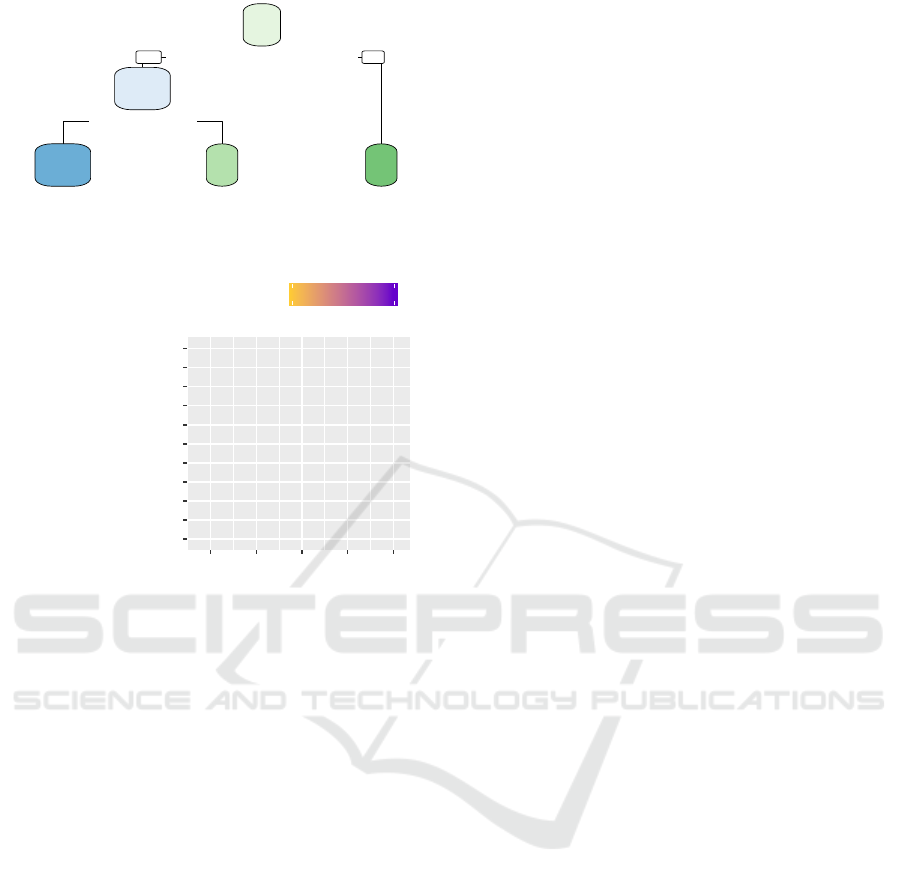

SHAP summary plots (Lundberg et al., 2018) ex-

plain the model results for the entire training dataset.

Each point shows a Shapley value for a subject and a

feature and is colored depending on the feature value.

The vertical axis represented the features, ordered by

the mean absolute Shapley values, and their distribu-

tion. The plots were limited to the top ten features.

4 RESULTS

The results of the experiments with FS1 and FS2 are

summarized in Tables 5 and 6. For both feature sets,

the best results were achieved for CN vs. AD clas-

sification. For example, a perfect CV accuracy was

achieved by training an RF with FS2 of the ADNI

dataset. The CART, trained with FS2 of the ADNI

dataset, reached a perfect classification for the ADNI

test dataset. The best accuracy for the AIBL test

dataset was 99.05 % and was achieved by training an

XGBoost model with FS2 of the AIBL dataset.

Only 16 sMCI and 18 pMCI AIBL subjects were

selected, thus, AIBL was only used for external vali-

dation in this case. For FS1 and FS2 the RF achieved

the best results to classify MCI conversion. The FS2

model yielded an accuracy of 71.03 % for the inde-

pendent ADNI and 67.65 % for the AIBL test dataset.

For FS1, accuracies of 68.28 % for the ADNI and

79.41 % for the AIBL test datasets were reached.

4.1 Feature Set 1 vs. Feature Set 2

In general, the performances reached with FS2 (Ta-

ble 6) outperformed the results achieved with FS1

(Table 5). Adding cognitive test results to FS1 im-

proved the distinction of the BL diagnoses. Minor im-

provements were noted for the MCI conversion pre-

diction. For example, the RF trained with FS2 for

MCI conversion prediction outperformed the model

trained with FS1 by 2.75 % for the ADNI test dataset

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●