Digital Technology Implementation for Students’ Involvement Base on 3D Quest Game for Career Guidance and Estimating Students’ Digital Competences

Oleksandr V. Prokhorov [1, a], Vladyslav O. Lisovichenko [1, b], Mariia S. Mazorchuk [2, c] and Olena H. Kuzminska [3, d]

[1] National Aerospace University H. E. Zhukovsky “Kharkiv Aviation Institute”, 17 Chkalova Str., Kharkiv, 61070, Ukraine

[2] V. N. Karazin Kharkiv National University, 4 Svobody Sq., Kharkiv, 61022, Ukraine

[3] National University of Life and Environmental Sciences of Ukraine, 15 Heroyiv Oborony Str., Kyiv, 03041, Ukraine

Keywords: Virtual Reality, Quest Game, 3D Model, Career Guidance, Computer Science, Higher Education.

Abstract: This paper describes the process of creating a career guidance 3D quest game for applicants who intend to apply to IT departments. The game is based on a 3D model of the computer science and information technologies department at the National Aerospace University “Kharkiv Aviation Institute”. The quest challenges aim to assess the digital competency level of applicants and first-year students. The paper covers the theoretical background, software tools, development stages, implementation challenges, and the game application scenario. The game scenario provides a virtual tour around a department of the 3D university. Because the game replicates real-life objects, applicants can see the department’s equipment and classrooms. To develop the game application, the team used C# and C++, Unity 3D, and Source Engine. For object modeling, we used Hammer Editor, Agisoft PhotoScan Pro, and photogrammetry, which allowed for realistic gameplay. Players are offered various formats for assessing digital competencies based on the Digital Competence Framework for Citizens (DigComp 2.1): a test task, a puzzle, assembling a computer, and setting up an IT specialist’s workplace. The experiment conducted at the online open house day in 2020 demonstrated the efficiency of the 3D quest game. The applicants rated the 3D quest as a more up-to-date and attractive form of engagement. According to the results of the 3D quest, applicants demonstrated an average level of digital competence, with the difficulty of some items at 0.5. Several psychometric item characteristics were analyzed in detail, which will allow us to improve item quality.

1 INTRODUCTION

Augmented and virtual reality (AR and VR) are popular tools for presenting a concept more attractively or interactively. AR and VR are most commonly used in medicine, geospatial applications, manufacturing, tourism, and cultural heritage (Frontoni et al., 2019; Lavrentieva et al., 2020).

[a] https://orcid.org/0000-0003-4680-4082
[b] https://orcid.org/0000-0002-2159-5731
[c] https://orcid.org/0000-0002-4416-8361
[d] https://orcid.org/0000-0002-8849-9648

The choice of technology and how to apply it, in particular in higher education, depends on the research subject, resourcing, and the competency of teachers and students. Experimental research on digital competency has shown that the level of readiness to start digital education is high enough (Kuzminska et al., 2018). Thus, the question arises of how to create virtual objects and of a methodology for using them in the educational process. For instance, Thürkow et al. (2005) describe the experience of using virtual landscapes and excursions as a means of training in geography. Also, Patiar et al. (2017) describe students’ experience with an innovative virtual field trip around hotels.

Among the formats for representing virtual objects, gamification is especially important, since it provides additional motivation and encourages active participation by the student (Tokarieva et al., 2019; Vlachopoulos and Makri, 2017).

Prokhorov, O., Lisovichenko, V., Mazorchuk, M. and Kuzminska, O. Digital Technology Implementation for Students’ Involvement Base on 3D Quest Game for Career Guidance and Estimating Students’ Digital Competences. DOI: 10.5220/0010927400003364. In Proceedings of the 1st Symposium on Advances in Educational Technology (AET 2020) - Volume 1, pages 676-690. ISBN: 978-989-758-558-6. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

Training games include quests, arcades, simulator games, virtual simulators, and interactive courses (Demirbilek and Koç, 2019; Kompaniets et al., 2019; Vakaliuk et al., 2020). We consider quests the most interesting of these genres (Barab et al., 2005; Shepiliev et al., 2021). Villagrasa and Duran (2013) analyze the effectiveness of using gamification to motivate Spanish students to study, with 3D visualization supporting Problem-Based Learning (PBL) and Quest-Based Learning (QBL) in students’ collaborative work. Rankin et al. (2006) investigated the cognitive and motivational influence of 3D games on second language study and on creating a digital learning environment for second language acquisition (SLA). At the same time, the transition to e-learning requires additional research on approaches not only to designing virtual objects and digital educational environments that stimulate students’ motivation to study (Katsko and Moiseienko, 2018; Ma et al., 2012), but also to assessing students’ knowledge and their confidence in the acquired competencies (Kuzminska et al., 2018).

The comparison and application of paper-based versus computer-based testing results became extremely relevant during the COVID-19 pandemic, when the majority of students switched to distance learning. This problem stands out in particular for the high schools (Özalp-Yaman and Çağıltay, 2010; Ita et al., 2014; Garas and Hassan, 2018).

According to previous studies, in particular Özalp-Yaman and Çağıltay (2010), students’ performance does not depend on the testing method: the results showed similar scores for both computer-based and paper-based testing. However, the researchers consider improvements to the digital educational environment and the computer-based testing (or e-testing) environment to be promising. The students, in turn, claim that e-testing has several limitations, such as the lack of communication with the teacher, the inability to determine the order of test questions, and the absence of error analysis sessions. Thus, despite the attractiveness of digital technology, students prefer paper-based testing.

The research on the relationship between students’ confidence and self-efficacy is also very relevant during e-learning (Blanco et al., 2020). Students’ motivation, cognitive activity, and the desire for self-regulated learning and self-improvement influence their self-efficacy. The degree to which students’ self-efficacy skills are acquired and improved, in particular during monitoring and final assessment through testing, varies depending on students’ characteristics and on factors related to the testing process and the educational environment.

In our opinion, it is possible to make electronic testing simpler for students by combining gamification, case technology, and virtual reality. An equally important task, however, is to determine a test item structure that allows the knowledge and skills acquired by students to be assessed objectively. De Carvalho Filho (2009) studied the influence of metacognitive skills and types of tests on students’ results, confidence in their judgments, and the accuracy of these judgments. In particular, the study concentrated on how students with different cognitive and metacognitive skills processed four types of test questions (multiple-choice, short answer, single-choice “yes” or “no”, and essay tests). The results showed that it is impractical to use question sets of a single type. This claim corresponds to the recommendations of the DigComp 2.1 framework, which the authors used to assess students’ digital competencies in previous studies (Kuzminska et al., 2018, 2019).

However, due to COVID-19, the question of how to conduct career guidance and advertising campaigns in a remote format became relevant.

Since career guidance for future specialists is in demand today, universities offer many formats in which students can get to know the university, and use various forms of online communication with applicants. Recommendations on how to pursue a career path, in particular how to prepare for external independent evaluation, or recommendations on informal education, can help students manage their education and career. This can influence students’ awareness and help to improve the effectiveness of the educational system, as well as the balance of demand and supply in the labor market (OECD, 2017).

The research aims to create a career guidance 3D quest game to estimate students’ competency, as well as to attract more applicants and increase the visibility of the department.

2 THE PROJECT IMPLEMENTATION

2.1 Problem Definition

• Target audience:
– applicants: assessing the digital competency level to understand whether the applicant is ready to enter the computer science department, career guidance, department promotion;
– first-year students: assessing the digital competency level to adjust the educational program, introducing the department’s activities, career guidance;
– developers of gamified applications: a specification for the technical implementation of the gamified application “Passcode”.

• The technical implementation defines the following scope of tasks:
– free movement, actions, and choices of the player according to the game scenario;
– analyzing data on the users’ actions;
– assessing the users’ actions and showing their progress;
– displaying and saving the current score;
– using a database to simulate challenges.

• Expected results of using the gamified application “Passcode”:
– enlarging the target audience for career guidance activities;
– boosting the applicants’ motivation to study and providing them with career guidance;
– assessing the digital competency of prospective IT specialists in order to adjust the educational plans to their skills and level of knowledge;
– assisting in the development of gamified applications that use 3D models.

• Summarizing the results of numerous studies, we can highlight the main points we considered when formulating the task of developing the gamified application, the 3D quest “Passcode”:
– the effect of the testing mode (paper versus computer) on test results is usually statistically insignificant (p > 0.05). Thus, there is no significant difference between these approaches, and computer-based testing can provide an objective assessment;
– to determine the categories of digital competence assessment, we used the Digital Competence Framework for Citizens DigComp 2.1, recommended as a system that “takes into account” both the cognitive and metacognitive skills of respondents. However, the content of the test items was prepared considering the specifics of training future IT professionals;
– developing the testing environment and building a test in the form of a quest with the appropriate logic, tasks, prompts, and voice guidance can reduce participants’ concerns about their performance and help obtain accurate assessment results;
– the 3D quest game, based on the 3D model of the computer science and information technologies department at the National Aerospace University “Kharkiv Aviation Institute”, will motivate future students to get acquainted with the university and help them not to feel examined or controlled.

2.2 Means of Technical Implementation

To develop our 3D application, we used Unity as the main engine (Finnegan, 2015; Haranin and Moiseienko, 2018). Unity is a cross-platform tool for developing 2D and 3D games and applications that supports several operating systems. We developed the game for MS Windows. The main language we used was C#, though we also used JavaScript and Boo for simple scripts. We also used the DirectX library, where the main shader language is Cg (C for Graphics), developed by NVIDIA.

The input data includes not only the users’ actions but also the current state of the game world, since the game is a sequence of states in which each iteration determines the next one. The artificial intelligence that controls the game characters, random events, and the mathematical apparatus of the game mechanics influence the game as well.

The game objects (including the characters, items, etc.) are instances of classes that define their behavior. The game actions (effects, scenes, etc.) are defined by scripts. The game process is defined by the combined action of managers, each of which controls a certain part of the gameplay:

• GameManager – controls the game cycle and links the elements of the game architecture;
• InterfaceManager – controls the user interface, including the graphical interface and the input devices;
• PlayerManager – controls the behavior and state of the main character (the one controlled by the player);
• UnitManager – controls the units;
• SceneManager – controls the game levels.

All of the managers are implemented using the Singleton pattern. They are global to the whole game, and each exists as a single instance. The managers are accessed by type. The main game objects are based on the Finite State Machine pattern, which makes it easy to control a game object’s state and behavior.
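The two patterns named above can be illustrated with a minimal sketch. It is written in Python for brevity rather than in the C# used in the project, and it is not the project's code: the `SingletonMeta` helper, the `score` field, and the `Door` object with its two states are hypothetical names chosen only for illustration.

```python
from enum import Enum, auto


class SingletonMeta(type):
    """Metaclass that keeps exactly one instance per manager class."""

    _instances = {}

    def __call__(cls, *args, **kwargs):
        if cls not in cls._instances:
            cls._instances[cls] = super().__call__(*args, **kwargs)
        return cls._instances[cls]


class GameManager(metaclass=SingletonMeta):
    """Hypothetical stand-in for the manager that links the other parts."""

    def __init__(self):
        self.score = 0


class DoorState(Enum):
    CLOSED = auto()
    OPEN = auto()


class Door:
    """A hypothetical game object driven by a finite state machine."""

    # (current state, event) -> next state; unknown pairs keep the state.
    TRANSITIONS = {
        (DoorState.CLOSED, "use"): DoorState.OPEN,
        (DoorState.OPEN, "use"): DoorState.CLOSED,
    }

    def __init__(self):
        self.state = DoorState.CLOSED

    def handle(self, event):
        self.state = self.TRANSITIONS.get((self.state, event), self.state)
        return self.state
```

Looking a manager up by type, as in `GameManager()`, always yields the same object, so any script can reach shared state without passing references around. Keeping the transitions in one table makes the allowed behavior of an object easy to inspect.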


A computer game is a complex system built of separate subsystems integrated into a program architecture. Our game application has the following subsystems: pathfinding for characters, the graphical user interface, object interaction, and an additional control subsystem.

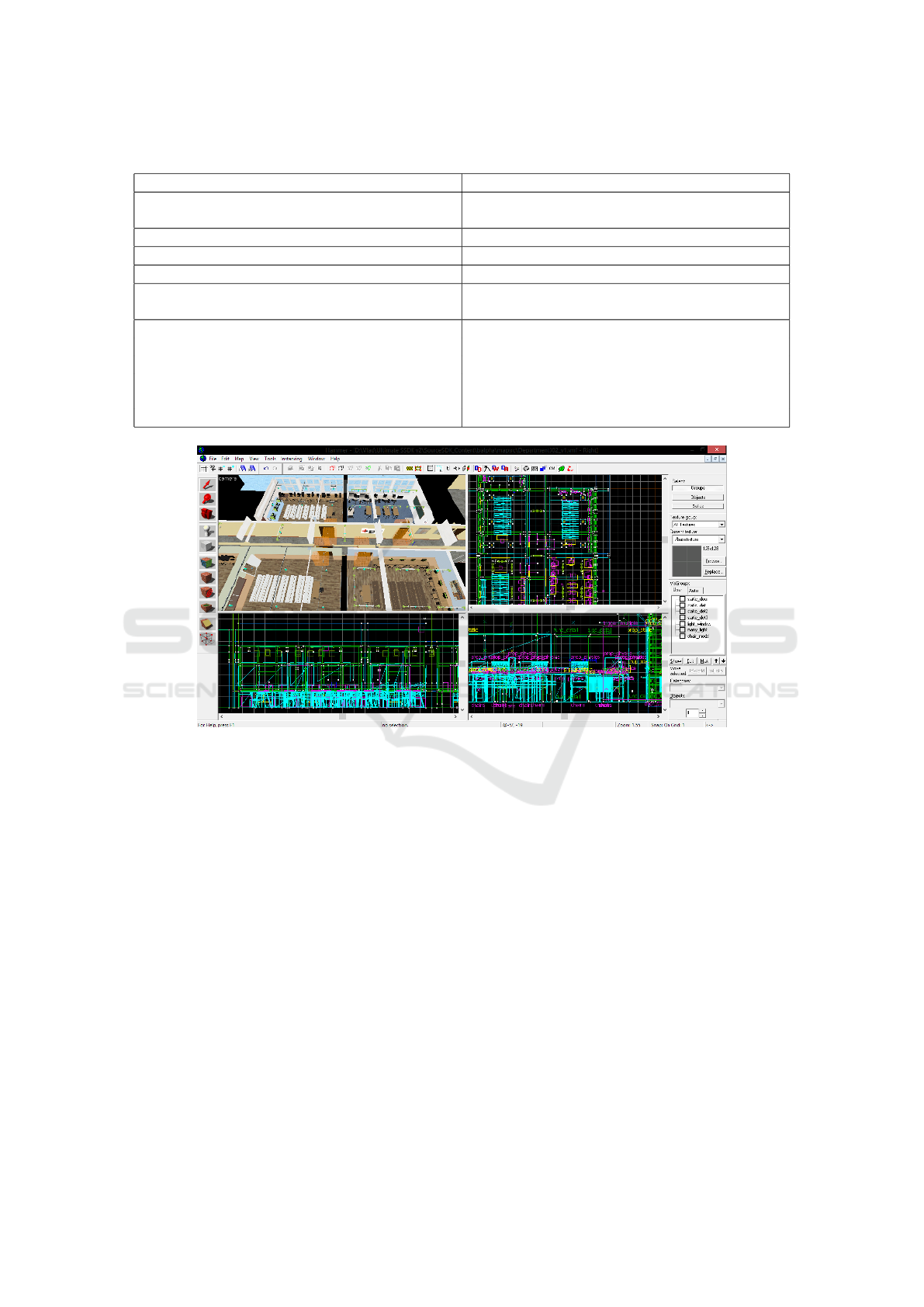

We implemented the application in several stages, each with its own tasks (table 1). In addition to Unity, we also used the Source Engine. Using the tools listed in table 1, we built the application for Windows and Android, as well as a WebGL build for running in browsers.

2.3 Aspects of Technical Implementation

Creating the 3D models of the classrooms was the most complicated part of the development, which is why we describe some implementation details below.

To create the classrooms’ 3D models, we built separate models from special photos taken in advance. Then we used Agisoft PhotoScan, which provides photogrammetry functions (Anuar, 2006). Due to current constraints of the technology, building a fully featured model of the rooms was a complicated task. Every glare, as well as translucent materials, causes significant miscalculations. That can be fixed with a matting spray, though this does not work for entire rooms and is costly. Thus, we used photogrammetry to obtain objects of correct shapes and sizes (figure 1). We also created the objects’ textures, which we edited in Adobe Photoshop and attached to the models. Using Agisoft PhotoScan, we created the model of a classroom and a model of a computer architecture showcase.

Figure 1 shows the resulting 3D-modeled level of the department rooms. The rooms were modeled with brush geometry, so no additional physical model needs to be attached.

Figure 2 shows a part of the level, one of the department’s classrooms. Most of the detailed parts were converted into the special mdl model format, which allows objects in a scene to be optimized.

The detailed objects in figure 3 were converted into mdl via the appropriate plugin. After that, we could save graphics resources with level of detail (LOD), a technique that reduces model detail with distance.
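The idea behind LOD can be shown with a short sketch: the farther an object is from the camera, the coarser the model that is drawn. The sketch is in Python for brevity, and the distance thresholds and the `select_lod` function are hypothetical; the engine performs this selection internally.

```python
import math

# Hypothetical thresholds: (maximum distance, level-of-detail index).
# Index 0 is the most detailed model.
LOD_LEVELS = [(5.0, 0), (15.0, 1), (40.0, 2)]


def select_lod(camera_pos, object_pos, levels=LOD_LEVELS):
    """Return the LOD index for an object, or None if it is too far to draw."""
    distance = math.dist(camera_pos, object_pos)
    for max_distance, lod_index in levels:
        if distance <= max_distance:
            return lod_index
    return None
```

For example, an object 10 units away from the camera falls into the second band and is drawn with the model at index 1.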

When the scene is ready, we can import it into 3DS Max using the WallWorm plugin (figure 4).

3DS Max can export the scene in the FBX format, which is compatible with the Unity engine. In addition to the model itself, it stores data about lighting, materials, and structures.

To make sure that the scene was imported correctly, we used the projection reflection modes. In figure 5, we can see that the grid is in its normal state.

Unity does not automatically create physical models for objects, as it does not support brush geometry. We have to optimize the model in the Unity scene and add a physical model by attaching a mesh collider or a box collider. WebGL allows the project to run in an Internet browser. This technology is still imperfect; however, if the scene is optimized, it works well. Mobile systems require on-screen controls to let the user play the game, because mobile devices have no keyboard or mouse.

Figure 6 shows the control elements: the motion controls on the left and the view controls on the right.

The home screen interface is a menu with the options “New game”, “Load a game”, “Settings”, and “Exit”. After the user loads the game, the menu is extended with more options. Players can move using the mouse and the keyboard, or via touch controls. The controls can be configured in Settings, in the Keyboard tab. The graphical interface is an upper layer of the graphics system, on the basis of which realistic 3D scenes can be created. These scenes can have their own scenario, which may change depending on the users’ actions.

The current version of the game has a static background, though it can change to another background each time the player reloads the game. Scenario creation and quest development were also a vital part of the application development process.

The scenario development: the game challenges use various objects, such as scripted_sequence, which lets characters move and perform the required actions; logic_relay, which is used to create a series of events started by some item when necessary; point_template, a container for storing task objects; ambient_generic, used to play audio; logic_compare, which compares numbers to decide what to do next; info_node, which creates the navigation grid nodes for non-player characters (the pathfinding system uses the key info_node elements); etc. We implemented these elements based on the Finite State Machine pattern, which allows for controlling a game object’s state and behavior. Several algorithms can be used for quest development; since the game model is 3D, we implemented pathfinding via the navigation grid algorithm.

The Navmesh, or Node Graph navigation grid, is an abstract data structure commonly used in AI applications to let agents move through large and geometrically complex 3D spaces. The AI treats objects that are not static as dynamic obstacles. This is another advantage of our approach to pathfinding. Agents that have access to the navigation grid do not take these obstacles into account when building their route. Thus, the navigation grid method reduces the cost of computation and makes it cheaper to detect agents that encounter dynamic obstacles. Navigation grids are usually implemented as graphs, so they can be used with the many algorithms defined for such structures. Figure 7 shows the navigation grid used to compute paths for non-player characters.

Table 1: Tasks and tools for implementation.

| Tasks | Tools |
|---|---|
| Creating 3D models of rooms | Source Engine, Agisoft PhotoScan Pro, GUI StudioMDL |
| Editing objects | Hammer World Editor, MilkShape 3D |
| Creating the objects’ textures | Adobe Photoshop, VTF Edit |
| Creating levels and lighting for some items | Hammer World Editor |
| Editing, processing, and exporting scenes | 3D Studio MAX, plugin Wall Worm |
| Scenes optimization | Unity |
| Adding the physical model of connection | Unity |
| Creating game objects and events | Unity |
| Developing the game manager, interface manager, player, units, and levels | Unity |
| Scripts writing | Unity |

Figure 1: Hammer Editor with a model of department rooms.

2.4 Application Scenario

The 3D quest “Passcode” can be downloaded via the following link: https://afly.co/xxn2. To start the quest, the user selects the language (the game contains tips and subtitles), adjusts the keyboard settings, and, if needed, opens the help instructions in the corresponding menu section. The article (Prokhorov et al., 2020) provides a simplified description of the game used in the pilot mode. We have since updated the game and added more advanced features in the latest release. Therefore, the rest of this paper explains the game scenario and describes the implementation of all functional elements in detail.

The quest contained different challenges to evaluate different groups of digital competencies according to the Digital Competence Framework for Citizens DigComp 2.1, namely information and data literacy, communication and collaboration, digital content creation, safety, and problem-solving (Carretero et al., 2017).

The tasks had different constructs and were not limited to linear tests. This allowed us to assess the various cognitive and metacognitive skills of the students who participated in the game.


Figure 2: Hammer Editor with a model of department rooms.

Figure 3: Hammer Editor with a model of department rooms.

However, to determine the total score, we used the approach typical of most computer grading systems: each task has a time limit, and when assessing performance we consider both the score and the time spent on the task.

The task constructs in these cases are complex, but thanks to Unity’s multi-platform tooling and well-designed subsystem interaction, we implemented the complicated game elements and the complex evaluation system.

We should note that the game has two modes: the training mode and the evaluation mode. The training mode provides users with a set of prompts and hints and lets them interrupt or cancel a task at any moment. The player can cancel the task with the appropriate button. During the game, the player can also see the statuses of completed tasks. Until all tasks are completed, the user is offered a new task each time they complete or interrupt the selected one. Once the user has completed all tasks, the game is over.

The evaluation mode limits the time for each task, and once the time is over, the task is interrupted. In this case, the users’ scores are based on their performance. If the task was completed in full, the user gets the maximum number of points. If the task was completed partially, the user can score a quarter, half, or three-quarters of the points. The evaluation mode has no prompts that help to complete the tasks, only prompts that navigate the user through the game. The user is free to choose the order of the tasks and can return to postponed tasks as long as the time for those tasks has not expired.
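The partial-credit rule can be sketched as follows. The text names the credit steps (a quarter, half, three-quarters, full) but not how a partially completed task is mapped to a step, so rounding the completed share down to the nearest step is an assumption of this sketch, as are the function and parameter names. Python is used for brevity.

```python
from fractions import Fraction

# Credit steps named in the text: nothing, a quarter, half, three-quarters, full.
CREDIT_STEPS = [Fraction(n, 4) for n in range(5)]


def task_score(max_points, completed_fraction):
    """Round the completed share of a task down to the nearest credit step.

    completed_fraction is the share of the task finished when the player
    submitted it or when the time limit interrupted it (assumed input).
    """
    if not 0 <= completed_fraction <= 1:
        raise ValueError("completed_fraction must be within [0, 1]")
    credit = max(step for step in CREDIT_STEPS if step <= completed_fraction)
    return float(max_points * credit)
```

With a task worth 8 points, finishing 60% of it before the timer runs out would yield half of the points under this assumption.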

Figure 4: Hammer Editor with a model of department rooms.

Figure 5: Hammer Editor with a model of department rooms.

Figure 6: Hammer Editor with a model of department rooms.

Figure 7: Hammer Editor with a model of department rooms.

The game has the following scenario: everything starts when the player appears at info_player_start; env_entity_maker (cam_i_playersstart_maker) is attached through the parent parameter. The env_entity_maker (cam_i_playersstart_maker) includes the point_template (cam_inmenu_point_template) container with the point_viewcontrol (cam_menuv1_point_viewcontrol) camera, the func_brush (cam_menuv1_fadebr) that darkens the menu background, the info_target (player_old), and env_fade, which transitions the screen from black to normal.


After the player appears, trigger_teleport (player_start_trigger_teleport) moves the player to info_teleport_destination (playerspawn_depstart), the end of the corridor in the department, which has fixed coordinates.

The player receives a number of env_message messages: (Department_WelkomeKhaiDepartment), (Department_TasksButtons), (Department_tasksstart_compl), and (Department_interrupt_task). After 6.5 seconds, logic_auto activates trigger_teleport (teleport_to_buttons) and moves the player to info_target (tele_player_buttons), the task menu. Before the player can select a task in the menu, env_entity_maker (cam_i_playersstart_maker) leaves the container point_template (cam_inmenu_point_template) at the player’s previous location.

The task menu consists of five func_button entities (Button_activate_quest_1-5); script_intro (effect_in_menu) shows the camera effect in the menu. Each button activates its corresponding task script. When activating a task, each of these buttons refers to logic_relay (buttons_common_relay), which disables the menu effect, extra sounds, and messages.

We should note that the game is intended for

Ukrainian students and supports only Ukrainian and

Russian localizations.

Once the task is selected, trigger_teleport moves the player to their previous location, info_target (player_old), so that the player can start a new mission.

The entity responsible for task completion sends a request to the corresponding env_texturetoggle (Texture_Button_activate_quest_1-5), which changes the button’s state to “completed”. The math_counter (Math_Completed_procent) counts the number of completed tasks, and logic_compare (Compare_Completed_pr1-5) compares the values and shows the player their progress as a percentage via env_message (Completed_pr1-5).
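The counting and comparing logic described here can be approximated by a short sketch. It is not the Source entity setup itself: the `CompletionCounter` class, the message wording, and the decision not to count a repeated task twice are assumptions made for illustration, in Python for brevity.

```python
class CompletionCounter:
    """Counts finished tasks and reports progress as a percentage."""

    def __init__(self, total_tasks=5):
        self.total_tasks = total_tasks
        self.completed = set()

    def mark_completed(self, task_id):
        """Record a finished task; repeating a task does not count twice."""
        if not 1 <= task_id <= self.total_tasks:
            raise ValueError(f"unknown task {task_id}")
        self.completed.add(task_id)
        return self.progress_message()

    def progress_message(self):
        percent = 100 * len(self.completed) // self.total_tasks
        return f"Completed: {percent}%"
```

With five tasks, each completed task adds 20% to the displayed progress, which matches the five progress messages the scenario distinguishes.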

Once the player completes the task, they should select the next task from those offered.

For instance, to estimate the level of competence in working with data, the users have to answer multiple-choice questions that cover the information competency (Item 1). These tests can have from two to four questions depending on the test. When the user selects an answer, it is accompanied by a corresponding comment and highlighted in red (for incorrect answers) or green (for correct answers). In both cases, the user receives a text message with the correct answer. After the user has completed the test questions, the program counts the correct and incorrect answers, displays the results in a message, and reads them aloud. Figure 8 shows an example of a closed test question, where the user has to choose the correct answer by tapping the number of the computer monitor in the virtual classroom.
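The feedback and tally steps of this test can be sketched as below. The data layout (pairs of prompt and correct option) and the `grade_answers` function are hypothetical and only mirror the behavior described in the text; Python is used for brevity.

```python
def grade_answers(questions, chosen):
    """Return per-question feedback and the totals shown after the test.

    questions: list of (prompt, correct option) pairs.
    chosen: the option the player picked for each question, in order.
    """
    feedback = []
    for (prompt, correct), answer in zip(questions, chosen):
        colour = "green" if answer == correct else "red"
        # The correct answer is reported in both cases, as in the game.
        feedback.append({"prompt": prompt, "colour": colour, "correct": correct})
    right = sum(item["colour"] == "green" for item in feedback)
    return feedback, {"correct": right, "incorrect": len(feedback) - right}
```

The totals dictionary corresponds to the summary message shown and voiced at the end of the test.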

The task is implemented as follows. In the beginning, the player sees the message env_message (Department_Quest_1_502_goto504), which tells the player which classroom to go to. Once the user is in the right classroom, trigger_multiple (Department_Quest_1_504_as1_triggershowMSG) activates the task.

The player sees the message env_message (Department_Quest_1_502) on the screen, then several buttons appear, func_button (button_que1_1-4), and logic_case (quest1_logic_case) is used to select the first question at random. For each question, QUE1_1-16_relay uses math_counter (quest1_math_voice_number), which announces the question’s number, and a func_brush (QU1_monitor_image_*) displays the picture on the in-game monitor.

To estimate the users’ competence in problem-solving and communication, we developed the “Find the academic record book” challenge (Item 2) (figure 9). In this scenario, the user communicates with the Student character, asks her questions about the educational process, and decides where to go to find the academic record book. To provide an additional challenge, this item appears at random in one of the departments.

When the user finds the object, they receive a message about the discovery and can go find the Student. After getting the academic record book in hand, the user leaves the department and the challenge is over. The game counts the number of steps the user took to complete the task.

To accomplish this task, we used: info_node, scripted_sequence, npc_template_maker, npc_natasha, env_message, logic_relay, logic_choreographed_scene, filter_activator_name, trigger_multiple, logic_case, trigger_teleport, fun tempergetge, info thanplate.

Figure 8: An example of the question from the 3D quest game.

Figure 9: An example of the question from the 3D quest game.

Let us consider these entities more closely. info_node is a node used to create a navigation network, which is required to move non-player characters in three-dimensional space. Each info_node has an ID. In this task, such a character is npc_natasha, a student who uses the network to move around the level. The more info_node entities are used on the level, the better the navigation network will be. However, the aim is to build a correct and efficient network, which means that nodes should be placed even in narrow doorways to connect different rooms into one network. Otherwise, the non-player characters will not be able to walk through the doors, because they cannot get from one isolated network to another. Besides, the non-player characters always choose the shortest path through the network from point A to point B. If the character encounters two routes of identical length, it chooses the route with the smaller ID number. The npc_natasha entity uses other entities intended to implement various actions. The npc_natasha entity is a source for cloning. This is a non-player character with a female 3D model that implements basic AI functionality. To move around the level, this character uses script files and the navigation network.
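The routing behavior described above (shortest path first, smaller ID on ties, no route between isolated networks) can be sketched with Dijkstra's algorithm over a node graph. This is an illustration in Python, not the engine's pathfinder: the graph layout is hypothetical, and comparing whole sequences of node IDs is only one possible reading of "the route with the smaller ID number".

```python
import heapq


def shortest_path(edges, start, goal):
    """Dijkstra over a node graph; equal-length routes are ordered by node IDs.

    edges maps a node ID to a list of (neighbour ID, distance) pairs.
    Returns (total distance, list of node IDs) or None if no route exists.
    """
    # Heap entries are (cost, path); on equal cost, Python compares the
    # paths element by element, so the smaller node IDs win.
    queue = [(0.0, [start])]
    visited = set()
    while queue:
        cost, path = heapq.heappop(queue)
        node = path[-1]
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, distance in edges.get(node, []):
            if neighbour not in visited:
                heapq.heappush(queue, (cost + distance, path + [neighbour]))
    return None
```

If a doorway has no node linking two rooms, the rooms form separate networks and the function returns None, which corresponds to a character that cannot walk through the door.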

Other entities and their purpose are listed in table 2.

The “Clean the classroom” challenge (figure 10) aims to evaluate the user’s ability to solve technical problems, follow safety rules, and handle technical equipment and computers (Item 3). The user has to place computers, monitors, mice, and keyboards in the right places around the classroom. The game counts the number of steps the user took to complete the task.

In another classroom, the user has to set up a

computer out of suggested elements (the computer

cabinet, processing unit, mother card, power source,

cooler, graphics adapter, RAM, etc.). This challenge

counts the order; thus, the user can’t place the cooler

before settling the processing unit into the mother

card. After the computer was set up, the user is told

the number of a room where to take the computer. The

task is considered to be complete when the user takes

the computer to the given classroom.

AET 2020 - Symposium on Advances in Educational Technology


Table 2: The entities used for the 3D game scenario implementation.

| Entity | Purpose of use |
|---|---|
| scripted_sequence | Required for programming scripted scenes with non-game characters. Allows the characters to move to specified locations, play animations, and play sound files. |
| npc_template_maker | A container for creating a non-game character at a selected moment, for example, if the player wants to repeat a task. |
| env_message | Displays a message on the player’s screen. The messages are stored in a text document and can be edited. |
| logic_relay | Triggers the selected chain of actions on the level. Can be performed either once or several times. |
| logic_choreographed_scene | Stores a link to a scene file created via Face Poser. Scenes contain an advanced combination of character animations, facial animation, and speech. One scene can manage several characters simultaneously. |
| filter_activator_name | Filters entities by name. Required where various objects interact. For example, according to the task, the player cannot give the student a chair instead of the record book. In this case, we filter the record book by name using this entity. |
| trigger_multiple | A three-dimensional, geometrically constructed trigger on the level, activated when it physically encounters the activator. Any entity can be an activator. |
| logic_case | A trigger used to activate a random chain of events. Used for the test tasks. |
| info_target | A target or a point that can be located at any coordinates within the scene. Used by other entities as a target or location. |
| trigger_teleport | A trigger that moves entities to a specified point. The point is defined by an info_target entity. |
| point_template | Creates and clones other entities on call. Mostly used when a player wants to replay a task. |
| func_physbox | A three-dimensional convex entity that behaves like a physical object, for example, the test book in the task. |
| prop_dynamic_override | Creates a dynamic model, bypassing the constraint criteria (dynamic/static). This entity can play animations. |
| ambient_generic | Stores links to audio files. Used in all tasks; allows sounds to be looped. |
| func_door_rotating | Creates doors that can be opened by the player. |
| prop_door_rotating | Creates doors that can be opened by non-game characters. The non-game characters can open these doors if they are closed or block the way. |
| func_button | A trigger button that the user can press to initiate a certain sequence of actions. Used in the game to select tasks and, in the tests and puzzles, to interrupt the task. |

For this task, we use the following entities: info_target, prop_dynamic_override, prop_physics, trigger_teleport, func_brush, math_counter, filter_activator_name, func_button, ambient_generic, and env_message (table 2).

We also used the math_counter entity to perform arithmetic operations such as addition, subtraction, multiplication, and division. This entity is used for counting the player’s score or the number of tests.
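The role of such a counter can be sketched in a few lines of Python. This is only an illustration of the idea of one stored value changed by the four arithmetic operations; the class and method names are hypothetical and do not reproduce the Source Engine entity or its inputs.

```python
class Counter:
    """Stores one numeric value and applies arithmetic operations to it."""

    def __init__(self, start=0):
        self.value = start

    def add(self, amount):
        self.value += amount
        return self.value

    def subtract(self, amount):
        self.value -= amount
        return self.value

    def multiply(self, factor):
        self.value *= factor
        return self.value

    def divide(self, divisor):
        # Division by zero raises ZeroDivisionError, as usual in Python.
        self.value /= divisor
        return self.value


# Counting the steps in a task: one increment per object the user moves.
steps = Counter()
for _ in range(3):
    steps.add(1)
print(steps.value)  # 3

# Counting the score: here 4 points are added for each of two completed tasks.
score = Counter()
score.add(4)
score.add(4)
print(score.value)  # 8
```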

The next task is to “Assemble the computer” (Item 4). The player is asked to assemble a system unit from various components (figure 11). The player has the following items: the case, processor, motherboard, power supply, cooler, video card, RAM, hard drive, and side cover. The order of actions matters in this task; for example, you cannot install a cooler until the processor is installed on the motherboard.

For technical reasons, the components can be installed only inside the system unit, not outside it. That means the user cannot install the processor in the motherboard outside the system unit. Once the system unit is complete, the user is told which classroom to take the computer to. After the player brings the computer to the right place, the task is completed.

Figure 10: The user received the “Clean the classroom” task and completed it.

Figure 11: The player received and completed the task to assemble the computer.

This task uses the following entities: func_detail, point_message, env_message, filter_activator_name, func_button, ambient_generic, env_projectedtexture, trigger_teleport, info_target, logic_relay, func_brush, func_physbox, point ountericplate (table 2). The additional entities for this task are:

• func_detail: a three-dimensional convex-shaped entity used to create walls and structures; it has no name and is not taken into account during level scene optimization;

• point_message: an entity that displays text prompts located in three-dimensional space. It is used to show component names in the task where the player has to assemble a system unit;

• env_projectedtexture: an entity used to create a dynamic light source with a shadow. It is placed where some areas of the scene need to be highlighted for convenience, namely in the tasks with assembling the system unit and the puzzle.
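The ordering rule of the “Assemble the computer” task can be sketched as a simple prerequisite check. This is a Python illustration rather than the game’s actual logic: the names PREREQUISITES, try_install, and is_complete are hypothetical. Only the chain case, motherboard, processor, cooler follows from the description of the task; the remaining prerequisites are assumptions made for the example.

```python
# Which components must already be installed before each one can be added.
# From the text: the motherboard goes into the case first, the processor
# needs the motherboard, and the cooler needs the processor.
# All other entries are illustrative assumptions.
PREREQUISITES = {
    "case": set(),
    "motherboard": {"case"},
    "processor": {"motherboard"},
    "cooler": {"processor"},
    "RAM": {"motherboard"},
    "video card": {"motherboard"},
    "power supply": {"case"},
    "hard drive": {"case"},
}
# Assumption: the side cover closes the unit, so it needs everything else.
PREREQUISITES["side cover"] = set(PREREQUISITES)


def try_install(component, installed):
    """Install the component if its prerequisites are met.

    Returns True on success and False if the component is already
    installed or a prerequisite is still missing.
    """
    if component in installed or not PREREQUISITES[component] <= installed:
        return False
    installed.add(component)
    return True


def is_complete(installed):
    """The system unit is complete when every component is installed."""
    return installed == set(PREREQUISITES)


installed = set()
print(try_install("cooler", installed))      # False: no processor yet
for part in ("case", "motherboard", "processor"):
    try_install(part, installed)
print(try_install("cooler", installed))      # True
print(is_complete(installed))                # False: parts are still missing
```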

To evaluate the users’ abilities for self-education and career guidance (Item 5), the user has to put together the “IT specialist jigsaw puzzle”. The task is to group 30 suggested elements according to 10 given IT-related occupations: Android mobile developer, iOS mobile developer, frontend developer, backend developer, project manager, Java developer, .NET developer, UX/UI designer, QA tester, and database developer. The number of pieces for each occupation varies from 3 to 6, and similar pieces can belong to different occupations. The order does not matter in this challenge, and the number of attempts is not limited. The assessment mode has a time limit. Pieces that do not match automatically drop away, indicating a mistake. The challenge is complete once all the pieces are put together (figure 12).

During the challenge, the user can get a tip message by clicking the occupation name; the tip is shown for 10 seconds. The number of tips is limited, and the game counts how many of them the user took.
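The matching and tip rules of the puzzle can be sketched as follows. This is a minimal Python illustration under stated assumptions: the class PuzzleBoard, the sample pieces in PIECES, and the counting of mistakes are hypothetical and are not taken from the game itself; only the 10-second tip, the limited number of tips, and the dropping of unmatched pieces come from the description above.

```python
TIP_SECONDS = 10  # a tip stays on screen for 10 seconds


class PuzzleBoard:
    def __init__(self, piece_occupations, max_tips):
        # piece name -> set of occupations the piece belongs to
        self.piece_occupations = piece_occupations
        self.placed = {}        # piece name -> occupation it was attached to
        self.mistakes = 0       # assumption: mismatches are counted
        self.tips_left = max_tips
        self.tips_used = 0

    def place(self, piece, occupation):
        """Attach a piece; a piece that does not match drops away."""
        if occupation in self.piece_occupations[piece]:
            self.placed[piece] = occupation
            return True
        self.mistakes += 1
        return False

    def take_tip(self):
        """Return how long the tip is shown, or 0 if no tips are left."""
        if self.tips_left == 0:
            return 0
        self.tips_left -= 1
        self.tips_used += 1
        return TIP_SECONDS

    def is_complete(self):
        return len(self.placed) == len(self.piece_occupations)


# Hypothetical pieces; one piece may belong to several occupations.
PIECES = {
    "Swift": {"iOS mobile developer"},
    "SQL": {"Database developer", "Backend developer"},
    "Test cases": {"QA tester"},
}

board = PuzzleBoard(PIECES, max_tips=2)
print(board.place("SQL", "Backend developer"))  # True
print(board.place("Swift", "QA tester"))        # False: the piece drops away
print(board.take_tip())                         # 10
```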

The puzzle task includes the following entities: env_message, filter_activator_name, func_button, ambient_generic, env_projectedtexture, trigger_teleport, info_target, logic_relay, func_brush, func_physbox, point_template, game textter, englec. All of these entities were already described for other tasks (table 2); the additional entity here is phys_keepupright, which holds physical objects in a defined position and allows the angle to be set. It is used to keep the puzzle pieces in a certain position.

The tasks are meant not only to evaluate the users’ digital competence but also to let them learn about faculty life and the educational system, since the game models reflect real objects.


Figure 12: The user processing the jigsaw puzzle task.

At the moment, the quest has 5 challenges, though we can make changes to the task pool. To succeed, the user has to complete all of the challenges, in any order. To choose a challenge, the user clicks on it, and a voice-over explains the task and where to go. When the user reaches the right classroom, the voice-over provides detailed instructions for the challenge. Once the user is on the way, the quit option becomes available. To interrupt the challenge, the user presses the corresponding button in the classroom or uses a keyboard shortcut.

The completion of each task is always recorded: its color changes from red to green. During the process, the user sees the score for the challenges completed so far. Each task has its own evaluation criteria, but every task is worth up to 4 points, so the maximum score is 20 points. Depending on their complexity, the tasks are valued differently. The system classifies applicants who scored less than 10 points as having a low level of digital competence, those who scored from 10 to 15 points as having a middle level, and those who scored above 15 points as having a high level. The individual challenges have no time limits, but the time for the whole quest was limited. Thus, we could evaluate the users’ ability to plan their time and decide on the order and timing of the challenges they take.
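The mapping from the total score to a competence level can be written down directly. The following Python sketch uses the thresholds stated above; the function and constant names are hypothetical.

```python
MAX_POINTS_PER_TASK = 4
TASK_COUNT = 5
MAX_SCORE = MAX_POINTS_PER_TASK * TASK_COUNT  # 20 points


def competence_level(total_score):
    """Map a total quest score to a level of digital competence."""
    if not 0 <= total_score <= MAX_SCORE:
        raise ValueError("the score must be between 0 and 20")
    if total_score < 10:
        return "low"
    if total_score <= 15:
        return "middle"
    return "high"


print(competence_level(9))   # low
print(competence_level(15))  # middle
print(competence_level(16))  # high
```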

3 THE EXPERIMENT RESULTS

To evaluate the quest’s efficiency, we held an experiment at the IT championship for applicants to the computer science and information technologies department of the National Aerospace University “Kharkiv Aviation Institute”; the results are presented in the article (Prokhorov et al., 2020). This paper compares the results of students who passed the quest on a computer and in real life. The analysis showed that the difference between the results of the two groups of students who participated in the IT championship is not significant, which confirms the results of previous research (Özalp-Yaman and Çağıltay, 2010; Ita et al., 2014). However, the teenagers were mostly attracted to the 3D game.

In 2020, the championship took place at the University for the fourth time, and the applicants were offered the 3D game challenge. Due to the pandemic, all students participated in the championship online, while we calculated their scores and determined the winners (https://www.youtube.com/watch?v=3HRz2GoudeA).

In general, we registered 180 students from 35

schools, though only 116 students participated in the

game and completed all tasks.

There were 84 boys that equaled 72% and 32 girls

that equaled 28% of participants. To process the over-

all applicants’ results we applied statistical analysis

from the R packages (Kabacoff, 2021; Field et al.,

2012). We calculated the average for girls and boys.

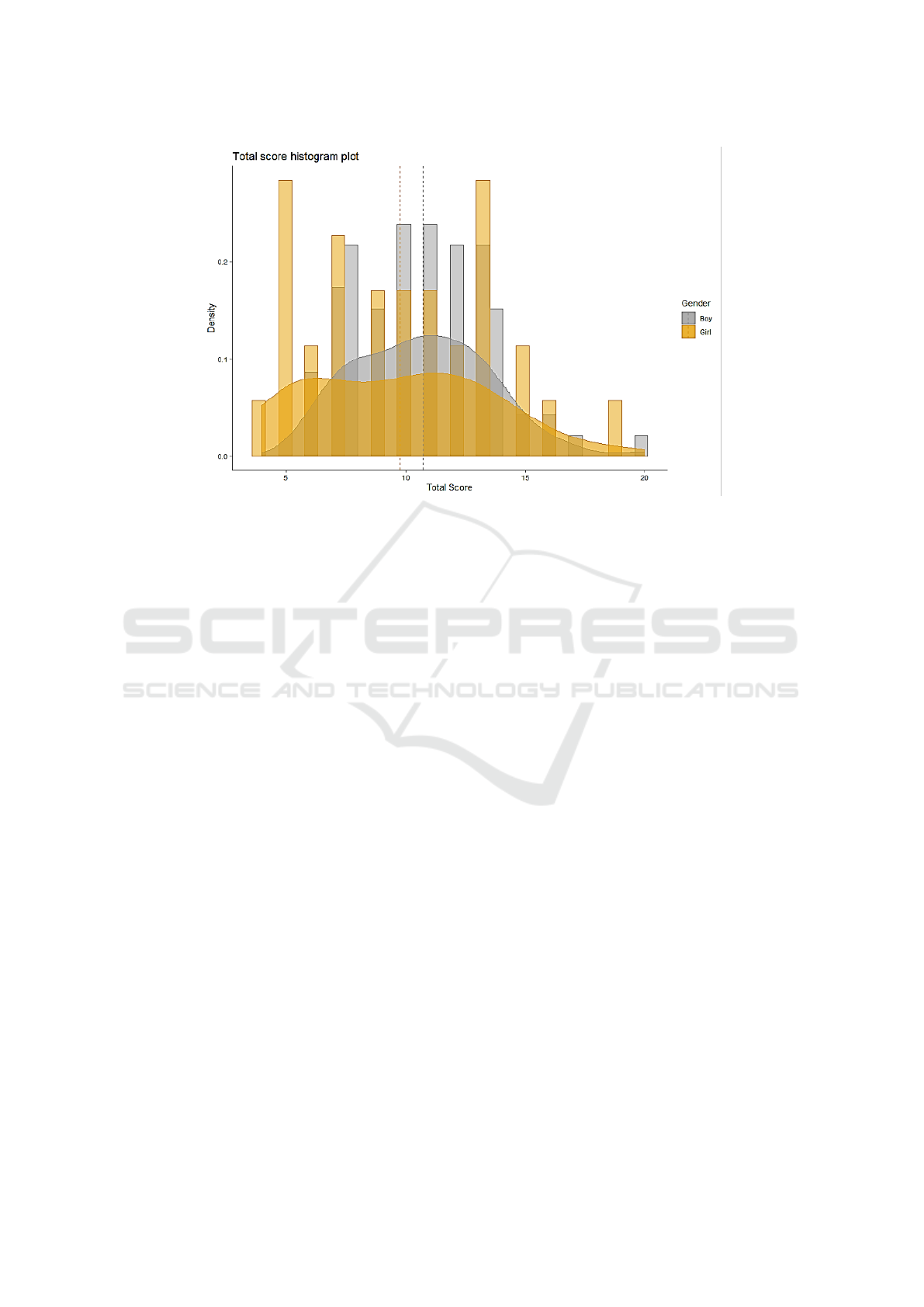

Figure 13 provides for the distribution of the scores.

Boys demonstrated better results (average score equal

10.7) than girls (average score equal 9.8), but the dif-

ference isn’t statistically significant (the Students’ cri-

teria equals 1.18 at p=0.23).

Figure 13: Scores distribution on the 3D game results.

To verify that the tasks are valid and suitable for assessing the applicants’ skills in the field of computer science, we carried out a psychometric analysis. We determined the task difficulty score, which reflects the participants’ performance on each task, and the coefficient of correlation between each task and the total score, which characterizes the consistency of the test tasks. The results are given in table 3.
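The two indicators can be computed as in the following Python sketch. It rests on two assumptions: that the difficulty of an item is the share of participants who got the maximum score on it (which is how the percentages are reported in this section), and that the correlation is the Pearson coefficient between the item score and the uncorrected total score. The score matrix SCORES is invented for illustration and is not the experimental data.

```python
from math import sqrt

MAX_ITEM_SCORE = 4


def item_difficulty(item_scores, max_score=MAX_ITEM_SCORE):
    """Share of participants who got the maximum score on the item."""
    return sum(1 for s in item_scores if s == max_score) / len(item_scores)


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


def item_total_correlation(scores, item_index):
    """Correlation between one item and the total score of each participant."""
    item = [row[item_index] for row in scores]
    totals = [sum(row) for row in scores]
    return pearson(item, totals)


# Hypothetical scores: one row per participant, one column per item.
SCORES = [
    [4, 4, 4, 4, 4],
    [2, 4, 3, 4, 1],
    [4, 3, 4, 2, 2],
    [1, 4, 2, 3, 0],
]

first_item = [row[0] for row in SCORES]
print(item_difficulty(first_item))  # 0.5: two of four participants got 4 points
print(round(item_total_correlation(SCORES, 0), 2))
```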

The results show that items 1 and 5, which asked the students to answer questions related to the IT industry and to assemble a puzzle, were the most difficult in the 3D game (47% and 46% of students, respectively, got the maximum score). This suggests that tests should be designed carefully, considering the target audience’s cognitive skills and the intended use of the test.

Items 2-4 appeared to be easier for the students (63%, 52%, and 52% of students, respectively, got the maximum score) since they aimed to assess not knowledge but the ability to navigate through the game and concentrate. Thus, in accomplishing these items, students had to demonstrate metacognitive skills.

However, considering the correlation of the item scores with the total score, the first item correlates the most (the correlation coefficient equals 0.6). That means that the students who answered the IT-related questions better showed higher results overall. The overall difficulty across all test tasks is 0.52, which is a middle level of difficulty. The results confirmed that the tasks are reliable and adequate for determining the level of digital competencies specifically for future applicants to the IT departments.

The analysis showed that the format of the test tasks makes it possible not only to determine the level of the participants’ competencies but also to identify the skills that should be developed. Tests in the format of a game that automatically collects data make it possible to define a digital competencies profile for each participant. This profile can be analyzed and compared to a sample “desired” profile to decide on career guidance and a training strategy. The profile analysis identifies the competencies that do not require development, the competencies that require development, and the missing competencies. To provide an integrated assessment of the participants’ competencies, we used the weighting and ranking method. As a result, we obtained a clear picture of the skills that a participant has already acquired and the skills that should be developed so that the participant can enter an IT-related department and study there successfully.
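The comparison with a “desired” profile and the integrated assessment can be sketched as follows. This Python example is only one possible reading of the approach: the functions compare_profiles and weighted_score, the competence levels, and the weights are hypothetical, and the weighted average is an assumed form of the weighting step, not the exact method used in the study.

```python
def compare_profiles(actual, desired):
    """Sort each competence of the desired profile into one of three groups."""
    groups = {"sufficient": [], "to develop": [], "missing": []}
    for competence, target in desired.items():
        level = actual.get(competence, 0)
        if level >= target:
            groups["sufficient"].append(competence)
        elif level > 0:
            groups["to develop"].append(competence)
        else:
            groups["missing"].append(competence)
    return groups


def weighted_score(actual, weights):
    """Integrated assessment as a weighted average of competence levels."""
    total_weight = sum(weights.values())
    return sum(w * actual.get(c, 0) for c, w in weights.items()) / total_weight


# Hypothetical levels on a 0-4 scale for three DigComp 2.1 areas.
desired = {"safety": 3, "problem-solving": 3, "digital content creation": 2}
actual = {"safety": 4, "problem-solving": 2}
weights = {"safety": 1, "problem-solving": 3}

print(compare_profiles(actual, desired))
print(weighted_score(actual, weights))  # (1*4 + 3*2) / 4 = 2.5
```

Ranking the participants then amounts to sorting them by the integrated score.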

However, the middle level of the participants’ digital competence did not decrease their interest in our evaluation approach. This fact is important not only for future IT specialists but also for the departments’ occupational guidance process. The students mostly coped with the tasks and gave positive feedback on participating in the game.

4 CONCLUSION

Out of the aim of this research and the particular tasks

we faced developing a 3D quest game, as well as the

results of assessing the application efficiency in career

guidance we came up with the following conclusions.

The game application development technology we

AET 2020 - Symposium on Advances in Educational Technology

688

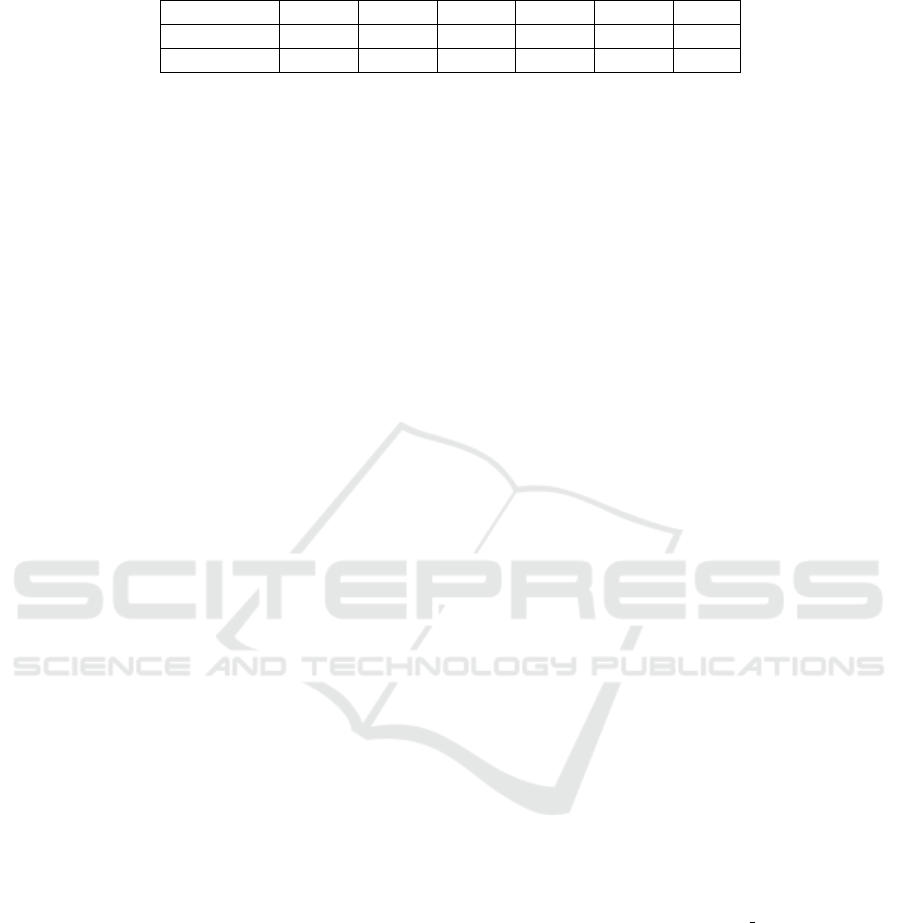

Table 3: Statistics of items.

Item 1 Item 2 Item 3 Item 4 Item 5 Total

Difficulty 0.47 0.63 0.52 0.51 0.46 0.52

Correlation 0.60 0.59 0.51 0.51 0.43

suggest can be utilized by 3D models and game devel-

opers, in particular for training future IT specialists.

We used various technologies to implement the application idea. Using Unity 3D and Source Engine as the main engines allowed us to create a 3D model of the game and its main objects. We edited objects in Hammer Editor and created a realistic model of the department’s classroom with the Agisoft PhotoScan Pro tool and photogrammetry. Pathfinding was implemented via navigation grids, which allow movement through geometrically complex 3D objects.

The game scenario provides a virtual tour around a department of the 3D university. Since the game replicates real-life objects, applicants can see the department’s equipment and classrooms.

During the quest development, we took into account the requirements for the participants’ characteristics and the game environment, and used various types of tests with hints and voice-over. This contributed to accurate evaluation and increased the students’ motivation to acquire an IT-related profession, in particular in model building and research.

The quest includes several different challenges meant to evaluate the applicants’ digital competence in relation to the DigComp 2.1 framework components: information and data literacy, communication and collaboration, digital content creation, safety, and problem-solving. The tasks also help to understand the applicants’ ability to work efficiently and to use computers in real life.

The experiment results indicate that the 3D quest is effective. According to the results of the 3D quest, applicants demonstrated an average level of digital competence, with an overall test item difficulty of about 0.5. This indicates that the applicants made a conscious choice of faculty and are ready for further study. Our psychometric analysis confirmed the reliability and consistency of the test tasks we developed.

The applicants rated the 3D quest as a more up-to-date and attractive form of engagement. They also stated that this up-to-date approach would influence their choice of a university. The overall results of the test tasks outlined the areas for improvement and showed which digital competencies the students have yet to acquire.

Thus, our 3D quest application can grow the audience for career guidance activities and improve the public image of the university. Besides, applicants can use this 3D quest to decide on their future occupation.

In addition to campaigning and career guidance, this application can help to teach and test students. To this end, several psychometric indicators of the 3D quest tasks were analyzed to allow further improvement of the items’ quality.

Further research aims have become pressing due to the switch to digital learning. These aims are to create a convenient and effective environment for digital learning using VR and AR technologies, to use the application for evaluating the digital competence of future IT specialists, and to adjust the educational plan for the university’s first-year students.

REFERENCES

Anuar, A. (2006). Digital Photogrammetry: An Experience of Processing Aerial Photograph of UTM Acquired Using Digital Camera. Paper presented at the AsiaGIS 2006, Johor, Malaysia. http://eprints.utm.my/id/eprint/490/.

Barab, S., Thomas, M., Dodge, T., Carteaux, R., and Tuzun, H. (2005). Making learning fun: Quest Atlantis, a game without guns. In Educational Technology Research and Development, number 53, pages 86–107.

Blanco, Q. A., Carlota, M. L., Nasibog, A. J., Rodriguez, B., Saldaña, X. V., Vasquez, E. C., and Gagani, F. (2020). Probing on the Relationship between Students’ Self-Confidence and Self-Efficacy while engaging in Online Learning amidst COVID-19. Journal La Edusci, 1(4):16–25.

Carretero, S., Vuorikari, R., and Punie, Y. (2017). DigComp 2.1: The Digital Competence Framework for Citizens: With eight proficiency levels and examples of use. http://publications.jrc.ec.europa.eu/repository/bitstream/JRC106281/web-digcomp2.1pdf_(online).pdf.

de Carvalho Filho, M. K. (2009). Confidence judgments in real classroom settings: Monitoring performance in different types of tests. International Journal of Psychology, 44(2):93–108.

Demirbilek, M. and Koç, D. (2019). Using Computer Simulations and Games in Engineering Education: Views from the Field. CEUR Workshop Proceedings, 2393:944–951.

Field, A., Miles, J., and Field, Z. (2012). Discovering Statistics Using R. SAGE Publications.

Finnegan, T. (2015). Learning Unity Android Game Development. Packt Publishing, Birmingham.

Frontoni, E., Paolanti, M., Puggioni, M., Pierdicca, R., and Sasso, M. (2019). Measuring and assessing augmented reality potential for educational purposes: Smartmarca project. In De Paolis, L. T. and Bourdot, P., editors, Augmented Reality, Virtual Reality, and Computer Graphics, pages 319–334. Springer International Publishing, Cham.

Garas, S. and Hassan, M. (2018). Student Performance on Computerbased Tests Versus Paper-Based Tests in Introductory Financial Accounting: UAE Evidence. Academy of Accounting and Financial Studies Journal, 22(2):1–14.

Haranin, O. and Moiseienko, N. (2018). Adaptive artificial intelligence in RPG-game on the Unity game engine. CEUR Workshop Proceedings, 2292:143–150.

Ita, M. E., Kecskemety, K. M., Ashley, K. E., and Morin, B. C. (2014). Comparing Student Performance on Computer-Based vs. Paper-Based Tests in a First-Year Engineering Course. In 121st ASEE Annual Conference & Exposition, pages 24.297.1–24.297.14, Indianapolis, Indiana. https://peer.asee.org/20188.

Kabacoff, R. I. (2021). R in Action. Manning Publications, third edition.

Katsko, O. O. and Moiseienko, N. V. (2018). Development computer games on the Unity game engine for research of elements of the cognitive thinking in the playing process. CEUR Workshop Proceedings, 2292:151–155.

Kompaniets, A., Chemerys, H., and Krasheninnik, I. (2019). Using 3D modelling in design training simulator with augmented reality. CEUR Workshop Proceedings, 2546:213–223.

Kuzminska, O., Mazorchuk, M., Morze, N., Pavlenko, V., and Prokhorov, A. (2018). Digital competency of the students and teachers in Ukraine: measurement, analysis, development prospect. CEUR Workshop Proceedings, 2104:366–379.

Kuzminska, O., Mazorchuk, M., Morze, N., Pavlenko, V., and Prokhorov, A. (2019). Study of digital competence of the students and teachers in Ukraine. Communications in Computer and Information Science, 1007:148–169.

Lavrentieva, O., Arkhypov, I., Kuchma, O., and Uchitel, A. (2020). Use of simulators together with virtual and augmented reality in the system of welders’ vocational training: Past, present, and future. CEUR Workshop Proceedings, 2547:201–216.

Ma, M., Bale, K., and Rea, P. (2012). Constructionist learning in anatomy education. In Ma, M., Oliveira, M. F., Hauge, J. B., Duin, H., and Thoben, K.-D., editors, Serious Games Development and Applications, pages 43–58, Berlin, Heidelberg. Springer Berlin Heidelberg.

OECD (Accessed 21 Mar 2017). Learning for Jobs: Synthesis Report of the OECD Reviews of Vocational Education and Training. OECD Reviews of Vocational Education and Training. OECD Publishing, Paris. https://www.researchgate.net/publication/266265826_Learning_for_Jobs_Synthesis_Report_of_the_OECD_Reviews_of_Vocational_Education_and_Training.

Patiar, A., Kensbock, S., Ma, E., and Cox, R. (2017). Information and Communication Technology–Enabled Innovation: Application of the Virtual Field Trip in Hospitality Education. In Journal of Hospitality & Tourism Education, volume 29, pages 129–140.

Prokhorov, O. V., Lisovichenko, V. O., Mazorchuk, M. S., and Kuzminska, O. H. (2020). Developing a 3D quest game for career guidance to estimate students’ digital competences. CEUR Workshop Proceedings, 2731:312–327.

Rankin, Y. A., Gold, R., and Gooch, B. (2006). 3d role-playing games as language learning tools. In Brown, J. and Hansmann, W., editors, 27th Annual Conference of the European Association for Computer Graphics, Eurographics 2006 - Education Papers, Vienna, Austria, September 4-8, 2006, pages 33–38. Eurographics Association.

Shepiliev, D. S., Semerikov, S. O., Yechkalo, Y. V., Tkachuk, V. V., Markova, O. M., Modlo, Y. O., Mintii, I. S., Mintii, M. M., Selivanova, T. V., Maksyshko, N. K., Vakaliuk, T. A., Osadchyi, V. V., Tarasenko, R. O., Amelina, S. M., and Kiv, A. E. (2021). Development of career guidance quests using WebAR. Journal of Physics: Conference Series, 1840(1):012028.

Thürkow, D., Gläßer, C., and Kratsch, S. (2005). Virtual landscapes and excursions-innovative tools as a means of training in geograph. In Proceedings of ISPRS VI/1 & VI/2 Workshop on Tools and Techniques for E-Learning, pages 61–64. https://www.isprs.org/proceedings/xxxvi/6-w30/paper/elearnws_potsdam2005_thuerkow.unlocked.pdf.

Tokarieva, A. V., Volkova, N. P., Harkusha, I. V., and Soloviev, V. N. (2019). Educational digital games: models and implementation. CEUR Workshop Proceedings, 2433:74–89.

Vakaliuk, T., Kontsedailo, V., Antoniuk, D., Korotun, O., Mintii, I., and Pikilnyak, A. (2020). Using game simulator Software Inc in the Software Engineering education. CEUR Workshop Proceedings, 2547:66–80.

Villagrasa, S. and Duran, J. (2013). Gamification for learning 3D computer graphics arts WebAR. In TEEM ’13: Proceedings of the First International Conference on technological ecosystem for enhancing multiculturality, pages 429–433.

Vlachopoulos, D. and Makri, A. (2017). The effect of games and simulations on higher education: a systematic literature review. International Journal of Educational Technology in Higher Education, 14:22.

Özalp-Yaman, Ş. and Çağıltay, N. E. (2010). Paper-based versus computer-based testing in engineering education. In IEEE EDUCON 2010 Conference, pages 1631–1637.
