A Combination between Textual and Visual Modalities for Knowledge

Extraction of Movie Documents

Manel Fourati, Anis Jedidi and Faiez Gargouri

MIR@CL Laboratory, University of Sfax, Sfax, Tunisia

Keywords:

Description, Segmentation, Movie Document, Textual, Visual Modalities, Knowledge, LDA.

Abstract:

In view of the proliferation of audiovisual documents and the indexing limits mentioned in the literature, the

progress of a new solution requires a better description extracted from the content. In this paper, we propose an

approach to improve the description of the cinematic audiovisual documents. However, this consists not only

in extracting the knowledge meaning conveyed in the content but also combining textual and visual modalities.

In fact, the semiotic desription represents important information from the content. We propose in this paper an

approach based on the use of pre and post production film documents. Consequently, we concentrate efforts to

extract some descriptions about the use not only of the probalistic Latent Dirichlet Allocation (LDA) model but

also of the semantic ontology LSCOM. Finally, a process of identifying a description is highlighted. In fact,

the experimental results confirmed the importance of the performance of our approach through the comparison

of our result with a human jugment and a semi-automatic method by using the MovieLens dataset.

1 INTRODUCTION

Audiovisual documents provide a wide range of con-

tent descriptions across different descriptors of differ-

ent types of media. Therefore, the extraction of these

descriptions has received increased attention by sev-

eral researchers (Tang et al., 2019) (Sanchez-Nielsen

et al., 2019). Besides, the process requires some tech-

niques for the extraction of information from the con-

tent. All this has an impact on filmic documents

which are rich in content and therefore can be a

source of complete description. Although, various

techniques have been proposed for this process, se-

mantic descriptions are still lacking. In fact, the de-

scriptions of audiovisual documents must be enriched

from the content while exploiting the knowledge pro-

vided in the document.

Knowing that our objective in this paper is to ex-

tract semiotic descriptions from the content, we pro-

pose a solution for the extraction of descriptions by

applying a multimodal approach based on an auto-

matic process. Therefore, our focus is on the de-

scription of filmic document. A film product passes

through three phases, namely, a pre-production phase,

a production phase and a post-production phase. In

our work, we take advantage of these phases to ex-

ploit the following documents about the extraction of

semiotic descriptions:

• The textual documents used in the filmic pre-

production phase, namely, the script.

• The audiovisual stream in its phase of filmic post-

production through the text superposed on the im-

ages.

• The cinematographic structure of the audiovisual

document in the pre and post-production phase.

Based on these documents, the proposed approach

helps extract hidden knowledge from the content.

Therefore, we propose the use of two types of analy-

ses, the first is statistical while the second is semantic.

On the statistical level, we focus on the use of the La-

tent Dirichlet Allocation (LDA) probabilistic model

while on the semantic level, we opt for the LSCOM

ontology. Thereafter, we propose a process to identify

the pertinent description related to the movie based on

the pertinence and the weighed measure.

In what follows, we will present our proposed ap-

proach for the extraction of semiotic descriptions. In

section 2, we will present a background of the semi-

otic description used in this paper and an overview

of the methods proposed in the literature, which are

related to the description and identification of topics

and themes in audiovisual documents. Then, in sec-

tion 3, we will discuss our solution for the automatic

extraction and identification of the thematic descrip-

tion. Subsequently, section 4 will be devoted to the

Fourati, M., Jedidi, A. and Gargouri, F.

A Combination between Textual and Visual Modalities for Knowledge Extraction of Movie Documents.

DOI: 10.5220/0008494902030214

In Proceedings of the 11th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2019), pages 203-214

ISBN: 978-989-758-382-7

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

203

already carried out presentation of the various experi-

ments.

2 RELATED STUDIES

2.1 Background

The semiotic has received great attention in the last

decades by many researchers in several disciplines,

such as, narrative, image and audiovisual semiotics.

In our research, we are interested mainly in the au-

diovisual semiotics, which is defined as a process in

which the meanings related to the content of audiovi-

sual documents are taken into account (Martin, 2005).

In this respect, Peter Stockinger (Stockinger, 2003),

(Stockinger, 2011) and (Stockinger, 2013) ), was in-

terested, in his researchs studies, in the audiovisual

semiotics of movie documents. Indeed, he proved that

the audiovisual semiotics helps approach the seman-

tics of the content and the history it presents. In fact,

it is in this respect that our research was conducted. It

defines different thematic descriptions, such as, such

as dominant themes, discourse themes, taxeme and

specified themes.

• Dominant Theme: It represents the pertinent

themes in the audiovisual segment, which is con-

sidered as the topic of this segment.

– Discourse Theme: It represents the different

theme treated in the audiovisual segment. other

words, the theme is used to identify the seman-

tic space of an audiovisual segment.

– Taxeme: It represents the most relevant theme

of discourse in the topic or dominant theme.

– Specified Theme: It allows the identification of

each term related to the theme of the discourse

identified.

2.2 Description of Topics in Audiovisual

Documents

The goal behind a better description that reflects the

content of the document, is undoubtedly, a reliable

modeling of the content. In fact, the need for us-

ing it is to obtain the required knowledge that takes

into account the growth of the interest of the descrip-

tion of the audio-visual documents. The objective is

to extract descriptions of the audiovisual content of a

”film” nature.

In addition to the keywords extracted from the

content, the topics and themes represent an important

source of knowledge extracted from the film. Indeed,

these themes are classified among the most natural

descriptions inspired by a user. Several efforts have

been made to extract this type of description from the

content of the film documents.

wWe focus in our state of the art on the presenta-

tion of the state of the art studies related to the filmic

and textual document. Then, in the framework of the

thematic description of the filmic documents, a rather

primitive solution has already been used in the litera-

ture. It consists mainly in extracting subjects through

low-level features.

Recently, the authors in (Bougiatiotis and Gian-

nakopoulos, 2016) have proposed a method of mod-

eling subjects of films based on low level character-

istics. Such a method aims at combining different

modalities, namely, the subtitles of the films, the au-

dio characteristics and the generic metadata. In or-

der to carry out this fusion process, the authors sug-

gested defining a characteristic vector of each modal-

ity. For the first modality, the probabilistic model

”LDA” is applied to the bag of words (BoW) result-

ing from a pre-processing step on the subtitles. The

second modality focuses on on the classification of

audio segments to audio events and musical genres.

Finally, the third modality makes it possible to select

a generic description set from Imdb, namely, genre,

directors, etc. The basic goal of topic modeling is,

roughly, to measure the similarity between the films.

Each film is presented as a label vector to create a

”Ground Truth” similarity matrix between the films.

The proposed method is tested on a basis of 160 films

selected from Imdb’s ”Top 250 Movies”

1

. The gener-

ated results generated are based on the measurement

of recommendation percentages for each film.

In the same context, the authors of (Mocanu et al.,

2016) proposed a method for the search of filmic

documents based on the extraction of subjects. This

method is based on the identification of different sub-

jects existing in the subtitles of the filmic documents.

In order to identify the topics, the authors consid-

ered the following approach. First, a scene tempo-

ral segmentation step (Tapu and Zaharia, 2011) asso-

ciated with the subtitles is set up. Then key words

and phrases were proposed to define a term vector for

each subtitle. For the definition of this vector, a pre-

processing step which considers different strategies

of a natural language processing applied to the sub-

titles, namely, tokenization, deletion of punctuation

characters, etc, was applied. Finally, after extracting

the key terms, an extraction step of subjects is consid-

ered. In fact, the objective of this step is to determine

for each term vector a set of subjects. For this re-

spect, a structuring algorithm based on graph is pro-

1

http ://www.imdb.com/chart/top

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

204

posed. Then, the evaluation of this method is tested

on a basis of 10 videos selected from a TV archive

in France. Then, a comparative study between the re-

sults obtained from the proposed method and those

obtained through five human observers showed the

performance of this method. In fact, the results gen-

erated have an average accuracy rate greater than 0.5.

The authors of (Kurzhals et al., 2016) proposed

a multimodal method for the analysis of movies in

thematic scenes. Indeed, they opted for a low level

analysis. Since they proposed a method based on tex-

tual documents related to the film such as scripts and

subtitles. In fact, the basic objective of this thematic

analysis is to explore filmic documents based on the

following four aspects: who?, What? and where?. In

this respect, the authors suggested combinig the in-

formation extracted from textual and visual modali-

ties. Indeed, from the audiovisual stream, they have

discussed the detection of plans and movements that

capture the scenes. In addition, they selected a set

of linguistic structural elements from the scenes of

movie scripts and associated them with the subtitles.

Based on the various extracted knowledge and inter-

rogation techniques, a semantic analysis technique is

discussed. This technique facilitates the comparison

between the different scenes on an image and on a se-

mantic shot. In fact, the evaluation of this method is

carried out based on a case study of a popular film,

namely, ”The Lord of the Rings”.

Then, the study of the methods proposed for

the description of the topic of the filmic documents

showed their reliability at the level of the used infor-

mation sources and the considered modalities. Never-

theless, the extraction techniques used here are based

on statistical analyzes that cannot semantically pro-

vide important knowledge. In addition, a complete

evaluation through known film bases is missing. In

what follows, we will present the various studies re-

lated to the extraction of topics from textual docu-

ments.

Actually, the thematic segmentation of textual

documents has attracted great attention in the Natural

Language Processing domain through several fields,

such as, Key phrase extraction via topic, summa-

rization and other disciplines which are interested in

the information extraction of textual content. Conse-

quently, several research studies proposed to extract

topics or theme documents. These existing meth-

ods can be divided into three main classes: Linguis-

tic analysis-based approach, Statistical analysis-based

approach and Semantic analysis-based approach.

The first is based on the extraction of linguistic

terms or markers in order to detect the definition of

a new subject in the text (Rahangdale and Agrawal,

2014). Ragarding the second approach, it was con-

sidered very important by some literature studies as it

proved to be efficient in detecting the textual topic and

thematic. In fact, among the various proposed models

we can cite the TextTiling model (Chabi et al., 2011),

LSA (Latent Semantic Analysis) model (Bellegarda,

1997) (Atkinson et al., 2014), PLSA (probabilistic la-

tent semantic analysis) (Hofmann, 1999) and LDA

(Blei et al., 2003). As it is indicated, the presented

models are not really recent but are still very used in

the literature, such as, the LDA model.

However, a literature study revealed that each

model has constraints. For instance, the latent seman-

tic analysis (LSA) techniqueis effective only for in-

formation retrieval tasks, while the probabilistic latent

semantic analysis (PLSA) is not suitable for the treat-

ment of documents that do not belong to the training

data, On the other hand, the linear discriminant anal-

ysis (LDA) uses a very large Bag of Words (BoW)

model.

In fact, several research studies proved the effi-

ciency of the LDA model in extraxting hidden topics.

For this reason, we propose the use of this model try-

ing to reduce the Bag of Words (BoW) model.

The Outcome: In this literature review, we have

presented some research studies that focus on the de-

scription of themes and topics based on film and tex-

tual documents. More particularly, an examination

of the textual documents revealed that the statisti-

cal model ”LDA” is the most adapted, the most rel-

evant and the most used for the extraction of topics

and themes through textual documents(Jelodar et al.,

2019) (Cheng and Hung, 2018).

Despite the shortcomings presented by some stud-

ies, we propose to adapt this model in order there are

still to improve the performance of the obtained re-

sults as well as to overcome the badly treated prob-

lems. In fact, our study of the filmic document re-

vealed that several recent studies have been interested

in this problem as they have focused on the use of dif-

ferent modalities for the extraction of subjects. More-

over, despite the importance of these studies some

shortcomings some of which are caused by the non-

use of use of semantic techniques. In fact, since the

key terms extracted through low-level characteristics

can not present the semantic reasoning of the content,

we then opted for a description method based on the

semantic analysis. Indeed, the model presented han-

dles the document as a bag of words which allows

obtaining a large number of sample data. Accord-

ingly, LDA does not explicitly model the relationship

between topics. In this work, we focus on topic ex-

traction by the correspondance process between a list

of topic and an ontology to overcome these problems

A Combination between Textual and Visual Modalities for Knowledge Extraction of Movie Documents

205

handled poorly.

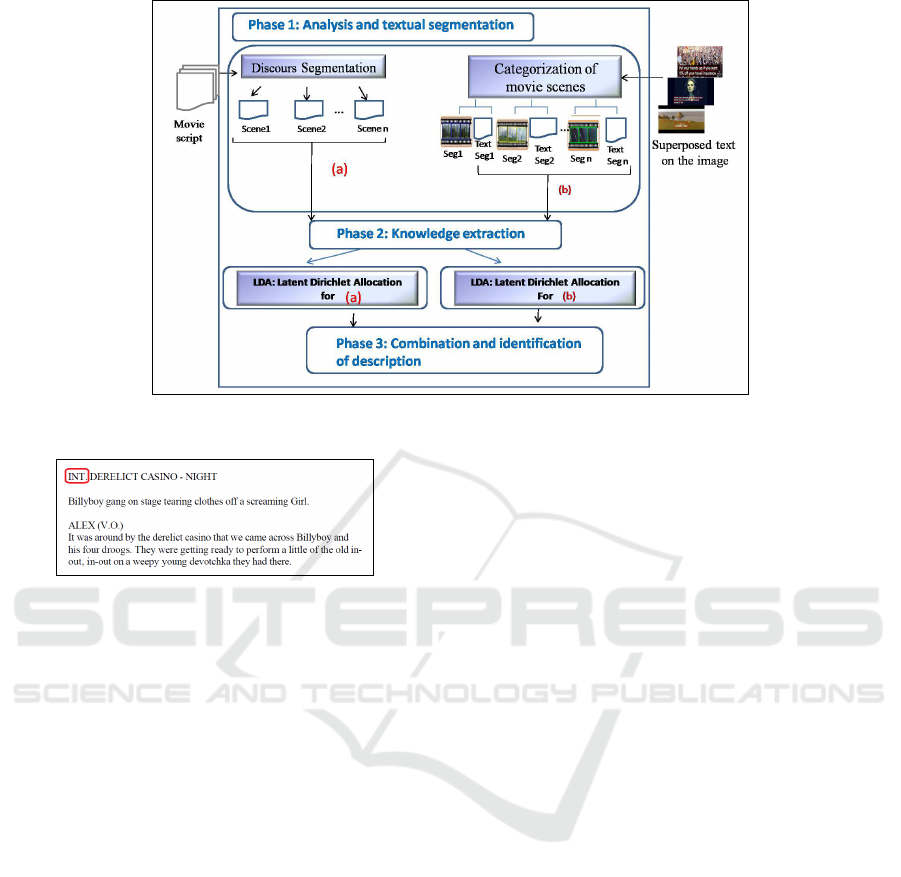

3 PROPOSED APPROACH

Due to the persistence of the dissatisfaction of the

users’ needs of the audiovisual documents and the

lack of reliability of the studies conducted on this

topic, an approach of knowledge extraction is pro-

posed in order to describe audiovisual documents.

Such a semantic and automatic description guaran-

tees the interrogation process of reaching a high-level

structure. We are interested in the description of

filmic audiovisual documents. The input of our ap-

proach are a movie script and a set of superposed

text on the image.The output are a set of filmic seg-

ments. Each sement is defined by a set of knowl-

edges. These knowledges are represented by semi-

otics descriptions ( Dominant theme, taxeme, Dis-

cours themes and specified themes) as is indiquated

in Figure 8. Therefore, we devote this section to pro-

vide an overview of our proposed approach which the

contribution finds its originality in:

• The extraction of knowledges from the content.

These knowledges are represented as semiotic de-

scriptions of different analytical modalities (tex-

tual and visual).

• The automation of the description process.

• The fusion between the modalities to improve the

semantic description of the film.

The objectif behind the extraction of this type of kg-

nowledge is is undoubtly a better interrogation of au-

diovisual document. In fact, the general principle of

our approach consists of three phases: i) the analy-

sis and textual segmentation phase ii) the knowledge

extraction phase and iii) the combination and identifi-

cation of description phase.

3.1 The Analysis and Textual

Segmentation

Knowing that the movie audiovisual document in-

volves mainly three necessary phases; a pre-

production phase, a production phase and a post-

production phase, we find it essential to exploit some

documentations used in these phases in order to ex-

tract the semantic description. In this paper, we

should be based on the documentation prepared in the

pre-production phase such as the script of the film

through a textual analysis. As this first analysis can

hide some realities linked to the content of the film

after production, we suggest analyzing the movie in

its post-production phase, using the visual modality.

A primordial phase in such a process consists of the

segmentation and the analysis of the used textual doc-

uments, namely, the scenario and the texts superposed

on the images.

On the other hand, given that the text in the au-

diovisual documents represents an important source

of description, we interest in (Fourati et al., 2015b)

to extract superposed texts from the images of audio-

visual documents through the visual modality. The

proposed approach comprises of two phases: a learn-

ing phase based on the neural network learning and

text detection phase. The latter consists of three steps:

a pre-treatment step consists in grouping adjacent re-

gions with the morphological operations, then the sec-

ond step consists in extracting the candidate text areas

and finally, the refinement step through a Sobel filter.

In the text detection phase, the steps previously cited,

are essentially preceded by a temporal segmentation

step to obtain the text localization. Once the text is

localized, we used the Optical Character Recognition

Tesseract (OCR) for the recognition of the superposed

text on the images. More precisely, we adopted the

impute of Tesseract (OCR) by our result of the pro-

cess text localization.

Besides, our aim is to take advantage of the dis-

course and the different knowledge included in the

script in order to extract the descriptions. Then,

once the inputs of our approach are obtained, we

propose an analysis of the discourse present in the

pre-production (the script) and the post-production

(the superposed text in the image) phases in order

to extract the semiotic description proposed by Peter

Stockinger (Stockinger, 2013).

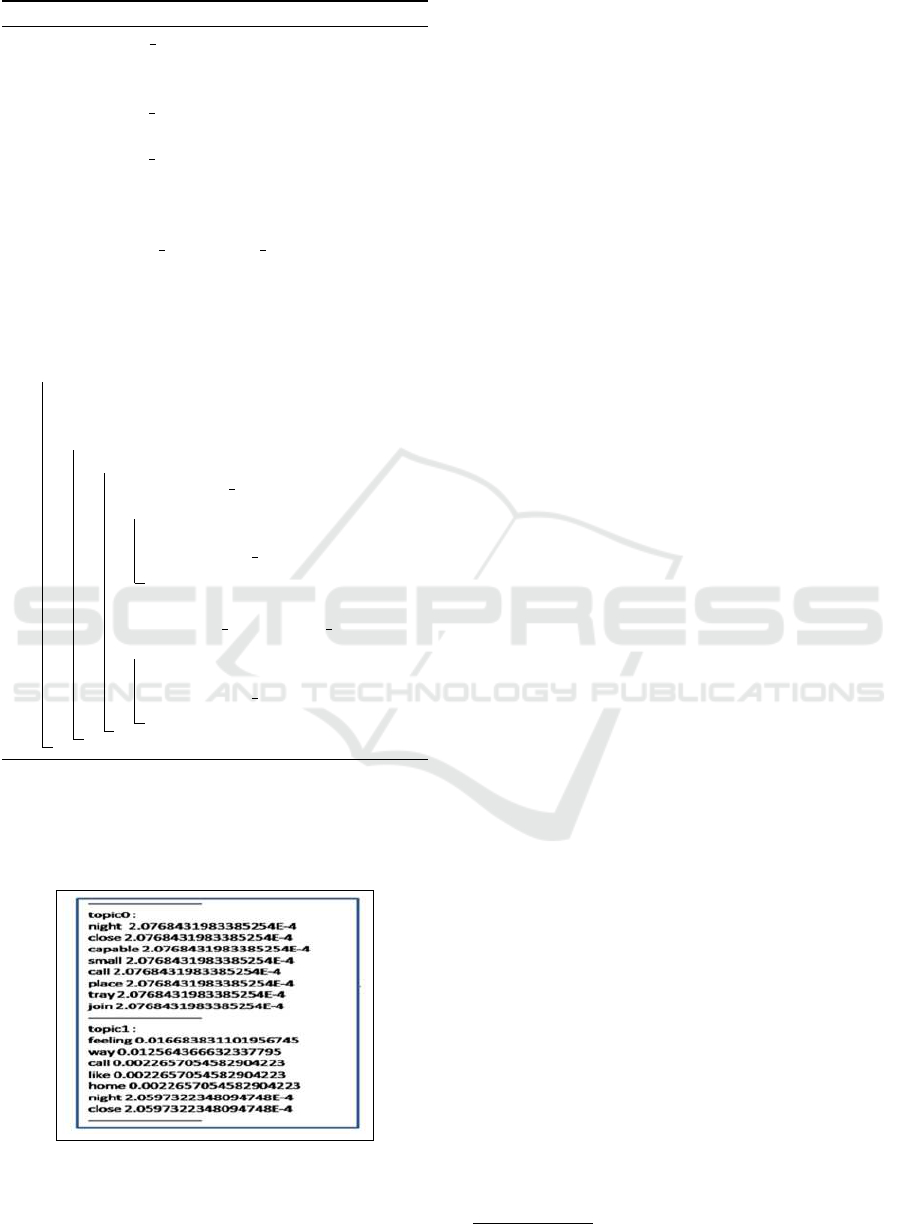

In order to segment the script, we are based on the

structure of the scripts writed written by the script-

writer of films. This structure follows a well-defined

model that presents each scene separately through a

”slug line”. In fact, this line starts by necessarily with

”.EXT”, ”.INT”, or ”scene1”, ”scene2”, etc. The fol-

lowing figure shows an example of a secene with a

slug line that starts with ”INT.”.Based on these lines,

we propose segmenting the script into speech units.

Once the speech units are obtained, we will cat-

egorize the movie scene in order to bring back the

textual segment of the superposed text segment. In

fact, we propose to measure the similarity between the

superposed texts of the images and the speech units.

Therefore the results achieved in this phase are:

• (A): A set of speech units that containt a discourse

in each scene.

• (B): A set of superposed texts in each movie seg-

ment.

These results were taken as starting points for our

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

206

Figure 1: The proposed approach.

Figure 2: Example of a scene with a slug line.

knowledge extraction phase, in which the semiotic de-

scriptions are extracted from the content of the movie.

3.2 The Knowledge Extraction

Based on the results achieved in the previous phases

(a) and (b), we propose in this phase to extract knowl-

edge separately from each result. Therefore, we find

it essential to identify both the theme and the topic.

Consequently, it is interesting to collect the obtained

ideas and knowledge from the state of the art to the

extraction of topic and themes. In fact, different re-

lated studies proved the performance of LDA prob-

abilistic technique for the extraction of topics from

textual documents. The principle of this probabilistic

technique is described in the two following steps:

1. Step1: A Preatreatment Step: In order to reduce

the number of the sample data, we proposed to

refine the given input text through the following

pretreatment step:

• The Removal of stop words :all insignificant

terms.

• The Lemmatization of all the terms through the

Stanford lemmatizer.

• The measurement of the pertinence of each

term: We propose to measure the relevance of

each term in the appropriate segment. We use

the metric TF-IDF (Term Frequency-Inverse

Document Frequency) as in the previous result

proposed by (Fourati et al., 2014) (in the genre

and key word extracted from the synopses). In

order to extend the scope of the terms, we mea-

sure the pertinence of the term with all its syn-

onyms and hyponyms of all genres, which are

extracted from the content defined by the Word-

net. In fact, the following algorithm presents

the steps to follow when measuring the perti-

nence of the terms.

2. Step2: Elicitation of Topics: The LDA (Blei

et al., 2003) represents a probabilistic technique

that enables to explore the hidden topic in the tex-

tual document with word distribution associated

with each topic and its pertinence value. In order

to apply this technique, a set of estimated param-

eters can be manually defined, such as, K, α and

β.

Hence, the general principle of the LDA model is

described as follows:

• K: Represents the number of topics. To select

this number, we start with the assumption that

a movie segment cannot present more than a

topic. In fact, we define k = n/2 with n repre-

sents the number of segment in the document.

• α: Represents the priori weight of topic k in a

document.

• β: Represents the priori weight of word w in a

topic.

In this phase, to apply the LDA method in each

result of the first phase separeatly (LDA with (a) and

A Combination between Textual and Visual Modalities for Knowledge Extraction of Movie Documents

207

Algorithm 1: Pertinence Measure.

Input : XML File: Represent the keywords

and the genre,

Keyword[l]:table of keyword,

Matr mot[n,m]: matrix represent all

segment and their term

Matr genre[gn,sh]: matrix

represent genre of document and synonym

and hypernyms

Output: Tabword =

word1 pert,word2 pert,etc,

Pert[m] : table o f pertinence,

n : number o f segments,

m : number o f terms et

l : number o f keywords

1 begin

2 k < −0

3 M < −Wordnetresult

4 for ( i ∈ [1..n] ) do

5 for ( j ∈ [1..m] ) do

6 if exist(Matr mot[i],Keyword) =

true then

7 Pert[k] := T F −

IDF(Matr mot[ j], Doc)

K := k + 1

8 else if

exist(Matr mot[i],Matr genre) =

true then

9 Pert[k] := T F −

IDF(Matr mot[ j], Doc)

K := k + 1

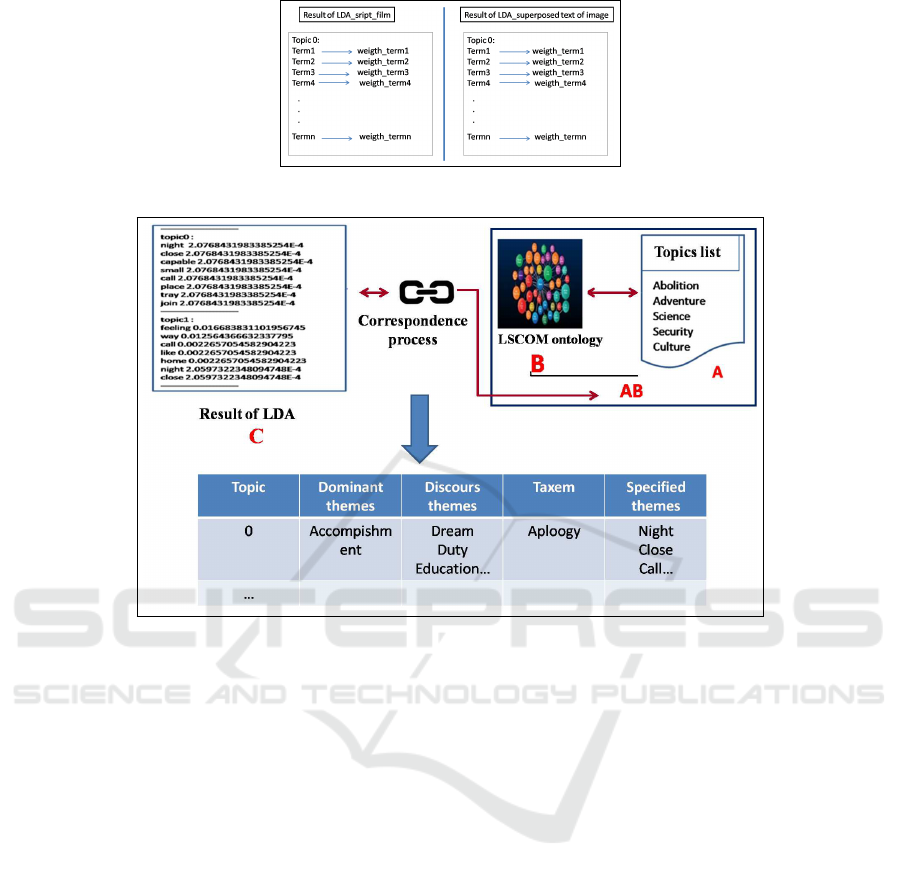

LDA with (b)). The result of this step helped obtain

a set of terms each of which represents a topic related

to the film document. Figure 3 shows a sample of the

result of the ”LDA” model.

Figure 3: Extract of the result of the LDA model.

As mentioned in Figure 3, the result of the adapted

”LDA” model shows discrete subjects including a set

of keywords. This set of keywords is considered as a

set of ”specified themes” each of which is segmented

to obtain a text from the audiovisual document. Fig-

ure 4 shows an example of the result achieved in the

previous phase.

3.3 The Combination and Identification

the Descriptions

Once knowledges are extracted from each ressource,

we focus in this phase not only on the combination

between the results obtained from each modality, but

also on the identification of the semiotic descriptions.

In fact, the herein phase includes essentially two pro-

cesses: the correspondance between the topic and the

theme and the modality combination and identifica-

tion of the semiotic descriptions.

3.3.1 Correspondance between the Topic and

the Theme

As previously mentionned, the obtained result with

the LDA technique is a set of abstract topics with-

out the indication of the semantic of each topic. In

fact, we chose to use a second technique for the iden-

tification of topics in each theme set and keywords.

In order to extract the possible semiotic descriptions,

we propose to correspond each considered topic to a

generic concept that refers to a topic of the movie. In

fact, we are interested in the extraction of the con-

cepts of the topic based on a correspondance process

between the result of the LDA of each imput and

a source of the semantic analysis. In this case, we

need a vocabulary of concepts in order to identify the

generic concept of a set of themes. Moreover, in this

respect, we propose the use of two sources of knowl-

edge as mentionned in Figure 8.

• (A): a theme list of proprietary and non-

exhaustive film themes collected from the Internet

(1000 themes).

• (B): The ontology LSCOM

2

(Large Scale Con-

cept ontologyMultimedia) (1000 concepts where

each concept is described by a definition). Be-

sides, the concepts are organized into six cate-

gories: objects, event activities, places, people,

graphics, and program categories.

It is on the basis of these sources of knowledge (A)

and (B)) as well as on our results of the ”LDA” model

( (C)) that we propose a solution for the definition

2

http ://www.ee.columbia.edu/ln/dvmm/lscom/

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

208

Figure 4: Example of the result of the knowledge extraction phase.

Figure 5: Correspondance process.

of the topic and the different semiotics descriptions.

Such a solution is based on two levels of correspon-

dence.

The first level is to match the list of (A) topics

with the ontology concepts and their definitions (B).

In fact, the main objective of this level is to enrich

the semantics related to each theme and etend the the-

matic concept field.

The second level consists in matching the first

level (AB) result with the ones of the LDA model (C).

We propose to measure the similarity between themes

/ concepts in order to discriminate the semantics be-

tween themes / concepts. In this respect, several

works of literature have demonstrated the importance

of the taxonomic measurement ”Wu and Palmer”

(Dascalu et al., 2013), (Harispe et al., 2014) (He et al.,

2016) to measure the similarity between terms and

concepts. Therefore, the most similar theme to our

results of the LDA model (C) represents the domi-

nant theme. In addition, each extracted theme having

a similarity between the result of ’C’ and that of (AB)

different from zero is considered as a theme of the

discourse.

According to Stockinger (Stockinger, 2003), the

taxeme represents the relevant theme of the discourse

in the topic. To this end, referring to the dominant

theme, we propose to measure the ”Wu-Palmer’s”

similarity between the dominant theme and the dif-

ferent themes of discors. As a result, the themes of

the discourse which have the maximum value repre-

senting the taxeme.

After identifying the concepts related to each set

of themes; two cases are then possible:

• If the set of themes is represented by a single con-

cept ’ci’, we consider ’ci’ as a relevant concept,

in other words as a relevant topic for the film. We

then define ’C’ as a set of the different relevant

concepts with C = ’ci’.

• If the list of themes is represented by different

concepts, we notice a disambiguation process.

Our process is based on the following assumption

”an audiovisual segment can not cover more than

one subject.” To derive the relevant concept from

each segment, we apply the following rule:” if a

set of themes is represented by different concepts,

we measure the similarity between these concepts

and all of the relevant concepts ’C’.

In order to measure the similarity between con-

cepts, we use the measure ” Wu and Palmer ” (Chabi

A Combination between Textual and Visual Modalities for Knowledge Extraction of Movie Documents

209

et al., 2011). The process of the thematic description

allows the extraction of semiotic descriptions in a se-

mantic way from the content of the filmic documents.

Such a semantic description based on the information

extracted from the content, becomes a necessary con-

dition for a better interrogation. Figure 8 shows an ex-

ample of the result of the correspondance process in

order to extract the following semiotic descriptions:

Dominant theme, themes of discourse, taxeme and

specified themes.

This correspondance process is applied firstly with

the result of LDA for the script of the film and sec-

ondly with the result of LDA for the superposed text

of the image. Futhemore, based on these obtained re-

sults, we move now to identify the appropriate generic

semiotic description for the movie document.

3.3.2 Modalities Combination and Identification

of Semiotic Descriptions

The results of the previous process (the relevance

measure of each detected topic and the correspon-

dance between topic and theme) should be considered

as a starting point for combining the used modalities

and in order to identify the semiotic descriptions re-

lated to the movie. Figure 6 shows a summary of the

result of the previous process.

As a summary, we should recall that we obtained

from each source a set of topics each of which is rep-

resented by:

• A dominant theme: Dominant theme i

• A set of discourse themes: discourse theme0, dis-

cors theme1,...

• A taxeme

• A set of specified themes: sp0,sp1,...

On the other hand, the goal behind modality combi-

nation and the obtained results is undoubtly a better

description extracted from the movie content. Such

a phase is composed of the following two steps: (1)

identification of semiotic descriptions and (2) seg-

ment structuring.

1. Identification of Semiotic Descriptions: In or-

der to identify the semiotic description, two cases

are therefore possible:

• Case1: If dominant theme i of topic i from the

script and the superposed text of the image are

identical then segment i is represented by:

seg

i={Dominant theme i,

{discors theme}={discors theme from the

script ∪ discors theme from the script},

{specified theme}={ { specified theme from

the script ∪ specified theme from the super-

posed text of the image}, taxeme= the taxeme

that represents the maximum value from the

”Wu-Palmer” similarity. } }

• Case2: If dominant theme i of topic i from the

script and the superposed text of the image are

different, therefore, we propose to measure the

pertinence value of the dominant theme. To

measure this pertinence, we the dominant fo-

cus on the relevence of each discourse theme,

which is based on the result value from the

”Wu-Palmer” similarity. We consider that:

Ps i= Wu-Palmer similarity(discourse theme i,

Dominant theme i of Topic i from the script)

Pt i= Wu-Palmer similarity(discourse theme

i, Dominant theme i of Topic i from the

superposed text of the image)

In order to measure the pertinence value of each

discourse theme two cases are then possible:

– (i): If discourse theme i exists in topic i from

the script and Topic i from the superposed text

of the image then :

pertinence discors theme i = max(Pti,Psi)

(1)

We consider that discourse theme i has the

highest relevance value as Dominant theme i.

Furthemore, segment i is represented by:

seg i={Dominant theme i= discourse theme

that has the highest relevance value,

{discourse theme}={discourse theme from

the script ∩ discourse theme from the script},

{specified theme}={ { specified theme

from the script ∩ specified theme from the

superposed text of the image}, taxeme= the

discourse theme that represent the second

value from the discourse theme pertinence . }

}

– (ii): If there is no common discourse theme in

topic i from the script and topic i from the su-

perposed text of the image then we consider

that this segment maybe modified in the pro-

duction phase of the film. The segment is rep-

resented by the diff

´

erent semiotic description

extracted from the the superposed text of the

image.

2. Segment Structuring: The result of the iden-

tification of the semiotic description phase is a

set of segments ”S” with S={Segi} and Segi

={Start, end, Dominant theme i, discors theme},

{specified theme}, taxeme.

The process used in this phase helps explain the

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

210

Figure 6: Summary of results.

relationship between different semiotic descrip-

tions. In this phase, our purpose is to structure

segment movie documents based on the descrip-

tion that represents a semantic relationship. Such

a semantic segmentation , which is related essen-

tially to the description extracted from the con-

tent of the document, becomes a necessary con-

dition for linking the document content and de-

scription. In fact, we intend to segment and struc-

ture movie documents through the topics and the

theme. To do this, two types of segmentations,

such as, segmentation based topic and segmen-

tation based theme are highlight. The extracted

structure is presented in Figure 7 as follows.

4 EXPERIMENTATION

Once the semiotic descriptions of the film content

have been automatically obtained, an experimentation

process will take place to evaluate the results of the

proposed approach. Faced with the absence of simi-

lar film methods in the literature to extract the same

semiotic descriptions, we proposed in (Fourati et al.,

2015a) a semi-automatic semiotic description method

to experiment our work. This method is based on the

manual annotation.

This section presents the different results of exper-

iments conducted to evaluate our approach of extract-

ing thematic semiotic descriptions. Such a process is

based on the extraction of dominant themes, discoures

themes, taxeme and specified themes. Two series of

experiments are carried out. We therefore concentrate

our efforts on the study of the results of the detection

of semiotic descriptions through a comaprison of our

results with human’s judgments. Subsequently, the

second series is designed to compare our automatic

method to the semi-automatic one. We use the Grou-

pLens

3

database which will be described in the next

subsection.

3

http ://grouplens.org/datasets/

4.1 Data Set

In order to carry out our experimental study, we used

the GroupLens dataset. The GroupLens: It is an on-

line movie database which contains basically three

sub-databases: a movie database named ”Movie-

Lens”, a user database and a rating database. In our

work, we are interested in the sub-database ”Movie-

Lens”. Indeed, the latter consists of 10731 films that

consider 18 film genres.

4.2 Validation Technique

To evaluate the performance of our solution for the

different experiments, we use the following perfor-

mance measures: Recall, Precision and F-measure.

The adapted formulas are:

Recall =

T p

T p + Fn

(2)

Precision =

T p

T p + Fn

(3)

F measure = 2 ∗

Recall ∗ Precision

Recall + precision

(4)

With:

• Tp represent the number Of similar descriptions

identified from our result and the expert result.

• Fp represent the number Of descriptions not iden-

tified from our result and identified from the ex-

pert.

• Fn represent the number Of descriptions not iden-

tified from our result and not identified by the ex-

pert.

Then: Tp+Fn represents the total number of pertinent

descriptions and Tp+Fp represents the total number

of detected descriptions.

A Combination between Textual and Visual Modalities for Knowledge Extraction of Movie Documents

211

Figure 7: Movie Structuring.

Figure 8: Result system.

4.3 Analysis of the Results

Our focus in this series of experiments on the analysis

and the validation of our proposed approach with re-

sults defined by an human’s judgment. The human’s

judgments are provided by a domain expert in multi-

media analysis. The expert analyzes the audiovisual

segments presented in the movie script and based on

the list of themes used in our work he gives his opin-

ion. Thereafter, for each film, we compare the dis-

course theme description extracted from our system

with the result proposed by an expert using the same

movies indicated in the ’MovieLens’ dataset. Table 1

shows an example of the obtained results.

By comparing our system results to the ones de-

fined by a human’s judgment, we find that they are

satisfactory. In fact, we notice that descriptions are

almost similar. Moreover, using the validation tech-

nique, we obtained a recall value equal to 88.90%, a

precision value equal to 93.04% and an F-measures

equal to 90.92 %. These results allow us to conclude

that the experimental results are interesting.

4.4 Comparison between our Proposals:

Automatic and Semi-automatic

Approach

Faced with the absence of similar research studies in

the literature, we proposed in (Fourati et al., 2015a)

a semi-automatic method based on the manual anno-

tation to extract the same semiotic descriptions ex-

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

212

Table 1: Example of the annotation system result.

Segments start time Our System

Result

Expert Result

’Day of the dead’

S1 00:00’ y sanctuar sanctuar

S2 01:12’ entitlement action

S15 100:00:00’ euthanasia euthanasia

S19 118:01:00’ gay gay

S21 138:00:00’ accomplishment accomplishment

’The twilght’

S2 06:03 Lycia Lycia

S3 09:09’ danger danger

S6 15:14’ liberty liberty

S14 44:10’ Emotion Emotion

S17 117:00’ jazz information

’The promised’

S3 15:57’ information recognition

S4 20:24’ amazons amazons

S6 33:52’ duty duty

S9 56:41’ lost lost

S10 103:00:00’ discovered discovered

Table 2: Evaluation measure for comparison between an

automatic and a semi-automatic approach.

Automatic semi-automatic

Recall 88.90 % 90.95%

Precision 93.04% 95.72%

F-measure 90.92 % 93.27%

tracted of our approch. After having evaluated this

method by comparing the results with those of a do-

main expert, we present in this section the result of

our experiments. In order to compare the results

of the automatic approach with the semi-automatic

method, we use the ”MovieLens” dataset. Table 2

shows the results obtained by adopting the evalua-

tion techniques more precisely, the : Recall, Precision

and F-measures. The results provided for the thematic

semiotic descriptions of the filmic documents are sat-

isfactory. Indeed, we managed to segment the audio-

visual documents in a thematic way.

5 CONCLUSION AND

PERSPECTIVES

As part of the description of the content of audiovisual

documents, we proposed an approach that follows a

thematic process through different semiotic descrip-

tions.

Recall that among the desirable criteria of a

mechanism of describing audiovisual documents of a

”film” nature is the integration of one or more modal-

ities. As a result, it is important to extract descrip-

tions from pre-production and post-production filmic

documents. Indeed, we proposed in this paper an ap-

proach of description of the filmic documents which

takes into consideration the various descriptions cited

throughout this paper by offering a thematic descrip-

tion approach based essentially on an automatic ap-

proach. The input of our approach are a movie script

and a set of superposed text on the image.The output

are a set of filmic segments. Each sement is defined

by a set of knowledges. These knowledges are rep-

resented by semiotics descriptions ( Dominant theme,

taxeme, Discours themes and specified themes) This

approach is based on the use of the statistical model

”LDA” combined with a semantic technique.

In this paper, two series of experiments are dis-

cussed. The first series focuses on the compari-

son between our system results and those defined

by an expert. In the second series, we proposed a

comparison between the results of our automatic ap-

proach and our previously proposed semi-automatic

approach (Fourati et al., 2015a), which enabled us to

have more satisfactory results.

Finally, encouraged by our achieved results, we

propose in our future research studies to combine the

results of the two proposed approaches by combining

different sources of information related to the content.

REFERENCES

Atkinson, J., Gonzalez, A., Munoz, M., and Astudillo, H.

(2014). Web metadata extraction and semantic index-

ing for learning objects extraction. Applied Intelli-

gence, 41(2):649–664.

Bellegarda, J. R. (1997). A latent semantic analysis frame-

A Combination between Textual and Visual Modalities for Knowledge Extraction of Movie Documents

213

work for large-span language modeling. In EU-

ROSPEECH.

Blei, D. M., Ng, A. Y., and Jordan, M. I. (2003). Latent

dirichlet allocation. the Journal of machine Learning

research, 3:993–1022.

Bougiatiotis, K. and Giannakopoulos, T. (2016). Content

representation and similarity of movies based on topic

extraction from subtitles. In Proceedings of the 9th

Hellenic Conference on Artificial Intelligence, pages

1–7. ACM.

Chabi, A. H., Kboubi, F., and Ahmed, M. B. (2011). The-

matic analysis and visualization of textual corpus.

arXiv preprint arXiv:1112.2071, 2:1–16.

Cheng, C.-H. and Hung, W.-L. (2018). Tea in benefits of

health: A literature analysis using text mining and la-

tent dirichlet allocation. In Proceedings of the 2nd

International Conference on Medical and Health In-

formatics, pages 148–155. ACM.

Dascalu, M., Dessus, P., Trausan-Matu, s., Bianco, M., and

Nardy, A. (2013). Readerbench, an environment for

analyzing text complexity and reading strategies. In

Artificial Intelligence in Education, pages 379–388.

Springer.

Fourati, M., Chaari, A., Jedidi, A., and Gargouri, F. (2015a).

A semiotic semi-automatic annotation for movie au-

diovisual document. In 15th International Confer-

ence on Intelligent Systems Design and Applications

(ISDA) 2015, pages 533–539. IEEE.

Fourati, M., Jedidi, A., and Gargouri, F. (2014). Automatic

audiovisual documents genre description. In 6th Inter-

national joint conference on knowledge discovery and

informationretrieval (KDIR 2014), Rome, Italy, pages

21–24.

Fourati, M., Jedidi, A., Hassin, H. B., and Gargouri, F.

(2015b). Towards fusion of textual and visual modal-

ities for describing audiovisual documents. Interna-

tional Journal of Multimedia Data Engineering and

Management (IJMDEM), 6(2):52–70.

Harispe, S., Senchez, D., Ranwez, S., Janaqi, S., and Mont-

main, J. (2014). A framework for unifying ontology-

based semantic similarity measures: A study in the

biomedical domain. Journal of biomedical informat-

ics, 48:38–53.

He, Y., Li, Y., Lei, J., and Leung, C. (2016). A framework of

query expansion for image retrieval based on knowl-

edge base and concept similarity. Neurocomputing,

-:Inpress.

Hofmann, T. (1999). Probabilistic latent semantic index-

ing. In Proceedings of the 22nd annual international

ACM SIGIR conference on Research and development

in information retrieval, pages 50–57. ACM.

Jelodar, H., Wang, Y., Yuan, C., Feng, X., Jiang, X., Li, Y.,

and Zhao, L. (2019). Latent dirichlet allocation (lda)

and topic modeling: models, applications, a survey.

Multimedia Tools and Applications, 78(11):15169–

15211.

Kurzhals, K., John, M., Heimerl, F., Kuznecov, P., and

Weiskopf, D. (2016). Visual movie analytics. IEEE

Transactions on Multimedia, 18(11):2149–2160.

Martin, J. P. (2005). Description semiotique de contenus

audiovisuels. PhD thesis, Paris 11.

Mocanu, B., Tapu, R., and Tapu, E. (2016). Video retrieval

using relevant topics extraction from movie subtitles.

In 12th IEEE International Symposium on Electronics

and Telecommunications (ISETC), 2016, pages 327–

330. IEEE.

Rahangdale, A. and Agrawal, A. (2014). Information ex-

traction using discourse analysis from newswires. In-

ternational Journal of Information Technology Con-

vergence and Services, 4(3):21.

Sanchez-Nielsen, E., Chavez-Gutierrez, F., and Lorenzo-

Navarro, J. (2019). A semantic parliamentary mul-

timedia approach for retrieval of video clips with con-

tent understanding. Multimedia Systems, pages 1–18.

Stockinger, P. (2003). Le document audiovisuel: procedures

de description et exploitation. Hermes.

Stockinger, P. (2011). Les archives audiovisuelles: descrip-

tion, indexation et publication. Lavoisier.

Stockinger, P. (2013). Audiovisual Archives: Digital Text

and Discourse Analysis. John Wiley Sons.

Tang, P., Wang, C., Wang, X., Liu, W., Zeng, W., and Wang,

J. (2019). Object detection in videos by high quality

object linking. IEEE transactions on pattern analysis

and machine intelligence.

Tapu, R. and Zaharia, T. (2011). High level video temporal

segmentation. In International Symposium on Visual

Computing, pages 224–235. Springer.

KEOD 2019 - 11th International Conference on Knowledge Engineering and Ontology Development

214