Universal Encoding for Provably Irreversible Data Erasing

Marek Klonowski

1

, Tomasz Strumi

´

nski

2

and Małgorzata Sulkowska

1

1

Faculty of Fundamental Problems of Technology, Department of Computer Science,

Wrocław University of Science and Technology, Poland

2

BrightIT, Wrocław, Poland

Keywords:

Data Deletion, Provable Security, Formal Analysis.

Abstract:

One of the most important assumptions in computer security research is that one can permanently delete some

data in such a way that no party can retrieve it. In real-life systems this postulate is realized dependently on

the specific device used for storing data. In some cases (e.g., magnetic discs) the deletion/erasing is done

by overwriting the data to be erased by new one. Many evidence suggest that such procedure may be not

sufficient and the attacker armed with advanced microscopic technology is capable in many cases of retrieving

data overwritten even many times. In this paper we present a method that provides provable, permanent and

irreversible deletion of stored bits based solely on special encoding and processing of data. More precisely the

adversary learns nothing about deleted data whp. The security guarantees hold even if the attacker is capable

of getting bit-strings overwritten many times. Moreover, in contrast to some previous research, we do not

restrict type of data to be deleted.

1 INTRODUCTION

In many security related research it is often (silently)

assumed that any data can be deleted on demand. That

is, one can perform an action such that locally stored

data is instantly removed and no one can learn it any-

more. In many systems such deletion is in fact real-

ized in a way that some pointer representing physical

region of memory is marked as ready for being re-

used. Clearly, data deleted in such a way can be eas-

ily accessed using a special software as long as the re-

spective memory region has not been used yet. Thus,

more aware users aiming at irreversible deletion write

some (possibly) meaningless data on the critical re-

gion.

In the case of magnetic discs it has been quickly

noticed that overwriting the memory to be deleted

only once may be not sufficient, since the adversary

having access to more advanced techniques (mainly

microscopic) is capable of retrieving the original data

((Gomez R. D. et al., 1993; Gutmann Peter, 1996;

Hughes G. et al., 2009; Mayergoyz I. D. et al., 2001)).

As a consequence some more elaborated methods of

removing data has been suggested in (Gutmann Pe-

ter, 1996). Bulk of them are heuristics based on the

idea of overwriting region of the memory using a spe-

cial, usually alternating, patterns. The presented ideas

seem to be convincing and moreover in some cases

authors present some experimental examples show-

ing that after applying their methods retrieving data

is not possible given more or less advanced tech-

niques. However there is no formal proof that such

approach really works. Moreover, judging by secu-

rity discussions in (Gutmann Peter, 1996; Hughes G.

et al., 2009; US Department of Defense, 1997; U.S.

National Institute of Standards and Technology, 2006)

we cannot be sure what is the minimal sufficient num-

ber of layers overwriting the original one to assure ir-

reversibility of deletion.

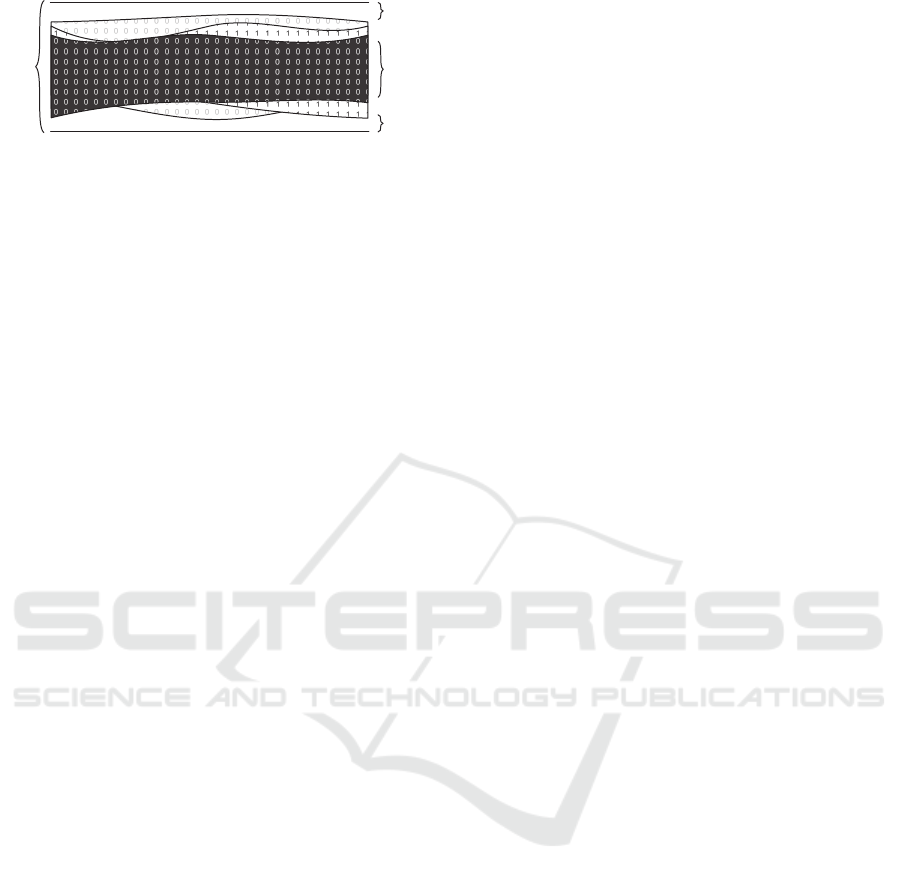

To explain the nature of the problem let us recall

how data (or a single bit) is represented on the mag-

netic disc. For contemporary discs the width of the

path representing consecutive bits is approximately

150 − 200 nm, moreover, there are separating guard-

bands of width of 20 nm. The overwritten data can

be revealed because the new bit is not written to the

same physical location as the previous one. That is,

the physical mark representing a particular bit can be

slightly moved from its expected position whenever

we overwrite an old bit. The overview of this situation

is presented in Figure 1. This enables the adversary to

get in some cases the former, overwritten bit if only

he has access to a sufficiently sensitive device.

The physical mark has to be placed on a given po-

Klonowski, M., Strumi

´

nski, T. and Sulkowska, M.

Universal Encoding for Provably Irreversible Data Erasing.

DOI: 10.5220/0007922101370148

In Proceedings of the 16th International Joint Conference on e-Business and Telecommunications (ICETE 2019), pages 137-148

ISBN: 978-989-758-378-0

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

137

guardband

guardband

track

lastwrittenbit

Figure 1: Picture of a magnetic disc: black path represents

the current data (bit 0), while the white/grey the previous,

overwritten data 1 and 0.

sition in an extremely short time - therefore some in-

accuracies appear. This leads to possibility of retriev-

ing some data using technologies like MFM (mag-

netic force microscopy) that is an advanced form of

SPM (scanning probe microscopy, SPM) and makes

it possible to localize even extremely small magne-

tized scrap of the disc. As pointed in (Gomez R. D.

et al., 1993; Rugar D. et al., 1990) in many real-life

examples MFM is enough to read the data with suf-

ficient accuracy. There are even more sophisticated

methods like magnetic force scanning tunneling mi-

croscopy, MTM and spin-stand imaging (Gutmann

Peter, 1996). Moreover one can expect that in fore-

seeable future the adversary may have access to even

stronger diagnostic devices and use it against today’s

storage devices.

To sum up,

• one cannot be sure if someone could try to retrieve

deleted data from today’s devices in the future;

• due to dynamic progress in magnetic microscopy

techniques one cannot predict what devices can be

used to retrieve data in the future.

This does not mean that every single “overwrit-

ten” bit can be retrieved after arbitrary long time. It

means however that some bits can be retrieved after

long, unpredictable time and there is still a risk of un-

wanted and unexpected information leakage. Let us

also stress that if a single bit can be retrieved then it is

more likely that the neighboring bits can be retrieved

as well. Indeed, one may suspect that inaccuracies

in positioning of magnetic heads for close places can

be correlated. For that reason it is possible that the

adversary could get a meaningful part of contiguous

bits instead of an isolated (and probably useless) bits.

For these reasons the only fully reliable method sug-

gested at hand is the physical destruction of the (mag-

netic) device. Destroying the devices is not accept-

able, however, in most of areas of application.

Considered problem may be also solved using

disk encryption. One can always write sensitive data

in an encrypted form to the disk and store the appro-

priate key on, say, cryptographic chip. However, in

our approach we want to assume that no additional

devices (like chips) are necessary. We suggest an ap-

proach to data deletion based solely on a special data

encoding that guarantees provable security even in the

presence of an adversary capable of recognizing all

physical marks (i.e, all history) that were written in a

given place of the disc in the past. Note that it is a

very strong assumption - indeed, the adversary may

learn the data overwritten arbitrary number of times.

At first glance it seems that one cannot hide any infor-

mation from such a strong adversary. We show that it

is not true if we apply a special encoding and deletion

procedures.

The proposed methods is not restricted to mag-

netic discs and can be applied to different types of

data storage devices. Namely our analysis of secu-

rity is valid as long as some requirements described in

Section 2.1 are fulfilled. That is, our method is based

on a modified data representation/organisation and

potentially can be applied even in a future-generation

devices. In the case of wide class of standard devices

just by replacing the firmware.

On the downside our method leads to moderate

space overhead. More precisely the same device using

new encoding can represent less bits (say, two orders

of magnitude) when compared to the standard way

of using this device. Moreover the data processing

(writing and reading stored bits) may be one order of

magnitude slower. Let us also note that in some pub-

lications authors claim that the investigated concern

is overblown. Namely, they suggest that in recently

produced (extremely dense) magnetic data storage de-

vices data overwritten even once cannot be retrieved

in practice ( Gutmann P., 1996).

In summary, our method is rather not intended for

deleting all possible data. We imagine that the pro-

posed technique can be useful in the systems wherein

particularly sensitive data is processed but the users

can accept a longer processing time and loss of some

space. At this price we get a method of removing

the data that is provably secure even in the presence

of a very strong adversary. That is, we imagine that

the presented methods can be used for example for

data that needs to be particularly secured (e.g., cryp-

tographic material - including secret keys or seeds for

pseudo random number generators; data bases with

sensitive personal information; descriptions of new,

original industrial technologies etc.).

1.1 Related Work

There are many papers related to our results. In

(Gomez R. D. et al., 1993; Gutmann Peter, 1996;

Hughes G. et al., 2009; Mayergoyz I. D. et al., 2001)

one can find information about microscopic tech-

niques and properties of magnetic discs in the context

SECRYPT 2019 - 16th International Conference on Security and Cryptography

138

of data retrieval by a physical inspection.

Some practical methods of permanent data dele-

tion can be found in papers (Gutmann Peter, 1996;

Hughes G. et al., 2009; US Department of Defense,

1997; U.S. National Institute of Standards and Tech-

nology, 2006). All that papers assume overwriting the

original data several times using different patterns.

The only papers presenting formal analysis of data

deletion security we are aware of, are (Klonowski

et al., 2008) and (Klonowski et al., 2009) wherein

the encoding of the type presented below was intro-

duced (note however that the deletion method is sub-

stantially different). Those papers do not present fully

formal analysis and are limited to deletion of the data

from a very narrow type of distribution in contrast

to this paper wherein we present universal methods

that can be used for arbitrary types of data. More-

over some of our methods are much faster while some

other require optimal number of operations for getting

demanded level of security. In the mentioned papers

also a very strong adversary was considered, however

the analysis was completely different. Namely the se-

curity was considered only for hiding purely random

bit-string (that can be a good model of cryptographic

material including seeds for pseudo-random bits or

secret keys).

In the current paper we analyze security of wiping

of much wider classes of bit-strings to be securely re-

moved (including the case of data to be deleted from

an arbitrary source). We also use a different secu-

rity measure that seem to be more adequate for real-

life systems based on differential privacy notion in-

troduced by Dwork et al. in (Dwork et al., 2006a;

Dwork, 2006). Using these definitions gives us strong

security guarantees and repels so-called linkage at-

tacks as well as some other useful properties including

immunity against data post-processing (see e.g (Cyn-

thia Dwork and Aaron Roth, 2013)).

Let us note that there is a well-developed body of

recent papers about efficient data deletion that can be

seen as somehow related results. They are assuming,

however, substantially different models of adversary’s

acting and data storing methods.

Many recent papers discuss the problem of data

deletion in a distributed system (especially in clouds),

wherein each piece of data can be stored in several

copies and may be processed by various entities (e.g.

(Hur J. et al., 2017; Wegberg G. et al., 2017; Ali M.

et al., 2017; Bacis E. et al., 2016)). There are also

significant recent results about publicly verifiable data

deletion in multi-user systems (Yang C. et al., 2018;

Ali M. et al., 2017; Hao F. et al., 2016). In contrast

to our approach, all that paper are mainly based on

cryptographic techniques and assume that the users

have access to secure devices, such that the adversary

has no access to overwritten data.

Let us stress that the considered problem is sub-

stantially different than ORAM (Oblivious Ram) in-

troduced in (Goldreich and Ostrovsky, 1996) which

aims at obscuring the operations being performed

(read/write) in the past. This problem is orthogonal

to our considerations. That is, each ORAM we are

aware of, assumes that the adversary has access to

the current state of memory, only. The same refers

to the recent ORAM-related papers discussing dis-

tributed deletion (e.g. (Roche et al., 2016).

Finally let us mention also some effort in con-

structing devices to allowing mitigation of retrieving

deleted data or other unexpected information leakages

((Jia et al., 2016; Moritz C.A. et al., 2015)) . The pre-

sented method is nevertheless orthogonal to the ap-

proach presented in our paper, in particular, do not

assume such a strong adversary.

While preparing this paper we were inspired by

two other papers not related directly to data deletion

- the first one is (Rivest and Shamir, 1982) wherein

authors present how a write-once memory (the state

representing bit 0 can be changed into bit 1 but the in-

verse operation is not feasible) can be to some extent

re-used by using a special encoding. In paper (Moran

T. et al., 2009) authors present deterministic method

of storing results of voting preserving privacy of indi-

vidual voters even in the presence of extremely strong

adversary.

1.2 Organization of this Paper

In Section 2 we present principles of a special encod-

ing extensively used later. After that we present and

justify the assumed mathematical model of the data

storage device. Introducing such model allows us to

abstract from all physical properties in the security

analysis. Finally, we briefly describe how deletion

methods work. In Section 3 we begin with analysis

of our deletion methods for different types of data. In

Section 4 we present and analyze a modified protocol

with very fast (optimal) execution time. In Section 5

we conclude and present some future work.

2 MODEL AND ENCODING

In this section we describe our methods - we refer to

magnetic discs however it can be also used for any

other data storing devices as long as they meet as-

sumptions of the presented model. First, let us assume

that every piece of the disc can be marked with one of

Universal Encoding for Provably Irreversible Data Erasing

139

two states representing 0 or 1. We assume the follow-

ing four-phase life cycle of a data storage device.

Preliminary Phase - this phase covers all actions

(marking initial states representing 0 or 1) per-

formed before the storage device is given to the

user for storing data; this phase can be performed

by regular user or even the manufacturer.

Regular Usage Phase - we assume that in this phase

the user is able to write (i.e. re-use the space)

an arbitrary number of times, always is able to

read the data written last time and knows an upper

bound for the number of changes she introduced.

Deletion Phase - in a given moment the user is asked

to perform some actions to make reading the disc

impossible.

Adversarial Inspection Phase - the adversary is

given the disc and techniques to correctly say

what states (0 or 1) have been marked in each

piece of the disc. For example in the case of the

disc depicted in Figure 1 the adversary can learn

that the state represents 0 overwriting previous

state 1. Moreover the adversary sees that the

previous state 1 covers the initial state 0. Clearly,

such model is extremely strong.

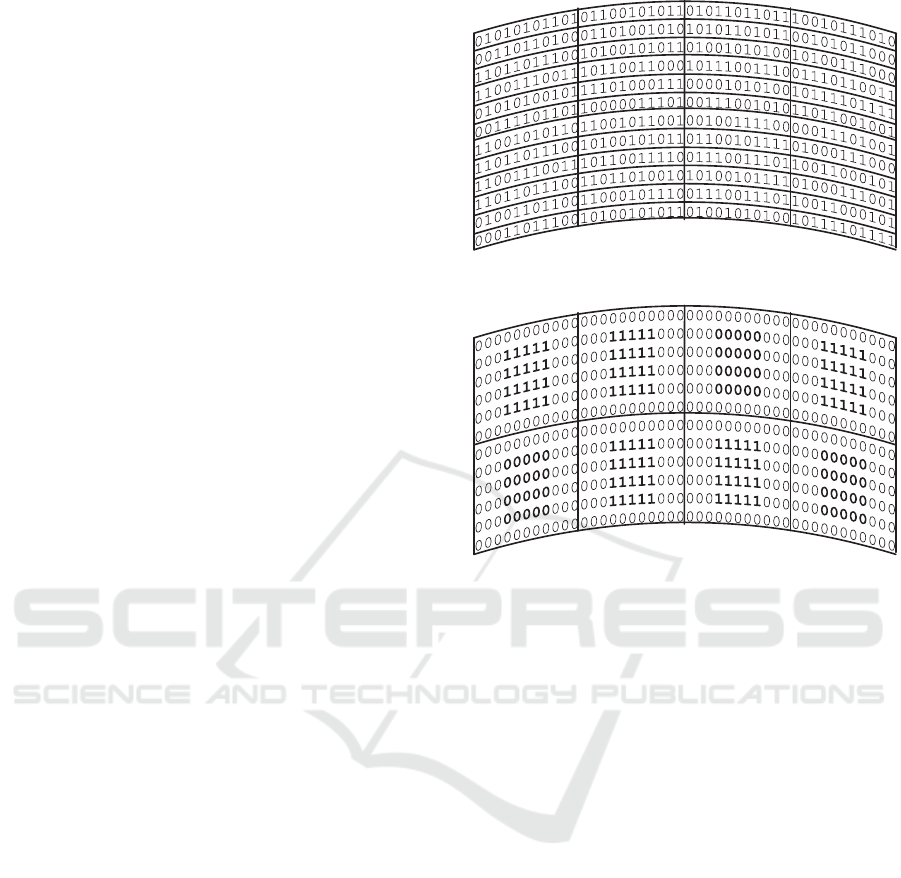

2.1 Special Data Representation

The idea of coding is based on dividing physical space

of a magnetic disk into rectangle-like areas that we

call boxes. Depending on the implementation, each

box may contain the space of several to several dozens

of regular bits. In our coding each box represents a

single logical bit in the new encoding. Each box is

divided into two subspaces. The first, inner one, rep-

resents the value of the box (which is 0 or 1) and the

outer, surrounding the inner one, plays the role of a

border and always consists of the state representing

0. This idea is depicted in Figure 3 and compared to

regular encoding in Figure 2. A box that in our cod-

ing is represented by 1, has got the state representing

1 in the inner part. Analogically a box representing 0

has 0 state in the inner part. Since outer part of the

box has always got only 0s, then box representing 0

contains only the state representing 0. To avoid ambi-

guity of notation we shall call a box that represents 0

a 0-box and a box representing 1 a 1-box.

To change the value of a bit one just checks the

value of a box and changes the inner part. We assume

that in each box the adversary can learn the changes

of the value of the box (i.e. state of the inner part) but

cannot learn anything about one box from another

boxes. This separation property is guaranteed by suf-

ficiently large separation area. That is, we assume that

Figure 2: Physical representation of standard layout.

Figure 3: The same disc in the new encoding. It is divided

into boxes representing bits 1,1,0,1 (upper row), 0, 1, 1, 0

(lower row).

even maximal feasible misalignment during changing

the state of one box that may occur does not influence

other boxes. This is the only assumption related to

the physical nature of the device. The rest is based on

special encoding.

2.2 Encoding

Each box represents a single logical bit. There are two

rules of encoding:

• at the beginning all boxes are set to 0;

• the state of the box is changed only from 0 to 1 or

from 1 to 0.

The second rule implies that the algorithm before

storing new bit has to check if such operation is nec-

essary. If the current state of a given box is the same

as the bit to be stored the algorithm does nothing.

All algorithms in this model work as follows. In

the Preliminary Phase the new disc representing no

information is filled with 0-boxes. Then possibly each

box can be replaced some number of times from 0 to 1

and from 1 to 0 a number of times. Note that each box

can experience a different number of such changes.

In Regular Usage Phase the user changes states of

boxes to represent the data she needs to store. Read-

SECRYPT 2019 - 16th International Conference on Security and Cryptography

140

ing the data is just checking the inner part of respec-

tive box.

In the Deletion Phase each representation of each

box is changed a number of times (from 0 to 1, from

1 to 0, and so on). Note that the number of changes

cannot depend on the number of changes in previous

phases. That is, the user (in contrast to the adversary)

has no access to overwritten data.

Let us note that such model is simplified and de-

viates from the current methods of data processing.

Namely in the current systems the following holds:

• data buffering – in not a single bits yet a bunches

of bits at once,

• data encoding – in real life systems data are en-

coded before they are written (using for example:

MFM, RLL, PRLM or EPRLM encoding (Gut-

mann Peter, 1996)).

The difference is not negligible, however it is

clear that the model can be realized by changing the

firmware, only. The price the user has to pay is lim-

ited space and slower data processing.

Le us note that this encoding method is based on

idea from (Klonowski et al., 2008), however the al-

gorithms presented below are different. Moreover we

provide here a formal proves for security declared

properties according to a stronger definition.

2.3 Adversarial View

We assume that the adversary has an access to all

“layers” - that is, for each box it can recognize all

bits represented by this box in the past. Due to the

assumed encoding the only knowledge the adversary

has, is the number of changes. Thus the whole disc

can be represented as a vector (y

1

,y

2

,.. .,y

d

) where y

i

is the number of changes in the i-th box.

Clearly the assumption that having good micro-

scope one can retrieve the data in every single place

is not realistic but one can agree that it is an upper

bound for capabilities of any real adversary.

Note that the knowledge of the regular user is sig-

nificantly smaller - that is, she knows some upper

bound for the number of changes she introduced and

can only distinguish between 0-box and 1-box on the

last written layer. This means that she can only rec-

ognize the parity of y

i

. Indeed the regular user cannot

inspect overwritten layers even in the case of signifi-

cant head’s misalignment.

3 DELETION BY OBFUSCATION

- ANALYSIS

In this section we provide analysis of methods of data

obfuscation. As mentioned in previous sections all

methods for deletion in the described model are based

solely on overwriting alternatively 0 and 1 some num-

ber of times. Thus, each box is seen by the adversary

as a stack of 0s and 1s - some added by regular usage

and some other just added to mislead the adversary.

That is - the algorithms are simple, however the prob-

lem is to find how many times one has to change the

state to get the demanded security level, according to

the security definition given below.

Let us introduce some basic notation. For any nat-

ural number K, the set {0,1,... ,K} will be denoted

by [K]. For the set of natural numbers we use symbol

N. Let X = (X

1

,X

2

,.. ., X

d

) be a d-dimensional dis-

crete random variable representing data to be deleted

(more precisely the number of bit-flips performed

during Regular User Phase). Clearly they do not

have to be independent. Let S = (S

1

,S

2

,.. ., S

d

) de-

note the covering, i.e., the d-dimensional finite dis-

crete random variable whose role is to mask data X.

We assume that after the process of covering (during

Deletion Phase) the adversary can read Y = X + S =

(X

1

+S

1

,X

2

+S

2

,.. ., X

d

+S

d

) from the magnetic disk.

Thus the random variable Y

i

= X

i

+ S

i

denotes the

number of changes performed in the i-th box till the

end of Deletion Phase. We assume that X and S are

independent and denote their ranges by X and S , re-

spectively. Additionally, we assume that S

1

,S

2

,.. ., S

d

are independent and identically distributed. The last

assumption is motivated by practical reasons - dele-

tion method has to be simple and does not require any

additional storage.

We would like to construct such a covering S that

the adversary knowing already the concrete realiza-

tion y of the random variable Y gets no (or, in some

sense, very little) information about the underlying

concrete realization x of the random variable X. We

assume that the adversary knows our technique of

covering and the distribution of S we use and may

know the distribution of X. The formal definition of

secure covering is given below.

Definition 1. For ε ≥ 0 and δ ∈ [0,1] we say that S

(ε,δ)-covers data X if

P[(X,S) ∈ A

ε

] ≥ 1 − δ,

where

A

ε

=

n

(x,s) ∈ X × S :

1

1 + ε

≤

P[X = x|Y = x + s]

P[X = x]

≤ 1 + ε

o

,

and Y = X + S.

Universal Encoding for Provably Irreversible Data Erasing

141

The idea behind this definition is as follows - with

probability at least 1 − δ the adversary can observe

a value y that changes a priori distribution of hidden

x by at most multiplicative factor 1 + ε. In the con-

text of our problem there is some unknown value x

of layers in the device. The adversary does not know

it, however he knows that x layers can be there with

some probability p

x

. After some obfuscating process

(adding s layers) the adversary is given y = x + s. Af-

ter gaining some extra knowledge from y the proba-

bility that there were x layers deviates from p

x

by no

more than a multiplicative factor 1+ε. Of course, the

smaller the values of ε and δ, the stronger the covering

S of X is. In our paper we concentrate on algorithms

providing perfect security, i.e., ε = 0.

Note that this definition is an adaptation of

(ε,δ)-differential privacy introduced by Dwork et al.

in (Dwork et al., 2006b). The original definition has

been presented for the problem of preserving privacy

of individuals when some statistical information from

a database is revealed. This definition is de facto a

standard, formal and very natural method of measur-

ing revealing information. It has been used in vari-

ous papers from different fields related to information

protection (see e.g. (Shi et al., 2011; Golebiewski

et al., 2009)). This kind of security definitions is

widely accepted since the security guarantees do not

depend on any additional knowledge and are immune

against so-called linkage attacks in contrast to many

other methods of defining how well information is

hidden (see (Dwork, 2006; Cynthia Dwork and Aaron

Roth, 2013)). Moreover the Dwork et al’s idea seems

to be very intuitive and can be easily formalized. The

fact remains, however, that analyzing it can be diffi-

cult due to complex calculations. Note that differen-

tial privacy notion leads to required properties - i.e.

security is independent of adversary’s computational

power and is immune against any post-processing.

Such properties are inherited by our definition ((Cyn-

thia Dwork and Aaron Roth, 2013)). Finally, let us

remark that the definition used in our paper needs a

little bit subtle treatment since we do not compare a

few “neighbouring” states (i.e., database with or with-

out single individual as in the case of original Dwork

at al’s paper) but all possible states of memory to be

concealed.

We start with formulating theorems for d = 1, i.e.,

for one-dimensional (single bit) data and covering de-

noting them simply by X and S, respectively. We will

generalize theorems to arbitrary d later on.

Theorem 1. If X has a distribution other than con-

centrated in a single point, then S which (0,0)-covers

X does not exist.

Proof. Let us denote the ranges of X and S by X and

S , respectively (X and S are subsets of N, the set

of natural numbers). Assume by contradiction that

S (0, 0)-covers X. Then P[(X ,S) ∈ A

0

] = 1, where

A

0

= {(x,s) ∈ X ×S : P[X = x|Y = x +s] = P[X = x]}

(recall that Y = X + S).

Let x = min{i ∈ X : P[X = i] > 0} and s = min{i ∈

S : P[S = i] > 0}. Note that then P[X = x|Y = x +s] =

1 and P[X = x] < 1 (since X does not have a single-

point distribution). Thus (x, s) /∈ A

0

and, by indepen-

dence of X and S, we get

P[X = x,S = s] = P[X = x]P[S = s] > 0. Therefore

P[(X, S) ∈ A

0

] < 1.

Remark 1. Note that when X has a single-point dis-

tribution, i.e., P[X = x] = 1 for some x, then arbitrary

S (0,0)-covers X, since

P[X = x|Y = x + s]

P[X = x]

=

P[X = x,Y = x + s]

P[Y = x + s] · 1

=

=

P[X = x,S = s]

P[S = s]

=

P[X = x]P[S = s]

P[S = s]

= 1.

(Recall that Y = X + S.) Of course, when X has a

single-point distribution, known by adversary, noth-

ing can be done to hide the fact that x was stored on

the disc.

Theorem 2. Let δ ∈ (0, 1) and let P[X ∈ [K]] ≥ 1 −

δ/2. Let also S be uniformly distributed on [N], where

N ≥

K+2−δ/2

δ/2

. Then S (0, δ)-covers X.

Proof. We need to prove that P[(X,S) ∈ A

0

] ≥ 1 − δ,

where

A

0

= {(x, s) ∈ X ×S : P[X = x|Y = x+s] = P[X = x]}.

Obviously,

P[(X, S) ∈ A

0

] = P[(X,S) ∈ A

0

|X ∈ [K]]P[X ∈ [K]]

+ P[(X,S) ∈ A

0

|X /∈ [K]]P[X /∈ [K]]

≥ P[(X,S) ∈ A

0

|X ∈ [K]](1 − δ/2).

Note that if we prove that P[(X, S) ∈ A

0

|X ∈ [K]] ≥

1 − δ/2 we are done, since (1 − δ/2)

2

≥ 1 − δ. From

now on all the calculations are done by the assump-

tion X ∈ [K].

Note that, by independence of X and S, we get

P[X = x|Y = x + s] =

P[X = x,Y = x + s]

P[Y = x + s]

=

P[X = x,S = s]

P[Y = x + s]

=

P[X = x]P[S = s]

P[Y = x + s]

,

thus the condition P[X = x|Y = x + s] = P[X = x] is

equivalent to P[Y = x + s] = P[S = s]. Since S ∼

U{0, 1,. .. ,N} we get

A

0

=

(x,s) ∈ X × S : P[Y = x + s] =

1

N + 1

.

SECRYPT 2019 - 16th International Conference on Security and Cryptography

142

By total probability law P[Y = x + s] =

∑

N

i=0

P[X =

x +s − i]P[S = i] thus for (x, s) ∈ X × S such that K ≤

x + s ≤ N we have

P[Y = x + s] =

x+s

∑

i=x+s−K

P[X = x + s − i]P[S = i] =

=

1

N + 1

K

∑

i=0

P[X = i] =

1

N + 1

.

For (x,s) ∈ X × S such that x + s < K we have

P[Y = x + s] =

x+s

∑

i=0

P[X = x + s − i]P[S = i] =

=

1

N + 1

x+s

∑

i=0

P[X = i] ≤

1

N + 1

,

and the above inequality is strict if only there exists

j > x + s such that P[X = j] > 0. Analogously, for

(x,s) ∈ X × S such that x + s > N, we have

P[Y = x + s] =

N

∑

i=x+s−K

P[X = x + s − i]P[S = i] =

1

N + 1

K

∑

i=x+s−N

P[X = i] ≤

1

N + 1

,

and again the above inequality is strict if only there

exists j < x+s −N such that P[X = j] > 0. Therefore

{(x,s) ∈ X × S : K ≤ x + s ≤ N} ⊆ A

0

. Thus if we

show that P[K ≤ Y ≤ N] ≥ 1 − δ/2 or, equivalently,

P[Y < K] + P[Y > N] ≤ δ/2,

we are done. Let us calculate P[Y ≤ K]. By equalities

from (1) we obtain

P[Y ≤ K] =

K

∑

y=0

P[Y = y] =

=

K

∑

y=0

1

N +1

y

∑

x=0

P[X = x] =

=

1

N +1

K

∑

x=0

(K + 1 − x)P[X = x]

=

1

N +1

(K + 1)

K

∑

x=0

P[X = x] −

K

∑

x=0

xP[X = x]

!

=

K + 1 − EX

N +1

.

On the other hand, by equalities from (1) we get

P[Y ≥ N] =

N+K

∑

y=N

P[Y = y] =

N+K

∑

y=N

1

N +1

K

∑

x=y−N

P[X = x] =

=

1

N +1

K

∑

x=0

(x + 1)P[X = x] =

=

1

N +1

K

∑

x=0

xP[X = x] +

K

∑

x=0

P[X = x]

!

=

EX +1

N +1

.

Finally, since N ≥

K+2−δ/2

δ/2

, we obtain

P[Y < K] + P[Y > N] ≤ P[Y ≤ K] + P[Y ≥ N] =

=

K + 2

N + 1

≤ δ/2.

(1)

The following theorem generalizes the above one

to arbitrary dimension d with the assumption that the

coordinates X

i

of data X = (X

1

,X

2

,.. ., X

d

) are inde-

pendent.

Theorem 3. Let δ ∈ (0, 1). Let X = (X

1

,X

2

,.. ., X

d

),

where X

i

’s are independent and let P[X

1

,.. ., X

d

∈

[K]] ≥ 1 − δ/2. Let S = (S

1

,S

2

,.. ., S

d

) with S

i

’s

being independent and uniformly distributed on [N],

wherein N ≥

2K+3+(1−δ/2)

1/d

1−(1−δ/2)

1/d

. Assume that X and S

are independent. Then S (0,δ)-covers data X.

Proof. Recall that Y = X + S = (X

1

+ S

1

,.. ., X

d

+

S

d

). We need to prove that P[(X,S) ∈ A

0

] ≥ 1 − δ,

where

A

0

= {(x,s) ∈ X ×S : P[X = x|Y = x+s] = P[X = x]}.

Let C denote the event that X

1

,.. ., X

d

∈ [K]. We have

P[(X,S) ∈ A

0

] ≥

P[(X,S) ∈ A

0

|C]P[C] ≥ P[(X,S) ∈ A

0

|C](1 − δ/2).

Note that if we show that P[(X,S) ∈ A

0

|C] ≥ 1 − δ/2

we are done, since (1 − δ/2)

2

≥ 1 − δ. From now on

all the calculations are done assuming that C holds.

The condition P[X = x|Y = x + s] = P[X = x] is

equivalent to P[Y = x + s] = P[S = s] (compare (1)).

Since S

i

∼ U{0,1, .. .,N}, i = 1, 2,. .. ,d, and S

i

’s are

independent, we get

A

0

=

(x,s) ∈ X × S : P[Y = x + s] =

1

(N + 1)

d

.

Note that by independence of X

i

’s and S

i

’s, Y

i

’s are

also independent. Recall that for x

i

,s

i

such that K ≤

x

i

+ s

i

≤ N we have

P[Y

i

= x

i

+ s

i

] =

1

N + 1

while for x

i

,s

i

such that x

i

+ s

i

< K or x

i

+ s

i

> N we

have

P[Y

i

= x

i

+ s

i

] ≤

1

N + 1

(compare proof of Theorem 2). Therefore P[Y =

x + s] =

1

(N+1)

d

if K ≤ x

i

+ s

i

≤ N for all i = 1,. .. ,d.

Note that what we actually need is that S

i

(0,1 − (1 −

δ/2)

1/d

)-covers X

i

for each i, since then, by indepen-

dence of Y

i

’s

P[(X,S) ∈ A

0

|C] ≥ (1−(1 − (1 − δ/2)

1/d

))

d

= 1−δ/2.

Universal Encoding for Provably Irreversible Data Erasing

143

By Theorem 2 we get that S

i

(0,1 − (1 − δ/2)

1/d

)-

covers X

i

for N ≥

2K+3+(1−δ/2)

1/d

1−(1−δ/2)

1/d

.

Subsequently, we will formulate analogous the-

orem, this time without the assumption on indepen-

dence of X

i

’s. Let us start with proving one lemma

that will be helpful later on.

Lemma 1. Let S = (S

1

,S

2

), where S

1

and S

2

are inde-

pendent random variables, uniformly distributed on

[N]. Let X = (X

1

,X

2

), where X

1

and X

2

are not neces-

sarily independent random variables with range [K],

K < N. Assume that X and S are independent. Let

Y = (Y

1

,Y

2

) = (X

1

+ S

1

,X

2

+ S

2

). Then

P[Y

1

≤ K,Y

2

≤ K]+ P[Y

1

≤ K,Y

2

≥ N]+

+ P[Y

1

≥ N,Y

2

≤ K]+ P[Y

1

≥ N,Y

2

≥ N] =

K + 2

N +1

2

.

Proof.

P[Y

1

≤ K,Y

2

≤ K] =

K

∑

y

1

=0

K

∑

y

2

=0

P[Y

1

= y

1

,Y

2

= y

2

]

=

K

∑

y

1

=0

K

∑

y

2

=0

y

1

∑

x

1

=0

y

2

∑

x

2

=0

P[X = (x

1

,x

2

),S = (y

1

− x

1

,y

2

− x

2

)]

=

1

(N +1)

2

K

∑

y

1

=0

K

∑

y

2

=0

y

1

∑

x

1

=0

y

2

∑

x

2

=0

P[X

1

= x

1

,X

2

= x

2

]

=

1

(N +1)

2

K

∑

y

1

=0

y

1

∑

x

1

=0

K

∑

x

2

=0

(K + 1 − x

2

)P[X

1

= x

1

,X

2

= x

2

]

=

1

(N +1)

2

K

∑

y

1

=0

y

1

∑

x

1

=0

P[X

1

= x

1

](K + 1 − E[X

2

|X

1

= x

1

])

=

1

(N +1)

2

×

×

K

∑

x

1

=0

(K + 1 − x

1

)P[X

1

= x

1

](K + 1 − E[X

2

|X

1

= x

1

]) =

=

1

(N +1)

2

K

∑

x

1

=0

(K + 1)P[X

1

= x

1

](K + 1 − E[X

2

|X

1

= x

1

])

−

1

(N +1)

2

K

∑

x

1

=0

x

1

P[X

1

= x

1

](K + 1 − E[X

2

|X

1

= x

1

])

=

(K + 1)

2

− (K + 1)(EX

1

+ EX

2

) + E[X

1

X

2

]

(N +1)

2

.

(2)

By analogous calculations we obtain

P[Y

1

≥ N,Y

2

≥ N] =

E[X

1

X

2

] + EX

1

+ EX

2

+ 1

(N +1)

2

, (3)

P[Y

1

≥ N,Y

2

≤ K] =

(K + 1)EX

1

− EX

2

− E[X

1

X

2

] + K + 1

(N +1)

2

,

(4)

and

P[Y

1

≤ K,Y

2

≥ N] =

(K + 1)EX

2

− EX

1

− E[X

1

X

2

] + K + 1

(N +1)

2

.

(5)

Summing up (2), (3), (4), and (5) concludes the proof.

The formulation of the next theorem is analogous

to Theorem 3, however this time we do not assume

that the coordinates X

i

’s of data X = (X

1

,X

2

,.. ., X

d

)

are independent.

Theorem 4. Let δ ∈ (0, 1). Let X = (X

1

,X

2

,.. ., X

d

)

and let P[X

1

,.. ., X

d

∈ [K]] ≥ 1 − δ/2. Let S =

(S

1

,S

2

,.. ., S

d

) with S

i

’s being independent and uni-

formly distributed on [N], wherein N ≥

d(K+2)−δ/2

δ/2

.

Assume that X and S are independent. Then S (0,δ)-

covers data X.

Remark 2. The proof contains many references to

calculations from the proof of Theorem 2.

Proof. Recall that Y = X + S = (X

1

+ S

1

,X

2

+

S

2

,.. ., X

d

+ S

d

) and X and S denote the ranges of X

and S, respectively. We need to prove that P[(X,S) ∈

A

0

] ≥ 1 − δ, where

A

0

= {(x,s) ∈ X ×S : P[X = x|Y = x+s] = P[X = x]}.

The condition P[X = x|Y = x + s] = P[X = x] is

equivalent to P[Y = x + s] = P[S = s] =

1

(N+1)

d

(com-

pare (1)).

Let B = {(x,s) ∈ X × S : K ≤ x

i

+ s

i

≤ N,i =

1,.. ., d}. For (x,s) ∈ B we have (compare 1)

P[Y = x + s] =

=

x

1

+s

1

∑

i

1

=x

1

+s

1

−K

...

x

d

+s

d

∑

i

d

=x

d

+s

d

−K

P[X = (x

1

+ s

1

− i

1

,...,x

d

+ s

d

− i

d

)]P[S = (i

1

,...,i

d

)]

=

1

(N +1)

d

K

∑

x

1

=0

...

K

∑

x

d

=0

P[X = (x

1

,...,x

d

)] =

1

(N +1)

d

.

Note that for (x,s) /∈ B we always obtain

P[Y = x + s] ≤

1

(N + 1)

d

(compare the calculations for d = 1 from the proof of

Theorem 3). Thus B ⊆ A

0

and it is enough to show

that P[(X,S) ∈ B] ≥ 1 − δ. Let C denote the event

that X

1

,.. ., X

d

∈ [K]. We have

P[(X,S) ∈ B ] ≥ P[(X,S) ∈ B |C]P[C] ≥

≥ P[(X,S) ∈ B |C](1 − δ/2).

Note that if we show that P[(X,S) ∈ B|C] ≥ 1 − δ/2,

or, equivalently, P[(X,S) /∈ B |C] ≤ δ/2, we are done,

since (1 − δ/2)

2

≥ 1 − δ. From now on all the calcu-

lations are done assuming that C holds.

SECRYPT 2019 - 16th International Conference on Security and Cryptography

144

We have

P[(X,S) /∈ B|C] =

= P[Y

1

< K ∪Y

1

> N ∪ ... ∪Y

d

< K ∪Y

d

> N]

≤ P[Y

1

≤ K ∪Y

1

≥ N ∪ ... ∪Y

d

≤ K ∪Y

d

≥ N]

=

d

∑

i=1

(P[Y

i

≤ K] + P[Y

i

≥ N])

−

∑

1≤i< j≤d

(P[Y

i

≤ K,Y

j

≤ K] + P[Y

i

≤ K,Y

j

≥ N])

−

∑

1≤i< j≤d

(P[Y

i

≥ N,Y

j

≤ K] + P[Y

i

≥ N,Y

j

≥ N])

+ . . .+

(−1)

d+1

(P[Y

1

≤ K,...,Y

d

≤ K] + P[Y

1

≥ N, ...,Y

d

≥ N]).

(6)

Let

U = P[Y

1

< K ∪Y

1

> N ∪ . .. ∪Y

d

< K ∪Y

d

> N]

and

L =

∑

1≤i< j≤d

(P[Y

i

≤ K,Y

j

≤ K] + P[Y

i

≤ K,Y

j

≥ N]+

+ P[Y

i

≥ N,Y

j

≤ K] + P[Y

i

≥ N,Y

j

≥ N]).

Note that (6) is just a special case of inclusion-

exclusion principle, thus

U −L ≤ P[(X, S) /∈ B |C] ≤ U.

By the equality in 1 we have

U = d(P[Y

1

≤ K] + P[Y

1

≥ N]) =

d(K + 2)

N + 1

and by Lemma 1

L =

d

2

(P[Y

1

≤ K,Y

2

≤ K] + P[Y

1

≤ K,Y

2

≥ N]

+ P[Y

1

≥ N,Y

2

≤ K] + P[Y

1

≥ N,Y

2

≥ N])

=

d

2

K + 2

N + 1

2

.

Therefore

d(K + 2)

N + 1

−

d

2

K + 2

N + 1

2

≤ P[(X,S) /∈ B|C]

≤

d(K + 2)

N + 1

.

Since N ≥

d(K+2)−δ/2

δ/2

, the conclusion follows from

the righthandside of the above inequality.

Note that if only dK N, the above estimation is

tight up to the order of Θ(dK/N).

Remark 3. Of course, the lower bound on N guaran-

teeing that S (0,δ)-covers X is bigger when we deal

with general data X = (X

1

,X

2

,.. ., X

d

), i.e., when X

i

’s

may be dependent. In Theorem 3 we need

N ≥

2K + 3 + (1 − δ/2)

1/d

1 − (1 − δ/2)

1/d

∼

2K

1 − (1 − δ/2)

1/d

when X

i

’s are independent, while in Theorem 4

N ≥

d(K + 2) − δ/2

δ/2

∼

2dK

δ

when X

i

’s may be dependent. Note, however, that the

price we pay in general case (Theorem 4) is not very

significant when δ is small. For small δ we may actu-

ally estimate

1

1−(1−δ/2)

1/d

∼

1

1−e

−δ/2d

∼

2d

δ

.

3.1 Universal Random Variables and

Uniform Obfuscation

In this paper we discussed perfect obfuscation, i.e.,

such that with probability at least 1 − δ the observer

having access to observable Y, learns no new infor-

mation about the real data X. The solutions we pro-

posed are universal with respect to K, i.e., they guar-

antee (with some strictly controlled probability) ob-

fuscation of any X concentrated on [K] (except cases

of total probability at most δ). Note that all our meth-

ods use for obfuscation a random variable with uni-

form distribution on a fixed set.

One may ask two questions. First, do we really

need a universal obfuscation? Clearly, one can con-

struct more efficient methods of obfuscation tailored

for a given X. We claim that demanding universal

method is fully justified by practical needs. Regular

users of data storage devices do not know the distribu-

tion of their stored data (possibly except a case, while

the disc is used for storing cryptographic materials as

in (Klonowski et al., 2008)). Moreover, the adversary

having access to the big volume of statistical data can

have some knowledge of the distribution to be obfus-

cated and have another advantage over a regular user.

Second, even if we accept that the universal obfus-

cation is really needed, one may be tempted to con-

struct substantially different (and possibly more effi-

cient) methods that deviate from using uniform dis-

tribution. Below we show that any universal method

offering perfect security (i.e., ε = 0) has to use for

obfuscating a distribution “close” to uniform. More

precisely, if we demand that S (0, δ)-covers any X,

then the set of values of S having the same probabil-

ity needs to be of measure at least 1 − (δ/(1 − γ)) for

arbitrarily small γ ∈ (0,1).

In this section, let p

s

denote P[S = s] for s ∈ N

and 0 otherwise. In the theorem below we consider

one-dimensional X and S.

Theorem 5. Let S be a random variable distributed

on N. If there exists S

∗

⊂ N such that P[S ∈ S

∗

] >

δ/(1 − γ) for arbitrarily small γ ∈ (0,1) and for every

Universal Encoding for Provably Irreversible Data Erasing

145

different s,s

0

∈ S

∗

holds p

s

6= p

s

0

, then S cannot (0,δ)-

cover arbitrarily distributed data X .

Proof. Aiming for a contradiction let us assume that S

(0,δ)-covers any data X and that there exists S

∗

⊆ N

such that p

s

6= p

s

0

for any different s,s

0

from S

∗

and

P[S ∈ S

∗

] > δ/(1 − γ) for arbitrarily small γ ∈ (0, 1).

To every s ∈ S

∗

let us assign s

∗∗

= min{i ∈ N|p

i

=

p

s

}. Let S

∗∗

= {s

∗∗

|s ∈ S

∗

∧ p

s

> 0}. That is, S

∗∗

is

the set of elements that appear with a given, positive,

probability for the first time in the sequence of natural

numbers. Clearly, P[S ∈ S

∗∗

] = P[S ∈ S

∗

] > δ/(1 − γ)

and for any different s, s

0

from S

∗∗

p

s

6= p

s

0

holds.

Now let us look at data X such that P[X = 0] =

1 − γ and P[X = 1] = γ. Consider the set of pairs

(0,s) ∈ {0, 1} × S

∗∗

. For those pairs, by the construc-

tion of S

∗∗

, we obtain

P[Y = x + s] = P[Y = s] = P[X = 0] · p

s

+ P[X = 1] · p

s−1

= (1 − γ) · p

s

+ γ · p

s−1

6= p

s

= P[S = s].

(7)

Recall that (x, s) ∈ A

0

if and only if P[Y = x + s] =

P[S = s]. Definitely, by Equation 7, if s ∈ S

∗∗

then

(0,s) /∈ A

0

. By independence of X and S we get

P[(X,S) ∈ {0} × S

∗∗

] = P[X = 0] · P[S ∈ S

∗∗

]

>

(1 − γ)δ

(1 − γ)

= δ.

Thus P[(X, S) ∈ A

0

] < 1 − δ, which contradicts the

statement that S (0,δ)-covers any data X.

Note that the construction from the proof uses X con-

centrated on the set {0,1}. Thus the proof is valid for

any K ≥ 2.

4 FAST METHOD FOR DATA

OBFUSCATION

The methods presented in previous part of the paper

offer a perfect security with fully controllable proba-

bility. However, they all have a drawback that pre-

vents them from using in some real-life scenarios.

Namely, note that in Deletion Phase the user has to

perform a large number of writing operations. Trans-

lating it into real-life terms the whole disc has to

be overwritten during Deletion Phase the number of

times that is greater than the number of times the disc

can be used in the Regular User Phase. Even using

ultra fast devices the time one needs for all the op-

erations can be prohibitively large in natural applica-

tion wherein a user has to remove the content possibly

quickly.

In this section we present a method that drasti-

cally reduces the number of operations during Dele-

tion Phase for the price of a very long Preliminary

Phase, while the perfect security property of deletion

is still preserved. This means, however, that a storage

device has to be prepared for a long time before it is

used for storing some data. The Deletion Phase needs

only linear (with respect to the size of the data to be

deleted) number of operations. Note that this number

of operations is asymptotically optimal - indeed, one

needs Ω(d) operations to somehow delete data writ-

ten on the last layer.

4.1 Algorithm Description

The protocol uses one parameter - K - the upper bound

on the number of times each bit can be changed during

Regular User Phase.

Preliminary Phase - i-th box is flipped

ˆ

S

i

times,

where

ˆ

S

i

’s are independent random variables uni-

formly distributed on {0, 2,.. ., N −3,N −1} (N is

an odd number depending on K, setting its value

will be discussed in the next section).

Regular Usage Phase - the user can flip each bit up

to K times, we denote those changes by random

variable X = (X

1

,X

2

,.. ., X

d

) (we mean that i-th

box was flipped X

i

times).

Deletion Phase - the state of each box is changed in-

dependently with probability 1/2, which will be

denoted by C = (C

1

,C

2

,.. .,C

d

), where C

i

’s are

independent random variables such that P[C

i

=

0] = P[C

i

= 1] = 1/2 (of course, C

i

refers to pos-

sible single change made in the i-th box).

4.2 Analysis

First let us note that the Deletion Phase is optimal - it

makes only O(d) changes (since each box is changed

at most once). Moreover, we believe that the dele-

tion operation can be performed quickly in real-life

settings.

Now we discuss security of this approach. Let

us analyze the i-th box. The adversary knows our

technique of obfuscation and is given the state of the

disc after the Deletion Phase. Thus what she sees

is

ˆ

S

i

+ X

i

+ C

i

, where

ˆ

S

i

, X

i

, and C

i

are indepen-

dent. Note that since

ˆ

S

i

is uniformly distributed on

{0,2,. .. ,N − 3,N − 1} (the set of even numbers not

greater than N − 1) and C

i

is a Bernoulli trial with

probability 1/2, we get that

ˆ

S

i

+C

i

(let us denote this

sum by S

i

) is uniformly distributed on [N]. Thus what

adversary knows is that the number of layers she sees

is the sum of independent random variables: S

i

(which

SECRYPT 2019 - 16th International Conference on Security and Cryptography

146

is uniformly distributed on [N]) and X

i

(data with dis-

tribution that may be known to the adversary). Thus

what we get is the situation exactly analogous to the

one from the previous section. Therefore, in order to

choose the value of N guaranteeing the desired level

of security, one may apply Theorem 4.

Finally let us justify some details of the presented

construction that seems to be artificial at first glance.

Let us note that the number of flips in the Preliminary

Phase,

ˆ

S

i

, is chosen from even numbers to have al-

ways 0-box on the last layer after Preliminary Phase.

Thanks to this trick X

i

is independent of

ˆ

S

i

. However

ˆ

S

i

+ X

i

still reveals the parity of X

i

. In particular the

adversary can inspect the last layer by just checking

the state of the boxes. For that reason we need to add

C

i

in Deletion Phase to complete obfuscation of X

i

.

5 CONCLUSIONS AND FUTURE

WORK

In this paper we have presented and analyzed meth-

ods for provable deletion of stored data. We believe

that this is a good starting point to broader analysis

of provably secure deletion problems. All presented

methods offer perfect security (i.e., we set ε = 0).

Note that this is a very strong requirement. One

can expect that relaxing this assumption will lead to

obtaining more efficient algorithms. Note also that

throughout the whole paper we were considering con-

cealing data from arbitrary distribution, whereas we

hope to construct more practical solutions for special

types of data. This issue, as well as the case when the

security parameter ε is greater than 0, are left for a

future work.

ACKNOWLEDGEMENTS

This paper is supported by Polish National Science

Center. Preliminary ideas has been supported by

the grant UMO-2013/09/B/ST6/02251. Full version

with the formal analysis was prepared thanks to grant

UMO-2018/29/B/ST6/02969 .

REFERENCES

Gutmann P. (1996). Epilogue to: Secure deletion of data

from magnetic and solid-state.

Ali M., Dhamotharan R., Khan E., Khan S.U., Vasilakos

A.V., Li K., and Zomaya A.Y. (2017). Sedasc: Se-

cure data sharing in clouds. IEEE Systems Journal,

11(2):395–404.

Bacis E., De Capitani di Vimercati S., Foresti S., Paraboschi

S., Rosa M., and Samarati P. (2016). Mix & slice:

Efficient access revocation in the cloud. ACM Con-

ference on Computer and Communications Security,

pages 217–228.

Cynthia Dwork and Aaron Roth (2013). The algorithmic

foundations of differential privacy. Foundations and

Trends in Theoretical Computer Science, 9(3-4):211–

407.

Dwork, C. (2006). Differential privacy. ICALP.

Dwork, C., McSherry, F., Nissim, K., and Smith, A.

(2006a). Calibrating noise to sensitivity in private data

analysis. In (Halevi and Rabin, 2006), pages 265–284.

Dwork, C., McSherry, F., Nissim, K., and Smith, A.

(2006b). Calibrating noise to sensitivity in private data

analysis. In (Halevi and Rabin, 2006), pages 265–284.

Goldreich, O. and Ostrovsky, R. (1996). Software pro-

tection and simulation on oblivious rams. J. ACM,

43(3):431–473.

Golebiewski, Z., Klonowski, M., Koza, M., and Kuty-

lowski, M. (2009). Towards fair leader election in

wireless networks. In Ruiz, P. M. and Garcia-Luna-

Aceves, J. J., editors, Ad-Hoc, Mobile and Wireless

Networks, 8th International Conference, ADHOC-

NOW 2009, Murcia, Spain, September 22-25, 2009,

Proceedings, volume 5793 of Lecture Notes in Com-

puter Science, pages 166–179. Springer.

Gomez R. D., Burke E. R., Adly A. A., Mayergoyz I. D.,

Gorczyca J. A., and Kryder M. H. (1993). Micro-

scopic investigations of overwritten data. Journal of

Applied Physics, 73:6001–6003.

Gutmann Peter (1996). Secure deletion of data from mag-

netic and solid-state memory. In In Proceedings of the

6th USENIX Security Symposium, pages 77–89.

Halevi, S. and Rabin, T., editors (2006). Theory of Cryp-

tography, Third Theory of Cryptography Conference,

TCC 2006, New York, NY, USA, March 4-7, 2006, Pro-

ceedings, volume 3876 of Lecture Notes in Computer

Science. Springer.

Hao F., Clarke D., and Zorzo A.F. (2016). Deleting secret

data with public verifiability. IEEE Trans. Dependable

Sec. Comput., 13(6):617–629.

Hughes G., Coughlin T., and Commins D. (2009). Disposal

of disk and tape data by secure sanitization.

Hur J., Koo D., Shin Y., and Kang K. (2017). Secure data

deduplication with dynamic ownership management

in cloud storage. IEEE International Conference on

Data Engineering, pages 69–70.

Jia, S., Xia, L., Chen, B., and Liu, P. (2016). NFPS: adding

undetectable secure deletion to flash translation layer.

In Proceedings of the 11th ACM on Asia Conference

on Computer and Communications Security, AsiaCCS

2016, Xi’an, China, May 30 - June 3, 2016, pages

305–315.

Klonowski, M., Przykucki, M., and Struminski, T. (2008).

Data deletion with provable security. In Interna-

tional Workshop on Information Security Applica-

tions, WISA ’08, pages 240–255.

Klonowski, M., Przykucki, M., and Struminski, T. (2009).

Data deletion with time-aware adversary model. In

Universal Encoding for Provably Irreversible Data Erasing

147

Proceedings IEEE CSE’09, 12th IEEE International

Conference on Computational Science and Engineer-

ing, August 29-31, 2009, Vancouver, BC, Canada,

pages 659–664. IEEE Computer Society.

Mayergoyz I. D., Tse C., Krafft C., and Gomez R. D.

(2001). Spin-stand imaging of overwritten data and

its comparison with magnetic force microscopy. Jour-

nal of Applied Physics, 89:6772–6774.

Moran T., Naor M., and Segev G. (2009). Deterministic

history-independent strategies for storing information

on write-once memories. Theory of Computing, 5:43–

67.

Moritz C.A., Chheda S., and Carver K. (2015). Secur-

ing microprocessors against information leakage and

physical tampering. Patent US 9940445 B2.

Rivest, R. L. and Shamir, A. (1982). How to reuse a write–

once memory. In Proceedings of the fourteenth annual

ACM symposium on Theory of computing, STOC ’82,

pages 105–113.

Roche, D. S., Aviv, A. J., and Choi, S. G. (2016). A prac-

tical oblivious map data structure with secure deletion

and history independence. In IEEE Symposium on Se-

curity and Privacy, SP 2016, San Jose, CA, USA, May

22-26, 2016, pages 178–197.

Rugar D., Mamin H. J., Guethner P., Lambert S. E., Stern

J. E., McFadyen I., and Yogi T. (1990). Magnetic

force microscopy: General principles and application

to longitudinal recording media. Journal of Applied

Physics, 68(3):1169–1183.

Shi, E., Chan, T.-H. H., Rieffel, E., Chow, R., and Song, D.

(2011). Privacy-preserving aggregation of time-series

data. NDSS.

US Department of Defense (1997). National industrial se-

curity program operating manual NISPOM January

1995. Technical Report DoD 5220.22-M.

U.S. National Institute of Standards and Technology (2006).

Nist special publication 800-88: Guidelines for media

sanitization.

Wegberg G., Ritzdorf H., and

ˇ

Capkun S. (2017). Multi-user

secure deletion on agnostic cloud storage.

Yang C., Chen X., and Xiang Y. (2018). Blockchain-based

publicly verifiable data deletion scheme for cloud stor-

age. Journal of Network and Computer Applications,

103:185–193.

SECRYPT 2019 - 16th International Conference on Security and Cryptography

148