A Wearable Real-time Human Activity Recognition System using Biosensors Integrated into a Knee Bandage

Hui Liu and Tanja Schultz

Cognitive Systems Lab, University of Bremen, Germany

Keywords:

Biosensors, Biodevices, Human Activity Recognition, Rehabilitation Technology, Wearable Devices.

Abstract:

This work introduces an innovative wearable real-time Human Activity Recognition (HAR) system. The system processes and decodes various biosignals that are captured by biosensors integrated into a knee bandage. The presented work includes (1) the selection of appropriate equipment in terms of devices and sensors to capture human activity-related biosignals in real time, (2) the experimental tuning of system parameters to balance recognition accuracy against real-time performance, (3) the intuitive visualization of biosignals as well as n-best recognition results in the graphical user interface, and (4) on-the-fly extensions for rapid prototyping of applications. The presented system recognizes seven daily activities: sit, stand, stand up, sit down, walk, turn left and turn right. The number of activity classes to be recognized can easily be extended by the “plug-and-play” function. To the best of our knowledge, this is the first work which demonstrates a real-time HAR system using biosensors integrated into a knee bandage.

1 INTRODUCTION

Arthrosis is the most common joint disease and results in a perceptible reduction of life quality. Among all forms of arthrosis, gonarthrosis accounts for the largest proportion. Apart from the negative impact

on the quality of life for many individuals worldwide,

gonarthrosis leads to significant economic loss due to

surgeries, invalidity, sick leave and early retirement.

Recent studies demonstrate and evaluate the usage of

sensors and technical systems for the purpose of knee

rehabilitation, as for example after ligament injuries

(Yepes et al., 2017) and surgery (Naeemabadi et al.,

2018), to name a few. In an aging society, preven-

tion and early treatment become an increasingly im-

portant part, since joint replacement surgeries carry

secondary risks.

The mainstay of early treatment is an adequate

amount of proper movement. It results in muscular

stabilization and fosters functional maintenance of the

joints. Moreover, movement is fundamental for the

nutrition of both healthy and diseased cartilage. Nevertheless, a knee joint with lesions should not be overloaded by these movements, so as not to re-activate or further exacerbate gonarthrosis through an inflammation of the joint. This would lead to even more pain for the patient and worsen the overall condition.

The overall goal of our work is to technically as-

sist the early treatment of gonarthrosis by discover-

ing the right dose of daily movement, which affects

the functionality of the joint positively while pre-

venting movement-caused overload of the diseased

joint. First steps towards this goal were carried out

by developing a technical system which continuously

tracks the dose of everyday activity movements us-

ing biosensors integrated into a knee bandage. The

results were documented in (Liu and Schultz, 2018),

in which we proposed the framework ASK (Activity

Signals Kit) for biosignal data acquisition, processing

and human activity recognition. The different features

of this framework were introduced in a pilot study

of person-dependent activity recognition based on a

small dataset of human everyday activities.

1.1 Human Activity Recognition

Human activity recognition (HAR) is intensively

studied and a large body of research shows results

of recognizing all kinds of human daily activities, in-

cluding running, sleeping or performing gestures.

For this purpose a large variety of biosignals are

captured by various sensors, e.g. (Mathie et al., 2003)

applied wearable triaxial accelerometers attached to

the waist to distinguish between rest (sit) and active

states (sit-to-stand; stand-to-sit and walk). Five bi-

axial accelerometers were used in (Bao and Intille,

Liu, H. and Schultz, T.

A Wearable Real-time Human Activity Recognition System using Biosensors Integrated into a Knee Bandage.

DOI: 10.5220/0007398800470055

In Proceedings of the 12th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2019), pages 47-55

ISBN: 978-989-758-353-7

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2004) to recognize daily activities such as walking,

riding escalator and folding laundry. In (Kwapisz

et al., 2010) the authors placed an Android cell phone

with a simple accelerometer into the subjects’ pocket

and discriminated activities like walking, climbing,

sitting, standing and jogging. Furthermore, (Lukow-

icz et al., 2004) combined accelerometers with mi-

crophones to include a simple auditory scene analy-

sis. (De Leonardis et al., 2018) compared the recog-

nition performance of 5 classifiers based on machine

learning (K-Nearest Neighbor, Feedforward Neural

Network, Support Vector Machines, Naïve Bayes and

Decision Tree) and analyzed advantages and disad-

vantages of their implementation onto a wearable and

real-time HAR system.

Muscle activity captured by ElectroMyoGraphy (EMG) is another useful biosignal. It even provides the option to predict a person’s motion intention prior to actually moving a joint, as investigated in (Fleischer and Reinicke, 2005) for the purpose of an actuated orthosis. Moreover, some researchers like (Rowe et al., 2000) and (Sutherland, 2002) applied electrogoniometers to study kinematics.

1.2 Goal of this Study

To achieve our overall goal of technically assisting the early treatment of gonarthrosis using biosensors integrated into a knee bandage, we envision contributions along five research and development paths, i.e. (1) to recognize daily activities based on person-independent models, (2) to increase the number of recognized daily activities, (3) to compare and select biosensors suitable for integration into a knee bandage and for wearable application, (4) to improve the activity recognition accuracy by further optimizing the activity models and system parameters, and (5) to implement a wearable real-time HAR system for field studies. This paper focuses on our efforts toward the last item, i.e. the implementation of a wearable real-time HAR system.

In this study, we used the dataset introduced in

(Liu and Schultz, 2018) as training set. It consists

of biosignals from one male subject captured by two

accelerometers, four EMG sensors and one electro-

goniometer. The data are annotated, time-aligned and

segmented on single-activity level based on a semi-

automatic annotation mechanism using a push-button.

Although this single-subject dataset is rather small and by no means representative, we leverage these data as a pilot dataset to showcase the rapid development of an end-to-end wearable real-time human activity recognition system. To our knowledge, there are no publicly available datasets yet which are suitable for real-time HAR using biosensors integrated into a knee bandage. We are well aware of the limitations of this dataset and are currently in the process of recording a larger dataset covering many activities from several subjects. First results of these data recording efforts are presented in section 3.2.

To model human activities we followed the approach described in (Rebelo et al., 2013) using Hidden Markov Models (HMMs). HMMs are widely applied to a variety of activity recognition tasks, such as in (Lukowicz et al., 2004) and (Amma et al., 2010). The former applies them to an assembly and maintenance task, the latter presents a wearable system that enables 3D handwriting recognition based on HMMs. In

this so-called Airwriting system the users write text

in the air as if they were using an imaginary black-

board, while the handwriting gestures are captured

wirelessly by accelerometers and gyroscopes attached

to the back of the hand.

Based on the training dataset and HMM modeling,

we design and implement a wearable real-time HAR

system using biosensors integrated into a knee ban-

dage, which is connected to an intuitive PC graphical

user interface.

2 EQUIPMENT AND SETUP

2.1 Knee Bandage

We use the Bauerfeind GenuTrain knee bandage¹ as the wearable carrier of the biosensors (see Figure 1).

It consists of an anatomically contoured knit and an

integral, ring-shaped, functional visco-elastic cush-

ion, which offers active support for stabilization and

relief of the knee.

2.2 Devices

We chose the biosignalsplux Research Kits² as recording device. One PLUX hub³ records signals from 8 channels (each up to 16 bits) simultaneously. We used two hubs for recording the data and connected the hubs via a cable which synchronizes signals between the hubs at the beginning of each recording. This procedure ensures the synchronization of sensor data during the entire recording session.

¹ www.bauerfeind.de/en/products/supports-orthoses/knee-hip-thigh/genutrain.html

² biosignalsplux.com/researcher

³ store.plux.info/components/263-8-channel-hub-820201701.html

BIODEVICES 2019 - 12th International Conference on Biomedical Electronics and Devices


Figure 1: Bauerfeind GenuTrain knee bandage.

2.3 Biosensors and Biosignals

Similar to (Mathie et al., 2003) and (Liu and Schultz, 2018), we used two triaxial accelerometers⁴, four bipolar EMG sensors⁵ and both channels of one biaxial electrogoniometer⁶, as they have proven to be effective and efficient. The sensors were placed onto the bandage to capture the muscles and positions most relevant to activity recognition, as summarized in Table 1. We used both channels of the electrogoniometer to measure both the frontal and the sagittal plane, since we intend to recognize rotational movements of the knee joint, for example when walking a curve in the activities “curve-left” and “curve-right”.

Table 1: Sensor placement and captured muscles.

| Sensor | Position / Muscle |
|---|---|
| Accelerometer (upper) | Thigh, proximal ventral |
| Accelerometer (lower) | Shank, distal ventral |
| EMG1 | Musculus vastus medialis |
| EMG2 | Musculus tibialis anterior |
| EMG3 | Musculus biceps femoris |
| EMG4 | Musculus gastrocnemius |
| Electrogoniometer | Knee of the right leg, lateral |

The signals of all biosensors were recorded wirelessly at different sampling rates. Table 2 shows the sampling rate of each sensor type.

Table 2: Sampling rates of biosensors.

| Sensor | Sampling rate |
|---|---|
| Accelerometer | 100Hz |
| Electrogoniometer | 100Hz |
| Electromyography | 1000Hz |

As Table 2 shows, the accelerometer and electrogoniometer signals are both slow signals, while the nature of EMG signals requires a higher sampling rate. The low-sampled channels at 100Hz are upsampled to 1000Hz so that they are synchronized and aligned with the high-sampled channels. Table 3 shows the arrangement of the sensors on Hub1 and Hub2, respectively.

⁴ biosignalsplux.com/acc-accelerometer

⁵ biosignalsplux.com/emg-electromyography

⁶ biosignalsplux.com/ang-goniometer

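Table 3: Channel layout of PLUX Hub1 (fast), Hub2 (slow).

| Hub | Channel | Sensor |
|---|---|---|
| PLUX Hub1 (fast) | 1 | Electromyography EMG 1 |
| PLUX Hub1 (fast) | 2 | Electromyography EMG 2 |
| PLUX Hub1 (fast) | 3 | Electromyography EMG 3 |
| PLUX Hub1 (fast) | 4 | Electromyography EMG 4 |
| PLUX Hub1 (fast) | 5 | - |
| PLUX Hub1 (fast) | 6 | - |
| PLUX Hub1 (fast) | 7 | - |
| PLUX Hub1 (fast) | 8 | - |
| PLUX Hub2 (slow) | 1 | Accelerometer (upper) X-axis |
| PLUX Hub2 (slow) | 2 | Accelerometer (upper) Y-axis |
| PLUX Hub2 (slow) | 3 | Accelerometer (upper) Z-axis |
| PLUX Hub2 (slow) | 4 | Electrogoniometer - sagittal plane |
| PLUX Hub2 (slow) | 5 | Accelerometer (lower) X-axis |
| PLUX Hub2 (slow) | 6 | Accelerometer (lower) Y-axis |
| PLUX Hub2 (slow) | 7 | Accelerometer (lower) Z-axis |
| PLUX Hub2 (slow) | 8 | Electrogoniometer - frontal plane |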
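The upsampling of the 100Hz channels to 1000Hz mentioned above can be illustrated with a short sketch. The text does not state which interpolation is used, so both variants below are assumptions: `upsample_hold` repeats each sample (zero-order hold), and `upsample_linear` interpolates linearly between neighbouring samples. The function names are hypothetical and not part of ASK.

```python
def upsample_hold(samples, factor):
    """Repeat every sample `factor` times (zero-order hold)."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    return [value for value in samples for _ in range(factor)]


def upsample_linear(samples, factor):
    """Insert linearly interpolated values between neighbouring samples."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    if not samples:
        return []
    out = []
    for current, following in zip(samples, samples[1:]):
        for k in range(factor):
            out.append(current + (following - current) * k / factor)
    # The last sample has no successor, so it is simply held.
    out.extend([samples[-1]] * factor)
    return out


# 100Hz -> 1000Hz corresponds to a factor of 10.
FACTOR = 1000 // 100
slow_channel = [0.0, 1.0, 3.0]          # made-up accelerometer values
fast_channel = upsample_hold(slow_channel, FACTOR)
print(len(fast_channel))                # 30 samples, aligned with the EMG rate
```

Either variant yields exactly `factor` output samples per input sample, so the slow channels end up with the same number of samples per second as the EMG channels.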

2.4 ASK Framework

We continued development within the ASK framework introduced in (Liu and Schultz, 2018), which provides a graphical user interface. The ASK PC-software connects and synchronizes several PLUX hubs and subsequently collects data from all hubs simultaneously and continuously. In “Recorder” mode and semi-automatic “Annotator” mode, all recorded data are archived with date and time stamps for further processing. In the real-time “Decoder” mode introduced in section 4, the recorded data are used for recognition in real time.

3 EXPERIMENTAL STUDY

An experimental study was performed to compare two scenarios: the recognition of human activities in an offline scenario, i.e. without real-time limitations, and the adaptation of the recognition system to an online scenario with limited processing time, for which we trade recognition accuracy for decoding speed. For this purpose we use one seven-activities dataset and one eighteen-activities dataset.

3.1 Seven-activities Dataset

We used the seven-activities dataset from (Liu and Schultz, 2018), which contains four recording sessions from one male subject, as the dataset currently deployed online in the real-time HAR system. In these recordings, seven activities are organized in two categories, i.e. “stay-in-place” and “move-around”, which results in two activity lists for the data acquisition prompting protocol as follows:

• Activity List 1 ”Stay-in-place” (40 repetitions):

sit → sit-to-stand → stand → stand-to-sit.

• Activity List 2 ”Move-around” (25 repetitions):

walk → curve-left → walk * (turn around) *

walk → curve-right → walk * (turn around).

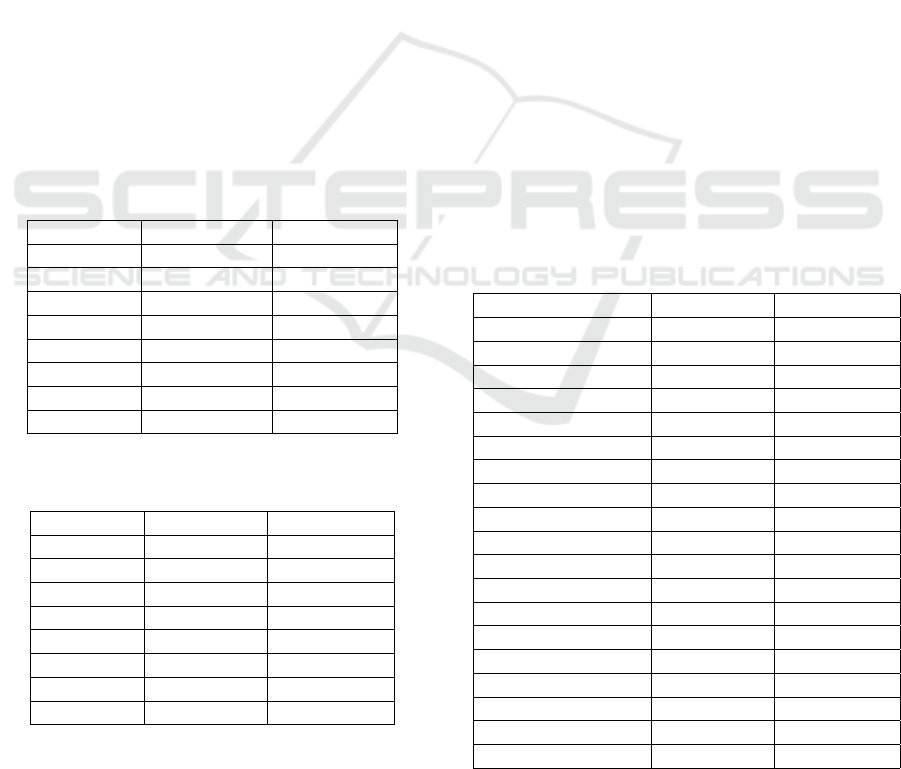

Table 4 and Table 5 give the occurrences, total effective length, minimum length and maximum length of these seven activities.

Table 4: Occurrences and total length of the seven activities in the seven-activities dataset.

| Activity | Occurrences | Total length |
|---|---|---|
| sit | 25 | 79.92s |
| stand | 23 | 81.41s |
| sit-to-stand | 24 | 47.31s |
| stand-to-sit | 23 | 44.11s |
| walk | 67 | 172.93s |
| curve-left | 17 | 61.96s |
| curve-right | 16 | 66.25s |
| Total | 195 | 553.87s |

Table 5: Minimum and maximum length of the seven activities in the seven-activities dataset.

| Activity | Min. length | Max. length |
|---|---|---|
| sit | 1.64s | 7.78s |
| stand | 1.49s | 17.82s |
| sit-to-stand | 1.44s | 2.57s |
| stand-to-sit | 1.19s | 2.84s |
| walk | 1.35s | 4.57s |
| curve-left | 1.81s | 13.00s |
| curve-right | 1.56s | 18.12s |
| Global | 1.19s | 18.12s |

We are aware that this dataset is very small and not large enough to establish reliable recognition accuracies for daily activities. However, the purpose here was to rapidly prototype an end-to-end wearable real-time human activity recognition system. The classification accuracy on this dataset reached 97% (Liu and Schultz, 2018). In Section 4.3 we introduce the “plug-and-play” function, which allows for easy on-the-fly extensions to more activity classes and more training data. We collected a larger dataset of eighteen activities (see below), which will go online for real-time recognition after validation in further work.

3.2 Eighteen-activities Dataset

We continued by recording a new, larger dataset of eighteen activities from seven male subjects under the framework of (Liu and Schultz, 2018). Besides increasing the number of activities, we also extended the types of sensors and biosignals: four types of additional biosensors were included, i.e. one airborne microphone, one piezoelectric microphone, two gyroscopes and one force sensor. The fusion of biosignals allows the study of sensor comparison and selection for the purpose of reliable activity recognition. This eighteen-activities dataset has not yet been used for the real-time recognition system.

Table 6 and Table 7 give the occurrences, total effective length, minimum length and maximum length of these eighteen activities.

Table 6: Occurrences and total length of the eighteen activities in the eighteen-activities dataset.

| Activity | Occurrences | Total length |
|---|---|---|
| sit | 47 | 123.75s |
| stand | 46 | 127.70s |
| sit-to-stand | 45 | 30.94s |
| stand-to-sit | 53 | 72.90s |
| stair-up | 55 | 190.45s |
| stair-down | 57 | 181.96s |
| walk | 220 | 554.07s |
| curve-left-step | 57 | 143.09s |
| curve-left-spin | 46 | 109.15s |
| curve-right-step | 51 | 67.51s |
| curve-right-spin | 48 | 41.50s |
| run | 97 | 151.27s |
| v-cut-left | 53 | 43.76s |
| v-cut-right | 55 | 61.75s |
| lateral-shuffle-left | 53 | 97.54s |
| lateral-shuffle-right | 52 | 90.42s |
| jump-one-leg | 59 | 61.36s |
| jump-two-leg | 63 | 63.40s |
| Total | 1157 | 2212.52s |

Most of the activities in this table are self-explanatory; a few may need some explanation:


Table 7: Minimum and maximum length of the eighteen activities in the eighteen-activities dataset.

| Activity | Min. length | Max. length |
|---|---|---|
| sit | 0.86s | 4.69s |
| stand | 1.36s | 4.73s |
| sit-to-stand | 0.15s | 1.30s |
| stand-to-sit | 0.56s | 3.10s |
| stair-up | 1.59s | 4.93s |
| stair-down | 1.37s | 4.86s |
| walk | 1.18s | 4.78s |
| curve-left-step | 1.10s | 3.87s |
| curve-left-spin | 1.25s | 3.39s |
| curve-right-step | 0.54s | 3.19s |
| curve-right-spin | 0.28s | 1.88s |
| run | 0.64s | 2.74s |
| v-cut-left | 0.29s | 2.08s |
| v-cut-right | 0.35s | 2.37s |
| lateral-shuffle-left | 0.73s | 4.11s |
| lateral-shuffle-right | 0.75s | 3.98s |
| jump-one-leg | 0.33s | 2.85s |
| jump-two-leg | 0.51s | 1.63s |
| Global | 0.15s | 4.93s |

• Curve-left/right-step vs. curve-left/right-spin: “step” means that the subject makes a big 90° turn with several walking steps, while “spin” suggests a fast 90° turning of the body like the parade command “right turn” or “right face”.

• Lateral-shuffle-left/right: these two activities are often used by tennis, soccer and basketball players. The subject starts with the left/right foot moving left/right laterally and the other foot following, and continues shuffling in the same direction for the desired amount of time.

• V-cut-left/right: these two activities mean that the subject changes his/her direction by roughly 90° at jogging speed.

3.3 Feature Extraction and Modeling

For training and decoding, the biosignals captured by the biosensors need to be preprocessed.

First, the biosignals are windowed using a rectangular window function with overlapping windows. Second, a mean normalization is applied to the acceleration and EMG signals to reduce the impact of Earth acceleration and to set the baseline of the EMG signals to zero. Then, the EMG signal is rectified, a widely adopted signal processing method for muscle activities.
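The preprocessing steps can be sketched as follows. This is a minimal illustration rather than the ASK implementation: the function names are hypothetical, and since the text does not say over which span the mean is computed, the sketch assumes the mean of the whole recorded segment is subtracted before windowing.

```python
def remove_mean(signal):
    """Mean normalization: shift the signal so that its average is zero."""
    mean = sum(signal) / len(signal)
    return [x - mean for x in signal]


def rectify(signal):
    """Full-wave rectification: take the absolute value of every sample."""
    return [abs(x) for x in signal]


def sliding_windows(signal, window_len, overlap):
    """Cut `signal` into rectangular windows of `window_len` samples,
    where consecutive windows share `overlap` samples."""
    if window_len <= 0 or not 0 <= overlap < window_len:
        raise ValueError("need window_len > 0 and 0 <= overlap < window_len")
    step = window_len - overlap
    last_start = len(signal) - window_len
    return [signal[start:start + window_len]
            for start in range(0, last_start + 1, step)]


# Made-up EMG samples with a constant offset of 2.0.
emg = [1.0, 3.0, 1.0, 3.0, 1.0, 3.0]
prepared = rectify(remove_mean(emg))            # [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
windows = sliding_windows(prepared, window_len=4, overlap=2)
print(len(windows))                             # 2 windows: samples 0-3 and 2-5
```

Trailing samples that do not fill a complete window are dropped in this sketch; a real-time decoder would instead wait for the next samples to arrive.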

Subsequently, features were extracted for each of the resulting windows. We denote the number of samples per window by $N$ and the samples in the window by $(x_1, \ldots, x_N)$. We adopted the features from (Rebelo et al., 2013), extracting for each window the average for the accelerometer and electrogoniometer signals, defined as:

$$\mathrm{avg} = \frac{1}{N} \sum_{n=1}^{N} x_n \qquad (1)$$

We also extracted the average for the four types of additional signals from the airborne microphone, the piezoelectric microphone, the gyroscopes and the force sensor.

For the EMG signal we extracted for each window the Root Mean Square:

$$\mathrm{RMS} = \sqrt{\frac{1}{N} \sum_{n=1}^{N} x_n^2} \qquad (2)$$
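As a worked illustration of Equations (1) and (2), the two window features can be computed as below. The function names are hypothetical, and the sample values are made up.

```python
import math


def window_average(window):
    """Equation (1): arithmetic mean of the N samples in a window."""
    return sum(window) / len(window)


def window_rms(window):
    """Equation (2): root mean square of the N samples in a window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


print(window_average([1.0, 2.0, 3.0]))   # 2.0
print(window_rms([-2.0, 2.0]))           # 2.0
```

Note that the RMS is unaffected by the sign of the samples, whereas the average of a zero-mean EMG window would be close to zero and therefore carry little information.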

Features of different biosignals can be combined by early or late fusion, i.e. the feature vectors of single biosignal streams are either concatenated to form one multi-biosignal feature vector (early fusion), or recognition is performed on single biosignal feature vectors and the combination is done on decision level (late fusion). Our framework allows for both fusion strategies; in this work we rely on early fusion, which was shown to outperform the late fusion strategy in the context of real-time HAR.
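The difference between the two fusion strategies can be made concrete with a small sketch. The names are hypothetical, and the decision rule used for late fusion (summing per-stream log scores and picking the best activity) is an assumption, since the text does not specify how decisions are combined.

```python
def early_fusion(stream_features):
    """Concatenate the per-stream feature vectors of one window
    into a single multi-biosignal feature vector."""
    fused = []
    for vector in stream_features:
        fused.extend(vector)
    return fused


def late_fusion(stream_scores):
    """Combine per-stream decisions. Each element maps every activity
    to a log score; scores are summed and the best activity is returned.
    Assumes all streams score the same set of activities."""
    combined = {}
    for scores in stream_scores:
        for activity, score in scores.items():
            combined[activity] = combined.get(activity, 0.0) + score
    return max(combined, key=combined.get)


# Early fusion: two accelerometer features and one EMG feature (made up).
print(early_fusion([[0.1, 0.2], [0.9]]))        # [0.1, 0.2, 0.9]

# Late fusion: each stream was decoded separately (made-up log scores).
acc_scores = {"sit": -1.0, "walk": -3.0}
emg_scores = {"sit": -2.5, "walk": -0.2}
print(late_fusion([acc_scores, emg_scores]))    # walk
```

With early fusion a single model sees all channels at once, while late fusion requires one model per biosignal stream plus a combination rule.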

3.4 Parameter Tuning and Decoding

Similar to (Liu and Schultz, 2018) we applied our HMM-based in-house decoder BioKIT to model and recognize the described activities. Among other things, BioKIT supports the training of Gaussian Mixture Models (GMMs) to model the HMM emission probabilities. Each activity is modeled by a fixed number of HMM states, where each state is modeled by a mixture of Gaussians.
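To illustrate what a GMM emission probability means for a single HMM state, the sketch below evaluates the log density of one feature vector under a mixture of Gaussians. It is not the BioKIT API, whose interface is not described here; the function names are hypothetical, and diagonal covariance matrices are assumed for simplicity.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance at point x."""
    return -0.5 * sum(
        math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v
        for xi, m, v in zip(x, mean, var)
    )


def log_gmm(x, weights, means, variances):
    """Log emission probability of x under a Gaussian mixture.
    Uses the log-sum-exp trick to stay numerically stable."""
    terms = [
        math.log(w) + log_gaussian_diag(x, m, v)
        for w, m, v in zip(weights, means, variances)
    ]
    peak = max(terms)
    return peak + math.log(sum(math.exp(t - peak) for t in terms))


# One state with two mixture components over a 2-dimensional feature vector
# (all numbers are made up for illustration).
weights = [0.7, 0.3]
means = [[0.0, 0.0], [1.0, 1.0]]
variances = [[1.0, 1.0], [0.5, 0.5]]
print(log_gmm([0.2, -0.1], weights, means, variances))
```

During decoding, such a value would be computed for every state and every incoming feature vector, which is one reason why the number of states and Gaussians affects decoding speed.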

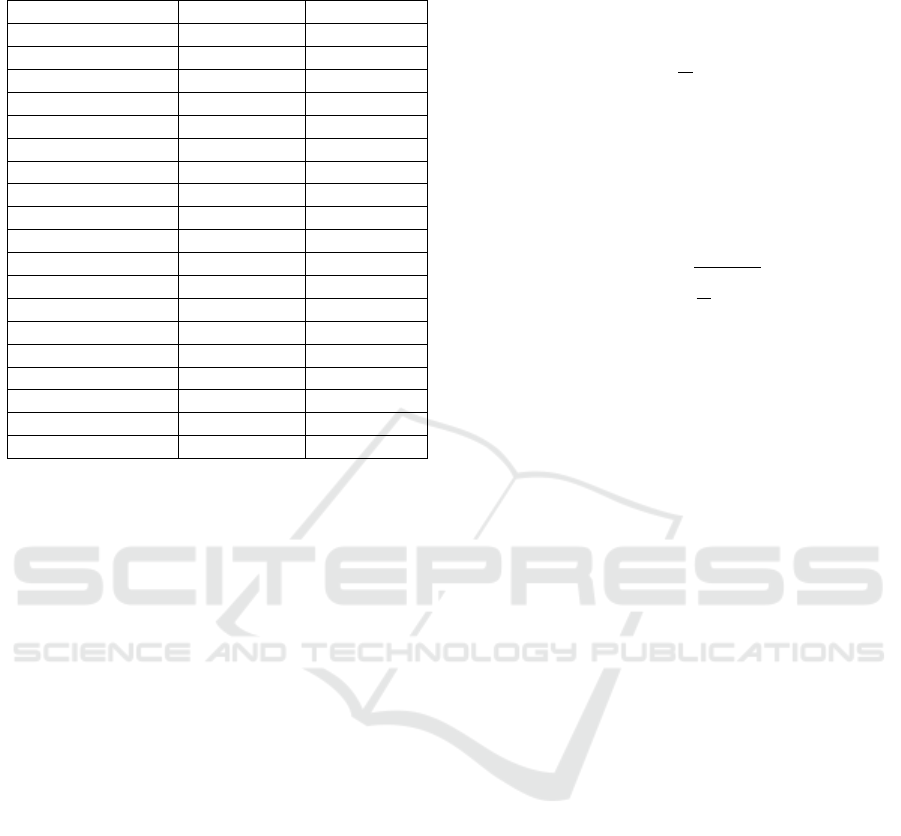

Based on the eighteen-activities dataset and the

automatically generated reference labels for each seg-

ment using the semi-automatic annotation function,

we iteratively optimized the number of HMM states

and Gaussian mixtures per each HMM state.

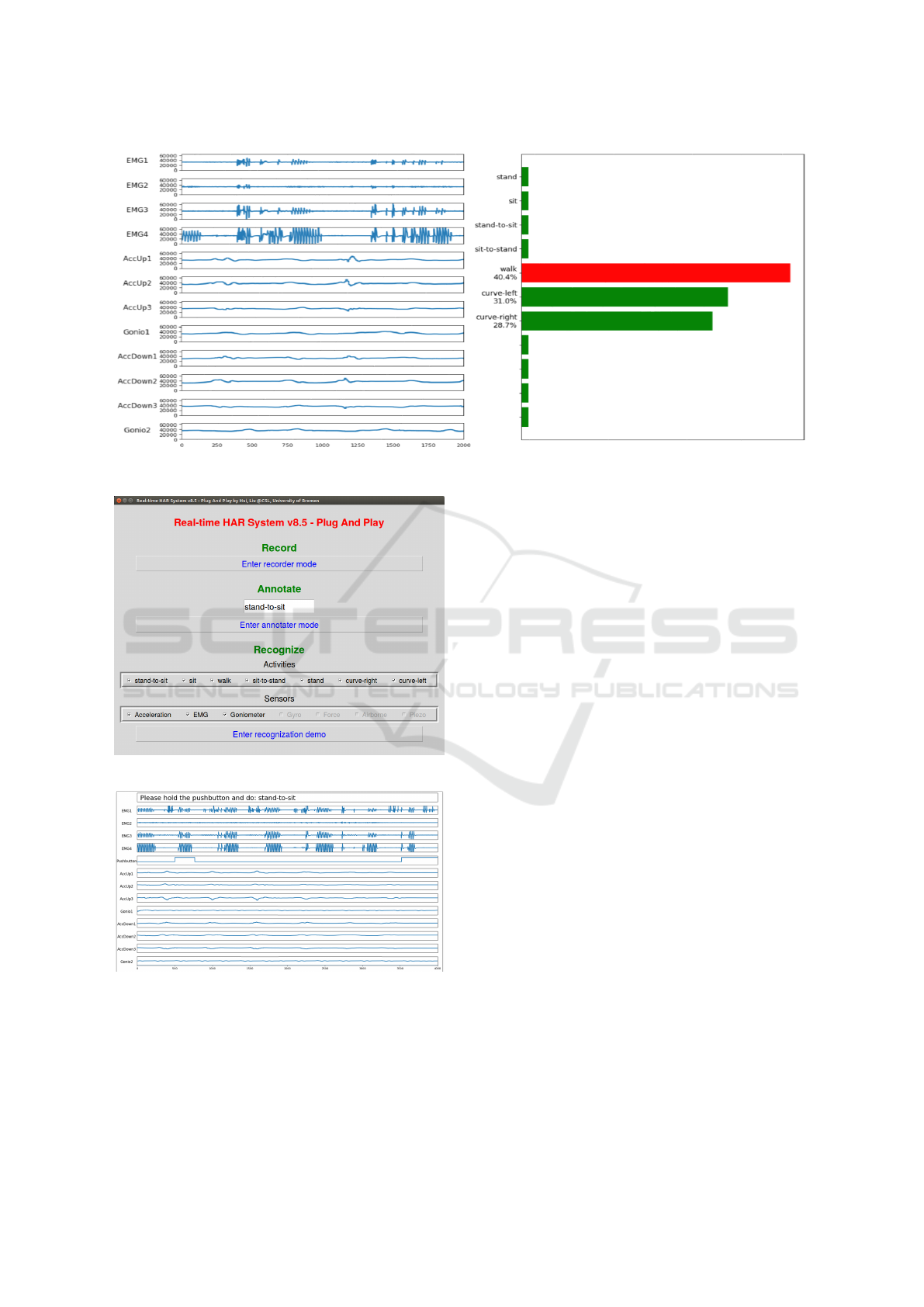

Figure 2 and Figure 3 demonstrate examples of

tuning different parameters in cross validation experi-

ments with the configuration of 10ms window length,

5ms overlap and 21-dimensional normalized feature

vectors.

Due to the limited data quantity we stopped evaluating the number of Gaussians at 10 in order to achieve reliable results. Similar experiments for tuning different parameters were carried out thoroughly, and we arrived at the conclusion that eight HMM states and ten Gaussians per state offer the best recognition results.
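The tuning procedure can be illustrated as a cross-validated search over both parameters. This is only a sketch under several assumptions: `train_and_score` is a hypothetical callback standing in for model training and decoding, the number of folds is not stated in the text, and the sketch searches the full grid whereas Figures 2 and 3 vary one parameter at a time.

```python
def k_fold_indices(n_items, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    if not 1 < k <= n_items:
        raise ValueError("need 1 < k <= n_items")
    sizes = [n_items // k + (1 if i < n_items % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n_items) if i < start or i >= stop]
        yield train, test
        start = stop


def grid_search(n_items, state_options, gaussian_options, train_and_score, k=5):
    """Return (mean accuracy, n_states, n_gaussians) of the best setting.
    `train_and_score(train, test, n_states, n_gaussians)` must return the
    accuracy obtained on the test indices; it is supplied by the caller."""
    best = None
    for n_states in state_options:
        for n_gaussians in gaussian_options:
            scores = [
                train_and_score(train, test, n_states, n_gaussians)
                for train, test in k_fold_indices(n_items, k)
            ]
            mean_accuracy = sum(scores) / len(scores)
            if best is None or mean_accuracy > best[0]:
                best = (mean_accuracy, n_states, n_gaussians)
    return best
```

A call such as `grid_search(len(segments), range(1, 11), range(1, 11), my_scorer)` would then evaluate every combination of up to ten states and ten Gaussians, where `segments` and `my_scorer` are placeholders for the annotated data and the actual training routine.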


Figure 2: Parameter tuning: number of HMM states. Win-

dow length: 10ms; overlap: 5ms; dimension of normalized

feature vectors: 21; number of Gaussians per each HMM

state: 5.

Figure 3: Parameter tuning: number of Gaussians per each

HMM state. Window length: 10ms; overlap: 5ms; dimen-

sion of normalized feature vectors: 21; number of HMM

states: 2.

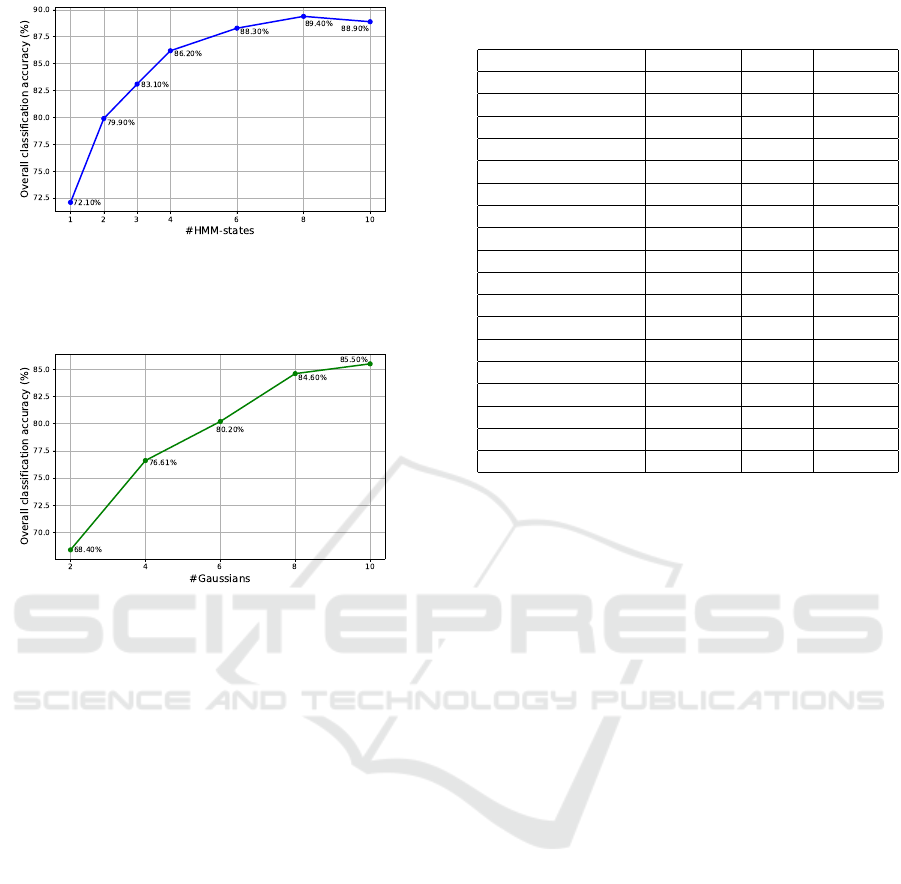

Using these currently best-performing parameters, the overall person-dependent recognition accuracy reaches almost 90%. Figure 4 shows the recognition results, in percent, of one cross validation experiment as a confusion matrix. Table 8 gives the Precision, Recall and F-Score of each activity, averaged over the cross validation experiments.

As can be seen in Figure 4 and Table 8, ac-

tivities ”jump-one-leg”, ”jump-two-leg”, ”lateral-

shuffle-right” and ”stair-up” were correctly recog-

nized in every experiment.

Recently we applied feature vector stacking followed by a linear discriminant analysis and further improved the results to roughly 95% recognition accuracy. In addition, we plan to apply a merge-split algorithm for the adaptive determination of the optimal number of Gaussians per state. Additional work to further improve the performance is under way.

Table 8: Precision, Recall and F-Score of each activity, averaged over the cross validation experiments.

| Activity | Precision | Recall | F-Score |
|---|---|---|---|
| sit | 0.85 | 0.78 | 0.81 |
| stand | 0.90 | 0.75 | 0.81 |
| sit-to-stand | 0.95 | 1.00 | 0.97 |
| stand-to-sit | 0.96 | 1.00 | 0.98 |
| stair-up | 1.00 | 1.00 | 1.00 |
| stair-down | 0.96 | 1.00 | 0.98 |
| walk | 0.92 | 0.88 | 0.90 |
| curve-left-step | 0.79 | 0.83 | 0.80 |
| curve-left-spin | 0.71 | 0.91 | 0.78 |
| curve-right-step | 0.95 | 0.88 | 0.91 |
| curve-right-spin | 0.80 | 1.00 | 0.89 |
| run | 0.90 | 0.86 | 0.87 |
| v-cut-left | 0.66 | 0.70 | 0.64 |
| v-cut-right | 0.50 | 0.56 | 0.50 |
| lateral-shuffle-left | 0.96 | 0.96 | 0.95 |
| lateral-shuffle-right | 1.00 | 1.00 | 1.00 |
| jump-one-leg | 1.00 | 1.00 | 1.00 |
| jump-two-leg | 1.00 | 1.00 | 1.00 |
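For reference, the three criteria in Table 8 can be derived from a confusion matrix as sketched below. The function name is hypothetical and the counts are invented for illustration; they are not taken from Figure 4. Because Table 8 reports averages over several experiments, the F-Score of a row need not equal the harmonic mean of that row's averaged Precision and Recall.

```python
def per_class_metrics(confusion, labels):
    """Compute (precision, recall, f_score) per activity.
    confusion[i][j] counts segments of reference activity i
    that were recognized as activity j."""
    metrics = {}
    for idx, label in enumerate(labels):
        true_positive = confusion[idx][idx]
        recognized_as = sum(row[idx] for row in confusion)   # column sum
        reference = sum(confusion[idx])                      # row sum
        precision = true_positive / recognized_as if recognized_as else 0.0
        recall = true_positive / reference if reference else 0.0
        denom = precision + recall
        f_score = 2.0 * precision * recall / denom if denom else 0.0
        metrics[label] = (precision, recall, f_score)
    return metrics


# Toy example with two activities and made-up counts.
labels = ["sit", "stand"]
confusion = [
    [8, 2],   # 10 reference "sit" segments, 2 recognized as "stand"
    [1, 9],   # 10 reference "stand" segments, 1 recognized as "sit"
]
for activity, (p, r, f) in per_class_metrics(confusion, labels).items():
    print(f"{activity}: precision={p:.2f} recall={r:.2f} f-score={f:.2f}")
```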

4 WEARABLE REAL-TIME HAR SYSTEM

We developed a wearable real-time HAR system and

used the above described seven-activities dataset (sec-

tion 3.1) to investigate the end-to-end system perfor-

mance.

4.1 Balance Accuracy versus Speed

As Table 5 shows, no activity in this dataset is shorter than 1.19 seconds. This a-priori information guided our choice of the window length and the window overlap, and both were optimized to balance recognition accuracy against processing delay. The activities in the seven-activities dataset were modeled with one HMM state per activity and a longer window length was chosen. A shorter step size results in a shorter delay of the recognition outcomes, but the interim recognition results may fluctuate due to temporary search errors. On the other hand, a longer delay due to long step sizes contradicts the characteristics of a real-time system, though it generates more accurate interim recognition results. Based on experiments we chose a balanced setting of 400ms window length with 200ms window overlap. These values gave satisfactory recognition results with a barely noticeable delay of less than 1 second.
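The chosen setting can be checked with a little arithmetic. The sketch below assumes the 1000Hz rate that all channels share after upsampling and uses the shortest activity of Table 5 (1.19s) as the segment length; `window_plan` is a hypothetical helper, not part of ASK.

```python
def window_plan(sampling_rate_hz, window_ms, overlap_ms, segment_ms):
    """Return (window length in samples, step size in samples,
    number of complete windows that fit into the segment)."""
    window = sampling_rate_hz * window_ms // 1000
    overlap = sampling_rate_hz * overlap_ms // 1000
    step = window - overlap
    if step <= 0:
        raise ValueError("overlap must be shorter than the window")
    segment = sampling_rate_hz * segment_ms // 1000
    if segment < window:
        return window, step, 0
    return window, step, (segment - window) // step + 1


# 400ms windows with 200ms overlap on the shortest activity (1.19s).
print(window_plan(1000, 400, 200, 1190))   # (400, 200, 4)
```

With these values a new window is completed every 200ms, and even the shortest activity in the dataset is covered by four complete windows, which gives the decoder several observations per activity.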


Figure 4: Confusion matrix of recognition results in percentage from one cross validation experiment.

4.2 Graphical User Interface (GUI) and Customization

After model training, the system starts recording data continuously from the biosensors integrated into the knee bandage. We implemented this new functionality with a graphical user interface (GUI) in the ASK PC-software (Liu and Schultz, 2018) to continuously output the recognition results as well as to visualize the biosignals. The latter feature enables the user to monitor the biosignal recording, while the former may serve as input to post-processing steps and inform downstream applications. The recorded data are displayed serially on the left-hand side of the interface display, and the n-best recognition results (we usually set n to 3) are shown on the right-hand side as activity classes whose calculated probabilities are indicated by the length of bars (see Figure 5).
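How an n-best list with probabilities might be derived from decoder scores is sketched below. The text does not describe how the displayed probabilities are calculated, so normalizing per-activity log scores with a softmax is an assumption, and `n_best` is a hypothetical helper.

```python
import math


def n_best(log_scores, n=3):
    """Return the n most probable activities as (name, probability) pairs.
    `log_scores` maps each activity to a log score; probabilities are
    obtained by normalizing the exponentiated scores over all activities."""
    if not log_scores:
        return []
    peak = max(log_scores.values())
    weights = {name: math.exp(score - peak) for name, score in log_scores.items()}
    total = sum(weights.values())
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    return [(name, weight / total) for name, weight in ranked[:n]]


# Made-up log scores for four activities.
scores = {"walk": -10.0, "curve-left": -11.0, "stand": -14.0, "sit": -20.0}
for name, probability in n_best(scores, n=3):
    bar = "#" * round(probability * 20)     # crude text version of the GUI bars
    print(f"{name:12s} {probability:.2f} {bar}")
```

The probabilities are normalized over all activities before truncation, so the displayed n values generally sum to slightly less than one.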

The GUI allows biosignals and activities to be switched on and off for the real-time activity recognition. This makes it straightforward to quickly test the sensors and system properties during system development and evaluation (see Figure 6).

4.3 On-the-Fly Extensions

After we successfully tested our real-time HAR system using the seven-activities dataset, we implemented a new function named “plug-and-play”. This function can be understood literally: we can load new activity sensor data on the fly, retrain the activity models, and restart the recognition process automatically with the updated activity models. The “plug-and-play” function supports the following three use cases:

Providing more Training Data for an existing Activity. In (Liu and Schultz, 2018) we presented a framework named ASK for data acquisition and semi-automatic annotation. The real-time HAR system is also an extension of this framework. Therefore, we can use the “annotator mode” (see Figure 6) to record more data, such as “stand-to-sit”, and at the same time generate annotation labels for them (see Figure 7). These new data will automatically be used the next time the real-time HAR system is trained; that is to say, we “plug” in more data and “play” with an improved recognition system.

Figure 5: Screenshot: the performance of the real-time HAR system.

Figure 6: Screenshot: sensor and activity selection menu.

Figure 7: Screenshot: ASK software with annotation mode: the next activity to do is “stand-to-sit”.

Increase the Activity Classes to be recognized. The recognition of new activities is enabled just as simply. We only need to type a new activity name, such as “lie down”, into the text box, re-run “annotator mode”, and record and label a minimum of 12 instances of “lie down”. These steps take about five minutes; when they are finished, the real-time HAR system is started with the new activity “lie down” ready to be recognized.

Enable the Study of Person-independent Real-time HAR System. Similar to the first use case, we can record more data for existing activities from different subjects. The system will then serve automatically as a person-independent HAR system, provided that we continue to study proper model configurations and parameters for person-independent application.
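The three use cases share one mechanism: new labelled segments are added to the data pool, and all models are retrained before decoding restarts. A minimal sketch of this idea is given below. The class `ActivityStore` and the `train_fn` callback are hypothetical and do not reflect the ASK code; applying the minimum of 12 instances to every activity, not only to new ones, is likewise an assumption.

```python
from collections import defaultdict

MIN_INSTANCES = 12   # minimum number of labelled instances quoted in the text


class ActivityStore:
    """Keeps labelled segments per activity and retrains models on demand."""

    def __init__(self, train_fn):
        # train_fn(activity, segments) returns a trained model (caller supplied).
        self._train_fn = train_fn
        self._segments = defaultdict(list)
        self.models = {}

    def plug(self, activity, segments):
        """Add newly recorded and annotated segments for an activity,
        which may be an existing or a brand-new activity name."""
        self._segments[activity].extend(segments)

    def play(self):
        """Retrain all activities with enough data and return their names."""
        self.models = {
            activity: self._train_fn(activity, segments)
            for activity, segments in self._segments.items()
            if len(segments) >= MIN_INSTANCES
        }
        return sorted(self.models)


# Dummy trainer that just remembers how many segments it saw.
store = ActivityStore(train_fn=lambda activity, segments: len(segments))
store.plug("sit", [[0.0]] * 12)
store.plug("lie down", [[0.0]] * 5)      # not enough instances yet
print(store.play())                      # ['sit']
store.plug("lie down", [[0.0]] * 7)      # now 12 instances in total
print(store.play())                      # ['lie down', 'sit']
```

Segments recorded from additional subjects would be added through the same `plug` call, which is why the person-independent use case needs no extra mechanism beyond suitable model parameters.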

5 CONCLUSIONS

In this paper we presented a wearable real-time Human Activity Recognition (HAR) system using biosensors integrated into a knee bandage that capture a variety of biosignals related to human everyday activities. This HAR system opens up new avenues for computer-aided assistive rehabilitation systems using wearable medical appliances. To the best of our knowledge, this is the first work which implements a real-time HAR system using biosensors integrated into a knee bandage.

The paper describes the biosensors and devices that capture biosignals related to human activities, the design and implementation of a software platform integrating methods for modeling, training, and recognition of human activities based on biosignals, and a graphical interface for interacting with the user. The final HAR system recognizes human daily activities in real time with an accuracy above 90% and a barely noticeable delay. It further provides a “plug-and-play” function for on-the-fly extensions such as enabling the recognition of unseen activities. Further work will be devoted to improving the recognition performance in a person-independent setting on a larger set of activities, and to integrating the HAR output into an assistive rehabilitation system for people suffering from gonarthrosis.

REFERENCES

Amma, C., Gehrig, D., and Schultz, T. (2010). Airwrit-

ing recognition using wearable motion sensors. In

First Augmented Human International Conference,

page 10. ACM.

Bao, L. and Intille, S. S. (2004). Activity recognition from

user-annotated acceleration data. In Pervasive com-

puting, pages 1–17. Springer.

De Leonardis, G., Rosati, S., Balestra, G., Agostini, V.,

Panero, E., Gastaldi, L., and Knaflitz, M. (2018). Hu-

man activity recognition by wearable sensors: Com-

parison of different classifiers for real-time applica-

tions. In 2018 IEEE International Symposium on

Medical Measurements and Applications (MeMeA),

pages 1–6. IEEE.

Fleischer, C. and Reinicke, C. (2005). Predicting the in-

tended motion with emg signals for an exoskeleton

orthosis controller. In 2005 IEEE/RSJ International

Conference on Intelligent Robots and Systems (IROS

2005), pages 2029–2034.

Kwapisz, J. R., Weiss, G. M., and Moore, S. A. (2010).

Activity recognition using cell phone accelerometers.

In Proceedings of the Fourth International Workshop

on Knowledge Discovery from Sensor Data, pages 10–

18.

Liu, H. and Schultz, T. (2018). Ask: A framework for data

acquisition and activity recognition. In 11th Interna-

tional Conference on Bio-inspired Systems and Signal

Processing, Madeira, Portugal, pages 262–268.

Lukowicz, P., Ward, J. A., Junker, H., Stäger, M., Tröster, G., Atrash, A., and Starner, T. (2004). Recognizing workshop activity using body worn microphones and accelerometers. In Pervasive Computing, pages 18–32.

Mathie, M., Coster, A., Lovell, N., and Celler, B. (2003). Detection of daily physical activities using a triaxial accelerometer. Medical and Biological Engineering and Computing, 41(3):296–301.

Naeemabadi, M. R., Dinesen, B., Andersen, O. K., Najafi,

S., and Hansen, J. (2018). Evaluating accuracy and us-

ability of microsoft kinect sensors and wearable sen-

sor for tele knee rehabilitation after knee operation.

In 11th International Conference on Biomedical Elec-

tronics and Devices, Biodevices 2018.

Rebelo, D., Amma, C., Gamboa, H., and Schultz, T. (2013).

Activity recognition for an intelligent knee orthosis.

In 6th International Conference on Bio-inspired Sys-

tems and Signal Processing, pages 368–371. BIOSIG-

NALS 2013.

Rowe, P., Myles, C., Walker, C., and Nutton, R. (2000). Knee joint kinematics in gait and other functional activities measured using flexible electrogoniometry: how much knee motion is sufficient for normal daily life? Gait & Posture, 12(2):143–155.

Sutherland, D. H. (2002). The evolution of clinical

gait analysis: Part ii kinematics. Gait & Posture,

16(2):159–179.

Yepes, J. C., Saldarriaga, A., Vélez, J. M., Pérez, V. Z., and Betancur, M. J. (2017). A hardware-in-the-loop simulation study of a mechatronic system for anterior cruciate ligament injuries rehabilitation. In BIODEVICES, pages 69–80.
