Multi-agent Systems in Remote Sensing Image Analysis

Peter Hofmann

Institute of Applied Informatics, Deggendorf Institute of Technology, Technology Campus Freyung, Grafenauer Str. 22,

D-94078 Freyung, Germany

Interfaculty Department of Geoinformatics, Z_GIS, Schillerstr. 30, A-5020 Salzburg, Austria

Keywords: Multi-agent Systems, Remote Sensing, Object based and Agent based Image Analysis.

Abstract: With remote sensing data and methods we gain deeper insight into many processes at the Earth’s surface. Thus,

they are a valuable data source to gather geo-information of almost any kind. While the progress of remote

sensing technology continues, the amount of available remote sensing data increases. Hence, besides effective

strategies for data mining and image data retrieval, reliable and efficient methods of image analysis with a

high degree of automation are needed in order to extract the information hidden in remote sensing data. Due

to the complex nature of remote sensing data, recent methods of computer vision and image analysis do not yet allow a fully automatic and highly reliable analysis of remote sensing data. Most of these methods are

rather semi-automatic with a varying degree of automation depending on the data quality, the complexity of

the image content and the information to be extracted. Thus, visual image interpretation in many cases is still

seen as the most appropriate method to gather (geo-) information from remote sensing data. To increase the

degree of automation, the application of multi-agent systems in remote sensing image analysis is currently under research. The present paper summarizes recent approaches and outlines their potential.

1 INTRODUCTION

Remote sensing data is a valuable data source for a

variety of disciplines related to Earth’s surface and

the environment. With it, fast and even ad hoc maps

can be produced (e.g. for hazard management) or

long-term processes and their footprints can be

monitored (e.g. the ongoing deforestation, the global

urbanisation or the desertification). Further, archives

of remote sensing data are growing continuously (Ma

et al. 2015). In this context, terms such as “digital

Earth” (Boulton 2018) or “Big Earth data” (Guo

2017) evolved recently. However, in comparison to other types of image data, remote sensing data are particularly difficult to handle due to their complex contents and characteristics. Thus, in many cases,

human image interpretation is understood as the most

reliable method to extract geo-information from

remote sensing data. However, manual mapping from

remote sensing data needs a lot of experience in

image interpretation and is very labour intensive. The

results of manual image interpretation are subjective

and of limited reproducibility. However, automatic methods that produce results comparable to those of human image interpretation are not yet in sight.

Recent automatic methods must compromise

between the degree of automation and the accuracy

and reliability of the results. The higher the level of

detail and accuracy, the more individual imaging

situations must be considered. This, in turn, increases

the complexity of the rule sets and algorithms applied,

which simultaneously reduces their robustness and

general applicability. This dilemma has already been noted by Hofmann et al. (2011), Rokitnicki-Wojcik

et al. (2011), Kohli et al. (2013) and Anders et al.

(2015). Current strategies to increase the degree of

automation follow a design pattern approach as known from engineering: so-called “master rule sets” are developed for similar problems, and results for individual images are produced by deriving a specialized solution from them (Tiede et al. 2010).

However, depending on the complexity of the

mapping task and the data used, the human effort with

these approaches is still relatively high. Thus, to

efficiently exploit the ever-growing remote sensing

and geo-data archives the degree of automation in

image analysis must increase. That is, automatic

remote sensing image analysis must become more

flexible and robust against perturbations, similar to the way human visual image interpretation already is.


Hofmann, P.

Multi-agent Systems in Remote Sensing Image Analysis.

DOI: 10.5220/0007381201780185

In Proceedings of the 11th International Conference on Agents and Artificial Intelligence (ICAART 2019), pages 178-185

ISBN: 978-989-758-350-6

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Research in remote sensing image analysis

traditionally investigates the potential of AI methods

– mainly those of computer vision. Investigating

agent-based methods could foster the degree of

automation and reliability in this particular field,

since automating the analysis of remote sensing data is less a computer vision problem than a problem of optimally applying, linking and parameterizing known methods of computer vision and image processing. Knowledge and knowledge description play a key role in this context: while visual image interpretation uses so-called “interpretation keys”, which verbally describe what the objects of interest look like, computer-based image analysis incorporates domain-specific knowledge, knowledge about the data’s genesis and knowledge about sensible methods to process the data in one way or another (e.g. Belgiu et al., 2014; Arvor et al. 2013). Once

made explicit, e.g. as a formal ontology, this

knowledge can be used as rules, rule sets and/or

algorithms for image analysis. Nevertheless, knowledge is often incorporated implicitly, too,

e.g. by Artificial Neural Networks (ANNs) or by

other sample based classification methods.

Independent of its representation, this knowledge is

often distinguished into: declarative knowledge

which describes the characteristics of the expected

object-classes and procedural knowledge which

describes the necessary image processing methods.

Accordingly, recent agent-based methods of image

analysis can be roughly separated into two types:

methods which operate at procedural level and try to

adapt existing methods similar to the design pattern approach, and methods which operate at declarative

level and try to optimize the objects’ representation in

the image, that is, their delineation. However,

applying agent-based methods for remote sensing

image analysis is still at its beginning and has a lot of

potential which goes beyond the improvement of

image analysis. The present paper tries to outline the

state of the art in this particular field and its potential

for future applications.

2 REMOTE SENSING IMAGE

ANALYSIS

While visual image interpretation of remote sensing

data is still a common way to gather information from

remote sensing images, there have been attempts to automate image analysis at least since the 1970s (e.g. Colwell, 1968). Until the millennium, Landsat images with a resolution of 30 m were the dominating set of optical Earth Observation (EO) data. Thus, for most applications it was sufficient

to analyse images based on the radiometry and its

statistics stored in single pixels. Before the

millennium, higher spatial resolution could only be

achieved with airborne data, but from 2000 onwards

the resolution of space borne data increased from 1m

to 0.3m in 2010. Although with the new sensors more

details were visually recognizable, automated image

analysis of this kind of data became rather complex.

It soon turned out that new analysis methods for Very

High Resolution (VHR) remote sensing data were

necessary. Thus, methods which operate on image

segments (Object-Based Image Analysis, OBIA)

instead of pixels and which incorporate formal expert

knowledge became more and more popular (Benz et

al. 2004; Blaschke 2010). Blaschke et al. (2014) even spoke of a paradigm change in remote

sensing image analysis.

In order to reuse methods once they have been developed, workflows of individual image analyses can be recorded, stored and re-applied in one way or another (such stored workflows are often called rule sets). For this purpose, Domain-Specific

Languages (DSL) comprising all necessary domain

specific terms, rules and knowledge descriptions were

developed (e.g. Schmidt et al., 2007). With these

DSLs it is possible to develop individual solutions

according to the design-pattern approach.

2.1 Pixel-based Image Analysis

In remote sensing many methods of pixel-based

image analysis are applied. Some of them are specific to the remote sensing domain, such as the calculation of the Normalized Difference Vegetation Index (NDVI) and ortho-rectification; others are

rather general, such as texture analysis based on the

Grey Level Co-Occurrence Matrix (GLCM). For

analysis purposes each pixel of an image is assigned

to a meaningful real-world class, that is, pixels are

classified by an arbitrary supervised or unsupervised

classification method. Besides the original grey

values, derivative pixel values such as the NDVI or

GLCM values can extend the feature space for the

classifier. The list of classification algorithms

meanwhile ranges from simple threshold-based

classifiers, clustering algorithms and Support Vector

Machines (SVMs) to Fuzzy Classifiers, Bayesian

Networks and ANNs.
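The idea of extending the feature space by derived pixel values can be sketched in a few lines of Python. The following is only a minimal illustration under stated assumptions: the function names, the two-band input and the training samples are made up, and a minimum-distance-to-mean classifier stands in for any of the supervised classifiers listed above. Note that the grey values dominate the distance here because the features are not rescaled.

```python
import math


def ndvi(nir, red):
    """NDVI from NIR and Red grey values; 0.0 when both bands are zero."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def features(red, nir):
    """Feature vector: original grey values plus NDVI as a derived value."""
    return (red, nir, ndvi(nir, red))


def class_means(samples):
    """Mean feature vector per class from labelled training vectors."""
    means = {}
    for name, vectors in samples.items():
        dims = range(len(vectors[0]))
        means[name] = tuple(sum(v[i] for v in vectors) / len(vectors) for i in dims)
    return means


def classify(vector, means):
    """Assign the class whose mean is closest in feature space."""
    return min(means, key=lambda name: math.dist(vector, means[name]))


# Made-up training samples (red, nir) for two hypothetical classes.
training = {
    "vegetation": [features(30, 120), features(40, 130)],
    "bare soil": [features(90, 60), features(100, 70)],
}
means = class_means(training)
label = classify(features(35, 118), means)
```

In practice the features would be normalised and the classifier chosen according to the imaging situation, which is exactly the kind of expert decision discussed in the following paragraph.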

Nevertheless, for a successful application of all

these methods, a thorough knowledge of image

processing and remote sensing is essential. That is,

pixel-based image analysis usually consists of an

(iterative) sequence of image processing methods


which needs to be adapted according to the individual

imaging situation (Lillesand et al. 2014; Canty 2014).

2.2 Object-based Image Analysis

In OBIA a (hierarchical) net of so-called image

objects is generated by arbitrary image

segmentations. With these image objects, many disadvantages that come with the pixel-based approach for VHR remote sensing data vanish, such

as the decreased signal-to-noise ratio in VHR data

(the so-called “salt-and-pepper effect”; Blaschke and

Strobl, 2001). A further recognized advantage of

OBIA is its affinity to Geographic Information

Systems (GIS): image objects aka image segments are

very similar to polygons, which means many GIS-

typical (polygon) operations can be used similarly

with image objects. Additionally, GIS-polygons can

be used for image segmentation and their attributes

can be used in OBIA to support the classification.

Another advantage is the possibility to work with

object hierarchies: Image objects at different

segmentation levels represent pairwise disjoint

objects of different size (i.e. at different scale). This

approach reflects the multi-scale methods of

landscape analysis and allows the development of semantically rich rule sets for image analysis (Burnett and

Blaschke, 2003; Stoter et al., 2011).

Further, the usable feature space in OBIA is of

very high dimension: it comprises the objects’

physical properties (colour, form and texture) and

their semantic properties (hierarchical and spatial

relations to other objects with certain characteristics

and/or class memberships). Nevertheless, similar to

pixel-based image analysis the whole process of

analysing a single image can be very complex.
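As a rough illustration of how object features arise from a segmentation, the sketch below derives two physical properties (area and mean grey value) and one spatial relation (adjacency) per image object from a label image. The label image, the grey values and the function names are assumptions made for this example; it does not reproduce any particular OBIA software.

```python
from collections import defaultdict


def object_features(labels, values):
    """Aggregate area and mean grey value per segment of a label image."""
    acc = defaultdict(lambda: {"area": 0, "sum": 0.0})
    for label_row, value_row in zip(labels, values):
        for label, value in zip(label_row, value_row):
            acc[label]["area"] += 1
            acc[label]["sum"] += value
    return {
        label: {"area": a["area"], "mean": a["sum"] / a["area"]}
        for label, a in acc.items()
    }


def neighbours(labels):
    """Spatial relation: adjacent segment labels (4-neighbourhood)."""
    adjacency = defaultdict(set)
    rows, cols = len(labels), len(labels[0])
    for r in range(rows):
        for c in range(cols):
            # Looking right and down is enough to visit every pixel pair once.
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < rows and cc < cols and labels[rr][cc] != labels[r][c]:
                    adjacency[labels[r][c]].add(labels[rr][cc])
                    adjacency[labels[rr][cc]].add(labels[r][c])
    return dict(adjacency)


# Made-up 3 x 3 example: segment ids and one band of grey values.
labels = [[1, 1, 2], [1, 2, 2], [3, 3, 2]]
values = [[10, 12, 50], [14, 52, 54], [100, 102, 56]]
feats = object_features(labels, values)
adjacent = neighbours(labels)
```

Class-related features such as "borders an object classified as vegetation" could then be built on top of the adjacency relation once objects carry class labels.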

2.3 Knowledge Representation in

Image Analysis

Pixel-based and object-based image analysis

incorporate explicit and/or implicit knowledge for

object identification. The knowledge used can be divided into two principal domains (Bovenkamp et al. 2004): Procedural knowledge describes all image processing methods and parameterisations necessary to extract all intended object categories from the image data. If procedural knowledge is represented explicitly, it is described as a so-called task ontology. Declarative knowledge describes the shape of the intended object categories, that is, how these classes appear in the image data, similar to an image interpretation key but with measurable feature values and constraints. It can be represented explicitly by a so-called descriptive ontology and used to automatically infer an object’s class membership. Both knowledge

domains are interlinked, as the following example

demonstrates: vegetation can be easily identified in

remote sensing data using the NDVI. The NDVI is

commonly calculated by:

$$ NDVI = \frac{NIR - Red}{NIR + Red} \qquad (1) $$

where NIR represents the grey value in the Near Infrared band and Red the grey value in the Red band of a sensor. A value of 0.0 < NDVI ≤ 1.0 indicates “vegetation”, a value of -1.0 ≤ NDVI ≤ 0.0 indicates “no vegetation”. The declarative knowledge which

describes “vegetation” must represent this typical

shape of vegetation by an appropriate (classification)

rule, e.g.:

Class vegetation {

…

0.0 < NDVI(x) ≤ 1.0;

…

};

With x representing any individual pixel or

segment of an image. The procedural knowledge for

the class “vegetation” must include a description of

how the NDVI is calculated (see eq. 1) with the data

currently used, e.g.:

If sensor = “Landsat 8” THEN

NDVI(x) = (band 5(x) - band 4(x)) / (band 5(x) + band 4(x));

Endif.
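The interplay of both knowledge domains can be sketched in Python as follows. This is a minimal illustration, not a formal ontology: the lookup table, the function names and the sample pixels are assumptions, and the band numbers follow the usual band designations of Landsat 8 OLI (Red = band 4, NIR = band 5) and Landsat 7 ETM+ (Red = band 3, NIR = band 4).

```python
# Assumed lookup: which band number holds Red and NIR for a given sensor.
BAND_ROLES = {
    "Landsat 8": {"red": 4, "nir": 5},  # OLI
    "Landsat 7": {"red": 3, "nir": 4},  # ETM+
}


def ndvi(pixel, sensor):
    """Procedural knowledge: how NDVI (eq. 1) is computed for a sensor.

    `pixel` maps band numbers to grey values.
    """
    roles = BAND_ROLES[sensor]
    nir, red = pixel[roles["nir"]], pixel[roles["red"]]
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero for empty pixels
    return (nir - red) / total


def is_vegetation(ndvi_value):
    """Declarative knowledge: crisp rule describing the class vegetation."""
    return 0.0 < ndvi_value <= 1.0


# Made-up grey values for one Landsat 8 pixel.
pixel = {4: 30, 5: 120}
vegetated = is_vegetation(ndvi(pixel, "Landsat 8"))
```

Keeping the sensor-specific calculation apart from the class rule means that the rule stays valid when the sensor changes; only the procedural part has to be adapted.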

Procedural and declarative knowledge can be represented in many ways. In the example given, it is noted explicitly and crisply, but it could also be represented implicitly and/or in a fuzzy manner. By noting this knowledge explicitly, e.g. as a formal ontology, it can be reused and/or adapted easily. However, implicit representations (e.g. as a trained classifier or as a Convolutional Neural Network,

CNN) are possible, too, but have a black-box

character and are therefore less comprehensible and

less adaptable.
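To illustrate the difference between a crisp and a fuzzy explicit representation, the crisp NDVI rule for “vegetation” could be replaced by a membership function. The linear ramp and its bounds (0.0 and 0.3) below are purely illustrative assumptions, not values taken from the literature cited here.

```python
def fuzzy_vegetation(ndvi_value, lower=0.0, upper=0.3):
    """Degree of membership in 'vegetation' as a linear ramp.

    Returns 0.0 at or below `lower`, 1.0 at or above `upper`,
    and a linearly interpolated value in between.
    """
    if ndvi_value <= lower:
        return 0.0
    if ndvi_value >= upper:
        return 1.0
    return (ndvi_value - lower) / (upper - lower)


memberships = [fuzzy_vegetation(v) for v in (-0.2, 0.15, 0.6)]
```

A fuzzy representation keeps the rule explicit and readable while still expressing how well an object matches the class description.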

3 AGENT-BASED METHODS IN

IMAGE ANALYSIS

Applying agent-based methods in image analysis is

relatively new. According to Rosin and Rana (2004)

many methods of computer vision which claim to be


agent-based are not. They often lack basic elements

of agent-based computing, such as situation

awareness, autonomy of individual agents, goal-

orientation of agents, cooperation and

communication of agents and many more. However,

some recent agent-based methods of image analysis

follow the agent-based paradigm (Jennings 1999;

Wooldridge 1998). Especially in remote sensing,

agent-based approaches for image analysis can be

separated into two major types as outlined in section

1: procedural level approaches and declarative level

approaches.

3.1 Approaches Acting at Procedural

Level

In the very beginning of agent-based image analysis,

Multi Agent Systems (MAS) were mainly used to

parallelize necessary image processing tasks and to

improve their performance (Lueckenhaus and

Eckstein, 1997). Besides the potential for parallelisation of image analysis, Lueckenhaus and Eckstein (1997) outlined the ability of software agents to be aware of their environment, to cooperate, to learn and to plan, that is, to react flexibly to a varying environment and to be goal-oriented. Thus, their agent-based system for

image analysis went beyond a simple parallelisation

of image analysis tasks. It rather enabled the MAS to

autonomously organize all necessary image analysis

procedures in order to optimize the results and the

operating costs.

Zhou et al. (2004) followed this approach but

aimed at an increase of performance and robustness

of computer vision systems for real-time applications

in dynamic environments. They organised the underlying MAS architecture like a Resource Management (RM) system, wherein software agents negotiate processing priorities and resources according to the current situation of the system and its environment. Their system has been tested, among other applications, in remote sensing to reduce and optimize the downlink of satellites.

Heutte et al. (2004) introduced a similar system for handwritten text recognition. But in contrast to the system of Zhou et al. (2004), this system incorporates knowledge at different levels. For each knowledge level a corresponding group of specialised software agents was created, each of which is responsible for a dedicated task (e.g. for letter recognition or

feature extraction).

Cellular automata (Liu and Tang, 1999) were

another approach, primarily for image segmentation.

Pixels aka cells or agents which meet certain

homogeneity criteria were labelled and aggregated to

image segments.
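The principle of labelling and aggregating pixels that meet a homogeneity criterion can be sketched as a simple region growing procedure. This is not the algorithm of Liu and Tang (1999); the criterion (absolute grey value difference to the seed pixel), the 4-neighbourhood and the function name are assumptions chosen to keep the example short.

```python
from collections import deque


def grow_segments(image, tolerance):
    """Label 4-connected pixels whose grey value differs from the
    seed pixel of their segment by at most `tolerance`."""
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]  # 0 means "not yet labelled"
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c]:
                continue
            next_label += 1
            seed = image[r][c]
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (
                        0 <= ny < rows
                        and 0 <= nx < cols
                        and not labels[ny][nx]
                        and abs(image[ny][nx] - seed) <= tolerance
                    ):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
    return labels


# Made-up 3 x 3 grey value image.
image = [[10, 11, 50], [12, 51, 52], [90, 91, 53]]
segments = grow_segments(image, tolerance=5)
```

In an agent-based variant each pixel or cell would decide locally whether to join a neighbouring segment, instead of being visited by a central loop as in this sketch.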

3.2 Approaches Acting at Declarative

Level

Bovenkamp et al. (2004) introduced a MAS for segmenting IntraVascular UltraSound (IVUS) images by describing and applying procedural knowledge and declarative knowledge simultaneously. In their approach five different specialized types of segmentation agents, each of which is responsible for the delineation of different object classes, plus a control instance responsible for resolving conflicts were implemented and connected to form a MAS. The MAS incorporates global constraints,

contextual knowledge and local image information.

To the knowledge of the author, Samadzadegan et al. (2010) were the first to apply agent-based methods in the remote sensing domain. Similar to the

approach of Bovenkamp et al. (2004) they developed

a MAS which consists of two groups of software

agents to classify pixels in a Digital Elevation Model

(DEM). The DEM has been derived from a Light

Detection And Ranging (LiDAR) point cloud and is

represented as a 2D grid of cells. Within the groups,

agents can apply dedicated procedures of image

processing and reasoning in order to extract buildings

and trees from the data. Conflicts occurring during the

detection process are solved by a “coordinator agent”.

In both approaches, declarative knowledge has been applied for reasoning about the class membership of each

segment.
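The role of a coordinating instance that resolves conflicts between class-specific agents can be sketched as follows. The classes, the confidence rules based on height and surface roughness, and the confidence threshold are hypothetical and only serve to show the mechanism; they are not taken from Samadzadegan et al. (2010) or Bovenkamp et al. (2004).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    cell: tuple        # (row, col) of the DEM cell
    label: str         # proposed class
    confidence: float  # in [0, 1]


@dataclass
class ClassAgent:
    label: str
    rule: Callable[[dict], float]  # maps cell features to a confidence

    def propose(self, cell, cell_features):
        return Proposal(cell, self.label, self.rule(cell_features))


class CoordinatorAgent:
    """Keeps the most confident proposal per cell and drops weak ones."""

    def __init__(self, min_confidence=0.5):
        self.min_confidence = min_confidence

    def resolve(self, proposals):
        best = {}
        for p in proposals:
            if p.confidence < self.min_confidence:
                continue
            if p.cell not in best or p.confidence > best[p.cell].confidence:
                best[p.cell] = p
        return {cell: p.label for cell, p in best.items()}


# Hypothetical declarative rules: tall and smooth suggests a building,
# tall and rough suggests a tree.
building = ClassAgent("building", lambda f: min(1.0, f["height"] / 10) * (1 - f["roughness"]))
tree = ClassAgent("tree", lambda f: min(1.0, f["height"] / 10) * f["roughness"])

cells = {
    (0, 0): {"height": 10.0, "roughness": 0.1},
    (0, 1): {"height": 8.0, "roughness": 0.8},
    (1, 0): {"height": 0.5, "roughness": 0.5},
}
proposals = [a.propose(c, f) for c, f in cells.items() for a in (building, tree)]
result = CoordinatorAgent().resolve(proposals)
```

Cells for which no agent reaches the minimum confidence simply stay unclassified in this sketch.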

Mahmoudi et al. (2013 and 2014) were the first to combine agent-based methods with OBIA

methods. For the purpose of mapping urban structures

in WorldView-2 satellite data, they segmented the

image using a global segmentation algorithm, here:

the Multi-Resolution Segmentation (MRS) according

to Baatz and Schäpe (2000), and then applied a MAS

to assign the segments to classes. That is, reasoning agents used declarative knowledge for assigning each segment to the corresponding class. However, because it dispenses with agents responsible for the segmentation or other sensible image processing operations, that is, agents acting at procedural level, this approach is relatively static.

Borna et al. (2014, 2015 and 2016) introduced an

agent-based system which allows image objects in

OBIA to dynamically change their shape depending

on each individual’s appearance and spatial context

(“elastic boundary”). However, their approach is very

similar to that of Samadzadegan et al. (2010) and

Bovenkamp et al. (2004), except that it uses image


objects represented as GIS vector objects instead of

pixels. The dynamics of the “elastic boundaries” are driven by general abilities each “vector-agent” (VA) has rather than by the class assignment or

intermediate classification results. That is, declarative

knowledge has no impact on the VAs’ behaviour.

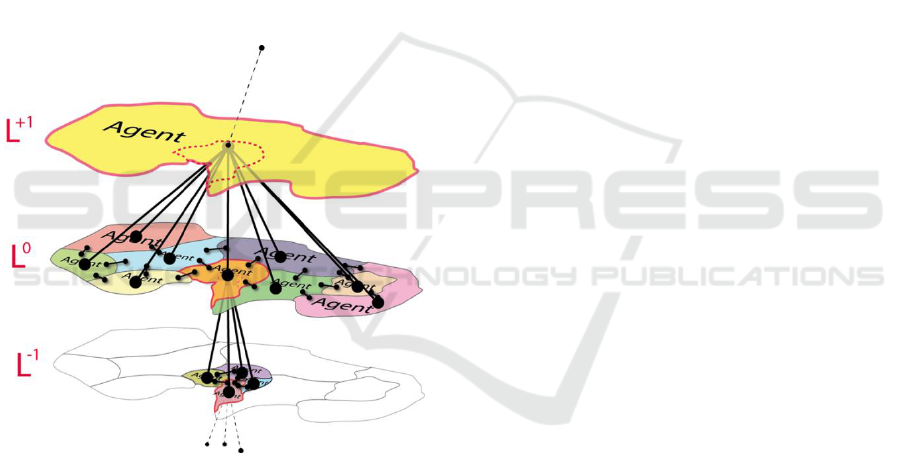

At the same time Hofmann et al. (2014, 2015 and

2016) presented a conceptual framework for Agent

Based Image Analysis (ABIA) of remote sensing

data. The main focus of their research was to mimic a

human operator who would either adjust an existing

rule set (design pattern approach) or manually correct

the object delineation aka image segmentation. They

developed two types of independent MAS: (1) a MAS

consisting of so-called Rule Set Adaptation Agents

(RSAAs) and one or more Control Agents (CAs) to

autonomously adapt given rule sets, and (2) a MAS

of hierarchically organized Image Object Agents

(IOAs) which can autonomously adapt their segment-

boundaries (Fig. 1).

Figure 1: Hierarchy of Image Object Agents (IOAs).
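The interplay of a rule set adaptation agent and a control agent described above can be sketched as a simple feedback loop. Everything in this sketch is an assumption made for illustration: the rule set is reduced to a single NDVI threshold, the control agent scores results against reference labels (a real system would need a different quality measure when no reference is available), and the adaptation is plain hill climbing. It does not reproduce the ABIA framework itself.

```python
def classify(ndvi_values, threshold):
    """Minimal 'rule set': an object is vegetation if NDVI > threshold."""
    return [v > threshold for v in ndvi_values]


class ControlAgent:
    """Evaluates intermediate results; here against reference labels."""

    def __init__(self, reference):
        self.reference = reference

    def quality(self, result):
        hits = sum(a == b for a, b in zip(result, self.reference))
        return hits / len(self.reference)


class RuleSetAdaptationAgent:
    """Adapts the threshold step by step as long as quality improves."""

    def __init__(self, threshold, step=0.05):
        self.threshold = threshold
        self.step = step

    def adapt(self, ndvi_values, control, max_rounds=20):
        best = control.quality(classify(ndvi_values, self.threshold))
        for _ in range(max_rounds):
            candidates = (self.threshold - self.step, self.threshold + self.step)
            quality, threshold = max(
                (control.quality(classify(ndvi_values, t)), t) for t in candidates
            )
            if quality <= best:
                break  # no neighbouring threshold improves the result
            best, self.threshold = quality, threshold
        return best


# Made-up NDVI values of six image objects and their reference classes.
ndvi_values = [0.02, 0.07, 0.12, 0.35, 0.5, 0.6]
reference = [False, False, False, True, True, True]
agent = RuleSetAdaptationAgent(threshold=0.0)
final_quality = agent.adapt(ndvi_values, ControlAgent(reference))
```

Hill climbing of this kind can get stuck on plateaus of equal quality, which is one reason why more elaborate negotiation or learning strategies are of interest.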

Parts of the latter approach were further extended

in (Hofmann, 2017) by a fuzzy Belief Desire Intention (fBDI) model which allows each IOA to decide in a fuzzy manner on its next intended

action.
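How an agent might select its next intended action in a fuzzy manner can be illustrated with a very small sketch. The beliefs, the three candidate actions and the fuzzy operators (minimum for AND, complement for NOT) are assumptions for this example and are not the fBDI model of Hofmann (2017).

```python
def choose_intention(beliefs):
    """Pick the action with the highest fuzzy activation.

    `beliefs` holds degrees in [0, 1], e.g. how well the object is
    classified and how similar its neighbourhood is.
    """
    well = beliefs["well_classified"]
    similar = beliefs["neighbour_similar"]
    activation = {
        "keep": well,                            # satisfied with current shape
        "grow": min(1 - well, similar),          # NOT well AND similar
        "shrink": min(1 - well, 1 - similar),    # NOT well AND NOT similar
    }
    action = max(activation, key=activation.get)
    return action, activation


action, activation = choose_intention(
    {"well_classified": 0.3, "neighbour_similar": 0.8}
)
```

Because the activations are degrees rather than yes/no decisions, they could also be used to rank several possible actions instead of selecting only one.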

Troya-Galvis et al. (2016, 2018a and 2018b)

investigated an approach to optimise image segments

by means of controlling their classification quality

through software agents. Similar to the approach of

VAs in Borna et al. (2014, 2015 and 2016) and of

IOAs in Hofmann et al. (2014, 2015 and 2016) this

approach incorporates declarative knowledge to

trigger software agents in order to improve each

individual segment. After an initial segmentation,

software agents can negotiate ambiguously classified

or unclassified pixels in order to improve the

segments’ classification quality. To avoid deadlocks,

the segment-optimisation is applied in a cascaded manner and

starts randomly. A control instance evaluates the

achieved quality and triggers potential further

segment adaptations.
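The negotiation over unclassified pixels can be sketched in a strongly simplified form. The bidding criterion (spectral distance to the segment mean), the data layout and the function name are assumptions for illustration only; the sketch is not the method of Troya-Galvis et al. and leaves out the control instance.

```python
import random


def negotiate(pixels, segments, seed=None):
    """Assign unclassified pixels to the segment with the closest mean.

    pixels:   {pixel_id: grey value}
    segments: {segment_id: (mean grey value, area in pixels)}
    """
    state = {sid: list(stats) for sid, stats in segments.items()}
    order = list(pixels)
    random.Random(seed).shuffle(order)  # processing starts in random order
    assignment = {}
    for pid in order:
        value = pixels[pid]
        # Each segment agent bids with its spectral distance to the pixel.
        winner = min(state, key=lambda sid: abs(value - state[sid][0]))
        mean, area = state[winner]
        # The winning segment absorbs the pixel and updates its statistics.
        state[winner] = [(mean * area + value) / (area + 1), area + 1]
        assignment[pid] = winner
    return assignment


# Made-up example: three unclassified pixels between two segments.
assignment = negotiate(
    {"p1": 12, "p2": 95, "p3": 20},
    {"A": (10.0, 4), "B": (100.0, 4)},
    seed=1,
)
```

Since each absorbed pixel changes the segment statistics, the outcome can in general depend on the processing order, which is why the order is randomised here.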

4 AGENT-BASED MODELLING

AND AGENT-BASED IMAGE

ANALYSIS

Agent Based Models (ABMs) and recent agent-based

image analysis of remote sensing data are closely related.

ABMs have a long tradition in GISciences and other

disciplines to simulate complex processes. The first ABMs were applied in the late 1980s and early 1990s, e.g. by Holland and Miller (1991) in economics or Huston et al. (1988) in ecology. The major purpose of ABMs in GISciences is to simulate and explain

complex spatial processes, that is, (1) to understand

spatial patterns and how they are generated by

interacting individuals and (2) to understand spatial

and temporal interrelationships between individuals

and their environment. All ABMs have in common that they simulate the (spatial) behaviour of individual agents

and the emerging spatial patterns based on relatively

simple rules of (inter-) action with or within their

environment. In doing so, it does not matter whether

individual agents are spatially represented by simple

pixels aka cells, or by GIS vector objects, that is,

points, lines or polygons. Especially vector objects

can be of arbitrary geometric (and dynamic)

complexity; e.g. VecGCA, introduced by Marceau

and Moreno (2008), allows agents to be represented as polygons and to change their shape during simulation, very similar to the approach of Borna et al.

(2014, 2015 and 2016). However, in almost all cases

remote sensing data has been used to validate the

developed ABMs by comparing the observable

patterns in remote sensing data with those produced

by the ABMs (Adhikari and Southworth, 2012; Sohl

and Sleeter, 2012; Megahed et al., 2015).
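Both ideas, pattern generation by simple local rules and validation against an observed map, can be sketched with a toy cell-based model. The growth rule (a cell becomes urban when at least two of its four neighbours are urban), the grids and the agreement measure (share of identical cells) are assumptions for illustration and do not correspond to any of the cited models.

```python
def step(grid, min_urban_neighbours=2):
    """One synchronous update: a non-urban cell (0) becomes urban (1)
    if enough of its 4-neighbours are urban."""
    rows, cols = len(grid), len(grid[0])
    new = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                continue
            urban = sum(
                grid[rr][cc]
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= rr < rows and 0 <= cc < cols
            )
            if urban >= min_urban_neighbours:
                new[r][c] = 1
    return new


def agreement(simulated, observed):
    """Share of cells with identical state in both maps."""
    pairs = [
        (s, o)
        for s_row, o_row in zip(simulated, observed)
        for s, o in zip(s_row, o_row)
    ]
    return sum(s == o for s, o in pairs) / len(pairs)


# Made-up initial state and a made-up map classified from remote sensing data.
initial = [[1, 1, 0], [1, 0, 0], [0, 0, 0]]
observed = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]
simulated = step(initial)
score = agreement(simulated, observed)
```

Real validations typically use more robust measures than the plain share of matching cells, for example measures that account for chance agreement.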

4.1 Similarities between ABM and

Agent-based Image Analysis

Comparing the concepts of spatially acting agents in the remote sensing domain with the principles of ABMs shows that in both domains individual agents operate dynamically in space. However, while ABM agents


generate spatial patterns, their counterparts in image

analysis try to optimize the representation of real-

world-objects by image segments. In both domains

their behaviour is based on relatively simple rules

noted in a Belief Desire Intention (BDI) model and

the agents’ perception of the environment. Since in

both domains software agents represent spatial

entities aka real-world-objects, the agents’ BDI

model depends on the real-world-objects they

represent: The procedural knowledge for delineating

“trees” in an image is different to that for “buildings”.

The same holds for their declarative knowledge to

reason their class assignments. In a sensible ABM

“tree”-agents certainly behave differently from

“building”-agents, which means their roles and

abilities in an ABM are different. That is, the same

real-world-objects are represented by two different

kinds of agents, which exist and act in different

environments, namely an image of the real world

consisting of numerical values (remote sensing) and

an abstract geometric model of the real world (ABM).

In both representations, their behaviour is determined

by the ontology of the real-world-objects they

represent but it depends on the environment they act

in.

4.2 Differences between ABM and

Agent-based Image Analysis

The main difference between ABMs and agent-based

image analysis concepts is the absence of robot-like

agents in ABMs which are able to autonomously

apply procedural knowledge in terms of selecting,

combining or manipulating image processing

methods.

Another difference is the agents’ goals: in agent-based image analysis agents intend to achieve the best

possible delineation of the imaged real world objects

according to the declarative knowledge by applying

procedural knowledge. The goal of agents in ABMs

instead is to achieve an equilibrium or a Pareto

optimality in the simulated (real-)world they are

acting in.

A further difference is the absence of control

instances in ABMs. In agent-based image analysis

they are necessary to evaluate (intermediate) results

during processing and to trigger the behaviour of

individual agents. In ABMs such a mechanism is not

necessary.

Further, in contrast to agents in ABMs, VAs or

IOAs can change their class membership (and

consequently change their behaviour): During the

adaptation process it might happen that individual

IOAs or VAs fulfil the declarative criteria of multiple

real-world-classes (simultaneously). ABM agents in

principle only change their class or role explicitly by

design.

Last but not least, ambiguity in agent-based image analysis must be taken into account in one way or another. Even classification results can be ambiguous. In

ABMs ambiguity only matters for the perception of

the environment, that is, an agent’s role in an ABM is

unambiguous.

5 CONCLUSIONS AND

OUTLOOK

The increasing growth of remote sensing data

archives demands new methods of automatic, reliable

and autonomous extraction of geo-information from

remote sensing data. Recent methods lack either a high degree of automation or a high degree of reliability. Although recent methods of computer vision, such as CNNs, are meanwhile very successful

in diverse imaging domains, in the remote sensing

domain they are not more suitable than other

established methods.

Although not exhaustively researched yet, multi-

agent systems for remote sensing image analysis have

the potential to increase the degree of automation and

reliability of remote sensing image analysis.

Especially their ability to react flexibly and robustly to changing environmental situations (slightly changing imaging conditions, atmospheric impact, slightly

changing image quality, seasonal impacts, etc.) seems

to be promising. Nevertheless, research results which

could confirm the advantage of agent-based image

analysis methods especially in the context of

analysing large archives are still missing. Troya-Galvis et al. (2016, 2018a and 2018b) observed in

their investigations slightly improved classification

results compared to a CNN-based and a hybrid

segmentation-classification approach called

“Spectral-Spatial Classification” (SSC). Borna et al.

(2014, 2015 and 2016) and Hofmann et al. (2014,

2015 and 2016) have so far only demonstrated the feasibility of their approaches; validation results, or results proving the ability to reliably analyse large archives of remote sensing data, are still missing. Last but not least, enabling image analysis agents to learn

(Biswas et al. 2005), especially for design pattern

approaches, is an interesting aspect for further

research. In this context the incorporation of implicit knowledge in agent-based image analysis (e.g. by using ANNs) has not been investigated yet.


From a geo-scientist’s point of view, the similarity

of ABMs and the concept of VAs or IOAs is a further

interesting aspect: by coupling individual but

corresponding ABM agents and VAs/IOAs, they could facilitate a quasi in-situ validation of an ABM simulation, unlike the post-simulation validation that is still common today. The latter also has a high potential

to improve our understanding of the environment and

the Earth system, especially in conjunction with time

series of remote sensing data. A further interesting

aspect of coupling agent-based image analysis with

ABMs is their consideration of scale: here

hierarchically organized VAs/IOAs could support the

validation of aggregation and emergence processes of

individual agents in ABMs, such as urbanisation, (de-)forestation or the evolution of swarms.

REFERENCES

Adhikari, S., Southworth, J., 2012. Simulating Forest Cover

Changes of Bannerghatta National Park Based on a CA-

Markov Model: A Remote Sensing Approach. In:

Remote Sensing 4 (10): 3215–43.

Anders, N. S., Seijmonsbergen, A. C., Bouten, W., 2015.

Rule Set Transferability for Object-Based Feature

Extraction: An Example for Cirque Mapping. In:

Photogrammetric Engineering & Remote Sensing 81

(6): 507–14.

Arvor, D., Durieux, L., Andrés, S., Laporte, M.-A., 2013.

Advances in Geographic Object-Based Image Analysis

with Ontologies: A Review of Main Contributions and

Limitations from a Remote Sensing Perspective. In:

ISPRS Journal of Photogrammetry and Remote Sensing

82: 125–37.

Baatz, M., Schäpe, A., 2000. Multiresolution

Segmentation: An Optimization Approach for High

Quality Multi-Scale Image Segmentation. In J. Strobl,

T. Blaschke, G. Griesebner (Eds.): Angewandte

Geographische Informations-Verarbeitung XII,

Wichmann Verlag, Karlsruhe (2000), pp. 12-23.

Belgiu, M., Hofer, B., Hofmann, P., 2014. Coupling

Formalized Knowledge Bases with Object-Based

Image Analysis. In: Remote Sensing Letters 5 (6): 530–

38.

Benz, U. C., Hofmann, P., Willhauck, G., Lingenfelder, I.,

Heynen, M., 2004. Multi-Resolution, Object-Oriented

Fuzzy Analysis of Remote Sensing Data for GIS-Ready

Information. In: ISPRS Journal of Photogrammetry and

Remote Sensing 58 (3–4): 239–58.

Biswas, G., Leelawong, K., Schwartz, D., Vye, N., 2005.

Learning by Teaching: A New Agent Paradigm for

Educational Software. In: Applied Artificial

Intelligence 19 (3–4): 363–92.

Blaschke, T., Strobl, J., 2001. What’s Wrong with

Pixels?: Some Recent Developments Interfacing

Remote Sensing and GIS. In: GeoBIT, 6 (6): 12–17.

Blaschke, T. 2010. Object Based Image Analysis for

Remote Sensing. In: ISPRS Journal of Photogrammetry

and Remote Sensing 65 (1): 2–16.

Blaschke, T., Hay, G. J., Kelly, M., Lang, S., Hofmann, P.,

Addink, E., Feitosa, R. Q., Van der Meer, F., Van der

Werff, H., van Coillie, F., 2014. Geographic Object-

Based Image Analysis–towards a New Paradigm. In:

ISPRS Journal of Photogrammetry and Remote Sensing

87: 180–91.

Borna, K., Moore, A. B., Sirguey, P., 2014. Towards a

Vector Agent Modelling Approach for Remote Sensing

Image Classification. In: Journal of Spatial Science 59

(2): 283–96.

Borna, K., Moore, A. B., Sirguey, P., 2016. An Intelligent

Geospatial Processing Unit for Image Classification

Based on Geographic Vector Agents (GVAs). In:

Transactions in GIS 20 (3): 368–81.

Borna, K., Sirguey, P., Moore, A. B., 2015. An Intelligent

Vector Agent Processing Unit for Geographic Object-

Based Image Analysis. In: Geoscience and Remote

Sensing Symposium (IGARSS), 2015 IEEE

International, 3053–56. IEEE.

Boulton, G., 2018. The Challenges of a Big Data Earth. In:

Big Earth Data 2 (1): 1–7.

Bovenkamp, E. G. P., Dijkstra, J., Bosch, J. G., Reiber, J.

H. C., 2004. Multi-Agent Segmentation of IVUS

Images. In: Pattern Recognition 37 (4): 647–63.

Burnett, C., Blaschke, T., 2003. A Multi-Scale

Segmentation/Object Relationship Modelling

Methodology for Landscape Analysis. In: Ecological

Modelling 168 (3): 233–49.

Canty, M. J., 2014. Image Analysis, Classification and

Change Detection in Remote Sensing: With Algorithms

for ENVI/IDL and Python. CRC Press.

Colwell, R. N., 1968. Remote Sensing of Natural Resources.

In: Scientific American 218 (1): 54–71.

Guo, H., 2017. Big Earth Data: A New Frontier in Earth and

Information Sciences. In: Big Earth Data 1 (1–2): 4–

20.

Heutte, L., Nosary, A., Paquet, T., 2004. A Multiple Agent

Architecture for Handwritten Text Recognition. In:

Pattern Recognition 37 (4): 665–74.

Hofmann, P., 2017. A Fuzzy Belief-Desire-Intention Model

for Agent-Based Image Analysis. In: Modern Fuzzy

Control Systems and Its Applications. InTech. 281–296.

Hofmann, P., Andrejchenko, V., Lettmayer, P.,

Schmitzberger, M., Gruber, M., Ozan, I., Belgiu, M.,

Graf, R., Lampoltshammer, T. J., Wegenkittl, S., 2016.

Agent Based Image Analysis (ABIA)-Preliminary

Research Results from an Implemented Framework. In:

GEOBIA 2016: Solutions and Synergies.

Hofmann, P., Blaschke, T., Strobl, J., 2011. Quantifying the

Robustness of Fuzzy Rule Sets in Object-Based Image

Analysis. In: International Journal of Remote Sensing

32 (22): 7359–81.

Hofmann, P., Lettmayer, P., Blaschke, T., Belgiu, M.,

Wegenkittl, S., Graf, R., Lampoltshammer, T. J.,

Andrejchenko, V., 2014. ABIA – A Conceptual

Framework for Agent Based Image Analysis. In: South-

Eastern European Journal of Earth Observation and

Geomatics 3 (2S): 125–30.

Hofmann, P., Lettmayer, P., Blaschke, T., Belgiu, M.,

Wegenkittl, S., Graf, R., Lampoltshammer, T. J.,

Andrejchenko, V., 2015. Towards a Framework for

Agent-Based Image Analysis of Remote-Sensing Data.

In: International Journal of Image and Data Fusion 6

(2): 115–37.

Holland, J. H., Miller, J. H., 1991. Artificial Adaptive

Agents in Economic Theory. In: The American

Economic Review 81 (2): 365–70.

Jennings, N. R., 1999. Agent-Based Computing: Promise

and Perils. In: Proc. IJCAI-99, Stockholm, Sweden

(1999), 1429–1436.

Kohli, D., Warwadekar, P., Kerle, N., Sliuzas, R., Stein, A.,

2013. Transferability of Object-Oriented Image

Analysis Methods for Slum Identification. In: Remote

Sensing 5 (9): 4209–28.

Lillesand, T., Kiefer, R. W., Chipman, J., 2014. Remote

Sensing and Image Interpretation. John Wiley & Sons.

Liu, J., Tang, Y. Y., 1999. Adaptive Image Segmentation

with Distributed Behavior-Based Agents. In: IEEE

Transactions on Pattern Analysis and Machine

Intelligence 21 (6): 544–51.

Lueckenhaus, M., Eckstein, W., 1997. Multiagent-Based

System for Parallel Image Processing. In: Parallel and

Distributed Methods for Image Processing, 3166:21–

31. International Society for Optics and Photonics.

Ma, Y., Wu, H., Wang, L., Huang, B., Ranjan, R., Zomaya,

A., Jie, W., 2015. Remote Sensing Big Data

Computing: Challenges and Opportunities. In: Future

Generation Computer Systems 51: 47–60.

Mahmoudi, F. T., Samadzadegan, F., Reinartz, P., 2013.

Object Oriented Image Analysis Based on Multi-Agent

Recognition System. In: Computers & Geosciences 54:

219–30.

Mahmoudi, F. T., Samadzadegan, F., Reinartz, P., 2014.

Multi-Agent Recognition System Based on Object

Based Image Analysis Using WorldView-2. In:

Photogrammetric Engineering & Remote Sensing 80

(2): 161–70.

Marceau, D. J., Moreno, N., 2008. An Object-Based

Cellular Automata Model to Mitigate Scale

Dependency. In: Object-Based Image Analysis, 43–73.

Springer.

Megahed, Y., Cabral, P., Silva, J., Caetano, M., 2015. Land

Cover Mapping Analysis and Urban Growth Modelling

Using Remote Sensing Techniques in Greater Cairo

Region—Egypt. In: ISPRS International Journal of

Geo-Information 4 (3): 1750–69.

Rokitnicki-Wojcik, D., Wei, A., Chow-Fraser, P., 2011.

Transferability of Object-Based Rule Sets for Mapping

Coastal High Marsh Habitat among Different Regions

in Georgian Bay, Canada. In: Wetlands Ecology and

Management 19 (3): 223–36.

Rosin, P. L., Rana, O. F., 2004. Agent-Based Computer

Vision. In: Pattern Recognition 37 (4): 627–29.

Samadzadegan, F., Mahmoudi, F. T., Schenk, T., 2010. An

Agent-Based Method for Automatic Building

Recognition from Lidar Data. In: Canadian Journal of

Remote Sensing 36 (3): 211–23.

Schmidt, G., Athelogou, M. A., Schoenmeyer, R., Korn, R.,

Binnig, G., 2007. Cognition Network Technology for a

Fully Automated 3-D Segmentation of Liver. In:

Proceedings of the MICCAI Workshop on 3D

Segmentation in the Clinic: A Grand Challenge, 125–

33.

Sohl, T., Sleeter, B., 2012. Role of Remote Sensing for

Land-Use and Land-Cover Change Modeling. In:

Remote Sensing of Land Use and Land Cover, CRC,

225–240.

Stoter, J., Visser, T., van Oosterom, P., Quak, W., Bakker,

N., 2011. A Semantic-Rich Multi-Scale Information

Model for Topography. In: International Journal of

Geographical Information Science 25 (5): 739–63.

Tiede, D., Lang, S., Hölbling, D., Füreder, P., 2010.

Transferability of OBIA Rulesets for IDP Camp

Analysis in Darfur. In: Proceedings of GEOBIA 2010,

Ghent, Belgium, 29 June–2 July 2010.

Troya-Galvis, A., Gançarski, P., Berti-Équille, L., 2016.

Collaborative Segmentation and Classification for

Remote Sensing Image Analysis. In: Pattern

Recognition (ICPR), 2016 23rd International

Conference On, 829–34. IEEE.

Troya-Galvis, A., Gançarski, P., Berti-Équille, L., 2018a. A

Collaborative Framework for Joint Segmentation and

Classification of Remote Sensing Images. In: Advances

in Knowledge Discovery and Management, 127–45.

Springer.

Troya-Galvis, A., Gançarski, P., Berti-Équille, L., 2018b.

Remote Sensing Image Analysis by Aggregation of

Segmentation-Classification Collaborative Agents. In:

Pattern Recognition 73: 259–74.

Wooldridge, M., 1998. Agent-Based Computing. In:

Interoperable Communication Networks 1: 71–98.

Zhou, Q., Parrott, D., Gillen, M., Chelberg, D. M., Welch,

L., 2004. Agent-Based Computer Vision in a Dynamic,

Real-Time Environment. In: Pattern Recognition 37

(4): 691–705.
