Validation of Facial Action Unit for Happy Emotion Detection

Ananta Yudiarso¹, William Liando¹, Jun Zhao², Runliang Ni², Ziyi Zhao²

¹Faculty of Psychology, Surabaya University, Surabaya, Indonesia

²Department of Psychology and Behavioral Sciences, Zhejiang University, Hangzhou, China

Keywords: Happiness, Facial Action Unit

Abstract: One way to measure happiness is through facial expression, by measuring facial muscle movements known as facial action units. Few studies have verified the validity of the action units associated with happiness. Accordingly, we used a one-group pretest-posttest experimental design to test the specificity and sensitivity of the happy facial expression. We applied a video paradigm to elicit happy facial expressions, and OpenFace software was used to measure facial action units. The participants were 203 Indonesian university students. Happiness in response to the stimuli was measured with a single item on a 0-10 scale. To calculate specificity and sensitivity, we used receiver operating characteristic (ROC) analysis. Our results showed inconsistent findings for AU6 and AU12, the action units that the Facial Action Coding System (FACS) associates with happy emotion. The co-occurrence of other emotions and individual differences in interpreting the stimuli influenced the validity of the measurement.

1 INTRODUCTION

The Facial Action Coding System is a standard description of human facial movement that characterizes emotional expression through facial appearance. This coding has been applied in computer face recognition software to recognize human emotion through observation of facial muscle movement or micro-expressions (Erkoç, Ağdoğan, & Eskil, 2018; Kotsia, Zafeiriou, & Pitas, 2008; Liong, See, Wong, & Phan, 2018; Smith & Windeatt, 2015).

Facial action coding is important for a better understanding of real-time emotion recognition. Applications of facial action units have been proposed in clinical areas such as psychosis, depression, anxiety, and pain (Pulkkinen et al., 2015; Sanchez, Romero, Maurage, & De Raedt, 2017).

This study focused on the validity of using facial action units to measure human emotion, especially happiness. Happy facial expressions are important because mental illness can be reflected in facial expression (Huang et al., 2013; Kerestes et al., 2016).

According to the Facial Action Coding System (FACS), the facial expression of happiness can be detected from Action Unit 6 (AU6), the cheek raiser (orbicularis oculi), and Action Unit 12 (AU12), the lip corner puller (zygomaticus major).

Few studies have validated the use of facial action units to predict human emotion, especially happiness. This research was designed to address that gap.

2 METHOD

The participants were 203 Indonesian university students aged 18-21. Happiness was measured with a single item on a 0-10 scale. Facial action unit scores were measured with OpenFace (http://cmusatyalab.github.io/openface/). This research focused on AU6 and AU12 because FACS associates them with happy emotion. In the experimental procedure, each participant watched a 30-second happy video on a computer screen while their facial expression was recorded with a high-definition video camera. The recording was then processed with OpenFace to calculate movement scores for AU6 and AU12. At the end of the video, participants rated their happiness on the single-item 0-10 scale.
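The paper does not state how OpenFace's frame-by-frame output was reduced to one AU6 score and one AU12 score per participant. The Python sketch below shows one plausible reduction under stated assumptions: the input is a CSV in the layout written by OpenFace 2.0, with a `success` column (1 when the face was tracked) and intensity columns `AU06_r` and `AU12_r`, and each participant's score is the mean intensity over successfully tracked frames. The function name is hypothetical, and the mean is an assumption; a peak or percentile summary would be equally defensible.

```python
import csv
import io
from statistics import mean


def participant_au_scores(csv_text, aus=("AU06_r", "AU12_r")):
    """Average per-frame AU intensities into one score per action unit.

    Assumption: OpenFace 2.0-style CSV with a 'success' column and AU
    intensity columns such as 'AU06_r'. Mean aggregation is an assumption.
    """
    # skipinitialspace strips the space OpenFace may write after each comma,
    # so header names like " AU06_r" become "AU06_r".
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    # Keep only frames in which the face was successfully tracked.
    frames = [row for row in reader if row.get("success", "1").strip() == "1"]
    if not frames:
        raise ValueError("no successfully tracked frames")
    return {au: mean(float(row[au]) for row in frames) for au in aus}
```

For a three-frame file in which the last frame failed tracking, only the first two frames contribute, so intensities of 1.0 and 3.0 for AU06_r average to 2.0.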

To calculate specificity and sensitivity, we used receiver operating characteristic (ROC) analysis. We defined a true (positive) case as a happiness rating of 8 to 10 on the 0-10 scale; Table 1 also reports results for the stricter cut-offs of 10 only and of 9-10.
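The ROC analysis described above can be sketched in plain Python. Assumptions: each participant contributes one AU score (for example, mean AU12 intensity) and one 0-10 happiness rating, and a rating at or above `cutoff` counts as a positive case, so `cutoff=8` matches the 8-10 definition and `cutoff=9` or `cutoff=10` match the stricter columns of Table 1. The AUC uses the Mann-Whitney formulation (the probability that a randomly chosen positive case has a higher AU score than a randomly chosen negative case, with ties counting one half), which equals the area under the empirical ROC curve. The function names and toy data are hypothetical; this is not the authors' analysis code, which the paper does not describe.

```python
from itertools import product


def label_happy(ratings, cutoff=8):
    """Label a participant positive (1) if their rating is at or above cutoff."""
    return [1 if r >= cutoff else 0 for r in ratings]


def roc_auc(scores, labels):
    """AUC as P(score of random positive > score of random negative), ties = 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both positive and negative cases")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when 'AU score >= threshold' predicts happy."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y == 1 and s >= threshold)
    fn = sum(1 for s, y in pairs if y == 1 and s < threshold)
    tn = sum(1 for s, y in pairs if y == 0 and s < threshold)
    fp = sum(1 for s, y in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy data, not study data.
ratings = [10, 9, 8, 7, 5, 3]
au12 = [2.5, 1.0, 2.0, 1.5, 0.5, 0.2]
print(roc_auc(au12, label_happy(ratings)))  # 8 of 9 pairs ordered correctly -> 8/9
```

Sweeping `threshold` across all observed AU scores and plotting sensitivity against 1 - specificity traces the ROC curve whose area `roc_auc` returns.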

Yudiarso, A., Liando, W., Zhao, J., Ni, R. and Zhao, Z.

Validation of Facial Action Unit for Happy Emotion Detection.

DOI: 10.5220/0008589403600363

In Proceedings of the 3rd International Conference on Psychology in Health, Educational, Social, and Organizational Settings (ICP-HESOS 2018) - Improving Mental Health and Harmony in Global Community, pages 360-363

ISBN: 978-989-758-435-0

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

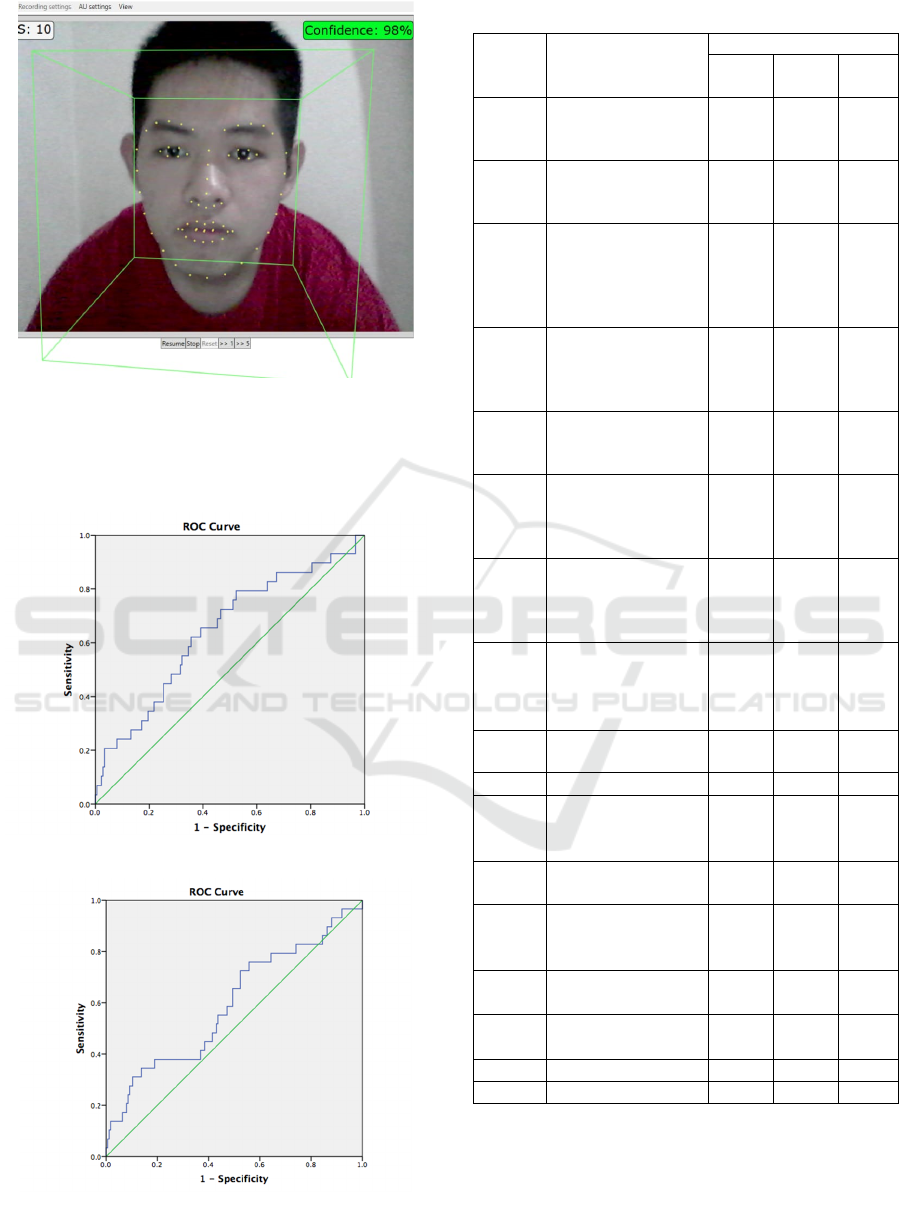

Figure 1: Facial action unit landmarks.

3 RESULTS

Graph 1: Area under the ROC curve for AU6.

Graph 2: Area under the ROC curve for AU12.

Table 1: Area under the ROC curve (AUC) for each facial action unit, by the happiness rating that defines a positive case.

| Facial Action Unit | Muscular basis | AUC (score 10) | AUC (score 9-10) | AUC (score 8-10) |
|---|---|---|---|---|
| AU1 | Inner brow raiser (frontalis, pars medialis) | 0.567 | 0.558 | 0.544 |
| AU2 | Outer brow raiser (frontalis, pars lateralis) | 0.515 | 0.530 | 0.492 |
| AU4 | Brow lowerer (depressor glabellae, depressor supercilii, corrugator supercilii) | 0.570 | 0.525 | 0.518 |
| AU5 | Upper lid raiser (levator palpebrae superioris, superior tarsal muscle) | 0.572 | 0.581 | 0.525 |
| AU6 | Cheek raiser (orbicularis oculi, pars orbitalis) | 0.644 | 0.627 | 0.498 |
| AU7 | Lid tightener (orbicularis oculi, pars palpebralis) | 0.529 | 0.523 | 0.544 |
| AU9 | Nose wrinkler (levator labii superioris alaeque nasi) | 0.557 | 0.508 | 0.501 |
| AU10 | Upper lip raiser (levator labii superioris, caput infraorbitalis) | 0.569 | 0.578 | 0.519 |
| AU12 | Lip corner puller (zygomaticus major) | 0.594 | 0.584 | 0.539 |
| AU14 | Dimpler (buccinator) | 0.521 | 0.561 | 0.524 |
| AU15 | Lip corner depressor (depressor anguli oris) | 0.543 | 0.547 | 0.443 |
| AU17 | Chin raiser (mentalis) | 0.603 | 0.574 | 0.453 |
| AU20 | Lip stretcher (risorius with platysma) | 0.551 | 0.566 | 0.481 |
| AU23 | Lip tightener (orbicularis oris) | 0.475 | 0.525 | 0.507 |
| AU25 | Lips part (depressor labii inferioris) | 0.577 | 0.642 | 0.447 |
| AU26 | Jaw drop (masseter) | 0.541 | 0.597 | 0.470 |
| AU45 | Blink | 0.566 | 0.579 | 0.587 |

Graphs 1 and 2 show, as the area under the ROC curve, how well AU6 and AU12 discriminate participants who felt happy (happiness ratings of 10, 9-10, and 8-10 on the 0-10 scale) from those who did not. All areas under the curve were below 0.7, which we interpret as a negative finding.
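The 0.7 criterion, and the trend reported in the Discussion, can be checked directly against the AU6 and AU12 values in Table 1. The sketch below transcribes those six values; the helper names are hypothetical and the 0.7 default is the criterion used in the text (an AUC of 0.5 corresponds to chance-level discrimination).

```python
# AUC values transcribed from Table 1, keyed by the lowest rating
# counted as a positive case (10 = "10 only", 9 = "9-10", 8 = "8-10").
TABLE1 = {
    "AU6": {10: 0.644, 9: 0.627, 8: 0.498},
    "AU12": {10: 0.594, 9: 0.584, 8: 0.539},
}


def below_criterion(aucs, criterion=0.7):
    """True if every AUC falls below the criterion (a negative finding)."""
    return all(a < criterion for a in aucs.values())


def shrinks_as_cutoff_loosens(aucs):
    """True if AUC strictly decreases from cut-off 10 to 9 to 8."""
    ordered = [aucs[c] for c in sorted(aucs, reverse=True)]
    return all(a > b for a, b in zip(ordered, ordered[1:]))


for au, aucs in TABLE1.items():
    print(au, below_criterion(aucs), shrinks_as_cutoff_loosens(aucs))
```

Both action units print `True True`: every AUC is under 0.7, yet discrimination improves as the definition of "happy" becomes stricter.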


Reliability of the video stimulation was assessed as test-retest reliability in 22 participants two months after the experiment. The test-retest coefficient was 0.684, indicating low reliability of responses to the video stimulation. The mean and standard deviation were 8.05 ± 1.32 at the first measurement and 7.45 ± 1.67 at the second. These results suggest a learning bias for the video stimulation.
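The paper does not name the coefficient behind the 0.684 test-retest value. A common choice is the Pearson correlation between the two administrations, sketched below in plain Python together with the "mean ± SD" summary used above; Pearson r is an assumption here (an intraclass correlation would be a reasonable alternative), and the helper names are hypothetical.

```python
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between paired ratings (assumed test-retest coefficient)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def describe(ratings):
    """Format ratings as 'mean ± sample SD' to two decimals."""
    return f"{mean(ratings):.2f} ± {stdev(ratings):.2f}"
```

Applied to each participant's first and second happiness rating, `pearson_r` gives the retest coefficient, and `describe` applied to each administration gives summaries in the same form as 8.05 ± 1.32 and 7.45 ± 1.67.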

4 DISCUSSION

Our results indicate that AU6 and AU12 did not support the hypothesis that happy emotion can be detected from facial expression. Facial emotional expression is produced by neural and muscular activity, which is influenced by the sympathetic and parasympathetic nervous systems. Thus, psychological states in the central and peripheral nervous systems influence facial expression (Meier et al., 2016).

We found negative findings, that is, low discriminative power of AU6 and AU12 for identifying happy facial expressions. Two main factors may explain this result. First, individuals differ both in how they interpret the stimuli and in how they express emotion facially (Maoz et al., 2016). However, at trend level, the stricter the happiness cut-off used to define a positive case, the larger the area under the ROC curve for AU6 and AU12 (Table 1).

Second, other emotions occurring at the same time during the experimental procedure influenced activity of the zygomaticus major (AU12). This study did not control for other emotions during stimulus presentation.

The low power to discriminate between conditions may also stem from the reliability and sensitivity of the computer software (Menzel, Redies, & Hayn-Leichsenring, 2018). Replication studies and comparison with human observers are needed to establish validity and reliability.

The theoretical implication of this finding is that the psychological measurement of emotion from facial muscle activity, or facial action units, needs to be evaluated. Happiness may be a complex emotion that should be detected with multiple action units and multimodal parameters (head pose, gaze, or other action units).

Practically, our findings support further development of FACS-based real-time systems for detecting human emotion.

5 CONCLUSION

Our findings show a lack of validity for measuring happiness with facial action units. Our study was limited to AU6 and AU12, following Ekman's FACS. Other measurement modalities, such as gaze and head pose, as well as other facial action units, may be involved in happy facial expression. Given the limited reliability of the video stimulation, the experimental design, and variation in facial expression, further replication studies validating facial action units for measuring emotion, especially happiness, are worthwhile.

REFERENCES

Amos, B., Ludwiczuk, B., & Satyanarayanan, M. 2016. OpenFace: A general-purpose face recognition library with mobile applications. CMU-CS-16-118, CMU School of Computer Science, Tech. Rep.

Baltrušaitis, T., Zadeh, A., Lim, Y. C., & Morency, L.-P. 2018. OpenFace 2.0: Facial behavior analysis toolkit. In IEEE International Conference on Automatic Face and Gesture Recognition.

Erkoç, T., Ağdoğan, D., & Eskil, M. T. 2018. An observation based muscle model for simulation of facial expressions. Signal Processing: Image Communication, 64, 11-20.

Huang, J., Wang, Y., Jin, Z., Di, X., Yang, T., Gur, R.C., & Chan, R.C.K. 2013. Happy facial expression processing with different social interaction cues: An fMRI study of individuals with schizotypal personality traits. Progress in Neuro-Psychopharmacology and Biological Psychiatry, 44, 108-117.

Kerestes, R., Segreti, A.M., Pan, L.A., Phillips, M.L., Birmaher, B., Brent, D.A., & Ladouceur, C.D. 2016. Altered neural function to happy faces in adolescents with and at risk for depression. Journal of Affective Disorders, 192, 143-152.

Kotsia, I., Zafeiriou, S., & Pitas, I. 2008. Texture and shape information fusion for facial expression and facial action unit recognition. Pattern Recognition, 41(3), 833-851.

Liong, S.-T., See, J., Wong, K., & Phan, R.C.-W. 2018. Less is more: Micro-expression recognition from video using apex frame. Signal Processing: Image Communication, 62, 82-92.

Maoz, K., Eldar, S., Stoddard, J., Pine, D.S., Leibenluft, E., & Bar-Haim, Y. 2016. Angry-happy interpretations of ambiguous faces in social anxiety disorder. Psychiatry Research, 241, 122-127.

Meier, I.M., Bos, P.A., Hamilton, K., Stein, D.J., van Honk, J., & Malcolm-Smith, S. 2016. Naltrexone increases negatively-valenced facial responses to happy faces in female participants. Psychoneuroendocrinology, 74, 65-68.

Menzel, C., Redies, C., & Hayn-Leichsenring, G.U. 2018. Low-level image properties in facial expressions. Acta Psychologica, 188, 74-83.

Pulkkinen, J., Nikkinen, J., Kiviniemi, V., Mäki, P., Miettunen, J., Koivukangas, J., & Veijola, J. 2015. Functional mapping of dynamic happy and fearful facial expressions in young adults with familial risk for psychosis: Oulu Brain and Mind Study. Schizophrenia Research, 164(1), 242-249.

Sanchez, A., Romero, N., Maurage, P., & De Raedt, R. 2017. Identification of emotions in mixed disgusted-happy faces as a function of depressive symptom severity. Journal of Behavior Therapy and Experimental Psychiatry, 57, 96-102.

Smith, R. S., & Windeatt, T. 2015. Facial action unit recognition using multi-class classification. Neurocomputing, 150, 440-448.
