Ontology Selection for Reuse: Will It Ever Get Easier?

Marzieh Talebpour, Martin Sykora and Tom Jackson

School of Business and Economics, Loughborough University, Loughborough, U.K.

Keywords: Quality Metrics, Ontology Evaluation, Ontology Selection, Ontology Reuse.

Abstract: Ontologists and knowledge engineers tend to examine different aspects of ontologies when assessing their

suitability for reuse. However, most of the evaluation metrics and frameworks introduced in the literature

are based on a limited set of internal characteristics of ontologies and dismiss how the community uses and

evaluates them. This paper used a survey questionnaire to explore, clarify and also confirm the importance

of the set of quality related metrics previously found in the literature and an interview study. According to

the 157 responses collected from ontologists and knowledge engineers, the process of ontology selection for

reuse depends on different social and community related metrics and metadata. We believe that the findings

of this research can contribute to facilitating the process of selecting an ontology for reuse.

1 INTRODUCTION

Ontology reuse, using an existing ontology as the

basis for building a new one, is beneficial to the

community of ontologists and knowledge engineers.

It helps achieve one of the primary goals of

ontology construction, namely sharing and reusing

ontologies (Simperl, 2009), and also saves a

significant amount of time and financial resources.

Despite all the advantages of ontology reuse and the

availability of different ontologies, it has always

been a challenging task (Uschold et al., 1998).

Ontology reuse consists of several steps, namely

searching for adequate ontologies, evaluating the

quality and fitness of those ontologies for the reuse

purpose, selecting an ontology and integrating it in

the current project (d’Aquin et al., 2008). Some

consider the first steps of this process, namely the

evaluation and selection of the knowledge sources

that can be useful for an application domain (Bontas,

Mochol and Tolksdorf, 2005), to be the hardest part of

this process (Butt, Haller and Xie, 2014).

Ontology evaluation is at the heart of ontology

selection and has received a considerable amount of

attention in the literature. Gómez-Pérez (1995)

defines the term evaluation as the process of judging

different technical aspects of an ontology namely its

definitions, documentation and software

environment. Evaluation has also been described as

the process of measuring the suitability and the

quality of an ontology for a specific goal or in a

specific application (Fernández, Cantador and

Castells, 2006). This definition refers to the

approaches that aim to identify an ontology, an

ontology module or a set of ontologies that satisfy a

particular set of selection requirements (Sabou et al.,

2006).

This study aims to determine some of the metrics

that can be used to evaluate the suitability of an

ontology for reuse. The fundamental research

question of this study was whether or not social and

community related metrics can be used in the

evaluation process. Another question was how

important those metrics were compared to the well-

known ontological metrics such as content and

structure. Qualitative and quantitative research

designs were adopted to provide a deeper

understanding of how ontologists and knowledge

engineers evaluate and select ontologies. This study

offers some valuable insights into ontology quality,

what it depends on and how it can be measured.

2 BACKGROUNDS

From 1995 to date, there has been a variety of

research on different aspects of ontology evaluation

including methodologies, tools, frameworks,

methods, metrics, measures, etc. However, considerable

uncertainty and disagreement still exist about

the best way to evaluate an ontology generally or for

a specific tool or application. As seen in the

literature, there are many different ways of

evaluating ontologies and also many ways of

classifying those evaluation methods, algorithms and


Talebpour, M., Sykora, M. and Jackson, T.

Ontology Selection for Reuse: Will It Ever Get Easier?.

DOI: 10.5220/0006937101080116

In Proceedings of the 10th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2018) - Volume 2: KEOD, pages 108-116

ISBN: 978-989-758-330-8

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

approaches. This section aims to review and classify

some of the most popular ontology evaluation

approaches.

Ontology evaluation approaches can broadly be

classified as follows:

User-based Evaluation (Hlomani and Stacey,

2014): also known as metric-based or feature-based;

ontologists and knowledge experts can assess the

quality of ontologies by comparing them against a

set of pre-defined criteria (Maiga and Ddembe,

2008) or by analysing the reviews and comments

provided by their peers on different aspects of

ontologies (Supekar, 2005).

Gold Standard: refers to the type of

evaluation that is performed by comparing an

ontology to another ontology, also known as a "gold

standard" ontology, and aims to find different types

of similarities between them, e.g. lexical,

conceptual, etc. This approach was first proposed by

Maedche and Staab (2002) and was then used in

other research, namely Brank, Mladenic and

Grobelnik (2006).

Task-based Evaluation: also known as

application-based (Fahad and Qadir, 2008) or black

box evaluation (Obrst et al., 2007); aims to evaluate

an ontology's performance in the context of an

application (Brewster et al., 2004). According to this

approach, there is a direct link between the quality

of an ontology and how well it serves its purpose as

a part of a broader application (Netzer et al., 2009).

Data or Corpus Driven Evaluation: this

approach is similar to the “gold standard” approach,

but instead of comparing an ontology to another

ontology, it compares it to a source of data or a

collection of documents (Brank, Grobelnik and

Mladenic, 2005). One of the most popular

architectures for this type of evaluation is proposed

by Brewster et al. (2004).

Rule-based (logical): this type of evaluation was

proposed by Arpinar, Giriloganathan and Aleman-Meza

(2006) and aims to validate ontologies and

detect conflicts in them by using different rules that

are either a part of the ontology development

language or are identified by users.

Of all the approaches mentioned above, much

of the research in the ontology evaluation domain

has concentrated on criteria-based approaches, and

many have tried to identify and introduce a set of

metrics that can be used for ontology evaluation. A

more detailed account of criteria-based ontology

evaluation is given in the next section.

3 CRITERIA-BASED EVALUATION

According to a study conducted by Talebpour,

Sykora and Jackson (2017), quality metrics for

ontology evaluation can broadly be classified into

three main groups: (1) Internal metrics that are based

on different internal characteristics of ontologies such

as their content and structure, (2) Metadata related

metrics that can be used to describe ontologies and

to help in the selection process, and (3) Social

metrics that focus on how ontologies are used by

communities.

3.1 Internal Metrics

Internal aspects of ontologies have always been used

as a means of evaluating them. Different internal

quality criteria such as clarity, correctness,

consistency, completeness, etc. have been used in

the literature to measure how clear ontology

definitions are, how different entities in an ontology

represent the real world, how consistent an ontology

is, and how complete an ontology is (Yu, Thom and

Tam, 2009). Coverage is yet another significant

content related metric; the term coverage is mostly

used in the literature to measure how well a

candidate ontology matches or covers the query term(s)

and selection requirements (Buitelaar, Eigner and

Declerck, 2004). Structure or graph structure

(Gangemi et al., 2006) is the other important internal

aspect of an ontology that can be used to measure

how detailed the knowledge structure of an ontology

is (Fernández et al., 2009) and also to evaluate its

richness of knowledge (Sabou et al., 2006), density

(Yu, Thom and Tam, 2007), depth and breadth

(Fernández et al., 2009), etc.
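
As a rough illustration of how some of the internal metrics above could be quantified, the following sketch computes a simple term-coverage score and the depth and breadth of a class hierarchy. The definitions used here are simplifying assumptions rather than the formulas of the cited studies: coverage is taken as the fraction of query terms that match a class label, depth as the number of levels on the longest root-to-leaf path, and breadth as the largest number of classes on a single level. The function names and the toy hierarchy are hypothetical.

```python
def coverage(query_terms, class_labels):
    """Fraction of query terms that match a class label (case-insensitive)."""
    labels = {label.lower() for label in class_labels}
    terms = [term.lower() for term in query_terms]
    if not terms:
        return 0.0
    return sum(term in labels for term in terms) / len(terms)


def depth_and_breadth(children, root):
    """Return (depth, breadth) of a tree-shaped class hierarchy.

    depth   = number of levels on the longest root-to-leaf path
    breadth = largest number of classes found on a single level
    """
    depth, breadth = 0, 0
    level = [root]
    while level:
        depth += 1
        breadth = max(breadth, len(level))
        # Move one level down: collect the direct subclasses of every class.
        level = [child for node in level for child in children.get(node, [])]
    return depth, breadth


# Hypothetical toy hierarchy: parent class -> direct subclasses.
hierarchy = {
    "Thing": ["Person", "Organisation"],
    "Person": ["Student", "Lecturer", "Researcher"],
    "Organisation": ["University"],
}
labels = ["Thing", "Person", "Organisation", "Student",
          "Lecturer", "Researcher", "University"]

print(coverage(["student", "university", "course"], labels))
print(depth_and_breadth(hierarchy, "Thing"))
```

Exact label matching is the crudest possible notion of coverage; a selection system would more plausibly also consider synonyms, partial matches and property names.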

3.2 Metadata

Besides the internal aspects of ontologies, some of

the frameworks and tools have suggested evaluating

ontologies using different types of metadata.

Metadata or "data about data" is widely used on the

web for different reasons namely to help in the

process of resource discovery (Gill, 2008). Sowa

(2000) believes that the primary connection between

different elements of an ontology is in the mind of

the people who interpret it; so, tagging an ontology

with more data will help in making those mental

connections explicit. Ontologies can be tagged and

described according to their different characteristics,

e.g. size, type, version, etc. The language that

different ontologies are built and implemented with

can also be used as a metric to evaluate, filter and


categorise them (Lozano-Tello and Gómez-Pérez,

2004).
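
To make the idea of filtering and categorising ontologies by descriptive metadata more concrete, a minimal sketch is given below. The record fields (name, language, size, version) follow the characteristics listed above, but the `OntologyMetadata` class, the helper functions and the catalogue entries are hypothetical and do not reproduce the schema of any of the cited systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OntologyMetadata:
    # Hypothetical descriptive fields, chosen for illustration only.
    name: str
    language: str  # implementation language, e.g. "OWL" or "RDFS"
    size: int      # number of classes
    version: str


def filter_by_language(records, language):
    """Keep only the ontologies implemented in the requested language."""
    return [r for r in records if r.language.lower() == language.lower()]


def group_by_language(records):
    """Categorise ontology names by their implementation language."""
    groups = {}
    for record in records:
        groups.setdefault(record.language, []).append(record.name)
    return groups


# Hypothetical catalogue entries.
catalogue = [
    OntologyMetadata("onto-a", "OWL", 1200, "2.1"),
    OntologyMetadata("onto-b", "RDFS", 300, "1.0"),
    OntologyMetadata("onto-c", "OWL", 45, "0.9"),
]

print([r.name for r in filter_by_language(catalogue, "owl")])
print(group_by_language(catalogue))
```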

There are different examples of using metadata

in the literature to help with the process of

evaluating, finding and reusing ontologies. Swoogle

(Ding et al., 2004) was one of the very first selection

systems in the ontology engineering field to introduce

the concept of metadata to this domain. There is a

metadata generator component in this system that is

responsible for creating and storing three different

types of metadata about each discovered ontology

including basic, relation, and analytical metadata

(ibid.). Supekar (2005) has also proposed two sets

of metadata that can be used to evaluate ontologies:

source metadata and third-party metadata.

Moreover, metadata is created and used to help

interoperability between different applications and

ontologies. Ontology Metadata Vocabulary (OMV)

was proposed by Hartmann et al. (2005) and is one

of the most popular sets of metadata for ontologies.

OMV is not directly concerned with ontology

evaluation or ranking and its main aim is to facilitate

ontology reuse. Matentzoglu et al. (2018) have

proposed a guideline for minimum information for

the reporting of an ontology (MIRO) to help

ontologists and knowledge engineers in the process

of reporting ontology description and providing

documentation. It is believed that MIRO can

improve the quality and consistency of ontology

descriptions and documentation.

3.3 Community Aspects of Ontologies

How ontologies are used by communities can be

used as a metric in the evaluation and selection

process. Hlomani and Stacey (2014) define user-

based ontology evaluation as the process of

evaluating an ontology through users' experiences

and by capturing different subjective information

about ontologies. According to a study that was

conducted by Lewen and d’Aquin (2010), relying on

the experiences of other users for evaluating

ontologies will lessen the efforts needed to assess an

ontology and reduce the problems that users face

while selecting an ontology. McDaniel, Storey and

Sugumaran (2016) have also highlighted the

importance of relying on the wisdom of the crowd in

ontology evaluation and believe that improving the

overall quality of ontological content on the web is a

shared responsibility within a community.

Several studies have attempted to investigate and

explore how community and social aspects of

ontologies can affect their quality. According to an

interview study conducted by Talebpour, Sykora and

Jackson (2017), knowledge engineers consider

different social aspects of ontologies when

evaluating them. Those aspects include: (1) build

related information, for example, who has built the

ontology, why the ontology was built, do they know

the developer team, (2) regularity of update and

maintenance, and (3) responsiveness of the ontology

developer and maintenance team and their flexibility

and willingness toward making changes.

Another popular approach was proposed by

Burton-Jones et al. (2005) where a deductive method

was applied to identify a set of general, domain-

independent and application-independent quality

metrics for ontology evaluation. This approach

proposed different social quality metrics namely

authority and history to measure the role of

community in ontology quality. Another example of

social based quality application was proposed by

Lewen et al. (2006) in which the notion of the open

rating system and democratic ranking were applied

to ontology evaluation. According to this approach,

users of this system can not only review the

ontology, but they can also review the reviews

provided by other users about an ontology. A similar

approach was proposed by Lewen and d’Aquin

(2010) where users’ ratings are used to determine

what they call user-perceived quality of ontologies.

Overall, the above-mentioned studies highlight

the importance of the criteria-based approaches in

ontology evaluation. They also outline the most

important or used quality metrics in the literature.

The next sections discuss the methodology used to

collect data and the findings of this research.

4 METHODOLOGY

Of all the groups of quality related metrics

mentioned in the previous section, the focus of this

research is on different metadata and social

characteristics of ontologies that can be used in the

evaluation process. This study was built upon the

findings of the previous interview study conducted

by Talebpour, Sykora and Jackson (2017) and aims

to clarify and confirm the metrics identified in that

study. To do that a survey questionnaire was

designed based on a mixed research strategy

combining qualitative and quantitative questions.

The survey was sent to a broad community of

ontologists and knowledge engineers in different

domains. Different sampling strategies, namely

purposive sampling (Morse, 2016), were used in

order to find the ontologists and knowledge

engineers that were involved in the process of

ontology development and reuse. The survey was

also forwarded to different active mailing lists in the

field of ontology engineering. The lists used are as

follows:

The UK Ontology Network

KEOD 2018 - 10th International Conference on Knowledge Engineering and Ontology Development


GO-Discuss

DBpedia-discussion

The Protégé User

FGED-discuss

Linked Data for Language Technology Community Group

Best Practices for Multilingual Linked Open Data Community Group

Ontology-Lexica Community Group

Linking Open Data project

Ontology Lookup Service announce

Technical discussion of the OWL Working Group

Semantic Web Health Care and Life Sciences Community Group

The survey contained a total of 31 questions,

broadly divided into four sections. Each

section consisted of a different number of questions

and aimed to explore and discover the opinion of

ontologists and knowledge engineers regarding (1)

the process of ontology development, (2) ontology

reuse, (3) ontology evaluation and the quality

metrics used in that process, and (4) the role of

community in ontology development, evaluation and

reuse. Different types of questions were used in the

survey, namely closed-ended questions, Likert scale

questions, open-ended questions, and multiple-

choice questions. Screening questions were also

used throughout the survey to make sure that

respondents were presented with the set of questions

that was relevant to their previous experiences.

The most important part of the survey aimed to

explore the process of ontology evaluation and the

set of criteria that can be used in this process.

Respondents were first asked about the approaches

and metrics they tend to consider while evaluating

ontologies. They were then presented with four

different sets of quality metrics including (1)

internal, (2) metadata, (3) community and (4)

popularity related criteria and were asked how

important they thought those metrics were, by

offering a 5-point Likert scale, ranging from “Not

important” to “Very important”. The criteria

presented and assessed in this part of the survey

were collected both from the literature and the

previous phase of the data collection, which was an

interview study with 15 ontologists and knowledge

engineers in different domains (Talebpour, Sykora

and Jackson, 2017).

5 FINDINGS

As was mentioned in the previous sections, this

research aimed to introduce different metrics that

could be potentially used for ontology evaluation.

Prior studies have identified many different quality

metrics, mostly based on ontological and internal

aspects of ontologies. This study was designed to

determine the importance of those metrics and also

to explore how communities can help in the

selection process. The findings of this study are

discussed in the following sections.

5.1 Demographics of Respondents

This study managed to access ontologists and

knowledge engineers with many years of experience

in building and reusing ontologies in different

domains. Around 80% of the participants in the

survey were actively involved in the ontology

development process and all of them would consider

reusing existing ontologies before building a new

one. The 157 respondents of this study are

categorised by the following demographics, all

declared by respondents:

Job Title: After conducting frequency analysis

on the job titles provided by respondents, 78 unique

job titles were identified, many of which were

somehow related to different roles and positions in

academia such as researcher, professor, lecturer, etc.

Type of Organisation: According to the

frequency analysis conducted on the organisation

types, 68.8% (108) of the respondents of the survey

were working in academia. The other 31.2% of the

respondents were working in other types of

organisations including different companies and

industries.

Years of Experience: Interestingly, most of the

survey respondents were experts in their domain and

only around 10% of them had less than two years of

experience. Around 46% (73) of the respondents had

more than ten years of experience. The second

largest group of the respondents were the ontologists

with five to ten years of experience (26.8%).

Main Domains They Had Built or Reused

Ontologies In: survey respondents had worked/were

working in many different domains such as

biomedical, industry, business, etc. Most

participants had mentioned more than one domain,

some of which were not related to each other.

5.2 Evaluation Metrics According to

Qualitative Data

Before presenting participants with four sets of

quality metrics that can be used for ontology

evaluation and asking them to rate those metrics,

they were asked an open-ended question about how

they evaluate the quality of an ontology before

selecting it for reuse. This question aimed to provide


further insight and to gather respondents' opinions

on different evaluation metrics and approaches. The

responses to this question were coded according to

different categories of quality metrics namely (1)

internal, (2) metadata, (3) community and popularity

related metrics.

According to the analysis, quality metrics

thought to be the most important were content and

coverage (mentioned 51 times) and documentation

(mentioned 41 times). The fact that an ontology has

been reused previously and the popularity of the

ontology on the web, or among community was the

other frequently mentioned metric by the

respondents (38 times). Community related metrics

such as reviews about the quality of an ontology,

existence, activeness and responsiveness of the

developer team, and the reputation of the developer

team or organisation responsible for ontology were

also mentioned by many of the respondents (25

times).

The findings of the qualitative question in the

survey confirmed the findings of the quantitative

part and the interview study previously conducted by

Talebpour, Sykora and Jackson (2017). It should be

noted that two of the metrics mentioned by the

respondents, namely “fit” and “format”, were not

presented as a Likert item in the quantitative part of

the survey. Format was only mentioned two times

but how relevant an ontology is to an application

requirement was mentioned 37 times. The reason fit

was not used as a Likert item is that it cannot be

used as a criterion to judge the quality of an

ontology. However, it is a significant factor in the

selection process.

One of the emerging themes in the analysis was

“following or being a part of a standard”.

Interestingly, 19 respondents had mentioned

following or complying with different design

guidelines and principles or being a part of a

standard like W3C, and OBO Foundry as a criterion

in the evaluation process. Some had also mentioned

that while evaluating an ontology, they check if it is

built by using a method like NEON. A similar

question was proposed as one of the Likert items and

respondents were asked to rate how important “The

use of a method /methodology (e.g. NEON,

METHONTOLOGY, or any other standard and

development practice)” is when evaluating an

ontology. Surprisingly, it was ranked 30th (out of 31)

with a mean of 2.80 and a median of 3.

5.3 Importance of Quality Metrics

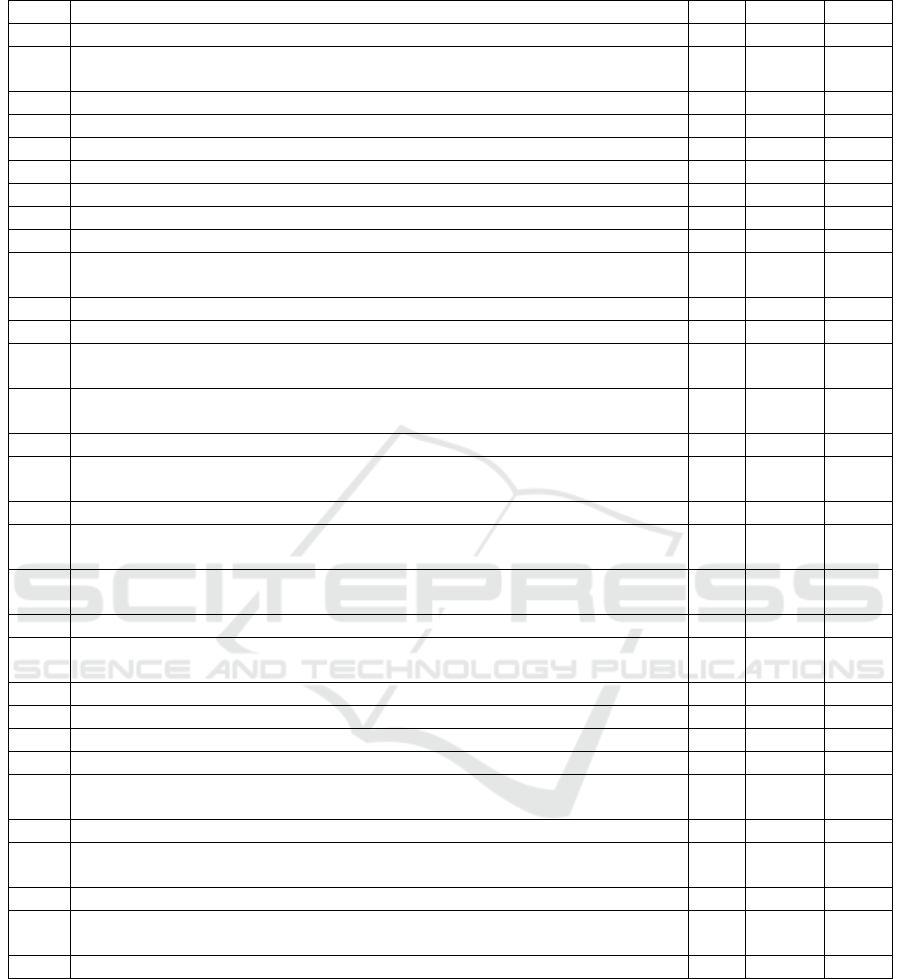

Table 1 shows the descriptive statistics of all 31

quality metrics, sorted by standard deviation. The

metrics are ranked from 1 to 31, with 1 being the

most important and 31 being the least important

metric considered when evaluating the quality of an

ontology for reuse. Mean and median are used to

show the centre and midpoint of the data

respectively. Standard deviation is used to express

the level of agreement on the importance of each

metric in the ontology evaluation process; the lower

value of standard deviation represents the higher

level of agreement among the survey respondents on

a rating.
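
The statistics described above can be sketched in a few lines of code. The example below is only an illustration under stated assumptions: the ratings are invented 5-point Likert responses rather than the survey data, the metric names are shortened, and the sample standard deviation is used because the text does not say whether the sample or population form was computed.

```python
from statistics import mean, median, stdev

# Hypothetical 5-point Likert ratings
# (1 = "Not important", 5 = "Very important"); not the survey responses.
ratings = {
    "content": [5, 5, 4, 5, 4, 5],
    "documentation": [5, 4, 3, 5, 4, 5],
    "social media popularity": [1, 3, 2, 4, 1, 2],
}


def describe(values):
    """Return (sd, median, mean) for one metric, rounded to two decimals."""
    # Assumption: sample standard deviation (n - 1 in the denominator).
    return (round(stdev(values), 2), median(values), round(mean(values), 2))


def rank_by_agreement(all_ratings):
    """Sort metrics by ascending standard deviation (highest agreement first)."""
    stats = {metric: describe(values) for metric, values in all_ratings.items()}
    return sorted(stats.items(), key=lambda item: item[1][0])


for rank, (metric, (sd, med, avg)) in enumerate(rank_by_agreement(ratings), start=1):
    print(rank, metric, sd, med, avg)
```

Sorting by standard deviation orders the metrics by how strongly respondents agree on a rating, which is not necessarily the same order as sorting by mean or median.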

As seen in Table 1, ontology content

including its classes, properties, relationships,

individuals and axioms is the first thing ontologists

and knowledge engineers tend to look at when

evaluating the quality of an ontology for reuse.

Other internal aspects of ontologies like their

structure (class hierarchy or taxonomy), scope

(domain coverage), syntactic correctness, and

consistency (e.g. naming and spelling consistency all

over the ontology) are also among the top ten quality

metrics used for ontology evaluation.

According to Table 1, Documentation is the

second most important quality metric used in the

evaluation process. Survey respondents also ranked

other metadata related metrics highly: the accessibility

of an ontology and the availability of metadata and

provenance information about it were ranked fifth and

eighth respectively. In contrast to

these metrics, other criteria in the metadata group

like availability of funds for ontology update and

maintenance, use of a method/methodology and

ontology language are among the bottom ten least

important metrics.

Community related metrics have some very

interesting ratings. The results show ontologists and

knowledge engineers would like to know about the

purpose that an ontology is used/has been used for

(e.g. annotation, sharing data, etc.) while evaluating

and before selecting it for reuse. They have also

rated "Availability of wikis, forums, mailing lists

and support team for the ontology" as one of the

very important quality metrics for ontology

evaluation. Having an active, responsive developer

community and knowing and trusting the ontology

developers are among the other top-ranked

community related aspects of ontologies that can be

used for their evaluation.

Survey respondents were also presented with a set

of popularity related metrics. According to Table 1,

the popularity of an ontology in the community and

among colleagues has the highest median and mean

compared to the other metrics that can be used for

evaluating the popularity of an ontology.

Respondents also tended to consider the reputation

of the ontology developer team and/or institute in

the domain while evaluating an ontology for reuse.

Other popularity related metrics such as the

popularity of the ontology in social media (e.g. in

GitHub, Twitter, or LinkedIn), the popularity of the

ontology on the web (number of times it has been

viewed in different websites/applications across the

web), and the reviews of the ontology (e.g. ratings),

were among the metrics with the least mean and

median.

Table 1: Descriptive statistics of all the quality metrics in the survey.

| Rank | Metric | SD | Median | Mean |
|---|---|---|---|---|
| 1 | The Content (classes, properties, relationships, individuals, axioms) | 0.57 | 5 | 4.59 |
| 2 | The availability of documentation (both internal, e.g. adding comments and external) | 0.79 | 5 | 4.38 |
| 3 | The Structure (Class hierarchy or taxonomy) | 0.82 | 4 | 4.29 |
| 4 | The Scope (domain coverage) | 0.84 | 5 | 4.42 |
| 5 | The ontology is online, accessible, and open to reuse (e.g. License type) | 0.85 | 5 | 4.52 |
| 6 | The Syntactic Correctness | 0.92 | 4 | 4.15 |
| 7 | The Consistency (e.g. Naming and spelling consistency all over the ontology) | 1.00 | 4 | 4.03 |
| 8 | Availability of metadata and provenance information about the ontology | 1.01 | 4 | 3.92 |
| 9 | Availability of wikis, forums, mailing lists and support team for the ontology | 1.03 | 4 | 3.45 |
| 10 | Having information about the purpose that ontology is used/has been used for (e.g. annotation, sharing data, etc.) | 1.03 | 4 | 3.77 |
| 11 | The Semantic Richness and Correctness (e.g. level of details) | 1.06 | 4 | 3.92 |
| 12 | Having an active responsive (developer) community | 1.09 | 4 | 3.62 |
| 13 | Having information about the other individuals or organisations who are using/have used the ontology | 1.1 | 3 | 3.12 |
| 14 | Having information about the other projects that the ontology is used/has been used in | 1.1 | 3 | 3.34 |
| 15 | Knowing and trusting the ontology developers | 1.11 | 4 | 3.42 |
| 16 | Knowing and trusting the organisation or institute that is responsible for ontology development | 1.11 | 3 | 3.38 |
| 17 | The reputation of the ontology developer team, and/or institute in the domain | 1.12 | 3 | 3.31 |
| 18 | The number of times the ontology has been reused or cited (e.g. owl:imports, rdfs:seeAlso, daml:sameClassAs) | 1.13 | 3 | 3.40 |
| 19 | The flexibility of the Ontology (being easy to change) and the ontology developer team | 1.14 | 4 | 3.41 |
| 20 | The frequency of updates, maintenance, and submissions to the ontology | 1.16 | 3 | 3.22 |
| 21 | The popularity of the ontology in social media (e.g. in GitHub, Twitter, or LinkedIn) | 1.16 | 2 | 2.28 |
| 22 | The popularity of the ontology in the community and among colleagues | 1.17 | 4 | 3.51 |
| 23 | The number of updates, maintenance, and submissions to the ontology | 1.19 | 3 | 3.13 |
| 24 | Availability of published(scientific) work about the ontology | 1.19 | 4 | 3.56 |
| 25 | The size of the ontology | 1.19 | 3 | 3.02 |
| 26 | The number of times the ontology has been reused or cited (e.g. owl:imports, rdfs:seeAlso, daml:sameClassAs) | 1.19 | 3 | 3.08 |
| 27 | The availability of funds for ontology update and maintenance | 1.23 | 3 | 2.77 |
| 28 | The popularity of the ontology on the web (number of times it has been viewed in different websites/applications across the web) | 1.24 | 3 | 3.05 |
| 29 | The reviews of the ontology (e.g. ratings) | 1.25 | 3 | 3.03 |
| 30 | The use of a method /methodology (e.g. NEON, METHONTOLOGY, or any other standard and development practice) | 1.26 | 3 | 2.80 |
| 31 | The Language that ontology is built in (e.g. OWL) | 1.30 | 4 | 3.70 |

6 DISCUSSIONS

Finding a set of metrics that can be used for

evaluating ontologies and their subsequent selection

for reuse has always been a critical research topic in

the field of ontology engineering. As mentioned in

the introduction and background sections, many

different ontology evaluation approaches and


metrics for quality assessment have been proposed

in the literature, with the aim of facilitating the

process of ontology selection. However, these

studies have not dealt with ranking and the

importance of the quality metrics, especially the

community related ones. The focus of this research

was on constructing a criteria-based evaluation

approach and determining a set of metrics that

ontologists and knowledge engineers tend to look at

before selecting an ontology for reuse. This study

also set out with the aim of assessing the importance

of the quality metrics identified in the literature and

in a previous phase of this research (Talebpour,

Sykora and Jackson, 2017).

Past studies have mostly been concerned with

identification and application of a new set of quality

metrics (Lozano-Tello and Gómez-Pérez, 2004).

However, the key aim of this study was not only to

identify the main quality metrics used in the process

of evaluating ontologies but also to find how

important each of the quality metrics is. The results

of this survey study indicate that the internal

characteristics of ontologies are the first to be assessed

before selecting them for reuse. However, some

other aspects of ontologies such as availability of

documentation, availability and accessibility of an

ontology (e.g. license type), availability of metadata

and provenance information, and also having

information about the purpose that ontology is

used/has been used for previously (e.g. annotation,

sharing data, etc.) are as important as the quality of

the internal components of ontologies.

Popularity is the most frequently defined and used term in

the literature to refer to the role of community in the

quality assessment process. As a part of this study,

respondents were asked to rate the importance of six

different popularity related metrics, four of which

were previously mentioned in the literature.

According to the results, ontologists and knowledge

engineers tend to care more about the popularity

metrics, as identified by Talebpour, Sykora and

Jackson (2017), such as popularity of an ontology in

the community and among colleagues (ranked 14 out

of 31, when sorted by median) and the reputation of

the ontology developer team, and/or institute in the

domain (ranked 21 out of 31, when sorted by

median) than the popularity related metrics that have

been widely used in the literature and by selection

systems. Metrics used in the literature include the

number of times an ontology has been reused or

cited (Supekar, Patel and Lee, 2004; Wang, Guo and

Fang, 2008), the popularity of an ontology on the

web (Burton-Jones et al., 2005; Martínez-Romero et

al., 2017), the reviews of an ontology (Lewen and

d’Aquin, 2010) and the popularity of an ontology on

social media (Martínez-Romero et al., 2014); while

having a lower median and mean, some of these

metrics were ranked higher when the quality metrics

were sorted by standard deviation. The standard

deviation shows a higher level of agreement among

the survey respondents about the lower rank of those

metrics.
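
The difference between the two orderings discussed above can be reproduced with a short sketch. The five rows below are copied from Table 1 with shortened metric names; the helper functions are hypothetical, and breaking ties in the median by the mean is an assumption, since the text does not state how ties were resolved.

```python
# (metric, SD, median, mean) for five popularity related rows of Table 1.
rows = [
    ("reputation of the developer team/institute", 1.12, 3, 3.31),
    ("popularity in social media", 1.16, 2, 2.28),
    ("popularity in the community and among colleagues", 1.17, 4, 3.51),
    ("popularity on the web", 1.24, 3, 3.05),
    ("reviews of the ontology", 1.25, 3, 3.03),
]


def order_by_agreement(table):
    """Metric names sorted by ascending SD (highest agreement first)."""
    return [name for name, _, _, _ in sorted(table, key=lambda r: r[1])]


def order_by_importance(table):
    """Metric names sorted by descending median, ties broken by mean."""
    return [name for name, _, _, _ in sorted(table, key=lambda r: (-r[2], -r[3]))]


print(order_by_agreement(rows))
print(order_by_importance(rows))
```

Among these five rows, popularity in social media comes second when sorted by standard deviation but last when sorted by median and mean, which illustrates how a low standard deviation can reflect agreement on a low rating.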

7 CONCLUSIONS

The primary aim of this paper was to identify a set of metrics that ontologists and knowledge engineers tend to consider when assessing the quality of ontologies for reuse. The results of this survey study show that the process of ontology evaluation for reuse depends not only on the internal components of ontologies but also on many other metadata and community-related metrics. This study identified different criteria that can be used for ontology evaluation and measured how important those criteria were to respondents. Taken together, the results suggest that metadata and social metrics should be used by selection systems in this field in order to facilitate ontology discovery and to provide more comprehensive and accurate recommendations for reuse.

These findings enhance our understanding of the notion of ontology quality and of the key features ontologists and knowledge engineers look for when reusing ontologies. This research can aid ontology developers by providing key metrics that they could take into consideration when developing a new ontology, both to enhance its longevity and to provide better foundations to the ontology community for future developments. Further research could explore whether the choice of quality metrics for ontology evaluation varies from domain to domain.

REFERENCES

Arpinar, I.B., Giriloganathan, K. and Aleman-Meza, B.,

2006, May. Ontology quality by detection of conflicts

in metadata. In Proceedings of the 4th International

EON Workshop.

Bontas, E.P., Mochol, M. and Tolksdorf, R., 2005, June.

Case studies on ontology reuse. In Proceedings of the

IKNOW05 International Conference on Knowledge

Management, 74, p. 345.

Brank, J., Grobelnik, M. and Mladenić, D., 2005. A survey

of ontology evaluation techniques.

Brank, J., Mladenić, D. and Grobelnik, M., 2006, May.

Gold standard based ontology evaluation using

instance assignment. In Workshop on Evaluation of

Ontologies for the Web, EON.

Brewster, C., Alani, H., Dasmahapatra, S. and Wilks, Y.,

2004. Data Driven Ontology Evaluation. In 4th

International Conference on Language Resources and

Evaluation, LREC’04. Lisbon, Portugal, p. 4.

Buitelaar, P., Eigner, T. and Declerck, T., 2004.

OntoSelect: A dynamic ontology library with support

for ontology selection. In Proceedings of the Demo

Session at the International Semantic Web

Conference.

Burton-Jones, A., Storey, V.C., Sugumaran, V. and

Ahluwalia, P., 2005. A semiotic metrics suite for

assessing the quality of ontologies. Data & Knowledge

Engineering, 55(1), pp. 84-102.

Butt, A.S., Haller, A. and Xie, L., 2014, October.

Ontology search: An empirical evaluation. In

International Semantic Web Conference, pp. 130-147.

d'Aquin, M., Sabou, M., Motta, E., Angeletou, S.,

Gridinoc, L., Lopez, V. and Zablith, F., 2008. What

can be done with the Semantic Web? An Overview of

Watson-based Applications. In CEUR Workshop

Proceedings, 426.

Ding, L., Finin, T., Joshi, A., Pan, R., Cost, R.S., Peng, Y.,

Reddivari, P., Doshi, V. and Sachs, J., 2004,

November. Swoogle: a search and metadata engine for

the semantic web. In Proceedings of the thirteenth

ACM international conference on Information and

knowledge management, pp. 652-659.

Fahad, M. and Qadir, M.A., 2008. A Framework for

Ontology Evaluation. ICCS Supplement, 354, pp. 149-158.

Fernández, M., Cantador, I. and Castells, P., 2006. CORE:

A tool for collaborative ontology reuse and evaluation.

Fernández, M., Overbeeke, C., Sabou, M. and Motta, E.,

2009, December. What makes a good ontology? A

case-study in fine-grained knowledge reuse. In Asian

Semantic Web Conference, Springer, Berlin,

Heidelberg, pp. 61-75.

Gangemi, A., Catenacci, C., Ciaramita, M. and Lehmann,

J., 2006, June. Modelling ontology evaluation and

validation. In European Semantic Web Conference,

Springer, Berlin, Heidelberg, pp. 140-154.

Gill, T., 2008. Metadata and the Web. Introduction to

metadata, 3, pp.20-38.

Gómez-Pérez, A., 1995, February. Some ideas and

examples to evaluate ontologies. In Artificial

Intelligence for Applications, 1995. Proceedings., 11th

Conference on, IEEE, pp. 299-305.

Hartmann, J., Sure, Y., Haase, P., Palma, R. and Suarez-

Figueroa, M., 2005, November. OMV–ontology

metadata vocabulary. In ISWC, 3729.

Hlomani, H. and Stacey, D., 2014. Approaches, methods,

metrics, measures, and subjectivity in ontology

evaluation: A survey. Semantic Web Journal, 1(5),

pp.1-11.

Lewen, H. and d’Aquin, M., 2010, October. Extending

open rating systems for ontology ranking and reuse.

In International Conference on Knowledge

Engineering and Knowledge Management, Springer,

Berlin, Heidelberg, pp. 441-450.

Lewen, H., Supekar, K., Noy, N.F. and Musen, M.A.,

2006, May. Topic-specific trust and open rating

systems: An approach for ontology evaluation.

In Workshop on Evaluation of Ontologies for the Web.

Lozano-Tello, A. and Gómez-Pérez, A., 2004. Ontometric:

A method to choose the appropriate ontology. Journal

of Database Management (JDM), 15(2), pp.1-18.

Maedche, A. and Staab, S., 2002, October. Measuring

similarity between ontologies. In International

Conference on Knowledge Engineering and

Knowledge Management, Springer, Berlin,

Heidelberg, pp. 251-263.

Maiga, G. and Ddembe, W., 2008. Flexible approach for

user evaluation of biomedical ontologies.

Martínez-Romero, M., Jonquet, C., O’Connor, M.J.,

Graybeal, J., Pazos, A. and Musen, M.A., 2017.

NCBO Ontology Recommender 2.0: an enhanced

approach for biomedical ontology

recommendation. Journal of biomedical

semantics, 8(1), p.21.

Martínez-Romero, M., Vázquez-Naya, J.M., Pereira, J.

and Pazos, A., 2014. BiOSS: A system for biomedical

ontology selection. Computer methods and programs

in biomedicine, 114(1), pp.125-140.

Matentzoglu, N., Malone, J., Mungall, C. and Stevens, R.,

2018. MIRO: guidelines for minimum information for

the reporting of an ontology. Journal of biomedical

semantics, 9(1), p.6.

McDaniel, M., Storey, V.C. and Sugumaran, V., 2016,

June. The role of community acceptance in assessing

ontology quality. In International Conference on

Applications of Natural Language to Information

Systems, Springer, Cham, pp. 24-36.

Morse, J.M., 2016. Mixed method design: Principles and

procedures. Routledge.

Netzer, Y., Gabay, D., Adler, M., Goldberg, Y. and

Elhadad, M., 2009. Ontology evaluation through text

classification. In Advances in Web and Network

Technologies, and Information Management, Springer,

Berlin, Heidelberg, pp. 210-221.

Obrst, L., Ceusters, W., Mani, I., Ray, S. and Smith, B.,

2007. The evaluation of ontologies. In Semantic web,

Springer, Boston, MA., pp. 139-158.

Sabou, M., Lopez, V., Motta, E. and Uren, V., 2006.

Ontology selection: Ontology evaluation on the real

semantic web.

Simperl, E., 2009. Reusing ontologies on the Semantic

Web: A feasibility study. Data & Knowledge

Engineering, 68(10), pp.905-925.

Sowa, J.F., 2000, August. Ontology, metadata, and

semiotics. In International Conference on Conceptual

Structures, Springer, Berlin, Heidelberg, pp. 55-81.

Supekar, K., 2005, July. A peer-review approach for

ontology evaluation. In 8th Int. Protege Conf, pp. 77-79.

Supekar, K., Patel, C. and Lee, Y., 2004, May.

Characterizing Quality of Knowledge on Semantic

Web. In FLAIRS Conference, pp. 472-478.

Talebpour, M., Sykora, M. and Jackson, T.W., 2017. The Role of Community and Social Metrics in Ontology Evaluation: An Interview Study of Ontology Reuse. In 9th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management, pp. 119-127. doi: 10.5220/0006589201190127.

Uschold, M., Healy, M., Williamson, K., Clark, P. and

Woods, S., 1998, June. Ontology reuse and

application. In Formal ontology in information

systems, IOS Press Amsterdam, 179, p. 192.

Wang, X., Guo, L. and Fang, J., 2008, April. Automated

ontology selection based on description logic.

In Computer Supported Cooperative Work in Design,

2008. CSCWD 2008. 12th International Conference

on IEEE, pp. 482-487.

Yu, J., Thom, J.A. and Tam, A., 2007, November.

Ontology evaluation using Wikipedia categories for

browsing. In Proceedings of the sixteenth ACM

conference on Conference on information and

knowledge management, ACM, pp. 223-232.

Yu, J., Thom, J.A. and Tam, A., 2009. Requirements-

oriented methodology for evaluating

ontologies. Information Systems, 34(8), pp.766-791.

KEOD 2018 - 10th International Conference on Knowledge Engineering and Ontology Development
