A Novel Framework to Represent Documents using a

Semantically-grounded Graph Model

Antonio M. Rinaldi

1

and Cristiano Russo

2

1

Dipartimento di Ingegneria El et trica e delle Tecnologie dell’Informazione,

IKNOS-LAB-Intelligent and Knowledge Systems-LUPT,

University of Naples Federico II, Italy

2

LISSI Laboratory, University of Paris-Est Creteil (UPEC), France

Keywords:

Document Representation, Semantic and Linguistic Analysis, WordNet, Lexical Chains, NoSQL, Neo4J.

Abstract:

As an increasing number of text-based documents, whose complexity increases in turn, are available over the

Internet, it becomes obvious that handling such documents as they are, i.e. in their original natural-language

based format, represents a daunting task to face up for computers. Thus, some methods and techniques have

been used and refined, throughout the last decades, in order t o transform the digital documents from the full

text version to another suitable representation, making them easier to handle and thus helping users in getting

the right information with a reduced algorithmic complexity. One of the most spread solution in document

representation and retrieval has consisted in transforming the full text version into a vector, which describes

the contents of the document in terms of occurrences patterns of words. Although the wide adoption of this

technique, some remarkable drawbacks have been soon pointed out from the researchers’ community, mainly

focused on the lack of semantics for the associated terms. In this work, we use WordNet as a generalist

linguistic database in order to enrich, at a semantic level, the document representation by exploiting a l abel

and properties based graph model, implemented in Neo4J. This work demonstrates how such representation

allows users to quickly r ecognize the document topics and lays the foundations for cross-document relatedness

measures that go beyond the mere word-centric approach.

1 INTRODUCTION

Text is the most traditional method for information re-

cording and knowledge representation (Yan and Jin,

2012) and a comm on source of learning in an in-

structional setting (Thorndyke, 1978). Actually, hu-

mans do not judge text relatedness merely at the le-

vel of text words, since, words trigger reasoning at a

much deeper level, i.e., that of concepts - the basic

units of meaning that serve humans to organize and

share their knowledge. Humans interpret the specific

wording of a docum e nt in the much larger context of

their background kn owledge and experience (Gabri-

lovich and Markovitch, 2007). While reasoning about

semantic relatedness of natural language utterances

is routinely performed by humans, it remains an in-

surmountable obstacle for computers. Thus, some

methods and te c hniques have been used and refined,

throughout the last decades, in order to transform th e

digital document from the full text version to another

suitable representation, making them easier to handle

for automa te d software agents in Information Retrie-

val (IR) Systems. IR-models are based on strategies

that span from the set-theoretica l boolean methods

for IR to the algebra ic Space Model Vector and th e

Latent Semantic Indexing and, finally, to the Topic-

based Space Vector Model. Particularly, ideas under-

lined by the Space Vector Model are the most spread

solution in documen t representation an d retrieval and

consist in transforming the full text version in to a vec-

tor which describes the contents of the document in

terms of occurrences patterns of words. Although

the wide adoption of this technique, some remarkable

drawbacks have been soon pointed out from the re-

searchers community, mainly focused on th e lack of

semantics for the associated terms, namely, it does not

say anything about the nature of the meaning of terms

pairs (e.g., if they are synonyms or linked somehow at

a semantic-level). Consequently, n ew strategies ma-

king use of external linguistic resources have bee n

increasingly adopted to imbue words with semantics

and linking terms together with linguistic-semantic

relations (Rinaldi, 2009) and very large knowledge

base repr e sentation (Caldarola et al., 2015). In our

Rinaldi, A. and Russo, C.

A Novel Framework to Represent Documents using a Semantically-grounded Graph Model.

DOI: 10.5220/0006932502030211

In Proceedings of the 10th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2018) - Volume 1: KDIR, pages 203-211

ISBN: 978-989-758-330-8

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

203

context, one of the most spread lingu istic resources

are lexical databases like WordNe t (Miller, 1995). We

use WordNet as a generalist linguistic database, in or-

der to semantica lly augment the d ocument represen-

tation going beyond the mere word-centric approach.

This way, we try to demonstrate how, just by analy-

sing the topology of the expan ded lexical chains, re-

presented through a labelled- based graph model, it is

possible to predict the knowledge ca tego ries the do-

cument belongs to, regardless any statistical measu-

res related to the document terms. Our solution has

been implemented in a newly adopted tool from the

NoSQL technologies, namely, Neo4J, which allows

us to represent the expan ded lexical chains thr ough

a pr operties and label-based graph model, able to be

horizontally scaled and distributed. This work focu-

ses on doc ument representation/visualization exploi-

ting the features available from the new tool mentio-

ned above.

The reminder o f the paper is structured as follows.

After a state-of-the -art of the main issues and soluti-

ons regarding the document representation for text-

mining and retrieval, provided in section 2, an over-

view of the sy stem architecture for our document re-

presentation solution is p resented in section 3, along

with th e implementation details. Section 4 motivates

the reasons for using the pr operties and labels-based

graph model in order to repre sent the document, out-

lining the procedure for obtaining the graph represen-

tation and motivating the choice for the selected docu-

ment corpus. Section 5 shows the results of applying

the solution over some documents, as examples, while

section 6 draws the conclusion o utlining the major fin-

dings and laying the foundations for fu ture investiga-

tions.

2 RELATED WORKS

In this section, we present and discuss a literature fo-

cused on document visualization and text ca tego riza-

tion techniques. In this regard, several methods have

been proposed to help user s in searching, visualizing

and retrieving useful information from a text-based

corpora. One of th ese approaches is based on the

creation of tag clouds, which can be used for basic

user-centered tasks (Rivadeneira et al., 2007). Other

studies improve tag cloud statistical based approach

with semantic information (Rinaldi, 2012; Rinaldi,

2013). In ou r approach, we use a keywords extraction

technique to build the semantically-expanded lexical

chain. The quality of extracted keywords depends on

the corresponding keyword extraction algorithm and

several methods have been proposed in the literatu re.

In (Hu and Wu, 2006) the authors use linguistic fea-

tures to rep resent the importance of the word position

in a document. They extract topical te rms and their

previous-term and next-term co-occurrence collecti-

ons using several methods. A tag-oriented summari-

zation approach is discussed in (Z hu et al., 2009). The

authors present a new alg orithm using a linear trans-

formation to estimate th e importanc e of tags. The tags

are further expanded to include related words using

association mining techniques. The final summary is

generated with a sentence evaluation based on expan-

ded tags and TF-IDF o f each word in a sentence. An

iterative approach for document keywords extraction

based o n the relationship b etween different gr anulari-

ties (i.e., relationships between words, sentences, and

topics) is presented in (Wei, 2012). The method is

first implemented by constructing a graph, w hich re-

flects relationships between different size of granula-

rity nodes, and then using an iterative algorithm to

calculate score of keywords; the words with highest

score are chosen as keywords. In (Kaptein, 2012) the

author describes an a pplication in w hich word clouds

are used to navigate and summarize Twitter search

results. This application summarizes sets o f tweets

into word clo uds, which can be used to get a first idea

of the contents of the tweets. Moreover, several stu-

dies h ave been presented to add more information to

folksonomies and enhance tag visualization in order

to improve the use of tag clouds. Several approaches

(Begelman et al., 2006; Fujimura et al., 2008) have

been proposed to measure tag similarity using sta-

tistics. Clustering algorithms were applied to gather

semantically similar tag s. In (Hassan-Montero and

Herrero-Solana, 2006) the k-means algorithm was ap-

plied to group semantically similar tags. Li et al. ( Li

et al., 2007) supported a large scale social annotations

browsing based on an analysis of semantic and hie r-

archical relations. An ap proach to build sema ntic net-

works on the basis of tag co-occurrences and network

structures of folksonomies is in (Cattuto et a l., 2007).

The same authors analyzed similarities betwee n tags

and documents in order to enrich semantic aspects of

social tagging. An interface for information sear ching

task using tag clouds has b e en presented in (Sinclair

and Cardew-Hall, 2008) . The authors point out that

tag clouds satisfy all the roles mentioned in (Rivade-

neira et a l., 2007), as visual sum maries of content, and

they observed that the pro cess of scanning the cloud

and click ing on tags is easier than the formulation of

a search query. In (Chen et al., 2009) the authors in-

vestigate ways to support semantic un derstanding of

collaboratively generated tags. They condu cted a sur-

vey on practical tag usage in Last.fm.

KDIR 2018 - 10th International Conference on Knowledge Discovery and Information Retrieval

204

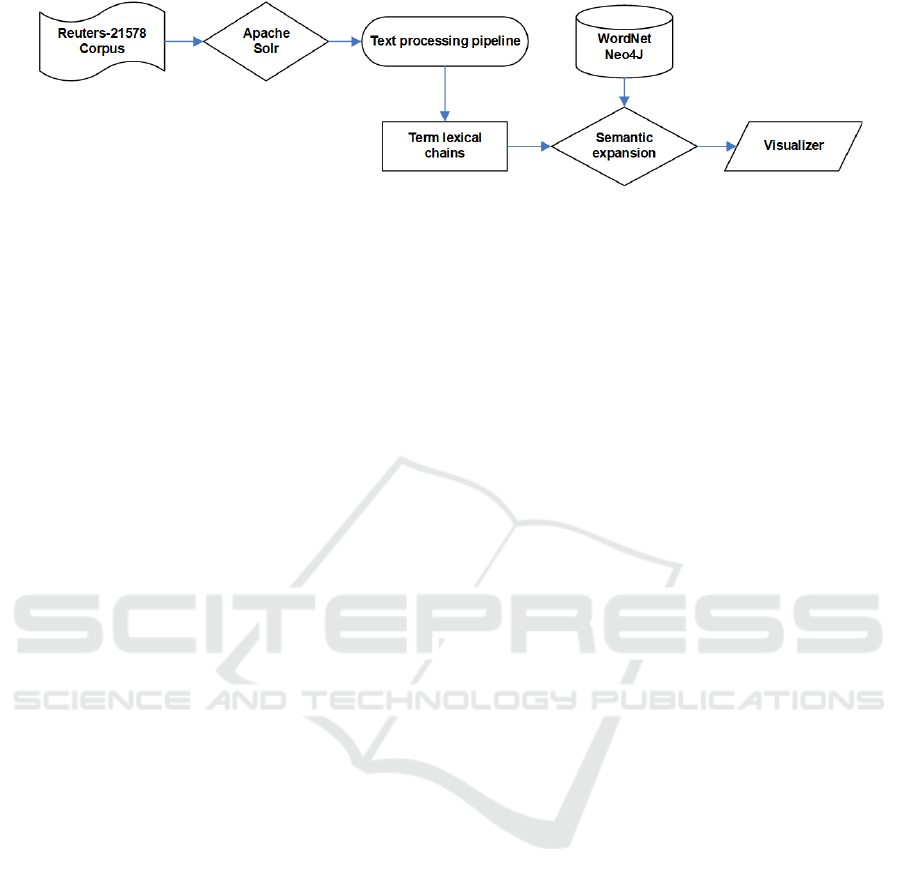

Figure 1: An high-level view of the document representation system architecture.

3 DOCUMENT

REPRESENTATION SYSTEM

ARCHITECTURE

Figure 1 shows a high-level view of the implemen-

ted sy stem. The main blocks depicted in the figure

are: the Apache Solr search server containing and in-

dexing the Reuters-21578 corpus (Lewis, 1997) used

as doc ument collection, the text-processing pipeline,

which contains also the tokenizer, which obtains a

normalized lexical chain for each document and, fi-

nally, the semantic expansion block, which makes

use of Neo4J and WordNet to re present each d ocu-

ment as an expanded and semantically- grounded lex-

ical chain. Apache Solr is an open-source search

platform built on Apache Lucen e (M cCandless et al.,

2010) allowing the storage and the indexing of large

volume o f documents collections. Solr allows a data-

driven schemaless mode when populating the index

but also a fine-grain control over the schema pro-

duction time. In this work, the Solr Java APIs have

been used in order to r ead the Reuters docu ments

and store them in a coherent and searchable docu-

ment index. Reuters collection is a resource for re-

search in in formation retrieval, machine learning, and

other corpus-based research. Documents were mar-

ked up w ith SGML tags, and a corresponding SGML

DTD was produced, so that the boundar ie s of im-

portant sections of doc uments (e.g., category fields)

are unambig uous. Each article ha s a structure which

highlight the fields used in this work as: To pic attri-

bute of the Reuters main tag, which is a boolean flag

indicating if the document has been categorized by

human indexers, i.e., if the document is in the trai-

ning set; NEWI D another attribute of the main ta g,

which assigns a unique ID to each doc in chronologi-

cal order; TOPICS, which encloses the list of TOPICS

categories, if any, for the document. The other fields,

i.e., PLACES, ORGS, EXCHANGE S and COMPA-

NIES are same as TOPICS but for the corresponding

typology of categories. In this work we use only the

TOPICS categorization; AUTHOR, the author of the

story; TITLE, the title of the story; B ODY, The main

text of the story. It may has a normal structure or can

be a brief text containing one or at mo st two lines. In

this work, we consider only normal type document.

A test collection for text categorizatio n contains a set

of texts and, for each text, a specification of what ca-

tegories that text belongs to. For the Reuters-21578

collection the documents are Reuters newswire sto-

ries, an d the categorie s are five different sets of con-

tent related categories. The TOPICS categories are

econom ic subject categor ie s, e.g., ”gold”, ”invento-

ries”, and ”money-supply”. A s described in section

5, the proposed methodology has been applied to one

hundred documents coming from ten different cate-

gories. Thanks to the search capabilities of Solr and

the schema-based representation of the doc ument as a

set of fields has been possible to quickly harvest the

docume nt b elonging to each of the tested categories.

Once retrieved the docum e nts texts have been preces-

sed by a p ipeline in o rder to retrieve a normalised ver-

sion of lexical chains. Afterwards, the lexical chain

were subjected to the semantic expansion described

in what follows. The normalization of textual repre-

sentation of each Reuter documents, at a morpholo-

gical and syntac tic level, has been performed by the

text-processing pipeline whose main phases based on

specific tasks: Sentence Segmentation is responsible

for breaking up documents (entity description, com-

ments or abstract) into sentences (or sentence-like)

objects which can be processed an d annotated by ”do-

wnstream” components; Tokenization b reaks sen ten-

ces into sets of word-like objects which represent the

smallest un it of linguistic meaning considered by a

natural language processing system; Lemmatisation is

the algorithm ic process of determin ing the lemma for

a given word. This ph a se substantially groups toget-

her the different inflec ted forms of a word so they can

be analysed as a single item; Stopwords elimination

phase filters out stop words fro m analysed text. Stop

words usually r efer to th e most common words in a

languag e, e.g. the, is, at, which, and so f orth in Eng-

lish; POS (Part-Of-Speech)-tagging attaches a tag de-

A Novel Framework to Represent Documents using a Semantically-grounded Graph Model

205

noting the part-of-speech to each word in a sentence,

e.g., Noun, Verb, Adverb, etc. ; Named Entity Rec ogni-

tion phase ca tegorizes phrases (re ferred to as entities)

found in text with respect to a potentially large num-

ber of semantic categories, such as pe rson, organiza-

tion, or geopolitical location; Coreference Resolution

phase identifies the linguistic expressions which make

referenc e to the same entity or individual within a sin-

gle document – or across a collection of documents.

Once a normalized lexical chain for each documents

has been obtained from the text-processing pipeline,

its semantic expa nsion is built by exploitin g the featu-

res of WordNet that will be described in detail in the

following section.

4 WordNet-BASED DOCUMENT

REPRESENTATION

The proposed approach uses WordNe t in order to ex-

pand, at a semantic level, the lexical chains extrac-

ted from doc uments retrieved among the Reuters col-

lection. WordNet is a large lexical database of Eng-

lish. Nouns, verbs, adje c tives and adverbs are grou-

ped into sets of cognitive synonyms (synsets), each

expressing a distinct concept. Synsets are interlin-

ked by me ans of co nceptual-semantic and lexical re-

lations. In this context, we have defined and imple-

mented a meta-model for Word Net to be exploited

in the expanded lexical chains u sin g a conceptuali-

zation as much as possible close to the way in which

the concepts are organized and expressed in human

languag e (Rinaldi, 2008). We consider co ncepts and

words as graph nodes, whereas seman tic, lin guistic

and semantic-linguistic relations as edges connecting

nodes. For example, the hyponymy property is con-

verted in an edge that links two concept nodes (nouns

to nouns or verb s to verbs), while, a syntactic rela-

tion relates word nodes to word nodes. Conc ept and

word node s are considered with DatatypeProperties,

which relate individuals with a predefined data type.

Each word is related to the represente d concept by the

ObjectProperty hasConcept while a concept is related

to words that represent it using the ObjectProp erty

hasWord. The se are the only prope rties able to re-

late words with concepts and vice versa; all the other

properties relate words to words and concep ts to con-

cepts. Concep ts, words and properties are arranged in

a class h ie rarchy, resulting from the syntactic category

for concepts and words and from the semantic or lex-

ical type for the properties. All elements have an ID

within the WordNet offset number or a user defined

ID. The semantic and lexical properties are arranged

in a hierarchy. In Table 1 some of the considered pro-

perties and the ir domain and range of definition are

shown.

Table 1: Properties.

Property Domain Range

hasWord Concept Word

hasConcept Word Concept

hypernym NounsAnd NounsAnd

VerbsConcept VerbsConcept

holonym NounConcept NounConcept

entailment VerbWord VerbWord

similar AdjectiveConcept AdjectiveConcept

The use of domain and cod omain reduces the pro-

perty r a nge application. For example, the hyponymy

property is defined o n the sets of noun s and verbs; if

it is applied on th e set of nouns, it has the set of n ouns

as range, otherwise, if it is applied to the set of verbs,

it has the set of verbs as range. in Table 2 there are

some of defined constraints a nd we spe c ify on which

classes they have been applied w.r.t. the considered

properties; the table shows the matc hing range too.

Table 2: Model Constraints.

Costraint Class Property Constraint range

AllValuesFrom NounConcept hyponym NounConcept

AllValuesFrom AdjectiveConcept attribute NounConcept

AllValuesFrom NounWord synonym NounWord

AllValuesFrom AdverbWord synonym AdverbWord

AllValuesFrom VerbWord also

see VerbWord

Sometimes the existence of a property between

two or more individuals entails the existence of o t-

her properties. For exam ple, being the concept dog

a hyponym of animal, we can assert that animal is a

hypernymy of dog. We represent this characteristics

by means of property features shown in Table 3.

Table 3: Property Features.

Property Features

hasWord inverse of hasConcept

hasConcept inverse of hasWord

hyponym inverse of hypernym; transitivity

hypernym inverse of hyponym; transitivity

cause transitivity

verbGroup symmetry and transitivity

WordNet has been imported in Neo4J and after-

wards visualized in Cytoscape (Shann on et al., 2003)

accordin g to a procedur e similar to (Caldarola and Ri-

naldi, 2016) (Caldarola e t al., 2016). Compared to the

previous ones, this work focu ses on the visualizatio n

of WordNet and the its most expensive part has con-

sisted in defining a Cytoscape cu stom style to repre-

sent the synonms rings as tag clouds in an effective

and clear way. We pre ferred to load Wo rdNet objects

from JWI APIs and serialize them in custom csv files

to add some useful information in the csv lines, such

KDIR 2018 - 10th International Conference on Knowledge Discovery and Information Retrieval

206

as the word freq uency and the p olysemy for the sake

of the succe ssive repre sentation in Cytoscape. Before

diving into the procedur e details, it is worth clarifying

the distinctio n between synsets, synsets (or synonims)

rings, index words and word senses. As discussed in

the previous section, a synset is a concept, i.e., an en-

tity of th e real world (both physical or abstract) whose

meaning can be argue d by reading the gloss d efinition

provided by WordNe t. Its meaning can be also un-

derstood by analysing th e semantic relations linking

it to other synsets or by reading the terms belo nging

to the syn set (or synonims) ring. This one is a set of

words (i.e . index words) generally u sed in a specific

languag e (such as English) to refer that concep t. The

term synset itself is used to refer to set of syn onyms

meaning a specific concept. On th e contrary, an index

word is just a term, i.e., a sign without m eaning; so,

only when we link it to a specific concept we ob tain

a word sense, a word provided with meaning. An in-

dex word h as got different meaning s acco rding to the

context in which it is used and because of a general

characteristic of languages: the polysemy. For exam-

ple, the term book has eleven different meanings if

it is used as noun ( both lower and upper case), and

so, it belongs to eleven different synsets. In a ddition

to synsets glo sses, WordNet gives some useful statis-

tic inf ormation about the usage of the term book in

each synset. The position of the term in each syno-

nyms r ing tell us how usual is the use of such term to

mean that concept. The position of the term in each

synset (comma separated in the listing) is a measure

of the u sage frequency of the term for each concept:

higher the position, hig her the f requency. Moreover,

by counting the number of synsets w hich a term be-

longs to, it is possible to obtain its polysemy (e.g.,

the number of possible meanings of book). The in-

formation that we collect from WordNet for the syn-

set nodes are the following: Id: the unique identi-

fier for th e synset; SID: the Synset ID as reported in

the WordNet database; POS: the synset part of speech

(POS); Gloss: the synset gloss which express its me-

aning; Level: the h ierarchical level of synset in the

whole WordNet hiera rchy. While, for the word node

we are interested in the following information: Id: the

unique identifier for the word sense; POS: the word ’s

part of speech (POS); p olysemy: the word polysemy;

freque ncy: the word frequency of the word sense as

previously explicated. Finally, we retrieve the se-

mantic links existing between synsets, by reporting

the type of sem a ntic link existing b etween them, e.g.,

hypon y m or meronym , and the linguistic-semantic re-

lations (hasWord), which connect word nodes to the

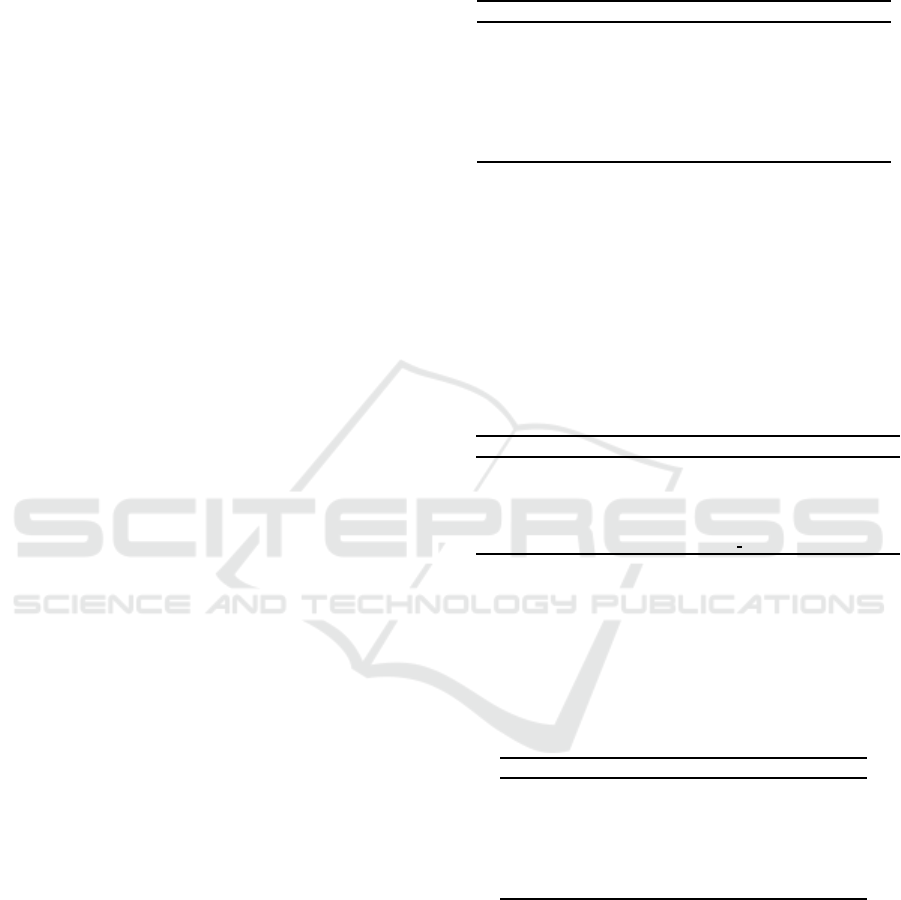

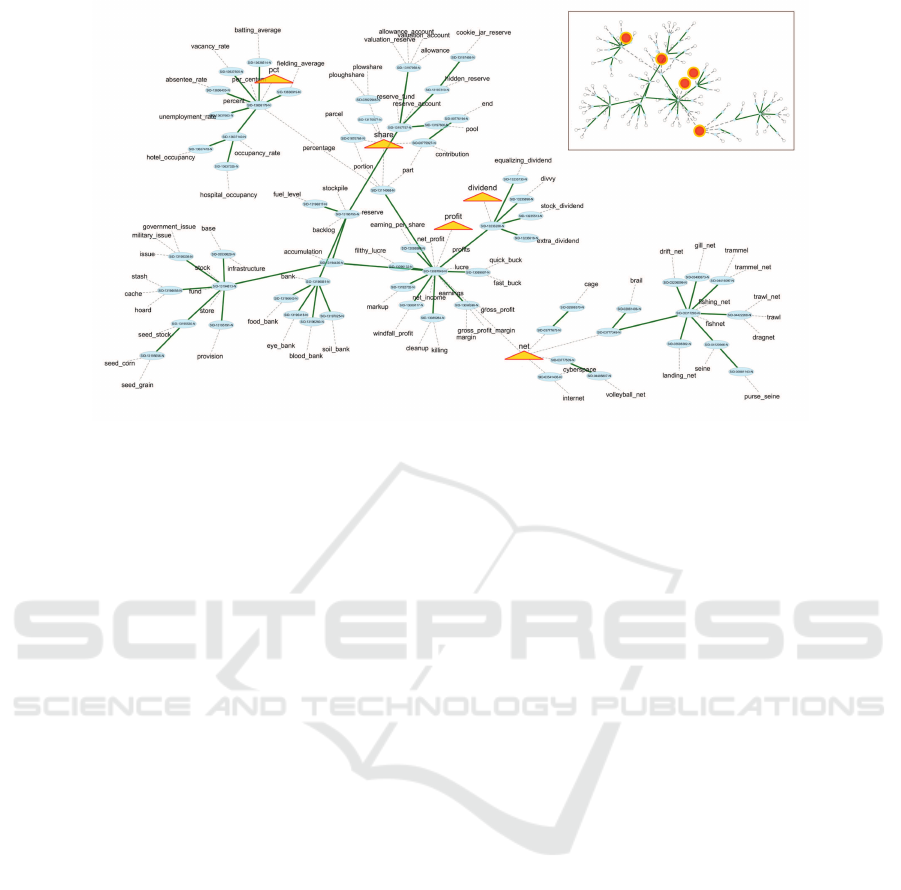

correspo nding synsets. Figure 2 shows the layout of

the semantically-expan ded lexical chains used in this

work. Actually, we provide two layouts: the one is

used for an high-level view of the expanded lexical

chains and is u sed to get general insights from the do-

cument (and to decide about its main semantic cate-

gory), while the other zoom s in and provides details

such as labels and IDs associated to nodes and edge s.

Focusing on the first layout (figure 2), it is possible to

distinguish three types of nodes, depicted with th ree

different colors: the white nodes represent words, the

blue ones r epresent synset while the orange represent

an original document word, i.e., a word that occurs in

the Reuters document. Each word nod e is connected

to the synset (syn onyms ring) it belongs to a dashed

line, this way making possible to visua lize the syno-

nym rings around the sysnet. In general, one synset

may has one or more word nodes connected to it (due

to the synonymy) and it is true also the contrary, i.e,

one word nod e s can be connected to o ne or more sys-

nets (due to the po lysemy of such word ). One sysnet

can connect to o ther synsets through semantic relati-

ons (mostly hyp onym but also meronym ) depicted in

dark green in the figure.

Figure 2: Reuters document structure.

5 GRAPH-BASED

REPRESENTATION OF

EXPANDED LEXICAL CHAINS

The defined meta-model to represent WordNet has

been implemented in a labelled and properties-based

graph within Neo4J and used in our context of in-

terest. We a pplied the proposed model to construct

the semantically-g rounded expansion s of a selection

of one hundre d documen ts taken from th e Reuters-

21578 corpus, which spans over ten different catego-

ries (or topics) such as: e arn, grain, trade, money,

sugar, coffe, iron, cotton, meal and silver. The con-

siderations that have arisen and the discussions that

follow, along with the images provided here, concern

one of the Reuters corpus documen ts taken as an ex-

ample, precisely, the number 6353. This latter b e-

longs to the training set used in the modified Lewis

A Novel Framework to Represent Documents using a Semantically-grounded Graph Model

207

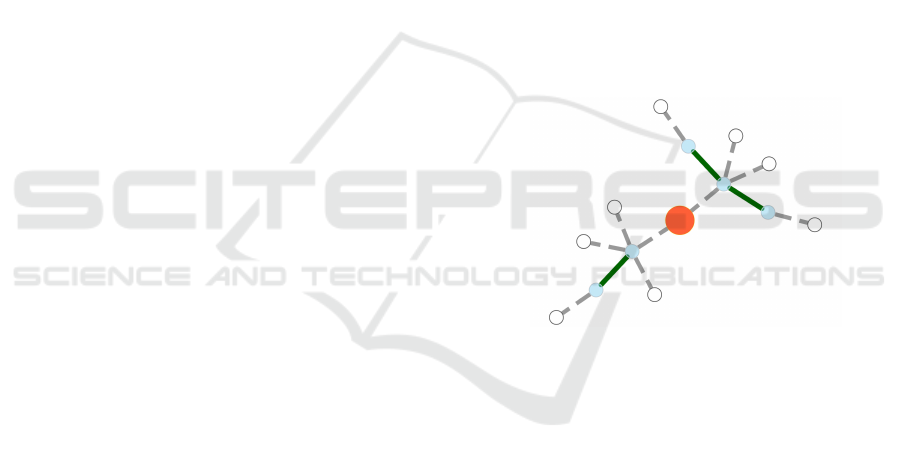

(a) (b) (c) (d) (e)

(f) (g) (h) (i) (j) (k)

Figure 3: Reuters document graph sub components.

split (Lewis, 1992), which has been labelled as earn,

which means earn on some commerc ial or business

transaction, earn as net salary or wages, or as a profit

or dividend in general. By using the proposed docu-

ment representation strategy, we expect the user be

able to recognize the document topic and its speci-

ficity, at a semantic level, just by having a look at

the graph-based representation of the document and

analysing its structure in terms of so me to pological

characteristics like the connectivity, the number o f

connected components of the under lying graph, the

number of the original terms (those coming from the

Reuters document) belonging to each connected com-

ponen ts, the spatial distribution of the original terms

in each connected components. Figure 3 shows the

graph sub components for the semantic expansion of

Reuters no. 6353. Like a lmost all document expan-

sions, it represents a disconnected graph to which se-

veral sub-components belong. For the sake of bre-

vity and cla rity, the figure depicts only eleven sub-

components, the remaining ones being too sm all to be

worth dealing with. Each sub-compone nt represen ts a

connected arborescence of the graph that includes ori-

ginal terms that are c lose at a semantic level. In fact,

the m ore the term s are close at a semantic level, the

more likely they are close and connected at a topolo-

gical level, due to the nature of the drawing a lgorithm

(Force-directed graph drawing), which tries to reduce

the c rossing edges as much as possible and make ed-

ges of more or less equal length. Figures from 3(a) to

3(f) represent sub -compo nents with a discrete dimen-

sion in term of nodes and edges, and r e fers to the main

topics addressed by the docu ment, i.e., earn, aircraft,

rise or rising, firm and bank, while figure from 3(g)

to 3(k) refer to marginal concepts like units of me-

asure (gallon, kilometre, tonnage) and other related

concepts like cargo and load. Our a ttention here fo-

cuses on the largest sub-componen t of the graph with

the m a ximum number of original terms inside. This

is the case for figure 3(a), which contains 70 synset

nodes, 122 word nodes, 66 seman tic relations (m ostly

hyponyms) and 127 meta-linguistic relations distribu-

ted over 192 nodes and 193 edges. Among the word

nodes, there are five words c ontained in the original

docume nt, i.e., pct, which is the abbreviated form for

percentage, share, dividend, profit and, finally, net. It

worth to pointing out that the first four terms have a

small degree of polysemy - the number of senses (me-

anings) the word can has acc ording to the context - ,

w.r.t. net, which has 6 senses - for example net can

mean a compu ter network, the net income (the case

for this document) or a trap made of netting to ca-

tch fish or birds or insects and so fort. Accordingly,

at a topological level, it results as a separated node

w.r.t. the first. Moreover, while the first four no-

des a re mostly leaf node (with the exception of share,

which has e greater polysemy) it represents a brid ge

nodes con necting three parts (semantically separated)

of the c onnected sub-graph. In tuitively, terms with

higher degree of po lysemy do not allow us to re cog-

nize the topic or the dom ain of the docum ent (even

by reading the document we nee d to contextualize the

text in order to attach the rig ht sense to net), but, ob-

viously, in this case, we attach the sense oriented to

North-West, i.e, the meaning close to the most po-

pulated region of the sub-graph (that of profit, divi-

dend, and so forth). The ana lysis of the other sub -

components of the semantic expansion, but one, leave

no doubts about wha t is the main topic of the docu-

ment. In fact, figures 3(b), 3( d) and 3(e) pr e sent sub-

KDIR 2018 - 10th International Conference on Knowledge Discovery and Information Retrieval

208

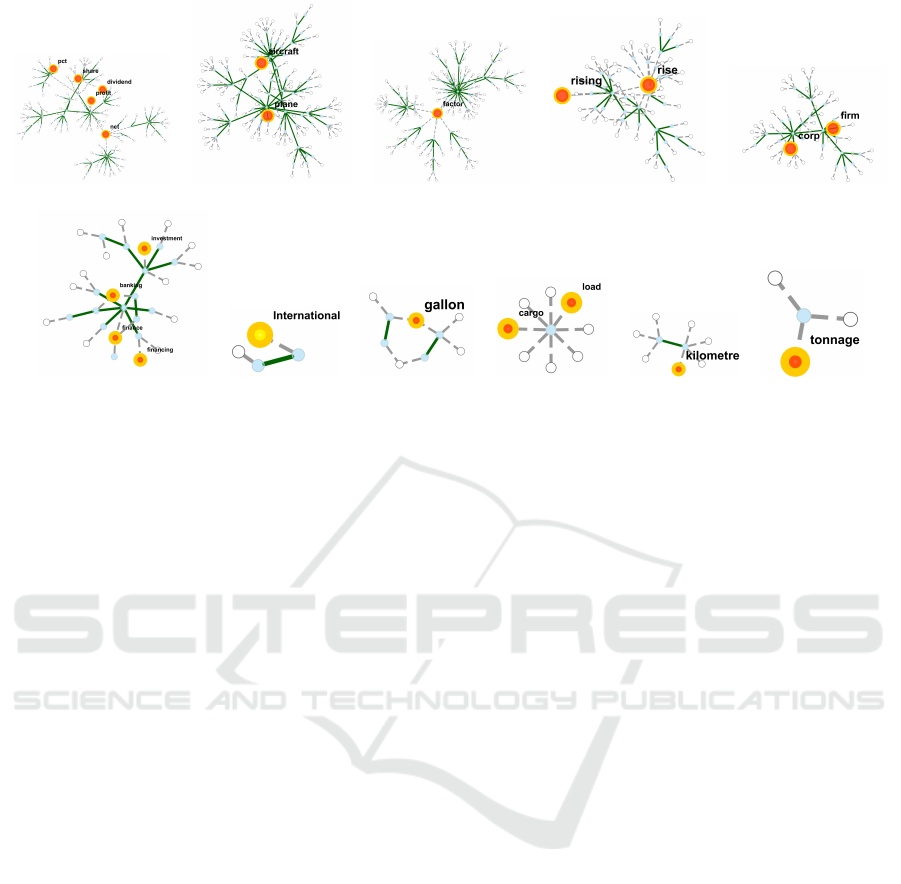

Figure 4: Reuter document semantic expansion sub-network.

graphs with a decreasing number of no des and edges

and, importantly, only two original terms (e.g., air-

craft and plane). These sub-comp onents are related

to marginal concepts o r topics addressed by the docu-

ment, if we confron t them w.r.t. sub-graph in figure

3(a). Furthe rmore, terms like rise or rising in figure

3(d) has an high degree of polysemy, 10 if conside-

red as Noun and 17 if conside red as Verb, so they do

not help to recognize any topic by themselves. Even

more so, figure 3( c) is useless in our analysis, bec ause

it c ontains only one or iginal term, factor, which has

a high degree of polysemy ( 7) and represent a kind

of star-point between semantically separated regions.

Figure 3(f) deserves some further considerations. It

has 4 original terms be longing to banking and fi nance

domains; furthermo re, these terms are very close in

the connected sub -graph, and also have a low degre e

of polysemy. They char acterize the knowledge dom-

ain of the docum e nt as well as the terms in figure 3 (a),

but our strategy consider the banking topic as a second

choice, due to the lesser num ber of origin al terms con-

tained in corresponding sub-graph. This means that

we can also define a ranking function between the

sub-graph based on the number of original terms be-

longing to each sub-graph. Figure from 3(g) to 3(k)

represent small connected components of the seman-

tic expansion limited to one or at most two original

terms. Th ey give a small contribute to the identifica-

tion of the document topic. Taking into a ccount all the

above consid erations, it turns out that the sub-grap h

depicted in figure 3(a) represen ts that associated with

the m a in topic of the document. Figure 4 shows the

detailed representation of such sub-graph b y puttin g

in evidence the textual label associated to all words

belonging to the synonyms set (synset). Each syn-

set is represented with a blue oval while the origi-

nal terms are depicted above orange diamonds. The

figure clearly show th e role of bridge for the word

net, which has the greatest level of polysemy betwe en

the sub-graph words. Inside each oval is the sysnet

ID retrieved from WordN e t, furthermore, each syn-

set is connected to the synonyms, represented as plain

text, thr ough a dashed line. The more remarkable ob-

servation that it worth to highlight to conclude this

section is that the proposed strategy tries to recogniz e

the document topic or domain by only representing

the semantic-grounded expansion of the lexical chain

underlying the document. All terms occurrences in-

formation like the term frequency (tf ) or the inverse

docume nt fre quency (idf ) are neglected here in favou r

of a semantic and topological interpretation of the ex-

panded lexical chain.

6 CONCLUSIONS

In this paper, a document representation meth odolog y

has been proposed and discussed at a qualitative le-

vel. We use a semantically-grounded graph models in

order to visualize the mo re relevant terms in a docu-

ment and the interconnections with semantically rela-

ted terms. The implementation of our methodology

within N eo4J results in a disconnected graphs con-

taining several connected sub-graphs, each of them

potentially referring to a topic or semantic category

of the sour c e document. The main addressed ques-

tion is about the possibility to recognize the top ic s

of a document just by analysing the topology of the

graph underlying the expanded lexical chains by me-

A Novel Framework to Represent Documents using a Semantically-grounded Graph Model

209

ans of sub-graphs with the maximum number of or igi-

nal terms. The application of this methodology to ap-

proxim ately one hundr e d Reuters documents has de-

monstrated that if a pre dominant topic for the analy-

sed do cument exists, a recurring pattern turns out, i.e.,

there exist a connected sub-graph with the maximum

number of original terms extracted fr om the analysed

docume nt. Thus, it is possible to recognize the to-

pic not in relation to the frequency of occurrence of

terms, but in relation to topological characteristics of

the graph, m ainly the connectivity of th e sub-graphs

and their dimension. This strategy goes beyond the

mere word-centric approach used in the most spread

docume nt representation model like the Space Vector

Model because leaves aside the statistic of the docu-

ment and suggests fu rther researches in the topic de-

tection field, which will be the subject of further stu-

dies.

REFERENCES

Begelman, G., Keller, P., and Smadja, F. (2006). Automa-

ted Tag Clustering: Improving search and exploration

in the tag space. In P roceedings of t he Collaborative

Web Tagging Workshop at the WWW 2006, Edinburgh,

Scotland.

Caldarola, E., Picariello, A., and Rinaldi, A. (2016). Ex-

periences in wordnet visualization with l abeled graph

databases. Communications in Computer and Infor-

mation Science, 631:80–99.

Caldarola, E. G ., Picariello, A., and Rinaldi, A. M.

(2015). Big graph-based data visualization experien-

ces: The wordnet case study. In Knowledge Discovery,

Knowledge Engineering and Knowledge Management

(IC3K), 2015 7th Internati onal Joint Conference on,

volume 1, pages 104–115. IEEE.

Caldarola, E. G. and Rinaldi, A. M. (2016). Improving

the visualization of wordnet large l exical database

through semantic tag clouds. In Big Data (BigData

Congress), 2016 IEE E International Congress on, pa-

ges 34–41. IEEE.

Cattuto, C., Schmitz, C., Baldassarri, A ., Servedio, V. D. P.,

Loreto, V., Hotho, A., Grahl, M., and Stumme, G.

(2007). Network properties of folksonomies. AI C om-

mun., 20(4):245–262.

Chen, Y.-X., Santamar´ıa, R., Butz, A., and Ther´on, R.

(2009). Tagclusters: Semantic aggr egation of colla-

borative tags beyond tagclouds. In Proceedings of

the 10th International Symposium on Smart Graphics,

SG ’09, pages 56–67, B erlin, Heidelberg. Springer-

Verlag.

Fujimura, K., Fujimura, S., Matsubayashi, T., Yamada, T.,

and Okuda, H . (2008). Topigraphy: visualization for

large-scale tag clouds. In Proceedings of the 17th in-

ternational conference on World Wide Web, WWW

’08, pages 1087–1088, New York, NY, USA. ACM.

Gabrilovich, E. and Markovitch, S. (2007). Computing se-

mantic relatedness using wikipedia-based explicit se-

mantic analysis. In IJcAI, volume 7, pages 1606–

1611.

Hassan-Montero, Y. and Herrero-Solana, V. ( 2006). Impro-

ving tag-clouds as visual information retrieval interfa-

ces. In InScit2006: International Conference on Mul-

tidisciplinary Information Sciences and Technologies.

Hu, X. and Wu, B. (2006). Automatic keyword ex-

traction using linguistic features. In Proceedings of

the Sixth IEEE International Conference on Data Mi-

ning - Workshops, pages 19–23, Washington, DC,

USA. IEEE Computer Society.

Kaptein, R. (2012). Using wordclouds to navigate and sum-

marize twitter search. In Proceedings of the 2nd Euro-

pean Workshop on Human-Computer I nteraction and

Information Retrieval, pages 67–70. CEUR.

Lewis, D. D. (1992). Representation and Learning in In-

formation Retrieval. PhD thesis, Computer Science

Dept.; Univ. of Massachusetts; Amherst, MA 01003.

Technical Report 91–93.

Lewis, D. D. (1997). Reuters-21578 text categorization test

collection, distribution 1.0. http://ww w. research. att.

com/˜ lewis/reuters21578. html.

Li, R., Bao, S., Yu, Y., Fei, B., and Su, Z. (2007). Towards

effective browsing of large scale social annotations.

In Proceedings of the 16th international conference

on World Wide Web, WWW ’07, pages 943–952, New

York, NY, USA. ACM.

McCandless, M., H at cher, E., and Gospodnetic, O. (2010).

Lucene in Action: Covers Apache Lucene 3.0. Man-

ning Publications Co.

Miller, G. A. (1995). Wordnet: a lexical database for eng-

lish. Communications of the ACM, 38(11):39–41.

Rinaldi, A. M. (2008). A content-based approach for do-

cument representation and retrieval. In Proceedings

of the Eighth ACM Symposium on Document Engi-

neering, DocEng ’08, pages 106–109, New York, NY,

USA. ACM.

Rinaldi, A. M. ( 2009). An ontology-driven approach for se-

mantic information retrieval on the web. ACM Tran-

sactions on Internet Technology (TOIT), 9(3):10.

Rinaldi, A. M. (2012). Improving tag clouds wi th ontolo-

gies and semantics. In Database and Expert Systems

Applications (DEXA), 2012 23rd International Works-

hop on, pages 139–143. IEEE.

Rinaldi, A. M. (2013). Document summarization using

semantic clouds. In Semantic Computing (ICSC),

2013 I EEE Seventh International Conference on, pa-

ges 100–103. IEEE.

Rivadeneira, A. W., Gruen, D. M., Muller, M. J., and Mil-

len, D. R. (2007). Getting our head in the clouds: to-

ward evaluation studies of tagclouds. In Proceedings

of the SIGCHI conference on Human factors in com-

puting systems, CHI ’07, pages 995–998, New York,

NY, USA. ACM.

Shannon, P., Mar kiel, A., Ozier, O., Baliga, N. S ., Wang,

J. T., Ramage, D., Amin, N., Schwikowski, B., and

Ideker, T. ( 2003). Cytoscape: a software environment

KDIR 2018 - 10th International Conference on Knowledge Discovery and Information Retrieval

210

for integrated models of biomolecular interaction net-

works. Genome research, 13(11):2498–2504.

Sinclair, J. and Cardew-Hall, M. (2008). The folksonomy

tag cloud: when is it useful? Journal of Information

Science, 34(1):15–21.

Thorndyke, P. W. (1978). Knowledge transfer in learning

from texts. In Cognitive psychology and instruction,

pages 91–99. Springer.

Wei, Y. (2012). An iterative approach to keywords ex-

traction. In International Conference in Swarm In-

telligence, pages 93–99. Springer.

Yan, P. and Jin, W. (2012). Improving cross-document kno-

wledge discovery using explicit semantic analysis. In

International Conference on Data Warehousing and

Knowledge Discovery, pages 378–389. Springer.

Zhu, J., Wang, C., He, X., Bu, J., Chen, C., Shang, S.,

Qu, M., and Lu, G. (2009). Tag-oriented document

summarization. In Proceedings of the 18th internatio-

nal conference on World wide web, pages 1195–1196,

New York, NY, USA. ACM.

A Novel Framework to Represent Documents using a Semantically-grounded Graph Model

211