Towards Distributed Model Analytics with Apache Spark

¨

Onder Babur

1

, Loek Cleophas

1,2

and Mark van den Brand

1

1

Eindhoven University of Technology, NL-5612 AZ Eindhoven, The Netherlands

2

Stellenbosch University, ZA-7602 Matieland, South Africa

Keywords:

Model-Driven Engineering, Model Analytics, Scalability, Distributed Computing, Apache Spark, Big Data.

Abstract:

The growing number of models and other related artefacts in model-driven engineering has recently led to the

emergence of approaches and tools for analyzing and managing them on a large scale. The framework SAMOS

applies techniques inspired by information retrieval and data mining to analyze large sets of models. As the

data size and analysis complexity goes up, however, further scalability is needed. In this paper we extend

SAMOS to operate on Apache Spark, a popular engine for distributed Big Data processing, by partitioning

the data and parallelizing the comparison and analysis phase. We present preliminary studies using a cluster

infrastructure and report the results for two datasets: one with 250 Ecore metamodels where we detail the

performance gain with various settings, and a larger one of 7.3k metamodels with nearly one million model

elements for further demonstrating scalability.

1 INTRODUCTION

The use of models as a basis for software

engineering—whether UML models used as a basis

for design and implementation, or meta-/models in

model-driven engineering (MDE)—has been expo-

nentially growing in recent years. This is witnessed

by e.g. the dramatic growth of models and related ar-

tifacts present both in open source such as the Git-

Hub repository (Kolovos et al., 2015; Hebig et al.,

2016) and in industrial MDE ecosystems (Babur et al.,

2017). Analogously to earlier developments in source

code analytics and text mining where very high vo-

lumes of data have long emerged as a reality and a

challenge, this development necessitates similar ap-

proaches for analyzing models at larger scales. At the

same time, models inherently display more complex

structure, in general being graph-structured instead of

the trees with (limited) cross-links typically encounte-

red as representations of source code and natural lan-

guage. Models in turn demand efficient and scalable,

even if approximate, techniques for the analysis—

versus comparing them one-to-one using exact but

expensive techniques (graph edit distance, similarity

flooding (Melnik et al., 2002), etc. ). As a result, ana-

lytics becomes more complex for the setting of mo-

dels, both in terms of techniques needed, and of com-

putation effort required. Note that the requirements

and potential added value for (big) data analytics in

the general sense (i.e. not for models) has for long

been widely recognized by the community (LaValle

et al., 2011; Zikopoulos et al., 2011), and is not furt-

her elaborated in this paper.

One way to improve the performance of model

analytics (for complex analyses of large datasets) is

to look at distributed settings, versus running in a se-

quential setting on a single machine. There recently

have been a few efforts of exploiting distributed com-

puting in the MDE community, though in a different

context for model transformations (Benelallam et al.,

2015; Burgue

˜

no et al., 2016). In this paper, we ske-

tch how the existing model analytics framework SA-

MOS can be lifted from the latter setting to a dis-

tributed setting using the Apache Spark framework

for distributed computation. In Section 2 we ske-

tch SAMOS, while Section 3 does the same for the

Apache Spark framework. Section 4 considers how

SAMOS can be modified and extended to operate in

the distributed setting for potentially higher perfor-

mance, while Section 5 discusses initial results for

two sets of Ecore metamodels from a proof of con-

cept, i.e. a version of SAMOS lifted to the Apache

Spark framework, without any specific optimizations:

one dataset with 250 Ecore metamodels reporting the

performance gain with various settings, and a larger

one of 7.3k metamodels with nearly one million mo-

del elements for further demonstrating our scalability.

Section 6 concludes the paper with several indications

for future work.

Babur, Ö., Cleophas, L. and Brand, M.

Towards Distributed Model Analytics with Apache Spark.

DOI: 10.5220/0006735407670772

In Proceedings of the 6th International Conference on Model-Driven Engineering and Software Development (MODELSWARD 2018), pages 767-772

ISBN: 978-989-758-283-7

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

767

Set$of$models$

Metamodel$

Features$

NLP$

Tokeniza8on$

$Matching$scheme$

Weigh8ng$scheme$

VSM$

Distance$

calcula8on$

Clustering$

Dendrogram$

Automated$extrac8on$

Inferred$

clusters$

Extrac8on$$

scheme$

Filtering$

Synonym$

detec8on$

…$

Data$selec8on,$

filtering$

Clone$

detec8on$

…$

Classifica8on$

Analysis$

…$

Repository$

management$

Domain$

analysis$

…$

NJgrams$

Metrics$

…$

Manual$

inspec8on$

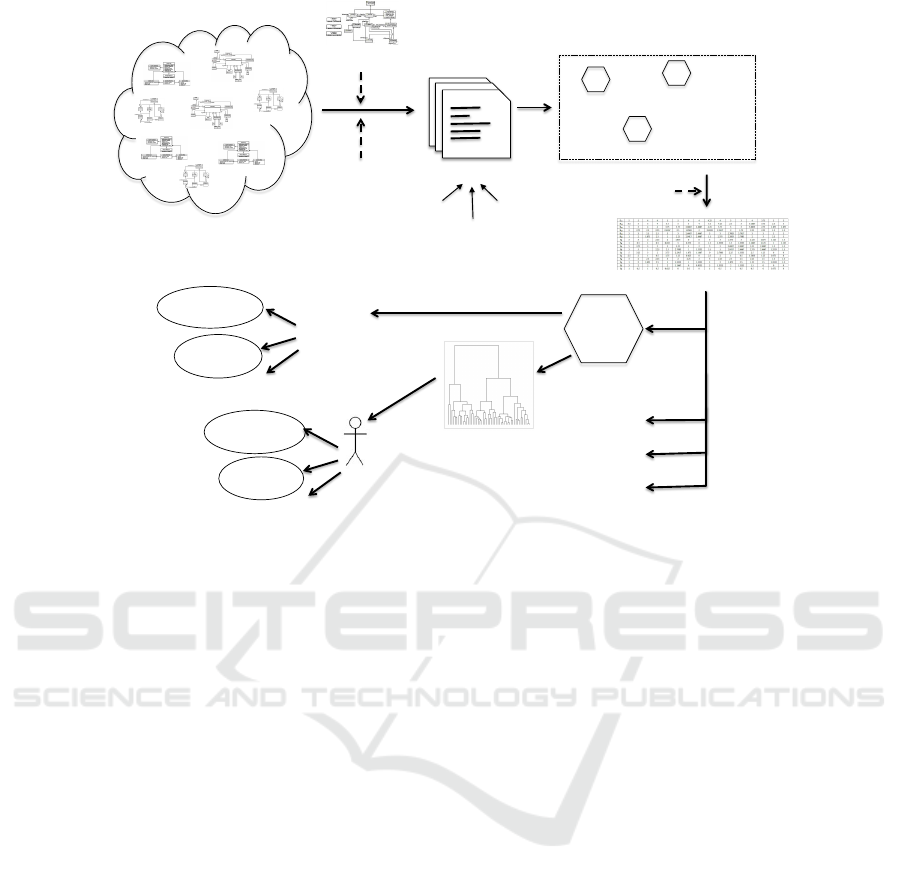

Figure 1: Overview of SAMOS workflow.

2 BACKGROUND: MODEL

ANALYTICS FRAMEWORK

We outline here the underlying concepts of SA-

MOS (Babur, 2016; Babur et al., 2016), a framework

for large-scale model analytics, inspired by the in-

formation retrieval (IR) and machine learning (ML)

domains. IR deals with effectively indexing, analy-

zing and searching various forms of content inclu-

ding natural language text documents (Manning et al.,

2008). As a first step for document retrieval in ge-

neral, documents are collected and indexed via some

unit of representation. Index construction can be im-

plemented using a vector space model (VSM) with the

following major components: (1) a vector representa-

tion of occurrence of the vocabulary in a document,

named term frequency, (2) zones (e.g. ’author’ or

’title’), (3) weighting schemes—such as inverse do-

cument frequency (idf)—and zone weights, (4) Natu-

ral Language Processing (NLP) techniques for hand-

ling compound terms and for detecting synonyms and

semantically related words. The VSM allows trans-

forming each document into an n-dimensional vector,

thus resulting in an m × n matrix for m documents.

Over the VSM, document similarity can be defined as

the distance (e.g. euclidean or cosine) between vec-

tors. These distances can be used for identifying si-

milar groups of documents in the vector space via an

unsupervised ML technique called clustering (Man-

ning et al., 2008).

SAMOS applies this workflow to models, starting

with a metamodel-driven extraction of features. Fe-

atures can be, for instance, singleton names of mo-

del elements (very similar to the vocabulary of docu-

ments) or n-gram fragments (Manning and Sch

¨

utze,

1999) of the underlying graph structure. N-grams ori-

ginate from computational linguistics and represent

linear encoding of (text) structure. In our context, an

example n-gram for a UML class diagram for n = 2

would be a Class containing a Property (Babur and

Cleophas, 2017). SAMOS computes a VSM via com-

parison schemes (e.g. whether to check types), weig-

hting schemes (e.g. Class weight higher than Pro-

perty) and NLP (stemming/lemmatization, typo and

synonym checking, etc.). Applying various distance

measures suitable to the problem at hand, it then ap-

plies different clustering algorithms (via the R statis-

tical software) and can output automatically derived

cluster labels or diagrams for visualization and ma-

nual inspection, thereby enabling exploration of large

model sets. Figure 1 illustrates the workflow, sho-

wing key workflow steps of the workflow as well as

application areas such as domain analysis and clone

detection.

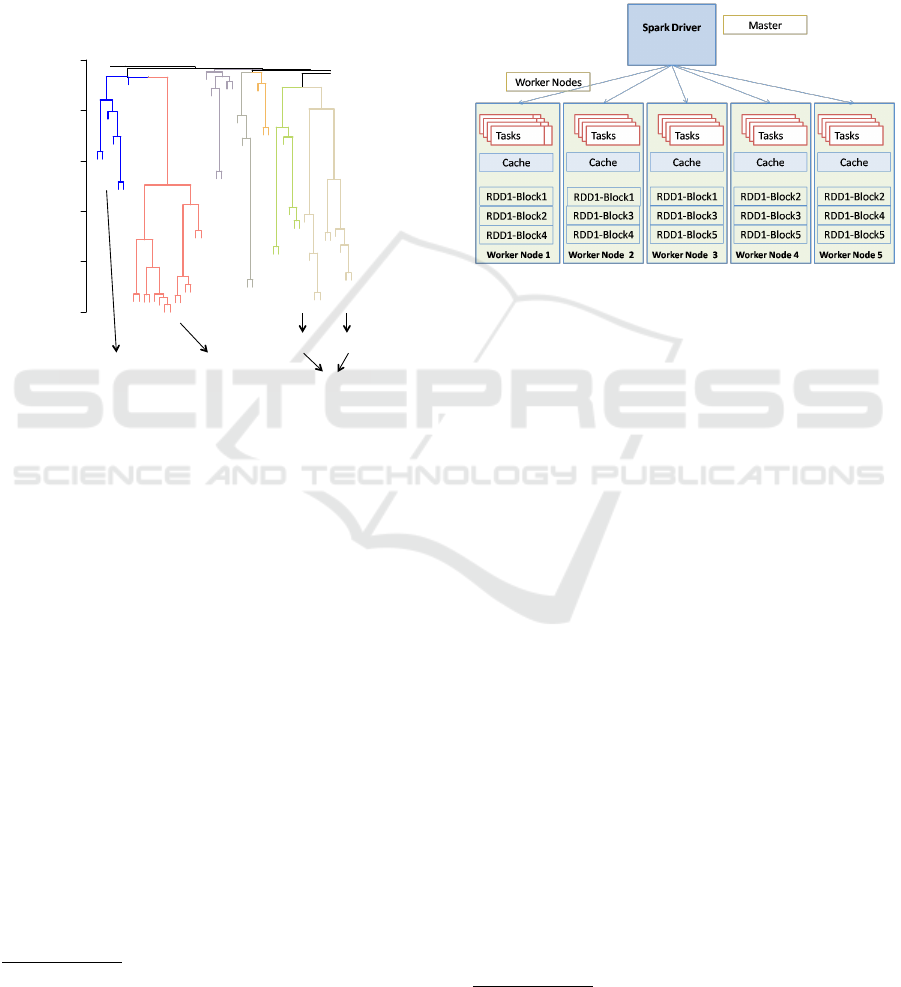

A sample output dendrogram is given in Figure 2

from (Babur et al., 2016). Given a domain analy-

sis scenario, SAMOS is used to hierarchically group

MOMA3N 2018 - Special Session on Model Management And Analytics

768

metamodels in the ATL Ecore Zoo

1

. The interpre-

tation of this visualization is as follows: the num-

bers on the dendrogram correspond to the metamodels

in the repository, which are gathered on (sub-)trees.

The height of the tree joints represents the distance

of the underlying nodes or sub-trees. Items in a sub-

tree have similar domains (e.g. conference manage-

ment metamodels), while the hierarchical structure of

the dendrogram further reflects the intra-cluster simi-

larity (e.g. word and excel subtrees under the large

office subtree.

0.0 0.2 0.4 0.6 0.8 1.0

32

33

99

94

95

98

96

97

102

101

100

103

91

92

87

90

88

89

4

7

8

1

2

3

6

15

16

17

18

22

19

20

21

13

14

11

12

9

10

51

44

85

86

5

93

53

50

49

52

82

106

107

60

64

55

62

61

63

73

72

71

74

65

67

69

68

66

70

30

29

31

23

83

84

75

76

80

81

78

79

41

46

43

36

42

37

40

38

39

26

27

24

25

28

35

48

47

77

34

45

57

58

56

59

54

104

105

Conference

Management

Bibliography

Excel Word

Office

0.0 0.2 0.4 0.6 0.8 1.0

32

33

99

94

95

98

96

97

102

101

100

103

91

92

87

90

88

89

4

7

8

1

2

3

6

15

16

17

18

22

19

20

21

13

14

11

12

9

10

51

44

85

86

5

93

53

50

49

52

82

106

107

60

64

55

62

61

63

73

72

71

74

65

67

69

68

66

70

30

29

31

23

83

84

75

76

80

81

78

79

41

46

43

36

42

37

40

38

39

26

27

24

25

28

35

48

47

77

34

45

57

58

56

59

54

104

105

Figure 2: Excerpt of the dendrogram for domain clustering

the ATL Zoo (Babur et al., 2016).

3 BACKGROUND: APACHE

SPARK

Apache Spark

2

is an open-source distributed data pro-

cessing engine (Zaharia et al., 2016), also used for Big

Data Analytics. It offers a stack of technologies for

both fundamental components such as cluster mana-

gement and fault-tolerant distributed data storage (in

the form of Resilient Distributed Datasets — RDDs),

and for advanced ones such as streaming and distri-

buted machine learning. Spark further provides rich

APIs for various programming languages including

Java, Python and Scala. It improves on the pre-

viously popular MapReduce (Dean and Ghemawat,

2008) computational paradigm (e.g. on Apache Ha-

doop

3

) with a more efficient distributed data and me-

mory management system, leading to a higher com-

parative performance and scalability (Zaharia et al.,

2016).

1

http://web.emn.fr/x-info/atlanmod/index.php?title=Ecore

2

https://spark.apache.org/

3

http://hadoop.apache.org/

Spark typically operates with a master driver

node, which coordinates several worker nodes (see Fi-

gure 3). Each worker node is allocated parallel tasks

to process the specific parts of the distributed data

(e.g. on the distributed file system). A central cache

in each worker node can further improve efficiency by

for instance maintaining some data in memory for fas-

ter access in multiple cores and for repeated/iterative

tasks.

Figure 3: Overview of Spark architecture

4

.

4 DISTRIBUTED VSM

COMPUTATION

As outlined in Section 2, SAMOS relies on the cal-

culation of a vector space by comparing the extracted

features of each model against the set of all features

(i.e. columns of the vector space). We can identify

several components of this approach:

• extraction of desired features from the models,

mapping each model to the feature set P

i

,

• calculation of the set of all unique features (F, di-

mensions of the VSM),

• given F, comparing each feature in P

i

against each

feature in F to calculate a row or vector in the

VSM.

The vector represents the model in the high-

dimensional vector space, to be used for distance cal-

culation and other statistical analyses. The bottle-

neck of this approach is the quadratic number of fea-

ture comparisons (in contrast with the previous steps

which have linear complexity), which makes this the

target for our parallelization effort to increase scala-

bility. Note that the efficiency and scalability of the

distance calculation for the resulting large sparse ma-

trix is a relatively lesser problem and left as future

work.

4

http://spideropsnet.com/site1/blog/2014/12/09/igniting-

the-spark/

Towards Distributed Model Analytics with Apache Spark

769

On the other hand, thinking in the context of the

Spark architecture (cf. Figure 3), we can map our ap-

proach to the distributed setting as follows:

• data: feature sets (P

i

’s) residing as distributed

data (i.e. RDDs of model-feature pairs),

• cache: maximal feature set (F) precomputed and

distributed to each worker node to be held in me-

mory cache,

• tasks: feature comparison as the atomic unit of

parallel execution.

While feature-to-feature comparison is the atomic

unit for parallelization in this setting, for practical re-

asons we aim for a coarser granularity: we perform

a single pass for each feature, comparing it with the

maximal set. Each parallel task in return consists of

(1) pair-wise comparing a feature p in P

i

versus F and

computing an intermediate vector, and (2) computing

the final VSM vector for the corresponding model,

e.g. via summing the intermediate ones (frequency

setting of SAMOS (Babur and Cleophas, 2017)). To

exemplify, a model consisting of m features is pro-

cessed m-way and eventually integrated to calculate a

single row of the VSM corresponding to that model.

Note that a great deal of the necessary functiona-

lity for distributed operation are provided by Spark:

partitioning and distribution (shuffling) of the data,

synchronisation of the tasks and the workflow, data

collection and I/O, and so on. The necessary modifi-

cations for SAMOS were mostly wrapping the related

building blocks (e.g. parsing and extraction, feature

comparison) into parallel Spark RDD operations with

minimal glue code around them.

5 PRELIMINARY RESULTS AND

DISCUSSION

We performed some preliminary experiments for our

technique. First of all, we used SURFSara

5

, the com-

putational infrastructure for ICT research in the Net-

herlands. SURFSara provides a Hadoop cluster with

Spark support, which consists of 170 data/compute

nodes with 1370 CPU-cores for parallel processing

and a distributed file system with a capacity of 2.3

PB.

Next, as for SAMOS, we chose bigrams of attri-

buted nodes (for the clone detection scenario (Babur,

2018)), as one of the more computationally intensive

setting (e.g. compared with extracting simple word

features for domain analysis (Babur et al., 2016)).

5

https://www.surf.nl/en/about-

surf/subsidiaries/surfsara/

As for the dataset, we mined GitHub for (1) a limi-

ted set of 250 Ecore

6

metamodels, and (2) a large

set of 7312 Ecore metamodels (after exact duplicates

and files smaller than 2KB removed). Table 1 shows

some details on the sizes of the two datasets. A furt-

her SAMOS framework setting to mention is that we

have turned off expensive NLP checks for semantic

relatedness and synonymy for this preliminary expe-

riment.

Normally, we have a simplistic all or none stra-

tegy for NLP-caching; for small datasets we iterate

over all the model elements to compute and keep in

memory the word-to-word similarity scores (i.e. full

caching). For the distributed execution we have disa-

bled this feature as we cannot fit the relevant data for

very large model sets (the goal is to process tens of

thousands of models) into the memory, so we comple-

tely disabled NLP-caching. As future work, we plan

to investigate various more sophisticated approaches

to caching to circumvent this issue.

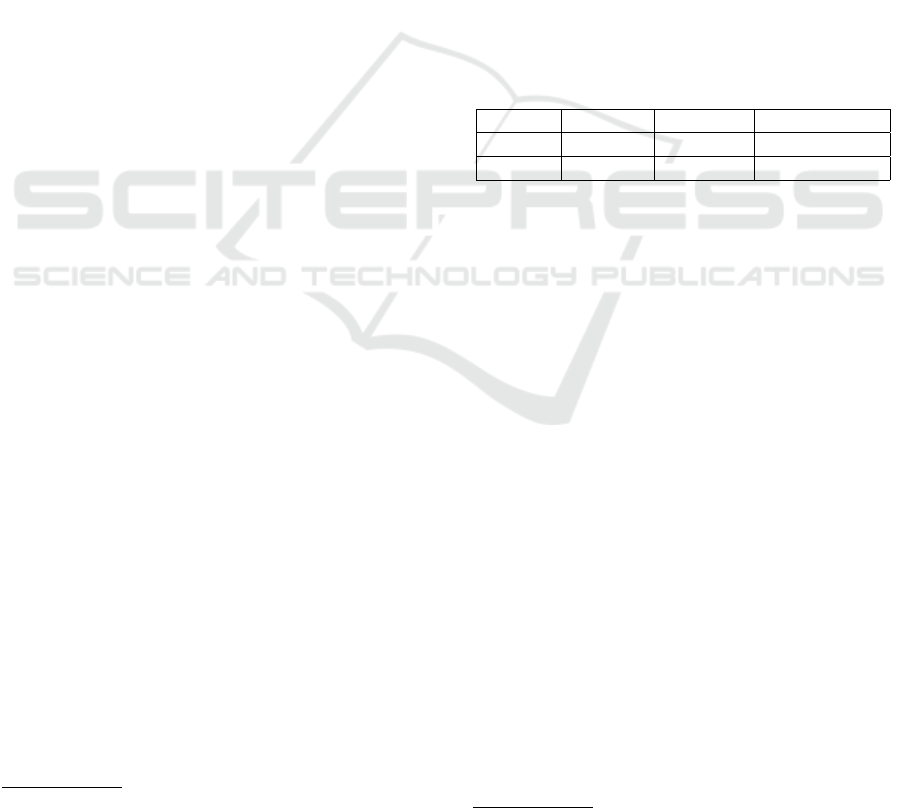

Table 1: Description of the datasets: number of metamo-

dels, total file size and number of model elements.

dataset #models file size #model elem.

1 250 4.8MB ∼50k

2 7312 133.6MB ∼1 million

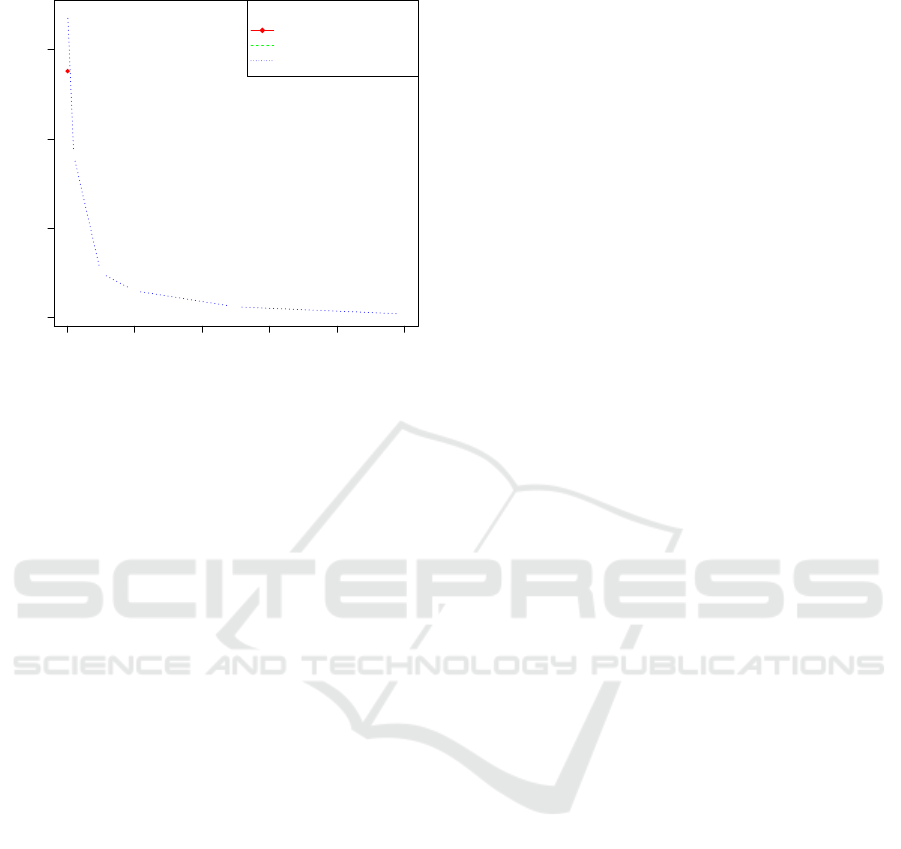

Performance for Dataset 1. On dataset 1, we ran

the single-core local version of SAMOS, with and

without NLP-caching, and the distributed version

with 1, 10, 50, 100 and 250 and 500 executors wit-

hout NLP-caching. Figure 4 depicts the results. For

the single-core case, local execution has the best

performance, especially with NLP-caching enabled.

We have included the single-core distributed case to

roughly assess the overhead: 17.1 hours (distributed)

versus 13.8 hours (local). It is evident that as the num-

ber of executors increase, the performance increases

as well, though with diminishing returns.

Performance for Dataset 2. As a bigger challenge

for our approach, we made an attempt to run data-

set 2 with the same (expensive) settings as above.

We could argue going for more approximate, hence

cheaper, settings (unigrams instead of bigrams, igno-

ring instead of including attributes, etc. ) for such

a large dataset but we performed this experiment in

order to load-test and assess the limits of our techni-

que. We successfully calculated the VSM using a to-

tal of ∼1500 executors (215 executors with 7 cores

and 8GB memory each) processing the 5000-way par-

titioned data on SURFSara and obtained the resulting

6

https://www.eclipse.org/modeling/emf/

MOMA3N 2018 - Special Session on Model Management And Analytics

770

0 100 200 300 400 500

0 5 10 15

Number of executors

Run time (hours)

●

●

●

●

●

●

●

Run time vs. # executors

●

●

Mode of Execution

local non−cached

local cached

distributed non−cached

Figure 4: Performance for dataset 1.

VSM (5000-part on the distributed file system) in ap-

proximately 17.9 hours.

Discussion. The results indicate that the distribu-

ted execution mode has the potential to increase the

applicability of SAMOS for large datasets. We can

consider this as a first but important step towards the

in-depth analysis of thousands of models for applica-

tion scenarios such as repository mining (notably our

∼7k Ecore metamodel set from GitHub and the ∼93k

Lindholmen UML dataset (Hebig et al., 2016)), large-

scale clone detection and model evolution studies.

Given the preliminary nature of this work, there is

certainly a lot of room for further optimization. These

would involve not only optimizations with respect to

the inner mechanisms of Apache Spark, but also im-

provements within SAMOS, which is itself a research

prototype. Nevertheless, to our knowledge we do not

know of another comparable model analytics appro-

ach or tool in the literature which is capable of such

scalability.

Threats to Validity. In this work, we only deal

with VSM calculation (which we assume to be the

major bottleneck) and leave tackling the rest of the

workflow—such as distance calculation and statistical

analyses—as future work. We plan to proceed with

parallel or scalable techniques for this as well. Anot-

her threat to validity is that our approach at this point

has not been tried on larger scales, so it should be

investigated how it performs with e.g. hundred thou-

sands of models towards Big Data.

6 CONCLUSION AND FUTURE

WORK

In this paper we present a novel approach for distribu-

ted model analytics. We have extended the SAMOS

framework to operate on Apache Spark infrastructure

and exploit its powerful distributed data storage and

processing facilities. Using the SURFSara cluster, we

have performed preliminary experiments using two

sets of Ecore metamodels. On the smaller one, we

have reported in detail the performance of the diffe-

rent execution modes and number of executors. For

the larger one, we have tested the scalability of our

approach in the case of nearly a million model ele-

ments.

There is a large volume of potential future work.

The immediate next step would involve extending the

SAMOS workflow with scalable, distributed techni-

ques for distance calculation and statistical analyses.

More advanced statistical analyses, including pre-

dictive and prescriptive ones, are among the notable

targets for future work. Noting the benefits of NLP-

caching, we also would like to investigate compro-

mising NLP-caching strategies, applicable for large

amounts of data. Moreover, we believe there is a lot

of room for optimization for this approach, which can

be considered in parallel to the other proposed items.

A more in-depth discussion of the distributed vs. lo-

cal execution in terms of performance gain, optimal

number of executors, etc. would also be beneficial.

ACKNOWLEDGMENTS

This work is supported by the 4TU.NIRICT Rese-

arch Community Funding on Model Management and

Analytics in the Netherlands. We also would like to

thank SURF, the collaborative ICT organisation for

Dutch education and research, for providing us with a

computational infrastructure and support.

REFERENCES

Babur,

¨

O. (2016). Statistical analysis of large sets of mo-

dels. In 31th IEEE/ACM Int. Conf. on Automated Soft-

ware Engineering, pages 888–891.

Babur,

¨

O. (2018). Clone detection for ecore metamodels

using n-grams. In The 6th International Conference

on Model-Driven Engineering and Software Develop-

ment, to appear.

Babur,

¨

O. and Cleophas, L. (2017). Using n-grams for the

automated clustering of structural models. In 43rd

Int. Conf. on Current Trends in Theory and Practice

of Computer Science, pages 510–524.

Towards Distributed Model Analytics with Apache Spark

771

Babur,

¨

O., Cleophas, L., and van den Brand, M. (2016).

Hierarchical clustering of metamodels for compara-

tive analysis and visualization. In Proc. of the 12th

European Conf. on Modelling Foundations and Appli-

cations, 2016, pages 3–18.

Babur,

¨

O., Cleophas, L., van den Brand, M., Tekinerdogan,

B., and Aksit, M. (2017). Models, more models and

then a lot more. In Grand Challenges in Modeling, to

appear.

Benelallam, A., G

´

omez, A., Tisi, M., and Cabot, J. (2015).

Distributed model-to-model transformation with ATL

on MapReduce. In Proc. of the 2015 ACM SIGPLAN

Int. Conf. on Software Language Engineering, pages

37–48. ACM.

Burgue

˜

no, L., Wimmer, M., and Vallecillo, A. (2016). To-

wards distributed model transformations with LinTra.

Dean, J. and Ghemawat, S. (2008). MapReduce: simplified

data processing on large clusters. Communications of

the ACM, 51(1):107–113.

Hebig, R., Ho-Quang, T., Chaudron, M. R. V., Robles, G.,

and Fernndez, M. A. (2016). The quest for open

source projects that use UML: mining GitHub. In

Proc. of MODELS 16, pages 173–183. ACM.

Kolovos, D. S., Matragkas, N. D., Korkontzelos, I., Anani-

adou, S., and Paige, R. F. (2015). Assessing the use

of eclipse mde technologies in open-source software

projects. In OSS4MDE@ MoDELS, pages 20–29.

LaValle, S., Lesser, E., Shockley, R., Hopkins, M. S., and

Kruschwitz, N. (2011). Big data, analytics and the

path from insights to value. MIT sloan management

review, 52(2):21.

Manning, C. D., Raghavan, P., and Sch

¨

utze, H. (2008). In-

troduction to Information Retrieval. Cambridge Uni-

versity Press, New York, NY, USA.

Manning, C. D. and Sch

¨

utze, H. (1999). Foundations of

Statistical Natural Language Processing. MIT Press.

Melnik, S., Garcia-Molina, H., and Rahm, E. (2002). Simi-

larity flooding: A versatile graph matching algorithm

and its application to schema matching. In Proc. of the

18th Int. Conf. on Data Engineering, pages 117–128.

IEEE.

Zaharia, M., Xin, R. S., Wendell, P., Das, T., Armbrust,

M., Dave, A., Meng, X., Rosen, J., Venkataraman, S.,

Franklin, M. J., et al. (2016). Apache Spark: A unified

engine for big data processing. Communications of the

ACM, 59(11):56–65.

Zikopoulos, P., Eaton, C., et al. (2011). Understanding big

data: Analytics for enterprise class hadoop and stre-

aming data. McGraw-Hill Osborne Media.

MOMA3N 2018 - Special Session on Model Management And Analytics

772