Augmented Reality Object Selection User Interface for People with Severe Disabilities

Julius Gelšvartas¹, Rimvydas Simutis¹ and Rytis Maskeliūnas²

¹ Automation Department, Faculty of Electrical and Electronics Engineering, Kaunas University of Technology, Studentų st. 50-154, Kaunas, Lithuania

² Centre of Real Time Computer Systems, Kaunas University of Technology, Baršausko st. 59-338a, Kaunas, Lithuania

Keywords:

Augmented Reality, Projection Mapping, Calibration, Structured Light, Projector.

Abstract:

This paper presents a user interface designed for people with severe age-related conditions as well as mobility and speech disabilities. The proposed user interface uses augmented reality to highlight objects in the environment. Augmented reality is created using a projection mapping technique. A depth sensor is used to perform object detection on a planar surface. The same sensor is also used as part of a camera-projector system to perform automatic projection mapping. This paper presents the user interface system architecture. We also provide a detailed description of the camera-projector system calibration procedure.

1 INTRODUCTION

People suffering from severe age-related conditions such as dementia can experience mobility problems and speech pathologies. People suffering from tetraplegia can experience similar symptoms. These conditions drastically impair patients' ability to communicate. Moreover, these patients require some form of constant care that is both time-consuming and expensive. Often, assistive technology is the only way these patients can communicate. Efficient assistive technologies have the potential to improve patients' quality of life and sometimes even to reduce the need for care.

Assistive technology is a very broad term covering adaptive, rehabilitative and assistive devices that help people perform tasks that they were formerly unable to accomplish. Such a system usually consists of two parts, namely the assistive device and the human-computer interaction (HCI) interface. Unfortunately, the assistive devices on the market today are not very efficient. Their efficiency can be improved by developing specialized user interfaces (UIs).

The primary use case of our system is the selection and manipulation of objects placed on a tabletop in front of the user. The UI presented in this paper consists of a projector, a depth camera (such as Microsoft Kinect) and a user action input device. Our system can be used with several user action input devices, namely sip/puff devices, consumer-grade brain-computer interfaces (BCIs) and eye trackers. The depth camera is used to automatically detect the tabletop plane and the objects positioned on that plane. Finally, the projector is used together with the depth camera to create a camera-projector system that performs automatic projection mapping. This makes it possible to highlight all detected objects. The user then selects the desired object, which can be manipulated either by a caregiver or by a robotic arm. For example, when the user selects a glass of juice, a robotic arm grabs it and brings it to the user's mouth so that they can drink from it.

Figure 1: Experimental setup and an example of a highlighted object.

The main goal of assistive technology is either to restore or to substitute an ability that a patient has lost. BCI is arguably the most promising technology for restoring natural abilities, because interaction is performed by thought alone. The BCI research field has advanced significantly in recent years. It has been shown in (Hochberg et al., 2012) that BCI systems can be used to directly control robotic arms. A similar system has been used to control a patient's own limb (Ajiboye et al., 2017). These advances, however, rely on expensive and usually invasive BCI devices. Consumer-grade BCI devices still have very limited capabilities (Maskeliunas et al., 2016).

Gelšvartas, J., Simutis, R. and Maskeliūnas, R.

Augmented Reality Object Selection User Interface for People with Severe Disabilities.

DOI: 10.5220/0006733301560160

In Proceedings of the 4th International Conference on Information and Communication Technologies for Ageing Well and e-Health (ICT4AWE 2018), pages 156-160

ISBN: 978-989-758-299-8

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Systems that do not use direct limb or robotic arm control require a UI to operate, and HCI efficiency directly affects system usability. A standard way of presenting a UI to users is by using conventional displays. This approach is inefficient when the user is communicating or interacting with the environment. Augmented and virtual reality have successfully been used in assistive technologies and rehabilitation (Hondori et al., 2013). Augmented reality is better suited for information presentation, because a virtual reality headset would further isolate a locked-in patient from the environment. Projection mapping is one of the best ways to display an augmented reality UI.

Projection mapping can be either manual or automatic. Manual projection mapping is mostly used in the entertainment and art industries, where the scene is static. The system presented in this paper uses automatic projection mapping, because the environment is dynamic and objects can change their positions. Automatic projection mapping can be performed when the transformation between the depth camera and projector optical frames is known. This transformation is obtained by calibrating the camera-projector system. One way to calibrate a camera-projector system is by using structured light (Moreno and Taubin, 2012). Alternatively, the method proposed in (Kimura et al., 2007) can be used when the camera is already calibrated. This paper uses the practical calibration method proposed in (Yang et al., 2016).

The system presented in this paper is similar to (Benko et al., 2012), but it does not account for deformations caused by physical objects. More advanced dynamic projection mapping methods have been developed in recent years (Sueishi et al., 2015); such systems, however, require a more expensive hardware setup. Figure 1 shows the experimental setup of the presented system.

The remainder of the paper is structured as follows. Section 2 describes the proposed projection-mapping-based system. The results and discussion are presented in Section 3. Section 4 concludes the paper.

2 MATERIALS AND METHODS

The augmented reality UI is constructed and presented using a camera-projector system. The workflow for setting up and calibrating the projection mapping system is as follows:

1. The depth camera and projector are set up in front of the scene. The projector and camera should be rigidly attached to each other. Ideally, the camera and projector should be fixed to a common metal frame or integrated into one housing. This is necessary so that the extrinsic parameters calculated during calibration do not change while the system is operating. If the camera and projector were integrated into a single device, calibration could be performed only once, i.e. as a factory calibration.

2. Calibration of the camera-projector system is performed by placing a board with a circular black dot pattern in front of the projector and camera. The projector displays a similar white dot pattern that appears on the same board. The camera image of the board is recorded, and the positions of the projected and printed dots are estimated. To estimate the system parameters correctly, several images with varying board position and orientation have to be captured. These estimates are used to calculate the intrinsic and extrinsic parameters of the camera-projector system.

In the camera-projector system, the projector is treated as a virtual camera. We use a pinhole camera model to describe both the camera and the projector (i.e. the virtual camera). The pinhole camera intrinsic parameters consist of a 3×3 camera matrix $C$ and a 1×5 distortion coefficient vector $D$. Once the lens distortion described by $D$ has been corrected, $C$ can be extended to a 3×4 camera projection matrix $P$ (Hartley and Zisserman, 2003). Matrix $P$ can be used to project 3D world points in homogeneous coordinates into an image. During calibration we obtain two camera projection matrices: $P_c$ for the camera and $P_p$ for the projector.

The camera-projector system also has extrinsic parameters, which consist of a translation vector $T$ and a rotation matrix $R$. In our case, $T$ and $R$ define the translation and rotation of the projector's optical origin in the camera coordinate system. After calibration, the intrinsic and extrinsic parameters of the camera-projector system are known. These parameters are used to perform automatic projection mapping during system operation.
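The pinhole model and the extrinsic parameters described above can be illustrated with a minimal plain-Python sketch (Python 3.9+, no NumPy). It is not the authors' implementation and all function names are hypothetical. It assumes a projection matrix of the form $P = C\,[I \mid 0]$, applies the five distortion coefficients in the (k1, k2, p1, p2, k3) ordering commonly used by OpenCV, and assumes that `extrinsic_matrix(R, T)` maps camera-frame points into the projector frame. If $R$ and $T$ instead describe the pose of the projector in the camera frame, as stated above, `invert_rigid` yields the camera-to-projector transform. Distortion acts on normalized coordinates before $C$ is applied, which is why it cannot be folded into the linear matrix $P$.

```python
Matrix = list[list[float]]
Vector = list[float]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain-Python matrix product A @ B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def projection_matrix(C: Matrix) -> Matrix:
    """Extend a 3x3 intrinsic matrix C to a 3x4 projection matrix P = C [I | 0]."""
    identity_zero = [[1.0, 0.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0, 0.0],
                     [0.0, 0.0, 1.0, 0.0]]
    return matmul(C, identity_zero)


def extrinsic_matrix(R: Matrix, T: Vector) -> Matrix:
    """Stack rotation R (3x3) and translation T (3) into a 4x4 homogeneous transform."""
    return [R[0] + [T[0]], R[1] + [T[1]], R[2] + [T[2]], [0.0, 0.0, 0.0, 1.0]]


def invert_rigid(M: Matrix) -> Matrix:
    """Invert a rigid transform: [R | T]^-1 = [R^T | -R^T T]."""
    Rt = [[M[j][i] for j in range(3)] for i in range(3)]
    T = [M[i][3] for i in range(3)]
    T_inv = [-sum(Rt[i][k] * T[k] for k in range(3)) for i in range(3)]
    return extrinsic_matrix(Rt, T_inv)


def project(P: Matrix, X: Vector) -> tuple[float, float]:
    """Project a homogeneous 3D point (X, Y, Z, 1) with a 3x4 matrix P to pixels (u, v)."""
    x, y, w = (sum(p * c for p, c in zip(row, X)) for row in P)
    return x / w, y / w


def distort(D: Vector, x: float, y: float) -> tuple[float, float]:
    """Apply (k1, k2, p1, p2, k3) lens distortion to normalized coordinates x = X/Z, y = Y/Z."""
    k1, k2, p1, p2, k3 = D
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return x_d, y_d


# Hypothetical Kinect-like intrinsics (focal lengths and principal point in pixels).
C = [[525.0, 0.0, 319.5],
     [0.0, 525.0, 239.5],
     [0.0, 0.0, 1.0]]
P = projection_matrix(C)
u, v = project(P, [0.1, -0.05, 1.0, 1.0])  # a point 1 m in front of the camera
```

With these hypothetical intrinsics, the point (0.1, -0.05, 1.0) m lands at pixel (372.0, 213.25).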

During system operation, the depth sensor acquires depth images of the scene in the camera optical frame coordinate system. These images have to be transformed into the projector optical frame coordinate system. This transformation consists of the following three steps:

1. The depth image is converted to a 3D point cloud. This is achieved by re-projecting each depth image pixel $(u, v, Z)$ to a 3D point $(X, Y, Z, 1)$ in the depth camera frame. Here $u$ and $v$ are the horizontal and vertical pixel coordinates (column and row indices). $X$ and $Y$ are the 3D point coordinates along the X and Y axes in meters. Depth image pixel values are already in meters and are copied to the Z coordinate of the 3D point. The re-projection uses the camera intrinsic parameters contained in the projection matrix $P_c$ obtained during system calibration, i.e. $X = (u - c_x) Z / f_x$ and $Y = (v - c_y) Z / f_y$, where $f_x$, $f_y$ are the focal lengths and $(c_x, c_y)$ is the principal point.

2. 3D point cloud transformation. Each 3D point is transformed to the projector frame by multiplying it by a 4×4 homogeneous transformation matrix constructed from the camera-projector system extrinsic parameters, i.e. the rotation matrix $R$ and the translation vector $T$.

3. Projecting the point cloud into the projector image. Every transformed 3D point is projected into the projector image by multiplying it with the projection matrix $P_p$ and dividing by the resulting homogeneous coordinate. $P_p$ is the projector projection matrix obtained by calibrating the camera-projector system.
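The three steps above can be sketched as follows. This is a minimal pure-Python illustration, not the authors' code: the names `backproject`, `apply` and `depth_to_projector` are hypothetical, the focal lengths and principal point are read from $P_c$ assuming it has the form $C\,[I \mid 0]$, and `M` is assumed to be the 4×4 camera-to-projector transform built from $R$ and $T$. Pixels without a depth reading (value 0) and points behind the projector are skipped.

```python
Matrix = list[list[float]]


def backproject(u: int, v: int, Z: float, fx: float, fy: float, cx: float, cy: float) -> list[float]:
    """Step 1: pixel (u, v) with metric depth Z -> homogeneous 3D point in the camera frame."""
    return [(u - cx) * Z / fx, (v - cy) * Z / fy, Z, 1.0]


def apply(M: Matrix, X: list[float]) -> list[float]:
    """Multiply a homogeneous point by a 4x4 (or 3x4) matrix."""
    return [sum(m * x for m, x in zip(row, X)) for row in M]


def depth_to_projector(
    depth: list[list[float]], Pc: Matrix, M: Matrix, Pp: Matrix
) -> dict[tuple[int, int], tuple[float, float]]:
    """Map every valid depth pixel (u, v) to projector pixel coordinates."""
    fx, fy, cx, cy = Pc[0][0], Pc[1][1], Pc[0][2], Pc[1][2]
    result = {}
    for v, row in enumerate(depth):          # v indexes image rows
        for u, Z in enumerate(row):          # u indexes image columns
            if Z <= 0.0:                     # no depth reading
                continue
            Xc = backproject(u, v, Z, fx, fy, cx, cy)
            Xp = apply(M, Xc)                # Step 2: camera frame -> projector frame
            if Xp[2] <= 0.0:                 # point behind the projector
                continue
            x, y, w = apply(Pp, Xp)          # Step 3: project with the 3x4 matrix Pp
            result[(u, v)] = (x / w, y / w)
    return result
```

A useful sanity check is that, with identical camera and projector intrinsics and an identity transform, every pixel maps back onto itself.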

The transformed depth images are used for object detection. After the object detection pipeline finishes, the transformed depth image pixels that belong to a detected object are used for projection mapping.
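Turning the projected object pixels into the image sent to the projector could look like the sketch below. It assumes that each detected object is given as a list of projector pixel coordinates, and it splats each point into a small square, because a depth camera often has a lower resolution than the projector, which would otherwise leave gaps in the highlight. The current object is drawn in a different color. The function name, colors and `radius` parameter are hypothetical choices for illustration.

```python
Color = tuple[int, int, int]
BLACK: Color = (0, 0, 0)


def render_highlights(
    objects: dict[int, list[tuple[float, float]]],
    width: int,
    height: int,
    current: int,
    base: Color = (255, 255, 255),
    active: Color = (0, 255, 0),
    radius: int = 1,
) -> list[list[Color]]:
    """Rasterize projected object points into an RGB projector image (row-major)."""
    image = [[BLACK] * width for _ in range(height)]  # unlit background
    for obj_id, pixels in objects.items():
        color = active if obj_id == current else base
        for x, y in pixels:
            cx, cy = round(x), round(y)  # nearest projector pixel
            # Fill a (2*radius+1)^2 square to close gaps between sparse points.
            for py in range(cy - radius, cy + radius + 1):
                for px in range(cx - radius, cx + radius + 1):
                    if 0 <= px < width and 0 <= py < height:
                        image[py][px] = color
    return image
```

Black pixels emit no light, so only the detected objects are illuminated.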

3 RESULTS AND DISCUSSION

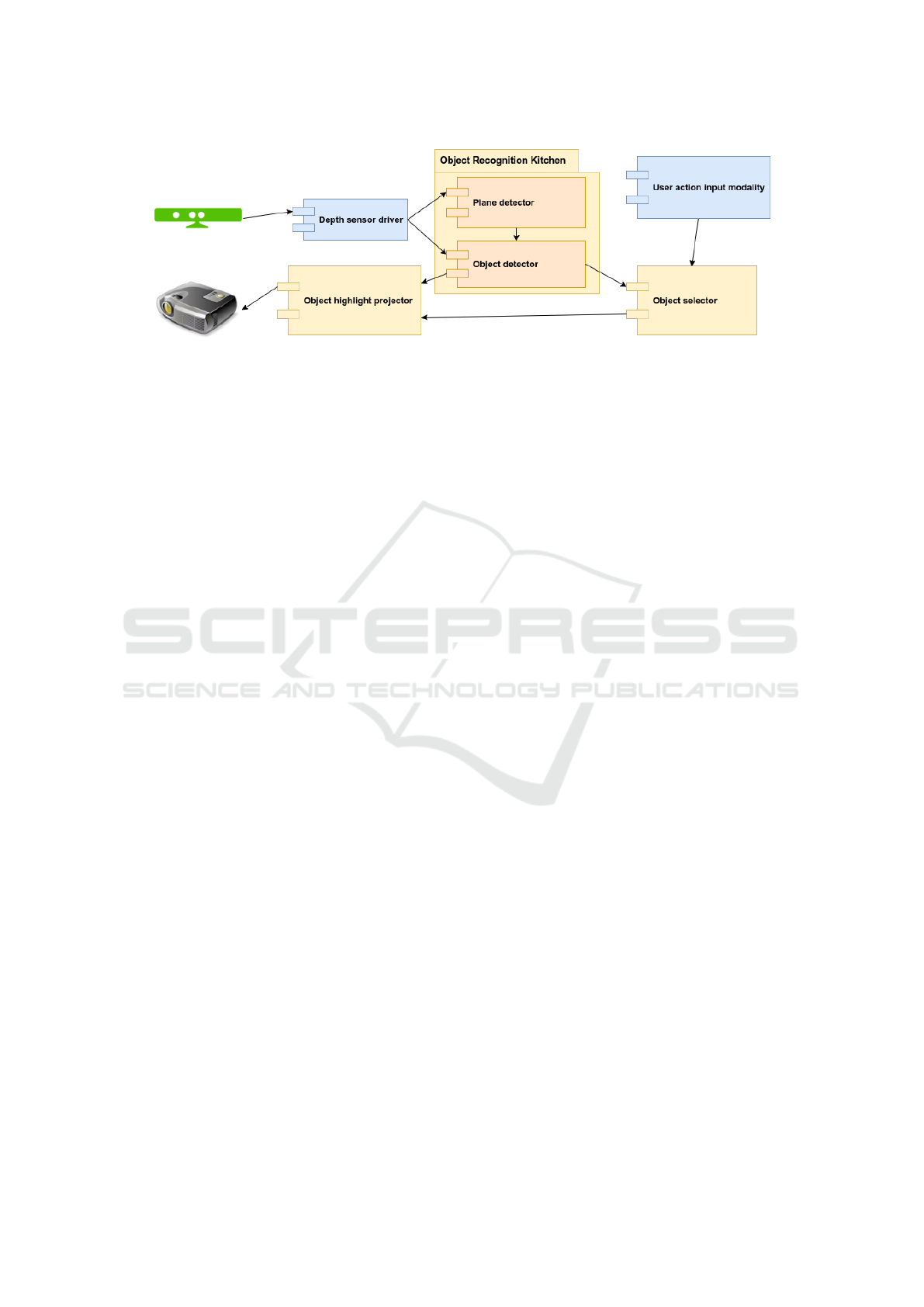

The camera-projector system described in the previous section has been used to create an object selection UI. The system architecture of this UI application is shown in Fig. 2. The UI implementation contains the following components:

1. Depth sensor driver is used to acquire depth images from the Kinect sensor. Note that other depth sensors or stereo cameras can easily be integrated into our system.

2. Tabletop detector from the Object Recognition Kitchen package (Willow Garage, 2017) is used to perform object detection. The detector uses the depth image that has been transformed to the projector optical frame. The object detector used in this paper has two parts, namely a table finder and an object recognizer. The tabletop is detected as the dominant plane in the depth image using the algorithm presented in (Poppinga et al., 2008). All points above the tabletop plane are clustered to identify individual objects; the remaining points are discarded. Object point clusters are used to highlight the objects with the projector. Each cluster is also compared to an object model database to determine the object type. At the moment we do not use the object type or the database mesh; however, this information could be used to render more accurate object highlights.

3. User action input modality is a package that provides an interface abstracting various action input devices. This interface currently supports two actions, namely selecting the current object and moving to the next object in the list. Some devices provide only one action; in this case, moving to the next object is performed automatically at preset intervals and the user only has to select the desired object.

This package has a well-defined interface that can be used to integrate additional assistive devices into the system. The current system supports sip/puff devices, eye trackers and consumer-grade BCI devices.

4. Object selector keeps track of all detected objects. The object list is updated each time a new depth image is processed. The update process removes objects that have been removed from the scene and adds new objects when they appear in the scene.

This package also maintains the index of the current object. The current object index is updated by actions from the User action input modality package.

Finally, this package can also generate external signals when a particular object is selected. An external signal can be used to display information for the caregiver, for example by changing the color of the highlighted object or by showing notifications on a separate screen. The system could also be integrated with additional assistive technologies. For example, the generated external signal could be used by a robotic arm controller that grasps the object and gives it to the user.

5. Object highlight projector receives a list of points belonging to each detected object. These points are used to create a projection mapping image that is shown by the projector. The index of the current object is received from the Object selector, and this object is highlighted in a different color. The projection mapping algorithm is described in detail in Section 2.
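The interplay between the User action input modality and the Object selector can be sketched as a small state machine. This is a hypothetical illustration, not the authors' package: objects are identified by stable string IDs (the paper does not describe how objects are matched between frames, so this is an assumption), the `on_select` callback stands in for the external signal, and `AutoScanner` emits the "next object" action at a preset interval for single-action devices.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Optional


class Action(Enum):
    NEXT = auto()    # move to the next object in the list
    SELECT = auto()  # select the current object


@dataclass
class ObjectSelector:
    on_select: Callable[[str], None]  # external signal, e.g. caregiver display or robotic arm
    objects: list[str] = field(default_factory=list)
    current: int = 0

    def current_object(self) -> Optional[str]:
        return self.objects[self.current] if self.objects else None

    def update(self, detected: list[str]) -> None:
        """Replace the object list after each depth frame, keeping the current object if still present."""
        previous = self.current_object()
        self.objects = list(detected)
        self.current = self.objects.index(previous) if previous in self.objects else 0

    def handle(self, action: Action) -> None:
        if not self.objects:
            return
        if action is Action.NEXT:
            self.current = (self.current + 1) % len(self.objects)
        elif action is Action.SELECT:
            self.on_select(self.objects[self.current])


class AutoScanner:
    """For single-action devices: advance automatically every `interval` seconds."""

    def __init__(self, selector: ObjectSelector, interval: float) -> None:
        self.selector = selector
        self.interval = interval
        self.last = 0.0

    def tick(self, now: float) -> None:
        if now - self.last >= self.interval:
            self.selector.handle(Action.NEXT)
            self.last = now
```

Because the single-action path reuses the same `NEXT` action, the selector does not need to know which input device is connected.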

The proposed system could be further improved by calculating object highlights from 3D models. Highlight visibility could be improved by projecting animated highlights. Another option is to create highlights that account for object texture instead of using a single-color highlight for the whole object.

Figure 2: Object selection user interface system diagram.

Automatic projection mapping produces accurate object highlights. Highlight visibility can, however, be reduced when the scene contains shiny, reflective or transparent objects. The easiest way to overcome these problems is to make sure that the scene contains only diffuse objects. The proposed system is intended to operate in a controlled environment, where suitable objects are chosen by the personnel who set up the system.

The proposed system could also be improved by using a more advanced object detector. The main requirement for such a detector is that it should detect objects in the disparity maps or 3D point clouds produced by the depth camera.
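As an illustration of this requirement, the sketch below extracts object clusters directly from a 3D point cloud, given an already estimated tabletop plane. It is a deliberately simplified stand-in for the Object Recognition Kitchen tabletop detector described earlier, not its implementation. The plane parameters (a unit normal pointing from the table towards the objects, and offset d), the height threshold and the clustering tolerance are hypothetical values, and the O(n²) neighbour search is only suitable for small clouds; a k-d tree would normally be used instead.

```python
import math
from collections import deque

Point = tuple[float, float, float]


def points_above_plane(points: list[Point], normal: Point, d: float, min_height: float = 0.01) -> list[Point]:
    """Keep points whose signed distance n·p + d to the table plane exceeds min_height (meters)."""
    return [p for p in points if sum(n * c for n, c in zip(normal, p)) + d > min_height]


def euclidean_clusters(points: list[Point], tolerance: float = 0.02, min_size: int = 1) -> list[list[Point]]:
    """Group points connected by neighbour-to-neighbour distances of at most `tolerance` (meters)."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            neighbours = [j for j in unvisited if math.dist(points[i], points[j]) <= tolerance]
            for j in neighbours:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:
            clusters.append([points[k] for k in sorted(members)])
    return clusters
```

For example, in a frame where the table is the plane z = 0, calling `points_above_plane(cloud, (0.0, 0.0, 1.0), 0.0)` discards the table points, and each remaining cluster corresponds to one candidate object to highlight.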

4 CONCLUSIONS

This paper presents a UI that uses projection mapping to highlight objects in a scene. Our system is specifically designed for patients with severe disabilities. A detailed description of the system architecture is provided. Projecting object highlights onto real objects creates a more natural user experience, because the user can interact with the assistive UI by looking directly at the scene. The main disadvantage of projection mapping is that the projected light may be poorly visible in very bright environments. This problem can be partially mitigated by using a more powerful projector.

The ideas presented in this paper could also be used with head-up display (HUD) systems. A HUD would provide a more natural UI and would help to avoid problems in bright environments. Available consumer-grade HUD systems, however, are very expensive, whereas projectors are widespread and affordable.

ACKNOWLEDGEMENTS

This research has been supported by European Cooperation in Science and Technology (COST) Action CA15122, Reducing Old-Age Social Exclusion: Collaborations in Research and Policy (ROSEnet).

REFERENCES

Ajiboye, A. B., Willett, F. R., Young, D. R., Memberg, W. D., Murphy, B. A., Miller, J. P., Walter, B. L., Sweet, J. A., Hoyen, H. A., Keith, M. W., et al. (2017). Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. The Lancet, 389(10081):1821–1830.

Benko, H., Jota, R., and Wilson, A. (2012). MirageTable: freehand interaction on a projected augmented reality tabletop. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 199–208. ACM.

Hartley, R. and Zisserman, A. (2003). Multiple View Geometry in Computer Vision. Cambridge University Press.

Hochberg, L. R., Bacher, D., Jarosiewicz, B., Masse, N. Y., Simeral, J. D., Vogel, J., Haddadin, S., Liu, J., Cash, S. S., van der Smagt, P., et al. (2012). Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature, 485(7398):372.

Hondori, H. M., Khademi, M., Dodakian, L., Cramer, S. C., and Lopes, C. V. (2013). A spatial augmented reality rehab system for post-stroke hand rehabilitation. In MMVR, pages 279–285.

Kimura, M., Mochimaru, M., and Kanade, T. (2007). Projector calibration using arbitrary planes and calibrated camera. In Computer Vision and Pattern Recognition, 2007. CVPR'07. IEEE Conference on, pages 1–2. IEEE.

Maskeliunas, R., Damasevicius, R., Martisius, I., and Vasiljevas, M. (2016). Consumer-grade EEG devices: are they usable for control tasks? PeerJ, 4:e1746.

Moreno, D. and Taubin, G. (2012). Simple, accurate, and robust projector-camera calibration. In 3D Imaging, Modeling, Processing, Visualization and Transmission (3DIMPVT), 2012 Second International Conference on, pages 464–471. IEEE.

Poppinga, J., Vaskevicius, N., Birk, A., and Pathak, K. (2008). Fast plane detection and polygonalization in noisy 3D range images. In Intelligent Robots and Systems, 2008. IROS 2008. IEEE/RSJ International Conference on, pages 3378–3383. IEEE.

Sueishi, T., Oku, H., and Ishikawa, M. (2015). Robust high-speed tracking against illumination changes for dynamic projection mapping. In Virtual Reality (VR), 2015 IEEE, pages 97–104. IEEE.

Willow Garage, R. c. (2017). ORK: Object Recognition Kitchen. https://github.com/wg-perception/object_recognition_core.

Yang, L., Normand, J.-M., and Moreau, G. (2016). Practical and precise projector-camera calibration. In Mixed and Augmented Reality (ISMAR), 2016 IEEE International Symposium on, pages 63–70. IEEE.
