Augmented Reality for Respiratory System using Leap Motion Controller

U. Andayani¹, B. Siregar¹, Abdul Gani¹, M. F. Syahputra¹

¹Department of Information Technology, Universitas Sumatera Utara, Jl. Alumni No.3, Medan, Indonesia

Keywords: augmented reality, leap motion controller, hand gesture, motion tracking, gesture understanding.

Abstract: Anatomy is a branch of biology. As applied in the medical field, it is not easy to learn from images alone, or even from physical props, because the processes cannot be observed directly. The anatomical system studied here is the respiratory system, in particular the lungs during breathing. Modern technology is therefore used as a learning method to help study the anatomy of the lung as the main organ of the respiratory system. In this study, the researchers use three-dimensional (3D) anatomy and a multimedia animation system, supported by the Leap Motion Controller, which tracks finger and hand movements to control the system. With this tool, 3D models of the respiratory system can be manipulated with gestures of both of the user's hands. The result is a combination of an Augmented Reality system and the Leap Motion Controller that produces animated movements and virtual 3D objects using a marker sized 21 cm x 29.7 cm. The best results are obtained when the camera detects the marker at a distance of 20 cm to 45 cm, and the camera can detect the marker at tilt angles between 35° and 145°.

1 INTRODUCTION

Anatomy is the study of the structure of the human body (Paulsen, 2013). This knowledge is applied in the medical field. Based on observations, professors find it difficult to explain the structure of the human body to students, because the subject is usually learned by memorizing textbooks even though it requires relatively strong visualization skills. The solution adopted by teachers is to encourage students to go to the laboratory to see props and compare them with an atlas of human anatomy, but the drawback is that the props can only be used in the laboratory. In addition, students become bored when lecturers teach without props. Furthermore, learning can be supported by editing and visualization modes (Edgard, 2010).

Along with the rapid development of Information Technology (IT), almost every area of life is expected to be engaging, so a modern learning system may be one way to improve the ability to learn anatomy. One such approach is to learn anatomy using 3D technology (Nady, 2014). In addition, as a supporting tool, 3D technology can improve user interaction, so that anatomy can be observed in more engaging ways.

Augmented reality is a 3D technology that combines computer-generated objects with the real-world environment. Augmented reality enhances the user's perception of, and interaction with, the real world. Virtual objects display information that the user cannot see directly with the naked eye, and this information helps the user perform tasks in the real world (Kato, 2000). Augmented reality is an innovation in computer vision that can provide visualization and animation of an object model. It is expected that this technology will help faculty in teaching and learning, so that the learning process becomes more interesting and students interact more with the material.

To enable interaction, the system must be able to detect and capture the movement of an object (Giulio, 2014). In this study, the object to be detected and tracked is the palm of the hand. Palm detection is expected to connect the real world with the virtual world in a more realistic augmented reality system.

Using the Leap Motion as a controller can improve human interaction with computers in applications that require accuracy (Frank, 2013). The Leap Motion Controller is a device designed to track the movements of the hand and fingers. While the system is running, users can perform various activities with hand gestures. These hand movements are observed by the sensors of the Leap Motion Controller and used as input that can trigger events in the program. In the 3D human anatomy application, hand gestures make users feel as if they were interacting directly with, and touching, the 3D model of the human anatomy.

Andayani, U., Siregar, B., Gani, A. and Syahputra, M.

Augmented Reality for Respiratory System using Leap Motion Controller.

DOI: 10.5220/0010090519001904

In Proceedings of the International Conference of Science, Technology, Engineering, Environmental and Ramification Researches (ICOSTEERR 2018) - Research in Industry 4.0, pages 1900-1904

ISBN: 978-989-758-449-7

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

A gesture is a static or dynamic movement used as a means of communication between humans and machines, as well as between people who use sign language. In this system, hand gestures observed by the Leap Motion Controller are used to control the system, and certain gestures trigger specific processes. The position of the hands while performing gestures is also tracked by the Leap Motion Controller to support 3D translational motion.

Anatomy learning that harnesses modern technology has also been explored, for example augmented reality for anatomy study (Naoto, 2016), (Natapon, 2016), and (Yudhisthira, 2013). The Leap Motion Controller is already widely used in a variety of systems, such as those developed in (Kasey, 2016), (Masaru, 2013), and (Farhan, 2015).

Based on the above background, the title of this research is "Augmented Reality Anatomy Respiratory System Using Leap Motion Controller For Media Medical Education".

2 CONCEPT DEVELOPMENT

The data used in this study were the marker, captured in real time by the phone camera, and the hand gestures detected by the Leap Motion Controller. Capturing hand movements requires the marker, a mobile phone camera, a Leap Motion Controller in good condition, adequate lighting, and an appropriate distance to the marker.

The methodology need to analyse the problems of

the information obtained in the preceding stage in

order to obtain a method to overcome the problem in

this study. Then perform the system design according

to the analysis of system problems. The following

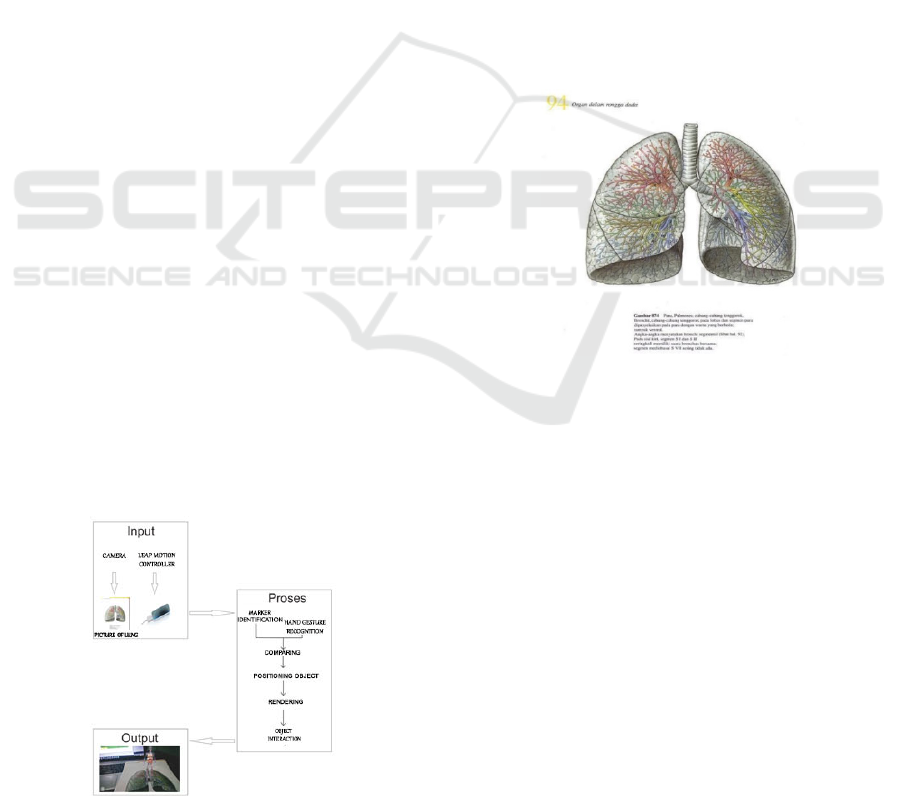

Figure 1 describes the general architecture of the

system design of the study.

Figure 1: General Architecture

3 SYSTEM DESIGN

The research is divided into three parts: input, process and output.

3.1 Input

A marker is placed in front of the camera to display the 3D objects. The Leap Motion Controller is placed under the hand to control the 3D objects.

3.2 Process

3.2.1 Identify Marker

The marker, a printed sheet of paper, is detected by the mobile camera. The hand and finger movements above the Leap Motion Controller are detected by the Leap Motion Controller itself. Both inputs are then processed, and the virtual object is assigned a 3D position and orientation above the marker.

Figure 2: Marker

3.2.2 Database

Next, the marker captured by the camera is matched against the marker images in the database, and the hand gesture is matched in the same way. If a match is found, the system proceeds to approximate the scaling and to place the 3D virtual objects above the marker.
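The matching-then-placing step can be pictured as a lookup keyed by the recognized marker, followed by a uniform scale that keeps the model on the paper. This is a minimal sketch under stated assumptions: the registry `MARKER_DB`, the marker name `respiratory_a4`, the model footprint and the 0.9 margin are all hypothetical, and in the described system the matching itself is done by Vuforia and Unity, not by code like this.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Marker used in this study: A4 paper, 21 cm x 29.7 cm (width x height).
MARKER_SIZE_CM = (21.0, 29.7)


@dataclass(frozen=True)
class ModelEntry:
    """A 3D model registered for a marker, with its footprint at scale 1.0."""
    model_name: str
    footprint_cm: Tuple[float, float]  # (width, depth) on the marker plane


# Hypothetical registry: recognized marker name -> model. Names and sizes are
# illustrative only; the described system keeps its markers in a Vuforia database.
MARKER_DB = {
    "respiratory_a4": ModelEntry("lungs", (40.0, 30.0)),
}


def fit_scale(footprint_cm, marker_cm=MARKER_SIZE_CM, margin=0.9):
    """Largest uniform scale keeping the footprint inside the marker, times a margin."""
    sx = marker_cm[0] / footprint_cm[0]
    sy = marker_cm[1] / footprint_cm[1]
    return margin * min(sx, sy)


def place_model(marker_name: str) -> Optional[Tuple[str, float]]:
    """Return (model name, scale) for a registered marker, or None if unknown."""
    entry = MARKER_DB.get(marker_name)
    if entry is None:
        return None  # unrecognized marker: nothing is placed
    return entry.model_name, fit_scale(entry.footprint_cm)


print(place_model("respiratory_a4"))
print(place_model("unknown"))
```

Taking the minimum of the two ratios is what guarantees that both dimensions of the model fit on the marker; the margin simply leaves a small border.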

3.2.3 Positioning Object

The position of the object on the marker is described by the X coordinate (moving left and right), the Y coordinate (moving up and down) and the Z coordinate (moving forward and back), which together give the virtual position of the 3D objects.
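The X/Y/Z description can be made concrete as a mapping from the tracked palm position to an offset of the object on the marker. A minimal sketch, assuming the Leap Motion convention of millimetre coordinates with x to the right, y up and z toward the user, and a marker frame whose Z points away from the user; the rest height, gain and limits are hypothetical values, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float


# Hypothetical tuning values, not taken from the paper.
REST_HEIGHT_MM = 200.0   # palm height above the device treated as "no lift"
GAIN_CM_PER_MM = 0.05    # 1 mm of hand motion moves the object 0.05 cm
HALF_WIDTH_CM = 10.5     # half of the 21 cm marker width (X limit)
HALF_DEPTH_CM = 14.85    # half of the 29.7 cm marker height (Z limit)
MAX_LIFT_CM = 15.0       # arbitrary ceiling for the Y offset


def clamp(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def palm_to_marker_offset(palm_mm: Vec3) -> Vec3:
    """Map a palm position (mm, sensor frame) to an object offset (cm, marker frame).

    Assumes the sensor frame has x to the right, y up and z toward the user,
    and the marker frame has X right, Y up and Z pointing away from the user.
    """
    dx = palm_mm.x * GAIN_CM_PER_MM                     # right/left
    dy = (palm_mm.y - REST_HEIGHT_MM) * GAIN_CM_PER_MM  # up/down
    dz = -palm_mm.z * GAIN_CM_PER_MM                    # forward/back (axis flipped)
    return Vec3(
        clamp(dx, -HALF_WIDTH_CM, HALF_WIDTH_CM),
        clamp(dy, 0.0, MAX_LIFT_CM),   # the object never sinks below the marker
        clamp(dz, -HALF_DEPTH_CM, HALF_DEPTH_CM),
    )


print(palm_to_marker_offset(Vec3(100.0, 300.0, -50.0)))
```

Clamping to half the A4 dimensions keeps the object over the printed marker, where the camera-side tracking is anchored.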


3.2.4 Rendering

In rendering, all data produced in the modelling process (animation, texturing and lighting, with their parameters) are translated into the output, that is, the final view of the animated model.

3.2.5 Object Interaction

The 3D objects located above the marker move according to the user's hand movements.

3.3 Output

The resulting output is the movement of the objects and updates to the animation, according to the hand gesture instructions.

3.4 Hand Gesture using the Leap Motion

The application is controlled using the Leap Motion Controller. The Leap Motion Controller captures the following hand movements performed by the user:

1. Sliding the hand right or left rotates the object.

2. Grasping stops the rotation of the object.

3. Sliding the hand down displays the oxygen inhalation animation.

4. Sliding the hand up displays the CO2 exhalation animation.

5. Pointing with the hand moves the object, and pointing with the index finger displays the anatomy of a section of the lung.
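The gesture list can be read as a small classifier plus a dispatch table that updates the view. A minimal sketch, assuming per-frame hand features loosely modeled on quantities the Leap Motion SDK reports (palm velocity in mm/s with y up, a grab strength between 0 and 1, and whether only the index finger is extended); the function names, thresholds and rotation speed are hypothetical, and only the index-finger case of gesture 5 is modeled.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Gesture(Enum):
    SWIPE_LEFT = auto()
    SWIPE_RIGHT = auto()
    GRAB = auto()
    SWIPE_DOWN = auto()
    SWIPE_UP = auto()
    POINT = auto()


@dataclass
class LungViewState:
    rotation_deg_s: float = 0.0   # signed rotation speed; 0 means stopped
    animation: str = "idle"       # "idle", "inhale_o2" or "exhale_co2"
    show_section: bool = False    # whether the lung section view is shown


ROTATE_DEG_S = 45.0     # hypothetical rotation speed
SWIPE_MM_S = 500.0      # hypothetical minimum palm speed for a swipe
GRAB_THRESHOLD = 0.9    # hypothetical grab-strength threshold (0..1)


def classify(vx: float, vy: float, grab_strength: float,
             index_only: bool) -> Optional[Gesture]:
    """Rule-based classifier over palm velocity (mm/s, y up) and hand shape."""
    if grab_strength >= GRAB_THRESHOLD:
        return Gesture.GRAB
    if index_only:
        return Gesture.POINT
    if abs(vx) >= abs(vy) and abs(vx) >= SWIPE_MM_S:
        return Gesture.SWIPE_RIGHT if vx > 0 else Gesture.SWIPE_LEFT
    if abs(vy) >= SWIPE_MM_S:
        return Gesture.SWIPE_UP if vy > 0 else Gesture.SWIPE_DOWN
    return None  # no gesture recognized in this frame


def apply_gesture(state: LungViewState, g: Gesture) -> None:
    """Apply one gesture to the view state, following the gesture list above."""
    if g is Gesture.SWIPE_RIGHT:
        state.rotation_deg_s = ROTATE_DEG_S
    elif g is Gesture.SWIPE_LEFT:
        state.rotation_deg_s = -ROTATE_DEG_S
    elif g is Gesture.GRAB:
        state.rotation_deg_s = 0.0
    elif g is Gesture.SWIPE_DOWN:
        state.animation = "inhale_o2"
    elif g is Gesture.SWIPE_UP:
        state.animation = "exhale_co2"
    elif g is Gesture.POINT:
        state.show_section = True


state = LungViewState()
# Frames: swipe right, then grab, then swipe down.
for frame in [(800.0, 20.0, 0.1, False), (0.0, 0.0, 0.95, False), (10.0, -600.0, 0.0, False)]:
    g = classify(*frame)
    if g is not None:
        apply_gesture(state, g)
print(state)
```

Checking grab and pointing before swipes is a design choice: a closed or pointing hand that happens to move quickly is then not misread as a swipe.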

Figure 3: Leap Motion Controller Setting

4 RESULT AND DISCUSSION

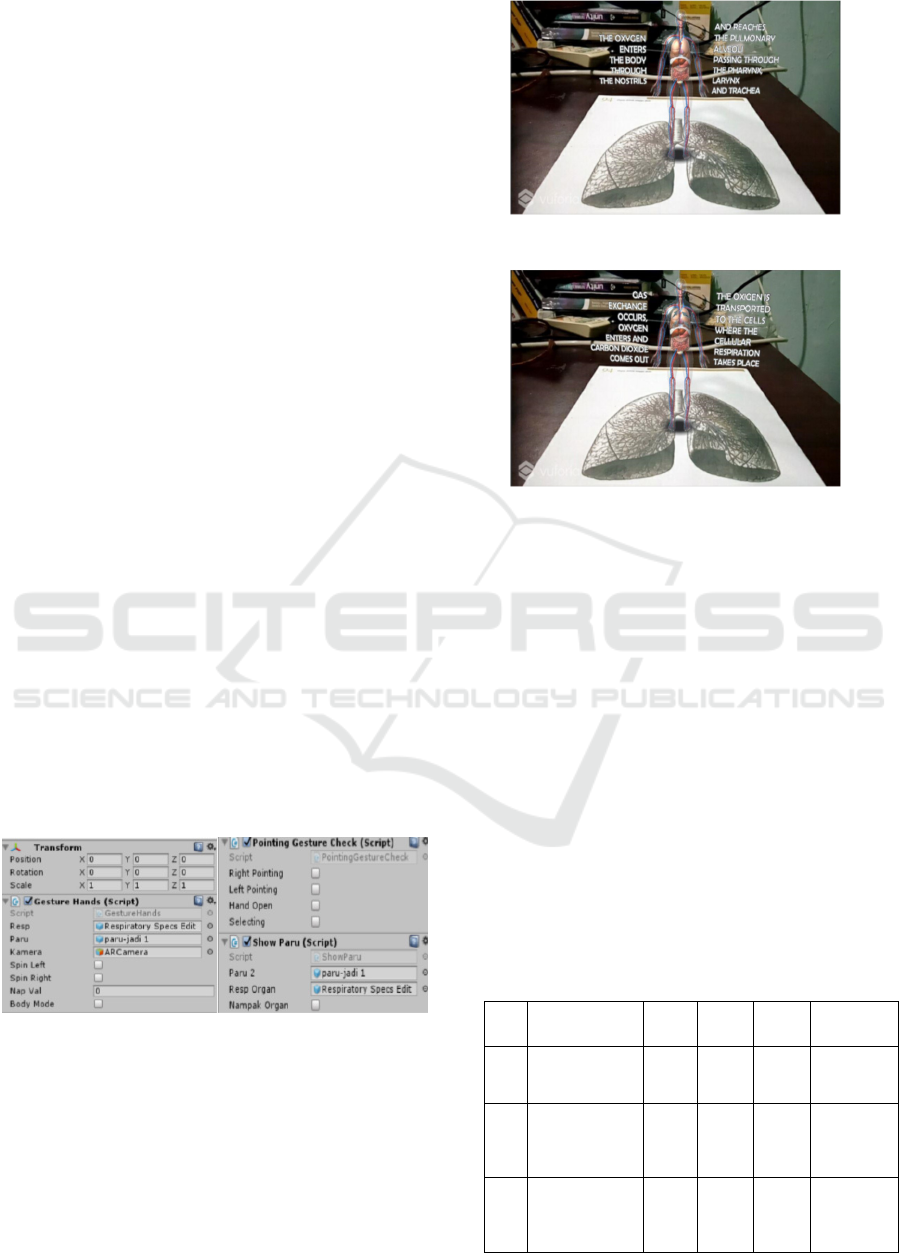

The following figures show animations of the lung organs, such as breathing in oxygen and releasing CO2.

Figure 4: Animation of Breathing Oxygen

Figure 5: Releasing CO2

The purpose of testing the system is to determine how well it achieves the goal of using the Leap Motion Controller as a tool for a 3D human anatomy recognition system. The tests are divided into two parts.

4.1 Recognition Test of the Leap Motion Controller

The recognition test of the Leap Motion Controller determines how accurately the Leap Motion detects user gestures. Testing is done by connecting the Leap Motion to a laptop with a USB cable. Once connected, the Leap Motion's indicator light turns on automatically and the device starts detecting hand movements. Each test was carried out ten times in order to determine the accuracy of the system in this study.

Table 1: Leap Motion Controller Scenario Test

| No. | Test Scenario | No. of Tests | Correct | False | Test Result |
|---|---|---|---|---|---|
| 1 | Recognition of fingers | 10 | 8 | 2 | Detected and unstable |
| 2 | Recognition of the right hand (handheld) | 10 | 10 | 0 | Detected and stable |
| 3 | Recognition of the left hand (handheld) | 10 | 10 | 0 | Detected and stable |
| 4 | Recognition of horizontal and vertical movement of the right hand | 10 | 10 | 0 | Detected and stable |
| 5 | Recognition of horizontal and vertical movement of the left hand | 10 | 10 | 0 | Detected and stable |
| 6 | Recognition of the right hand pointing at an object | 10 | 7 | 3 | Detected and unstable |
| 7 | Recognition of the left hand pointing at an object | 10 | 8 | 2 | Detected and unstable |
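The Correct counts in Table 1 can be summarized as per-scenario and overall detection rates. A minimal sketch; the labelling rule in `result_label` is inferred from the tables in this paper (10/10 rows are marked stable, 0/10 rows not detected, partial rows unstable) and is an assumption, not a rule the authors state.

```python
# (scenario, number of tests, correct) transcribed from Table 1.
TABLE_1 = [
    ("Fingers", 10, 8),
    ("Right hand (handheld)", 10, 10),
    ("Left hand (handheld)", 10, 10),
    ("Right hand horizontal/vertical movement", 10, 10),
    ("Left hand horizontal/vertical movement", 10, 10),
    ("Right hand pointing at object", 10, 7),
    ("Left hand pointing at object", 10, 8),
]


def result_label(tests: int, correct: int) -> str:
    """Label a row: all trials correct, none correct, or something in between."""
    if correct == tests:
        return "Detected and stable"
    if correct == 0:
        return "Not detected"
    return "Detected and unstable"


def overall_accuracy(rows) -> float:
    """Percentage of correct detections over all scenarios."""
    total = sum(tests for _, tests, _ in rows)
    correct = sum(c for _, _, c in rows)
    return 100.0 * correct / total


for name, tests, correct in TABLE_1:
    print(f"{name}: {100 * correct / tests:.0f}% correct, {result_label(tests, correct)}")
print(f"Overall: {overall_accuracy(TABLE_1):.0f}%")
```

Across the seven scenarios this gives 63 correct detections out of 70 trials, or 90%, with the finger and pointing scenarios accounting for all seven false detections.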

The experiments show that hand and finger movements are detected and that everything runs well. Besides detecting hand movements, the Leap Motion Controller was also tested under different light intensities.

Table 2: Leap Motion Controller Detection under Different Light Intensities

| No. | Light Intensity (lux) | No. of Tests | Correct | False | Test Result |
|---|---|---|---|---|---|
| 1 | 0 | 10 | 0 | 0 | Not detected |
| 2 | 43.50 | 10 | 3 | 7 | Detected and unstable |
| 3 | 83.68 | 10 | 6 | 4 | Detected and unstable |
| 4 | 138.005 | 10 | 9 | 1 | Detected and unstable |
| 5 | 141.828 | 10 | 10 | 0 | Detected and stable |
| 6 | 146.74 | 10 | 10 | 0 | Detected and stable |
| 7 | 153.294 | 10 | 10 | 0 | Detected and stable |
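The light-intensity results can be reduced to a single threshold: the lowest tested intensity from which every brighter test was fully correct. A minimal sketch, taking the intensities printed as 138 005, 141 828 and 153 294 to be 138.005, 141.828 and 153.294 lux (an assumption based on the other values in the column); `min_stable_lux` is a hypothetical helper.

```python
# (light intensity in lux, number of tests, correct) transcribed from Table 2.
TABLE_2 = [
    (0.0, 10, 0),
    (43.50, 10, 3),
    (83.68, 10, 6),
    (138.005, 10, 9),
    (141.828, 10, 10),
    (146.74, 10, 10),
    (153.294, 10, 10),
]


def min_stable_lux(rows):
    """Lowest tested intensity at and above which every test was fully correct.

    Scans from the brightest row downward and stops at the first row with any
    false detection. Returns None if even the brightest row was not fully correct.
    """
    threshold = None
    for lux, tests, correct in sorted(rows, reverse=True):
        if correct != tests:
            break
        threshold = lux
    return threshold


for lux, tests, correct in TABLE_2:
    print(f"{lux:>8} lux: {correct}/{tests} correct")
print("Stable from", min_stable_lux(TABLE_2), "lux")
```

On these values detection is fully correct from 141.828 lux upward. Intensities between the tested points were not measured, so the true threshold could lie anywhere between 138.005 and 141.828 lux.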

4.2 Augmented Reality Marker Testing

Marker testing was conducted to determine how well the marker image is detected. Testing is done by connecting the camera with a USB cable; the mobile camera then automatically detects the marker image and displays the object. The marker was first registered on the Vuforia website, and the Vuforia marker database was then imported into Unity. The marker size used for this application is 21 cm x 29.7 cm (A4 paper size). The marker testing is divided into two parts.

4.2.1 Distance Detection

These tests were conducted to determine the detection level of the marker image at different distances, that is, how far the camera can be from the marker and still detect it.

Table 3: Distance Marker Detection Test

| No. | Distance (cm) | No. of Tests | Correct | False | Test Result |
|---|---|---|---|---|---|
| 1 | 15 | 10 | 10 | 0 | Detected and stable |
| 2 | 20 | 10 | 10 | 0 | Detected and stable |
| 3 | 25 | 10 | 10 | 0 | Detected and stable |
| 4 | 30 | 10 | 10 | 0 | Detected and stable |
| 5 | 35 | 10 | 10 | 0 | Detected and stable |
| 6 | 45 | 10 | 10 | 0 | Detected and stable |
| 7 | 50 | 10 | 8 | 2 | Unstable |
| 8 | 55 | 10 | 0 | 10 | Not detected |

The distance tests show that at some distances the marker cannot be recognized because the marker image captured by the camera is not clear enough; the camera detects the marker stably at distances between 20 cm and 45 cm.
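One way to read "not clear enough" is through the pinhole camera model, in which the size of the marker's image is inversely proportional to its distance, so its printed details eventually become too small for the tracker to resolve. This is offered as an illustration, not as the authors' analysis; the focal length `FOCAL_PX` and the detail size `DETAIL_CM` are hypothetical.

```python
def apparent_size_px(size_cm: float, distance_cm: float, focal_px: float) -> float:
    """Pinhole camera model: image size (px) = focal length (px) * size / distance."""
    if distance_cm <= 0:
        raise ValueError("distance must be positive")
    return focal_px * size_cm / distance_cm


FOCAL_PX = 1000.0        # hypothetical phone-camera focal length, in pixels
MARKER_WIDTH_CM = 21.0   # A4 marker width used in the study
DETAIL_CM = 0.5          # hypothetical size of a small printed detail on the marker

for d in (20, 45, 55):
    w = apparent_size_px(MARKER_WIDTH_CM, d, FOCAL_PX)
    detail = apparent_size_px(DETAIL_CM, d, FOCAL_PX)
    print(f"{d} cm: marker {w:.0f} px wide, detail {detail:.1f} px")
```

Moving from 20 cm to 55 cm shrinks every dimension of the marker's image by a factor of 2.75 (55/20). Where the tracker stops finding enough features depends on the camera and on Vuforia's internals, which this sketch does not model.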

4.2.2 Slope Distance Detection

These tests were conducted to determine the detection level of the marker image at different tilt angles, that is, how far the camera can be tilted relative to the marker and still detect it.


Table 4: Tilt Marker Detection Test

| No. | Slope Angle (°) | No. of Tests | Correct | False | Test Result |
|---|---|---|---|---|---|
| 1 | 0 | 10 | 0 | 10 | Not detected |
| 2 | 25 | 10 | 7 | 3 | Detected and unstable |
| 3 | 35 | 10 | 10 | 0 | Detected and stable |
| 4 | 75 | 10 | 10 | 0 | Detected and stable |
| 5 | 110 | 10 | 10 | 0 | Detected and stable |
| 6 | 135 | 10 | 10 | 0 | Detected and stable |
| 7 | 145 | 10 | 10 | 0 | Detected and stable |
| 8 | 155 | 10 | 6 | 4 | Detected and unstable |
| 9 | 180 | 10 | 0 | 10 | Not detected |

The tilt tests show that at some angles the marker cannot be recognized because the marker image captured by the camera is not clear enough; the camera detects the marker stably at angles between 35° and 145°.
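A simple foreshortening model helps explain why the tilt results are roughly symmetric. A minimal sketch, assuming the slope angle is measured between the camera's line of sight and the marker plane (so 90° is head-on and 0° or 180° is edge-on); under that assumption the visible extent of the marker along the tilt direction scales with |sin θ|. The angle definition and the helper names are assumptions, not taken from the paper.

```python
import math

# (slope angle in degrees, number of tests, correct) transcribed from Table 4.
TABLE_4 = [(0, 10, 0), (25, 10, 7), (35, 10, 10), (75, 10, 10), (110, 10, 10),
           (135, 10, 10), (145, 10, 10), (155, 10, 6), (180, 10, 0)]


def foreshortening(angle_deg: float) -> float:
    """Fraction of the marker's frontal extent visible along the tilt direction.

    Assumes the angle is measured between the camera's line of sight and the
    marker plane, so 90 degrees is head-on and 0 or 180 degrees is edge-on.
    """
    return abs(math.sin(math.radians(angle_deg)))


def stable_range(rows):
    """Smallest and largest angle among rows where every trial was correct.

    Assumes the stable rows form one contiguous block, as they do in Table 4.
    """
    stable = [a for a, tests, correct in rows if correct == tests]
    return (min(stable), max(stable)) if stable else None


for angle, tests, correct in TABLE_4:
    print(f"{angle:>3} deg  visible={foreshortening(angle):.2f}  correct={correct}/{tests}")
print(stable_range(TABLE_4))  # (35, 145)
```

Under this assumption, 25° and 155° leave the same visible fraction (about 0.42) and were both unstable, while 35° and 145° (about 0.57) were both stable, which matches the 35° to 145° range reported above.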

5 CONCLUSIONS

1. The Leap Motion Controller detects hand movements correctly in this application and drives the movement of the 3D animation.

2. The calculated average value is 2.916, indicating that users agree that the 3D human anatomy application using the Leap Motion Controller is a highly interactive system.

3. The Leap Motion Controller can be used as a tool for building a human anatomy recognition system, especially for the lungs, with the help of a 3D augmented reality marker measuring 21 cm x 29.7 cm. The best results are obtained when the camera detects the marker at a distance of 20 cm to 45 cm, and the camera can also be tilted during marker detection, with the best results at angles between 35° and 145°.

Further research is expected to improve the use of the Leap Motion Controller so that the display follows the user's movements more closely. Closer correspondence between the user's hand gestures and what the 3D system displays can make the system's gestures easier to learn. In addition, anatomical details can be developed further based on the functions of the organs, so that the visualization of human anatomy using the Leap Motion Controller can be more detailed.

ACKNOWLEDGEMENTS

This research was supported by Universitas Sumatera Utara. We thank all the faculty, staff members and laboratory technicians of the Information Technology Department, whose services made this research a success.

REFERENCES

Lamounier Jr., E., Bucioli, A., Cardoso, A., Andrade, A., Soares, A., 2010. On the Use of Augmented Reality Techniques in Learning and Interpretation of Cardiologic Data.

Weichert, F., Bachmann, D., Rudak, B., Fisseler, D., 2013. Analysis of the Accuracy and Robustness of the Leap Motion Controller. Sensors, 13(5), 6380-6393. doi:10.3390/s130506380.

Marin, G., Dominio, F., Zanuttigh, P., 2014. Hand Gesture Recognition with Leap Motion and Kinect Devices. IEEE International Conference on Image Processing (ICIP). ISBN: 978-1-4799-5751-4. DOI: 10.1109/ICIP.2014.7025313.

Kato, H., Billinghurst, M., Poupyrev, I., Imamoto, K., Tachibana, K., 2000. Virtual Object Manipulation on a Table-Top AR Environment.

Carlson, K. J., Gagnon, D. J., 2016. Augmented Reality Simulation Integrated Education in Health Care. 12, 123-127.

Okada, M., Soga, M., Kawagoe, T., Taki, H., 2013. Development of a Skill Learning Environment for Posture and Motion with Bone Life-size Models using Augmented Reality.

Hoyek, N., Collet, C., Rienzo, F. D., Almeida, M. D., Guillot, A., 2014. Effectiveness of Three-dimensional Digital Animation in Teaching Human Anatomy in an Authentic Classroom Context.

Ienaga, N., Bork, F., Meerits, S., Mori, S., Fallavollita, P., Navab, N., Saito, H., 2016. First Deployment of Diminished Reality for Anatomy Education.

Pantuwong, N., 2016. A Tangible Interface for 3D Character Animation using Augmented Reality Technology.

Paulsen, F., Waschke, J., 2013. Sobotta Atlas of Human Anatomy: General Anatomy and Musculoskeletal. Translators: Brahm U. Publisher. Jakarta: EGC.

Buana, Y. C., Fendi Aji, P., 2013. Augmented Reality for Anatomy Study with Speech Recognition. Universitas Sebelas Maret.

Luthfi, F., 2015. Anatomi Manusia Berdimensi Tiga Menggunakan Leap Motion Controller [Three-Dimensional Human Anatomy Using the Leap Motion Controller].
