Separation of Concerns in Heterogeneous Cloud Environments

Dapeng Dong, Huanhuan Xiong and John P. Morrison

Boole Centre for Research in Informatics, University College Cork, Western Gateway Road,

Cork, Ireland

Keywords:

Service Management, Resource Management, Cloud, HPC.

Abstract:

The majority of existing cloud service management frameworks implement tools, APIs, and strategies for

managing the lifecycle of cloud applications and/or resources. They are provided as a self-service interface

to cloud consumers. This self-service approach implicitly allows cloud consumers to have full control over

the management of applications as well as the underlying resources such as virtual machines and containers.

This subsequently narrows down the opportunities for Cloud Service Providers to improve resource utilization,

power efficiency and potentially the quality of services. This work introduces a service management framework centred around the notion of Separation of Concerns. The proposed service framework addresses the potential conflicts between cloud service management and cloud resource management while maximizing user

experience and cloud efficiency on each side. This is particularly useful as the current homogeneous cloud is

evolving to include heterogeneous resources.

1 INTRODUCTION

There are many cloud service management frameworks in existence today. They provide the means to specify and to compose services into applications for deployment in cloud environments. In essence, these frameworks embody service descriptions, deployment specifications, and the specific resources required to run each service. Current frameworks support a combination of both the IaaS and PaaS interaction models: resources for each service are acquired separately without reference to the needs of other services, and once they are acquired, the framework manages both the application lifecycle and the underlying resources. Consequently, the Cloud Service Provider loses control over the management of these resources until they are freed by the framework.

The current trend in cloud computing is to create a service-oriented architecture for the evolving heterogeneous cloud. In this respect, it is imperative to maintain a separation between application lifecycle management and resource management. This separation of concerns makes it possible for the user to concentrate on "what" needs to be done and for the cloud service provider to concentrate on "how" it should be done, which in turn makes it possible to implement continuous improvement, in terms of resource utilization and service delivery, at the resource level. The proposed framework is twofold, comprising a Service Description Language and its associated implementation. Its goals are (1) to facilitate application lifecycle management by the cloud consumer and resource management by the Cloud Service Provider, ensuring a proper separation of concerns between the two; and (2) to create application Blueprints consisting of many services, taking account of the entire collection of services to determine an optimal, and potentially heterogeneous, set of resources to implement them.

The remainder of the paper is organized as follows. We discuss the related work in Section 2. The proposed concept of separation of concerns is elaborated in Section 3. The realization of the proposed concept is presented in Section 4. In Section 5, conclusions are drawn and future directions are discussed.

2 RELATED WORK

According to the cloud computing service delivery model, Infrastructure as a Service (IaaS) is described as the capability to provision processing, storage, network, and other fundamental computing resources on which the consumer is able to deploy and run arbitrary software, such as operating systems and applications. Generally speaking, the management and control of these resources is given to the consumer as well, such as

Dong, D., Xiong, H. and Morrison, J.

Separation of Concerns in Heterogeneous Cloud Environments.

DOI: 10.5220/0006385507750780

In Proceedings of the 7th International Conference on Cloud Computing and Services Science (CLOSER 2017), pages 747-752

ISBN: 978-989-758-243-1

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


initiating, starting and/or terminating virtual machines and networks. A large number of cloud providers offer infrastructure resources as services to consumers in their own specific ways, which commonly causes "vendor lock-in" issues. Fortunately, several efforts have been made towards a general framework/description of resource management across different cloud vendors, in order to improve, in an abstract way, the interactions among various cloud providers. Some of them have been widely adopted by cloud computing communities, such as Apache jClouds (jCloud, 2017) and Apache Libcloud (Libcloud, 2017). For example, Apache jClouds offers a number of services for resource management operations in Java, providing support for around 30 providers including most of the major cloud vendors such as Amazon Web Services, Microsoft Azure Storage, OpenStack, Docker, and Google App Engine.

On the other hand, Platform as a Service (PaaS) allows consumers to deploy applications onto the cloud infrastructure in two different ways: (1) the consumers manage the cloud application and the providers manage the underlying infrastructure; (2) the consumers manage the lifecycle of cloud applications together with their associated underlying resources. Most existing PaaS frameworks use the second approach.

Existing frameworks manage the lifecycle of

cloud applications together with their associated un-

derlying resources. In this section, we use three repre-

sentative frameworks to highlight the tightly coupled

nature of their integrated management approaches at

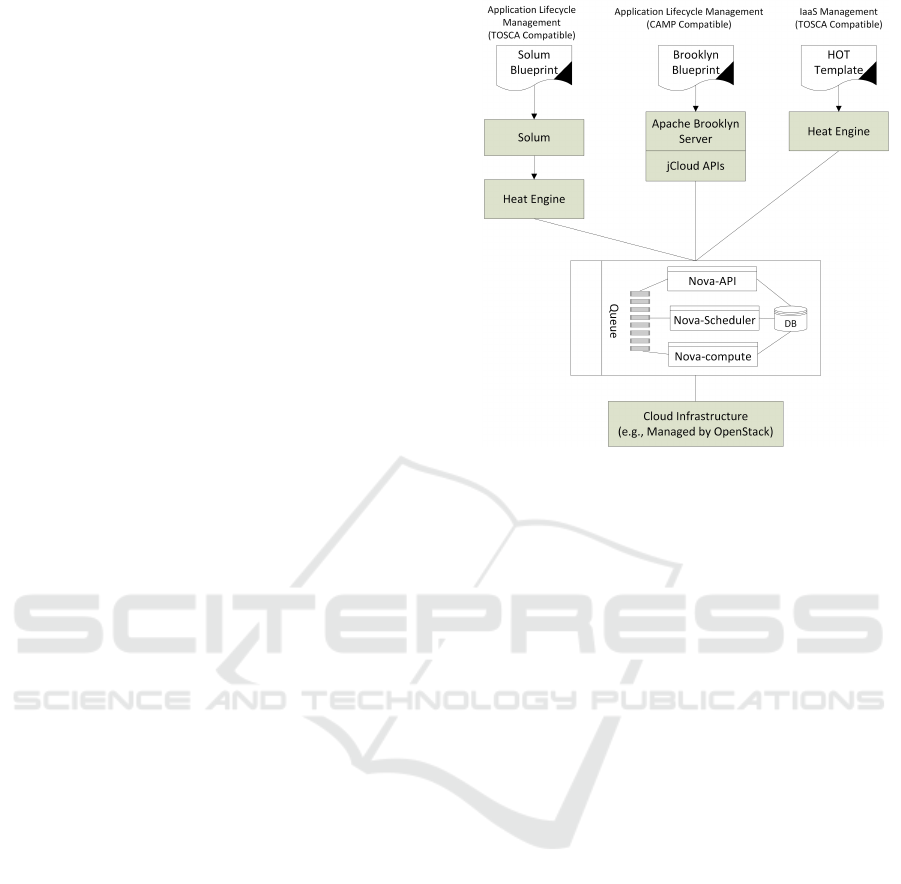

two levels within the cloud. Figure 1 shows the li-

fecycle management architectures for the OpenStack

Solum (Solum, 2017), Apache Brooklyn (Brooklyn,

2017), and OpenStack Heat frameworks (Heat, 2017).

These frameworks provide a set of tools and APIs in

the form of a self-service interface to allow cloud con-

sumers to interact with the cloud. Solum and Brook-

lyn operate at the PaaS level while Heat operate pre-

dominantly at the IaaS level.

Figure 1: Overview of cloud application/resource lifecycle management of OpenStack Solum, Apache Brooklyn, and OpenStack Heat.

The Solum and Brooklyn frameworks allow cloud consumers to create blueprints using an appropriate Service Description Language (SDL), and to deploy blueprints in clouds. SDLs are used to describe the characteristics of application components, deployment scripts, dependencies, locations, logging, policies, and so on. The Solum engine takes a blueprint as an input and converts it to a Heat Orchestration Template (HOT); this template can be understood by the application and resource management engine (Heat). The Heat engine, thereafter, carries out the application and resource deployment processes by calling the corresponding service APIs that are provided by the underlying cloud infrastructure framework (such as OpenStack).

In contrast, the Brooklyn engine converts a blueprint into a series of jClouds API calls that interact directly with the underlying cloud infrastructure. For example, an API call for creating a Virtual Machine (VM) in OpenStack will be sent to the nova-api service. The nova-api service subsequently notifies the nova-scheduler service to determine where the VM should be created. The final step is to notify the nova-compute service to carry out the actual VM deployment. This "Request and Response" approach is simple, robust, and efficient. However, it should be noted that each request is processed independently, making it impossible to consider the relative placement of VMs associated with multiple requests. Consequently, this traditional approach does not support the optimal deployment of a group of services, such as those in a Brooklyn blueprint. This limitation is not specific to VM placement; it also applies to current container deployment technologies.
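The effect of processing each request independently can be illustrated with a toy model. The following sketch is not how nova-scheduler works; the host names, the rack layout, and both placement functions are hypothetical and exist only to contrast per-request placement with placement of a whole group of requests.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    rack: str
    free_slots: int


def place_independently(hosts, n_vms):
    """Handle each request in isolation: pick the host with the most free slots."""
    placement = []
    for _ in range(n_vms):
        host = max(hosts, key=lambda h: h.free_slots)
        if host.free_slots == 0:
            raise RuntimeError("no capacity left")
        host.free_slots -= 1
        placement.append(host.name)
    return placement


def place_as_group(hosts, n_vms):
    """Handle the whole group at once: prefer a single rack that fits all VMs."""
    racks = {}
    for h in hosts:
        racks.setdefault(h.rack, []).append(h)
    for rack_hosts in racks.values():
        if sum(h.free_slots for h in rack_hosts) >= n_vms:
            placement = []
            for h in rack_hosts:
                while h.free_slots > 0 and len(placement) < n_vms:
                    h.free_slots -= 1
                    placement.append(h.name)
            return placement
    # No single rack is large enough: fall back to per-request placement.
    return place_independently(hosts, n_vms)


def make_hosts():
    """Hypothetical inventory: two small hosts in rack-a, one larger in rack-b."""
    return [Host("a1", "rack-a", 2), Host("a2", "rack-a", 2), Host("b1", "rack-b", 3)]


def racks_used(hosts, placement):
    """Return the set of racks touched by a placement."""
    rack_of = {h.name: h.rack for h in hosts}
    return {rack_of[name] for name in placement}
```

With this inventory, three VMs placed one request at a time end up spread over both racks, whereas the group-aware function keeps them in one rack. Only the second function can take relative placement into account, because it sees all requests together.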

3 THE CONCEPT OF SEPARATION OF CONCERNS

From the previous section, two striking characteris-

tics of traditional frameworks are apparent, the first is

that they do not support the separation of concerns be-

tween the various actors of the system, and the second

CLOSER 2017 - 7th International Conference on Cloud Computing and Services Science

748

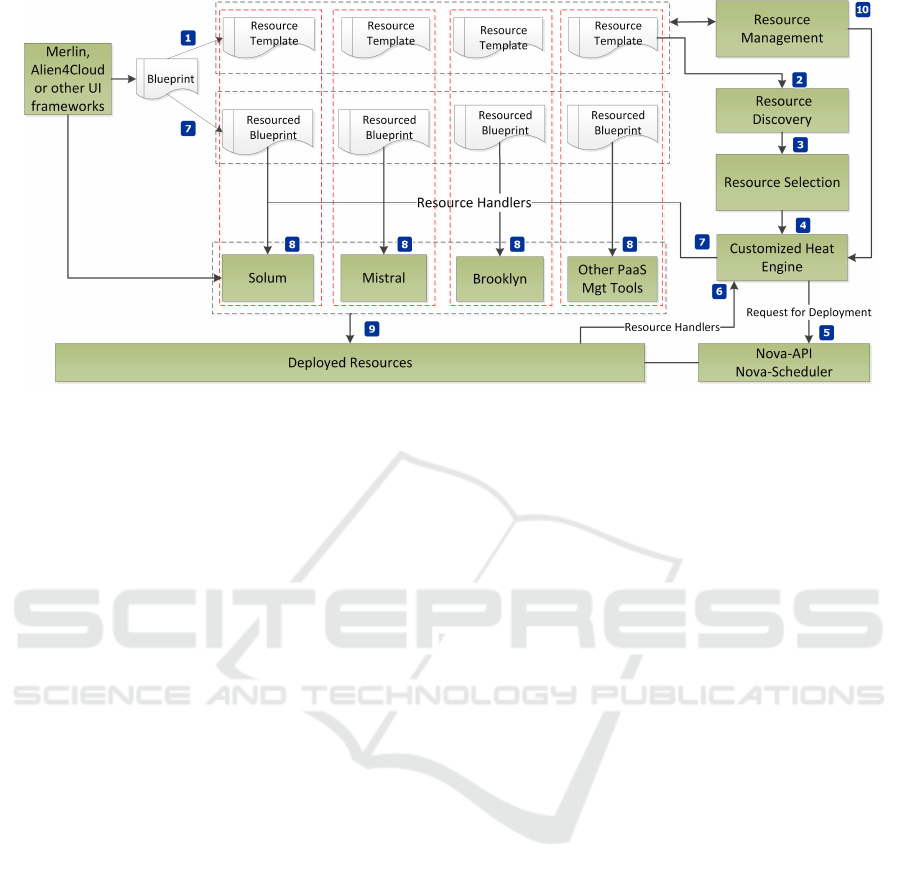

Figure 2: The Service Delivery Model with Regards to the Concept of Separation of Concerns.

is that global optimizations between multiple services

are not possible due to the way in which resource re-

quests are individually processed.

The proposed approach tackles both these limitations directly. It explicitly introduces separation of concerns between cloud consumers and cloud service providers, and it provides direct architectural support for considering optimal resource requests from multiple interacting services simultaneously.

In order to separate the concerns of cloud application lifecycle management and resource lifecycle management, a Blueprint in conjunction with a Service Catalogue is used to construct a Resource Template, which will be used to identify/create appropriate resources. This process is shown in Figure 2 (label 1). This also implies that a Service Description Language (SDL) is needed and created in such a way that a Blueprint written in the SDL can be transformed to framework-specific blueprints without losing generality.
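As a rough illustration of this first step, the sketch below combines a Blueprint with Service Catalogue entries to produce a Resource Template. The dictionary layout, the field names (`resource_spec`, `constraints`), and the function `build_resource_template` are assumptions made for this example; they are not the CloudLightning SDL or its data model.

```python
def build_resource_template(blueprint, catalogue):
    """Combine a Blueprint with Service Catalogue entries into one template.

    The template lists the resource needs of all services together, so that
    a provider-side component can consider them as a group.
    """
    requests = []
    for service in blueprint["services"]:
        entry = catalogue[service["name"]]  # KeyError if the service is unknown
        requests.append({
            "service": service["name"],
            "resource": dict(entry["resource_spec"]),
            "constraints": service.get("constraints", {}),
        })
    return {"blueprint": blueprint["name"], "requests": requests}


# Hypothetical catalogue and Blueprint, for illustration only.
catalogue = {
    "web": {"resource_spec": {"type": "vm", "vcpus": 2}},
    "solver": {"resource_spec": {"type": "container", "accelerator": "gpu"}},
}
blueprint = {
    "name": "demo-app",
    "services": [
        {"name": "web"},
        {"name": "solver", "constraints": {"near": "web"}},
    ],
}
template = build_resource_template(blueprint, catalogue)
```

The point of the sketch is that the Blueprint names services and constraints only, while the resource details come from the catalogue, so the consumer never has to state which concrete resources are used.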

A Blueprint deployment starts by sending the Resource Template to the Resource Discovery and Resource Selection components as indicated in Figure 2 (labels 2 and 3). After the resources have been identified, they are used together with the information from the Blueprint and Service Catalogue to construct a Resourced Blueprint. Forming a Resourced Blueprint is done in the following manner.

The Resource Template is sent to the resource lifecycle management engine (OpenStack Heat is the default engine, but other engines could be used). The modified Heat engine carries out the actual resource deployment on the infrastructure. Some of the resource information (for example, the physical server on which a VM should be located) must be embedded into the appropriate resource request API calls to the infrastructure management components, such as OpenStack's nova-api and nova-scheduler. The resource deployment process returns a number of resource handlers. A resource handler can be a login account, with username, access key, and IP address, to a virtual machine, a container, a physical machine with a pre-installed operating system, or an existing HPC cluster. These resource handlers are sent to the service management framework, which, in turn, uses them to construct the Resourced Blueprint.
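The role of a resource handler can be sketched as a small record that is attached to each service when the Resourced Blueprint is formed. The class `ResourceHandler`, its field names, and the `location` entry below are hypothetical; the actual Resourced Blueprint is a CAMP document whose layout is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceHandler:
    kind: str        # "vm", "container", "physical", or "hpc-cluster"
    address: str     # IP address of the provisioned resource
    username: str
    access_key: str


def build_resourced_blueprint(blueprint, handlers):
    """Attach one resource handler to every service in the Blueprint."""
    missing = [s["name"] for s in blueprint["services"] if s["name"] not in handlers]
    if missing:
        raise ValueError("no resource handler for: " + ", ".join(missing))
    services = []
    for s in blueprint["services"]:
        h = handlers[s["name"]]
        # Copy the service entry so the original Blueprint is left untouched.
        # Credentials are not copied in this sketch; how they are passed on
        # is an open assumption.
        services.append({
            **s,
            "location": {"kind": h.kind, "address": h.address, "user": h.username},
        })
    return {"name": blueprint["name"], "services": services}
```

Because the handlers refer to resources that already exist, the application lifecycle framework that receives the result only deploys software and does not need to provision anything itself.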

The Resourced Blueprint will then be given to the corresponding workflow/application lifecycle management framework to carry out the application deployment on the pre-provisioned resources. This process is shown in Figure 2 (labels 6, 7, and 8).

The described service delivery model is more sophisticated than current self-service models using a vertical management approach. The application management and resource management operate independently. Nevertheless, this does not preclude the exchange of information between the application and resource management layers. For example, to terminate a Resourced Blueprint, a notification can be readily sent from the application management layer to the resource management layer, to free the underlying resources.
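The termination example can be sketched as a callback between two otherwise independent layers. The classes `ApplicationLayer` and `ResourceLayer` and their methods are hypothetical names chosen for this illustration; the text does not specify the actual notification mechanism.

```python
class ResourceLayer:
    """Provider side: tracks which resources back each Resourced Blueprint."""

    def __init__(self):
        self.allocated = {}  # blueprint id -> list of resource ids

    def provision(self, blueprint_id, resources):
        self.allocated[blueprint_id] = list(resources)

    def on_terminated(self, blueprint_id):
        """Free resources when the application layer reports termination."""
        return self.allocated.pop(blueprint_id, [])


class ApplicationLayer:
    """Consumer side: manages application lifecycle only."""

    def __init__(self, notify):
        self._notify = notify  # callback into the resource layer
        self.running = set()

    def deploy(self, blueprint_id):
        self.running.add(blueprint_id)

    def terminate(self, blueprint_id):
        self.running.discard(blueprint_id)
        # The only coupling between the layers is this notification.
        return self._notify(blueprint_id)
```

The application layer never touches the resource table directly; it only reports that a Resourced Blueprint has ended, and the resource layer decides what to free.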

This approach has both advantages and disadvantages that must be carefully managed. A disadvantage is that the cloud service provider has to manage a more complex system. However, this disadvantage can be offset by the cloud service provider having more control over resource utilization, service delivery, and optimal use of heterogeneous resources.


In contrast to existing frameworks, the proposed service delivery model allows blueprint developers to specify comprehensive constraints and quality of service parameters for services. Based on the specified constraints and parameters, the proposed model, unlike existing solutions, can provide an initial optimal deployment of the resources, for example by creating/identifying resources on adjacent physical servers to minimize communication delay, or by allocating containers with attached GPUs or Xeon Phis to balance performance and cost.

4 REALIZATION OF SEPARATION OF CONCERNS

The concept of the separation of concerns has been realized in the CloudLightning project (CloudLightning, 2015). In the service provider-consumer context, CloudLightning defines three actors: End-users (application/service consumers), Enterprise Application Operators/Enterprise Application Developers (EAO/EAD), and IaaS resource providers (CSP). These three actors represent three distinct domains of concern.

• For the end-user, the concerns are cloud application continuity, availability, performance, security, and business logic correctness;

• For the EAO/EAD, the concerns are cloud application configuration management, performance, load balancing, security, availability, and deployment environment;

• For the CSP, the concerns are resource availability, operation costs such as power consumption, resource provisioning, resource organization and partitioning (if applicable).

CloudLightning is built on the premise that there are significant advantages in separating these domains, and the SDL has been designed to facilitate this separation. Inevitably, there will always be concerns that overlap the interests of two or more actors. This may require a number of actors to act together. For example, an EAO may need to configure a load-balancer and a CSP may need to implement a complementary host-affinity policy to realize high-availability. These overlapping concerns are managed by CloudLightning by providing vertical communications between the application lifecycle management and the resource management layers.

EADs/EAOs are responsible for managing the lifecycle of Resourced Blueprints at the application level, using frameworks such as Apache Brooklyn and OpenStack Solum. At the same time, the underlying resources are managed independently by the CloudLightning system. As a result, the following advantages accrue:

• Continuous improvement in the quality of Blueprint service delivery;

• Reduced service start-up time, and hence an improved user experience, by reusing resources that have already been provisioned;

• Resource optimization and energy optimization;

• Flexible and extensible integration with other management frameworks such as the OpenStack Mistral workflow management system.

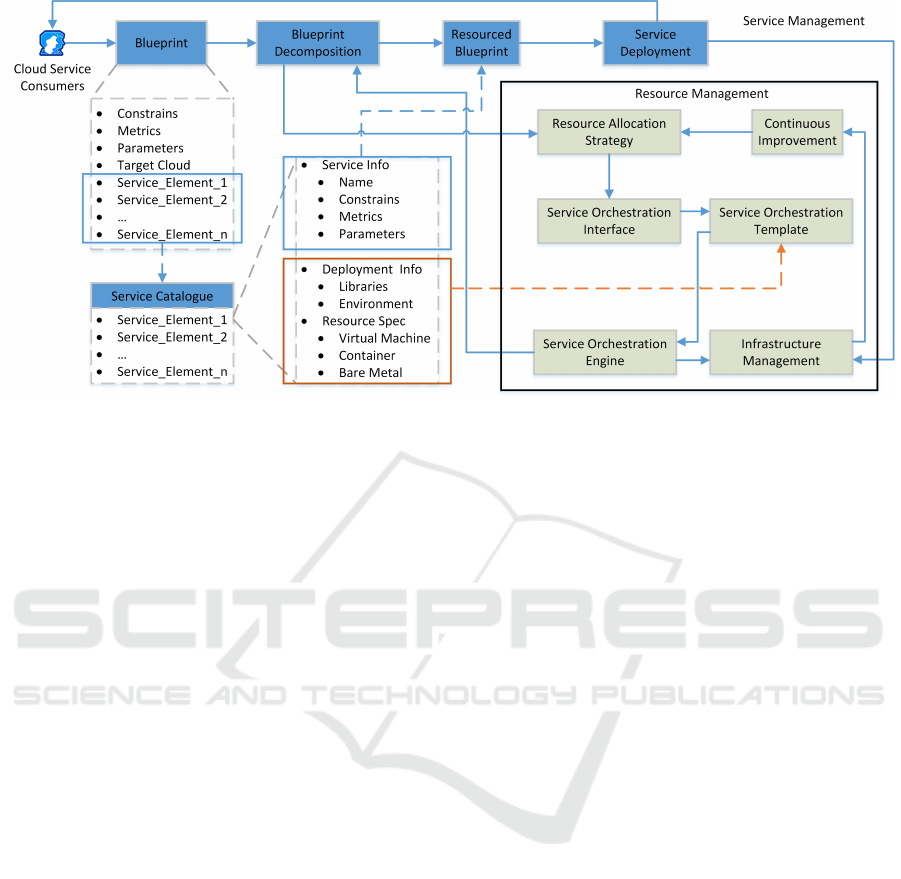

In CloudLightning, the functional components that realize the concept of the "Separation of Concerns" are shown in Figure 3. The components responsible for application lifecycle management include:

• Blueprint: is used to represent specific application parameters, constraints and metrics defined by users, identifying services and their relationships.

• Resourced Blueprint: represents a fully qualified Cloud Application Management for Platforms (CAMP) (Jacques Durand and Rutt, 2014) document with specific resource handlers.

• Service Catalogue: is a persisted collection of versioned services, each of which includes service identification, application deployment mechanism and resource specification.

• Blueprint Decomposition Engine: handles the transformation of Blueprints to Resourced Blueprints according to provided requirements.

• Brooklyn: is used for deploying and managing the applications via Resourced Blueprints.

The components responsible for resource lifecycle management include:

• Infrastructure Management: a set of resource management frameworks for managing varying hardware resources.

• Service Orchestration Template: describes the infrastructure resources (such as servers, networks, routers, floating IPs, volumes, etc.) for a cloud application, as well as the relationships between resources.

• Service Orchestration Interface: automatically generates a HOT template from the results of the Resource Allocation Strategy component, or dynamically modifies the HOT template based on the results from the Continuous Improvement component.

• Service Orchestration Engine: responsible for resource lifecycle management.

• Continuous Improvement: this management component, together with Heat and telemetry, continuously improves the quality of the deployed blueprint during its lifetime.

Figure 3: Realization of "Separation of Concerns". The Blueprint Decomposition Engine handles the transformation of Blueprints to Resourced Blueprints. Brooklyn is used for deployment and application lifecycle management. The CloudLightning system identifies and creates/allocates optimal Resources for applications. The Heat Orchestration Template (HOT), specific to the CloudLightning system, describes resources and their relationships. The Heat Engine manages the resource lifecycle. The Continuous Improvement component, together with Heat and telemetry, attempts to perform continuous resource improvement over the lifetime of the deployed blueprint.

The approach taken by the CloudLightning project is to try to re-align the evolving heterogeneous cloud with the Service-Oriented Architecture of the homogeneous cloud. The first step in this process is to establish a clear services interface between the service consumer and the service provider. The essence of this interface is the establishment of a separation of concerns between the consumer and the provider. Thus, consumers should only be concerned with what they want to do, and providers should be concerned only with how that should be done. This simple step removes all direct consumer interaction with the provider's infrastructure and returns control to the provider. In this view, various service implementation options are assumed to already exist and the consumer no longer has to be an expert creator of those service implementations. Consumers should not have to be aware of the actual physical resources being used to deliver their desired service. However, given that multiple diverse implementations may exist for each service (each on a different hardware type, and each characterized by different price/performance attributes), consumers should be able to distinguish and choose between these options based on service delivery attributes alone. Service creation, in the approach proposed here, remains a highly specialized task that is undertaken by an expert. An expert will create a service solution for a specific hardware platform, profile that service, and register the service executable and meta information with the CloudLightning system.
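Choosing between implementations on service delivery attributes alone can be sketched as follows. The `Offer` record, its two attributes, and the selection rule in `choose_offer` are assumptions made for this illustration; the text does not prescribe which attributes are exposed or how a consumer weighs them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    implementation: str    # opaque label; the hardware type is not exposed
    price_per_hour: float
    throughput: float      # e.g. jobs per hour, taken from the expert's profile


def choose_offer(offers, min_throughput, max_price):
    """Pick the cheapest offer meeting the throughput floor and price ceiling."""
    eligible = [
        o for o in offers
        if o.throughput >= min_throughput and o.price_per_hour <= max_price
    ]
    if not eligible:
        return None
    # Ties on price are broken in favour of higher throughput.
    return min(eligible, key=lambda o: (o.price_per_hour, -o.throughput))
```

Nothing in an `Offer` reveals whether the implementation runs on a CPU, a GPU, or another accelerator, which matches the idea that the consumer states what is wanted and the provider decides how it is delivered.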

5 CONCLUSION

This paper concentrates on providing a separation of concerns between application lifecycle management and resource management to maximize user experience and cloud efficiency on each side. A realization of the separation of concerns was developed: a collaborative interaction between the Brooklyn system and the OpenStack Heat component was designed and integrated into the CloudLightning architecture. A coherent framework for the concept of separation of concerns will be provided in future work.

ACKNOWLEDGMENT

This work is funded by the European Union's Horizon

2020 Research and Innovation Programme through

the CloudLightning project under Grant Agreement

Number 643946.


REFERENCES

Brooklyn, A. (2017). https://brooklyn.apache.org/. [Accessed on 16-February-2017].

CloudLightning (2015). http://cloudlightning.eu/. [Accessed on 16-February-2017].

Heat (2017). https://github.com/openstack/heat. [Accessed on 10-February-2017].

Jacques Durand, Adrian Otto, G. P. and Rutt, T. (2014). Cloud application management for platforms version 1.1. In OASIS Committee Specification 01.

jCloud (2017). https://jclouds.apache.org. [Accessed on 05-February-2017].

Libcloud (2017). https://libcloud.apache.org. [Accessed on 07-February-2017].

Solum (2017). https://github.com/openstack/solum. [Accessed on 01-February-2011].
