EyeRecToo: Open-source Software for Real-time Pervasive Head-mounted Eye Tracking

Thiago Santini, Wolfgang Fuhl, David Geisler and Enkelejda Kasneci

Perception Engineering, University of Tübingen, Tübingen, Germany

{thiago.santini, wolfgang.fuhl, david.geisler, enkelejda.kasneci}@uni-tuebingen.de

Keywords:

Eye Movements, Pupil Detection, Calibration, Gaze Estimation, Open-source, Eye Tracking, Data Acquisition, Human-computer Interaction, Real-time, Pervasive.

Abstract:

Head-mounted eye tracking offers remarkable opportunities for research and applications regarding pervasive health monitoring, mental state inference, and human-computer interaction in dynamic scenarios. Although a plethora of software for the acquisition of eye-tracking data exists, available solutions often exhibit critical issues when pervasive eye tracking is considered, e.g., closed source code, dependencies on costly eye tracker hardware, and the need for a human supervisor during calibration. In this paper, we introduce EyeRecToo, an open-source software for real-time pervasive head-mounted eye tracking. Out of the box, EyeRecToo offers multiple real-time state-of-the-art pupil detection and gaze estimation methods, which can be easily replaced by user-implemented algorithms if desired. A novel calibration method that allows users to calibrate the system without the assistance of a human supervisor is also integrated. Moreover, this software supports multiple head-mounted eye-tracking hardware, records eye and scene videos, and stores pupil and gaze information, which are also available as a real-time stream. Thus, EyeRecToo serves as a framework to quickly enable pervasive eye-tracking research and applications. Available at: www.ti.uni-tuebingen.de/perception.

1 INTRODUCTION

In the past two decades, the number of researchers using eye trackers has grown enormously (Holmqvist et al., 2011), with researchers stemming from several distinct fields (Duchowski, 2002). For instance, eye tracking has been employed in scenarios ranging from simple and fixed research settings – e.g., language reading (Holmqvist et al., 2011) – to complex and dynamic cases – e.g., driving (Kasneci et al., 2014) and ancillary operating microscope controls (Fuhl et al., 2016b). In particular, pervasive eye tracking also has the potential for health monitoring (Vidal et al., 2012), mental state inference (Fuhl et al., 2016b), and human-computer interaction (Majaranta and Bulling, 2014). Naturally, these distinct use cases have specific needs, leading to the spawning of several systems with different capabilities. In fact, Holmqvist et al. (2011) report that they were able to find 23 companies selling video-based eye-tracking systems in 2009. However, existing eye tracking systems often present multiple critical issues when pervasive eye tracking is considered. For instance, commercial systems rely on closed-source software, offering their eye tracker bundled with their own software solutions. Besides the high costs involved, researchers and application developers have practically no direct alternatives if the system does not work under the required conditions (Santini et al., 2016b). Other (open-source) systems – e.g., openEyes (Li et al., 2006a), PyGaze (Dalmaijer et al., 2014), Pupil (Pupil Labs, 2016), and EyeRec (Santini et al., 2016b) – either focus on their own eye trackers, depend on existing APIs from manufacturers, or require a human supervisor in order to calibrate the system.

In this paper, we introduce EyeRecToo¹, an open-source software for pervasive head-mounted eye tracking that solves all of the aforementioned issues to quickly enable pervasive eye tracking research and applications; its key advantages are as follows.

• Open and Free: the code is freely available. Users can easily replace built-in algorithms to prototype their own algorithms or use the software as is for data acquisition and human-computer interfaces.

• Data Streaming: non-video data is streamed in real time through a UDP stream, allowing easy and quick integration with external buses – e.g., automotive CAN – or user applications.

• Hardware Independency: the software handles anything from cheap homemade head-mounted eye trackers assembled from regular webcams to expensive commercial hardware².

• Real-time: a low latency software pipeline enables its usage in time-critical applications.

• State-of-the-art Pupil Detection: ElSe, the top performer pupil detection algorithm, is fully integrated. Additional integrated methods include Starburst, Świrski, and ExCuSe.

• State-of-the-art Gaze Estimation: parameterizable gaze estimation based on polynomial regression (Cerrolaza et al., 2012) and homography (Yu and Eizenman, 2004).

• Unsupervised Calibration: a method that allows the user to quickly calibrate the system, independently from assistance from a second individual.

• Complete Free Software Stack: combined with free eye-tracking data analysis software, such as EyeTrace (Kübler et al., ), a full eye tracking software stack is accessible free of cost.

¹ The name is a wordplay on the competitor EyeRec “I Rec[ord]” since the software provides similar recording functionality; hence, EyeRecToo “I Rec[ord] Too”. Permission to use this name was granted by the EyeRec developers.

Santini T., Fuhl W., Geisler D. and Kasneci E. EyeRecToo: Open-source Software for Real-time Pervasive Head-mounted Eye Tracking. DOI: 10.5220/0006224700960101. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2017), pages 96-101. ISBN: 978-989-758-227-1. Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved
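As an illustration of the data streaming item in the list above, the following Python sketch shows how a user application might consume the UDP stream. The wire format is not specified in this paper, so the port number (`2002`), the tab-delimited layout, and the field names in `FIELDS` are hypothetical placeholders chosen only for this example; they are not EyeRecToo's actual format.

```python
import socket

# Hypothetical field layout; the real stream format is defined by EyeRecToo itself.
FIELDS = ("timestamp_ms", "gaze_x", "gaze_y")


def parse_tuple(datagram: bytes, delimiter: str = "\t") -> dict:
    """Decode one datagram into a dict of floats keyed by FIELDS."""
    values = datagram.decode("utf-8").strip().split(delimiter)
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return {name: float(value) for name, value in zip(FIELDS, values)}


def listen(port: int = 2002, max_packets: int = 10) -> list:
    """Receive up to max_packets datagrams on the given (assumed) port."""
    received = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("", port))
        for _ in range(max_packets):
            datagram, _sender = sock.recvfrom(65535)
            received.append(parse_tuple(datagram))
    return received
```

Because UDP is connectionless, such a client can be started or stopped without affecting the recording itself; the trade-off is that lost datagrams are not retransmitted.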

2 EyeRecToo

EyeRecToo is written in C++ and makes extensive use of the OpenCV 3.1.0 (Bradski et al., 2000) library for image processing and the Qt 5.7 framework (Qt Project, 2016) for its multimedia access and Graphical User Interface (GUI). Development and testing are focused on a Windows platform; however, the software also runs under Linux³. Moreover, porting to other platforms (e.g., Android, OS X) should be possible as all components are cross-platform.

EyeRecToo is built around widgets that provide functionality and configurability to the system. It was designed to work with a field camera plus mono or binocular head-mounted eye trackers but also foresees the existence of other data input devices in the future – e.g., an Inertial Measurement Unit (IMU) for head-movement estimation (Larsson et al., 2016). In fact, EyeRecToo assumes there is no hardware synchronization between input devices and has a built-in software synchronizer. Each input device is associated with an input widget. Input widgets register with the synchronizer, read data from the associated device, timestamp the incoming data according to a global monotonic reference clock, possibly process the data to extend it (e.g., pupil detection), and save the resulting extended data, which is also sent to the synchronizer. The synchronizer’s task is then to store the latest data incoming from the input widgets (according to a time window specified by the user) and, at predefined intervals, generate a DataTuple with timestamp t containing the data from each registered input widget with timestamp closest in time to t, thus synchronizing the input devices’ data⁴. The resulting DataTuple is then forwarded to the calibration/gaze estimation, which complements the tuple with gaze data. The complete tuple is then stored (data journaling), broadcast through UDP (data streaming), and exhibited to the user (GUI update). This results in a modular and easily extensible design that allows one to reconstruct events as they occurred during run time.

² Provided cameras are accessible through DirectShow (Windows) or v4l2 (Linux).

³ Windows 8.1/MSVC2015 and Ubuntu 16.04/gcc 5.4.0.
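The synchronization scheme described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not EyeRecToo's C++ implementation: the class and method names (`Sample`, `Synchronizer`, `register`, `push`, `make_tuple`) are hypothetical, and a plain dictionary stands in for the DataTuple.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Sample:
    timestamp: float  # ms on the shared monotonic reference clock
    payload: object   # e.g., a pupil estimate or a list of detected markers


@dataclass
class Synchronizer:
    window_ms: float  # how long samples are retained (user-specified window)
    buffers: dict = field(default_factory=dict)

    def register(self, widget: str) -> None:
        """Create an empty buffer for a newly registered input widget."""
        self.buffers[widget] = deque()

    def push(self, widget: str, sample: Sample) -> None:
        """Store a sample and drop those that fell out of the time window."""
        buf = self.buffers[widget]
        buf.append(sample)
        while buf and sample.timestamp - buf[0].timestamp > self.window_ms:
            buf.popleft()

    def make_tuple(self, t: float) -> dict:
        """Pick, per widget, the stored sample closest in time to t."""
        data = {"timestamp": t}
        for widget, buf in self.buffers.items():
            data[widget] = min(buf, key=lambda s: abs(s.timestamp - t), default=None)
        return data
```

In this sketch, a widget with no stored sample contributes `None` to the tuple, so downstream consumers can tell missing data apart from stale data.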

2.1 Input Widgets

Currently two input widgets are implemented in the

system: the eye camera input widget, and the field

camera input widget. These run in individual threads

with highest priority in the system. The eye camera

input widget is designed to receive close-up eye im-

ages, allowing the user to select during run time the

input device, region of interest (ROI) in which eye

feature detection is performed, image flipping, and

pupil detection algorithm. Available pupil detection

algorithms and their performance are described in de-

tail in Section 3. The field camera input widget is

designed to capture images from the point of view

(POV) of the eye tracker wearer, allowing the user to

select during run time the input device, image undis-

tortion, image flipping and fiducial marker detection.

Additionally, camera intrinsic and extrinsic parameter estimation is built-in. Currently, ArUco (Garrido-Jurado et al., 2014) markers are supported. Both widgets store the video (as fed to their respective image processing algorithms) as well as their respective detection algorithm data and can be used independently from other parts of the system (e.g., to detect pupils in eye videos in an offline fashion).

2.2 Calibration / Gaze Estimation Widget

This widget provides advanced calibration and gaze estimation methods, including two methods for calibration (supervised / unsupervised). The supervised calibration is a typical eye tracker calibration, which requires a human supervisor to coordinate with the user and select points of regard that the user gazes at during calibration. The unsupervised calibration method as well as the available gaze estimation methods are described in depth in Section 4. EyeRecToo is also able to automatically reserve some of the calibration points for a less biased evaluation of the gaze estimation function. Moreover, functionalities to save and load data tuples (both for calibration and evaluation) are implemented, which allows developers to easily prototype new calibration and gaze estimation methods based on existing data.

⁴ In case the input devices are hardware-synchronized, one can use a delayed trigger based on any of the input devices to preserve synchronization.

2.3 Supported Eye Trackers

Currently, the software supports Ergoneers’ Dikablis

Essential and Dikablis Professional eye trackers (Er-

goneers, 2016), Pupil Do-It-Yourself kit (Pupil Labs,

2016), and the PS3Eye-based operating microscope

add-on module proposed by Eivazi et al. (2016)⁵. However, any eye tracker that provides access to its cameras through DirectShow (on Windows)

or v4l2 (on Linux) should work effortlessly. For in-

stance, EyeRecToo is able to use regular web cameras

instead of an eye tracker, although the built-in pupil-

detection methods are heavily dependent on the qual-

ity of the eye image – in particular, pupil detection

methods are often designed for near-infrared images.

2.4 Software Latency and Hardware Requirements

We evaluated the latency of the software pipeline implemented in EyeRecToo using the default configuration, a Dikablis Professional eye tracker, and a machine running Windows 8.1 with an Intel® Core™ i5-4590 @ 3.30GHz CPU and 8GB of RAM. In total, 30,000 samples were collected from each input device. Table 1 shows the latency of operations that require a significant amount of time relative to the intersample period of the eye tracker’s fastest input device (16.67 ms), namely image acquisition/processing and storage⁶. It is worth noticing that, given enough available processing cores, these operations can be realized in a parallel fashion; thus, the remaining slack based on the 16.67 ms deadline is ≈ 8 ms. Given these measurements, we estimate that any Intel® Core™ machine with four cores and 2GB of RAM should be able to meet the software requirements.

⁵ Provided that the module remains static w.r.t. the head.

⁶ Values are based on the default pupil detection (ElSe) and field images containing at least four markers.

Table 1: Resulting latency (mean ± standard deviation) for time-consuming operations from the 30,000 samples of each available input widget.

| Operation | Input Widget | Latency (ms) |
|---|---|---|
| Processing | Eye Camera | 8.35 ± 0.73 |
| Processing | Field Camera | 4.97 ± 1.16 |
| Storage | Eye Camera | 2.85 ± 1.23 |
| Storage | Field Camera | 4.39 ± 1.74 |
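The ≈ 8 ms slack quoted in the text can be reproduced from the mean latencies in Table 1. The short Python calculation below assumes that each operation runs on its own core, so that the slowest single operation bounds the pipeline; this reading of "parallel fashion" is an interpretation, and the serial figures are added only for comparison.

```python
# Mean latencies (ms) taken from Table 1.
latency_ms = {
    ("processing", "eye"): 8.35,
    ("processing", "field"): 4.97,
    ("storage", "eye"): 2.85,
    ("storage", "field"): 4.39,
}

# Intersample period of the fastest input device (60 Hz), i.e. 16.67 ms.
deadline_ms = 1000.0 / 60.0

# Assumption: one core per operation, so the slowest operation is the bound.
parallel_slack_ms = deadline_ms - max(latency_ms.values())

# For comparison: per-widget cost if processing and storage ran back to back.
serial_ms = {
    widget: latency_ms[("processing", widget)] + latency_ms[("storage", widget)]
    for widget in ("eye", "field")
}

print(f"parallel slack: {parallel_slack_ms:.2f} ms")  # prints "parallel slack: 8.32 ms"
```

Even in the serial case, both widgets stay below the 16.67 ms deadline on average (11.20 ms for the eye camera and 9.36 ms for the field camera), although with less margin for latency spikes.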

3 PUPIL DETECTION

EyeRecToo offers four integrated pupil detection algorithms, which were chosen based on their detection rate performance – e.g., ElSe (Fuhl et al., 2016a) and ExCuSe (Fuhl et al., 2015) – and popularity – e.g., Świrski (Świrski et al., 2012) and Starburst (Li et al., 2005). Since EyeRecToo’s goal is to enable pervasive eye tracking, the main requirements for pupil detection algorithms are real-time capabilities and high detection rates in challenging and dynamic scenarios. Based on these requirements, ElSe (Fuhl et al., 2016a) was selected as the default pupil detection algorithm; the resulting detection rate performance of these algorithms on the data sets provided by (Fuhl et al., 2016a; Fuhl et al., 2015; Świrski et al., 2012) is shown in Figure 1. A brief description of each algorithm follows; a detailed review of these algorithms is given in (Fuhl et al., 2016c).

[Figure 1 plot: cumulative detection rate (y-axis, 0 to 1) versus pixel error (x-axis, 0 to 14) for ElSe, ExCuSe, Świrski, and Starburst.]

Figure 1: Cumulative detection rate as a function of the distance between the detected pupil position and the human-annotated ground truth for each of the available algorithms, based on the data from (Fuhl et al., 2016a).
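For readers unfamiliar with the metric plotted in Figure 1, the following Python sketch shows one way to compute a cumulative detection rate curve. The function name and the sample error values are hypothetical; the errors stand for per-frame Euclidean distances (in pixels) between the detected and the annotated pupil center.

```python
def cumulative_detection_rate(errors_px, max_error_px=14):
    """Fraction of frames whose pupil-center error is <= each pixel threshold."""
    total = len(errors_px)
    if total == 0:
        raise ValueError("need at least one error value")
    return [
        sum(1 for e in errors_px if e <= threshold) / total
        for threshold in range(max_error_px + 1)
    ]


# Hypothetical per-frame errors; large values stand for missed detections.
errors = [0.5, 1.2, 2.0, 3.7, 4.1, 9.0, 25.0, 60.0]
rates = cumulative_detection_rate(errors)
print(rates[5])  # prints 0.625 (5 of 8 frames are within 5 px)
```

A curve that rises quickly and saturates near 1 indicates an algorithm that is both precise and robust; frames with gross failures keep the curve below 1 regardless of the threshold.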

ElSe (Fuhl et al., 2016a) applies a Canny edge detection method, removes edge connections that could impair the surrounding edge of the pupil, and evaluates remaining edges according to multiple heuristics to find a suitable pupil ellipse candidate. If this initial approach fails, the image is downscaled, and a second approach attempted. The downscaled image’s response to a surface difference filter is multiplied by the complement of its mean filter response, and the maximum of the resulting map is taken. This position is then refined on the upscaled image based on its pixel neighborhood.

VISAPP 2017 - International Conference on Computer Vision Theory and Applications

ExCuSe (Fuhl et al., 2015) selects an initial

method (best edge selection or coarse positioning)

based on the intensity histogram of the input image.

The best edge selection filters a Canny edge image

based on morphologic operations and selects the edge

with the darkest enclosed value. For the coarse posi-

tioning, the algorithm calculates the intersections of

four orientations from the angular integral projection

function. This coarse position is refined by analyzing

the neighborhood of pixels in a window surrounding

this position. The image is thresholded, and the bor-

der of threshold-regions is used additionally to filter

a Canny edge image. The edge with the darkest en-

closed intensity value is selected. For the pupil center

estimation, a least squares ellipse fit is applied to the

selected edge.

Świrski et al. (Świrski et al., 2012) starts with

Haar features of different sizes for coarse positioning.

For a window surrounding the resulting position, an

intensity histogram is calculated and clustered using

the k-means algorithm. The separating intensity value

between both clusters is used as threshold to extract

the pupil. A modified RANSAC method is applied to

the thresholded pupil border.
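The histogram clustering step in the description above can be illustrated with a one-dimensional k-means (k = 2) over gray values. The Python sketch below is a simplified stand-in, not the implementation of Świrski et al.: it clusters raw intensities rather than a histogram, the function name is hypothetical, and taking the midpoint between the two cluster centers as the separating value is an assumption.

```python
def two_means_threshold(intensities, iterations=20):
    """Cluster gray values into two groups and return the separating value."""
    # Initialize the two cluster centers at the darkest and brightest values.
    dark, bright = float(min(intensities)), float(max(intensities))
    for _ in range(iterations):
        boundary = (dark + bright) / 2.0
        dark_group = [v for v in intensities if v <= boundary]
        bright_group = [v for v in intensities if v > boundary]
        if not dark_group or not bright_group:
            break  # degenerate window, e.g. uniform intensity
        new_dark = sum(dark_group) / len(dark_group)
        new_bright = sum(bright_group) / len(bright_group)
        if (new_dark, new_bright) == (dark, bright):
            break  # converged
        dark, bright = new_dark, new_bright
    return (dark + bright) / 2.0


# Hypothetical window: five dark pupil pixels and five brighter iris pixels.
window = [20, 22, 25, 18, 21, 120, 130, 125, 140, 135]
threshold = two_means_threshold(window)
pupil_pixels = [v for v in window if v <= threshold]
```

Deriving the threshold from the local window rather than from a fixed constant is what lets this kind of approach adapt to changing illumination.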

Starburst (Li et al., 2005) first removes the

corneal reflection and then selects pupil edge candi-

dates along rays extending from a starting point. Re-

turning rays are sent from the candidates found in the

previous step, collecting additional candidates. This

process is repeated iteratively using the average point

from the candidates as starting point until conver-

gence. Afterwards, inliers and outliers are identified

using the RANSAC algorithm, a best fitting ellipse is

determined, and the final ellipse parameters are deter-

mined by applying a model-based optimization.

4 CALIBRATION AND GAZE ESTIMATION

In video-based eye tracking, gaze estimation is the process of estimating the Point Of Regard (POR) of the user given eye images. High-end state-of-the-art mobile eye tracker systems (e.g., SMI and Tobii glasses (SensoMotoric Instruments GmbH, 2016; Tobii Technology, 2016)) rely on geometry-based gaze estimation approaches, which can provide gaze estimations without calibration. In practice, it is common to have at least a one-point calibration to adapt the geometrical model to the user and estimate the angle between visual and optical axis, and it has been reported that additional points are generally required for good accuracy (Villanueva and Cabeza, 2008). Furthermore, such approaches require specialized hardware (e.g., multiple cameras and glint points), cost on the order of tens of thousands of USD, and are susceptible to inaccuracies stemming from lens distortions (Kübler et al., 2016). On the other hand, mobile eye trackers that make use of regression-based gaze mappings require a calibration step but automatically adapt to distortions and are comparatively low-cost – e.g., a research-grade binocular eye tracker from Pupil Labs is available for 2340 EUR (Pupil Labs, 2016). Moreover, similar eye trackers have been demonstrated by mounting two (an eye and a field) cameras onto the frames of glasses (Babcock and Pelz, 2004; Li et al., 2006b; San Agustin et al., 2010), yielding even cheaper alternatives for the more tech-savvy users. Therefore, we focus on regression-based alternatives, which require calibration.

4.1 Calibration

In its current state, the calibration step presents some disadvantages and has been pointed out as one of the main factors hindering a wider adoption of eye tracking technologies (Morimoto and Mimica, 2005). Common calibration procedures customarily require the assistance of an individual other than the eye tracker user in order to calibrate (and check the accuracy of) the system. The user and the aide must coordinate so that the aide selects calibration points according to the user’s gaze. As a result, current calibration procedures cannot be performed individually and require a considerable amount of time to collect even a small number of calibration points, impeding their usage for ubiquitous eye tracking. EyeRecToo provides functionality for these supervised calibrations such that the users can collect as many eye-gaze relationships as necessary as well as choose to sample a single median point or multiple points per relationship. Furthermore, audible cues are also provided to minimize the amount of interaction between user and supervisor, thus diminishing human error and calibration time.

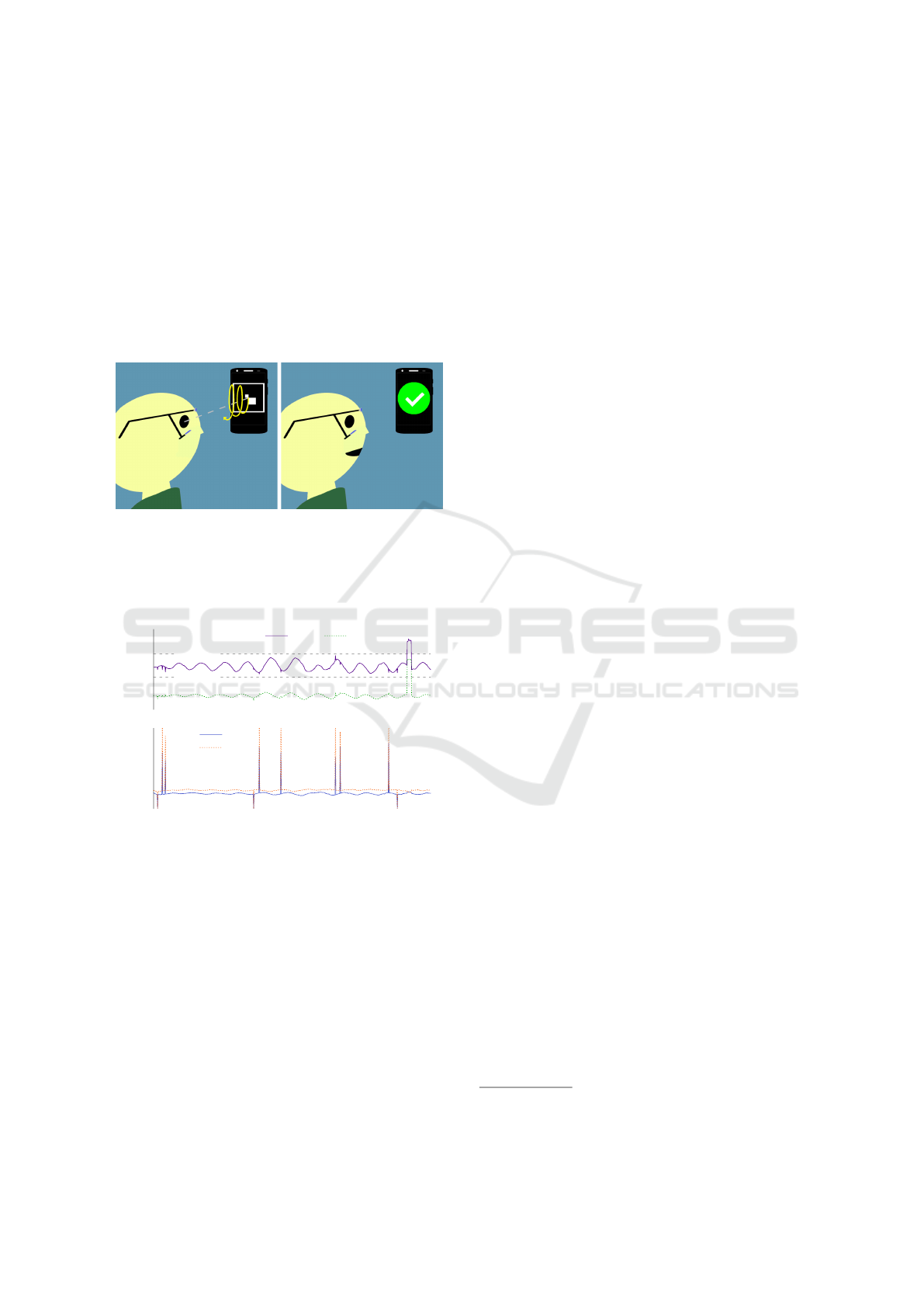

Besides the regular supervised calibration, EyeRecToo integrates a novel unsupervised approach that enables users to quickly and independently calibrate head-mounted eye trackers by gazing at a fiducial marker that moves w.r.t. the user’s head. Additionally, we also provide a companion Android application that can be used to display the marker and receive feedback regarding the quality of the calibration (see Figure 2). Alternatively, a user can also perform this calibration using a printed marker or by displaying the marker on any screen. After collecting several calibration points, EyeRecToo then removes inferior and wrong eye-gaze relationships according to a series of rationalized approaches based on domain specific assumptions regarding head-mounted eye tracking setups, data, and algorithms (see Figure 3). From the remaining points, some are reserved for evaluation based on their spatial location, and the remaining points are used for calibration. This calibration method, dubbed CalibMe, is described in detail in (Santini et al., 2017).

Figure 2: While gazing at the center of the marker, the user

moves the smartphone displaying the collection marker to

collect eye-gaze relationships (left). The eye tracker then

notifies the user that the calibration has been performed suc-

cessfully through visual/haptic/audible feedback, signaling

that the system is now ready to use (right).

[Figure 3 plots: pupil position in px (components p_x and p_y, with range bounds μ_px + 2.7σ_px and μ_py + 2.7σ_py) and pupil size in px (components p_w and p_h); outliers are labeled a, b, and c.]

Figure 3: Rationalized outlier removal. Outliers based on subsequent pupil size (a), pupil position range (b), and pupil detection algorithm specific information (c). Notice how the pupil position estimate (p_x, p_y) is corrupted by such outliers.
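The position range criterion (b) in Figure 3 can be illustrated with a short Python sketch. Only the 2.7σ factor is taken from the figure; treating the bound as symmetric around the mean, using the population standard deviation, and the function names are assumptions made for this example. The full method is described in (Santini et al., 2017).

```python
from statistics import mean, pstdev


def within_range(values, k=2.7):
    """Flag each value as kept (True) when |v - mean| <= k * std."""
    mu, sigma = mean(values), pstdev(values)
    return [abs(v - mu) <= k * sigma for v in values]


def filter_by_position(pupils, k=2.7):
    """Keep (x, y) pupil positions whose coordinates both lie within range."""
    keep_x = within_range([p[0] for p in pupils], k)
    keep_y = within_range([p[1] for p in pupils], k)
    return [p for p, kx, ky in zip(pupils, keep_x, keep_y) if kx and ky]


# Hypothetical pupil centers (px); the last x value mimics a false detection.
xs = [100, 101, 99, 100, 102, 98, 100, 101, 99, 100, 100, 101, 99, 100, 180]
pupils = [(x, 60 + i % 3) for i, x in enumerate(xs)]
kept = filter_by_position(pupils)
```

Since a gross outlier inflates both the mean and the standard deviation, a single pass of this kind is most effective when outliers are few relative to the number of collected points.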

4.2 Gaze Estimation

These two available calibration methods are complemented by multiple gaze estimation methods. Out of the box, six polynomial regression approaches are offered – ranging from first to third order bivariate polynomial least-squares fits through singular value decomposition. Furthermore, an additional approach based on projective geometry is also available through projective space isomorphism (i.e., homography). It is worth noticing, however, that the latter requires that camera parameters be taken into account⁷.
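To make the polynomial regression concrete, the following Python sketch fits a second-order bivariate polynomial that maps a pupil position to one gaze coordinate. It is an illustration under stated assumptions rather than EyeRecToo's implementation: to stay dependency-free it solves the normal equations by Gauss-Jordan elimination instead of the singular value decomposition mentioned in the text, the particular choice of six terms is only one of several possible second-order variants, and all function names are hypothetical.

```python
def features(x, y):
    """Second-order bivariate polynomial terms for one pupil position."""
    return [1.0, x, y, x * y, x * x, y * y]


def solve(a, b):
    """Solve the square system a w = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit(pupils, targets):
    """Least-squares coefficients mapping pupil (x, y) to one gaze coordinate."""
    rows = [features(x, y) for x, y in pupils]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(ata, atb)


def predict(coeffs, x, y):
    """Evaluate the fitted polynomial at a pupil position."""
    return sum(c * f for c, f in zip(coeffs, features(x, y)))
```

In use, one such model is fitted per gaze coordinate (horizontal and vertical) from the calibration tuples, with pupil coordinates preferably normalized to a small range to keep the system well conditioned. A second-order fit has six unknowns per coordinate, so at least six well-distributed calibration points are needed.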

To provide gaze estimation accuracy figures, we conducted an evaluation employing a second order bivariate regression with five adult subjects (4 male, 1 female) – two of whom wore glasses during the experiments. The experiment was conducted using a Dikablis Pro eye tracker (Ergoneers, 2016). This device has two eye (@60 Hz) and one field (@30 Hz) cameras; data tuples were sampled based on the frame rate of the field camera. These experiments yielded a mean angular error averaged over all participants of 0.59° (σ = 0.23°) when calibrated with the unsupervised method. In contrast, a regular supervised nine-point calibration yielded a mean angular error of 0.82° (σ = 0.15°). Both calibrations exhibited accuracies well within physiological values, thus attesting to the efficacy of the system as a whole.

5 FINAL REMARKS

In this paper, we introduced a software for pervasive

and real-time head-mounted eye trackers. EyeRecToo

has several key advantages over proprietary software

(e.g., openness) and other open-source alternatives

(e.g., multiple eye trackers support, improved pupil

detection algorithm, unsupervised calibration). Future work includes automatic 3D eye model construction (Świrski and Dodgson, 2013), support for remote

gaze estimation (Model and Eizenman, 2010), addi-

tional calibration methods (Guestrin and Eizenman,

2006), real-time eye movement classification based

on Bayesian mixture models (Kasneci et al., 2015;

Santini et al., 2016a), automatic blink detection (Ap-

pel et al., 2016), and support for additional eye track-

ers.

Source code, binaries for Windows, and extensive

documentation are available at:

www.ti.uni-tuebingen.de/perception

⁷ EyeRecToo has built-in methods to estimate the intrinsic and extrinsic camera parameters if necessary.

REFERENCES

Appel, T. et al. (2016). Brightness- and motion-based blink detection for head-mounted eye trackers. In Proc. of the Int. Joint Conf. on Pervasive and Ubiquitous Computing, UbiComp Adjunct. ACM.

Babcock, J. S. and Pelz, J. B. (2004). Building a lightweight eyetracking headgear. In Proc. of the 2004 Symp. on Eye tracking research & applications. ACM.

Bradski, G. et al. (2000). The OpenCV Library. Doctor Dobbs Journal.

Cerrolaza, J. J. et al. (2012). Study of polynomial mapping

functions in video-oculography eye trackers. Trans.

on Computer-Human Interaction (TOCHI).

Dalmaijer, E. S. et al. (2014). Pygaze: An open-source,

cross-platform toolbox for minimal-effort program-

ming of eyetracking experiments. Behavior research

methods.

Duchowski, A. T. (2002). A breadth-first survey of eye-

tracking applications. Behavior Research Methods,

Instruments, & Computers.

Eivazi, S. et al. (2016). Embedding an eye tracker into a

surgical microscope: Requirements, design, and im-

plementation. IEEE Sensors Journal.

Ergoneers (2016). Dikablis. www.ergoneers.com.

Fuhl, W. et al. (2015). Excuse: Robust pupil detection in

real-world scenarios. In Computer Analysis of Images

and Patterns 2015. CAIP 2015. 16th Int. Conf. IEEE.

Fuhl, W. et al. (2016a). Else: Ellipse selection for robust

pupil detection in real-world environments. In Proc. of

the Symp. on Eye Tracking Research & Applications.

ACM.

Fuhl, W. et al. (2016b). Non-intrusive practitioner pupil

detection for unmodified microscope oculars. Com-

puters in Biology and Medicine.

Fuhl, W. et al. (2016c). Pupil detection for head-mounted

eye tracking in the wild: an evaluation of the state of

the art. Machine Vision and Applications.

Garrido-Jurado, S. et al. (2014). Automatic generation and

detection of highly reliable fiducial markers under oc-

clusion. Pattern Recognition.

Guestrin, E. D. and Eizenman, M. (2006). General theory

of remote gaze estimation using the pupil center and

corneal reflections. Biomedical Engineering, IEEE

Trans. on.

Holmqvist, K. et al. (2011). Eye tracking: A comprehensive

guide to methods and measures. Oxford University.

Kasneci, E. et al. (2014). The applicability of probabilis-

tic methods to the online recognition of fixations and

saccades in dynamic scenes. In Proc. of the Symp. on

Eye Tracking Research and Applications.

Kasneci, E. et al. (2015). Online recognition of fixations,

saccades, and smooth pursuits for automated analysis

of traffic hazard perception. In Artificial Neural Net-

works. Springer.

Kübler, T. C. et al. Analysis of eye movements with eyetrace. In Biomedical Engineering Systems and Technologies. Springer.

Kübler, T. C. et al. (2016). Rendering refraction and reflection of eyeglasses for synthetic eye tracker images. In Proc. of the Symp. on Eye Tracking Research & Applications. ACM.

Larsson, L. et al. (2016). Head movement compensation

and multi-modal event detection in eye-tracking data

for unconstrained head movements. Journal of Neu-

roscience Methods.

Li, D. et al. (2005). Starburst: A hybrid algorithm

for video-based eye tracking combining feature-based

and model-based approaches. In Computer Vision and

Pattern Recognition Workshops, 2005. CVPR Work-

shops. IEEE Computer Society Conf. on. IEEE.

Li, D. et al. (2006a). openeyes: A low-cost head-mounted

eye-tracking solution. In Proc. of the Symp. on Eye

Tracking Research & Applications.

Li, D. et al. (2006b). openeyes: a low-cost head-mounted

eye-tracking solution. In Proc. of the 2006 Symp. on

Eye tracking research & applications. ACM.

Majaranta, P. and Bulling, A. (2014). Eye Tracking and

Eye-Based Human-Computer Interaction. Advances

in Physiological Computing. Springer.

Model, D. and Eizenman, M. (2010). User-calibration-free

remote gaze estimation system. In Proc. of the Symp.

on Eye-Tracking Research & Applications. ACM.

Morimoto, C. H. and Mimica, M. R. (2005). Eye gaze track-

ing techniques for interactive applications. Computer

Vision and Image Understanding.

Pupil Labs (2016). www.pupil-labs.com/. Accessed: 16-

09-07.

Qt Project (2016). Qt Framework. www.qt.io/.

San Agustin, J. et al. (2010). Evaluation of a low-cost open-

source gaze tracker. In Proc. of the 2010 Symp. on

Eye-Tracking Research & Applications. ACM.

Santini, T. et al. (2016a). Bayesian identification of fix-

ations, saccades, and smooth pursuits. In Proc. of

the Symp. on Eye Tracking Research & Applications.

ACM.

Santini, T. et al. (2016b). Eyerec: An open-source data ac-

quisition software for head-mounted eye-tracking. In

Proc. of the Joint Conf. on Computer Vision, Imaging

and Computer Graphics Theory and Applications.

Santini, T. et al. (2017). CalibMe: Fast and unsuper-

vised eye tracker calibration for gaze-based perva-

sive human-computer interaction. In Proc. of the CHI

Conf. on Human Factors in Computing Systems.

SensoMotoric Instruments GmbH (2016). www.smivision.

com/. Accessed: 16-09-07.

Świrski, L. and Dodgson, N. A. (2013). A fully-automatic, temporal approach to single camera, glint-free 3d eye model fitting. In Proc. of ECEM.

Świrski, L. et al. (2012). Robust real-time pupil tracking in highly off-axis images. In Proc. of the Symp. on Eye Tracking Research and Applications. ACM.

Tobii Technology (2016). www.tobii.com. Accessed: 16-

09-07.

Vidal, M. et al. (2012). Wearable eye tracking for mental

health monitoring. Computer Communications.

Villanueva, A. and Cabeza, R. (2008). A novel gaze estima-

tion system with one calibration point. IEEE Trans. on

Systems, Man, and Cybernetics.

Yu, L. H. and Eizenman, M. (2004). A new methodology

for determining point-of-gaze in head-mounted eye

tracking systems. IEEE Trans. on Biomedical Engi-

neering.
