Automating Government Spatial Transactions

Premalatha Varadharajulu (1,2), Geoff West (1,2), David A. McMeekin (1,2), Simon Moncrieff (1,2) and Lesley Arnold (1,2)

(1) Department of Spatial Science, Curtin University, Perth, Western Australia, Australia
(2) Cooperative Research Centre for Spatial Information, Perth, Western Australia, Australia

Keywords: Spatial Transaction, Spatial Data Supply Chain, Artificial Intelligence, Semantic Web, Ontology, Rule-based Reasoning, OWL-2.

Abstract: The land development approval process between local authorities and government land and planning departments is manual, time consuming and resource intensive. For example, when new land subdivisions, new roads and road names, and administrative boundary changes are requested, the relevant spatial datasets must be approved and updated. The land developer submits plans, usually on paper, and a number of employees use rules, constraints and policies to determine whether such plans are acceptable. This paper presents an approach that uses Semantic Web and Artificial Intelligence techniques to automate the decision-making process in Australian jurisdictions. Feedback on the proposed plan is communicated to the land developer in real time, reducing process handling time for both the developer and the government agency. The Web Ontology Language is used to represent relationships between the different entities in the spatial database schema. Rules on geometry, policy, naming conventions, standards and other aspects are obtained from government policy documents and subject-matter experts and described using the Semantic Web Rule Language. When the developer submits an application, the software checks the request against the rules for compliance. This paper describes the proposed approach and presents a case study that deals with new road proposals and road name approvals.

1 INTRODUCTION

Land developers and local government authorities are required to submit proposals for new subdivisions to land and planning departments for approval. These new subdivisions include new land parcel boundaries, roads and road names, and changes to local authority boundaries. The approval process often spans many work teams, and new information, such as property addresses, may need to be generated. This manual process can be time consuming and resource intensive.

New methods are required to reduce data handling and support the automation of transactions with government. Current workflows are characterised by several decision points, and a trail of paper documents is often created to formalise the decision-making process and to provide a reference point for legal transactions further along the land administration process (Varadharajulu et al., 2015). As a result, there is often a delay of several weeks while a new subdivision is considered by authorities from the various land development and planning perspectives.

This research seeks to automate the spatial transaction process using artificial intelligence with ontologies to create rules that replace the human decision-making process for land development approvals. A case study examining new road proposals, road names and land administration boundary changes is used to demonstrate the approach. This research is being conducted in conjunction with the Western Australian Land Information Authority (Landgate). Landgate is the approving authority for all new subdivisions in Western Australia, and is responsible for land administration boundary changes resulting from land development activity.

The Semantic Web was first introduced by Tim Berners-Lee, who imagined it as “a web of data that can be processed directly and indirectly by machines” (Berners-Lee and Fischetti, 1999). This research is inspired by the increased bandwidth of the Internet and advances in Semantic Web technologies, which now make it possible to automate the human elements of the decision-making process on the Web.

Varadharajulu, P., West, G., McMeekin, D., Moncrieff, S. and Arnold, L.
Automating Government Spatial Transactions.
In Proceedings of the 2nd International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2016), pages 157-167
ISBN: 978-989-758-188-5
Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Rule-based systems have been used for decision support in the past, but these are typically closed, client-based systems. The advantage of the Semantic Web, however, is that the data, ontologies and rules are described using well-defined standards (w3c.org) and can be made available over the Web as published resources, typically in one of a number of machine-readable (and human-readable) formats (Gupta and Knoblock, 2010). The vision is that ontologies, especially those of a general nature, can be shared and re-used in many applications. In our case, it is envisaged that once a working solution for the approvals process has been validated for one jurisdiction (Western Australia), the ontologies and rules can be used in other jurisdictions (Victoria, New South Wales etc.) and domains.

The work is part of a research program into Spatial Data Infrastructures being conducted at the Cooperative Research Centre for Spatial Information (CRCSI), Australia. One of the objectives of the research program is to automate spatial data supply chains from end to end to enable access to the right data, at the right time, at the right price (McMeekin and West, 2012).

This research focuses on the first stage in the spatial data supply chain process, namely the creation of spatial data through a land development business process. Instead of relying on paper-based systems, the method captures spatial information in machine-readable form at its point of inception. This is a significant step towards achieving downstream workflow automation. It also supports the recording of data provenance in machine-readable form at the commencement of a spatial transaction, to support legal and data quality attribution.

The development consists of two stages. In the first stage, a GUI-based interactive system called Protégé is used to design ontologies and rules from the spatial data schema and various documents, including policies. The second stage uses a runtime environment (Jena and Java) to process the ontologies and rules, along with existing and proposed road data, to determine compliance with policies and related requirements.

2 BACKGROUND AND RELATED RESEARCH

Methods for spatial data processing and integration have been researched and developed over the past few years; however, little work has considered the automation of the decision-making process where spatial data is an input to the approval process.

One of the objectives of the Semantic Web is to evolve into a universal medium for information, data and knowledge exchange, rather than just being a source of information. To attain this, it uses the well-known HTTP protocol and technologies (Shadbolt et al., 2006; Millard, 2010) such as URIs (Uniform Resource Identifiers), RDF (Resource Description Framework) and ontologies with reasoning and rules.

One of the most important components is RDF, a language for representing information about resources on the Web (http://www.w3.org/RDF/). RDF aims to organise information in a machine-readable format by representing it as triples of the form <subject, predicate, object>, a concept from the artificial intelligence community. RDF was originally considered a metadata format but now covers data as well. RDF triples can be used to represent tables, graphs, trees, ontologies and rules because each triple describes the relationship between a subject and an object resource, and the object of one <subject, predicate, object> triple can be the subject of another, enabling subjects to be linked together. Each of the triple components can also be a URI, so information can be linked across the Web. RDF-formatted data is much easier to process because every statement, wherever it is stored, uses the same generic triple format, which makes the information understandable as a distributed model.
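To make the triple model concrete, the following minimal Python sketch stores a few statements as plain (subject, predicate, object) tuples and follows links from one resource to the next. It deliberately avoids an RDF library; the `http://example.org/roads#` namespace and the properties `hasRoadLink`, `inLocality` and `label` are hypothetical, chosen only to mirror the road example used later in this paper.

```python
# A library-free illustration of RDF-style triples.
# The EX namespace and all property names are hypothetical placeholders.
EX = "http://example.org/roads#"

triples = {
    (EX + "JindeeAvenue", EX + "hasRoadLink", EX + "MidsummerAvenue"),
    (EX + "MidsummerAvenue", EX + "hasRoadLink", EX + "TreatStreet"),
    (EX + "JindeeAvenue", EX + "inLocality", EX + "Jindalee"),
    (EX + "Jindalee", EX + "label", "Jindalee"),  # an object may be a literal
}


def objects(subject, predicate):
    """Return every object o such that (subject, predicate, o) is asserted."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def reachable(start, predicate):
    """Follow a predicate transitively: the object of one triple becomes
    the subject of the next, which is how triples link resources together."""
    seen, frontier = set(), [start]
    while frontier:
        node = frontier.pop()
        for nxt in objects(node, predicate):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen


print(sorted(reachable(EX + "JindeeAvenue", EX + "hasRoadLink")))
```

In a real deployment these statements would live in an RDF store (for example a Jena model) and be queried with SPARQL; the tuples here only show the shape of the data.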

Reasoning and rules are an important part of this research. In the Semantic Web, the Web Ontology Language (OWL-2), based on RDF, is used to define Web ontologies that include rules, axioms and constraints, allowing inferencing (the discovery of new knowledge) to be performed.

The Semantic Web has been used to answer user queries about natural events using observation sensor data (Devaraju et al., 2015; Yu and Liu, 2013). In particular, Devaraju et al. (2015) describe a number of ontologies used to model various sensors, and rules used to map queries, such as flooding in an area, to the need to sample a number of point water sensors. Methods have also been proposed that have the potential to automate land development approval processes. For example, the Sensing Geographic Occurrences Ontology (SEGO) model supports time-based inferences of institutionalised events (Reitsma, 2005). However, these methods do not resolve conflicts that arise when an event qualifies under both policy and business rules. This research does not cover sensor-specific technical details (Reitsma, 2005), but instead concentrates on business knowledge rules.


A large number of open source and proprietary tools are available for Semantic Web research and development. This research uses the Protégé framework (http://protege.stanford.edu/) to develop ontologies and rules because its GUI environment allows fast design, interactive navigation of the relationships in OWL ontologies, and visualisation. It allows some rule-based analysis to be performed and can read and write RDF-based files in a number of different formats. Rules are expressed in terms of the ontology vocabulary using the Semantic Web Rule Language (SWRL). Like many other rule languages, a SWRL rule takes the form of an implication between an antecedent and a consequent. The antecedent is the body of the rule, consisting of one or more conditions, and the consequent is its head, typically a single condition. Whenever the conditions specified in the antecedent are satisfied, those specified in the consequent must also be satisfied (O’Connor et al., 2005). Once the ontologies and rules have been defined, they can be imported into the Apache Jena framework together with the Pellet reasoner (http://clarkparsia.com/pellet/) to support OWL for runtime querying and analysis (Segaran et al., 2009). Pellet, which can be used through both the Jena and OWL API libraries, infers logical consequences from a set of asserted facts or axioms.
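The antecedent/consequent form can be illustrated with a naive forward-chaining loop. The sketch below is neither SWRL nor how Pellet works internally (Pellet is a tableau-based OWL reasoner); it simply matches a conjunctive body of `?`-prefixed patterns against a set of triples and asserts the head whenever the body is satisfied, repeating until nothing new is derived. The rule, the property names and the roads `RoadA`, `RoadB` and `RoadC` are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]
Bindings = Dict[str, str]


def is_var(term: str) -> bool:
    return term.startswith("?")


def match(pattern: Triple, fact: Triple, b: Bindings) -> Optional[Bindings]:
    """Unify one body atom with one fact, extending bindings b, or return None."""
    new = dict(b)
    for p, f in zip(pattern, fact):
        if is_var(p):
            if p in new and new[p] != f:
                return None
            new[p] = f
        elif p != f:
            return None
    return new


def solve(body: List[Triple], facts: Set[Triple], b: Bindings) -> List[Bindings]:
    """Return every binding that satisfies all atoms of the body (a conjunction)."""
    if not body:
        return [b]
    results = []
    for fact in facts:
        nb = match(body[0], fact, b)
        if nb is not None:
            results.extend(solve(body[1:], facts, nb))
    return results


def forward_chain(rules, facts: Set[Triple]) -> Set[Triple]:
    """Fire every rule whose antecedent holds until a fixpoint is reached."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            for b in solve(body, facts, {}):
                new = tuple(b.get(t, t) for t in head)
                if new not in facts:
                    facts.add(new)
                    changed = True
    return facts


# Hypothetical rule: a new road linked to an existing road is allowed.
rules = [(
    [("?r", "hasRoadLink", "?old"), ("?r", "status", "New"), ("?old", "status", "Existing")],
    ("?r", "isAllowed", "true"),
)]
facts = {
    ("RoadA", "hasRoadLink", "RoadB"),
    ("RoadA", "status", "New"),
    ("RoadB", "status", "Existing"),
    ("RoadC", "status", "New"),  # no link, so the rule never fires for RoadC
}
inferred = forward_chain(rules, facts)
print(("RoadA", "isAllowed", "true") in inferred)
```

Production rule engines avoid re-scanning every fact on each pass (for example with the Rete algorithm), but the semantics of "if the body holds, assert the head" are the same.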

3 CASE STUDY

Landgate administers all official naming actions for Western Australia under the authority of the Minister for Lands. The relevant local government authority generally submits all naming proposals to Landgate for ratification. All new proposals must satisfy government policies and standards. The current process has an online submission form, but for the most part the process is paper-based and requires significant human involvement. Current methods often require negotiation between the parties involved (i.e. local government and Landgate). While there are specific rules that apply to new road name approvals, there are grey areas within policy that are often challenged and can only be resolved by an experienced negotiator. A request for a new road name may be passed back and forth until an outcome is achieved that is satisfactory to both parties. Outcomes may differ depending on the expertise of the negotiator/approver.

Automation is needed to reduce the manual overhead, by extracting expert knowledge on road name approvals to create a standard set of rules. The aim is to create a self-service online mechanism for developers to submit new road names for approval, underpinned by a complex rule base and querying process. The complexity comes from the flow-on effect of such changes: a new land development results in a change to the surrounding road network, which in turn has a flow-on impact on property street addressing and requires an administrative boundary change.

The case study uses the Landgate geographic road names database, called GEONOMA, to process the road name proposal. The current online submission process has the following issues that complicate the approval process:

• The online form is only used to test whether new

road names are allowable based on a set of road

names that have been reserved for use. If a

proposed name is a reserved road name then the

request will fail. There is no opportunity to

contest the decision.

• A maximum of ten names per application is

allowed; meaning separate applications are required

for larger subdivisions. It is not possible to conduct

cross-reference checks against other submissions

and therefore the process is open to error.

• The current system does not consider the spatial

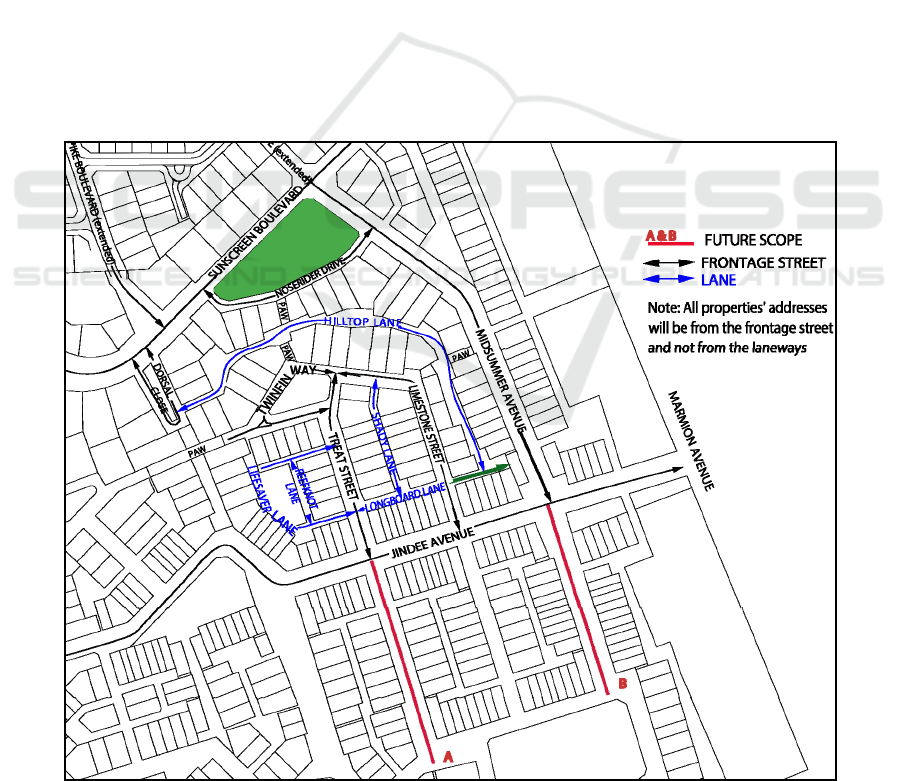

extent of roads. Figure 1 shows a schematic

submitted for road name approvals that does not

represent the actual proposed location of roads.

Roads do not actually meet up; they are stylized

with solid and dashed lines with arrows etc.

Manual editing and digitising is therefore

necessary to extract the full topology of the

proposed road network complete with

coordinates of junctions.

• The current system does not permit checks on

phonetics and this is an issue for similar

Figure 1: Hardcopy road network plan with road name

application.

Automating Government Spatial Transactions

159

sounding names (e.g., Bailey, Baylee, Bayley,

Baylea). Similar or ‘like’ names (e.g. Whyte and

White) are not allowable under policy guidelines

as they can cause confusion for applications such

as emergency services dispatch. Similarly, the

same road name or a similar sounding road name

is not permitted within close proximity.

• Where an extension to an existing road occurs or

where a road ‘type’ (e.g. cul-de-sac, highway)

changes, the current system is unable to return an

extension to a road name or change to road

suffix, respectively
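The missing phonetic check could be approximated with a standard phonetic key. The sketch below uses American Soundex, which is our assumption since the paper does not name an algorithm. Soundex maps Bailey, Baylee, Bayley and Baylea to the same code (B400), and likewise Whyte and White (W300), so a proposed name can be flagged when its key collides with an existing name. The helper `sound_alike` is hypothetical; a production system would probably need a phonetic scheme tuned to Australian English and Indigenous names.

```python
from typing import List

# American Soundex digit classes; vowels, H, W and Y have no code.
CODES = {c: d for d, letters in {
    "1": "BFPV", "2": "CGJKQSXZ", "3": "DT", "4": "L", "5": "MN", "6": "R",
}.items() for c in letters}


def soundex(name: str) -> str:
    """Return the four-character American Soundex key of a name."""
    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""
    first = letters[0]
    digits = []
    prev = CODES.get(first, "")
    for c in letters[1:]:
        code = CODES.get(c, "")
        if code and code != prev:
            digits.append(code)
        # H and W do not separate letters with the same code; vowels do.
        if c not in "HW":
            prev = code
    return (first + "".join(digits) + "000")[:4]


def sound_alike(proposed: str, existing: List[str]) -> List[str]:
    """Hypothetical helper: existing names sharing the proposed name's key."""
    key = soundex(proposed)
    return [n for n in existing if soundex(n) == key]


print(sound_alike("Baylee", ["Bailey", "Limestone", "Bayley"]))
```

Soundex is coarse (it only keeps the first letter and three consonant classes), so it will over-flag some names; that is arguably acceptable here because a flagged name would go to a human reviewer rather than being rejected outright.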

4 APPROACH

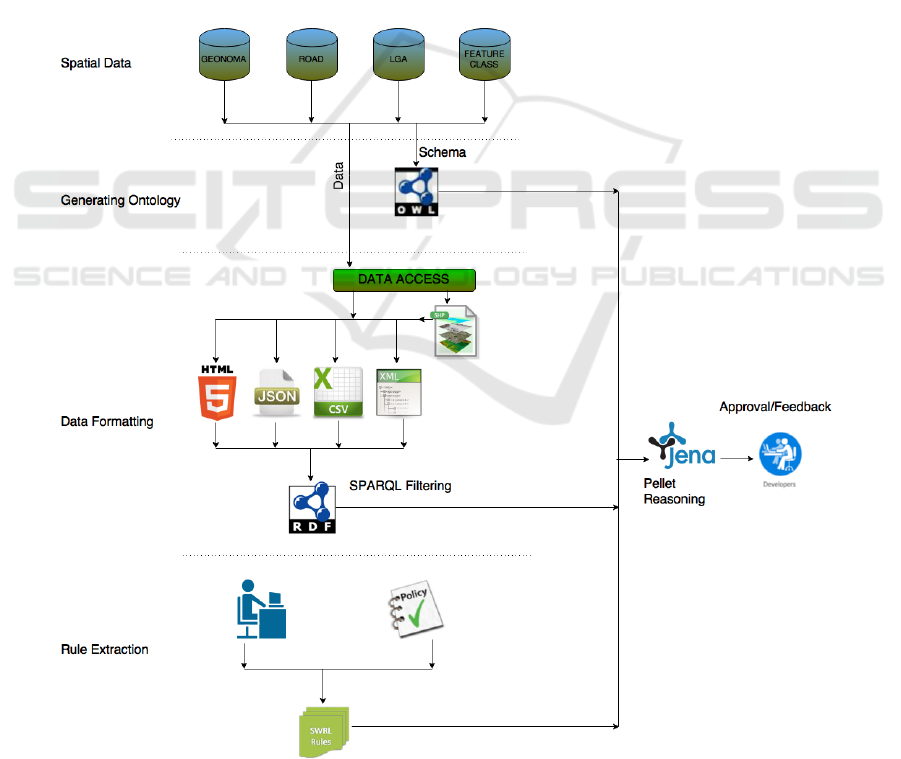

Figure 2 shows the different phases in the land

transaction process from knowledge acquisition to

final feedback. Data is extracted from the various

databases in formats such as html, json, csv and xml

and converted to RDF. Ontologies in OWL are

created from database schema and models in the

interactive GUI based Protégé environment. Rules

are generated in SWRL by an expert. Once the

system has been developed, the data, ontologies and

rules can be used in the runtime environment Jena

with a rule engine by a developer to process road

changes.

4.1 Knowledge Acquisition

Knowledge acquisition was used to extract, structure

and organise knowledge from policy documents,

data dictionaries and by interviewing subject matter

experts. This knowledge was then used to create the

road naming rules. The knowledge acquisition

process used the following sources:

Figure 2: Data integration/reasoning architecture.

GISTAM 2016 - 2nd International Conference on Geographical Information Systems Theory, Applications and Management

160

1 Rules sourced from policy standards:
• A road name cannot be used if it already exists within a 10km radius of the new road in city areas, or within 50km in rural areas.
• A road name may not be used more than 15 times in the State of Western Australia.

2 Rules sourced by interviewing subject matter experts:
• A name must not relate to a commercial business trading name or a non-profit organisation.
• A name must not sound like an existing name.
• A name with the suffix type ‘place’ or ‘close’ cannot be assigned to a road longer than a specified length (200m).
• A historical name, such as ANZAC, cannot be used.
• A name with road type ‘rise’ can only be used for roads that have elevation or are on an incline.
• Abbreviated names derived from the suburb name are not acceptable for new road names.

3 Rules sourced by accessing data dictionaries:
• Discriminatory or derogatory names are not allowed.
• A name in an original Australian Indigenous language will be considered for a new road name with reference to its origin.
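Several of the expert rules above are simple enough to express directly as predicates over a proposed road. The sketch below encodes the 200m limit for ‘place’ and ‘close’, the incline requirement for ‘rise’, the ban on historical names and the ban on abbreviations of the suburb name. The `ProposedRoad` fields, the `HISTORICAL_NAMES` set and the abbreviation heuristic (a shorter name that starts with the suburb's first letter and whose letters appear in order within the suburb name) are all our assumptions, not the rules as encoded by Landgate.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical reference data; real lists would come from policy documents.
HISTORICAL_NAMES = {"ANZAC"}
MAX_LENGTH_M = {"place": 200.0, "close": 200.0}


@dataclass
class ProposedRoad:
    name: str          # e.g. "Jindee"
    road_type: str     # e.g. "Avenue", "Close", "Rise"
    length_m: float
    has_incline: bool
    suburb: str        # suburb in which the road lies


def is_abbreviation_of(name: str, suburb: str) -> bool:
    """Heuristic: name is shorter than the suburb, shares its first letter,
    and its letters appear in order within the suburb name."""
    n, s = name.lower(), suburb.lower()
    if not n or len(n) >= len(s) or n[0] != s[0]:
        return False
    it = iter(s)
    return all(ch in it for ch in n)  # 'in' consumes the iterator: subsequence test


def expert_rule_violations(road: ProposedRoad) -> List[str]:
    """Return a description of every expert rule the proposal breaks."""
    problems = []
    rtype = road.road_type.lower()
    if rtype in MAX_LENGTH_M and road.length_m > MAX_LENGTH_M[rtype]:
        problems.append(f"'{road.road_type}' cannot exceed {MAX_LENGTH_M[rtype]:.0f}m")
    if rtype == "rise" and not road.has_incline:
        problems.append("'Rise' requires a road with elevation or an incline")
    if road.name.upper() in HISTORICAL_NAMES:
        problems.append("historical names cannot be used")
    if is_abbreviation_of(road.name, road.suburb):
        problems.append("abbreviation of the suburb name")
    return problems


print(expert_rule_violations(ProposedRoad("Jindee", "Avenue", 450.0, False, "Jindalee")))
```

With this heuristic, the Jindee Avenue proposal discussed below is flagged as an abbreviation of Jindalee. The rules about commercial names, derogatory names and Indigenous origins need external vocabularies and are not attempted here.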

With the current traditional naming process, satisfying the rules identified above is time consuming because of the back-and-forth process between developer and approver. As an example, from a process perspective, when a land developer or local authority requests a new road name within a development site, a spatial validation process is run to test whether the proposed name:
• is already in use in the local authority and, if so, whether it is within 10km of the new site; and
• has already been used 15 times across the State.
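The spatial validation step can be sketched with a great-circle distance test. The code below is a minimal illustration, assuming each existing road is represented by a single reference point (latitude, longitude) and that the urban/rural status of the new site is already known; it applies the 10km (city) or 50km (rural) radius and the 15-uses-per-State limit from the policy rules. The function names are hypothetical. Real road geometries are lines, so a production check would measure distances between geometries rather than points.

```python
import math
from typing import Iterable, List, Tuple

EARTH_RADIUS_KM = 6371.0
MAX_STATE_USES = 15


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def check_name(name: str, site: Tuple[float, float], rural: bool,
               existing: Iterable[Tuple[str, Tuple[float, float]]]) -> List[str]:
    """Return policy violations for a proposed name at a new site.

    existing: (road name, representative point) pairs for roads across the State.
    """
    radius = 50.0 if rural else 10.0
    same = [pt for n, pt in existing if n.lower() == name.lower()]
    problems = []
    if any(haversine_km(site, pt) <= radius for pt in same):
        problems.append(f"name already used within {radius:.0f}km")
    if len(same) >= MAX_STATE_USES:  # one more use would exceed the limit of 15
        problems.append(f"name already used {len(same)} times in the State")
    return problems


site = (-31.95, 115.86)  # an illustrative point near Perth
print(check_name("Bailey", site, False, [("Bailey", (-31.995, 115.86))]))
```

Counting all same-name roads in one pass lets both policy rules share the same lookup; in the ontology-based system described here, the equivalent data would come from GEONOMA rather than an in-memory list.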

In addition to policy rules, subject matter experts use broader contextual knowledge when determining whether a new road name is valid. For example, during the approval process experts check the scope of the proposed subdivision within the wider development site to avoid subsequent changes resulting from incorrect initial decisions.

Figure 3 presents a further example of where expert knowledge is required in the road naming process, from initial application to final approval. During the negotiation phase with the land developer, documents are transferred back and forth between both parties, each making changes to a paper plan as a means of communication. The following notes, written by Landgate to the developer, illustrate typical negotiations (see Figure 3):

• Jindee Avenue: The road type is suitable; however, the name Jindee is not. Apart from sounding similar to the suburb name, it is an abbreviated name derived from the suburb name and is not acceptable. A replacement name is required.
• Limestone Street and Twinfin Way: The street is continuous, so one street name can be used for this street.
• Noserider Drive: The name is suitable; however, the road type Drive is not. As this road is adjacent to a future open space (below, in this case), relevant types are Way, Vista or View, or, if it is shaped like a crescent, Crescent can be used.
• Longboard Lane: The name complies with policy; however, it is too long a word for that road. Also, a portion of the extent is part of Hilltop Lane (marked in green). A short name with its origin is required. Alternatively, the developer can hold the name Longboard for future use when a long road name is needed in the vicinity.
• Lifesaver Lane: The name is suitable; however, it appears that there will be a third entry off Twinfin Court. Clarification of this will be necessary, and an additional name for a portion (i.e. the northern east/west portion) will be needed.
• Midsummer Avenue and Treat Street: The extensions are suitable because there are possibilities for future development. The roads on the south side of Jindee Avenue (A & B) are currently unnamed as they are part of a later development stage.

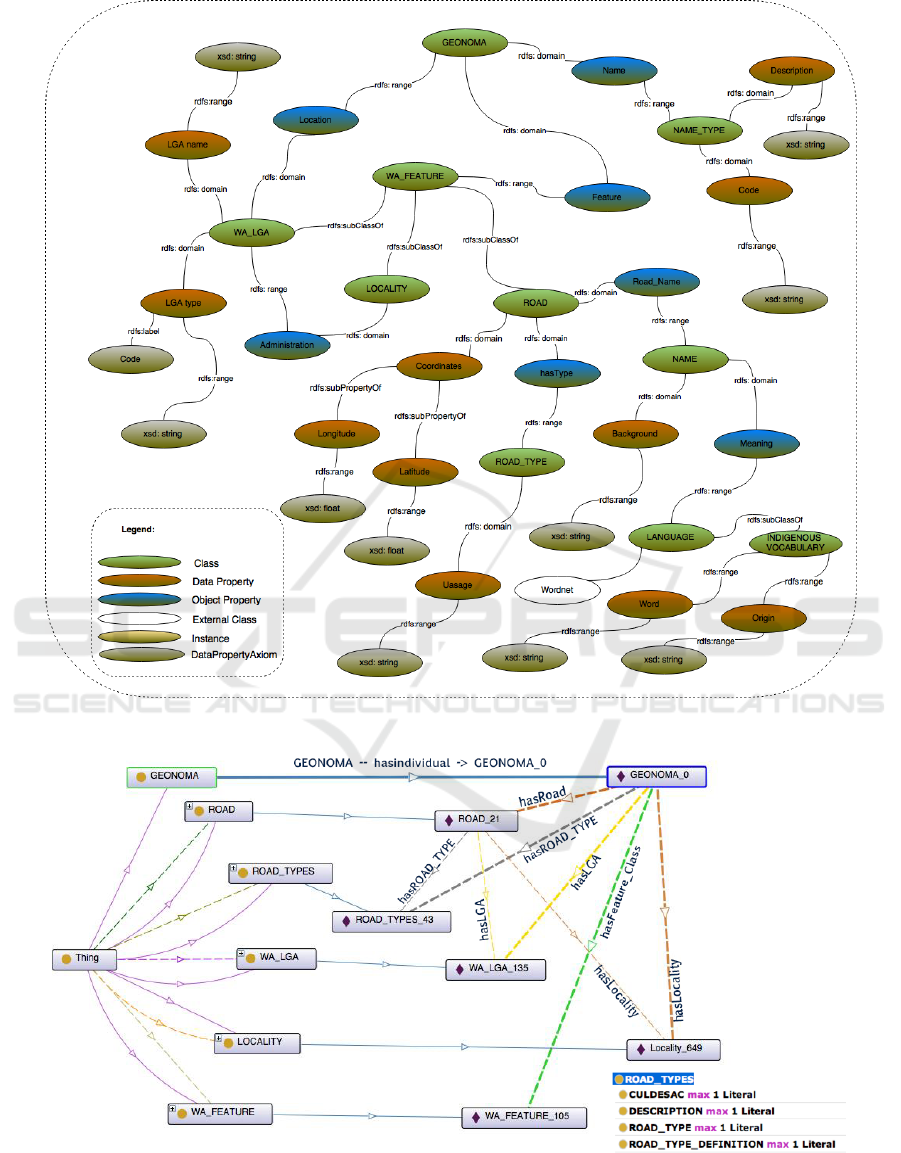

4.2 Ontology Development

Once the rules behind both policy standards and business processes are understood, the next step is to generate the ontology model from multiple sources of information. This ontology is developed as a global schema, which means that while it works with the Landgate GEONOMA database, it can also be used in conjunction with other databases that link the spatial extent of a road to the road naming process. Figure 4 presents an overview of the generated Geo_feature ontology, containing classes, data and object properties, and instances. Links show relationships such as domain, range and subClassOf. The ontological components are summarised below.


4.2.1 Geo_feature Ontology

The GEONOMA dataset is exported to XML and then imported into Protégé to help with the ontology generation process. Protégé was chosen because it is an open source tool with wide community support that supports ontology development and reasoning and, importantly, OWL DL, the description-logic-based sublanguage of the W3C OWL standard. The Geo_feature ontology consists of OWL classes, data and object properties, and individuals, and is expressed in OWL-2.

Each OWL class is associated with a set of individuals. Object properties link individuals of one class to individuals of other classes. Data properties link an individual to its data values. Value constraints and cardinality constraints are used to restrict the attributes of an individual. For example, each ROAD instance must have only one ROAD_TYPE through an object property link. Figure 5 shows the relationships between class instances. An example of a ROAD_TYPE instance is shown at bottom right. It has property restrictions handled by cardinality constraints: each instance must have information about its type, its description and whether it is a cul-de-sac or an open-ended road type. Typically, further work is required to create the full semantics in the ontology. All semantic relationships (links) between data components are needed because mapping directly from datasets is not adequate to explain the full model (Ghawi and Cullot, 2009). For example, every instance of ROAD, LGA and LOCALITY has a link with an instance of GEONOMA. Similarly, every ROAD has a link with an LGA and a LOCALITY. These links are inferred in Protégé by invoking the OWL-DL rule reasoner.
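A cardinality constraint such as "each ROAD has only one ROAD_TYPE" can be pictured as a count over property assertions, and an inferred link from a ROAD to its LGA as the chaining of two relations (in OWL 2 this would correspond to a property chain axiom). The sketch below shows both ideas outside OWL; the property names `hasRoadType`, `inLocality` and `localityInLGA` and the example individuals are hypothetical. Note that an OWL reasoner works under the open-world assumption, so a missing ROAD_TYPE would not by itself make an ontology inconsistent; the closed-world count below is closer to a data-validation check.

```python
from collections import Counter
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical assertions in the spirit of the Geo_feature ontology.
facts: Set[Triple] = {
    ("road/1", "type", "ROAD"),
    ("road/2", "type", "ROAD"),
    ("road/1", "hasRoadType", "Avenue"),
    ("road/2", "hasRoadType", "Drive"),
    ("road/2", "hasRoadType", "Way"),   # breaks "only one ROAD_TYPE"
    ("road/1", "inLocality", "Jindalee"),
    ("Jindalee", "localityInLGA", "Wanneroo"),
}


def cardinality_violations(facts: Set[Triple], cls: str, prop: str, exactly: int) -> List[str]:
    """Closed-world check: instances of cls must have exactly `exactly` values of prop."""
    members = {s for s, p, o in facts if p == "type" and o == cls}
    counts = Counter(s for s, p, _ in facts if p == prop and s in members)
    return sorted(m for m in members if counts[m] != exactly)


def infer_chain(facts: Set[Triple], first: str, second: str, result: str) -> Set[Triple]:
    """Property-chain style inference: (x first y) and (y second z) => (x result z)."""
    return {(x, result, z)
            for x, p1, y in facts if p1 == first
            for y2, p2, z in facts if p2 == second and y2 == y}


print(cardinality_violations(facts, "ROAD", "hasRoadType", 1))
print(infer_chain(facts, "inLocality", "localityInLGA", "inLGA"))
```

Deriving the LGA link from the locality link, rather than asserting it for every road, is the kind of reduction in manual specification that Section 4.3 attributes to the reasoner.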

4.2.2 Ontological Classifications and Spatial Relations

The resulting Geo_feature ontology represents the spatial relationships between several datasets, including the road network, local government authority boundaries, locality and language. These datasets combined are used in the road name approval process and checked for constraints. The spatial relationship distinction is mainly based on the source datasets. However, from a realistic viewpoint, these source datasets can only supply certain details relating to a feature name. To make the model more meaningful, additional vocabularies need to be added, such as the Australian Indigenous language dictionary and the WordNet ontology. By adding these, we can check the meaning of a name and whether or not it complies with the chosen road-naming theme. To process a road request, the road structure needs to be examined. By adding road coordinates, it is possible to check where the proposed road will actually be developed.

Figure 3: Road naming process in Jindalee, City of Wanneroo, Western Australia.

Figure 4: An overview of the Geo_feature ontology.

Figure 5: OntoGraf representation for classes and instances.

Figure 6: Source data in RDF format.

4.3 Rule Development

Figures 4 and 5 show several relations between spatial datasets, such as the link between road and locality. Many of these relationships are inferred automatically by the rule-based mechanism from constraints, axioms and links defined in the ontology, reducing the need to specify them manually for every instance. The Pellet reasoner is used to infer decisions from these rules in Protégé. More complex, nested conditions can be handled by Boolean operators in SWRL rules, which are executed by the rule engine (Powell, 2014).

4.4 Data Formatting/Conversion

Once the ontology and rules have been developed, the next stage is to access the source datasets in order to reason with the ontologies. To make this possible, the source datasets must be converted into RDF triple format. In this way all data are accessible in one common format and ready for initial reasoning (Broekstra et al., 2002). There are many data conversion and integration tools (Karma, MASTRO, OpenRefine and TripleGeo) that can be used for this conversion. MASTRO has been shown to be a successful Ontology-Based Data Access (OBDA) system through a series of demonstrations (Calvanese et al., 2011; Poggi et al., 2008; Savo et al., 2010; Rodriguez-Muro et al., 2008; Zhang et al., 2013). It can be accessed by means of a Protégé plugin: the facilities offered by Protégé can be used for ontology editing, and the functionality provided by the MASTRO plugin can be used to access external data sources. OpenRefine (http://openrefine.org/) is used to convert data to RDF format. Spatial information from a shapefile can be converted into RDF triples with TripleGeo (Patroumpas et al., 2014) (https://github.com/GeoKnow/TripleGeo). Figure 6 shows an RDF instance. With the data instances in RDF format, Apache Jena, with the help of Maven repositories, is used to link all the ontologies, instances and rules at runtime.
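Tools such as OpenRefine and TripleGeo perform this conversion in practice. As an illustration only, the sketch below turns rows of a CSV extract into N-Triples using the Python standard library. The column names (`feature_id`, `name`, `road_type`, `status`), the base namespace and the property URIs are hypothetical and do not reflect the actual GEONOMA schema; only the `rdf:type` URI is the standard one.

```python
import csv
import io
from typing import Iterator

BASE = "http://example.org/geonoma/"  # hypothetical namespace
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
PROPS = {"name": "roadName", "road_type": "roadType", "status": "status"}  # column -> property


def literal(value: str) -> str:
    """Escape a string as an N-Triples plain literal."""
    escaped = (value.replace("\\", "\\\\").replace('"', '\\"')
               .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"'


def rows_to_ntriples(csv_text: str) -> Iterator[str]:
    """Yield an rdf:type statement per row plus one statement per mapped column.

    Assumes feature_id values are already safe to embed in a URI.
    """
    for row in csv.DictReader(io.StringIO(csv_text)):
        subject = f"<{BASE}road/{row['feature_id']}>"
        yield f"{subject} <{RDF_TYPE}> <{BASE}Road> ."
        for column, prop in PROPS.items():
            if row.get(column):
                yield f"{subject} <{BASE}{prop}> {literal(row[column])} ."


sample = """feature_id,name,road_type,status
101,Midsummer,Avenue,Existing
102,Lifesaver,Lane,New
"""
for line in rows_to_ntriples(sample):
    print(line)
```

N-Triples was chosen for the sketch because it is one statement per line and needs no prefix handling; the resulting file can be loaded by Jena like any other RDF serialisation.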

5 PROCESS/OPERATION

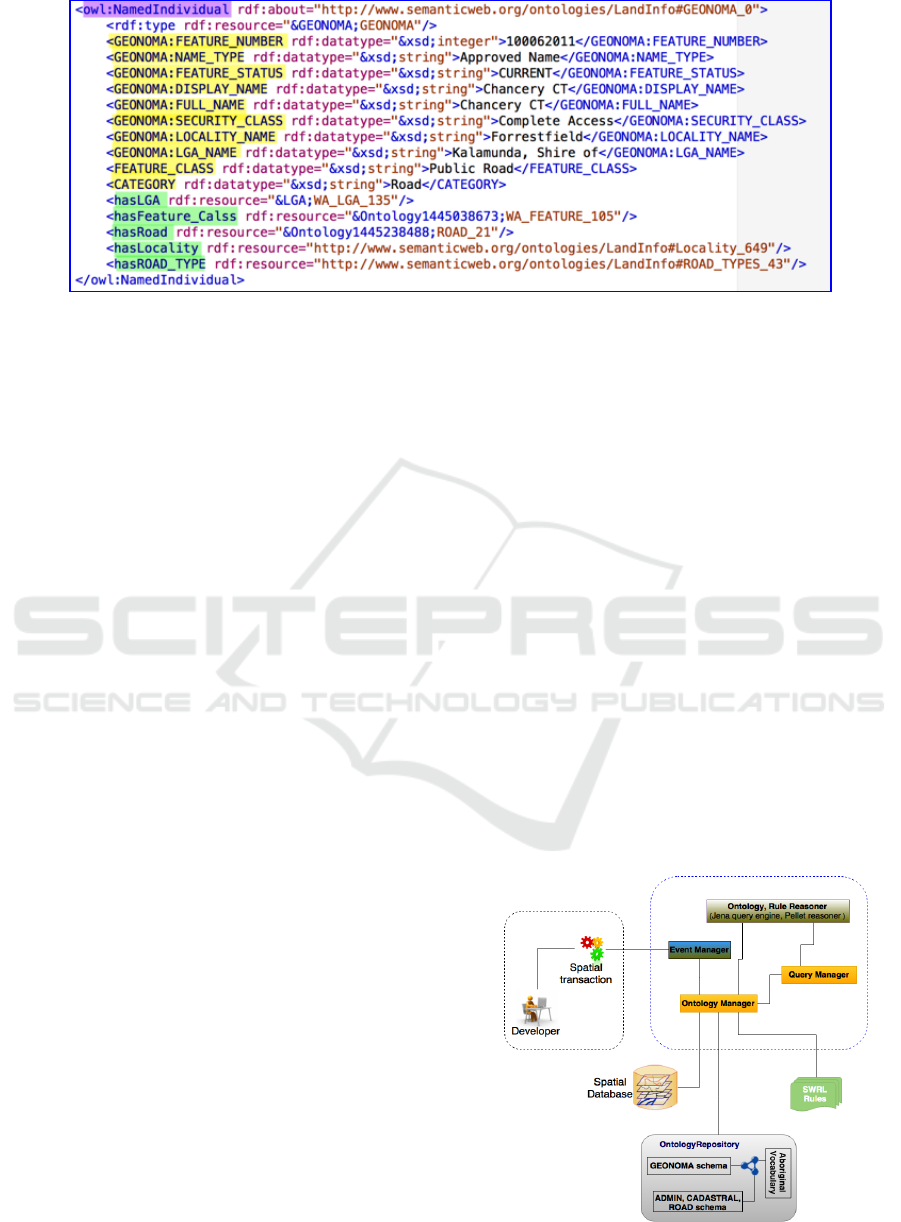

5.1 System Implementation

Figure 7 shows the runtime system architecture, which has been implemented using Jena in Java. The ontology repository consists of multiple ontologies derived from the data schema, data individuals and rules, as well as non-specific ontologies such as Aboriginal vocabularies. The event manager collects the land transaction information and supports the ontology manager in inferring the information relevant to that application. For example, if the application relates to a new subdivision, the event manager gathers the details spatially related to that land area; if the proposed road name relates to a road name change, it gathers the related naming information from the policy. The Ontology Manager collates the land information from the spatial database into the knowledge base.

Figure 7: System architecture.

Table 1: SWRL rules with the action of each of the rules.

R1: Relate a road link with an existing road, either directly or through another proposed road.
Road(?R1), Road(?Old), hasRoadLink(?R1, ?Old), status(?R1, "New"), status(?Old, "Existing"), notEqual(?R1, ?Old) -> isAllowed(?R1, true)
Road(?R1), Road(?R2), Road(?Old), hasRoadLink(?R1, ?R2), hasRoadLink(?R2, ?Old), notEqual(?R1, ?R2), notEqual(?Old, ?R2), status(?Old, "Existing"), status(?R1, "New"), status(?R2, "Approved") -> isAllowed(?R1, true)

R2: Check the road length against the road type.
RType(?T1), Road(?R1), Road_Type(?R1, ?T1), hasLength(?R1, ?L), lessThanOrEqual(?L, 200), sameAs(?T1, Close) -> isAllowed(?R1, true)

R3: Check the road access against the road type.
Road(?R1), hasRoadUse(?R1, "Openended"), Road(?Old1), Road(?Old2), hasRoadLinkS(?R1, ?Old1), hasRoadLinkE(?R1, ?Old2), status(?R1, "New"), status(?Old1, "Existing"), status(?Old2, "Existing"), notEqual(?R1, ?Old1) -> isAllowed(?R1, true)

R4: Check the road usage against the road link.
Road(?R1), hasRoadUse(?R1, "cul-de-sac"), Road(?Old1), Road(?Old2), hasRoadLinkS(?R1, ?Old1), hasRoadLinkE(?R1, ?Old2), status(?R1, "New"), status(?Old1, "Existing"), status(?Old2, "Existing"), notEqual(?R1, ?Old1) -> isAllowed(?R1, false)

R5: Check the roadway with a view.
RType(?T1), Road(?R1), Road_Type(?R1, ?T1), sameAs(?T1, Vista) -> isAllowed(?R1, true)

R6: Check the road name for a definite article.
Road(?R1), containsIgnoreCase(?R1, "The") -> isAllowed(?R1, false)

5.2 Reasoning

The initial stage of reasoning is carried out in Jena with the Pellet OWL reasoner, which checks the logical consistency of the model, processes the individuals (current, approved and proposed roads), infers new information including links and relationships, and updates the model with the inferred information. Through consistency checking, the system confirms whether or not any contradictory facts appear within the ontology. For example, consider the domain and range constraints on the feature relation GEONOMA Features: Feature_Class. These constraints mean that GEONOMA has features, each of which falls under only one of the Feature_Class categories. The reasoner will report relevant errors if any ontological inconsistency arises from the proposed roads, for example if an instance of GEONOMA is linked to an instance of ROAD but is missing a required property restriction relation.

Similarly, assigning an individual to two disjoint categories, such as LGA and Locality, will make the ontology inconsistent. Consider the case where every GEONOMA instance with the ROAD feature type must have at least two coordinates and a link to other road instances. This is declared as a necessary and sufficient condition for instances of the ROAD category in the OWL class description. When an individual in OWL satisfies such a condition, the reasoner automatically deduces that the individual is an instance of the specified category.
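The two reasoning behaviours described above, detecting an individual asserted into disjoint classes and classifying an individual that satisfies a class definition, can be mimicked in a few lines. This is a toy stand-in for what Pellet does, under the assumption that the ROAD definition is "at least two coordinates and a link to at least one other feature"; the dictionaries, identifiers and function names are hypothetical, and the check is closed-world, unlike OWL.

```python
from typing import Dict, List, Set

DISJOINT = [("LGA", "Locality")]  # pairs of classes that may not share members


def inconsistencies(types: Dict[str, Set[str]]) -> List[str]:
    """Report individuals asserted into both classes of a disjoint pair."""
    return sorted(ind for ind, cls in types.items()
                  for a, b in DISJOINT if a in cls and b in cls)


def classify_roads(features: Dict[str, dict], types: Dict[str, Set[str]]) -> None:
    """Add ROAD to any feature with >= 2 coordinates and a link to another known feature."""
    for fid, f in features.items():
        linked = [t for t in f.get("links", []) if t != fid and t in features]
        if len(f.get("coords", [])) >= 2 and linked:
            types.setdefault(fid, set()).add("ROAD")


types: Dict[str, Set[str]] = {"g1": {"LGA", "Locality"}, "g2": {"Locality"}}
features = {
    "f1": {"coords": [(-31.70, 115.70), (-31.71, 115.71)], "links": ["f2"]},
    "f2": {"coords": [(-31.72, 115.72)], "links": ["f1"]},  # only one coordinate
}
classify_roads(features, types)
print(inconsistencies(types), types.get("f1"))
```

A real OWL reasoner derives both results from the same class axioms; the sketch separates them into two functions only to make each behaviour visible.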

As well as the reasoning described above, additional reasoning is required to gather more information. Rules are expressed in terms of ontological vocabularies using SWRL. Table 1 shows some examples of implemented rules. As mentioned earlier, in each rule the antecedent refers to the body of the rule and the consequent refers to the head. The head and body each consist of a conjunction of one or more atoms. Atoms take the form C(?R) or P(?R, ?X), where C and P represent an OWL class description and a property, respectively. Variables representing individuals take the form ?R, where the variable name R is prefixed with a question mark. The rules in Table 1 serve the following purposes:

• Rule R1 automatically infers information from the road link between proposed and existing roads in the source dataset, with reference to road coordinates and feature ID. This rule is necessary because every road needs to link with at least one other road to allow access.
• Rule R2 checks road length against road type. Checking the road length for the shortest road types (‘Place’, ‘Close’ and ‘Lane’) is necessary to avoid confusion about the intended road usage.
• Rules R3 and R4 check the compatibility between road usage and road links. For example, an open-ended road must have a road link at both the start and end points of the road.
• Rule R5 checks whether or not the proposed road has a wide panoramic view across surrounding areas.
• Rule R6 prevents the definite article (‘The’) from being used in the road name.
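For readers without a SWRL engine to hand, the rules in Table 1 can be approximated procedurally. The sketch below re-expresses R1, R2, R3/R4 and R6 over a small in-memory road network; it is not the authors' Jena implementation. The `Road` fields, the road identifiers and the restriction of the 200m limit to Place and Close (the paper gives no threshold for Lane) are our assumptions. R5 is omitted because it depends on terrain information the sketch does not model, and R6 matches ‘The’ as a whole word rather than as a substring, which avoids flagging names such as Heather.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

SHORT_TYPES = {"place", "close"}  # 200m limit from the policy rules
SHORT_TYPE_MAX_M = 200.0


@dataclass
class Road:
    name: str
    road_type: str
    status: str                 # "New", "Approved" or "Existing"
    length_m: float = 0.0
    use: str = "Openended"      # or "cul-de-sac"
    links: List[str] = field(default_factory=list)  # names of linked roads
    start_link: Optional[str] = None
    end_link: Optional[str] = None


def r1_linked(road: Road, net: Dict[str, Road]) -> bool:
    """R1: linked to an Existing road directly, or via an Approved road."""
    for other in road.links:
        o = net.get(other)
        if o is None or o.name == road.name:
            continue
        if o.status == "Existing":
            return True
        if o.status == "Approved" and any(
                net[x].status == "Existing" for x in o.links if x in net and x != road.name):
            return True
    return False


def r2_length_ok(road: Road) -> bool:
    """R2: Place/Close roads must not exceed 200m."""
    return road.road_type.lower() not in SHORT_TYPES or road.length_m <= SHORT_TYPE_MAX_M


def r3_r4_access_ok(road: Road, net: Dict[str, Road]) -> bool:
    """R3/R4: an open-ended road needs Existing roads at both ends;
    a cul-de-sac must not connect to Existing roads at both ends."""
    both_ends = all(e is not None and e in net and net[e].status == "Existing"
                    for e in (road.start_link, road.end_link))
    return both_ends if road.use == "Openended" else not both_ends


def r6_no_article(road: Road) -> bool:
    """R6: the definite article 'The' may not appear as a word in the name."""
    return "the" not in road.name.lower().split()


def is_allowed(road: Road, net: Dict[str, Road]) -> bool:
    return (r1_linked(road, net) and r2_length_ok(road)
            and r3_r4_access_ok(road, net) and r6_no_article(road))


net = {r.name: r for r in [
    Road("E1", "Street", "Existing"),
    Road("E2", "Street", "Existing"),
    Road("P", "Way", "Approved", 300.0, "Openended", ["E2"], "E2", "E1"),
    Road("A", "Lane", "New", 150.0, "Openended", ["E1"], "E1", "E2"),
    Road("B", "Close", "New", 320.0, "cul-de-sac", ["A"], "A", None),
    Road("C", "Close", "New", 120.0, "cul-de-sac", ["P"], "P", None),
    Road("The Crest", "Rise", "New", 90.0, "Openended", ["E1"], "E1", "E2"),
]}
print({name: is_allowed(road, net) for name, road in net.items() if road.status == "New"})
```

Road B fails R1 (its only link is to another new road) as well as R2, while C passes R1 through the approved road P, mirroring the second R1 rule in Table 1.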

6 CONCLUSIONS

This paper proposes a Semantic Web solution for automating the decision-making process for spatially related transactions, such as approvals for new roads and road names. The method develops a Geo_feature ontology, which comprises knowledge of roads together with constraints, axioms and rules extracted from sources such as experts, policy, geometry and past decision documents. The method shows how ontologies can be manipulated with reasoning techniques to infer new information.

Semantic Web techniques were chosen because they allow the ontologies and rules to be published in RDF and made available to other application domains. For example, similar processing is envisaged for points of interest (bridges, parks) and the reconciliation of addresses.

This method has proven successful for processes that involve simple spatial queries, such as a request for road name approval. More rules and relationships with existing ontology elements are being developed as the datasets and business rules are examined further. Future work is also examining reasoning over other information that can aid the approval process. For example, an approver may use aerial photography to check for the presence of vegetation, as the removal of trees may need approval, and digital elevation maps to determine whether the proposed roads are viable.

ACKNOWLEDGEMENTS

The work has been supported by the Cooperative

Research Centre for Spatial Information, whose

activities are funded by the Australian

Commonwealth's Cooperative Research Centres

Programme. The authors extend their thanks to

Landgate for providing the example datasets for the

case study and subject matter experts for rule

formulation.

GISTAM 2016 - 2nd International Conference on Geographical Information Systems Theory, Applications and Management