2D Hair Strands Generation Based on Template Matching

Chao Sun, Fatemeh Cheraghchi and Won-Sook Lee

School of Electrical Engineering and Computer Science, University of Ottawa, 75 Laurier Avenue East, Ottawa, Canada

Keywords: Hair Strands Generation, Orientation Map, Template Matching, Spline Representation.

Abstract: Hair modelling is an important part of many applications in computer graphics. Since 2D hair strands represent the shape of the hair and the features of the hairstyle, generating 2D hair strands is an essential part of image-based hair modelling. In this paper, we present a novel algorithm that generates 2D hair strands based on a template matching method. The method first divides an input image of a real hairstyle into sub-images of a predefined size. For each sub-image, an orientation map is estimated using a Gabor filter, and the orientation feature is represented by the orientation histogram. The method then matches the orientation histogram of each sub-image against those of the template images in our database. Based on the matching results, the sub-images are replaced by the corresponding manual stroke images to give a clear representation of the 2D hair strands. The result is refined by connecting the strands between adjacent sub-images. Finally, based on the control points defined on the 2D hair strands, a spline representation is applied to obtain smooth hair strands. Experimental results indicate that our algorithm is feasible.

1 INTRODUCTION

Hair modelling is an important part of many computer graphics applications. For example, it can help create convincing virtual characters in computer games by giving them a distinct identity and personality through different hairstyles. Another example is the cosmetics industry, where 3D hair models with different hairstyles can be applied to customers' avatars to help them choose suitable hairstyles.

There are three major steps in hair modelling: styling, animation and rendering (Magnenat-Thalmann et al. 2000). Hairstyling is the process of modelling the shape and geometry of the hair; it defines the density, distribution and orientation of the hair strands. Hair animation deals with the dynamic motion of hair, including collision detection between hair strands as well as between hair and other objects (e.g. head and body). Hair rendering focuses on the visual presentation of hair on screen. However, characteristics of hair such as omnipresent occlusion, specular appearance and complex discontinuities make it very difficult to model (Ward et al. 2007).

Recent hair modelling research focuses on estimating and reconstructing 3D hair strands from multi-view 2D hair images (Paris et al. 2008; Luo et al. 2012, 2013a, 2013b). However, those systems usually need a complex configuration of digital cameras and light sources. Furthermore, they require a certain amount of user assistance, which makes the procedure time-consuming. In our proposed system, we use one digital single-lens reflex (DSLR) camera and one Kinect sensor to obtain both hair images and hair depth information of a real hairstyle from different viewing angles. Our system extracts 2D hair strands from the hair images. 2D hair strands are curves that represent the orientation and distribution of a hairstyle. Based on the 2D hair strands, we can obtain the corresponding 3D hair strands by projecting the 2D strands onto 3D point clouds. In this paper, we focus only on extracting 2D hair strands from hair images, which is the fundamental procedure in our system.

In our system, the geometry of 2D hair strands is estimated and a collection of hair strands is obtained. The hair strands reflect the shape of the hair as well as changes in the main orientations of the hairstyle. Compared with previous hair modelling systems, our system requires neither a complex capture configuration nor user assistance. Furthermore, we observe that the most significant information in a hair image is the orientation of the hair strands. However, instead of using the orientation of every pixel directly, we believe that the orientation of a local area is more representative. Thus we divide the input hair image into sub-images of a predefined size and use orientation histograms to represent the orientation feature of each sub-image. In addition, based on the orientation histograms, we apply a template matching method to obtain 2D hair strand information directly from our manual stroke image database. Finally, we use splines to represent those manual strokes in order to obtain smooth hair strands.

Sun C., Cheraghchi F. and Lee W.
2D Hair Strands Generation Based on Template Matching.
DOI: 10.5220/0005107102610266
In Proceedings of the 4th International Conference on Simulation and Modeling Methodologies, Technologies and Applications (SIMULTECH-2014), pages 261-266
ISBN: 978-989-758-038-3
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

2 RELATED WORKS

Existing hair capture systems use various methods to acquire the orientation field and geometry of hair. (Paris et al. 2004) used a hair image capture system with a fixed camera and a light source moving along a predefined trajectory. They estimated the hair orientation from the highlights in the hair images and used it to constrain the growth of hair strands. (Wei et al. 2005) introduced a technique based on a coarse visual hull reconstructed from multi-view images. They used the visual hull as the bounding geometry for hair growth constrained by orientation consistency. (Paris et al. 2008) presented an active acquisition system called Hair Photobooth, composed of several video cameras, light sources and projectors. It can capture accurate data on the appearance of the exterior hair strands. The hair model was generated from the scalp to the captured exterior hair layer under the constraints of the orientation field. (Jakob et al. 2009) proposed a system that captures individual hair strands using focal sweeps with a robot-controlled camera equipped with a macro lens. (Lay Herrera et al. 2012) performed hair capture using a thermal imaging device. (Beeler et al. 2012) used a high-resolution dense camera array to reconstruct facial hair strand geometry by matching distinctive strands. However, since this method relies on the strong contrast between skin colour and facial hair colour, it may not be suitable for general hair geometry estimation. (Chai et al. 2012) proposed a method to generate an approximate hair strand model from a single hair image with modest user interaction. (Chai et al. 2013) extended their system to create hair animations based on video input; the system can handle animations of relatively simple hairstyles. (Luo et al. 2013a) developed several multi-view hair modelling methods. One of them was a wide-baseline hair capture method using strand-based refinement, in which 8 cameras were used to capture the complete hair geometry. (Luo et al. 2013b) also proposed a structure-aware hair capture method that can model highly convoluted hairstyles.

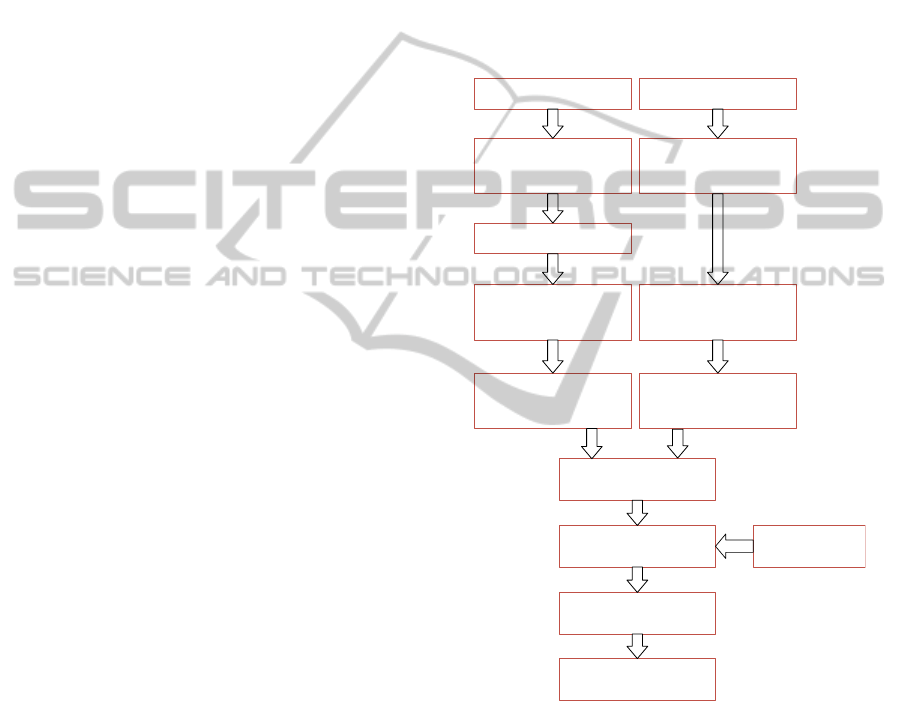

3 OVERVIEW

Given an input image of a real hairstyle, our goal is to obtain hair strands that reflect the important features of the hairstyle: the shape and orientation of the hair. 2D hair strand extraction is an essential step in 3D hair modelling. The procedure of our method is shown in Figure 1.

[Figure 1 flowchart. Input path: Input RGB hair image → Convert into HSV color space and keep the S channel info → Divide into sub-images → Orientation estimation based on Gabor filter → Histogram of orientation map. Template path: RGB template images → Convert into HSV color space and keep the S channel info → Orientation estimation based on Gabor filter → Histogram of orientation map. Both paths → Template matching → Replace sub-images with manual stroke images (from the manual stroke images) → Hair strands connection → Spline representation of hair strands.]

Figure 1: The procedure of our method.

We first convert the hair image from RGB to HSV colour space and use the S channel as the input for the following steps. We then divide the input hair image into sub-images of a predefined size and calculate the orientation map of each sub-image using a Gabor filter. The orientation feature of each sub-image is represented by its orientation histogram. Next, we match the orientation histogram of each sub-image against those of the template images in our database. Based on the matching results, we replace the sub-images with the corresponding manual stroke images to give a clear representation of the 2D hair strands. The result is refined by connecting the strands between sub-images. Finally, based on the control points defined on the hair strands, a spline representation is applied to obtain smooth hair strands.

In the rest of the paper, we describe our image template database in Section 4. In Section 5, we present our 2D hair strand generation algorithm in detail, together with experimental results and analysis. Conclusions and future work are given in Section 6.

4 HAIR TEMPLATES DATABASE

Our hair template database contains both RGB hair image templates and the corresponding manual stroke image templates.

There are 202 RGB hair image templates of size 50x50, covering orientations from 0 to 180 degrees, and 202 manual stroke image templates of the same size. In addition, we use splines to give a refined representation of the strokes in each manual stroke image. Each spline is defined by four points: a start point, two control points and an end point.
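To make the four-point description concrete, here is a minimal Python sketch that samples one stroke spline. The paper does not name the spline type; we assume a cubic Bézier curve, the standard curve defined by a start point, two control points and an end point. The function name `cubic_bezier` and the example coordinates are hypothetical.

```python
import numpy as np

def cubic_bezier(p0, p1, p2, p3, num=20):
    """Sample a cubic Bezier curve (assumed spline type) defined by a start
    point p0, two control points p1 and p2, and an end point p3."""
    pts = np.array([p0, p1, p2, p3], dtype=float)   # shape (4, 2)
    t = np.linspace(0.0, 1.0, num)[:, None]          # shape (num, 1)
    # Bernstein basis polynomials of degree 3, one column per point
    basis = np.hstack([(1 - t) ** 3,
                       3 * t * (1 - t) ** 2,
                       3 * t ** 2 * (1 - t),
                       t ** 3])                       # shape (num, 4)
    return basis @ pts                                # shape (num, 2)

# Hypothetical stroke in a 50x50 template: enters on the left, exits at the top.
curve = cubic_bezier((0, 40), (15, 35), (30, 20), (45, 0))
```

The curve passes through the start and end points and is only pulled towards the two control points, which is why four points are enough to describe a smooth stroke inside one template.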

As shown in Figure 2, the first row presents samples of the RGB hair image templates. The second row shows the corresponding manual stroke image templates, which were created manually from the RGB hair image templates. The third row is the spline representation of the corresponding manual stroke images.

Figure 2: RGB hair image templates, manual stroke image templates and spline representation of the manual stroke templates.

5 HAIR STRANDS GENERATION

5.1 Pre-Processing

The input images of our system are captured by digital cameras. Real hair comes in a variety of colours; however, the information we want to obtain from a hair image is the geometry of the hair strands. Thus we first convert the RGB input image into HSV colour space and use the S (saturation) channel, as shown in Figure 3. The S channel image is then divided into sub-images of size 50x50.
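The pre-processing step can be sketched in Python with NumPy as follows. This is an illustrative sketch, not the authors' code: `saturation_channel` and `split_into_blocks` are hypothetical names, the input is assumed to be an 8-bit or [0, 1] float RGB array, and partial blocks at the right and bottom borders are simply dropped (padding would be an equally valid choice).

```python
import numpy as np

def saturation_channel(rgb):
    """HSV saturation S = (max - min) / max of an RGB image of shape (H, W, 3).

    uint8 input is scaled to [0, 1]; pixels with max == 0 (pure black) get S = 0.
    """
    rgb = np.asarray(rgb)
    rgb = rgb.astype(float) / 255.0 if rgb.dtype == np.uint8 else rgb.astype(float)
    cmax = rgb.max(axis=2)
    cmin = rgb.min(axis=2)
    s = np.zeros_like(cmax)
    nonzero = cmax > 0
    s[nonzero] = (cmax[nonzero] - cmin[nonzero]) / cmax[nonzero]
    return s

def split_into_blocks(img, size=50):
    """Split a 2D image into non-overlapping size x size sub-images.

    Returns a dict mapping (block_row, block_col) -> sub-image. Rows and
    columns that do not fill a whole block are dropped (an assumption).
    """
    h, w = img.shape
    return {(r, c): img[r * size:(r + 1) * size, c * size:(c + 1) * size]
            for r in range(h // size) for c in range(w // size)}
```

Keeping the block coordinates as dictionary keys makes it easy to look up the 8 neighbours of a block later, when strokes are connected across sub-images.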

Figure 3: Input RGB hair image and S channel information image.

5.2 2D Orientation Estimation

Previous research (Paris et al. 2004) shows that oriented filters are well suited to estimating the local orientation of hair strands. Paris et al. employ different filters at multiple scales and determine the best score based on the variance of the filters. In our experiments, we filter the input image with a bank of oriented filters. An oriented filter kernel $K_\theta$ is designed to produce a high response for structures oriented along the direction $\theta$ when it is convolved with an image. In our method, we use the real part of a Gabor filter (Jain et al. 1991):

$$K_\theta(u, v) = \exp\left(-\frac{1}{2}\left(\frac{\tilde{u}^2}{\sigma_u^2} + \frac{\tilde{v}^2}{\sigma_v^2}\right)\right)\cos\left(\frac{2\pi\tilde{u}}{\lambda}\right) \qquad (1)$$

where $\tilde{u} = u\cos\theta + v\sin\theta$ and $\tilde{v} = -u\sin\theta + v\cos\theta$. The parameters of the Gabor kernel in our experiments are $\sigma_u = 1.8$, $\sigma_v = 2.4$ and $\lambda = 4$.
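Equation (1) can be sampled on a discrete grid as below. This sketch assumes that $u$ runs along image columns and $v$ along image rows, and that the kernel radius is 7 pixels (roughly $3\sigma_v$; the paper does not state the kernel size). The helper name `gabor_kernel` is hypothetical.

```python
import numpy as np

def gabor_kernel(theta, sigma_u=1.8, sigma_v=2.4, lam=4.0, half_size=7):
    """Real part of the Gabor kernel of Eq. (1); theta in radians.

    half_size (kernel radius in pixels) is an assumed value.
    """
    offsets = np.arange(-half_size, half_size + 1, dtype=float)
    v, u = np.meshgrid(offsets, offsets, indexing="ij")  # v: row offset, u: column offset
    u_rot = u * np.cos(theta) + v * np.sin(theta)        # u~ in Eq. (1)
    v_rot = -u * np.sin(theta) + v * np.cos(theta)       # v~ in Eq. (1)
    envelope = np.exp(-0.5 * (u_rot ** 2 / sigma_u ** 2 + v_rot ** 2 / sigma_v ** 2))
    return envelope * np.cos(2.0 * np.pi * u_rot / lam)

# The ten orientations used in the paper: 0, 18, ..., 162 degrees.
thetas = np.deg2rad(np.arange(0, 180, 18))
bank = [gabor_kernel(t) for t in thetas]
```

Because $\tilde{u}$ and $\tilde{v}$ both change sign under $(u, v) \to (-u, -v)$ and both factors of Eq. (1) are even, every kernel in the bank is point-symmetric, so convolution and correlation with it give the same result.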

The S channel image $I$ is convolved with the filter kernel $K_\theta$ for different values of $\theta$ (we use 10 orientations between 0 and 180 degrees, spaced 18 degrees apart). Let $F(x, y, \theta) = |(K_\theta * I)(x, y)|$ be the response of $K_\theta$ at pixel $(x, y)$; the orientation that produces the highest response, $\tilde{\theta}(x, y) = \arg\max_\theta F(x, y, \theta)$, is stored in the orientation map $\tilde{\theta}(x, y)$. A template image and its orientation map are shown in Figure 4.
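The orientation map computation can be sketched with NumPy alone. Assumptions: zero padding at the image borders, the kernel of Eq. (1) sampled with a radius of 7 pixels, and hypothetical helper names (`filter2d`, `orientation_map`). Note that with this parameterisation the cosine ripples of $K_\theta$ vary along $\tilde{u}$, so the strongest response comes from stripes running perpendicular to the $\tilde{u}$ axis; the map below stores $\theta$ itself, as described in the text.

```python
import numpy as np

def gabor_kernel(theta, sigma_u=1.8, sigma_v=2.4, lam=4.0, half_size=7):
    # Real part of the Gabor kernel of Eq. (1); half_size is an assumed radius.
    r = np.arange(-half_size, half_size + 1, dtype=float)
    v, u = np.meshgrid(r, r, indexing="ij")
    u_rot = u * np.cos(theta) + v * np.sin(theta)
    v_rot = -u * np.sin(theta) + v * np.cos(theta)
    env = np.exp(-0.5 * (u_rot ** 2 / sigma_u ** 2 + v_rot ** 2 / sigma_v ** 2))
    return env * np.cos(2.0 * np.pi * u_rot / lam)

def filter2d(img, kernel):
    """Same-size 2D filtering with zero padding (NumPy only).

    This computes a correlation; since K(-u, -v) = K(u, v) it equals
    the convolution K * I.
    """
    k = kernel.shape[0] // 2
    padded = np.pad(img, k)                       # zero padding
    out = np.zeros_like(img, dtype=float)
    for dy in range(-k, k + 1):
        for dx in range(-k, k + 1):
            out += kernel[dy + k, dx + k] * padded[k + dy:k + dy + img.shape[0],
                                                   k + dx:k + dx + img.shape[1]]
    return out

def orientation_map(s_img, num_orientations=10):
    """Per pixel, the theta (degrees) maximising F = |K_theta * I|."""
    thetas_deg = np.arange(num_orientations) * (180.0 / num_orientations)
    responses = np.stack([np.abs(filter2d(s_img, gabor_kernel(np.deg2rad(t))))
                          for t in thetas_deg])   # shape (n, H, W)
    return thetas_deg[np.argmax(responses, axis=0)]
```

For example, a 50x50 image of vertical stripes with a period of 4 pixels yields $\theta = 0$ at interior pixels lying on a stripe crest, and the same stripes rotated to horizontal yield $\theta = 90$ degrees.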


Figure 4: A 50x50 template image and its orientation map.

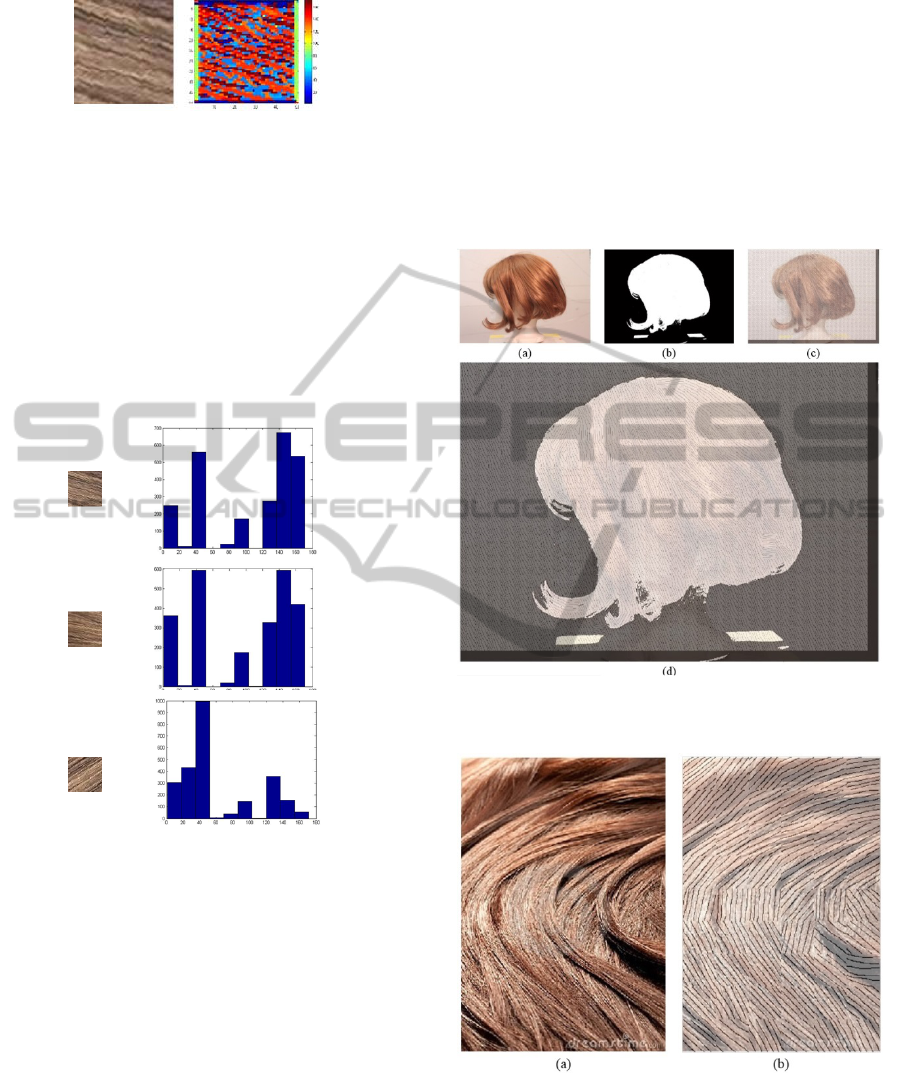

5.3 Template Matching

The template matching method in our system is based on the orientation information obtained from the 2D orientation estimation. As Figure 5 shows, templates in the image database with similar orientations have similar orientation histograms, while different orientations can easily be distinguished by their orientation histograms.

Figure 5: Template images and the histograms of their orientation maps.

We estimate the similarity between the orientation histogram of each input sub-image and the orientation histograms of the template images. The similarity between two orientation histograms is calculated using the following formula:

(2)

where

is orientation histogram of the

template images and 1,2,…,20,

is

the orientation histogram of the input sub-image.
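A sketch of the matching and replacement step follows. The code swaps in histogram intersection as the similarity measure; this is an assumption and may differ from Eq. (2). It also assumes one histogram bin per filter orientation (10 bins), although the text above indexes histograms with i = 1, 2, …, 20. All function names are hypothetical.

```python
import numpy as np

def orientation_histogram(omap, num_orientations=10):
    """Normalised histogram of an orientation map (values in degrees).

    Assumes one bin per filter orientation, i.e. values that are multiples
    of 180 / num_orientations; 180 degrees wraps around to bin 0.
    """
    step = 180.0 / num_orientations
    idx = np.rint(np.asarray(omap, dtype=float) / step).astype(int) % num_orientations
    hist = np.bincount(idx.ravel(), minlength=num_orientations).astype(float)
    return hist / hist.sum()

def histogram_intersection(h1, h2):
    """Similarity of two normalised histograms: 1 = identical, 0 = disjoint.
    Stand-in for Eq. (2); an assumption of this sketch."""
    return float(np.minimum(h1, h2).sum())

def best_template(sub_hist, template_hists):
    """Index of the template whose histogram is most similar to sub_hist."""
    scores = [histogram_intersection(sub_hist, t) for t in template_hists]
    return int(np.argmax(scores))

def assemble_strokes(sub_hists, template_hists, stroke_images, size=50):
    """Replace every sub-image, keyed by (block_row, block_col), with the manual
    stroke image of its best-matching template and tile the results."""
    rows = 1 + max(r for r, _ in sub_hists)
    cols = 1 + max(c for _, c in sub_hists)
    out = np.zeros((rows * size, cols * size))
    for (r, c), h in sub_hists.items():
        k = best_template(h, template_hists)
        out[r * size:(r + 1) * size, c * size:(c + 1) * size] = stroke_images[k]
    return out
```

In use, `template_hists` would hold the histograms of the 202 RGB templates and `stroke_images` the 202 corresponding manual stroke images, in the same order, so that the index of the best match selects the replacement stroke image directly.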

After obtaining the matching results, we replace the sub-images with the manual stroke images corresponding to the matched template images. A manual stroke image directly shows the 2D hair strand geometry. The experimental results are shown in Figure 6. Figure 6 (a) is the input RGB hair image. In order to obtain only the hair area, we first perform image binarization to get the hair area mask shown in Figure 6 (b). Figure 6 (c) is the 2D hair strand extraction result, and the 2D hair strand extraction within the hair area is shown in Figure 6 (d). Figure 7 shows the experimental result for relatively curly hair: Figure 7 (a) is the input hair image and (b) is the hair strand extraction result.

Figure 6: 2D hair strands extraction using manual stroke image templates on a real wig.

Figure 7: 2D hair strands extraction using manual stroke image templates on a curly hair image.


5.4 2D Hair Strand Connection and Spline Representation

For each manual stroke image block in Figure 6, there are several edge points (the start and end points of the splines). We calculate the Euclidean distance between each edge point of the current block and the edge points of the adjacent blocks in its 8-neighbourhood, and choose the pair of points with the minimum distance. We then compare this minimum distance with a predefined threshold. Based on the size of the manual stroke template images and the distribution of the manual strokes, we set the threshold to 5 pixels in our system. If the minimum distance is smaller than the threshold, the two points are connected and the two manual strokes are considered to belong to one hair strand. Otherwise, the two manual strokes do not belong to the same hair strand. Furthermore, we apply a line tracking algorithm to obtain relatively long hair strands. The procedure of the hair strand tracking algorithm is:

1. Start from point x as the current pixel;
2. Consider its eight neighbours (in a 3x3 window);
3. Find the neighbour pixel whose value is zero (black) and define this pixel as “N1”;
4. Change the current pixel to “N1”;
5. Repeat the same procedure for “N1” and the following pixels until the border of the hair is reached or no further connection is available.
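The connection rule and the tracking loop above can be sketched as follows. Assumptions: stroke end points are given per block in global image coordinates, the threshold of 5 is measured in pixels, strands are one pixel wide and drawn in black (0) on a non-zero background, and the tracker skips pixels it has already visited so that it cannot step backwards, a detail the step list leaves implicit. Function names are hypothetical.

```python
import numpy as np

def connect_endpoints(endpoints, threshold=5.0):
    """Link stroke end points across 8-neighbour blocks.

    endpoints maps (block_row, block_col) -> list of (x, y) end points in
    global image coordinates. For every end point, the nearest end point in
    the 8 neighbouring blocks is found; the pair is linked if their Euclidean
    distance is below threshold. Returns a sorted list of unique pairs
    ((block, index), (block, index)).
    """
    links = set()
    for (r, c), pts in endpoints.items():
        for i, p in enumerate(pts):
            best, best_d = None, np.inf
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nb = (r + dr, c + dc)
                    if nb == (r, c) or nb not in endpoints:
                        continue
                    for j, q in enumerate(endpoints[nb]):
                        d = np.hypot(p[0] - q[0], p[1] - q[1])
                        if d < best_d:
                            best, best_d = (nb, j), d
            if best is not None and best_d < threshold:
                links.add(tuple(sorted([((r, c), i), best])))
    return sorted(links)

def track_strand(img, start):
    """Follow a one-pixel-wide black (value 0) curve from start = (row, col).

    Each step scans the 3x3 neighbourhood for an unvisited black pixel inside
    the image; tracking stops when no such pixel exists. Returns the ordered
    list of pixels, whose length is the strand length.
    """
    h, w = img.shape
    path, visited, cur = [start], {start}, start
    while True:
        nxt = None
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                r, c = cur[0] + dr, cur[1] + dc
                if ((dr, dc) != (0, 0) and 0 <= r < h and 0 <= c < w
                        and img[r, c] == 0 and (r, c) not in visited):
                    nxt = (r, c)
                    break
            if nxt is not None:
                break
        if nxt is None:
            return path
        path.append(nxt)
        visited.add(nxt)
        cur = nxt
```

Tracking should start from a strand end point; starting in the middle of a strand would only follow it in one direction.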

By applying the hair strand tracking algorithm, we obtain the length of each hair strand as well as the coordinates of all its points. Knowing the length of each hair strand helps us to remove relatively short hair strands. In addition, we can use the coordinates of the hair strand points to generate smoother hair strands based on a spline representation. The 2D hair strand connection result and the refined results are shown in Figure 8. The image in the first row is the 2D hair strand connection result based on the spline representation. The second image shows details of our 2D hair strand connection result. The last image shows the refinement of the connection result based on our hair strand tracking algorithm and the spline representation; the yellow 2D hair strands are smoother than the previous ones.
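One way to turn a tracked strand into a smooth curve is a least-squares fit of a single cubic Bézier curve, consistent with the four-point splines of Section 4. The paper does not describe its fitting procedure, so the chord-length parameterisation, the minimum length of 10 pixels used to discard short strands, and the function names are all assumptions of this sketch.

```python
import numpy as np

def fit_cubic_bezier(points):
    """Least-squares cubic Bezier fit to an ordered list of (x, y) points.

    Uses chord-length parameterisation; needs at least 4 distinct points.
    Returns the 4 control points as an array of shape (4, 2).
    """
    pts = np.asarray(points, dtype=float)
    seg = np.linalg.norm(np.diff(pts, axis=0), axis=1)     # segment lengths
    t = (np.concatenate([[0.0], np.cumsum(seg)]) / seg.sum())[:, None]
    basis = np.hstack([(1 - t) ** 3, 3 * t * (1 - t) ** 2,
                       3 * t ** 2 * (1 - t), t ** 3])      # Bernstein basis
    ctrl, *_ = np.linalg.lstsq(basis, pts, rcond=None)
    return ctrl

def smooth_strands(strands, min_length=10):
    """Drop strands with fewer than min_length pixels (hypothetical threshold)
    and replace each remaining strand by its fitted control points."""
    return [fit_cubic_bezier(s) for s in strands if len(s) >= min_length]
```

A single cubic segment is enough for the short, gently curved strands produced by one or two templates; long or strongly curved strands would in practice need several segments joined end to end.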

Figure 8: 2D hair strand connection and refined results.

6 CONCLUSION AND FUTURE WORK

We present a 2D hair strand generation method based on template matching. The method requires neither a complex system configuration nor any user assistance. We focus on the orientation information of the hairstyle: instead of using the orientation of every pixel directly, we use the orientation histogram of a local area to represent the geometry of the hair strands. We simplify the hair strand extraction procedure by applying template matching based on the orientation histograms. We also apply a spline representation to obtain smooth hair strands. The experimental results show that our method is feasible.

In the future, we need to improve our template matching algorithm in order to obtain more accurate hair strand geometry. We also need to improve our hair strand connection and spline representation methods to generate smoother 2D hair strands. Based on the refined 2D hair strands, we can combine the depth information from the Kinect in our system to obtain a 3D model of the real hairstyle.

REFERENCES

Magnenat-Thalmann, N., Hadap, S., 2000. State of the art in hair simulation. In Proceedings of the International Workshop on Human Modeling and Animation, 3-9.

Ward, K., Bertails, F., Kim, T.-Y., Marschner, S. R., 2007. A survey on hair modelling: styling, simulation, and rendering. IEEE Transactions on Visualization and Computer Graphics, 13(2), 213-234.

Paris, S., Chang, W., Kozhushnyan, O. I., Jarosz, W., 2008. Hair photobooth: geometric and photometric acquisition of real hairstyles. ACM Trans. Graph. 27(3), 30:1-30:9.

Luo, L., Li, H., Paris, S., Weise, T., Pauly, M., Rusinkiewicz, S., 2012. Multi-view hair capture using orientation fields. In CVPR, 1490-1497.

Luo, L., Zhang, C., Zhang, Z., Rusinkiewicz, S., 2013a. Wide-baseline hair capture using strand-based refinement. In CVPR, 265-272.

Luo, L., Li, H., Rusinkiewicz, S., 2013b. Structure-aware hair capture. ACM Trans. Graph. 32(4), 76:1-76:12.

Paris, S., Briceno, H. M., Sillion, F. X., 2004. Capture of hair geometry from multiple images. ACM Trans. Graph. 23(3), 712-719.

Wei, Y., Ofek, E., Quan, L., Shum, H.-Y., 2005. Modelling hair from multiple views. ACM Trans. Graph. 24(3), 816-820.

Jakob, W., Moon, J. T., Marschner, S., 2009. Capturing hair assemblies fiber by fiber. ACM Trans. Graph. 21(3), 620-629.

Lay Herrera, T., Zinke, A., Weber, A., 2012. Lighting hair from the inside: a thermal approach to hair reconstruction. ACM Trans. Graph. 31(6), 146:1-146:9.

Beeler, T., Bickel, B., Noris, G., Marschner, S., Beardsley, P., Sumner, R. W., Gross, M., 2012. Coupled 3D reconstruction of sparse facial hair and skin. ACM Trans. Graph. 31, 117:1-117:10.

Chai, M., Wang, L., Weng, Y., Yu, Y., Guo, B., Zhou, K., 2012. Single-view hair modelling for portrait manipulation. ACM Trans. Graph. 31(4), 116:1-116:8.

Chai, M., Wang, L., Weng, Y., Jin, X., Zhou, K., 2013. Dynamic hair manipulation in images and videos. ACM Trans. Graph. 31(2), 75:1-75:8.

Jain, A. K., Farrokhnia, F., 1991. Unsupervised texture segmentation using Gabor filters. Pattern Recognition 24(12), 1167-1186.
