Avatar-based Macroeconomics

Experimental Insights into Artificial Agents Behavior

Gianfranco Giulioni¹, Edgardo Bucciarelli¹, Marcello Silvestri² and Paola D’Orazio¹

¹ Department of Philosophical, Pedagogical and Economic-Quantitative Sciences, “G. D’Annunzio” University, Viale Pindaro 42, 65127 Pescara, Italy

² Research Group for Experimental Microfoundations of Macroeconomics (GEMM), Pescara, Italy

Keywords:

Artificial Intelligence, Experimental Economics, Artificial Economy, Agent-based Computational Economics,

Multi-agent Systems.

Abstract:

In this paper we present a new methodological approach based on the interplay between Experimental Eco-

nomics and Agent-based Economics. Advances in the design and implementation of individual autonomous

economic agents are presented. The methodology is organized in three steps. The first step focuses on agents.

We use an inductive rather than a deductive approach: by means of the experimental method we observe

agents’ behaviors. The second step is the behavioral rules’ building process that allows us to study how to

estimate and structure artificial agents. In the third step, the set of previously induced behavioral rules is

used to build artificial agents, i.e. “molded” avatars, which operate in the “archetype” macroeconomic system.

The resulting Multi-agent system serves as the macroeconomic environment for our simulations and economic

policy analysis.

1 INTRODUCTION

A growing number of economists has been claiming

the inadequacy of the Dynamic Stochastic General

Equilibrium (DSGE) model which has been consid-

ered the standard macroeconomic tool over the past

few decades. In order to achieve an internally coher-

ent construction, the DSGE model traditionally relies

on the deductive approach through which individual

behaviors are obtained by sophisticated mathematical

models rooted in the axioms of the homo economicus,

i.e. the individual maximizing hypothesis, the rational

expectations hypothesis, the homogeneity hypothesis.

Although the deductive analysis provides an impor-

tant theoretical benchmark, DSGE models have been

questioned because of their microfoundations, i.e. the

microeconomic behavior of economic agents. DSGE

models assume economic agents to solve complex in-

tertemporal optimization problems: the whole model

is based on as if conjectures rather than empirical ev-

idence. This calls into question the reliability of the

predictions of the model which thus need to be empir-

ically tested and improved by using different model-

ing approaches.

According to some economists, the need for an-

alytical tractability that drives the microfoundation

process leads to models which are “more simple than

possible” (Colander et al., 2008). It is thus clear

that a macroeconomic model that is not “more simple

than possible” (e.g., a model in which many heteroge-

neous bounded rational agents interact) loses analyti-

cal tractability and requires alternative modeling tools

(Velupillai, 2011). The increase in computational

power, the development of useful programming lan-

guages (such as the object oriented languages) and the

striking progress of information and communication

technologies have allowed researchers to set up virtual

economies and track the results obtained by each arti-

ficial agent that operates in the system. These devel-

opments have given rise to a new research direction

based on a combination of economics and computer

science, namely the Agent-based Computational Eco-

nomics (ACE, Tesfatsion and Judd, 2006).

In this paper we present a research method based

on the interplay between Experimental Economics

and Agent-based Computational Economics and sug-

gest some advances in the design and implementa-

tion of individual autonomous economic agents. The

methodology is organized on three levels. On the first

level the focus is on agents. We start by following

the early steps made by Arthur (1991, 1993) and we

try to add possible improvements by adopting the Experimental Economics perspective. By means of the experimental method we observe agents’ behaviors. On the second level we study how to structure them (the behavioral rules’ building process) in order to populate the artificial economy. The goal of the third level is to build a Multi-agent system which serves as the macroeconomic environment for our simulations and economic policy analysis.

Giulioni G., Bucciarelli E., Silvestri M. and D’Orazio P.
Avatar-based Macroeconomics - Experimental Insights into Artificial Agents Behavior.
DOI: 10.5220/0004917902720277
In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 272-277
ISBN: 978-989-758-016-1
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

2 BEYOND THE DEDUCTIVE APPROACH

Macroeconomists have been working to provide more

reliable representations of real economic systems.

Here we discuss some points of the wider debate, which in turn allow us to assess the novelties of our paper.

It is widely agreed that Macroeconomics must be

microfounded (Lucas, 1976; Janssen, 2008). This es-

sentially means that:

i) an economist should have a model regulating the

behavior of agents that populate the macroeco-

nomic model, and;

ii) the macroeconomic outcome should be obtained

by using aggregation techniques that involve (at

least) the behavioral rule obtained from the indi-

vidual model.

There are several ways in which these steps can be

undertaken. The choice depends on the requirements

the researcher seeks to fulfill.

Concerning point i), economics traditionally uses

a deductive approach to build models that analyze in-

dividual behaviors. According to the deductive ap-

proach, the axioms of economic behavior are estab-

lished first; then agents are provided with objective

functions that satisfy the axioms. Finally, behaviors

are derived by maximizing objective functions given

the pertinent constraints. Concerning point ii), the

standard way to proceed is to let the macroeconomic outcome be a function whose sole input arguments are the individual behaviors and the number of agents in the economy (the aggregate outcome is obtained by multiplying the representative agent outcome

by the number of agents). These methods for handling

i) and ii) are important because they allow researchers

to obtain closed form analytic solutions, thus bringing

scientific “appeal” similar to that of the hard sciences

to the whole sequence.
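The aggregation shortcut described in point ii) can be sketched in a few lines. The function names, the two behavioral rules and the income values below are illustrative assumptions, not taken from the paper: the sketch only shows that multiplying a representative outcome by the number of agents coincides with summing individual outcomes when the rule is linear, and may differ when it is not.

```python
import math


def representative_aggregate(rule, representative_income, n_agents):
    """Aggregate = representative agent's outcome times the number of agents."""
    return n_agents * rule(representative_income)


def heterogeneous_aggregate(rule, incomes):
    """Aggregate = sum of each agent's own outcome."""
    return sum(rule(income) for income in incomes)


def linear_rule(income):
    # Hypothetical behavioral rule: consume a fixed share of income.
    return 0.75 * income


def concave_rule(income):
    # Hypothetical nonlinear rule, used only to show when the shortcut fails.
    return math.sqrt(income)


# Two groups of agents with different (assumed) incomes.
incomes = [64.0] * 500 + [144.0] * 500
mean_income = sum(incomes) / len(incomes)

# Gap between the representative-agent shortcut and the explicit sum.
linear_gap = (representative_aggregate(linear_rule, mean_income, len(incomes))
              - heterogeneous_aggregate(linear_rule, incomes))
concave_gap = (representative_aggregate(concave_rule, mean_income, len(incomes))
               - heterogeneous_aggregate(concave_rule, incomes))

print(linear_gap)        # no gap under the linear rule
print(concave_gap > 0)   # the shortcut overstates the aggregate here
```

In this toy setting the shortcut is harmless for the linear rule, while the concave rule already produces a discrepancy even without any interaction among agents.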

However, according to some economists, analytic tractability turns out to be a straitjacket because it requires oversimplified settings. Indeed, difficulties suddenly arise when more realistic elements, i.e.,

uncertainty and agents’ inability to manage large in-

formation sets, are included in the microeconomic

framework. This in turn implies that in a more re-

alistic setting, agents’ behaviors are characterized by

bounded rationality and the adoption of norms (Sar-

gent, 1993; Rubinstein, 1998; Akerlof, 2007, among

others, maintain that economics needs such ele-

ments).

Other difficulties arise in the aforementioned ag-

gregation process. Kirman (1989, 1992) for exam-

ple maintains that in order to obtain reliable macroe-

conomic results, the aggregate outcome should be

a function of the rules governing (heterogeneous)

agents’ behaviors and the structure of interactions

among them. Obtaining analytic tractability in a system of interacting elements is a hard task.¹

A growing number of economists acknowledge

ACE as the most promising solution for unlacing

the mathematical tractability strait-jacket. Artificial

economies are indeed a crucial tool in displaying dif-

ferent ways to understand what is going on in a de-

centralized economy (Vriend, 1994; Arifovic, 2000).

3 AGENT-BASED COMPUTATIONAL ECONOMICS: CRITICISM AND A PROPOSED WAY OUT

More than twenty years after its first implementation,

ACE is sometimes described as a promising field of

studies. The lack of commonly accepted microfoun-

dations represents the stumbling block of ACE mod-

els which have had difficulties in gaining general ac-

ceptance among economists.

In terms of microfoundations, the ACE approach

allows for modeling a large range of economic be-

haviors. This potentiality, however, is linked to the

“too many degrees of freedom” problem, i.e., the set

of implementable individual models increases enor-

mously so that the researcher can easily incur unsuit-

able choices. The debate over which are the best

means to overcome these problems remains one of

the key concerns of the ACE community (see Gürcan et al., 2013, for a recent contribution). Empirical validation, according to which the output of the model should display similarities with real world data, has been used to qualify ACE models since their appearance. One way to proceed consists in changing the assumed microeconomic behaviors until a good fit of empirical data is achieved, thus providing indirect microfoundations.² In mathematical jargon, proceeding in this way yields sufficient conditions: a candidate model generates realistic results, but the existence of other models that could provide a similar or a better fit cannot be excluded. It would also be very useful to have necessary conditions; one possibility in this direction is to use the available microeconomic data to set up the individual behavioral model. Although many Agent-based researchers have shown some interest in this direction, this methodology faces significant hurdles in the paucity and limited availability of historical and micro data (see Klügl, 2008; Werker and Brenner, 2004, among others).

¹ Analytic tractability can be obtained, for example, by using statistical mechanics tools (Aoki and Yoshikawa, 2007). However, the application of these tools does not provide a general solution to the problem. Their application to human economic behavior needs an adaptation which should be carefully evaluated because these techniques were originally developed to aggregate the behavior of particles.
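The point about sufficient conditions can be illustrated with a deliberately tiny sketch. Everything here is an assumption made for illustration: `simulate_aggregate` is a toy stand-in for a full ACE simulation, and the two micro parameters (`propensity`, `participation`), the grid and the target value are hypothetical. The sketch searches over assumed micro behaviors until the simulated aggregate matches the target, and shows that more than one candidate can fit equally well.

```python
from itertools import product


def simulate_aggregate(propensity, participation):
    """Toy stand-in for an ACE simulation: aggregate share of income spent."""
    return propensity * participation


def indirect_calibration(target, grid, tol=1e-9):
    """Return every candidate parameter pair whose output matches the target."""
    return [
        (a, b)
        for a, b in product(grid, repeat=2)
        if abs(simulate_aggregate(a, b) - target) <= tol
    ]


grid = [0.25, 0.5, 0.75, 1.0]      # assumed candidate values for both parameters
matches = indirect_calibration(target=0.375, grid=grid)
print(matches)  # [(0.5, 0.75), (0.75, 0.5)]
```

Two different micro specifications reproduce the same aggregate, so a good macro fit alone does not identify the underlying individual behavior.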

In this paper we maintain that the aforementioned

problems can be sidestepped by using Experimental

Economics.

In our research we will refer in particular to nomothetic experiments,³ which can provide insights into individuals’ behaviors. Indeed, information collected from experimental microsystems offers richer insights into the individual and collective dynamics of a model; it is thus different from that obtained from empirical data. Accordingly, we argue that experimental data could represent a solution to the problem of availability of micro data.

In our opinion, collecting microeconomic data through experimentation opens up the possibility of performing the estimation rather than the calibration⁴ of individual behavioral models to be implemented in simulations. In other words, by means of this approach, both necessary and sufficient conditions are satisfied and the reliability of ACE models would be significantly increased.
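As a hedged illustration of estimating, rather than calibrating, an individual behavioral model, the sketch below fits one parameter per subject by least squares. The adaptive forecasting rule, the function names and the data are assumptions: the paper does not specify a rule, and the "experimental" series here are synthetic, generated from known gains so that the recovery of the parameter can be checked.

```python
def adaptive_forecasts(prices, gain, initial_forecast):
    """Generate forecasts from f[t] = f[t-1] + gain * (p[t-1] - f[t-1])."""
    forecasts = [initial_forecast]
    for price in prices[:-1]:
        last = forecasts[-1]
        forecasts.append(last + gain * (price - last))
    return forecasts


def estimate_adaptive_gain(prices, forecasts):
    """Least-squares (through the origin) estimate of the gain of one subject."""
    errors = [p - f for p, f in zip(prices[:-1], forecasts[:-1])]
    revisions = [f1 - f0 for f0, f1 in zip(forecasts[:-1], forecasts[1:])]
    denom = sum(e * e for e in errors)
    if denom == 0:
        raise ValueError("no forecast errors: the gain is not identified")
    return sum(e * r for e, r in zip(errors, revisions)) / denom


# Synthetic stand-in for laboratory data: one price series seen by all subjects.
prices = [10.0, 12.0, 11.0, 13.0, 12.0]
true_gains = {"subject_A": 0.5, "subject_B": 0.25}   # hypothetical subjects

estimated = {
    name: estimate_adaptive_gain(prices, adaptive_forecasts(prices, gain, 8.0))
    for name, gain in true_gains.items()
}
print(estimated)
```

Each subject ends up with an individually estimated parameter, which is the kind of heterogeneity that subject-level experimental data make available.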

² Windrum et al. (2007) refer to this practice as indirect calibration.

³ Nomo-theoretical experiments aim to establish laws of behavior through testing theory.

⁴ The seminal paper by Hansen and Heckman (1996) offers an interesting perspective on model calibration and estimation methodologies. It also discusses the related empirical “hidden dangers.”

4 EXPERIMENTAL ECONOMICS: SOME METHODOLOGICAL NOTES

The experimental method is gaining acceptance among economists as a valuable and important tool for analyzing and designing economic systems. One of the main strengths of the experimental method is that the experimenter can iteratively add and remove features of the real (i.e., field) environment and thus study the impact of that manipulation on subjects’ behaviors.

Nevertheless, the complexity of economic sys-

tems may prevent experimenters - as well as theo-

rists - from correctly identifying the determinants of

a particular decision-making situation or market un-

der scrutiny. According to some economists, the ex-

tremely simplified environment recreated in a labo-

ratory questions the realism of experiments: exper-

imenters are indeed forced to make simplifying as-

sumptions about an economic problem in order to

derive tractable solutions. Yet, such criticism of the falsificationist power of experiments pervades all experimental (and observational) sciences: this is often referred to as the Duhem-Quine (D-Q) problem (as explained in Hertwig and Ortmann, 2001).⁵ Further clarification about the experimental method is also

needed. Falsification tout court is not the main goal

of experiments: they are aimed at setting the stage for

better theory and a better understanding of the phe-

nomena. When experimental results show that a the-

ory works poorly, experimenters engage in a process

of revising their procedures before abandoning the theory

because of one or many falsifying observations. They

reexamine instructions for lack of clarity, increase the

experience level of subjects, try increased payoffs,

and explore sources of errors in an attempt to find the

limits of the “falsifying process”.

Besides the criticism about the way experiments are conducted and more specifically about the philosophy of science that guides the experimental method, there is also criticism of the internal validity⁶ and external validity⁷ of experiments. The main concerns are, ceteris paribus, the comparative sophistication of subjects inside and outside the laboratory, the adequacy of rewards (i.e., payoffs), and the framing of instructions.

⁵ The D-Q problem reminds scientists that testing a theory depends crucially on the methodological decisions researchers make in designing and implementing the test itself, and of the impossibility of testing a scientific theory in isolation, given that every empirical test requires assumptions about auxiliary aspects that cannot be controlled by researchers. According to the skeptics about the use of experimentation in Economics, the D-Q problem implies that a failure of a theory in the laboratory can always be attributed to (unobserved) auxiliary hypotheses and that the majority of experiments could not survive such a strong test of external validity. Nevertheless, according to Vernon Smith the D-Q problem “is not a barrier to resolving ambiguity in interpreting test results” (Smith, 2002, p. 106); it is rather a stimulus to improve the confrontation of theory with empirical evidence. He argues that if experimenters have a confounding problem with auxiliary hypotheses, they run new experiments to test them, and that auxiliary hypotheses linked with key issues involving the state of the agent (namely, motivation and experience-learning) must be incorporated into the theory, so that it encompasses real features of agents’ behaviors.

Regarding the first point, the large majority of experiments have been undertaken with students; this usually raises criticism about the external validity of results gathered in experiments (see Levitt and List, 2007; Al-Ubaydli and List, 2012, for a broader view and debate on field vs lab results’ validity). Many experimental works have addressed this issue and found that students generally behave similarly to subjects with professional experience, while demographic and gender effects are statistically significant in the comparison across groups (Guillen and F.Veszteg, 2006). Fréchette (2009) reports an interesting survey of 13 experimental works in which both students and professionals took part in different subject pools. Although there are situations in which focusing on students is too narrow, he found no overwhelming evidence that conclusions reached by using the standard experimental subject pool cannot generalize to professionals. In a recent paper (Giulioni et al., 2013), we investigate the speculative behavior of entrepreneurs experimentally. We considered three different subject pools, i.e., university students, real life entrepreneurs and high school students, and asked them to take part in an experiment on individual decision-making with a special focus on learning to forecast (the experimental setting was the same across subject pools). Our comparative results show that there are no relevant differences in the performances and the learning process of the three subject pools.

Other common reservations are about the salience of the reward human subjects receive at the end of experiments, namely the induced value theory (Smith, 1976). Since results are usually based on experiments with relatively small rewards that are at odds with the high stakes of real world decisions, many are concerned about the conclusions experimenters draw on real world choices or markets. Several researchers have replicated well-known experiments using rewards larger than the starting values and found no relevant differences when using higher stakes (Smith and Walker, 1993; Bordalo et al., 2012).

⁶ Internal validity defines the extent to which the environment that generated the data corresponds to the model being tested.

⁷ External validity defines the ability of the causal relation observed in the experiment to generalize over subjects and environments. As noted by Fréchette, “[e]xternal validity does not have to be about generalizing from the subjects or environment in the experiment to subjects and environment outside the laboratory. It can also be about variations in subjects and environments within the experiment (for instance, does the result apply to both men and women in the experiment?)” (Fréchette, 2009).

Moreover, in order to check for robustness of ex-

perimental findings, researchers can replicate the ex-

periment and conduct new analyses (Hunter, 2001;

Maniadis et al., 2013). Replication, comparability of

results and incremental variations (of setting, instruc-

tions, subject pools, etc.) of the experiment are indeed

very important to assess the reliability of results (De-

wald et al., 1986).

5 EXPERIMENTALLY MICROFOUNDED AGENT-BASED MACROECONOMICS

There is a large literature that has focused on par-

allel experiments with real (human) and computa-

tional agents (see, among others, Arifovic (1996);

Duffy (2001); Miller (2008) and Chen (2012) for

a broader comparative perspective on the design of

agents in Agent Based Models). We believe, however,

that combining experimental and computational eco-

nomics will open new and interesting opportunities

for research in macroeconomic issues. One of the first economists to call attention to the complementarity of experimental economics and ACE was John Duffy, who argued that bottom-up, boundedly rational, inductive models of behavior provide a better fit for experimental and field data (Duffy, 2006).

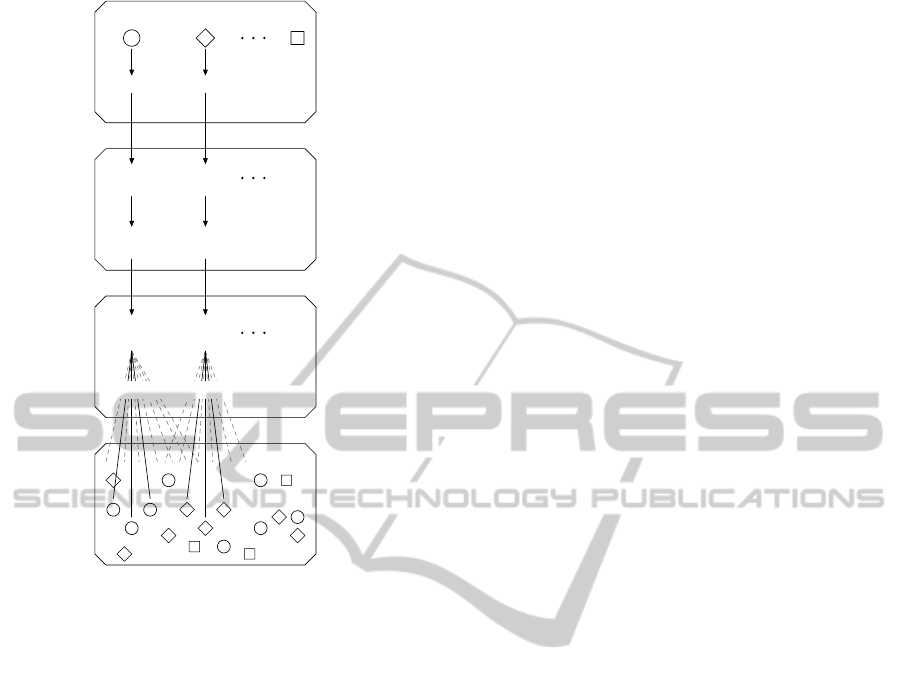

Figure 1 will help in describing our proposed

methodology.

The first phase is identified by the block labeled

experimental level, which consists of gathering data

through laboratory experiments. In the following mi-

crofoundation level phase, a deep data mining process

is performed in order to identify the rules used by

experimental agents. Neural networks, evolutionary

algorithms, heuristics and global optimization tech-

niques are used to identify recurrent patterns of ex-

perimental data gathered from each subject. By ex-

amining these patterns, the researcher can formulate

dynamic behavioral rules that also take into account

the existing theoretical insights concerning the deci-

sion at hand. Finally, the parameters of the iden-

tified behavioral rules are set by using standard or

innovative computationally-intensive techniques. In

the final stage, the induced rules are implemented in the code of artificial agents (see the artificial agents box in Figure 1). The heterogeneous molded avatars created in this way are used to build an agent-based model, which consequently allows the experimenter to increase the number of agents to a level that makes comparisons with real macroeconomic data acceptable. This final step is represented in the artificial economy box of Figure 1.

[Figure 1: Graphical representation of the proposed approach. Blocks, in order: experimental level (subjects); microfoundation level (data, data mining, rules and parameters); artificial agents (class for artificial agent); artificial economy (instances); then aggregate outcome and policy analysis.]
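The last two blocks of the approach (a class for each artificial agent, then many instances forming the artificial economy) could be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the `Avatar` class, the adaptive rule it carries, the estimated gains and the market closure (the next price equals the average forecast) are all hypothetical choices made to keep the example small.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Avatar:
    """Artificial agent carrying one subject's estimated rule parameter."""
    gain: float
    forecast: float

    def step(self, observed_price):
        # Hypothetical induced rule: adaptive revision of the forecast.
        self.forecast += self.gain * (observed_price - self.forecast)
        return self.forecast


def build_economy(estimated_gains, copies_per_subject, initial_forecast):
    """Scale up: several instances of each subject-specific avatar."""
    return [
        Avatar(gain, initial_forecast)
        for gain in estimated_gains
        for _ in range(copies_per_subject)
    ]


def run(economy, initial_price, periods):
    """Toy closure (assumption): the next price is the average forecast."""
    price, path = initial_price, []
    for _ in range(periods):
        price = mean([agent.step(price) for agent in economy])
        path.append(price)
    return path


# Gains as they might come out of the microfoundation level (assumed values).
economy = build_economy([0.25, 0.5], copies_per_subject=500, initial_forecast=8.0)
path = run(economy, initial_price=12.0, periods=5)
print(len(economy), path)
```

The number of instances per avatar is a free choice of the modeler, which is what allows the artificial economy to be scaled to a size comparable with macroeconomic data.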

Our ongoing research on the macroeconomic effects of entrepreneurs’ financial behavior shows that

the proposed methodology is effective.

6 CONCLUSIONS

In the proposed approach, experimental and compu-

tational economics cooperate with and complement

each other. In particular, we argue that macroeco-

nomic analyses will benefit from the synergy between

the two approaches. Moreover, by adopting the pre-

sented methodology, computational modeling tech-

niques (e.g., data mining and statistical techniques)

will improve their function in economic analysis. In-

deed, the novelty of our approach resides in the ex-

tremely accurate and demanding work at the micro-

scopic level. As highlighted above, microeconomic

data are often missing or yield ambiguous results,

which causes difficulties in modeling agents’ behav-

iors. Although the proposed approach is highly de-

manding, we claim it provides appropriate guidelines

for molding heterogeneous agents in that it allows the

construction of software agents which represent hu-

man experimental subjects (i.e. avatars). The out-

come of an experimentally-microfounded macroeco-

nomic agent-based model is meant to undergo both

the micro and the macro empirical validation process,

which will ask the researcher to return to the labora-

tory to repeat the microfoundation phase in case of

ambiguous validation results. This process makes the

resulting model particularly reliable. In this sense,

we maintain that the combined use of experiments,

data mining and Agent-based techniques is particularly useful for economic policy analysis.

REFERENCES

Akerlof, G. A. (2007). The Missing Motivation in Macroe-

conomics. American Economic Review, 97(1):5–36.

Al-Ubaydli, O. and List, J. A. (2012). On the generaliz-

ability of experimental results in economics. Working

Paper 17957, National Bureau of Economic Research.

Aoki, M. and Yoshikawa, H. (2007). Reconstruct-

ing Macroeconomics: A Perspective from Statisti-

cal Physics and Combinatorial Stochastic Processes.

Cambridge University Press, Cambridge.

Arifovic, J. (1996). The behavior of the exchange rate in the

genetic algorithm and experimental economies. Jour-

nal of Political Economy, 104(3):510–41.

Arifovic, J. (2000). Evolutionary algorithms in macroe-

conomic models. Macroeconomic Dynamics, 4:373–

414.

Arthur, W. B. (1991). Designing Economic Agents that

Act like Human Agents: A Behavioral Approach to

Bounded Rationality. American Economic Review Pa-

pers and Proceedings, 81(2):353–359.

Arthur, W. B. (1993). On designing economic agents that

behave like human agents. Journal of Evolutionary

Economics, 3:1–22.

Bordalo, P., Gennaioli, N., and Shleifer, A. (2012). Salience

in experimental tests of the endowment effect. Work-

ing Paper 17761, National Bureau of Economic Re-

search.

Chen, S.-H. (2012). Varieties of agents in agent-based com-

putational economics: A historical and an interdisci-

plinary perspective. Journal of Economic Dynamics

and Control, 36(1):1–25.

Colander, D., Howitt, P., Kirman, A., Leijonhufvud, A., and Mehrling, P. (2008). Beyond DSGE Models: Toward an Empirically Based Macroeconomics. American Economic Review, 98(2):236–40.

Dewald, W. G., Thursby, J. G., and Anderson, R. G. (1986).

Replication in empirical economics: The journal of

money, credit and banking project. American Eco-

nomic Review, 76(4):587–603.

Duffy, J. (2001). Learning to speculate: Experiments with

artificial and real agents. Journal of Economic Dy-

namics and Control, 25(3-4):295–319.

Duffy, J. (2006). Agent-based models and human subject

experiments. In Tesfatsion, L. and Judd, K. L., editors,

Handbook of Computational Economics, volume 2 of

Handbook of Computational Economics, chapter 19,

pages 949–1011. Elsevier.

Fréchette, G. R. (2009). Laboratory experiments: Professionals versus students. The Methods of Modern Experimental Economics. Oxford University Press, forthcoming.

Giulioni, G., Bucciarelli, E., Silvestri, M., and D’Orazio,

P. (2013). The educational relevance of experi-

mental economics: a web application for learning

entrepreneurship in high schools, universities and

workplaces. In International Journal of Technology

Enhanced Learning, volume forthcoming. Springer-

Verlag.

Guillen, P. and F.Veszteg, R. (2006). Subject pool bias in

economics experiments. ThE Papers 06/03, Depart-

ment of Economic Theory and Economic History of

the University of Granada.

Gürcan, O., Dikenelli, O., and Bernon, C. (2013). A generic testing framework for agent-based simulation models. Journal of Simulation, pages 1–19.

Hansen, L. P. and Heckman, J. J. (1996). The empirical

foundations of calibration. Journal of Economic Per-

spectives, 10(1):87–104.

Hertwig, R. and Ortmann, A. (2001). Experimental prac-

tices in economics: A methodological challenge for

psychologists? Behavioral and Brain Sciences,

24:383–403.

Hunter, J. E. (2001). The desperate need for replications.

Journal of Consumer Research, 28(1):149–58.

Janssen, M. C. (2008). Microfoundations. In Durlauf, S. N.

and Blume, L. E., editors, The New Palgrave Dictio-

nary of Economics. Palgrave Macmillan, Basingstoke.

Kirman, A. (1989). The Intrinsic Limits of Modern Economic Theory: The Emperor has No Clothes. The Economic Journal, 99(395):126–139.

Kirman, A. P. (1992). Whom or What Does The Representative Individual Represent? Journal of Economic Perspectives, 6:117–36.

Klügl, F. (2008). A validation methodology for agent-based simulations. In Proceedings of the 2008 ACM symposium on Applied computing, SAC ’08, pages 39–43, New York, NY, USA. ACM.

Levitt, S. and List, J. (2007). What do laboratory experi-

ments tell us about the real world? Journal of Eco-

nomic Perspectives, 21(2):153–174.

Lucas, R. E. (1976). Econometric policy evaluation: A critique. Carnegie-Rochester Conference Series on Public Policy, 1(1):19–46.

Maniadis, Z., Tufano, F., and List, J. A. (2013). One swallow doesn’t make a summer: New evidence on anchoring effects. Discussion Papers 2013-07, The Centre for Decision Research and Experimental Economics, School of Economics, University of Nottingham.

Miller, R. M. (2008). Don’t let your robots grow up to

be traders: Artificial intelligence, human intelligence,

and asset-market bubbles. Journal of Economic Be-

havior & Organization, 68(1):153–166.

Rubinstein, A. (1998). Modeling Bounded Rationality. MIT

Press, Cambridge and London.

Sargent, T. J. (1993). Bounded Rationality in Macroeco-

nomics. Clarendon Press, Oxford.

Smith, V. L. (1976). Experimental Economics: In-

duced Value Theory. American Economic Review,

66(2):274–79.

Smith, V. L. (2002). Method in Experiment: Rhetoric and

Reality. Experimental Economics, 5:91–110.

Smith, V. L. and Walker, J. M. (1993). Monetary rewards

and decision cost in experimental economics. Eco-

nomic Inquiry, 31(2):245–261.

Tesfatsion, L. and Judd, K. L., editors (2006). Agent-Based

Computational Economics, volume 2 of Handbook of

Computational Economics. Elsevier/North-Holland

(Handbooks in Economics series).

Velupillai, K. V. (2011). Towards an algorithmic revolu-

tion in economic theory. Journal of Economic Sur-

veys, 25(3):401–430.

Vriend, N. (1994). Artificial intelligence and economic theory. Many-agent Simulation and Artificial Life (Frontiers in Artificial Intelligence & Applications), pages 31–47.

Werker, C. and Brenner, T. (2004). Empirical Calibra-

tion of Simulation Models. Technical Report 04.12,

Eindhoven Centre for Innovation Studies, The Nether-

lands.

Windrum, P., Fagiolo, G., and Moneta, A. (2007). Empiri-

cal validation of agent-based models: Alternatives and

prospects. Journal of Artificial Societies and Social

Simulation, 10(2).
