Evaluation Concept of the Enterprise Architecture Management Capability Navigator

Matthias Wißotzki and Hasan Koç

Institute of Business Information Systems, University of Rostock, Albert-Einstein-Straße 22, Rostock, Germany

Keywords: Enterprise Architecture Management, Capability Management, Maturity Model Development, Design Science Research, Action Research.

Abstract: Organizational knowledge is a crucial aspect of the strategic planning of an enterprise. Enterprise architecture management (EAM) deals with all perspectives of the enterprise architecture with regard to planning, transforming and monitoring. Maturity models are established instruments for assessing these processes in organizations. Applying the maturity model development process (MMDP), we are constructing a new maturity model. In this work, we first concretize the building blocks of the MMDP and present the initial instantiation of the Enterprise Architecture Capability Navigator (EACN). Afterwards, we discuss the need for an evaluation concept and present the results of the first EACN evaluation iteration.

1 INTRODUCTION

The idea of the Enterprise Architecture Management (EAM) paradigm is to model important enterprise elements and their relationships in a way that allows the analysis of as-is and target-state dependencies (Aier et al.). It is important not only to be aware of existing organizational knowledge but also to continuously gather and assess information about the quality of individual perspectives and their dependencies. To make organizations more sensitive towards the impact of business strategy implementation on different architecture layers (e.g. Business Architecture, Information System Architecture, Technology Architecture), companies need to control enterprise-wide EAM processes (Wißotzki and Sonnenberger, 2012). For this purpose, the concept of maturity has been applied to EAM: a maturity assessment assigns different levels of achievement to processes, sub-processes, capabilities and characteristics (Meyer et al., 2011).

Maturity models are established instruments for assessing development processes in organizations. In (Wißotzki and Koç, 2013), a process for developing maturity models within an EAM project context, the Maturity Model Development Process (MMDP), was introduced. In this paper, we pursue two objectives. The first objective is the stepwise instantiation of the MMDP, which is carried out in section 2. The second objective ties into the four building blocks of the MMDP: after instantiating the first three building blocks, we discuss the need to develop an evaluation concept for a very important MMDP artifact, the Enterprise Architecture Capability Navigator (EACN), which is described in sections 1.2 and 2.2. To meet this second objective, we summarize the DSR evaluation frameworks proposed in the literature (Venable, 2006), (Venable et al., 2012), (Cleven et al., 2009) in section 3 and begin to instantiate the fourth building block of the MMDP in section 4.

1.1 Maturity Model Development Process

The Maturity Model Development Process (MMDP) is a method for maturity model construction that consists of four building blocks (i.e. construction domains). Because it is applicable to different scopes, the MMDP keeps the resulting maturity models flexible. Each building block addresses a different angle, is made up of smaller processes and produces its own outputs. The MMDP aims at model reusability as well as at the systematic construction of a design artifact. Section 2 presents the MMDP building blocks that have been instantiated so far and shows the need for an evaluation concept.

Wißotzki M. and Koç H.
Evaluation Concept of the Enterprise Architecture Management Capability Navigator.
DOI: 10.5220/0004881503190327
In Proceedings of the 16th International Conference on Enterprise Information Systems (ICEIS-2014), pages 319-327
ISBN: 978-989-758-029-1
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

1.2 Enterprise Architecture Capability Navigator (EACN)

The concept of maturity has been applied to EA: a maturity assessment assigns different levels of achievement to processes, sub-processes, capabilities and characteristics with EA purposes (Meyer et al., 2011, p. 167). For this purpose, organizations have to execute appropriate actions, which later on should be turned into strategies. Executing these actions requires an integrated approach, which can be achieved by implementing EAM. This is a prerequisite for an enhanced, holistic enterprise view that reduces the management complexity of business objects, processes, goals, information infrastructure and the relations between them. However, the successful adoption of EAM is accompanied by challenges that an enterprise has to face and overcome. To implement operationalized strategic goals efficiently and to achieve a specific outcome, enterprises require EAM capabilities.

The idea of constructing an EAM capability maturity model was triggered by a cooperation project between the University of Rostock and alfabet AG (now Software AG), Berlin. An instrument to assess and improve EAM capabilities is to be developed in collaboration with our industry partner. The main purpose of this research is to identify EAM capabilities and to transfer them to a flexible, feature-related measurement system that contains both the methodology for maturity determination and concepts for the further development of an enterprise's relevant EAM capabilities. Based on the MMDP, possibilities for creating and finding capabilities in enterprises, as well as their relations to enterprise initiatives, are explored and defined.

A capability is defined as the organization's capacity to successfully perform a unique business activity to create a specific outcome (Scott et al., 2009) and as the ability to continuously deliver a certain business value in dynamically changing business environments (Stirna et al., 2012).

Unfortunately, these definitions are not detailed enough for our purposes, since they lack descriptive elements. In our approach, a capability generally describes the ability to combine information relating to a specific context, namely architecture objects and management functions for EAM capabilities, or business objects and management functions for business capabilities. These context elements are combined with further information (e.g. about architecture models or standards) and with roles and their corresponding competences in order to create a specific outcome that is applicable in an activity, task or process, given appropriate available resources such as technologies, HR, budget and personnel. Together, these elements form our EAM capability, illustrated in Figure 1 (Wißotzki et al., 2013).

Figure 1: EAM Capability¹
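To make the capability structure described above more tangible, the following Python sketch models an EAM capability as a plain data structure. It is an illustration under assumptions only: the class and field names (`Context`, `EAMCapability`, `information`, `roles`, `resources`, `outcome`) are hypothetical and do not stem from the EACN; they merely mirror the elements named in the text (context elements, information, roles with competences, outcome and resources). All example values are invented.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Context:
    """Context of an EAM capability: an architecture object combined with a
    management function (hypothetical names mirroring the text)."""
    architecture_object: str
    management_function: str


@dataclass
class EAMCapability:
    """Hypothetical representation of the elements that form an EAM capability."""
    name: str
    context: Context
    # Information such as architecture models or standards.
    information: list[str] = field(default_factory=list)
    # Roles mapped to the competences they contribute.
    roles: dict[str, list[str]] = field(default_factory=dict)
    # Available resources, e.g. technologies, budget, personnel.
    resources: list[str] = field(default_factory=list)
    # Outcome applicable in an activity, task or process.
    outcome: str = ""

    def is_fully_described(self) -> bool:
        """True once every descriptive element has been filled in."""
        return all([self.information, self.roles, self.resources, self.outcome])


# Illustrative instance; all values are invented for the example.
capability = EAMCapability(
    name="Impact Analysis IS Architecture",
    context=Context("Information System Architecture", "Planning"),
    information=["application landscape model"],
    roles={"enterprise architect": ["dependency analysis"]},
    resources=["EA repository tool", "budget"],
    outcome="documented impact of a planned application change",
)
print(capability.is_fully_described())  # True
```

A frozen `Context` is used so that the pair of architecture object and management function can later serve as a dictionary key, e.g. as a cell of a two-dimensional matrix.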

In this context, EACN is an elementary approach that identifies EAM capabilities, which are derived through structured processes and then gathered in an enterprise-specific repository for the efficient operationalization of enterprise initiatives. EACN comprises the Capability Solution Matrix, the Capability Constructor, the Capability Catalog, the Evaluation Matrix and recommendations for improvements, which are elaborated in section 2.2 and in (Wißotzki et al., 2013).

1.3 Methods in the Maturity Model Research

Organizations will increasingly adopt maturity models to guide the development of their capabilities, and the number of new maturity models that assist decision makers in practice will not diminish (Niehaves et al., p. 506). Thus, maturity model research has gained increasing attention in both practice and academia. Based on the comprehensive study of maturity model research conducted by Wendler (Wendler, 2012), two main research paradigms can be identified in the development of maturity models.

Conceptual research produces an artifact of the designer's creative efforts that is grounded in empirical data only to a very small extent (Niehaves et al., pp. 510–511). In the context of maturity models, research activity is conceptual if the developed artifact has not been verified via empirical methods (Wendler, 2012, p. 1320). Design-science research (DSR), on the other hand, is a construction-oriented problem-solving paradigm in which a designer creates innovative artifacts that answer questions relevant to human problems, thereby contributing new knowledge to the body of scientific evidence (Hevner and Chatterjee, 2010). As a problem-solving paradigm, design-science research aims at utility, and its artifacts have to be evaluated. Maturity model development research widely adopts conceptual research (Solli-Sæther and Gottschalk, 2010, p. 280), which outweighs design-oriented model development in maturity model research (Wendler, 2012); this has significant consequences for validation. Empirical methods such as surveys, case studies, interviews, action research and literature reviews are rarely used within a conceptual design; hence, many conceptually developed maturity models suffer from a lack of proper validation of their structure and applicability.

¹ Image provided by Corso Ltd.

Validation is "the degree to which a maturity model is an accurate representation of the real world from the perspective of the intended uses of the model" (Mettler, 2011, p. 92) and "the process of ensuring that the model is sufficiently accurate for the purpose at hand" (Beecham et al., 2005, p. 2). There is a need for studies in the field of evaluation in maturity model research (Niehaves et al., p. 506), (Solli-Sæther and Gottschalk, 2010, p. 280). The research topics in this area generally cover maturity model development and application, but only a few of them deal with the evaluation of maturity models. Although some authors developing maturity models include empirical studies to validate them, the low number of such studies indicates that evaluation research in this field is very limited (Wendler, 2012, p. 1324).

To classify the research approaches we have applied to date: the MMDP is based on the maturity model development procedure of Becker et al. and extends it according to our specific project needs (Wißotzki et al., 2013). EACN, on the other hand, adopts a multi-methodological procedure. As a result, both mainly adopt the design science research paradigm. Nevertheless, both have shortfalls in their evaluation processes. Wendler (2012, p. 1320) and Becker et al. (2009a, p. 214) state that "a maturity model has to be evaluated via rigorous research methods". Recker (2005) adds that "no problem-solving process can be considered complete until evaluation has been carried out". Evaluation is a substantial element of DSR: the utility, quality and efficiency of a DSR artifact have to be demonstrated in order to fulfil relevance and rigor (Hamel et al., 2012, p. 7), (Cleven et al., 2009, p. 2).

2 PREVIOUS DEVELOPMENTS

In this section, the initial outputs of the Maturity Model Development Process (MMDP) are presented. As described in section 1.1, the MMDP comprises four building blocks. The first building block is already completed. The second building block (EACN) is still under development, but its main concepts have been published. There has been little activity in the third building block, which is planned to be developed after the completion of the fourth building block; the latter conceptualizes a method to evaluate and maintain the resulting artifact (EACN). The building blocks of the MMDP are detailed in the following.

2.1 1st Building Block: Scope

The first building block mainly determines the scope of the maturity model and examines the need to develop a model that solves the addressed problems. In this section, the findings of the first building block of the MMDP are specified; they provide a framework for the second building block.

i. Define Scope: The EACN is developed for the successful integration and enhancement of capabilities that support the enterprise-wide management of different architectures. The aim of this project is the development of a maturity model to assess and improve EAM capabilities. Hence, the scope of EACN is specific rather than general.

ii. Problem Definition: Enterprise strategies are closely related to various dimensions such as business goal definition, business technology and roles, as well as their combinations. Taking successful actions in these domains requires an integrated management approach, which could be achieved by implementing EAM. Nevertheless, the adoption of EAM is accompanied by challenges that an enterprise has to overcome by deploying its capabilities (Wißotzki et al., 2013).

iii. Comparison: There is a need for a comprehensive model that can ease the above-mentioned challenges by (1) identifying the capabilities in the enterprise, (2) evaluating their current and target state and (3) recommending best practices for improvement, if necessary. The model should also enable the systematic development of capabilities that are not yet present in the enterprise repertoire. In our research process, we were not able to identify such a model.

iv. (Basic) Strategy for Development: The EACN will be developed from scratch. The University of Rostock and the industry partner are involved in this development process. The capabilities and the methodology have to be constructed in a scientific manner so that they align with well-established practices. Together with the transparent documentation of the development processes, model reusability has to be ensured, for instance by applying meta-models.

v. Design Model: In this phase, the needs of the audience are incorporated and defined, together with how these needs are going to be met (Bruin et al., 2005). The capabilities, domains, areas and/or processes, as well as the dimensionality of the maturity model, are designed. Moreover, we decided how these items should be populated. From this perspective, EACN defines maturity levels along process, object and target group, and it is a multidimensional approach, since it emphasizes capabilities that relate to different domains (architecture objects and/or management functions). The whole process was designed both theory-driven (Wißotzki et al., 2013, p. 115) and practice-based (alfabet AG/Software AG).

As elaborated in (Wißotzki and Koç, 2013) and in section 1.3, the maturity model development process aligns with the procedure model of Becker et al. (2009a) and with the decision parameters of Mettler (Mettler, 2009). Moreover, EACN deploys the general idea of a "capability meta-model" based on (Steenbergen et al., 2010, p. 327). The requirements of the first building block are almost fully defined; only one design model issue remains open, namely the application method (how) of the maturity model.

2.2 2nd Building Block: EACN

The EACN is being developed on the basis of the second building block of the MMDP, which uses the findings of the first building block. The construction of EACN elements is an ongoing process; in this section we report to what extent the EACN has been instantiated.

EAM Capability Catalog: A repository of the existing capabilities in an enterprise. If new capabilities have to be introduced, they are developed via the EAM Capability Solution Matrix and the EAM Capability Constructor and then preserved in the EAM Capability Catalog.

EAM Capability Solution Matrix: The EAM Capability Catalog is enriched by the set of capabilities derived from the EAM Capability Solution Matrix. The solution matrix has two dimensions, namely management functions and EA objects, and shows how the capabilities relate to each other. The management functions (planning, transforming, monitoring) and their components are derived from (Ahlemann, 2012, pp. 44–48). The EA objects (business architecture, information system architecture, etc.) and their contents are constructed and extended on the basis of The Open Group Architecture Framework (TOGAF). The EAM Capability Solution Matrix is the set of all capabilities and is not enterprise-specific; therefore, it is a superset of any EAM Capability Catalog. Strategies are initial impulses for actions to be taken on certain topics. Business strategies can be derived from enterprise goals or business models, concretized via a measure catalogue and implemented via projects, in our approach EAM projects. To identify the relevant capabilities that help to implement a strategy, an adapted version of the information demand analysis (IDA) method is executed (Lundqvist et al., 2011). The analysis supports the determination of the target-state (to-be) maturity of the corresponding EAM capabilities. On this basis, the required capabilities are mapped into the EAM Capability Catalog.

As an example, consider a fictional enterprise that aims to implement an EAM strategy called "Build up an architecture inventory", which supports establishing the practice of sustaining reliable documentation of the enterprise architecture by focusing on identifying the data stewards and data requirements. The benefits of such an EAM strategy are reliable architecture information, standardized communication, reduced project effort for current landscape analysis and enhanced ad-hoc reporting. To identify the capabilities required to implement "Build up an architecture inventory", an IDA is carried out. According to the analysis, the capability "Impact Analysis IS Architecture" must be available in the enterprise at a certain maturity, which is assessed with regard to its specific and generic criteria. In the EAM Capability Solution Matrix, this capability is an element of the "Planning Lifecycle" management function and uses objects from the "Information System Architecture", such as applications and information flows.
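The relations between solution matrix, IDA and catalog can be sketched in Python as follows. This is a hypothetical illustration, not the EACN implementation: the data layout, the function names (`all_capabilities`, `build_catalog`, `maturity_gaps`), the second capability and all maturity levels are assumptions. Only the two matrix dimensions, the superset relation between matrix and catalog and the "Impact Analysis IS Architecture" example come from the text.

```python
from itertools import product

# Management functions as listed in the text.
MANAGEMENT_FUNCTIONS = ["Planning", "Transforming", "Monitoring"]
# Illustrative subset of TOGAF-based EA objects.
EA_OBJECTS = ["Business Architecture", "Information System Architecture",
              "Technology Architecture"]

# Solution matrix: every (management function, EA object) cell holds capability names.
solution_matrix: dict[tuple[str, str], set[str]] = {
    cell: set() for cell in product(MANAGEMENT_FUNCTIONS, EA_OBJECTS)
}
# The text places this capability in the planning function ("Planning Lifecycle").
solution_matrix[("Planning", "Information System Architecture")].add(
    "Impact Analysis IS Architecture")
# Hypothetical second entry, only for illustration.
solution_matrix[("Monitoring", "Information System Architecture")].add(
    "Application Portfolio Monitoring")


def all_capabilities(matrix: dict[tuple[str, str], set[str]]) -> set[str]:
    """The solution matrix is the set of all (non-enterprise-specific) capabilities."""
    return set().union(*matrix.values())


def build_catalog(matrix: dict[tuple[str, str], set[str]],
                  required: dict[str, int]) -> dict[str, int]:
    """Map an IDA result {capability: target maturity level} into an
    enterprise-specific catalog, which must be a subset of the matrix."""
    unknown = set(required) - all_capabilities(matrix)
    if unknown:
        raise ValueError(f"not in the solution matrix: {sorted(unknown)}")
    return dict(required)


def maturity_gaps(catalog: dict[str, int],
                  as_is: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Capabilities whose as-is level is below the to-be level, as (as_is, target)."""
    return {cap: (as_is.get(cap, 0), target)
            for cap, target in catalog.items()
            if as_is.get(cap, 0) < target}


# Invented IDA result for "Build up an architecture inventory" and invented as-is level.
catalog = build_catalog(solution_matrix, {"Impact Analysis IS Architecture": 3})
print(maturity_gaps(catalog, {"Impact Analysis IS Architecture": 1}))
# {'Impact Analysis IS Architecture': (1, 3)}
```

Raising an error for unknown capabilities encodes the stated superset relation: a catalog entry that is not in the solution matrix would indicate an inconsistency rather than a valid enterprise-specific choice.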

EAM Capability Constructor: A meta-model for the structured design of capabilities. If a capability is not yet an element of the enterprise's repertoire, it can be developed via the EAM Capability Constructor.

Evaluation Matrix: The evaluation method for the EAM capabilities. After the assessment of the specific as well as the generic criteria, the results of the matrix are used to assign a maturity level to each capability.
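The text does not specify how the results of the Evaluation Matrix are aggregated into a maturity level. One plausible reading, borrowed from staged maturity models and stated here purely as an assumption, is that a capability reaches level L when all specific and all generic criteria of levels 1 to L are fulfilled. The Python sketch below implements this rule; the function name `maturity_level` and the example criteria are hypothetical.

```python
def maturity_level(specific: dict[int, dict[str, bool]],
                   generic: dict[int, dict[str, bool]],
                   max_level: int) -> int:
    """Return the highest level L such that every specific and generic criterion
    of levels 1..L is fulfilled (assumed staged aggregation rule)."""
    level = 0
    for lvl in range(1, max_level + 1):
        results = (list(specific.get(lvl, {}).values())
                   + list(generic.get(lvl, {}).values()))
        # A level without defined criteria, or with any unmet criterion, stops the ascent.
        if not results or not all(results):
            break
        level = lvl
    return level


# Invented assessment results for one capability.
specific = {
    1: {"IS landscape documented": True},
    2: {"application dependencies modelled": True},
    3: {"impact analysis performed before each change": False},
}
generic = {
    1: {"responsible role assigned": True},
    2: {"process defined and repeatable": True},
    3: {"results measured": True},
}
print(maturity_level(specific, generic, max_level=3))  # 2
```

Keeping specific and generic criteria in separate dictionaries reflects the distinction made in the text, while the aggregation treats both kinds equally; a real Evaluation Matrix may weight them differently.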

The first iteration of the model development phase (2nd building block) has not been fully completed yet. We are working on further iterations to create capabilities and to define their specific and generic criteria, aligning them with the management functions and architecture objects.

2.3 3rd Building Block: Guidelines

In general, it is possible to differentiate between prescriptive, descriptive and comparative maturity models. Descriptive maturity models are applied to assess the current state of an organization, whereas comparative maturity models are applied for benchmarking across different organizations (Bruin et al., 2005), (Röglinger et al., 2012), (Ahlemann et al., 2005). Prescriptive models not only assess the as-is situation but also recommend guidelines, best practices and roadmaps for reaching higher degrees of maturity. In this building block, the maturity model developer identifies the best practices that help to improve the relevant capabilities.

3 THE EVALUATION PROBLEM

3.1 Why Do We Evaluate a DSR-Artifact?

The term artifact describes something that is artificial or constructed by humans, as opposed to something that occurs naturally. Artifacts are "built to address an unsolved problem" and are evaluated according to their "utility provided in solving these problems" (Hevner et al., 2004, pp. 78–79). To demonstrate its suitability and to provide evidence, a DSR artifact has to be evaluated through rigorous research methods after its construction (Wendler, 2012, p. 1320), (Venable et al., 2012, p. 424). Still, most maturity models are developed based on practice and lack a theoretical foundation (Garcia-Mireles et al., 2012, p. 280). This has side effects on model transparency, since model development is not documented systematically (Mettler and Rohner, 2009, p. 1), (Becker et al., 2009a, p. 221), (Judgev and Thomas, 2002, p. 6), (Becker et al., 2009b, p. 4), (Solli-Sæther and Gottschalk, 2010, p. 280), (Niehaves et al., p. 510).

3.2 Evaluation Concepts in Maturity Model Research

According to the comprehensive study by Wendler, 39 percent of the maturity model development approaches are validated, while 61 percent of the developed models are not validated at all, and only 24 percent of these plan further validation. 85 percent of the models that are not validated apply conceptual research methods, whereas only around 2 percent of them adopt design science research. Nearly 100 percent of all models developed with design science research have validated their methods/models, and 29 percent even plan further validation. Regardless of the underlying research paradigm, qualitative methods in the form of case studies or action research were applied most often in the validation of maturity models (Wendler, 2012, pp. 1326–1327).

3.3 Research Approach

The Maturity Model Development Process (MMDP) and the Enterprise Architecture Capability Navigator (EACN) are artifacts of the DSR paradigm that mostly use action research and expert interviews as research methods. Moreover, both MMDP and EACN are conceptual and utilize literature research in this context. In line with (Venable and Iivari, 2009), we separate the DSR activities from their evaluation. For this reason, we have to focus on the evaluation methods and develop concepts for their further application.

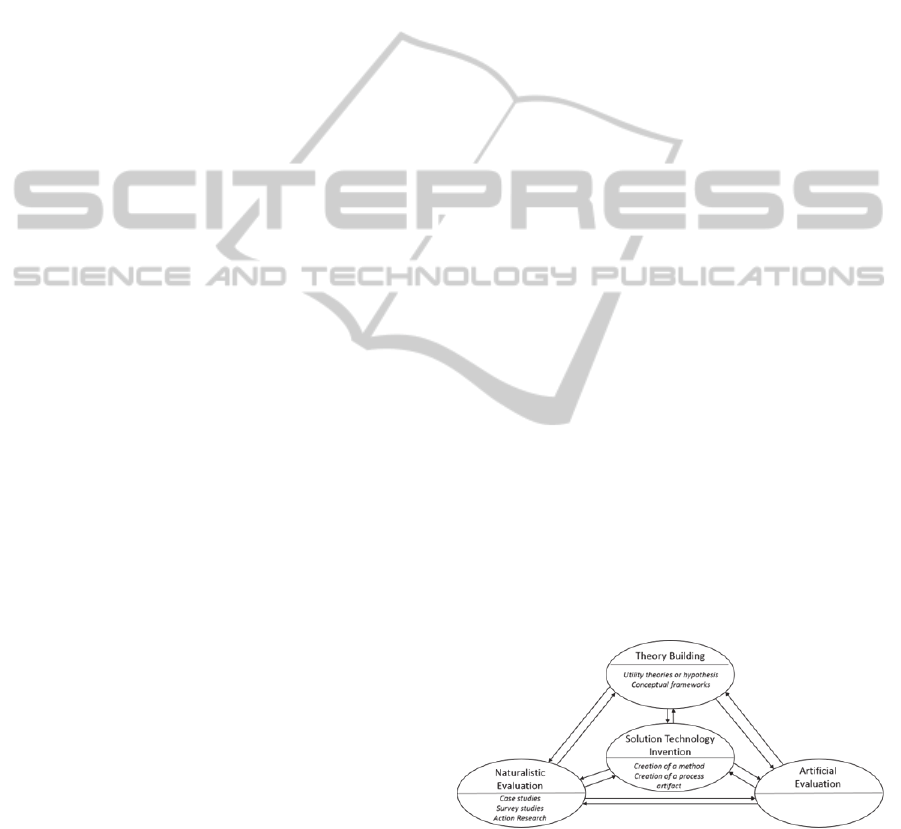

Figure 2: Framework for DSR based on (Venable, 2006, p. 185).

Applying the framework of (Venable, 2006, p. 185) illustrated in Figure 2, the utility hypotheses in the field of maturity model development and EAM are proposed (see also section 2.1, the first two phases) in the form of conceptual frameworks and challenges (Wißotzki and Koç, 2013), (Wißotzki et al., 2013). Building on these hypotheses, a method (MMDP) and a process artifact (EACN) are constructed, which have to be evaluated. Since these artifacts are process artifacts, they are classified as socio-technical, i.e. "ones with which humans must interact to provide their utility". Therefore, the performance of the solution artifact has to be examined in its real environment, for instance in an organization with real people and real systems (Venable et al., 2012, pp. 427–428). This type of evaluation includes, amongst others, case studies, surveys and action research. Due to their characteristics, neither the method nor the process artifact is likely to be evaluated artificially via laboratory experiments, field experiments, mathematical proofs etc. Detailed information about the evaluation criteria is given in section 4.

4 4TH BUILDING BLOCK: AN EVALUATION CONCEPT FOR EACN

4.1 Concept Design

In section 2, we presented the initial outcomes of our first iteration of the construction of EACN applying the MMDP. For the reasons introduced in section 1.3, performing the fourth building block of the MMDP is relevant and necessary, since it focuses mainly on model evaluation and maintenance. The first step of the fourth building block is the design of an evaluation concept, whose development is still in progress. This section elaborates the outcomes of our current work on the evaluation concept design. The subject of evaluation is the design product (or artifact) and not the design process (MMDP) itself. Nevertheless, we are aware of the need for a further evaluation that focuses on the design process.

For the construction of the evaluation concept, a strategy building on contextual aspects has to be developed. These aspects include the different purposes of evaluation as well as the characteristics and the type of the evaluand, which are then mapped to ex-ante vs. ex-post and naturalistic vs. artificial evaluation (Venable et al., 2012, p. 432). From this perspective, we prioritized the relevant criteria and constructed a catalog in which two choices are possible for each criterion. The results should help us to classify our evaluation concept and to find appropriate methods for carrying out the evaluation.

Different stakeholders participate in the evaluation process of EACN. Since the stakeholders operate in different sectors and have different business models, this diversity might lead to conflicts relating to enterprise terminology, methodologies or enterprise-specific capabilities. We could not identify any risks for the evaluation participants. The problem at hand is real (see the problem definition in section 2.1). The objective is to evaluate the effectiveness of the constructed socio-technical artifact in real working situations; therefore, we need real users and sites for naturalistic evaluation. Since we work with a cooperation partner, financial issues might constrain the evaluation and the research project, which is why the evaluation should be carried out rather fast and with a low risk of false positives. In contrast, there is no intense time pressure for the evaluation. Both early and late evaluation are feasible for us, since we plan to demonstrate a partial prototype and then to move from the partial to the full prototype, evaluating each artifact. Each evaluation cycle should optimize the prototype. Only the mature artifact (or full prototype), not the early instantiations of EACN, should be classified as safety-critical. As a result, the evaluation should be executed with naturalistic methods.

In line with (Peffers et al., 2007), we divide the evaluation process into "demonstration" and "evaluation" activities. The evaluation process as a whole is both ex-ante and ex-post, since we plan to demonstrate (ex-ante) and then evaluate (ex-post) each artifact and optimize it for the next evaluation cycle. In accordance with Wendler, "ongoing validation may take place while using the maturity model in real environments to test its applicability and search for improvements" (Wendler, 2012, p. 1332). Since the purpose of the evaluation is to identify the weaknesses and improvement areas of a DSR artifact under development, the early processes are classified as formative or alpha evaluation (Venable et al., 2012, p. 426). Our objective is to move the more mature artifact, which builds on the early evaluation iterations, to the late (beta) evaluation. This latter evaluation process is summative and is executed in a wider organizational context with more complex settings (Sein et al., 2011, p. 43). The further we move from demonstration to evaluation (from ex-ante towards ex-post), the larger the enterprises and the models to be evaluated become. Hence, the evaluation methods might comprise action research, focus groups, surveys, case studies and expert interviews.
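The reasoning above maps project-specific criteria onto the two evaluation dimensions of Venable et al. (2012). The following Python sketch encodes that mapping in a simplified, hypothetical form: each criterion lists the evaluation options it is compatible with, and the options for each dimension are intersected. The criterion labels paraphrase this section; the intersection rule and the names `DIMENSIONS`, `CRITERIA` and `select_strategy` are our assumptions and not part of the cited framework.

```python
# Evaluation dimensions (Venable et al., 2012): timing and setting.
DIMENSIONS = {
    "timing": {"ex-ante", "ex-post"},
    "setting": {"naturalistic", "artificial"},
}

# (criterion, situation in the EACN project, compatible options); paraphrased.
CRITERIA = [
    ("artifact type", "socio-technical", {"naturalistic"}),
    ("effectiveness in real working situations", "required", {"naturalistic"}),
    ("risk for participants", "none identified", {"naturalistic", "artificial"}),
    ("time pressure", "low", {"ex-ante", "ex-post"}),
    ("prototype strategy", "partial, then full prototype", {"ex-ante", "ex-post"}),
]


def select_strategy(criteria):
    """Intersect, per dimension, the options that all relevant criteria allow."""
    strategy = {}
    for dimension, options in DIMENSIONS.items():
        allowed = set(options)
        for _criterion, _situation, compatible in criteria:
            relevant = compatible & options
            if relevant:  # the criterion speaks to this dimension
                allowed &= relevant
        strategy[dimension] = allowed
    return strategy


result = select_strategy(CRITERIA)
print(sorted(result["timing"]), sorted(result["setting"]))
# ['ex-ante', 'ex-post'] ['naturalistic']
```

The result mirrors the conclusion of this section: a naturalistic evaluation that is both ex-ante (demonstration) and ex-post (evaluation).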


4.2 Research Method: Action Research

The evaluation is being conducted ex-ante, moving in the direction of ex-post, since the artifact at hand has not yet reached its complete state. For this reason, we need to choose a research method that allows us to create evaluation iterations. In this context, Action Research (AR) appears to be an ideal research method, since it allows obtained knowledge to be applied in a cyclical process through the active involvement of the researcher (De Vries, 2007, p. 1494). It is an iterative process involving researchers and practitioners acting together on a particular cycle of activities (Avison et al., 1999, p. 94) and is considered an approach with a methodological foundation in Wirtschaftsinformatik (WI, the German-speaking business information systems discipline). One of its major advantages with regard to our project is that action research could help us to overcome the problem of persuading our project partner to adopt new techniques and to bridge the "cultural" gaps that might exist between academics and practitioners (Moody and Shanks, 2003). Avison et al. (1999, p. 95) state: "in action research, the researcher wants to try out a theory with practitioners in real situations, gain feedback from this experience, modify the theory as a result of this feedback, and try it again."

We design our evaluation concept in line with (Hatten et al.) and adapt the action research spiral form. Action research encourages researchers "to experiment through intervention and to reflect on the effects of their intervention and the implication of their theories" (Avison et al., 1999, p. 95). First, a plan is developed and implemented (act). Then the actions are observed to collect evidence and evaluate the outcomes. With regard to these outcomes, the members of the research group collect the positive (what went right) and negative (what went wrong) outcomes in order to improve the idea in the next cycles (M₂…Mₙ) (Moody and Shanks, 2003). Each iteration of the action research process adds to the theory (Avison et al., 1999, p. 95).
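The spiral can be summarized as a loop in which the reflection of one cycle produces the model version examined in the next. The Python sketch below is a hypothetical illustration of that control flow only; the names `CycleRecord` and `action_research_spiral` and the toy "model" consisting of open issues are invented for the example ("terminology conflicts" echoes a risk named in section 4.1, "interview length" is made up).

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class CycleRecord:
    """What the research group recorded for one evaluated model version."""
    model_version: str
    went_right: list[str] = field(default_factory=list)
    went_wrong: list[str] = field(default_factory=list)


def action_research_spiral(model: Any,
                           plan: Callable, act: Callable,
                           observe: Callable, reflect: Callable,
                           cycles: int):
    """Run plan -> act -> observe -> reflect; each reflection yields the model
    version evaluated in the next cycle (M1 -> M2 -> ... -> Mn)."""
    history = []
    for i in range(1, cycles + 1):
        evidence = observe(act(plan(model)))
        went_right, went_wrong, model = reflect(model, evidence)
        history.append(CycleRecord(f"M{i}", went_right, went_wrong))
    return model, history


# Toy run: the "model" is a set of open issues and each reflection resolves one.
final_model, log = action_research_spiral(
    {"terminology conflicts", "interview length"},
    plan=lambda m: sorted(m),          # plan: decide which issues to examine
    act=lambda p: p,                   # act: e.g. conduct the interviews
    observe=lambda outcome: outcome,   # observe: collect evidence
    reflect=lambda m, ev: (["assessment executed"], ev[:1], m - set(ev[:1])),
    cycles=2,
)
print([(r.model_version, r.went_wrong) for r in log], final_model)
# [('M1', ['interview length']), ('M2', ['terminology conflicts'])] set()
```

Passing the four phases as functions keeps the loop independent of how a concrete cycle (such as ARC₁ at the ITMC) is carried out.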

4.3 Implementation of the AR Cycles

In this section, we elaborate the evaluation concept for EACN and introduce the realization of the first AR cycle (ARC₁). The organization involved in this first AR cycle was the IT and Media Center (ITMC) of the University of Rostock. The ITMC is a central organizational unit of the university and provides services such as information processing, provision of information/communication networks, application procurement and user support. For service quality assurance, the ITMC has to plan, transform and monitor its EA in different projects. One of the projects the ITMC is currently conducting is the replacement of the existing Identity Management System by a new one.

Plan₁ - Using the EACN and ITMC for a First Method Evaluation: Based on 11 capabilities that we predefined for the evaluation, the EACN is used to evaluate the as-is maturity of the ITMC's capabilities that belong to a specific architecture object (in this case, applications) and to the whole architecture management lifecycle (planning, transforming, monitoring) required to realize the aforementioned project. The procedure is guided by the methodological assessment approach of SPICE (Hörmann, 2006). A first ARC is produced that describes the 63 capability attributes, the assessment method and its execution.
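SPICE (ISO/IEC 15504) rates each process attribute on a four-point ordinal scale: N (not achieved, 0 to 15 percent), P (partially achieved, more than 15 to 50 percent), L (largely achieved, more than 50 to 85 percent) and F (fully achieved, more than 85 percent). As a hedged sketch of how capability attributes could be rated in the same way, the Python function below maps an achievement percentage to this scale and tallies the ratings. Whether the EACN adopts these exact thresholds is not stated in the text and is an assumption here; the function name `spice_rating` and the achievement values are invented.

```python
from collections import Counter


def spice_rating(achievement: float) -> str:
    """Map an attribute's achievement percentage to the SPICE N/P/L/F scale."""
    if not 0 <= achievement <= 100:
        raise ValueError("achievement must lie between 0 and 100 percent")
    if achievement <= 15:
        return "N"  # not achieved
    if achievement <= 50:
        return "P"  # partially achieved
    if achievement <= 85:
        return "L"  # largely achieved
    return "F"      # fully achieved


# Invented achievement values for a handful of capability attributes.
achievements = [10, 40, 70, 90, 100]
ratings = [spice_rating(a) for a in achievements]
print(ratings)                  # ['N', 'P', 'L', 'F', 'F']
print(Counter(ratings)["F"])    # 2
```

Tallying the ratings per capability gives a quick overview of which of the assessed attributes still fall short before a maturity level is assigned.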

Act₁ - Separate Interviews with Responsible Persons: The university-internal assessment is going to be conducted through separate 1.5-hour interviews with the organizational unit owner and with the corresponding application owner and project leads. The participants will be prepared for the assessment through an introduction to the EACN research project, the assessment methodology and the results that are going to be deduced.

Observe₁ - (In progress): After gathering the first outputs from the "Act" step, we will observe the process of maturity evaluation as well as the structuring and performance of the assessment. A master's thesis and the interview protocols should also support the collection of such evidence for a thorough evaluation.

Reflect1 - (In progress): Once the evaluation at the ITMC is completed, we will identify inputs for adapting the EACN as well as improvements for executing the AR cycles. As mentioned before, the objective is to improve the artifact after every evaluation cycle and to re-evaluate it until it reaches a certain maturity.

5 CONCLUSION / OUTLOOK

In this work, we first motivated the concepts of the Enterprise Architecture Capability Navigator (EACN), which serves as an instrument to assess and improve the capabilities of Enterprise Architecture Management, and of the Maturity Model Development Process (MMDP), which was used to develop the EACN. Following that, the initial outputs of the EACN were detailed in section 2.

Both MMDP and EACN are constructed mainly by applying the DSR paradigm. Their utility, quality and efficacy have to be demonstrated via rigorous research methods. For this purpose, section 3 presented the evaluation concepts in DSR and maturity model research. Moreover, our research to date has been classified via the framework of (Venable, 2006, p. 185) and the guidelines of (Hevner et al., 2004, p. 83). To ensure that the model is accurate for the problem at hand, we decided to design an evaluation concept.

Based on the criteria of (Venable et al., 2012, p. 432) and the framework of (Cleven et al., 2009, pp. 3–5), the research method for the evaluation process was chosen in section 4. To summarize, the evaluation approach is qualitative and focuses on the organizational level, since the evaluand is a model for capability development, identification and assessment. The reference point is the artifact itself against the real world, and the object is the evaluation of the artifact from its deployment perspective. The evaluation should serve controlling and development functions; thus the evaluation time should extend from ex ante to ex post as the evaluation cycles grow. The most appropriate research method in this respect was action research, hence the AR spiral form was adapted (Hatten et al.). Accordingly, we started our first evaluation cycle (ARC1) with the ITMC of the University of Rostock. For this purpose, an appropriate project and corresponding participants were selected to which the evaluation is to be applied. The execution of ARC1 is an ongoing process and will be finished with the “observe” and “reflect” phases at the end of October 2013. Based on the ARC1 results, we will start the next evaluation cycle (ARC2) at the beginning of November. In the context of a master class with scientific and industrial practitioners at the 6th IFIP WG 8.1 Working Conference on the Practice of Enterprise Modeling (PoEM 2013) in Riga, Latvia, we plan to evaluate the capability identification process and selected parts of the capability solution matrix. The last cycle in 2013, ARC3, is going to be executed in cooperation with our project partner alfabet AG in Boston at the end of November, where we are going to evaluate the usability and feasibility of the completed parts of the EACN. In 2014 we are going to apply the whole EACN in ARC4 with an industry partner that is yet to be defined.

As elaborated in this work, the subject of evaluation is the design product and not the design process itself. Therefore, the development and implementation of an evaluation concept for the artifact Maturity Model Development Process (MMDP) remains an attractive research topic. We invite all scholars and practitioners who are interested in this research area to contribute.

REFERENCES

Ahlemann, F. (2012), Strategic enterprise architecture management: Challenges, best practices, and future developments, Springer, Berlin, New York.

Ahlemann, F., Schroeder, C. and Teuteberg, F. (2005), Kompetenz- und Reifegradmodelle für das Projektmanagement: Grundlagen, Vergleich und Einsatz, ISPRI-Arbeitsbericht, 01/2005, Univ. FB Wirtschaftswiss. Organisation u. Wirtschaftsinformatik, Osnabrück.

Aier, S., Riege, C. and Winter, R., “Unternehmensarchitektur. Literaturüberblick und Stand der Praxis”, Wirtschaftsinformatik, Vol. 2008, pp. 292–304.

Avison, D.E., Lau, F., Myers, M.D. and Nielsen, P.A. (1999), “Action research”, Commun. ACM, Vol. 42 No. 1, pp. 94–97.

Becker, J., Knackstedt, R. and Pöppelbuß, J. (2009a), “Developing Maturity Models for IT Management”, Business & Information Systems Engineering, Vol. 1 No. 3, pp. 213–222.

Becker, J., Knackstedt, R. and Pöppelbuß, J. (2009b), Dokumentationsqualität von Reifegradmodellentwicklungen.

Beecham, S., Hall, T., Britton, C., Cottee, M. and Rainer, A. (2005), “Using an expert panel to validate a requirements process improvement model”, Journal of Systems and Software, Vol. 76.

Bruin, T. de, Rosemann, M., Freeze, R. and Kulkarni, U. (2005), “Understanding the Main Phases of Developing a Maturity Assessment Model”, in Information Systems Journal, ACIS, pp. 1–10.

Cleven, A., Gubler, P. and Hüner, K.M. (2009), “Design alternatives for the evaluation of design science research artifacts”, in Proceedings of the 4th International Conference on Design Science Research in Information Systems and Technology, ACM, New York, NY, USA, pp. 19:1–19:8.

De Vries, E.J. (2007), “Rigorously Relevant Action Research in Information Systems”, in Österle, H., Schelp, J. and Winter, R. (Eds.), Proceedings of the Fifteenth European Conference on Information Systems, ECIS 2007, St. Gallen, Switzerland, University of St. Gallen, pp. 1493–1504.

Garcia-Mireles, G.A., Moraga, M.A. and Garcia, F. (2012), “Development of maturity models: A systematic literature review”, in 16th International Conference on Evaluation & Assessment in Software Engineering (EASE 2012), IEEE, Piscataway, NJ.

Hamel, F., Herz, T.P., Uebernickel, F. and Brenner, W. (2012), “Facilitating the Performance of IT Evaluation in Business Groups: Towards a Maturity Model”, available at: https://www.alexandria.unisg.ch/Publikationen/214608.

Hatten, R., Knapp, D. and Salonga, R., “Action Research: Comparison with the concepts of ‘The Reflective Practitioner’ and ‘Quality Assurance’”, in Hughes, I. (Ed.), Action Research Electronic Reader.

Hevner, A.R. and Chatterjee, S. (2010), Design research in information systems: Theory and practice, Springer, New York, London.

Hevner, A.R., March, S.T., Park, J. and Ram, S. (2004), “Design science in information systems research”, Management Information Systems Quarterly, Vol. 28 No. 1, pp. 75–106.

Hörmann, K. (2006), SPICE in der Praxis: Interpretationshilfe für Anwender und Assessoren, 1st ed., Dpunkt-Verl., Heidelberg.

Judgev, K. and Thomas, J. (2002), Project management maturity models: The silver bullets of competitive advantage, Project Management Institute; University of Calgary; Civil Engineering; Engineering.

Lundqvist, M., Sandkuhl, K. and Seigerroth, U. (2011), “Modelling Information Demand in an Enterprise Context”, International Journal of Information System Modeling and Design, Vol. 2 No. 3, pp. 75–95.

Mettler, T. (2009), “A Design Science Research Perspective on Maturity Models in Information Systems”.

Mettler, T. (2011), “Maturity assessment models: a design science research approach”, International Journal of Society Systems Science, Vol. 3 No. 1/2, p. 81.

Mettler, T. and Rohner, P. (2009), “Situational maturity models as instrumental artifacts for organizational design”, in Proceedings of the 4th International Conference on Design Science Research in Information Systems and Technology, ACM, New York, NY, USA, pp. 22:1–22:9.

Meyer, M., Helfert, M. and O'Brien, C. (2011), “An analysis of enterprise architecture maturity frameworks”, in Perspectives in business informatics research, Springer, Berlin, pp. 167–177.

Moody, D.L. and Shanks, G.G. (2003), “Improving the quality of data models: empirical validation of a quality management framework”, Inf. Syst., Vol. 28 No. 6, pp. 619–650.

Niehaves, B., Pöppelbuß, J., Simons, A. and Becker, J., “Maturity Models in Information Systems Research. Literature Search and Analysis”, in Communications of the Association for Information Systems, Vol. 29, pp. 505–532.

Peffers, K., Tuunanen, T., Rothenberger, M. and Chatterjee, S. (2007), “A Design Science Research Methodology for Information Systems Research”, J. Manage. Inf. Syst., Vol. 24 No. 3, pp. 45–77.

Recker, J.C. (2005), “Conceptual model evaluation. Towards more paradigmatic rigor”, in Castro, J. and Teniente, E. (Eds.), CAiSE’05 Workshops, Faculdade de Engenharia da Universidade do Porto, Porto, Portugal, pp. 569–580.

Röglinger, M., Pöppelbuß, J. and Becker, J. (2012), “Maturity models in business process management”, Business Process Management Journal, Vol. 18 No. 2, pp. 328–346.

Scott, J., Cullen, A. and An, M. (2009), Business capabilities provide the rosetta stone for Business-IT Alignment: Capability Maps Are A Foundation For BA, available at: www.forrester.com.

Sein, M.K., Henfridsson, O., Purao, S., Rossi, M. and Lindgren, R. (2011), “Action design research”, MIS Q, Vol. 35 No. 1, pp. 37–56.

Solli-Sæther, H. and Gottschalk, P. (2010), “The modeling process for stage models”, Journal of Organizational Computing and Electronic Commerce, Vol. 20 No. 3, pp. 279–293.

Steenbergen, M., Bos, R., Brinkkemper, S., Weerd, I. and Bekkers, W. (2010), “The design of focus area maturity models”, in Hutchison, The Design of Focus Area Maturity Models, Springer Berlin Heidelberg, Berlin, Heidelberg.

Stirna, J., Grabis, J., Henkel, M. and Zdravkovic, J. (2012), “Capability driven development. An approach to support evolving organizations”, in PoEM 5th IFIP WG 8.1 Working Conference, 2012, Rostock, Germany, Springer, Heidelberg, pp. 117–131.

Venable, J. (2006), “A framework for Design Science research activities”, in Khosrow-Pour, M. (Ed.), Information Resources Management Association International Conference, Washington, DC, May 21, Idea Group Publishing, pp. 184–187.

Venable, J. and Iivari, J. (2009), “Action research and design science research - Seemingly similar but decisively dissimilar”, ECIS 2009 Proceedings.

Venable, J., Pries-Heje, J. and Baskerville, R. (2012), “A Comprehensive Framework for Evaluation in Design Science Research”, DESRIST 2012, Las Vegas, NV, USA, May 14-15, 2012. Proceedings, Springer-Verlag, Berlin, Heidelberg, pp. 423–438.

Wendler, R. (2012), “The maturity of maturity model research: A systematic mapping study”, Information and Software Technology, Vol. 54 No. 12, pp. 1317–1339.

Wißotzki, M. and Koç, H. (2013), “A Project Driven Approach for Enhanced Maturity Model Development for EAM Capability Evaluation”, in IEEE-EDOCW, Vancouver, Canada, pp. 296–305.

Wißotzki, M., Koç, H., Weichert, T. and Sandkuhl, K. (2013), “Development of an Enterprise Architecture Management Capability Catalog”, in Kobyliński, A. and Sobczak, A. (Eds.), BIR, Vol. 158, Springer Berlin Heidelberg, pp. 112–126.

Wißotzki, M. and Sonnenberger, A. (2012), “Enterprise Architecture Management - State of Research Analysis & A Comparison of Selected Approaches”, PoEM 5th IFIP WG 8.1 Working Conference, Rostock, Germany, November 7-8, 2012, CEUR-WS.org.