Vision based System for Vacant Parking Lot Detection: VPLD

Imen Masmoudi¹,², Ali Wali², Anis Jamoussi¹ and Adel M. Alimi²

¹Power’s Mind: Enterprise for Video surveillance Solutions Development, Sfax, Tunisia

²REGIM-Lab: Research Groups on Intelligent Machines, University of Sfax, National Engineering School of Sfax, BP 1173, Sfax, 3038, Tunisia

Keywords: Parking, Homography, Adaptive Background Subtraction, SURF, HOG, SVM.

Abstract:

The proposed system addresses intelligent parking lot management and presents an approach for detecting and localizing vacant parking spots. Our system provides a camera-based solution that can deal with outdoor parking lots. It returns the real-time state of the parking lots, providing the number of available vacant places and their specific positions in order to guide drivers through the roads. To overcome real-world challenges, we propose combining the Adaptive Background Subtraction algorithm, which handles changing lighting and shadow effects, with the Speeded Up Robust Features algorithm, which is robust to scale changes and rotation. Our approach also introduces a new state, “Transition”, for classifying the state of parking places.

1 INTRODUCTION

Parking is becoming a major problem, especially with the increasing number of vehicles in metropolitan areas. Searching the roads for an available parking space is usually a waste of time, principally in peak periods when parking lots are crowded or almost full. Hence the need for a parking lot management system that efficiently assists drivers in finding empty parking spaces and identifies their positions over time. Many existing systems use sensor-based techniques such as ultrasound and infrared sensors, but this type of system may require high installation and maintenance costs. Therefore, there has been a growing interest in vision-based systems in recent years, thanks to their high performance at low cost. These solutions can cover a large number of parking places with a minimal number of cameras, in addition to the many other services they can provide, such as driver guidance and video surveillance.

In this context, our work introduces a vision-based system for vacant parking space detection. It provides an intelligent solution that reliably counts the total number of vacant places in a parking lot, precisely specifies their locations and detects changes of status in real time. Developing a robust solution means facing many challenging issues. In practice, the major challenges of such a system come from lighting conditions, shadows, occlusion effects and perspective distortion. To overcome these challenges, we propose a new approach combining a set of processing steps. A homography transformation is applied in the preprocessing phase to change the point of view of the scene and reduce the effects of perspective distortion. Then two algorithms are used: Adaptive Background Subtraction, to detect moving objects in the scene, and Speeded Up Robust Features (SURF), for feature extraction whose output serves in the later decision step.

This paper is organized as follows: Section 2 summarizes the existing video-based systems for vacant parking space detection. Section 3 presents an overview of our approach with a detailed explanation of the techniques used. Section 4 evaluates the performance and the results obtained through several measurements. Finally, Section 5 concludes our work and presents ideas for future research.

2 RELATED WORK

Many parking systems that aim to automate the oc-

cupancy detection based on image processing are al-

ready available. We can classify these approaches into

two main classes that differ with the used technique

to decide on a parking space state: Recognition based

approaches and appearance based approaches. The

526

Masmoudi I., Wali A., Jamoussi A. and Alimi A..

Vision based System for Vacant Parking Lot Detection: VPLD.

DOI: 10.5220/0004730605260533

In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 526-533

ISBN: 978-989-758-004-8

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

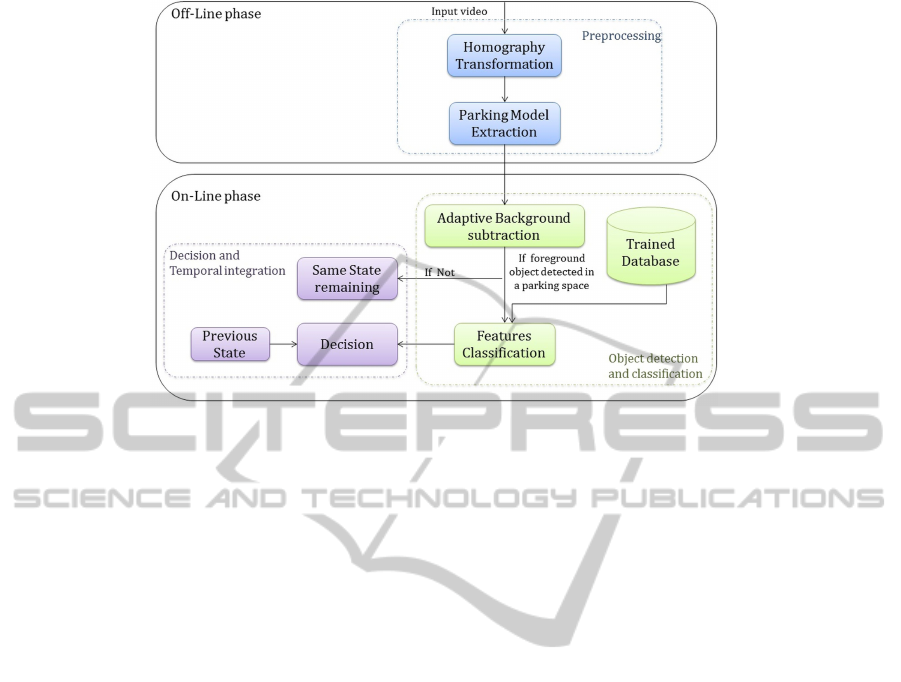

Figure 1: Proposed process.

approaches based object recognition aim to detect and

classify the vehicles present in the parking lot. They

rely on a first performed step of learning the charac-

teristics of cars and then detect and recognize objects

in the scene having similar properties. In (Ichihashi

et al., 2010) the authors proposed a method using

fuzzy c-means clustering and Principal Component

Analysis PCA for data initialization to classify sin-

gle parking spaces.(Wu et al., 2007) defined patches

of 3 successive parking spaces and classify them into

8 classes using a multi-class Support Vector Machine

SVM in order to reduce the conflicts caused by inter

space occlusion. (Huang and Wang, 2010) proposed

then a three-layer Hierarchical Bayesian Model and

extracted the region of interest as an entire row of car

park spaces which will be then treated to decide the

status of each parking place individually. Huang per-

formed his researches and presented a new approach

in (Huang et al., 2012) which is a surface based

method extracting 14 different patterns to modelize

the different sides of a parking space. (Tschentscher

et al., 2013) performed a comparison of several com-

bination of algorithms and opted for the extraction of

features using the Histogram of Oriented Gaussian

HOG algorithm and the training using SVM with a

temporal integration to perform the results of classi-

fication. These approaches based on the learning of

characteristics can be generally problematic because

of the complexity and the large variety of the target

objects. This needs a huge amount of data for train-

ing the positive images with all possible views and the

negative objects. Also the results of these approaches

can be affected if we aim to install the solution in dif-

ferent environments and divers parking lots cross the

cities.

The appearance-based approaches rely on comparing the current appearance of a parking place with a reference appearance of its vacant state. This comparison leads to the decision on the occupancy of the parking place. Many approaches were proposed in this category, and they are generally simple methods such as background subtraction, or a difference between reference images and the current frames. (Lin et al., 2006) used an adaptive background subtraction algorithm for each parking place to detect foreground objects newly entering it. (Fabian, 2008) used a segment-based homogeneity model, assuming that a vacant parking place has a homogeneous appearance, while (Bong, 2008) combined two processing streams: a gray-scale threshold to recognize the occupancy of a parking place, and an edge detection process, with the final result obtained through an AND function. The major problem these approaches face is perspective distortion, which degrades the results for distant places. To overcome this issue, (Sastre et al., 2007) proposed a top view of the original parking scene coupled with texture feature extraction using Gabor filters. However, the variation of luminosity remains a persistent problem.

In this paper we aim to provide a robust solution to the most common problems: lighting variations, shadows, occlusion and perspective distortion. We therefore apply a homography transformation to change the viewpoint of the scene, which facilitates the extraction of the parking model and reduces the perspective distortion. We then combine the adaptive background subtraction algorithm with the classification of SURF features to overcome lighting variations and the presence of shadows in the scene.

3 SYSTEM OVERVIEW

Our proposed vision-based approach for Vacant Parking Lot Detection (VPLD) is presented in Fig. 1. The process consists mainly of five modules. Homography transformation and parking model extraction correspond to the off-line preprocessing phase. In the on-line phase, the parking places are classified using Adaptive Background Subtraction together with SURF for feature extraction and classification. Finally, the decision step determines the state of each parking place in the model.

3.1 Homography Transformation

The first preprocessing step in our process is the homography transformation, whose objective is to change the point of view of the input video stream. Samples of the obtained results are shown in Fig. 2. Another objective of this transformation is to reduce the effects of the perspective distortion caused by long distances to the camera, which can alter how the parking places appear, for example the shape or size of the cars. This transformation also facilitates the next step, parking model extraction, and helps reduce the inter-place occlusion problem.

Figure 2: Samples of Homography Transformations.

In the off-line preprocessing phase, to perform the homography transformation, the user manually selects at least four points and their corresponding real-world coordinates. This transformation is an invertible mapping of points on a projective plane. In a given input image I, a point (i, j) may be represented as a 3D vector $p = (x, y, z)^T$ where $i = x/z$ and $j = y/z$. The idea is to provide a clearer view of the parking places than the initial view of the scene. The top-view model is obtained with a homography transformation, which establishes a projective correspondence between two different image planes (Dubrofsky and Woodham, 2008) (Lin and Wang, 2012). For an image I, this correspondence between two points $p = (x, y, z)^T$ and $p' = (x', y', z')^T$ is given by the relationship:

$$ p' = H p \tag{1} $$

where H is a 3×3 matrix called the homography (homogeneous transformation) matrix. Since $p'$ and $Hp$ are equal only up to a non-zero scale factor, this equation can be expressed in terms of the vector cross product:

$$ p' \times H p = 0 \tag{2} $$

If we express the matrix H in terms of its rows as:

$$ H = \begin{bmatrix} h^{1T} \\ h^{2T} \\ h^{3T} \end{bmatrix} \tag{3} $$

Then, equation (2) may be written as:

$$ \begin{pmatrix} y' h^{3T} p - z' h^{2T} p \\ z' h^{1T} p - x' h^{3T} p \\ x' h^{2T} p - y' h^{1T} p \end{pmatrix} = 0 \tag{4} $$

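Equation (4) can be expressed in terms of the unknowns, since $h^{iT} p = p^T h^i$:

$$ \begin{bmatrix} 0^T & -z' p^T & y' p^T \\ z' p^T & 0^T & -x' p^T \\ -y' p^T & x' p^T & 0^T \end{bmatrix} \begin{pmatrix} h^1 \\ h^2 \\ h^3 \end{pmatrix} = 0 \tag{5} $$

Only two of these three equations are linearly independent, so each point correspondence yields two constraints. Since H has eight degrees of freedom (it is defined up to scale), at least four correspondences are needed to determine it.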
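A minimal sketch of how equation (5) can be turned into an estimate of H from the manually selected correspondences: stack the two independent rows per point pair and take the right singular vector associated with the smallest singular value (the standard direct linear transform). It assumes NumPy, z = z' = 1 and exact, noise-free points; the function names are hypothetical, and the coordinate normalization usually recommended for this method is omitted for brevity. In practice a library routine such as OpenCV's `cv2.getPerspectiveTransform` or `cv2.findHomography` would normally be used.

```python
import numpy as np

def dlt_rows(p, p_prime):
    """First two rows of equation (5) for one correspondence p -> p'."""
    x_, y_, z_ = p_prime
    zero = np.zeros(3)
    row1 = np.concatenate([zero, -z_ * p, y_ * p])
    row2 = np.concatenate([z_ * p, zero, -x_ * p])
    return row1, row2

def estimate_homography(src_pts, dst_pts):
    """Estimate the 3x3 matrix H from >= 4 pairs of (i, j) pixel coordinates."""
    rows = []
    for (i, j), (i2, j2) in zip(src_pts, dst_pts):
        p = np.array([i, j, 1.0])          # homogeneous source point, z = 1
        p_prime = np.array([i2, j2, 1.0])  # homogeneous target point, z' = 1
        rows.extend(dlt_rows(p, p_prime))
    a = np.array(rows)
    # h = (h1; h2; h3) spans the null space of A: last right singular vector
    _, _, vt = np.linalg.svd(a)
    h = vt[-1].reshape(3, 3)               # rows of H are h1^T, h2^T, h3^T
    return h / h[2, 2]                     # fix the arbitrary scale

def apply_homography(h, i, j):
    """Map pixel (i, j) with p' = H p, then dehomogenize: i' = x'/z', j' = y'/z'."""
    x, y, z = h @ np.array([i, j, 1.0])
    return x / z, y / z
```

With the four corners of a parking row and their top-view targets, `estimate_homography` returns H, and `apply_homography` maps any further pixel into the top view.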

3.2 Parking Model Extraction

After the homography transformation, we obtain a new arrangement of the rows of parking spaces, which facilitates extracting the patch of each place individually. To do so, we extract the places of each row based on the position of the first place and a width measure w. As shown in Fig. 3, we specify the coordinates of the first two corners of the first parking place in the row, then a fixed width that represents the breadth of each parking place. Iterating this step automatically extracts the remaining parking places of the specified row.

This phase extracts the patches representing the parking places while considerably reducing the inter-place occlusion problem. Normalizing the patches into equal rectangles may also decrease the effects of perspective distortion.
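A sketch of this row-wise extraction, assuming the top-view image is a NumPy array, that the two user-given corners are the top-left and bottom-left corners of the first place, and that the places of a row are axis-aligned and adjacent. The helper names and the stopping rule (stop at the right border of the image) are illustrative assumptions rather than details from the paper.

```python
import numpy as np

def extract_row_places(top_corner, bottom_corner, width, image_width):
    """Rectangles (x, y, w, h) for one row of places, stepping right by a fixed width."""
    x, y_top = top_corner
    _, y_bottom = bottom_corner
    height = y_bottom - y_top
    rects = []
    while x + width <= image_width:        # stop at the right border of the top view
        rects.append((x, y_top, width, height))
        x += width
    return rects

def crop_patches(top_view, rects):
    """Cut the patch of each place out of the top-view image (rows = y, columns = x)."""
    return [top_view[y:y + h, x:x + w] for (x, y, w, h) in rects]
```

Because every patch of a row has the same size, the patches are already normalized into equal rectangles for the later classification step.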


Figure 3: Extraction of parking model.

3.3 Adaptive Background Subtraction

The main objective of this phase is to separate the foreground objects moving in the scene from the background model. For this, we opted for the Gaussian mixture model, referred to here as MGM (Ju and Liu, 2012), an adaptive model that continuously re-estimates the background. It is efficient, reliable and sensitive, and can incorporate illumination changes and slow changes in the scene. These properties make it possible to overcome lighting variations throughout the day caused by different weather conditions, and to adapt to the variation of shadows across the ground. The algorithm subtracts the background of the video and separates its foreground in order to extract the objects present there. This separates the new objects moving in the video from all the static parts of the background. The MGM represents each pixel with a mixture of N Gaussians: it estimates parametrically the distribution of a random variable by modeling it as a sum of several Gaussians called kernels.

In this model, each pixel $I(x) = I(x, y)$ is considered as a mixture of N Gaussian distributions, namely:

$$ p(I(x)) = \sum_{k=1}^{N} \omega_k \, \mathcal{N}(I(x), \mu_k(x), \Sigma_k(x)) \tag{6} $$

where $\mathcal{N}(I(x), \mu_k(x), \Sigma_k(x))$ is a multivariate normal distribution and $\omega_k$ is the weight of the k-th component:

$$ \mathcal{N}(I(x), \mu_k(x), \Sigma_k(x)) = c_k \exp\left( -\frac{1}{2} (I(x) - \mu_k(x))^T \Sigma_k^{-1}(x) (I(x) - \mu_k(x)) \right) \tag{7} $$

and $c_k$ is a normalization coefficient defined by

$$ c_k = \frac{1}{(2\pi)^{n/2} |\Sigma_k|^{1/2}} \tag{8} $$

where n is the dimension of the pixel vector $I(x)$ (e.g. 3 for RGB). Each mixture component k is a Gaussian with mean $\mu_k$ and covariance matrix $\Sigma_k$.
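Equations (6)-(8) can be evaluated directly for one pixel value. The sketch below assumes NumPy; the function names are illustrative, and it computes the quadratic form with a linear solve instead of forming $\Sigma_k^{-1}$ explicitly.

```python
import numpy as np

def gaussian_density(value, mean, cov):
    """Equations (7)-(8): multivariate normal density with normalizer c_k."""
    value = np.atleast_1d(np.asarray(value, dtype=float))
    mean = np.atleast_1d(np.asarray(mean, dtype=float))
    cov = np.atleast_2d(np.asarray(cov, dtype=float))
    n = value.size                                   # dimension of I(x), e.g. 3 for RGB
    c_k = 1.0 / ((2 * np.pi) ** (n / 2) * np.sqrt(np.linalg.det(cov)))
    diff = value - mean
    # (I - mu)^T Sigma^{-1} (I - mu) via a linear solve
    return c_k * np.exp(-0.5 * diff @ np.linalg.solve(cov, diff))

def mixture_density(value, weights, means, covs):
    """Equation (6): p(I(x)) = sum_k w_k N(I(x), mu_k, Sigma_k)."""
    return sum(w * gaussian_density(value, m, c) for w, m, c in zip(weights, means, covs))
```

For a grayscale pixel (n = 1) and unit variance, the density at the mean is $1/\sqrt{2\pi} \approx 0.399$.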

This model is updated dynamically through a set of steps. It starts by checking whether each incoming pixel value x can be attributed to a given mode of the mixture; this is the matching operation. If the pixel value lies within 2.5 standard deviations of a component's mean, the pixel is matched and the parameters of the corresponding distribution are updated.
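The update step can be sketched with the classic Stauffer-Grimson rules. This is an assumption: the paper cites the fuzzy mixture model of Ju and Liu (2012), whose exact update is not reproduced here. The sketch handles grayscale frames, applies the 2.5σ matching test above, and uses a single learning rate `alpha` for weights, means and variances, a variance floor `min_var` and a background-weight threshold `bg_ratio`; all parameter names and values are hypothetical. OpenCV provides a production implementation as `cv2.createBackgroundSubtractorMOG2`.

```python
import numpy as np

class MixtureBackgroundModel:
    """Hypothetical per-pixel Gaussian mixture background model for grayscale frames."""

    def __init__(self, shape, k=3, alpha=0.01, bg_ratio=0.7,
                 init_var=225.0, min_var=4.0, match_sigmas=2.5):
        self.alpha, self.bg_ratio = alpha, bg_ratio
        self.init_var, self.min_var, self.match_sigmas = init_var, min_var, match_sigmas
        self.ids = np.arange(k)[:, None, None]       # component index, broadcastable
        self.weights = np.full((k, *shape), 1.0 / k)
        self.means = np.zeros((k, *shape))
        self.vars = np.full((k, *shape), init_var)

    def apply(self, frame):
        """Update the model with one frame and return a boolean foreground mask."""
        x = np.asarray(frame, dtype=float)[None]     # shape (1, H, W)
        # Matching test: |x - mu_k| < 2.5 sigma_k
        matches = np.abs(x - self.means) < self.match_sigmas * np.sqrt(self.vars)
        has_match = matches.any(axis=0)
        # Keep only the highest-weight matching component of each pixel
        best = np.argmax(np.where(matches, self.weights, -np.inf), axis=0)
        owned = (self.ids == best) & has_match       # one-hot, shape (K, H, W)

        # Weight update, then mean/variance update of the matched component
        a = self.alpha
        self.weights = (1 - a) * self.weights + a * owned
        self.means = np.where(owned, (1 - a) * self.means + a * x, self.means)
        new_vars = (1 - a) * self.vars + a * (x - self.means) ** 2
        self.vars = np.maximum(np.where(owned, new_vars, self.vars), self.min_var)

        # Unmatched pixels: replace the weakest component by a wide Gaussian centred on x
        replace = (self.ids == np.argmin(self.weights, axis=0)) & ~has_match
        self.means = np.where(replace, x, self.means)
        self.vars = np.where(replace, self.init_var, self.vars)
        self.weights = np.where(replace, a, self.weights)
        self.weights /= self.weights.sum(axis=0, keepdims=True)

        # Background = first components (sorted by w / sigma) until cumulative weight > bg_ratio
        order = np.argsort(-self.weights / np.sqrt(self.vars), axis=0)
        sorted_w = np.take_along_axis(self.weights, order, axis=0)
        in_bg_sorted = (np.cumsum(sorted_w, axis=0) - sorted_w) <= self.bg_ratio
        in_bg = np.zeros_like(in_bg_sorted)
        np.put_along_axis(in_bg, order, in_bg_sorted, axis=0)
        # A pixel is background only if it matched a background component
        return ~(owned & in_bg).any(axis=0)
```

Calling `apply` once per frame returns a foreground mask; pixels that keep the same value gradually become background, while a sudden change (such as a car entering a place) is flagged as foreground.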

Once the foreground is extracted with the MGM algorithm, we extract each foreground object separately. Only the foreground objects overlapping the patches of the extracted model are considered. This leads to the detection of cars in two cases: while entering or while leaving the parking places.
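A simplified sketch of the overlap test, assuming a boolean foreground mask in top-view coordinates and parking rectangles `(x, y, w, h)` from the model extraction. Instead of labeling each foreground object separately, it simply measures the fraction of foreground pixels inside each patch; the threshold `min_fraction` is a hypothetical parameter.

```python
import numpy as np

def places_touched_by_motion(fg_mask, rects, min_fraction=0.1):
    """Indices of places whose patch contains at least min_fraction foreground pixels."""
    touched = []
    for idx, (x, y, w, h) in enumerate(rects):
        patch = fg_mask[y:y + h, x:x + w]
        if patch.size > 0 and patch.mean() >= min_fraction:
            touched.append(idx)
    return touched
```

A connected-component labeling step (for example `cv2.connectedComponents`) could replace the pixel-fraction rule when individual objects must be tracked.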

3.4 Features Extraction and Classification

The previous step detects moving objects that overlap parking places. This motion can be detected while a vehicle is entering a parking space or while leaving it. The MGM algorithm alone cannot provide this crucial information (whether the place has become occupied or vacant) for the rest of our decision. We therefore combine its result with a classification of the place state using a recognition-based method. Many algorithms are used in the literature. In this section we focus on two feature extraction algorithms, SURF (Sec. 3.4.1) and HOG (Sec. 3.4.2), and then present in Sec. 3.4.3 the two methods used for the classification phase. The objective of this section is to evaluate and compare these algorithms in order to decide on the most suitable one.

3.4.1 Speeded Up Robust Features

Speeded Up Robust Features (SURF) (Bay et al., 2008) is a keypoint detector and descriptor (Fig. 4). It has the advantage of being invariant to scale variation. It uses a very basic approximation of the Hessian matrix based on integral images, which greatly reduces the computing time. The entry of an integral image $I_{\Sigma}(\mathbf{x})$ at a location $\mathbf{x} = (x, y)^T$ represents the sum of all pixels of the input image I within the rectangular region formed by the origin and $\mathbf{x}$:

$$ I_{\Sigma}(\mathbf{x}) = \sum_{i=0}^{i \le x} \sum_{j=0}^{j \le y} I(i, j) \tag{9} $$
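Equation (9), and the constant-time box sum that makes integral images useful for SURF's filters, can be sketched with NumPy cumulative sums. Array indices are `[row, column]`, i.e. `[y, x]`; the function names are illustrative.

```python
import numpy as np

def integral_image(img):
    """Equation (9): entry [y, x] holds the sum of img[0..y, 0..x] (inclusive)."""
    return np.asarray(img, dtype=np.float64).cumsum(axis=0).cumsum(axis=1)

def box_sum(ii, x0, y0, x1, y1):
    """Sum of img over rows y0..y1 and columns x0..x1 (inclusive) with at most 4 lookups."""
    total = ii[y1, x1]
    if x0 > 0:
        total -= ii[y1, x0 - 1]        # remove the strip left of the box
    if y0 > 0:
        total -= ii[y0 - 1, x1]        # remove the strip above the box
    if x0 > 0 and y0 > 0:
        total += ii[y0 - 1, x0 - 1]    # add back the corner removed twice
    return total
```

The cost of `box_sum` does not depend on the box size, which is why SURF can evaluate large box filters as cheaply as small ones.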

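The detector is based on the determinant of the Hessian matrix because of its good accuracy. The Hessian matrix of a continuous function f(x, y) is defined by

$$ H(f(x, y)) = \begin{bmatrix} \dfrac{\partial^2 f}{\partial x^2} & \dfrac{\partial^2 f}{\partial x \partial y} \\ \dfrac{\partial^2 f}{\partial x \partial y} & \dfrac{\partial^2 f}{\partial y^2} \end{bmatrix} \tag{10} $$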


By analogy with this definition, the Hessian matrix of an image I(x, y) at a given scale σ is computed from the second derivatives of its pixel intensities. These derivatives are obtained by convolving the image I with Gaussian second-order derivative kernels such as $\frac{\partial^2 g(\sigma)}{\partial x^2}$. Given a point X = (x, y), we obtain:

$$ L_{xx}(X, \sigma) = \frac{\partial^2 g(\sigma)}{\partial x^2} * I(x, y) \tag{11} $$

$$ L_{xy}(X, \sigma) = \frac{\partial^2 g(\sigma)}{\partial x \partial y} * I(x, y) \tag{12} $$

$$ L_{yy}(X, \sigma) = \frac{\partial^2 g(\sigma)}{\partial y^2} * I(x, y) \tag{13} $$

These are the second-order Gaussian derivatives that also underlie the Laplacian of Gaussian (which is their sum $L_{xx} + L_{yy}$). The determinant is computed at each point of the image, and the keypoints are located at its local extrema:

$$ \det H(X, \sigma) = L_{xx} L_{yy} - L_{xy}^2 \tag{14} $$
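To make equations (11)-(14) concrete, here is a sketch that builds sampled Gaussian derivative kernels and evaluates det H at a single scale with separable convolutions. It uses exact sampled Gaussians rather than the 9×9 box filters discussed next, and assumes the image is larger than the kernel (radius ⌈3σ⌉); the function names are illustrative. For reference, Bay et al. compensate the box-filter approximation with a relative weight of about 0.9, computing $\det H \approx D_{xx} D_{yy} - (0.9 D_{xy})^2$; that variant is not implemented here.

```python
import numpy as np

def gaussian_kernels(sigma):
    """Sampled 1-D Gaussian g, its first derivative g' and second derivative g''."""
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-t ** 2 / (2 * sigma ** 2)) / (np.sqrt(2 * np.pi) * sigma)
    dg = -t / sigma ** 2 * g
    d2g = (t ** 2 / sigma ** 4 - 1 / sigma ** 2) * g
    return g, dg, d2g

def convolve_separable(img, kernel_y, kernel_x):
    """2-D convolution with kernel_y(y) * kernel_x(x), zero padding, same output size."""
    along_x = np.apply_along_axis(np.convolve, 1, img, kernel_x, mode="same")
    return np.apply_along_axis(np.convolve, 0, along_x, kernel_y, mode="same")

def hessian_determinant(img, sigma):
    """Equations (11)-(14): det H = Lxx * Lyy - Lxy^2 at scale sigma."""
    img = np.asarray(img, dtype=float)
    g, dg, d2g = gaussian_kernels(sigma)
    if min(img.shape) < len(g):
        raise ValueError("image must be larger than the kernel")
    l_xx = convolve_separable(img, g, d2g)   # second derivative along x, smoothing along y
    l_yy = convolve_separable(img, d2g, g)   # second derivative along y, smoothing along x
    l_xy = convolve_separable(img, dg, dg)   # first derivative along both axes
    return l_xx * l_yy - l_xy ** 2
```

On a bright circular blob the determinant peaks at the blob centre, which is the kind of response the SURF detector keeps as a keypoint.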

Gaussians are optimal for scale-space analysis, but in practice they must be discretized and cropped. Bay et al. therefore proposed approximating these Gaussian second-order derivative filters with box filters $D_{xx}$, $D_{xy}$ and $D_{yy}$ to improve performance. The proposed 9×9 box filters approximate Gaussian derivatives with σ = 1.2 and represent the lowest scale, i.e. the highest spatial resolution.

Figure 4: SURF features extraction.

3.4.2 Histogram of Oriented Gradients

Histogram of Oriented Gradients (HOG) (Dalal and Triggs, 2005) is a feature descriptor providing information about the distribution of local gradients in a normalized image. It represents the appearance and shape of an object through the distribution of gradient intensities or edge directions. As shown in Fig. 5, HOG divides an image into small connected cells and, for each cell, computes a histogram of gradient directions. The final descriptor combines all these histograms. Given an image I, we compute its horizontal and vertical derivatives $I_x$ and $I_y$ by convolution:

$$ I_x = I * D_x \tag{15} $$

$$ I_y = I * D_y \tag{16} $$

where:

$$ D_x = \begin{bmatrix} -1 & 0 & 1 \end{bmatrix}, \quad D_y = \begin{bmatrix} -1 & 0 & 1 \end{bmatrix}^T \tag{17} $$

Then the gradient magnitude is computed as:

$$ |G| = \sqrt{I_x^2 + I_y^2} \tag{18} $$

and the orientation of the gradient is given by:

$$ \theta = \arctan\left( \frac{I_y}{I_x} \right) \tag{19} $$

In this phase, each pixel of a cell casts a vote, weighted by its gradient magnitude, into an orientation histogram. The cells are then grouped into larger connected blocks, within which the histograms are normalized. Hence, the HOG descriptor is obtained from the normalized histograms of all the blocks.

Figure 5: HOG features extraction.
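A simplified NumPy sketch of the steps in equations (15)-(19): centered [-1, 0, 1] derivatives, unsigned orientations in [0°, 180°), magnitude-weighted votes into 9 bins per 8×8 cell, and L2 normalization over overlapping 2×2-cell blocks. It uses `arctan2` instead of arctan(I_y/I_x) to avoid division by zero, and omits the vote interpolation, Gaussian block weighting and L2-Hys clipping of the original HOG; the cell, bin and block sizes are assumed defaults. Full implementations exist as `skimage.feature.hog` and OpenCV's `cv2.HOGDescriptor`.

```python
import numpy as np

def hog_descriptor(img, cell=8, bins=9, block=2, eps=1e-6):
    """Simplified HOG descriptor of a 2-D grayscale image (hypothetical helper)."""
    img = np.asarray(img, dtype=float)
    # Equations (15)-(17): centered differences with the [-1, 0, 1] mask (borders left at 0)
    ix = np.zeros_like(img)
    iy = np.zeros_like(img)
    ix[:, 1:-1] = img[:, 2:] - img[:, :-2]
    iy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(ix, iy)                      # equation (18)
    theta = np.degrees(np.arctan2(iy, ix)) % 180.0    # equation (19), unsigned orientation
    bin_idx = np.minimum((theta // (180.0 / bins)).astype(int), bins - 1)

    # One magnitude-weighted orientation histogram per cell
    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            rows = slice(cy * cell, (cy + 1) * cell)
            cols = slice(cx * cell, (cx + 1) * cell)
            hist[cy, cx] = np.bincount(bin_idx[rows, cols].ravel(),
                                       weights=magnitude[rows, cols].ravel(),
                                       minlength=bins)

    # Overlapping blocks of block x block cells, each L2-normalized, then concatenated
    features = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(features)
```

For a 64×128 window this yields 105 blocks of 36 values, i.e. 3780 features, the descriptor length used by Dalal and Triggs.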

3.4.3 Features Classification

This classification phase first requires learning from a dataset of images showing cars and images without cars since, in our case, we need a binary classifier for the two cases of a vacant or an occupied place. The learned classifier is then used in the real-time scenario with the input images to decide on the state of the concerned parking place. For this purpose we studied two classifiers: K-Nearest Neighbors (KNN) and the Support Vector Machine (SVM).

KNN (Hamid Parvin and Minaei-Bidgoli, 2008) is a classifier categorized as a “lazy learner”. In the classification phase, an input sample is assigned the class that is most frequent among its k nearest neighbors. SVM (Zhang et al., 2001), in contrast, is a discriminative classifier based on a supervised learning model. For each input sample, the SVM predicts which of the two given classes it belongs to. Its performance depends on the hyperplane that separates the learned data into the two categories.
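Both classifiers can be sketched in a few lines of NumPy. The KNN follows the majority-vote rule described above; the SVM here is a linear soft-margin SVM trained by full-batch subgradient descent on the regularized hinge loss, which is only one of several ways to fit an SVM (a library solver such as scikit-learn's `SVC` or `KNeighborsClassifier` would normally be used). Labels are assumed to be +1 for occupied and −1 for vacant, and `k`, `lam`, `lr` and `epochs` are hypothetical defaults. The inputs would be fixed-length feature vectors per patch; SURF keypoint descriptors would first have to be aggregated into such a vector, which is assumed here.

```python
import numpy as np

def knn_predict(train_x, train_y, query, k=3):
    """Majority label among the k nearest training vectors (Euclidean distance)."""
    dists = np.linalg.norm(train_x - query, axis=1)
    nearest = train_y[np.argsort(dists)[:k]]
    labels, counts = np.unique(nearest, return_counts=True)
    return labels[np.argmax(counts)]

def train_linear_svm(x, y, lam=0.01, lr=0.1, epochs=200):
    """Linear soft-margin SVM via subgradient descent on
    lam/2 * |w|^2 + mean(max(0, 1 - y (w.x + b))), with y in {+1, -1}."""
    w = np.zeros(x.shape[1])
    b = 0.0
    n = len(y)
    for _ in range(epochs):
        margins = y * (x @ w + b)
        active = margins < 1                       # samples inside or beyond the margin
        grad_w = lam * w - (y[active][:, None] * x[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def svm_predict(w, b, x):
    """Side of the separating hyperplane: +1 (occupied) or -1 (vacant)."""
    return np.where(x @ w + b >= 0, 1, -1)
```

KNN needs no training but stores every sample, whereas the SVM keeps only the hyperplane parameters `(w, b)`, which makes per-frame classification cheap.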

3.5 Decision

To decide on the state of a parking place, we combine the results of the two main algorithms: Adaptive Background Subtraction and feature classification. The main idea of our approach is to verify the new state of a parking place only if the place is overlapped by a moving car detected in the background subtraction step. In addition, to overcome the confusion that can appear during state transitions, we perform temporal integration.

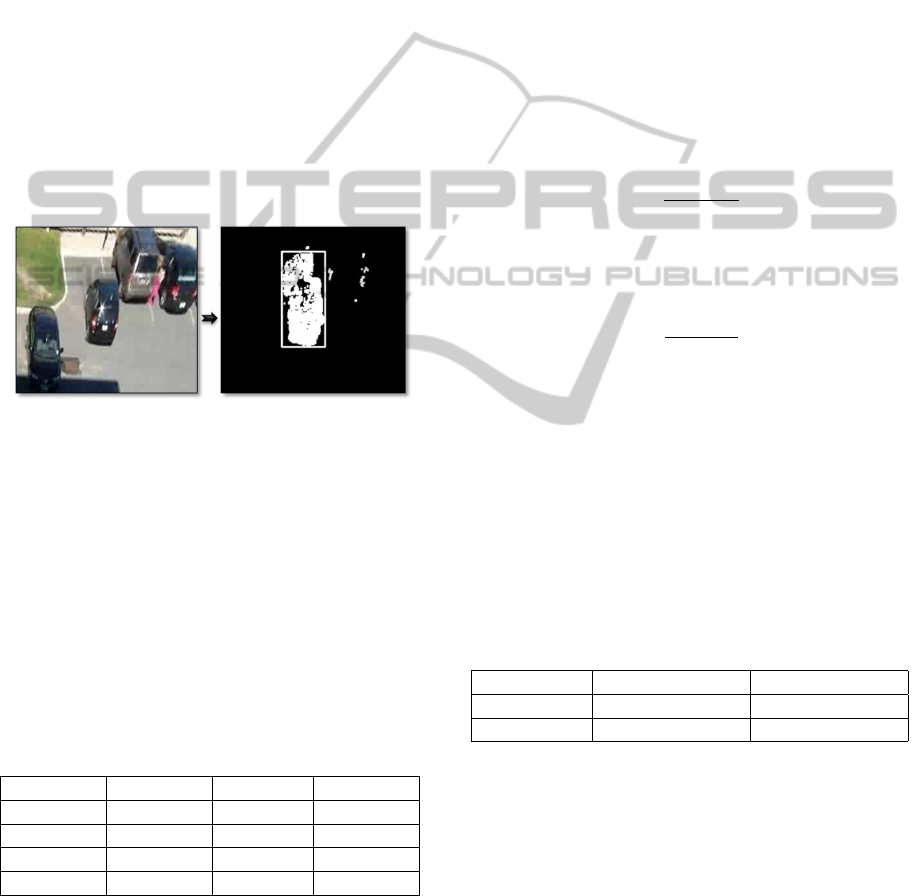

The first phase, Adaptive Background Subtraction, detects any change occurring in the scene, so that any car in motion is detected, whether it is entering or exiting. Fig. 6 presents an example of a car in motion detected as a foreground object. We then determine whether this moving car overlaps any parking place in the model. If so, we move to the next step and decide on the state of the places concerned. To perform this step, we rely on the chosen feature extraction and classification method (Sec. 3.4) to classify the patches of these parking places as vacant or occupied.
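The gating logic of this step can be sketched as follows, assuming the indices of the places touched by motion have already been computed from the foreground mask, and that `classify` is any patch classifier returning “Vacant” or “Occupied”; all names are hypothetical.

```python
def update_states(states, patches, moving_places, classify):
    """Re-classify only the places overlapped by a moving object; keep all other states."""
    new_states = list(states)              # copy, so the previous decisions stay available
    for idx in moving_places:
        new_states[idx] = classify(patches[idx])
    return new_states
```

Skipping places without motion avoids re-running the classifier on static patches and prevents spurious state flips caused by gradual lighting changes.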

Figure 6: Car in motion detection.

While testing our approach, we noticed that when a car is moving inside a parking space the results may be affected, and confusion may arise between the two cases, vacant and occupied. That is why we propose a third state representing the transition from occupied to vacant or vice versa. This intermediate transition state $s_t$ is based on temporal integration and relies on the two previous states of the same place, $s_{t-2}$ and $s_{t-1}$. Table 1 lists some of the cases considered for detecting a transition state, where $s_{t-0}$ is the current classification result returned before the decision $s_t$ is made.

Table 1: Temporal integration for “Transition” state.

| $s_{t-2}$ | $s_{t-1}$ | $s_{t-0}$ | $s_t$ |
|---|---|---|---|
| Occupied | Vacant | Occupied | Transition |
| Vacant | Occupied | Vacant | Transition |
| Occupied | Transition | Vacant | Transition |
| Transition | Vacant | Occupied | Transition |

This introduced state of transition can reduce the

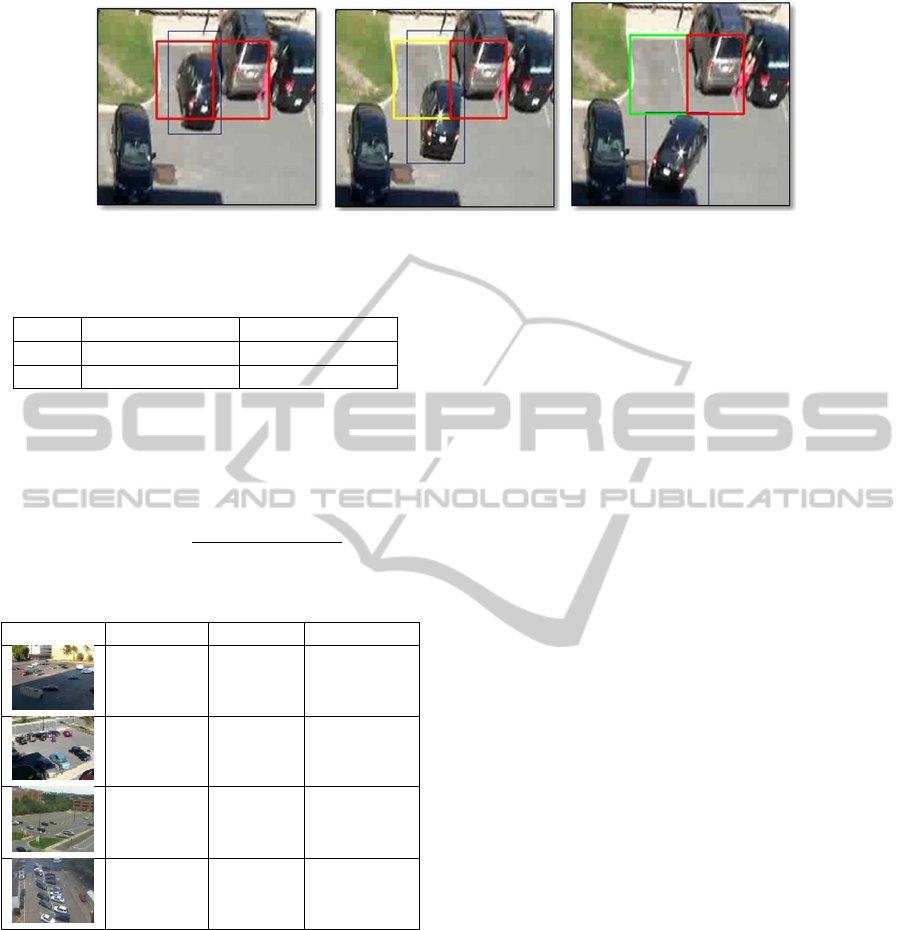

false classifications and improve the performance of

our approach. The Fig.7 presents a case where a car is

living the first parking place. In the Fig.7(a) the car is

detected in motion and the parking place is marked as

”Occupied”. Fig.7(b) is a ”Transition” state presented

in yellow while the decision of the occupancy of the

parking place is still not final. Finally Fig.7(c) shows

the car after living the parking spaces whose state is

now ”Vacant”.
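The rows of Table 1 can be encoded as a lookup on the triple $(s_{t-2}, s_{t-1}, s_{t-0})$. Because the paper lists only some of the considered cases, the fallback used for triples not in the table (accept the current classifier output $s_{t-0}$) is an assumption made for this sketch.

```python
OCCUPIED, VACANT, TRANSITION = "Occupied", "Vacant", "Transition"

# Rows of Table 1: (s_{t-2}, s_{t-1}, s_{t-0}) -> s_t
TABLE_1 = {
    (OCCUPIED, VACANT, OCCUPIED): TRANSITION,
    (VACANT, OCCUPIED, VACANT): TRANSITION,
    (OCCUPIED, TRANSITION, VACANT): TRANSITION,
    (TRANSITION, VACANT, OCCUPIED): TRANSITION,
}

def decide_state(s_t2, s_t1, s_t0):
    """Final state s_t from the two previous decisions and the current classification.
    Fallback (assumption, not from the paper): keep the classifier output s_{t-0}."""
    return TABLE_1.get((s_t2, s_t1, s_t0), s_t0)
```

A flickering sequence such as Occupied, Vacant, Occupied is thus reported as “Transition” instead of toggling the displayed state at every frame.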

4 EXPERIMENTAL RESULTS

To evaluate the effectiveness of our approach, we performed several tests on the VIRAT video dataset (Oh et al., 2011). Our evaluation is based on recall R and precision P. Recall is the fraction of relevant instances that are retrieved by our approach and reflects its completeness. It is defined as:

$$ R = \frac{TP}{TP + FN} \tag{20} $$

Precision is the fraction of retrieved instances that are relevant and can be seen as a measure of exactness. It is defined as:

$$ P = \frac{TP}{TP + FP} \tag{21} $$

where TP is the number of true positives (occupied places correctly detected as occupied), FN is the number of false negatives (occupied places that are not detected), and FP is the number of false positives (places detected as occupied although they are vacant).
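Equations (20) and (21) as code, applied to made-up counts chosen only for illustration (they are not measurements from this paper):

```python
def recall_precision(tp, fn, fp):
    """Equations (20) and (21)."""
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    return recall, precision

# Hypothetical counts: 90 occupied places detected, 10 missed, 5 vacant places reported as occupied
r, p = recall_precision(tp=90, fn=10, fp=5)
print(f"R={r:.3f}, P={p:.3f}")  # R=0.900, P=0.947
```

Missed cars only lower recall, while vacant places wrongly reported as occupied only lower precision, which is why both measures are reported.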

The first measurements aim to show the effect of the Homography Transformation (HT) adopted for preprocessing the input video. As Table 2 shows, HT improves recall for both feature extractors (from 0.89 to 0.92 with SURF and from 0.77 to 0.80 with HOG), and it also improves precision with HOG.

Table 2: Measurements for the Homography Transformation (HT).

| | SURF | HOG |
|---|---|---|
| Without HT | R=0.89, P=0.98 | R=0.77, P=0.81 |
| With HT | R=0.92, P=0.89 | R=0.80, P=0.89 |

Then, to choose the algorithms for feature extraction and classification, we carried out a comparative study of the possible combinations. Table 3 presents the results obtained for the different combinations. Our tests show that, in our case, using SURF as the feature extractor and SVM as the classifier yields more pertinent results than the other combinations, with an average recall of 0.92.

(a) Occupied (b) Transition (c) Vacant

Figure 7: Transition in parking space state.

Table 3: Comparative study of methods.

| | SURF | HOG |
|---|---|---|
| SVM | R=0.92, P=0.89 | R=0.80, P=0.89 |
| KNN | R=0.87, P=0.86 | R=0.76, P=0.85 |

Once this configuration of methods is adopted, we can test the performance of our proposed approach under different parking dispositions. We also introduce the F-measure, which combines the recall and precision defined above into their harmonic mean:

$$ F\text{-measure} = 2 \cdot \frac{\text{Recall} \cdot \text{Precision}}{\text{Recall} + \text{Precision}} \tag{22} $$

Table 4: Tests for different parking dispositions.

| Parking | F-measure | Recall (R) | Precision (P) |
|---|---|---|---|
| 1 | 0.88 | 0.89 | 0.87 |
| 2 | 0.98 | 0.97 | 0.99 |
| 3 | 0.95 | 0.99 | 0.92 |
| 4 | 0.95 | 0.98 | 0.93 |
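As a consistency check of equation (22), recomputing the harmonic mean from the recall and precision columns of Table 4 reproduces its F-measure column to two decimals:

```python
def f_measure(recall, precision):
    """Equation (22): harmonic mean of recall and precision."""
    return 2 * recall * precision / (recall + precision)

# (Recall, Precision) pairs of Table 4
TABLE_4 = [(0.89, 0.87), (0.97, 0.99), (0.99, 0.92), (0.98, 0.93)]
for r, p in TABLE_4:
    print(f"R={r:.2f} P={p:.2f} -> F={f_measure(r, p):.2f}")
# R=0.89 P=0.87 -> F=0.88
# R=0.97 P=0.99 -> F=0.98
# R=0.99 P=0.92 -> F=0.95
# R=0.98 P=0.93 -> F=0.95
```

Being a harmonic mean, the F-measure is pulled toward the lower of the two values, so it penalizes a lot with high recall but poor precision (or the reverse) more than an arithmetic mean would.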

Our approach was tested on four different parking dispositions. For each parking lot we performed the measurements over several video sequences. Table 4 presents the average measurements for each parking lot. The results show that the performance of our approach remains stable under the different lighting and weather conditions of the tested parking lots, and that recall and precision stay at or above 0.92 for three of the parking dispositions. For parking lot 1, the measures average 0.88. This decrease in performance is mainly caused by inter-space occlusion. Another problem that can affect the results is pedestrians or other types of vehicles passing through the parking places without occupying one.

5 CONCLUSIONS

This paper presents a new approach for vision-based vacant parking place detection, based on the combination of an appearance-based method, Adaptive Background Subtraction, for foreground object detection, and a recognition-based method, the SURF algorithm, for feature extraction. The choice of these techniques helps to reduce the problems of lighting variation and shadow effects. Ordinarily, parking places are classified as “Vacant” or “Occupied”. To improve the performance of our approach, we introduce a new intermediate state, “Transition”, which represents the passage of a parking space from one state to the other. This improves the accuracy of the obtained results and prevents confusion.

Our proposed approach provides good results but still suffers in unexpected scenarios such as the presence of pedestrians or inter-object occlusion. Our future work will focus on improving the techniques used, in order to propose a novel approach that overcomes these problems and further improves performance.

ACKNOWLEDGEMENTS

The authors would like to acknowledge the financial support of this work by grants from the General Direction of Scientific Research (DGRST), Tunisia, under the ARUB program.

The research and innovation were carried out in the framework of a MOBIDOC thesis financed by the EU under the PASRI program.


REFERENCES

Bay, H., Ess, A., Tuytelaars, T., and Gool, L. V. (2008). SURF: Speeded up robust features. Computer Vision and Image Understanding, 110:346–359.

Bong, D. B. L.; Ting, K. C. L. K. C. (2008). Integrated approach in the design of car park occupancy information system (COINS). IAENG International Journal of Computer Science, 35:7.

Dalal, N. and Triggs, B. (2005). Histograms of oriented gradients for human detection. In Schmid, C., Soatto, S., and Tomasi, C., editors, International Conference on Computer Vision & Pattern Recognition, volume 2, pages 886–893, INRIA Rhône-Alpes, ZIRST-655, av. de l’Europe, Montbonnot-38334.

Dubrofsky, E. and Woodham, R. J. (2008). Combining line and point correspondences for homography estimation. In ISVC (2), volume 5359 of Lecture Notes in Computer Science, pages 202–213. Springer.

Fabian, T. (2008). An algorithm for parking lot occupation detection. In Computer Information Systems and Industrial Management Applications, 2008. CISIM ’08. 7th, pages 165–170.

Hamid Parvin, H. A. and Minaei-Bidgoli, B. (2008). MKNN: Modified k-nearest neighbor. In World Congress on Engineering and Computer Science 2008.

Huang, C.-C., Dai, Y.-S., and Wang, S.-J. (2012). A surface-based vacant space detection for an intelligent parking lot. In ITS Telecommunications (ITST), 2012 12th International Conference on, pages 284–288.

Huang, C. C. and Wang, S. J. (2010). A hierarchical Bayesian generation framework for vacant parking space detection. IEEE Trans. Cir. and Sys. for Video Technol., 20(12):1770–1785.

Ichihashi, H., Katada, T., Fujiyoshi, M., Notsu, A., and Honda, K. (2010). Improvement in the performance of camera based vehicle detector for parking lot. In Fuzzy Systems (FUZZ), 2010 IEEE International Conference on, pages 1–7.

Ju, Z. and Liu, H. (2012). Fuzzy Gaussian mixture models. Pattern Recognition, 45:1146–1158.

Lin, C.-C. and Wang, M.-S. (2012). A vision based top-view transformation model for a vehicle parking assistant. Sensors, 12(4):4431–4446.

Lin, S.-F., Chen, Y.-Y., and Liu, S.-C. (2006). A vision-based parking lot management system. In Systems, Man and Cybernetics, 2006. SMC ’06. IEEE International Conference on, volume 4, pages 2897–2902.

Oh, S., Hoogs, A., Perera, A., Cuntoor, N., Chen, C.-C., Lee, J. T., Mukherjee, S., Aggarwal, J. K., Lee, H., Davis, L., Swears, E., Wang, X., Ji, Q., Reddy, K., Shah, M., Vondrick, C., Pirsiavash, H., Ramanan, D., Yuen, J., Torralba, A., Song, B., Fong, A., Roy-Chowdhury, A., and Desai, M. (2011). A large-scale benchmark dataset for event recognition in surveillance video. In CVPR.

Sastre, R., Gil Jimenez, P., Acevedo, F. J., and Maldonado Bascon, S. (2007). Computer algebra algorithms applied to computer vision in a parking management system. In Industrial Electronics, 2007. ISIE 2007. IEEE International Symposium on, pages 1675–1680.

Tschentscher, M., Neuhausen, M., Koch, C., König, M., Salmen, J., and Schlipsing, M. (2013). Comparing image features and machine learning algorithms for real-time parking space classification. In Computing in Civil Engineering (2013), pages 363–370.

Wu, Q., Huang, C., yu Wang, S., chen Chiu, W., and Chen, T. (2007). Robust parking space detection considering inter-space correlation. In ICME, pages 659–662. IEEE.

Zhang, L., Lin, F., and Zhang, B. (2001). Support vector machine learning for image retrieval. In Image Processing, 2001. Proceedings. 2001 International Conference on, volume 2, pages 721–724.
