Online Knowledge Gradient Exploration in an Unknown Environment

Saba Q. Yahyaa and Bernard Manderick

Department of Computer Science, Vrije Universiteit Brussel, Pleinlaan 2, 1050 Brussels, Belgium

Keywords: Online reinforcement learning, value function approximation, (kernel-based) least squares policy iteration, approximate linear dependency kernel sparsification, knowledge gradient exploration policy.

Abstract: We present online kernel-based LSPI (least squares policy iteration), which is an extension of offline kernel-based LSPI. Online kernel-based LSPI combines characteristics of both online LSPI and offline kernel-based LSPI to improve the convergence rate as well as the optimal policy performance of online LSPI. Online kernel-based LSPI uses the knowledge gradient policy as an exploration policy and the approximate linear dependency based kernel sparsification method to select features automatically. We compare the optimal policy performance of online kernel-based LSPI and online LSPI on 5 discrete Markov decision problems, where online kernel-based LSPI outperforms online LSPI.

1 INTRODUCTION

A Reinforcement Learning (RL) agent has to learn to make optimal sequential decisions while interacting with its environment. At each time step, the agent takes an action and, as a result, the environment transits from the current state to the next one while the agent receives a feedback signal from the environment in the form of a scalar reward.

The mapping from states to actions that specifies which actions to take in states is called a policy $\pi$, and the goal of the agent is to find the optimal policy $\pi^*$, i.e. the one that maximises the total expected discounted reward, as soon as possible. The state-action value function $Q^\pi(s,a)$ is defined as the total expected discounted reward obtained when the agent starts in state $s$, takes action $a$, and follows policy $\pi$ thereafter. The optimal policy maximises these $Q^\pi(s,a)$ values.

When the agent's environment can be modelled as a Markov Decision Process (MDP), the Bellman equations for the state-action value functions, one per state-action pair, can be written down and solved by algorithms like policy iteration or value iteration (Sutton and Barto, 1998). We refer to Section 2.1 for more details.

When no such model is available, the Bellman equations cannot be written down. Instead, the agent has to rely only on information collected while interacting with its environment. At each time step, the information collected consists of the current state, the action taken in that state, the reward obtained and the next state of the environment. The agent can either learn offline, when a batch of past experience is first collected and subsequently used and reused, or online, when it tries to improve its behaviour at each time step based on the current information.

Fortunately, the optimal Q-values can still be determined using Q-learning (Sutton and Barto, 1998), which represents the action-values $Q^\pi(s,a)$ as a lookup table and uses the agent's experience to build them. Unfortunately, when the state and/or action spaces are large finite or continuous spaces, the agent faces a challenge called the curse of dimensionality, since the memory needed to store all the Q-values grows exponentially in the number of state and action variables. Computing all Q-values becomes infeasible. To handle this challenge, function approximation methods have been introduced to approximate the Q-values, e.g. (Lagoudakis and Parr, 2003) have proposed Least Squares Policy Iteration (LSPI) to find the optimal policy when no model of the environment is available. LSPI is an example of both approximate policy iteration and offline learning. LSPI approximates the Q-values using a linear combination of predefined basis functions. The choice of basis functions has a large impact on the performance of LSPI in terms of the number of iterations that LSPI needs to converge to a policy, the probability that the converged policy is optimal, and the accuracy of the approximated Q-values.


Q. Yahyaa S. and Manderick ..

Online Knowledge Gradient Exploration in an Unknown Environment.

DOI: 10.5220/0004718700050013

In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 5-13

ISBN: 978-989-758-015-4

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

To improve the accuracy of the approximated Q-values and to find a (near) optimal policy, (X. Xu and Lu, 2007) have proposed Kernel-Based LSPI (KBLSPI), an example of offline approximate policy iteration that uses Mercer kernels to approximate Q-values (Vapnik, 1998). Moreover, kernel-based LSPI provides automatic feature selection for the kernel basis functions, since it uses the approximate linear dependency sparsification method described in (Y. Engel and Meir, 2004).

(L. Buşoniu and Babuška, 2010) have adapted LSPI, which does offline learning, for online reinforcement learning and the result is called online LSPI. A good online learning algorithm must produce acceptable performance quickly, rather than only at the end of the learning process as is the case in offline learning. In order to obtain good performance, an online algorithm has to find a proper balance between exploitation, i.e. using the collected information in the best possible way, and exploration, i.e. testing out the available alternatives (Sutton and Barto, 1998). Several exploration policies are available for that purpose, and one of the most popular is ε-greedy exploration, which selects with probability $1 - \varepsilon$ the action with the highest estimated Q-value and with probability $\varepsilon$ selects uniformly at random one of the actions available in the current state. To get good performance, the parameter ε has to be tuned for each problem. To get rid of parameter tuning and to increase the performance of online LSPI, (Yahyaa and Manderick, 2013) have proposed using the Knowledge Gradient (KG) policy (I.O. Ryzhov and Frazier, 2012) in online-LSPI.

To improve the performance of online-LSPI and to get automatic feature selection, we propose online kernel-based LSPI and we use the knowledge gradient (KG) as an exploration policy. The rest of the paper is organised as follows. In Section 2, we present Markov decision processes, LSPI, the knowledge gradient policy for online learning, kernel-based LSPI and the approximate linear dependency test. In Section 3, we present the knowledge gradient policy in online kernel-based LSPI. In Section 4, we give the domains used in our experiments and our results. We conclude in Section 5.

2 PRELIMINARIES

In this section, we discuss Markov decision processes, online LSPI, the knowledge gradient exploration policy (KG), offline kernel-based LSPI (KBLSPI) and approximate linear dependency (ALD).

2.1 Markov Decision Process

A finite Markov decision process (MDP) is a 5-tuple $(S, A, P, R, \gamma)$, where the state space $S$ contains a finite number of states $s$ and the action space $A$ contains a finite number of actions $a$, the transition probabilities $P(s,a,s')$ give the conditional probabilities $p(s'|s,a)$ that the environment transits to state $s'$ when the agent takes action $a$ in state $s$, the reward distributions $R(s,a,s')$ give the expected immediate reward when the environment transits to state $s'$ after taking action $a$ in state $s$, and $\gamma \in [0,1)$ is the discount factor that determines the present value of future rewards (Puterman, 1994; Sutton and Barto, 1998).

A deterministic policy $\pi : S \to A$ determines which action $a$ the agent takes in each state $s$. For the MDPs considered, there is always a deterministic optimal policy and so we can restrict the search process to such policies (Puterman, 1994; Sutton and Barto, 1998). By definition, the state-action value function $Q^\pi(s,a)$ for a policy $\pi$ gives the expected total discounted reward $E_\pi\left(\sum_{t=0}^{\infty} \gamma^t r_t\right)$ when the agent starts in state $s$, takes action $a$ and follows policy $\pi$ thereafter. The goal of the agent is to find the optimal policy $\pi^*$, i.e. the one that maximizes $Q^\pi$ for every state $s$ and action $a$: $\pi^*(s) = \arg\max_{a \in A} Q^*(s,a)$, where $Q^*(s,a) = \max_\pi Q^\pi(s,a)$ is the optimal state-action value function. For the MDPs considered, the Bellman equations for the state-action value function $Q^\pi$ are given by

$$Q^\pi(s,a) = R(s,a,s') + \gamma \sum_{s'} P(s,a,s')\, Q^\pi(s',a') \qquad (1)$$

In Equation 1, the sum is taken over all states $s'$ that can be reached from state $s$ when action $a$ is taken, and the action $a'$ taken in the next state $s'$ is determined by the policy $\pi$, i.e. $a' = \pi(s')$. If the MDP is completely known, then algorithms such as value or policy iteration find the optimal policy $\pi^*$. Policy iteration starts with an initial policy $\pi_0$, e.g. randomly selected, and repeats the next two steps until no further improvement is found: 1) policy evaluation, where the current policy $\pi_i$ is evaluated using the Bellman equations (Equation 1) to calculate the corresponding value function $Q^{\pi_i}$, and 2) policy improvement, where this value function is used to find an improved new policy $\pi_{i+1}$ that is greedy with respect to the previous one, i.e. $\pi_{i+1}(s) = \arg\max_{a \in A} Q^{\pi_i}(s,a)$ (Sutton and Barto, 1998).
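The two steps of policy iteration can be illustrated with a minimal Python sketch on a known 4-state chain. This is not the authors' implementation (their experiments are in MATLAB). The helper names `chain_mdp` and `policy_iteration` are hypothetical, and the sketch assumes that the reward is paid on arrival in a rewarded state, that moves beyond the ends of the chain leave the state unchanged, and that the immediate reward in Equation 1 is taken as its expectation over $s'$.

```python
import numpy as np

def chain_mdp(n_states=4, p_success=0.9, rewarded=(1, 2)):
    """Build P[s, a, s'] and R[s, a, s'] for a chain (0-based states).

    Action 0 is GoLeft, action 1 is GoRight. Assumption: the reward is
    paid on arrival in a rewarded state and the chain ends are walls.
    """
    P = np.zeros((n_states, 2, n_states))
    for s in range(n_states):
        left, right = max(s - 1, 0), min(s + 1, n_states - 1)
        P[s, 0, left] += p_success
        P[s, 0, right] += 1.0 - p_success
        P[s, 1, right] += p_success
        P[s, 1, left] += 1.0 - p_success
    R = np.zeros((n_states, 2, n_states))
    R[:, :, list(rewarded)] = 1.0
    return P, R

def policy_iteration(P, R, gamma=0.9):
    """Exact policy iteration for a fully known finite MDP."""
    n_states = P.shape[0]
    idx = np.arange(n_states)
    r = np.einsum("sax,sax->sa", P, R)      # expected immediate reward
    policy = np.zeros(n_states, dtype=int)  # initial policy: always GoLeft
    while True:
        # 1) policy evaluation: solve V = r_pi + gamma * P_pi V
        P_pi, r_pi = P[idx, policy], r[idx, policy]
        V = np.linalg.solve(np.eye(n_states) - gamma * P_pi, r_pi)
        Q = r + gamma * (P @ V)
        # 2) policy improvement: act greedily with respect to Q
        new_policy = Q.argmax(axis=1)
        if np.array_equal(new_policy, policy):
            return policy, Q
        policy = new_policy

P, R = chain_mdp()
policy, Q = policy_iteration(P, R)
print(policy)  # 0 = GoLeft, 1 = GoRight, one entry per state
```

Solving the linear system in the evaluation step is only feasible because the model $P$ and $R$ is known and small; the remainder of this section deals with the case where neither holds.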

For finite MDPs, the action-value function $Q^\pi$ for a policy $\pi$ can be represented by a lookup table of size $|S| \times |A|$, one entry per state-action pair. However, when the state and/or action spaces are large, this approach becomes computationally infeasible due to the curse of dimensionality and one has to rely on function approximation instead. Moreover, the agent does not know the transition probabilities $P(s,a,s')$ and the reward distributions $R(s,a,s')$. Therefore, it must rely on information collected while interacting with the environment to learn the optimal policy. The information collected is a trajectory of samples of the form $(s_t, a_t, r_t, s_{t+1})$ or $(s_t, a_t, r_t, s_{t+1}, a_{t+1})$, where $s_t$, $a_t$, $r_t$, $s_{t+1}$, and $a_{t+1}$ are the state, the action taken in that state, the reward, the next state, and the next action in the next state, respectively. To overcome these problems, least squares policy iteration (LSPI) uses such samples to approximate the $Q^\pi$-values (Lagoudakis and Parr, 2003).

More recently, (L. Buşoniu and Babuška, 2010) have adapted LSPI so that it can work online, and (Yahyaa and Manderick, 2013) have used the knowledge gradient (KG) policy in this online LSPI. We are interested in the most challenging RL problem: online learning in a stochastic environment of which no model is available. Therefore, we are going to compare the performance of online-LSPI with the proposed algorithm using the KG policy.

2.2 Least Squares Policy Iteration

LSPI approximates the action-value function $Q^\pi$ for a policy $\pi$ in a linear way (Lagoudakis and Parr, 2003):

$$\hat{Q}^\pi(s,a;w^\pi) = \sum_{i=1}^{n} \phi_i(s,a)\, w_i^\pi \qquad (2)$$

where $n$, $n \ll |S \times A|$, is the number of basis functions, the weights $(w_i^\pi)_{i=1}^{n}$ are parameters to be learned for each policy $\pi$, and $\{\phi_i(s,a)\}_{i=1}^{n}$ is the set of predefined basis functions. Let $\Phi$ be the basis matrix of size $|S \times A| \times n$, where each row contains the values of all basis functions in one of the state-action pairs $(s,a)$ and each column contains the values of one of the basis functions $\phi_i$ in all state-action pairs, and let $w^\pi$ be a column weight vector of length $n$.

Given a trajectory of $L$ samples $(s_t, a_t, r_t, s_{t+1})_{t=1}^{L}$, offline-LSPI, an example of approximate policy iteration, repeats the following two steps until no further improvement in the policy is obtained: 1) approximate policy evaluation, which approximates the state-action value function $Q^\pi$ of the current policy $\pi$, and 2) approximate policy improvement, which derives from the current estimated state-action value function $\hat{Q}^\pi$ a better policy $\pi'$, i.e. $\pi' = \arg\max_{a \in A} \hat{Q}^\pi(s,a)$.

Using the least squares error of the projected Bellman equation, Equation 1, the weight vector $w^\pi$ can be approximated as follows (Lagoudakis and Parr, 2003):

$$\hat{A}\,\hat{w}^\pi = \hat{b} \qquad (3)$$

where $\hat{A}$ is a matrix and $\hat{b}$ is a vector. Offline-LSPI updates the matrix $\hat{A}$ and the vector $\hat{b}$ from all available samples as follows:

$$\hat{A}_t = \hat{A}_{t-1} + \phi(s_t,a_t)\left[\phi(s_t,a_t) - \gamma\,\phi(s_{t+1},\pi(s_{t+1}))\right]^T$$
$$\hat{b}_t = \hat{b}_{t-1} + \phi(s_t,a_t)\, r_t \qquad (4)$$

where $T$ denotes the transpose and $r_t$ is the immediate reward obtained at time step $t$. After iterating over all collected samples, $\hat{w}^\pi$ can be found. (L. Buşoniu and Babuška, 2010) have adapted offline-LSPI for online learning. The changes with respect to the offline algorithm are twofold: 1) online-LSPI updates the matrix $\hat{A}$ and the vector $\hat{b}$ after each time step $t$. Then, after every $K_\theta$ samples obtained from the environment, online-LSPI estimates the weight vector $\hat{w}^\pi$ for the current policy $\pi$, computes the corresponding approximated $\hat{Q}$-function, and derives an improved new learned policy $\pi'$, $\pi' = \arg\max_{a \in A} \hat{Q}^\pi(s,a)$. When $K_\theta = 1$, online-LSPI is called fully optimistic, and when $K_\theta > 1$ is a small value, online-LSPI is called partially optimistic. 2) online-LSPI needs an exploration policy, and (Yahyaa and Manderick, 2013) proposed using the KG policy as an exploration policy instead of the ε-greedy policy. (Yahyaa and Manderick, 2013) have shown that this increases the performance of online-LSPI, e.g. the average frequency with which the learned policy converges to the optimal policy. Therefore, we use the KG policy in our algorithm and experiments.
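The sample-based accumulation of $\hat{A}$ and $\hat{b}$ in Equation 4, followed by solving Equation 3, might look as follows in Python. This is a sketch under stated assumptions rather than the authors' code: the function name `lstdq`, the callables `phi` and `policy`, and the small `ridge` term that keeps $\hat{A}$ invertible are all illustrative choices.

```python
import numpy as np

def lstdq(samples, phi, policy, n_features, gamma=0.9, ridge=1e-6):
    """Accumulate A and b over (s, a, r, s_next) samples and solve A w = b.

    phi(s, a) returns a feature vector of length n_features and policy(s)
    returns the action of the policy being evaluated. The ridge term is an
    assumption of this sketch that keeps A invertible.
    """
    A = ridge * np.eye(n_features)
    b = np.zeros(n_features)
    for s, a, r, s_next in samples:
        f = phi(s, a)
        f_next = phi(s_next, policy(s_next))
        A += np.outer(f, f - gamma * f_next)  # first line of Equation 4
        b += f * r                            # second line of Equation 4
    return np.linalg.solve(A, b)              # Equation 3

# Tiny deterministic example with one action and one-hot state features:
# state 0 moves to state 1 with reward 1, state 1 loops with reward 0.
one_hot = lambda s, a: np.eye(2)[s]
samples = [(0, 0, 1.0, 1), (1, 0, 0.0, 1)]
w = lstdq(samples, one_hot, policy=lambda s: 0, n_features=2)
print(w)  # approximately Q(0, 0) = 1 and Q(1, 0) = 0
```

In an online setting the same two accumulation lines would run once per time step, with the solve performed only every $K_\theta$ steps.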

2.3 KG Exploration Policy

Knowledge gradient (KG) (I.O. Ryzhov and Frazier, 2012) assumes that the rewards of each action $a$ are drawn according to a probability distribution, which it takes to be a normal distribution $N(\mu_a, \sigma_a^2)$ with mean $\mu_a$ and standard deviation $\sigma_a$. The current estimates, based on the rewards obtained so far, are denoted by $\hat{\mu}_a$ and $\hat{\sigma}_a$. The root-mean-square error (RMSE) of the estimated mean reward $\hat{\mu}_a$ given $n$ rewards resulting from action $a$ is given by $\hat{\bar{\sigma}}_a = \hat{\sigma}_a / \sqrt{n}$. KG is an index strategy that determines for each action $a$ the index $V^{KG}(a)$ and selects the action with the 'highest' index. The index $V^{KG}(a)$ is calculated as follows:

$$V^{KG}(a) = \hat{\bar{\sigma}}_a \, f\!\left(-\left|\frac{\hat{\mu}_a - \max_{a' \neq a} \hat{\mu}_{a'}}{\hat{\bar{\sigma}}_a}\right|\right) \qquad (5)$$

In this equation, $f(x) = \phi_{KG}(x) + x\,\Phi_{KG}(x)$, where $\phi_{KG}(x) = \frac{1}{\sqrt{2\pi}} \exp(-x^2/2)$ is the density of the standard normal distribution and $\Phi_{KG}(x) = \int_{-\infty}^{x} \phi_{KG}(x')\,dx'$ is its cumulative distribution. The parameter $\hat{\bar{\sigma}}_a$ is the RMSE of the estimated mean reward $\hat{\mu}_a$. Then KG selects the next action according to:

$$a^{KG} = \arg\max_{a \in A} \left( \hat{\mu}_a + \frac{\gamma}{1-\gamma}\, V^{KG}(a) \right) \qquad (6)$$

where the second term on the right-hand side is the total discounted index of action $a$. KG prefers those actions about which comparatively little is known. These actions are the ones whose RMSE (or spread) $\hat{\bar{\sigma}}_a$ around the estimated mean reward $\hat{\mu}_a$ is large. Thus, KG prefers an action $a$ over its alternatives if its confidence in the estimated mean reward $\hat{\mu}_a$ is low.

For discrete MDPs, (Yahyaa and Manderick, 2013) estimated the Q-values $\hat{Q}(s_t,a_i)$ and the RMSE of the estimated Q-values $\hat{\bar{\sigma}}^2_q$ to calculate the index $V^{KG}(a_i)$ for each available action $a_i \in A_{s_t}$ in the current state $s_t$, where $A_{s_t}$ is the set of actions in state $s_t$. The pseudocode of the KG exploration policy is shown in Figure 1. KG is easy to implement and does not have parameters to be tuned, unlike the ε-greedy or softmax action selection policies (Sutton and Barto, 1998). KG balances exploration and exploitation by adding an exploration bonus to the estimated Q-value of each available action $a_i$ in the current state $s_t$; this bonus depends on all estimated Q-values $\hat{Q}(s_t,a_i)$ and the RMSE of the estimated Q-values $\hat{\bar{\sigma}}^2_q$ (steps 2-8 in Figure 1). The RMSEs $\hat{\bar{\sigma}}^2_q$ are updated according to (Powell, 2007).

1. Input: current state $s_t$; discount factor $\gamma$; the current estimates $\hat{Q}(s_t,a_i)$; the current RMSEs $\hat{\bar{\sigma}}^2_q(s_t,a_i)$ for all actions $a_i$ in state $s_t$
2. For $a_i \in A_{s_t}$
3.     $\acute{\hat{Q}}(s_t,a_i) \leftarrow \max_{a_j \in A_{s_t},\, a_j \neq a_i} \hat{Q}(s_t,a_j)$
4. End for
5. For $a_i \in A_{s_t}$
6.     $\zeta_{a_i} \leftarrow -\mathrm{abs}\left( (\hat{Q}(s_t,a_i) - \acute{\hat{Q}}(s_t,a_i)) / \hat{\bar{\sigma}}_q(s_t,a_i) \right)$; $f(\zeta_{a_i}) \leftarrow \zeta_{a_i}\,\Phi_{KG}(\zeta_{a_i}) + \phi_{KG}(\zeta_{a_i})$
7.     $V^{KG}(a_i) \leftarrow \hat{Q}(s_t,a_i) + \frac{\gamma}{1-\gamma}\, \hat{\bar{\sigma}}_q(s_t,a_i)\, f(\zeta_{a_i})$
8. End for
9. Output: $a_t \leftarrow \arg\max_{a_i \in A_{s_t}} V^{KG}(a_i)$

Figure 1: Algorithm: (Knowledge Gradient).
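The steps of Figure 1 translate almost line by line into Python. The sketch below is illustrative only: the function name `kg_select` is hypothetical, the per-action RMSEs are passed in as plain positive numbers (their update rule from (Powell, 2007) is not reproduced), and at least two actions are assumed so that the "best other action" exists.

```python
import math

def normal_pdf(x):
    """Density of the standard normal distribution."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def normal_cdf(x):
    """Cumulative distribution of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def kg_select(q, sigma, gamma=0.9):
    """Return (chosen action index, list of KG scores), as in Figure 1.

    q[i] is the estimated Q-value of action i in the current state and
    sigma[i] > 0 is the RMSE of that estimate.
    """
    scores = []
    for i, (q_i, s_i) in enumerate(zip(q, sigma)):
        # step 3: best estimate among the other actions
        best_other = max(q_j for j, q_j in enumerate(q) if j != i)
        # step 6: normalised distance to the best alternative
        zeta = -abs((q_i - best_other) / s_i)
        f = zeta * normal_cdf(zeta) + normal_pdf(zeta)
        # step 7: estimate plus discounted exploration bonus
        scores.append(q_i + gamma / (1.0 - gamma) * s_i * f)
    best = max(range(len(q)), key=scores.__getitem__)  # step 9
    return best, scores

# The second action has a slightly lower estimate but a much larger RMSE,
# so its exploration bonus dominates and it is selected.
action, scores = kg_select(q=[1.0, 0.9], sigma=[0.01, 1.0])
print(action, scores)
```

When every RMSE is small relative to the gaps between the estimates, the bonus vanishes and the selection reduces to the greedy action.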

2.4 Kernel-based LSPI

Kernel-based LSPI (X. Xu and Lu, 2007) is a kernelized version of offline-LSPI. Kernel-based LSPI uses Mercer kernels in the approximate policy evaluation and improvement (Vapnik, 1998). Consider a finite set of points $\{z_1, z_2, \dots, z_t\}$, where $z_i$ is a state-action pair, with the corresponding set of basis functions $\phi(z) : z \to \mathbb{R}$. Mercer's theorem states that the kernel function $K$ yields a positive definite kernel matrix whose entries are inner products of the basis functions, i.e. $K(z_i,z_j) = \langle \phi(z_i), \phi(z_j) \rangle$.

Given a trajectory of $L$ samples and an initial policy $\pi_0$, offline kernel-based LSPI (KBLSPI) uses the approximate linear dependency based sparsification method to select a part of the data samples, which forms the dictionary $Dic$, i.e. $Dic = \{(s_i,a_i)\}_{i=1}^{|Dic|}$, with the corresponding kernel matrix $K_{Dic}$ of size $|Dic| \times |Dic|$ (Y. Engel and Meir, 2004). Kernel-based LSPI repeats the following two steps: 1) Approximate policy evaluation: kernel-based LSPI approximates the weight vector $\hat{w}^\pi$ for policy $\pi$ (Equation 3) from all available samples as follows:

$$\hat{A}_t = \hat{A}_{t-1} + k((s_t,a_t),j)\left[k((s_t,a_t),j) - \gamma\, k((s_{t+1},\pi(s_{t+1})),j)\right]^T$$
$$\hat{b}_t = \hat{b}_{t-1} + k((s_t,a_t),j)\, r_t, \quad j \in Dic,\ j = 1, \dots, |Dic| \qquad (7)$$

where $k(\cdot,\cdot)$ is a kernel function between two points, a state-action pair $(s,a)$ and $j$, where $j$ is the state-action pair $z_j$ that is an element of the dictionary $Dic$, i.e. $j \in \{z_1, z_2, \dots, z_{|Dic|}\}$. The matrix $\hat{A}$ should be initialized to a small multiple of the identity matrix so that its inverse can be calculated; alternatively, the pseudo-inverse can be used. After iterating over all the collected samples, $\hat{w}^\pi$ can be found and the approximated $Q^\pi$-values for policy $\pi$ are the following linear combination:

$$\hat{Q}^\pi(s,a) = \hat{w}^\pi\, k((s,a),j), \quad j \in Dic,\ j = 1, 2, \dots, |Dic| \qquad (8)$$

2) Approximate policy improvement: KBLSPI derives a new learned policy which is the greedy one, i.e. $\pi'(s) = \arg\max_{a \in A} \hat{Q}^\pi(s,a)$. The above two steps are repeated until there is no change in the improved policy or a maximum number of iterations is reached.

2.5 Approximate Linear Dependency

Given a set of data samples $D$ from an MDP, i.e. $D = \{z_1, \dots, z_L\}$, where $z_i$ is a state-action pair, and the corresponding set of linearly independent basis functions $\Phi$, i.e. $\Phi = \{\phi(z_1), \dots, \phi(z_L)\}$, the approximate linear dependency (ALD) method (Y. Engel and Meir, 2004) finds a subset $Dic \subset D$ whose elements $\{z_i\}_{i=1}^{|Dic|}$ have their corresponding basis functions stored in $\Phi_{Dic}$, i.e. $\Phi_{Dic} \subset \Phi$.

The data dictionary $Dic$ is initially empty, i.e. $Dic = \{\}$, and ALD is implemented by testing every basis function $\phi$ in $\Phi$, one at a time. If the basis function $\phi(z_t)$ cannot be approximated, within a predefined accuracy $v$, by a linear combination of the basis functions of the elements stored in $Dic_t$, then $\phi(z_t)$ is added to $\Phi_{Dic_t}$ and $z_t$ is added to $Dic_t$; otherwise neither $z_t$ nor $\phi(z_t)$ is added. As a result, after the ALD test, the basis functions of $\Phi_{Dic}$ can approximate all the basis functions of $\Phi$.


At time step $t$, let $Dic_t = \{z_j\}_{j=1}^{|Dic_t|}$ with the corresponding basis functions stored in $\Phi_{Dic_t} = \{\phi(z_j)\}_{j=1}^{|Dic_t|}$, and let $z_t$ be a given state-action pair at time $t$. The ALD test on the basis function $\phi(z_t)$ supposes that the basis functions are linearly dependent and uses the least squares error to approximate $\phi(z_t)$ by all the basis functions of the elements in $Dic_t$; for more detail we refer to (Engel and Meir, 2005). The least squares error is:

$$error = \min_{c} \left\| \sum_{j=1}^{|Dic_t|} c_j\, \phi(z_j) - \phi(z_t) \right\|^2 < v \qquad (9)$$

$$error = k(z_t,z_t) - k^T_{Dic_t}(z_t)\, c_t, \quad \text{where} \qquad (10)$$

$$c_t = K^{-1}_{Dic_t}\, k_{Dic_t}(z_t),$$
$$k^T_{Dic_t} = [k(1,z_t), \dots, k(j,z_t), \dots, k(|Dic_t|,z_t)]$$

If the error is larger than the predefined accuracy $v$, then $z_t$ is added to the dictionary, i.e. $Dic_{t+1} = Dic_t \cup \{z_t\}$; otherwise $Dic_{t+1} = Dic_t$. In the offline learning method, the matrix $K^{-1}_{Dic}$ can be computed after testing all the elements in the data sample set $D$. For online learning, the matrix $K^{-1}_{Dic}$ can be updated at each time step (Y. Engel and Meir, 2005). At each time step $t$, if the error that results from testing the basis functions of $z_t$ is smaller than $v$, then $Dic_{t+1} = Dic_t$ and $K^{-1}_{Dic_{t+1}} = K^{-1}_{Dic_t}$; otherwise $Dic_{t+1} = Dic_t \cup \{z_t\}$ and the matrix $K^{-1}_{Dic_{t+1}}$ is updated as follows:

$$K^{-1}_{Dic_{t+1}} = \frac{1}{error_t} \begin{bmatrix} error_t\, K^{-1}_{Dic_t} + c_t c_t^T & -c_t \\ -c_t^T & 1 \end{bmatrix} \qquad (11)$$
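A minimal Python sketch of the ALD test (Equation 10) combined with an incremental update of the inverse kernel matrix is given below. The class name `ALDDictionary` and the helper `gaussian_kernel` are hypothetical. The sketch uses the standard block-matrix inversion identity, in which the top-left block of the updated inverse carries the rank-one term $c_t c_t^T$ in addition to $error_t K^{-1}_{Dic_t}$, and it treats the first point as always admitted to the empty dictionary.

```python
import numpy as np

def gaussian_kernel(x, y, width=0.55):
    """Gaussian kernel between two points given as sequences of floats."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.exp(-(d @ d) / (2.0 * width ** 2)))

class ALDDictionary:
    """Sparse dictionary built with the approximate linear dependency test."""

    def __init__(self, kernel, v=1e-4):
        self.kernel, self.v = kernel, v
        self.points = []
        self.K_inv = np.zeros((0, 0))

    def kernel_vector(self, z):
        return np.array([self.kernel(p, z) for p in self.points])

    def observe(self, z):
        """Run the ALD test on z; return True if z joined the dictionary."""
        k_zz = self.kernel(z, z)
        if not self.points:                    # assumption: first point is kept
            self.points.append(z)
            self.K_inv = np.array([[1.0 / k_zz]])
            return True
        k = self.kernel_vector(z)
        c = self.K_inv @ k                     # least squares coefficients
        error = k_zz - k @ c                   # Equation 10
        if error <= self.v:
            return False                       # z is approximately dependent
        n = len(self.points)
        K_inv = np.empty((n + 1, n + 1))
        K_inv[:n, :n] = error * self.K_inv + np.outer(c, c)
        K_inv[:n, n] = -c
        K_inv[n, :n] = -c
        K_inv[n, n] = 1.0
        self.K_inv = K_inv / error             # block inverse update
        self.points.append(z)
        return True

dic = ALDDictionary(gaussian_kernel, v=1e-4)
added = [dic.observe([x]) for x in (0.0, 0.5, 0.5, 1.0)]
print(added, len(dic.points))  # the repeated point is rejected
```

Because the inverse is grown one row and column at a time, no full matrix inversion is needed when a point is added, which is what makes the test usable inside an online loop.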

3 ONLINE KERNEL-BASED LSPI

Online kernel-based LSPI (KBLSPI) is a kernelised version of online-LSPI and its pseudocode is given in Figure 2. The inputs are the basis function set $\Phi$, the initial learned policy $\pi_0$, the accuracy parameter $v$ and the initial state $s_1$. At each time step $t$, online-KBLSPI uses the KG exploration policy, i.e. the algorithm in Figure 1, to select the action $a_t$ in the state $s_t$ (step 4) and observes the new state $s_{t+1}$ and reward $r_t$. The action $a_{t+1}$ in $s_{t+1}$ is chosen by the learned policy $\pi_t$. The algorithm in Figure 2 performs the ALD test of Section 2.5 on the basis functions of $z_t$ and $z_{t+1}$ to provide feature selection (steps 7-14), where $z_t$ is the state-action pair $(s_t,a_t)$ at time step $t$ and $z_{t+1}$ is the state-action pair $(s_{t+1},a_{t+1})$ at time step $t+1$. If the basis functions of a given state-action pair, i.e. $z_t$ or $z_{t+1}$, cannot be approximated by the basis functions of the elements stored in the dictionary $Dic_t$, then the given state-action pair is added to the dictionary, the inverse kernel matrix $K^{-1}$ is updated, the matrix $\hat{A}$ gains a row and a column, and the vector $\hat{b}$ gains a dimension (step 11). Otherwise, the given state-action pair is not added to the dictionary (step 12). Then, online-KBLSPI updates the matrix $\hat{A}$ and the vector $\hat{b}$ (steps 15-16). After every $K_\theta$ samples obtained from the environment, online-KBLSPI estimates the weight vector $\hat{w}^{\pi_t}$ under the current policy $\pi_t$ (step 18) and approximates the corresponding state-action value function $\hat{Q}^{\pi_t}$ (step 19), i.e. approximate policy evaluation. Then, online-KBLSPI derives an improved new learned policy $\pi_{t+1}$ which is a greedy one (step 20), i.e. approximate policy improvement. This procedure is repeated until the end of $L$ steps, which is the horizon of an experiment.

4 EXPERIMENTS

In this section, we describe the test domain, the experimental setup and the experiments where we compare online-LSPI and online-KBLSPI using the KG policy. All experiments are implemented in MATLAB.

4.1 Test Domain/Experimental Setup

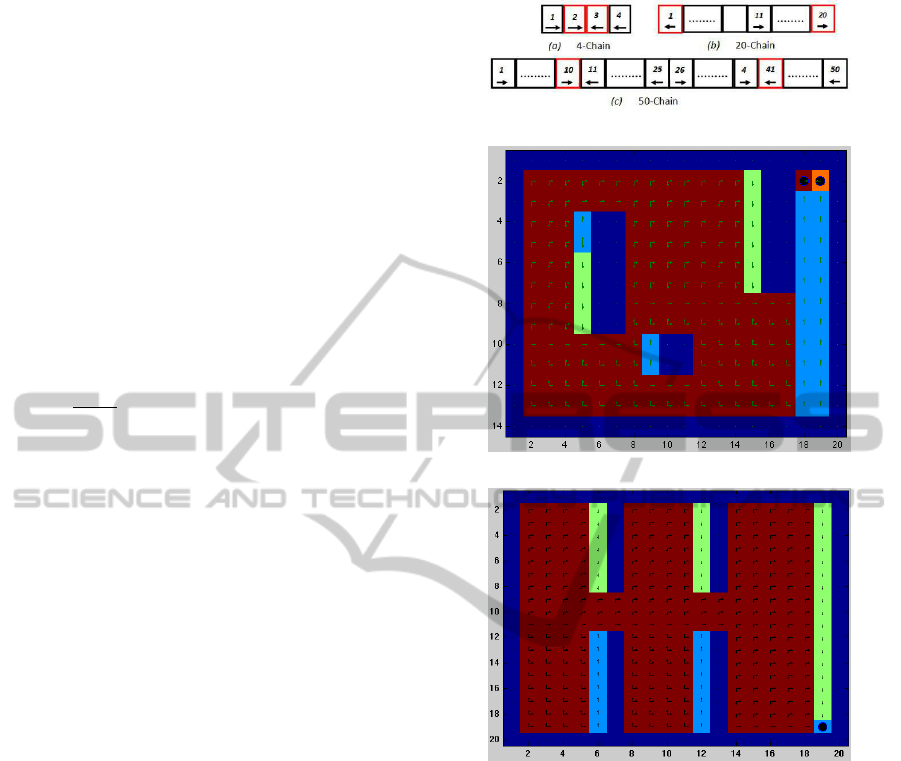

The test domain consists of 5 MDPs as shown in Fig-

ure 3, each with discount factor γ = 0.9. The first

three domains are the 4-, 20-, and 50- chain. The 4-,

and 20-domain are also used in (Lagoudakis and Parr,

2003; X. Xu and Lu, 2007) and the 50-chain is used

in (Lagoudakis and Parr, 2003). In general, the x-

open chain which is originally studied in (Koller and

Parr, 2000) consists of a sequence of x states, labeled

from s

1

to s

x

. In each state, the agent has 2 actions,

either GoRight (R) or GoLeft (L). The actions suc-

ceed with probability 0.9 changing the state in the in-

tended direction and fail with probability 0.1 chang-

ing the state in the opposite direction. The reward

structure can vary such as the agent gets reward for

visiting the middle states or the end states. For the

4-chain problem, the agent is rewarded 1 in the mid-

dle states, i.e. s

2

and s

3

, and 0 at the edge states, i.e.

s

1

and s

4

. The optimal policy is R in states s

1

and

s

2

and L in states s

3

and s

4

. (Koller and Parr, 2000)

used a policy iteration method to solve the 4-chain

and showed that the resulting suboptimal policies os-

cillate between R R R R and L L L L. The reason is

because of the limited approximation abilities of basis

functions in policy evaluation. For the 20-chain, the

agent is rewarded 1 in states s

1

and s

20

, and 0 else-

OnlineKnowledgeGradientExplorationinanUnknownEnvironment

9

1. Input:

|S|

;

|A|

;discount factor

γ

;accuracy

v

;

set of basis functions

Φ = {φ

1

,···,φ

n

}

;initial

learned policy

π

0

;length of trajectory

L

;

policy improvement interval

K

θ

;reward

r ∼ N(µ

a

,σ

2

a

)

; initial state

s

1

.

2. Intialize:

ˆ

A ←0

;

ˆ

b ←0

;

s

t

;

Dic

t

= { }

;

K

|SA|×|SA|

=< Φ

T

,Φ >

;

K

−1

Dic

t

= []

;

ˆ

Q

|SA|

← 0

3. For

t = 1, ···, L

4.

a

t

← KG

5.

s

t

, a

t

; observe:

s

t+1

;

r

t

;

a

t+1

← π

t

(s

t+1

)

6.

z

t

← (s

t

) ∗|A|+ a

t

,

z

t+1

← (s

t+1

) ∗|A|+ a

t+1

7. For

z

i

∈ {z

t

, z

t+1

}

8.

k

T

(.,z

i

) = [k(1,z

i

),···,k( j,z

i

),···,k(|Dic

t

|, z

i

)]

,

c(z

i

) = K

−1

Dic

t

∗ k(., z

i

)

9.

error(z

i

) = k(z

i

,z

i

) −k

T

(.,z

i

) ∗ c(z

i

)

10. If

error(z

i

) > v

11.

Dic

t+1

← Dic

t

∪ z

i

;

K

−1

Dic

t+1

←

1

error(z

i

)

error(z

i

)K

−1

Dic

t

−c(z

i

)

−c(z

i

)

T

1

;

ˆ

A

t

←

ˆ

A

t

0

0 0

;

ˆ

b

t

←

ˆ

b

t

0

12. Else

Dic

t+1

← Dic

t

;

K

−1

Dic

t+1

← K

−1

Dic

t

13. End if

14. End for

15.

ˆ

A

t+1

←

ˆ

A

t

+ k(.,z

t

)[k(., z

t

) −γ k(., z

t+1

)]

T

16.

ˆ

b

t+1

←

ˆ

b

t

+ k(.,z

t

)r

t

,

k(., z

t

) = [k(1,z

t

),···,k( j,z

t

),···,k(|Dic

t+1

|, z

t

)]

T

17. If

t = (l + 1)K

θ

then

18.

ˆw

π

t

l

←

ˆ

A

−1

t+1

ˆ

b

t+1

19. for

z = z

1

, z

2

, ···, z

|SA|

k(., z) = [k(1, z),···, k( j,z),···, k(|Dic

t+1

|, z)]

T

ˆ

Q

π

t

l

(z) = ˆw

π

t

,T

l

∗ k(., z)

end

20.

π

t+1

← argmax

a

ˆ

Q

π

t

l

(s,a)∀

s∈ S

;

π

t

← π

t+1

;

l ← l + 1

21. End if

22.

s

t

← s

t+1

23. End for

24. Output: At each time step

t

, note down:

the reward

r

t

and the learned policy

π

t

Figure 2: Algorithm: (Online-KBLSPI).

where. The optimal policy is L from states s

1

through

s

10

and R from states s

11

through s

20

. And, for the

50-chain, The agent gets reward 1 in states s

10

and

s

41

and 0 elsewhere. The optimal policy is R from

state s

1

through state s

10

and from state s

26

through

state s

40

, and L from state s

11

through state s

25

and

from state s

41

through state s

50

(Lagoudakis and Parr,

2003). The fourth and fifth MDPs, the grid

1

and grid

2

worlds, are used in (Sutton and Barto, 1998). The

agent has 4 actions Go Up, Down, Left and Right and

for each of them it transits to the intended state with

probability 0.7 and fails with probability 0.1 chang-

ing the state to the one of other directions. The agent

gets reward 1 if it reaches the goal state, −1 if it hits

(a) The chain domains

(b) The grid

1

domain

(c) The grid

2

domain

Figure 3: Subfigure (a) is the chain domains, in the red cells,

the agent gets rewards. Subfigure (b) is the grid

1

with 280

states and 188 accessible states. Subfigure (c) is the grid

2

with 400 states and 294 accessible states. The arrows show

the optimal actions in each state.

the wall, and 0 elsewhere.
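The chain dynamics described above can be simulated with a few lines of Python. This sketch is not taken from the paper: the function name `chain_step` is hypothetical, and it assumes that the reward is determined by the state the agent arrives in, that a move beyond either end of the chain leaves the agent where it is, and that rewards are optionally perturbed by Gaussian noise with standard deviation `reward_std`.

```python
import random

def chain_step(state, action, n_states, rewarded_states,
               p_success=0.9, reward_std=0.0, rng=random):
    """Simulate one step of an x-open chain with states 1..n_states.

    action is "L" or "R". Assumptions of this sketch: the reward depends
    on the arrival state and the chain ends act as walls.
    """
    intended = -1 if action == "L" else 1
    # the action succeeds with p_success, otherwise the move is reversed
    move = intended if rng.random() < p_success else -intended
    next_state = min(max(state + move, 1), n_states)
    mean = 1.0 if next_state in rewarded_states else 0.0
    reward = mean + reward_std * rng.gauss(0.0, 1.0) if reward_std > 0 else mean
    return next_state, reward

# 4-chain: reward 1 in the middle states s2 and s3
rng = random.Random(0)
state = 1
for _ in range(5):
    state, reward = chain_step(state, "R", 4, {2, 3}, rng=rng)
    print(state, reward)
```

The 20- and 50-chain follow from the same function by passing `n_states=20, rewarded_states={1, 20}` and `n_states=50, rewarded_states={10, 41}`.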

The experimental setup is as follows. For each of the 5 MDPs, we compared online-LSPI and online-KBLSPI using the knowledge gradient (KG) policy as an exploration policy. The number of experiments EXPs equals 1000 for the chain domains, 100 for the grid$_1$ domain and 50 for the grid$_2$ domain, each one with length $L$. The performance measures are: 1) the average frequency at each time step, i.e. at each time step $t$ of each experiment, we computed the probability that the learned policy (step 20 of the algorithm in Figure 2) has reached the optimal policy, and then averaged over the EXPs experiments to obtain the average frequency at each time step; 2) the average cumulative frequency at each time step, i.e. the cumulative average frequency at each time step $t$. (Mahadevan, 2008) used the 50-chain domain with trajectories of length $L = 5000$; therefore, we used the same horizon. For the other MDP domains we adapted the length of trajectories $L$ according to the number of states, i.e. $L$ increases with the number of states. For instance, $L$ is set to 18800 for the grid world.

The KG policy needs an estimated standard deviation and an estimated mean for each state-action pair. Therefore, we assume that the reward has a normal distribution. For example, for the 50-chain problem, the agent is rewarded 1 if it goes to state 10; therefore, we set the reward in $s_{10}$ to $N(\mu_1, \sigma_a^2)$, where $\mu_1 = 1$. The agent is rewarded 0 if it goes to $s_1$; therefore, we set the reward to $N(\mu_2, \sigma_a^2)$, where $\mu_2 = 0$. $\sigma_a$ is the standard deviation of the reward, which is fixed and equal for each action, i.e. $\sigma_a \in \{0.01, 0.1, 1\}$. Moreover, the KG exploration policy is a fully optimistic policy; therefore, we set the policy improvement interval $K_\theta$ to 1. For each run, the initial state $s_1$ was selected uniformly at random from the state space $S$. We used the pseudo-inverse when the matrix $\hat{A}$ is non-invertible (Mahadevan, 2008).

For online KBLSPI, we define a kernel function K on state-action pairs, i.e. $K : |SA| \times |SA| \to \mathbb{R}$. We decompose K into a state kernel $K_s$, i.e. $K_s : |S| \times |S| \to \mathbb{R}$, and an action kernel $K_a$, i.e. $K_a : |A| \times |A| \to \mathbb{R}$, as in (Y. Engel and Meir, 2005). Therefore, the kernel function K is $K = K_s \otimes K_a$, where $\otimes$ is the Kronecker product. K is a kernel matrix because $K_s$ and $K_a$ are kernel matrices; we refer to (Scholkopf and Smola, 2002) for more details. The state kernel $K_s$ is a Gaussian kernel, i.e. $k(s,s') = \exp\left(-\|s-s'\|^2/(2\sigma_{ks}^2)\right)$, where $\sigma_{ks}$ is the standard deviation of the state kernel function, s is the state at time t and $s'$ is the state at time t+1. Likewise, the action kernel is a Gaussian kernel, i.e. $k(a,a') = \exp\left(-\|a-a'\|^2/(2\sigma_{ka}^2)\right)$, where $\sigma_{ka}$ is the standard deviation of the action kernel function, a is the action at time t and $a'$ is the action at time t+1. s and $s'$, and a and $a'$, are normalized as in (X. Xu and Lu, 2007), e.g. for the 50-chain with number of states |S| = 50 and number of actions |A| = 2, $s,s' \in \{1/|S|, \cdots, 50/|S|\}$ and $a,a' \in \{0.5, 1\}$. $\sigma_{ks}$ and $\sigma_{ka}$ are tuned empirically and set to 0.55 for the chain domains and 2.25 for the grid world domains (grid$_1$ and grid$_2$). We set the accuracy v in the approximated kernel basis to 0.0001.
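As a minimal sketch of the product kernel described above, the following code evaluates $k((s,a),(s',a')) = k_s(s,s')\,k_a(a,a')$, which is the entry-wise form of the Kronecker product $K_s \otimes K_a$. The function names and the 1-based index convention are assumptions for illustration; the normalisation $i/|S|$ and $i/|A|$ follows the 50-chain example in the text.

```python
import math

def gaussian_kernel(x, y, sigma):
    """Gaussian kernel exp(-(x - y)^2 / (2 sigma^2)) for scalar inputs."""
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))

def normalize_index(i, n):
    """Map a 1-based index i in {1, ..., n} to i / n."""
    return i / n

def state_action_kernel(s, a, s2, a2, n_states, n_actions, sigma_ks, sigma_ka):
    """Product kernel k((s, a), (s2, a2)) = k_s(s, s2) * k_a(a, a2)."""
    ks = gaussian_kernel(normalize_index(s, n_states),
                         normalize_index(s2, n_states), sigma_ks)
    ka = gaussian_kernel(normalize_index(a, n_actions),
                         normalize_index(a2, n_actions), sigma_ka)
    return ks * ka

# 50-chain: |S| = 50, |A| = 2, both kernel widths set to 0.55.
value = state_action_kernel(10, 1, 11, 2, 50, 2, 0.55, 0.55)
```

With two actions, `normalize_index` yields the action values 0.5 and 1 given in the text.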

For online-LSPI, we used Gaussian basis func-

tions φ

s

= exp

−||s−c

i

||

2

/(2σ

2

Φ

)

where φ

s

is the basis

functions for state s with center nodes (c

i

)

n

i=1

which

are set with equal distance between each other, and

σ

Φ

is the standard deviation of the basis functions

which is set to 0.55. The number of basis functions

n equals 3 for 4-chain, 5 for 20-chain, and 10 for

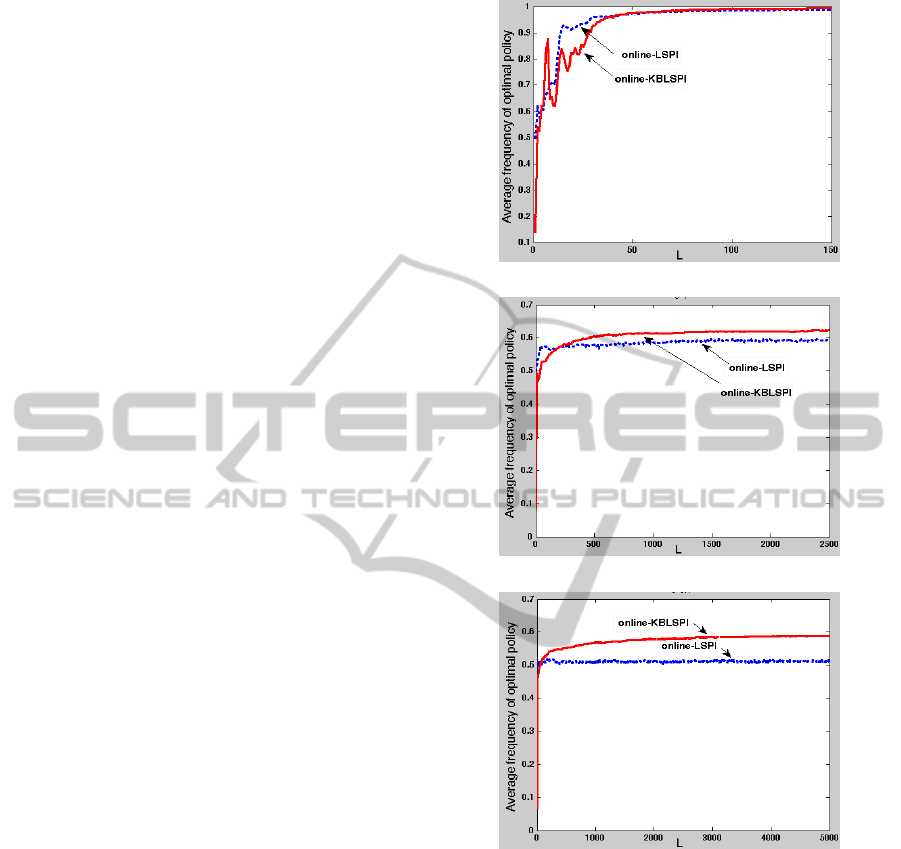

(a) Performance on 4-chain domain

(b) Performance on 20-chain domain

(c) Performance on 50-chain domain

Figure 4: Performance of the average frequency by the KG

policy in online-LSPI in blue and KG in online-KBLSPI in

red. Subfigure (a) shows the performance on the 4-chain

using standard deviation of reward σ

a

= 0.01. Subfigure

(b) shows the performance on the 20-chain using standard

deviation of reward σ

a

= 1. Subfigure (c) shows the perfor-

mance on the 50-chain using σ

a

= 0.1.

50-chain as (Lagoudakis and Parr, 2003) and 40 for

the grid

1

and grid

2

domains as (M. Sugiyama and Vi-

jayakumar, 2008).
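The Gaussian basis functions used for online-LSPI can be sketched as follows. The text only says that the centers are equally spaced; placing them over the normalised state range $[1/|S|, 1]$ with both endpoints included, and normalising the state as $i/|S|$, are assumptions made here for illustration, as are the function names.

```python
import math

def equally_spaced_centers(n, low, high):
    """Return n centers spread evenly over [low, high]."""
    if n == 1:
        return [(low + high) / 2.0]
    step = (high - low) / (n - 1)
    return [low + i * step for i in range(n)]

def gaussian_features(s, centers, sigma_phi):
    """Feature vector with entries exp(-(s - c_i)^2 / (2 sigma_phi^2))."""
    return [math.exp(-((s - c) ** 2) / (2.0 * sigma_phi ** 2)) for c in centers]

# 50-chain: n = 10 basis functions with standard deviation 0.55.
centers = equally_spaced_centers(10, 1 / 50, 1.0)
phi = gaussian_features(10 / 50, centers, 0.55)  # features of state 10
```

Each entry of `phi` lies in (0, 1] and is largest for the center closest to the state.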

4.2 Experimental Results

The experimental results on the chain domains, i.e.

4-, 20-, and 50-chain show that the online-KBLSPI

OnlineKnowledgeGradientExplorationinanUnknownEnvironment

11

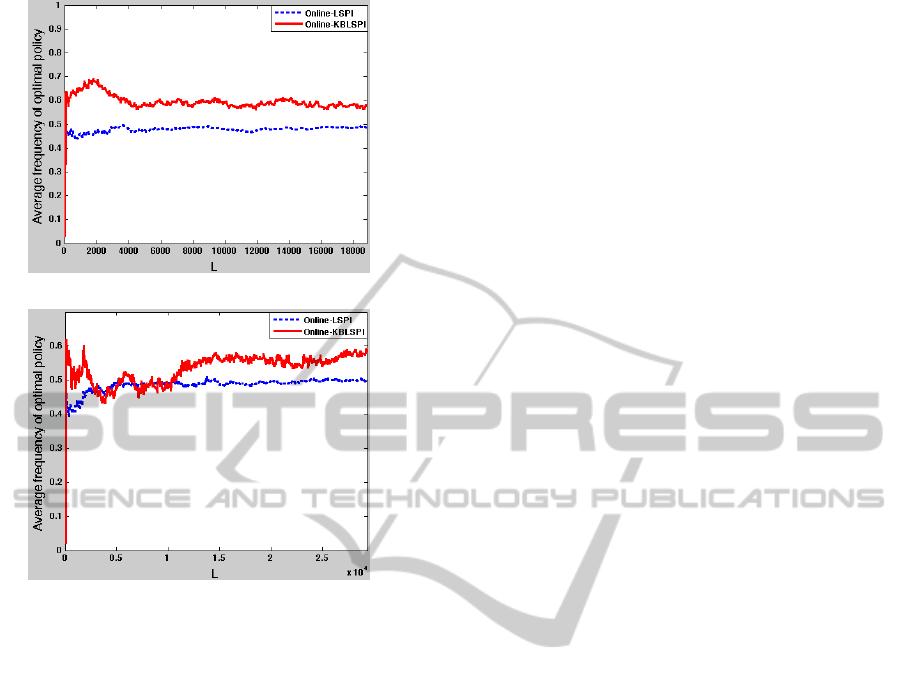

(a) Performance on grid

1

domain

(b) Performance on grid

2

domain

Figure 5: Performance of the average frequency by the KG

policy in online-LSPI in blue and KG in online-KBLSPI

in red. Subfigure (a) shows the performance on the grid

1

domain using standard deviation of reward σ

a

= 0.01. Sub-

figure (b) shows the performance on the grid

2

domain using

standard deviation of reward σ

a

= 1.

outperforms the online-LSPI according to the average

frequency and cumulative average frequency of op-

timal policy performances for all values of the stan-

dard deviation of reward σ

a

i.e. σ

a

= 0.01, 0.1 and 1.

Figure 4 shows how the performance of the learned

policy is increased by using online-KBLSPI on the 4-

chain, 20-chain and 50-chain.

The experimental results on the grid

1

domain

show that the online-KBLSPI outperforms the online-

LSPI according to the average frequency and cumu-

lative average frequency of optimal policy perfor-

mances for all values of the standard deviation of

reward σ

a

i.e. σ

a

= 0.01,0.1 and 1. And, the ex-

perimental results on the grid

2

domain show that the

online-KBLSPI performs better than the online-LSPI

for standard deviation of reward equals 1. Figure 5

shows how the performance of the learned policy is

increased by using online-KBLSPI on the grid

1

and

grid

2

domains.

The results show that online-KBLSPI usually converges faster than online-LSPI to (near-)optimal policies. However, online-LSPI performs better in the beginning, because it uses all of its basis functions from the start, while online-KBLSPI constructs its basis functions incrementally through the kernel sparsification method.
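The incremental construction of the basis can be illustrated with a minimal sketch of an approximate linear dependency (ALD) test in the style of the kernel recursive least-squares literature (Y. Engel and Meir, 2004). A sample joins the dictionary only if the residual $\delta = k(x,x) - k_D(x)^\top K_D^{-1} k_D(x)$ exceeds the accuracy v. This sketch is an assumption-laden illustration: it works on scalar samples rather than state-action pairs, rebuilds $K_D$ at every step instead of updating its inverse recursively, and all function names are hypothetical.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x

def ald_dictionary(samples, kernel, v):
    """Keep a sample only if its ALD residual against the dictionary exceeds v."""
    dictionary = []
    for x in samples:
        if not dictionary:
            dictionary.append(x)  # the first sample always enters
            continue
        K = [[kernel(d1, d2) for d2 in dictionary] for d1 in dictionary]
        k_vec = [kernel(d, x) for d in dictionary]
        coeffs = solve(K, k_vec)
        delta = kernel(x, x) - sum(k * c for k, c in zip(k_vec, coeffs))
        if delta > v:
            dictionary.append(x)
    return dictionary

def gaussian(x, y, sigma=0.55):
    """Gaussian kernel on scalars with the chain-domain width 0.55."""
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))

# Normalised 50-chain states i / 50 with accuracy v = 0.0001.
states = [i / 50 for i in range(1, 51)]
dictionary = ald_dictionary(states, gaussian, 1e-4)
```

Because the dictionary starts empty and grows only when a sample is not well approximated, the learner has fewer basis functions early on than a fixed-basis method, which is consistent with the slower start noted above.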

4.3 Statistical Methodology

We used a statistical hypothesis test, i.e. Student's t-test with significance level $\alpha_{st} = 0.05$, to compare the average frequency of optimal policy performance of online-LSPI and online-KBLSPI at each time step t. The null hypothesis $H_0$ is that the online-KBLSPI average frequency performance ($AF_{KBLSPI}$) is larger than the online-LSPI average frequency performance ($AF_{LSPI}$), and the alternative hypothesis $H_a$ is that $AF_{KBLSPI}$ is less than or equal to $AF_{LSPI}$. To quantify the confidence in the null hypothesis, we computed the confidence probability (p-value) at each time step t, which we interpret as the probability that the null hypothesis is correct. The confidence probability converges to 1 for all standard deviations of the reward, i.e. $\sigma_a = 0.01$, 0.1, and 1, and for all domains, i.e. the 4-, 20-, and 50-chain domains and the grid world domains. Figure 6 shows how the p-value converges to 1 for the 50-chain and the grid$_1$ domain with reward standard deviation $\sigma_a = 0.1$. The x-axis gives the time steps (the length of trajectories) and the y-axis gives the confidence probability, i.e. the p-value. Figure 6 shows that the confidence in the online kernel-based LSPI performance is very high: the p-value converges quickly to 1.
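A per-time-step comparison of this kind can be sketched as below. The text does not state which variant of the t-test was used or how the samples were formed, so this sketch assumes Welch's unequal-variance form and assumes that, at a fixed time step t, one performance value per run is available for each algorithm. The function name `welch_t` and the sample values are hypothetical.

```python
import math

def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and degrees of freedom for mean(a) - mean(b)."""
    na, nb = len(sample_a), len(sample_b)
    mean_a = sum(sample_a) / na
    mean_b = sum(sample_b) / nb
    # Unbiased sample variances.
    var_a = sum((x - mean_a) ** 2 for x in sample_a) / (na - 1)
    var_b = sum((x - mean_b) ** 2 for x in sample_b) / (nb - 1)
    se2 = var_a / na + var_b / nb  # squared standard error of the difference
    t = (mean_a - mean_b) / math.sqrt(se2)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = se2 ** 2 / ((var_a / na) ** 2 / (na - 1) + (var_b / nb) ** 2 / (nb - 1))
    return t, df

# Hypothetical per-run values at one time step t.
af_kblspi = [0.82, 0.85, 0.80, 0.88, 0.84]
af_lspi = [0.70, 0.75, 0.72, 0.69, 0.74]
t_stat, dof = welch_t(af_kblspi, af_lspi)
```

A positive `t_stat` favours online-KBLSPI. A probability would then follow from the cumulative distribution function of Student's t distribution with `dof` degrees of freedom, which is not part of the Python standard library and is therefore left out of this sketch.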

5 CONCLUSIONS AND FUTURE WORK

We presented the Markov decision process, which is a mathematical model for reinforcement learning. We introduced online and offline least squares policy iteration (LSPI), which find the optimal policy in an unknown environment. We presented the knowledge gradient (KG) policy to be used as an exploration policy in the online learning algorithm. We introduced offline kernel-based LSPI (KBLSPI). We also introduced the approximate linear dependency (ALD) method to select features automatically and to avoid tuning the center nodes empirically. We proposed online-KBLSPI, which uses the KG exploration policy and the ALD method. Finally, we compared online-KBLSPI and online-LSPI and concluded that the average frequency of optimal policy performance is improved by using online-KBLSPI. Future work must compare the performance of online-LSPI and online-KBLSPI using other types of basis functions, e.g. the hybrid shortest path basis functions (Yahyaa and Manderick, 2012), must compare the performance on continuous MDP domains, e.g. the inverted pendulum, and must provide a convergence analysis of online-KBLSPI.

(a) p-value of the 50-chain domain

(b) p-value of the grid domain

Figure 6: The confidence probability (p-value) that online-KBLSPI performs better than online-LSPI in terms of the average frequency of optimal policy performance. Subfigure (a) shows the p-value for the 50-chain with reward standard deviation $\sigma_a = 0.1$. Subfigure (b) shows the p-value for the grid domain with reward standard deviation $\sigma_a = 0.1$.

REFERENCES

Engel, Y. and Meir, R. (2005). Algorithms and representations for reinforcement learning. Technical report, Ph.D. thesis, Senate of the Hebrew.

I.O. Ryzhov, W. P. and Frazier, P. (2012). The knowledge-gradient policy for a general class of online learning problems. Operations Research, 60(1):180–195.

Koller, D. and Parr, R. (2000). Policy iteration for factored MDPs. In Proceedings of the 16th Annual Conference on Uncertainty in Artificial Intelligence (UAI).

L. Buşoniu, D. Ernst, B. D. S. and Babuška, R. (2010). Online least-squares policy iteration for reinforcement learning control. In Proceedings of the 2010 American Control Conference.

Lagoudakis, M. G. and Parr, R. (2003). Model-free least squares policy iteration. Technical report, Ph.D. thesis, Duke University.

M. Sugiyama, H. Hachiya, C. T. and Vijayakumar, S. (2008). Geodesic Gaussian kernels for value function approximation. Journal of Autonomous Robots, 25(3):287–304.

Mahadevan, S. (2008). Representation Discovery Using Harmonic Analysis. Morgan and Claypool Publishers.

Powell, W. (2007). Approximate Dynamic Programming: Solving the Curses of Dimensionality. John Wiley and Sons, New York, USA.

Puterman, M. L. (1994). Markov Decision Processes: Discrete Stochastic Dynamic Programming. John Wiley and Sons, Inc., New York, USA.

Scholkopf, B. and Smola, A. (2002). Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. MIT Press, Cambridge, MA, USA.

Sutton, R. and Barto, A. (1998). Reinforcement Learning: An Introduction (Adaptive Computation and Machine Learning). The MIT Press, Cambridge, MA, 1st edition.

Vapnik, V. (1998). The Grid: Statistical Learning Theory. Wiley Press, New York, United States of America.

X. Xu, D. H. and Lu, X. (2007). Kernel-based least squares policy iteration for reinforcement learning. Journal of IEEE Transactions on Neural Network, 18(4):973–992.

Y. Engel, S. M. and Meir, R. (2004). The kernel recursive least-squares algorithm. Journal of IEEE Transactions on Signal Processing, 52(8):2275–2285.

Y. Engel, S. M. and Meir, R. (2005). Reinforcement learning with Gaussian processes. In Proceedings of the 22nd International Conference on Machine Learning (ICML), New York, NY, USA. ACM.

Yahyaa, S. Q. and Manderick, B. (2012). Shortest path Gaussian kernels for state action graphs: An empirical study. In The 24th Benelux Conference on Artificial Intelligence (BNAIC), Maastricht, The Netherlands.

Yahyaa, S. Q. and Manderick, B. (2013). Knowledge gradient exploration in online least squares policy iteration. In The 5th International Conference on Agents and Artificial Intelligence (ICAART), Barcelona, Spain.
