On the Segmentation and Classification of Water in Videos

Pascal Mettes, Robby T. Tan and Remco Veltkamp

Department of Information and Computing Sciences, Utrecht University, Princetonplein 5, Utrecht, The Netherlands

Keywords:

Hybrid Water Descriptor, Mode Subtraction, Decision Forests, Markov Random Field, Novel Database.

Abstract:

The automatic recognition of water has a wide range of applications, yet little attention has been paid to solving this specific problem. Current literature generally treats the problem as part of more general recognition tasks, such as material recognition and dynamic texture recognition, without distinctively analyzing and characterizing the visual properties of water. The algorithm presented here introduces a hybrid descriptor based on the joint spatial and temporal local behaviour of water surfaces in videos. The temporal behaviour is quantified based on temporal brightness signals of local patches, while the spatial behaviour is characterized by Local Binary Pattern histograms. Based on the hybrid descriptor, the probability that a small region is water is calculated using a Decision Forest. Furthermore, binary Markov Random Fields are used to segment the image frames. Experimental results on a new and publicly available water database and a subset of the DynTex database show the effectiveness of the method for discriminating water from other dynamic and static surfaces and objects.

1 INTRODUCTION

Water recognition is a seemingly effortless task for humans, which is hardly surprising given the biological importance of water. While recent studies have indeed shown that humans are experts at such tasks (Sharan et al., 2013), there is little empirical knowledge on how water can be optimally recognized. Perhaps the most illustrative insight is provided in the work of Schwind, which indicates the importance of polarized light reflected from water surfaces (Schwind, 1991). Experiments performed on water insects such as bugs and beetles showed that they are attracted by the horizontally polarized light reflected from water surfaces. However, recognizing water from images and videos alone, which do not possess polarization information, still poses hardly any problem for human observers.

Therefore, the method provided here attempts to

do the same, namely, to recognize water based only on

visual appearance. The specific task of water identifi-

cation in videos has, to the best of our knowledge, not

been tackled on the scale presented in this work. Be-

sides attempting to gain empirical knowledge, there

is a wide range of applications that can benefit from

such an algorithm, including: (inter-planetary) explo-

ration, dynamic background removal, robotics, and

aerial video analysis.

Traditionally, automatic water recognition has been studied from two perspectives: as part of larger recognition tasks such as material recognition (Sharan et al., 2013; Hu et al., 2011; Varma and Zisserman, 2005) or dynamic texture recognition (Chan and Vasconcelos, 2008; Fazekas and Chetverikov, 2007; Saisan et al., 2001; Zhao and Pietikäinen, 2007), and in specialized and restricted environments, including autonomous driving systems (Rankin and Matthies, 2006) and maritime settings (Smith et al., 2003).

Most current works in material and texture recogni-

tion are based on the hypothesis that target classes can

be discriminated using distributions of local image

features, global motion statistics, or learned ARMA

models. Although interesting results have been re-

ported, the descriptors themselves are generic and

there is little analysis on which features work well

for a certain texture. Furthermore, the approaches are

usually global, which means that they are not directly

applicable to localization tasks. On the other hand,

water detection systems in autonomous driving sys-

tems and in maritime settings make explicit and non-

generalizable assumptions, such as horizon location,

camera height and orientation, and sky-water positioning, which makes these methods unsuitable for water detection in general, unconstrained scenes.

Given the limitations of existing methods, a novel

method is proposed in this paper. At the core of the

method is a hybrid descriptor based on local spatial

and temporal information. First, the input video is

283

Mettes P., T. Tan R. and Veltkamp R..

On the Segmentation and Classification of Water in Videos.

DOI: 10.5220/0004680202830292

In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 283-292

ISBN: 978-989-758-003-1

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

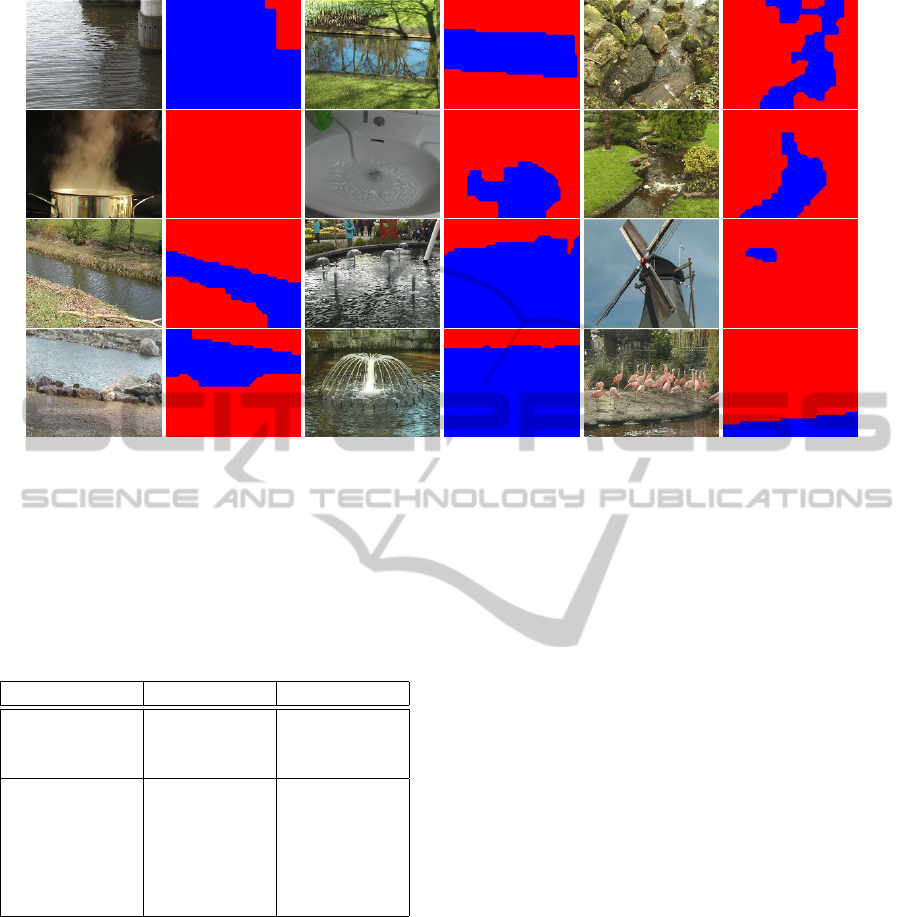

(a) Canal. (b) Fountain. (c) Lake. (d) Ocean. (e) Stream. (f) Pond.

(g) River. (h) Non-water.

Figure 1: Exemplary frames of categories in the water database.

pre-processed to remove the background reflections

and water colour, leaving only the residual image se-

quence, which conveys the motion of water. Given the

residual images, local descriptors are extracted and

classified using a Decision Forest (Bochkanov, 2013;

Criminisi et al., 2012). The trained Forest can then be

utilized to perform local classification for a collection

of test sequences, where the probabilities are provided

to a binary Markov Random Field to generate a seg-

mentation for the frames in the test sequences based

on the presence or absence of water.

Since this work is specifically aimed at water de-

tection and given the supervised nature of the algo-

rithm, a second contribution of this work is the intro-

duction of a new database. The water database, fur-

ther elaborated at the end of this section, consists of

a set of complex natural scenes with a wide variety

of water surfaces. Experimental evaluation on this

database and on the DynTex database (Péteri et al.,

2010) shows the effectiveness of the proposed method

for both video classification and spatio-temporal seg-

mentation.

The paper is organized as follows. This Section is

concluded with an elaboration of the water database,

while Section 2 discusses the works related to com-

putational water recognition. Section 3 provides an

analysis of the temporal and spatial behaviour of wa-

ter, the descriptors derived from that analysis, and the

process of local classification. This is followed by

the global segmentation step in Section 4. Section 5

shows the experimental evaluation on the databases

and the paper is concluded in Section 6.

1.1 Water Database

As stated above, experimental evaluation in this work is performed on a novel database in order to tackle the challenging problem of water detection¹. Although databases used in dynamic texture recognition do contain water videos (Péteri et al., 2010), they do not contain water videos in the quantity and variety desired for water recognition and localization. The novel water database contains a set of positive and negative videos (i.e. water and non-water videos) from a wide variety of natural scenes. In total, the database consists of 260 videos, where each video contains between 750 and 1500 frames, all with a frame size of 800×600. The positive class consists of 160 videos of predominantly 7 scene types: oceans, fountains, ponds, rivers, streams, canals, and lakes. The negative class, on the other hand, can be represented by any other scene. For the database, categories with seemingly similar spatial and temporal characteristics are chosen, including trees, fire, flags, clouds/steam, and vegetation. Examples of the categories of the database are shown in Fig. 1. Since this work focuses on characterizing the behaviour of water, camera motion is considered a separate problem and is therefore not included in the database.

¹ For details and downloads, visit the author's webpage.

2 RELATED WORK

Early work on material classification attempted

to discriminate materials photographed in laboratory

settings, e.g. based on distributions of Gabor filter re-

sponses (Varma and Zisserman, 2005) or image patch

exemplars (Varma and Zisserman, 2009). More re-

cently, the classification problem has shifted to the

real-world domain with the Flickr Materials Database

(Sharan et al., 2009), which includes water as one of

the target classes. Current approaches attempt to dis-

criminate materials based on concatenations of local

image feature distributions (Hu et al., 2011; Sharan

et al., 2013). Although it is possible to apply these ap-

proaches to the more specific problem of water recog-

nition, they are severely restricted in multiple aspects.

First, the algorithms are concerned with classification

solely from images, so any form of temporal informa-

tion is neglected. Second, and more importantly, the

VISAPP2014-InternationalConferenceonComputerVisionTheoryandApplications

284

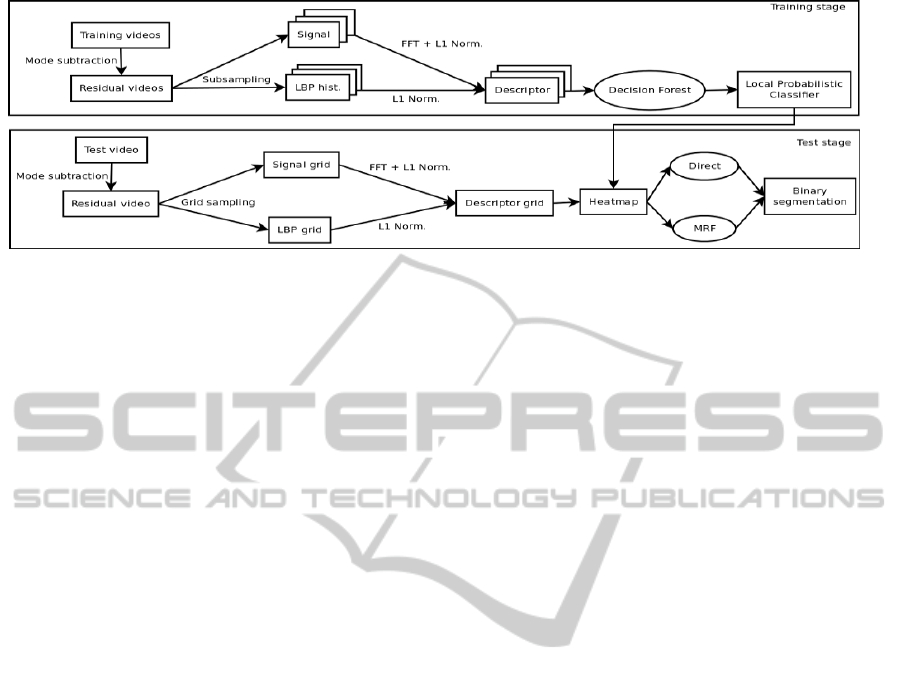

Figure 2: An overview of the water segmentation method, where the test stage is done for all frames of the test videos.

algorithms provide a black-box process, where it is

unknown how or why certain materials (such as wa-

ter) can be discriminated using the recommended im-

age features (e.g. SIFT, Jet, Colour, etc.).

A field more directly related to the problem tack-

led in this work is dynamic texture recognition, which

is roughly dominated by two approaches. The first

approach is the discrimination of dynamic textures

based on two-frame motion estimation. Generally, well-known conventional optical flow methods are used for dense motion estimation, after which classification is performed based on global motion statistics. Global statistics include the curl and divergence of the flow field, and the probability of having a characteristic flow direction and magnitude (Fazekas and Chetverikov, 2007; Péteri and Chetverikov, 2005). Although high recognition rates have been reported for such methods, the use of conventional optical flow is problematic for water in natural scenes. In general, water does not meet the assumptions underlying optical flow estimation, such as brightness constancy (Beauchemin and Barron, 1995). Another pressing problem is that flow-based methods are focused on classification, not segmentation.

A second dominant approach is the global modeling of videos using Linear Dynamical Systems (LDS) (Chan and Vasconcelos, 2008; Doretto et al., 2003; Mumtaz et al., 2013; Saisan et al., 2001). In essence, an LDS is a latent variable model which projects video frames to a lower-dimensional space and tracks the temporal behaviour in that space. The use of LDS in dynamic texture recognition was first popularized by the work of Saisan et al. (2001), mostly due to its relatively efficient parameter learning procedure and encouraging classification results. Although the use of LDS is intuitively appealing, the original formulation is limited, since it cannot handle multiple objects or textures in a single video. Furthermore, little investigation has been done as to which textural elements can be captured with LDS. Multiple extensions have been made to handle the presence of multiple textures, but in current literature, LDS is used either as a segmentation or as a classification method, not for the joint segmentation-classification problem (i.e. dividing the pixels into different coherent parts and classifying each part, as is done in this work).

3 LOCAL WATER DETECTION

The primary focus of this work is the generation of

local descriptors based on the analysis of the spatio-

temporal behaviour of water. Here, both a temporal

and spatial descriptor are presented which are distinc-

tive enough for direct identification of water surfaces

on a local scale. A generalized overview of the al-

gorithm is shown in Fig. 2. First, the videos are

pre-processed to increase invariance to water colours

and reflections. After that the temporal and spatial de-

scriptors are extracted and used as feature vectors for

a Decision Forest. Given a test video, the local de-

scriptors are extracted and classified using the trained

Forest. The probability outputs are then used as in-

put for a regularization step using spatio-temporal

Markov Random Fields. In this section, the analysis and descriptor generation are provided, as well as the probabilistic classification.

3.1 Creating Residuals

A major aspect of water surfaces is the inherent

variability they possess, due to water colour, rip-

ples, background reflections, weather conditions, etc.

Rather than trying to exploit dominant features due to

water colour or background reflections, the focus of

this work is to generate features which are invariant to these aspects, and thereby capture the general nature of water surfaces. This is done

by first obtaining the water colour as well as back-

ground reflections, and then removing them, leaving

only residual images.

OntheSegmentationandClassificationofWaterinVideos


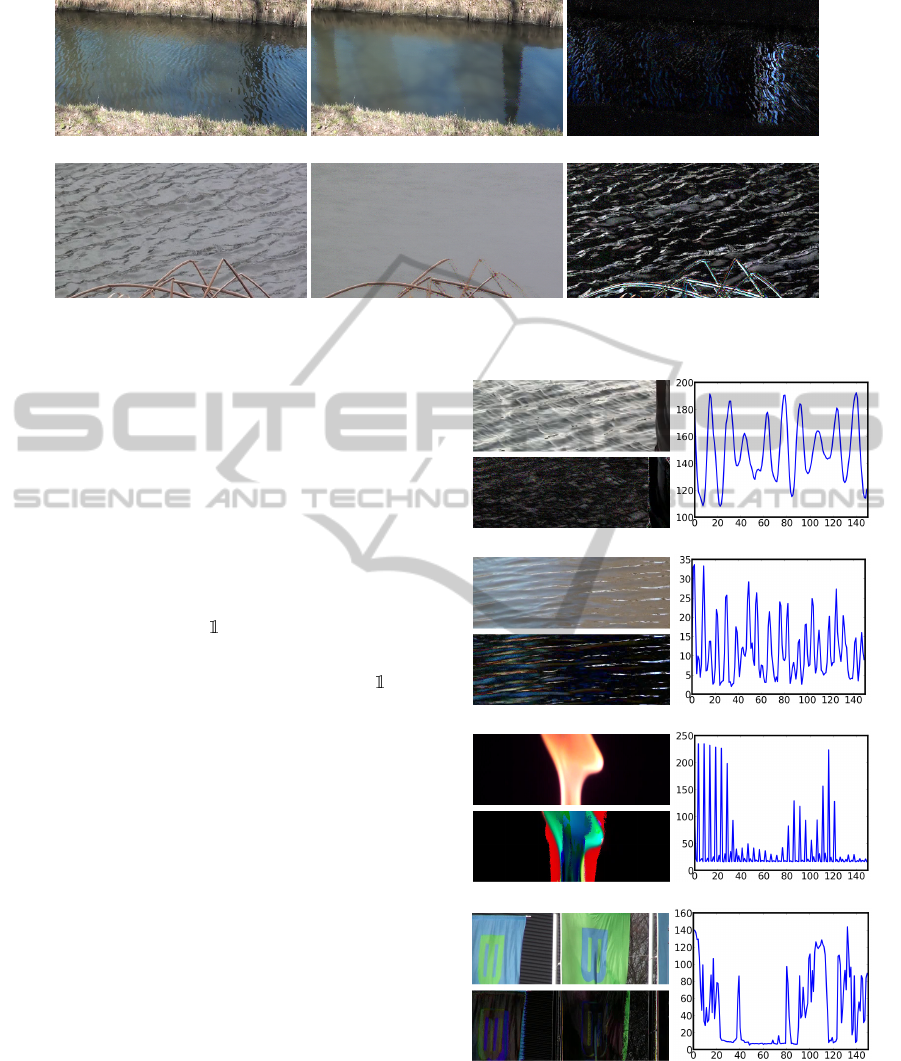

(a)

(b)

Figure 3: A typical frame, temporal mode, and residual for 2 videos.

The aim of the residual frames is to highlight wa-

ter ripples, instead of reflections. This is realized by

performing temporal mode subtraction for each pixel:

$$R_t(x,y)[c] = \left| I_t(x,y)[c] - M(x,y)[c] \right|, \qquad (1)$$

for each c ∈ {R,G,B} separately, where M(x,y) de-

notes the temporal mode of pixel (x,y). The temporal

mode of a single pixel for a single colour channel can

be computed as follows:

$$M(x,y)[c] = \arg\max_{p} \sum_{t=1}^{T} \{ I_t(x,y)[c] = p \}, \qquad (2)$$

where T denotes the total number of frames and {·}

is the indicator function. A typical frame, temporal

mode, and corresponding residual frame of 2 videos

are shown in Fig. 3. Note that this simple procedure

does not remove all reflections, but it can successfully

find the most dominant elements of reflection, such

that the residual frames highlight water ripples.
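A minimal Python sketch of Eqs. 1 and 2 follows, assuming each video is given as nested lists `frames[t][y][x]` holding a single colour channel (for RGB input the functions would be applied to each channel separately). The function names are hypothetical, and ties in the mode are broken by first occurrence, which Eq. 2 leaves unspecified.

```python
from collections import Counter


def temporal_mode(frames):
    """Per-pixel temporal mode M(x, y) of one colour channel (Eq. 2).

    frames[t][y][x] holds the channel value of pixel (x, y) in frame t.
    Ties are broken by first occurrence (an assumption; Eq. 2 leaves ties open).
    """
    height, width = len(frames[0]), len(frames[0][0])
    return [[Counter(frame[y][x] for frame in frames).most_common(1)[0][0]
             for x in range(width)]
            for y in range(height)]


def residual_frames(frames):
    """Residuals R_t(x, y) = |I_t(x, y) - M(x, y)| for one channel (Eq. 1)."""
    mode = temporal_mode(frames)
    return [[[abs(value - mode[y][x]) for x, value in enumerate(row)]
             for y, row in enumerate(frame)]
            for frame in frames]


# Toy single-channel video: 3 frames of 1 x 2 pixels.
video = [[[5, 1]], [[5, 2]], [[7, 2]]]
print(temporal_mode(video))    # [[5, 2]]
print(residual_frames(video))  # [[[0, 1]], [[0, 0]], [[2, 0]]]
```

Static content (here the value 5 in the first pixel) maps to zero residual, so only the deviations caused by moving ripples remain.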

3.2 Local Temporal Descriptor

The idea behind the temporal water descriptor is that water ripples reveal the motion characteristics of water. These motion characteristics are hypothesized to comprise several aspects. Most notably, it is hypothesized here that this type of motion is gradual and repetitive, since ripples recur at the same location over time. Other dynamic and static processes might partially share the local temporal properties of water, but not statistically to the same extent.

Based on these hypotheses, the next step is to create a descriptor which evaluates a local spatio-temporal patch against them. To achieve this, an m-dimensional signal is first introduced, which contains the mean brightness value of an n×n patch at exactly the same location for m consecutive frames. The result is a list of brightness values which can be seen as a 1-dimensional signal. Fig. 4 shows multiple examples of water and non-water scenes, along with the mean brightness signal of a selected location. In the Figure, the hypothesized regularity and repetition are already clearly visible.

(a) (b) (c) (d)

Figure 4: Four exemplary frames and sample signals.

(a) Original signals. (b) FT of signals. (c) Norm. FT of signals. (d) LBP. (e) Hybrid.

Figure 5: Isomap projections of sample locations of trees (blue) and water (red). The Figure shows how using normalized Fourier Transforms improves separation using temporal information (a,b,c). More interestingly, it clearly shows that fusing temporal and spatial information creates a further boost in separation (c,d,e).

Given the m-dimensional signals, an immediate thought is to use them directly as the descriptor. The direct use of the signal itself as the descriptor is, however, inappropriate due to a lack of invariance: signals with similar levels of smoothness and regularity can have a large distance when compared directly. A descriptor based on the m-dimensional signal should in effect be invariant to temporal shifts, brightness shifts, and brightness amplitudes. Rather than creating a distance measure which explicitly enforces these types of invariance by means of normalization and distance recalculation for all possible temporal and brightness shifts, a descriptor is created here by extracting the signal characteristics using the 1-dimensional Fourier Transform. For an m-dimensional signal S, the corresponding Fourier Transform F is also m-dimensional, where each component i ∈ {1, ..., m} is computed as:

$$F_i = \left| \sum_{j=1}^{m} S_j \, e^{-2\pi i j \sqrt{-1}/m} \right|. \qquad (3)$$

In other words, an m-dimensional descriptor $[F_1, \ldots, F_m]$ is computed from the signal. Although this descriptor is invariant to the temporal and brightness shifts, it is not invariant to brightness amplitudes.

Therefore, the final temporal descriptor is generated

by performing L1-normalization on the components,

such that two signals are compared by the distribution

of the Fourier Transform, i.e.:

$$F_i = \frac{\left| \sum_{j=1}^{m} S_j \, e^{-2\pi i j \sqrt{-1}/m} \right|}{\sum_{k=1}^{m} \left| \sum_{l=1}^{m} S_l \, e^{-2\pi k l \sqrt{-1}/m} \right|}. \qquad (4)$$

A practical justification of using the L1-

normalized Fourier Transform as the temporal

descriptor is shown in Fig. 5. The Figure displays

the Isomap projection (Tenenbaum et al., 2000)

of samples taken from water and tree videos. Ideally, a local descriptor makes the classes linearly separable in the original feature space. For visualization purposes, the projected feature space is shown here, but the full feature space is used in the classification. As can be seen in Fig. 5(a) and Fig. 5(b), the original signals and their Fourier Transforms are poorly suited for classification, while a separation is clearly visible in Fig. 5(c), although there is an area of overlap.

The choice of signal length m is a trade-off. Ideally for recognition, m is equal to the total number of frames in the video, since the longer the signal, the less likely it is that non-water surfaces mimic the temporal behaviour of water. On the other hand, this choice makes it impossible to detect temporal discontinuities. Since the focus lies primarily on discrimination, while obvious outliers should still be detectable, m is set to 200 frames here.


3.3 Local Spatial Descriptor

The above defined descriptor captures the temporal

behaviour of water, but ignores the local spatial in-

formation, most notably the spatial layout of water

waves and ripples. Given that water waves, ripples,

and fountains are highly deformable, a descriptor is

desired which provides spatial information on a lo-

cal patch without requiring an explicit model of water

waves. To meet this requirement, the local spatial characteristics of water surfaces are extracted using Local Binary Pattern histograms (Zhao and Pietikäinen, 2007).

For a single pixel, the Local Binary Pattern is com-

puted by comparing the grayscale values of the pixel

to a set of local spatial neighbours. In this work, the 8

direct neighbours of a pixel are used for comparison.

As such, the Local Binary Pattern value of a single

pixel is computed as:

$$LBP_{8,1}(g_c) = \sum_{p=0}^{7} H(g_{c_p} - g_c) \, 2^p, \qquad (5)$$

where $g_c$ denotes the center pixel for which the LBP value is computed, $\{g_{c_i}\}_{i=0}^{7}$ denotes the set of direct neighbours of $g_c$, and $H(\cdot)$ is the well-known discrete Heaviside step function, defined as:

$$H(x) = \begin{cases} 1 & x \geq 0 \\ 0 & x < 0. \end{cases} \qquad (6)$$

Since 8 neighbours are used in the comparison, the corresponding LBP value can take $2^8 = 256$ values.

In order to compute an LBP histogram of a local patch,

the LBP values of the pixels in the patch are computed

and placed in their corresponding integer bins of the

256-dimensional histogram. The resulting histogram

is normalized afterwards.
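A sketch of the LBP_{8,1} code of Eqs. 5 and 6 and the normalized 256-bin histogram, in plain Python. The neighbour ordering p = 0..7 and the restriction to interior pixels of the patch (border pixels lack a full 8-neighbourhood) are assumptions, since the text does not specify them; the function names are hypothetical.

```python
# Neighbour offsets (dx, dy) for p = 0..7, clockwise from the top-left (assumed order;
# any fixed order carries the same information up to a permutation of the bins).
NEIGHBOURS = [(-1, -1), (0, -1), (1, -1), (1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0)]


def lbp_code(image, x, y):
    """LBP_{8,1} of pixel (x, y): bit p is H(g_{c_p} - g_c), Eqs. 5 and 6."""
    centre = image[y][x]
    return sum((1 if image[y + dy][x + dx] - centre >= 0 else 0) << p
               for p, (dx, dy) in enumerate(NEIGHBOURS))


def lbp_histogram(patch):
    """Normalized 256-bin LBP histogram over the interior pixels of a grayscale patch."""
    hist = [0] * 256
    for y in range(1, len(patch) - 1):
        for x in range(1, len(patch[0]) - 1):
            hist[lbp_code(patch, x, y)] += 1
    total = sum(hist)
    return [c / total for c in hist] if total else [0.0] * 256


flat = [[7] * 4 for _ in range(4)]
print(lbp_histogram(flat)[255])  # 1.0: every neighbour equals the centre and H(0) = 1
```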

As stated above, a primary justification for the use of Local Binary Pattern histograms as a spatial descriptor is the pseudo-orderless nature of the descriptor, which means that water waves do not need to be modeled explicitly. Furthermore, the high dimensionality of the histograms provides desirable discrimination abilities. Similar to the temporal descriptor, the practical validity of the LBP histograms can be shown by examining the projected feature space. The early fusion (Snoek et al., 2005) of the temporal and spatial descriptors into a hybrid descriptor results in a feature space whose projection is nearly linearly separable for the randomly selected local patches, as can be seen in Fig. 5(e). The importance of a pseudo-orderless spatial descriptor came to light after investigations into explicit water modeling turned out to be impractical. This conclusion is consistent with the literature on dynamic texture recognition. For example, in the related work of Zhao and Pietikäinen, multiple extensions of LBP have been proposed, such as VLBP (Zhao and Pietikäinen, 2006) and LBP-TOP (Zhao and Pietikäinen, 2007). VLBP is, however, impractical for local direct identification, since each histogram would have a length of $2^{14}$ or even $2^{26}$, due to the fact that both spatial and temporal neighbours need to be compared against the central pixel. Similar statements can be made regarding LBP-TOP. Therefore, the original, purely spatial LBP descriptor is used here.

3.4 Probabilistic Classification

Now that the behaviour of water on a local temporal and spatial level has been defined, the next step is to exploit the descriptors for probabilistic classification. Rather than computing distributions of descriptors, as is usual in global classification tasks, the descriptors are used directly as feature vectors for probabilistic classification using Decision Forests. In the training stage of the algorithm, the descriptors are extracted from the training videos and used as feature vectors for the Decision Forest. Since the number of patches per frame can be large, selecting patches from a uniform grid for each frame of each training video is undesirable, given the amount of time required for training. For that reason, a random sampling approach is employed by selecting a small number of patches per frame per training video. In this way, roughly 2500 local patches are selected per training video. The 456-dimensional feature vectors for the patches of all training videos are then fed to the Decision Forest for probabilistic classification. The primary parameters of the forest, namely the number of trees and the randomness factor, can be set using cross-validation.
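The training-stage sampling and the early fusion into a hybrid descriptor can be sketched as below. This is a hedged illustration: it draws positions uniformly at random over the whole video rather than a fixed number per frame, the patch size n = 16 is a hypothetical value (the text does not state n), and the video dimensions are taken from the database description. Concatenating an m = 200 temporal descriptor with a 256-bin LBP histogram gives 200 + 256 = 456 dimensions, which matches the feature length stated above. The Decision Forest itself is omitted.

```python
import random


def sample_training_patches(num_frames, height, width, n, m, count=2500, seed=0):
    """Draw `count` random (t0, x, y) patch positions from one training video.

    Each position leaves room for an n x n patch inside the frame and for an
    m-frame brightness signal starting at frame t0.
    """
    rng = random.Random(seed)
    return [(rng.randrange(num_frames - m + 1),
             rng.randrange(width - n + 1),
             rng.randrange(height - n + 1))
            for _ in range(count)]


def hybrid_descriptor(temporal, spatial):
    """Early fusion: concatenate the temporal (m-dim) and LBP (256-dim) descriptors."""
    return list(temporal) + list(spatial)


# 750-frame, 800x600 video; n = 16 is a hypothetical patch size, m = 200 as in the text.
positions = sample_training_patches(num_frames=750, height=600, width=800, n=16, m=200)
feature = hybrid_descriptor([0.0] * 200, [0.0] * 256)
print(len(positions), len(feature))  # 2500 456
```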

In the testing stage, descriptors need to be ex-

tracted from all parts of the frames of the test videos.

Therefore patches are extracted from a dense rectan-

gular grid. The descriptors yielded from the grid are

individually given to the trained forest, yielding a ma-

trix of probability outputs, which can be seen as a

heatmap. A major advantage of direct local classification is that each local part of the video is classified separately. However, since this approach yields a great number of separate classifications, multiple misclassifications can be expected within and between the frames of a test video. For that reason, the last step of this algorithm is the use of Markov Random Fields for spatio-temporal regularization.
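For the testing stage, the dense-grid evaluation that produces one heatmap per frame can be sketched as follows. `classify(x, y)` is a hypothetical stand-in for extracting the hybrid descriptor at a grid position and querying the trained forest for the water probability; the patch size n and grid stride are illustrative values, since the text does not specify them.

```python
def probability_heatmap(height, width, n, stride, classify):
    """Evaluate classify(x, y) -> P(water) for every n x n patch on a regular grid.

    Row r / column c of the result corresponds to the patch whose top-left
    corner is (c * stride, r * stride).
    """
    return [[classify(x, y) for x in range(0, width - n + 1, stride)]
            for y in range(0, height - n + 1, stride)]


# Stand-in classifier: the lower half of an 800x600 frame is "water".
heat = probability_heatmap(600, 800, n=16, stride=8,
                           classify=lambda x, y: 1.0 if y > 300 else 0.0)
print(len(heat), len(heat[0]))  # 74 99
```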


4 HEATMAP REGULARIZATION

The additional information on the probabilistic (un)certainty of classified local patches, instead of direct decisions, opens up the possibility of discrete optimization on the heatmaps. In this work, the discrete optimization takes the form of a Markov Random Field (Boykov and Kolmogorov, 2004), which serves as a regularization step. More formally, the optimization problem of the MRF can be stated as a minimization problem with the following objective function:

$$f(x) = \sum_{p \in V} V_p(x_p) + \lambda \sum_{(p,q) \in C} V_{pq}(x_p, x_q), \qquad (7)$$

with V the elements of the heatmap, and C the set of

all cliques. The first term of the objective function -

the data term - is then defined as:

$$V_p(x_p) = \begin{cases} 1 - M_p & \text{if } x_p \text{ is water} \\ M_p & \text{otherwise} \end{cases} \qquad (8)$$

where $M_p$ denotes the probability of node (i.e. heatmap pixel) p being water. The second term, the prior term, is defined such that different labels within cliques are penalized:

$$V_{pq}(x_p, x_q) = \left| x_p - x_q \right|, \qquad (9)$$

given that the label water is defined as 1 and the label

non-water is defined as 0.

An interesting element within the MRF formulation is the choice of cliques. Rather than only enforcing similarity between neighbouring pixels in a single heatmap, a form of temporal regularization is also desired, since the water location is not expected to change sharply over time. Therefore, a spatio-temporal Markov Random Field formulation is adopted here, where each element of the heatmap is connected to both its 4 spatial neighbours and its 2 temporal neighbours.

An important element in the minimization proce-

dure is the relative weight of the probabilities (data

term) and spatial consistency (prior term), denoted by

λ in Eq. 7. For a low value of λ, the individual probabilities are deemed important, resulting in a segmentation with a lot of detail, but also with outliers. On the other hand, a high value of λ results in a segmentation with few outliers, at the cost of a loss of detail at borders between water and non-water regions.

The influence of the λ term is evaluated in Section 5.
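The spatio-temporal MRF of Eqs. 7-9 can be sketched in plain Python as below. Because the prior |x_p - x_q| is submodular for binary labels, the objective can be minimized exactly with an s-t minimum cut. The paper cites the Boykov-Kolmogorov algorithm; this sketch swaps in a simple Edmonds-Karp max-flow instead, which is exact but only practical for small heatmaps. The heatmap layout `prob[t][y][x]` and all function names are assumptions.

```python
from collections import deque
from itertools import product

# Forward offsets (dt, dy, dx): visiting only these covers every pair in the
# 4-spatial + 2-temporal neighbourhood exactly once.
FORWARD = [(0, 0, 1), (0, 1, 0), (1, 0, 0)]


def mrf_energy(prob, labels, lam):
    """Objective f(x) of Eq. 7 with the data term of Eq. 8 and the prior of Eq. 9."""
    T, H, W = len(prob), len(prob[0]), len(prob[0][0])
    energy = 0.0
    for t, y, x in product(range(T), range(H), range(W)):
        m, label = prob[t][y][x], labels[t][y][x]
        energy += (1.0 - m) if label == 1 else m
        for dt, dy, dx in FORWARD:
            if t + dt < T and y + dy < H and x + dx < W:
                energy += lam * abs(label - labels[t + dt][y + dy][x + dx])
    return energy


def segment(prob, lam):
    """Exact minimizer of Eq. 7 via s-t min cut (Edmonds-Karp); label 1 = water."""
    T, H, W = len(prob), len(prob[0]), len(prob[0][0])
    index = {node: i for i, node in enumerate(product(range(T), range(H), range(W)))}
    source, sink = len(index), len(index) + 1
    cap = [dict() for _ in range(len(index) + 2)]

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0.0) + c
        cap[v].setdefault(u, 0.0)  # residual reverse edge

    for (t, y, x), i in index.items():
        m = prob[t][y][x]
        add_edge(source, i, m)      # cut iff node ends on the sink side (label 0): cost M_p
        add_edge(i, sink, 1.0 - m)  # cut iff node stays on the source side (label 1): cost 1 - M_p
        for dt, dy, dx in FORWARD:
            j = index.get((t + dt, y + dy, x + dx))
            if j is not None:
                add_edge(i, j, lam)
                add_edge(j, i, lam)

    while True:  # augment along shortest residual paths until none is left
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= flow
            cap[v][u] += flow

    # Nodes still reachable from the source form the water side of the minimum cut.
    labels = [[[0] * W for _ in range(H)] for _ in range(T)]
    for (t, y, x), i in index.items():
        labels[t][y][x] = 1 if i in parent else 0
    return labels


heatmaps = [[[0.9, 0.8], [0.7, 0.2]]]   # one 2x2 heatmap of water probabilities
print(segment(heatmaps, lam=0.0))  # [[[1, 1], [1, 0]]]: plain thresholding
print(segment(heatmaps, lam=1.0))  # [[[1, 1], [1, 1]]]: the outlier is smoothed away
```

The two calls illustrate the trade-off described above: with λ = 0 the result is a per-node threshold at 0.5, while a larger λ removes the isolated low-probability node.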

Since not all pixels on a single frame are classified,

the binarized heatmap only contains the segmentation

result for a subset of the pixels on a rectangular grid.

The segmentation results are therefore bicubically interpolated such that each pixel is classified as either water or non-water.
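The text does not detail the interpolation step, so the following is only one possible reading: the binary grid labels are upsampled with Keys' cubic convolution kernel (a = -0.5), using an integer factor equal to the grid stride, pixel-centre alignment and clamped borders, and the result is thresholded at 0.5. All names and these choices are assumptions.

```python
import math


def cubic_weight(t, a=-0.5):
    """Keys' cubic convolution kernel; a = -0.5 is a common choice."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t ** 3 - (a + 3) * t ** 2 + 1
    if t < 2:
        return a * t ** 3 - 5 * a * t ** 2 + 8 * a * t - 4 * a
    return 0.0


def upsample_bicubic(grid, s):
    """Bicubically upsample a 2-D grid by an integer factor s (borders are clamped)."""
    hg, wg = len(grid), len(grid[0])
    out = []
    for y in range(hg * s):
        gy = (y + 0.5) / s - 0.5  # pixel centre in grid coordinates
        y0 = math.floor(gy)
        row = []
        for x in range(wg * s):
            gx = (x + 0.5) / s - 0.5
            x0 = math.floor(gx)
            value = 0.0
            for j in range(y0 - 1, y0 + 3):
                wy = cubic_weight(gy - j)
                jj = min(max(j, 0), hg - 1)
                for i in range(x0 - 1, x0 + 3):
                    ii = min(max(i, 0), wg - 1)
                    value += wy * cubic_weight(gx - i) * grid[jj][ii]
            row.append(value)
        out.append(row)
    return out


def to_labels(values, threshold=0.5):
    """Binarize interpolated values: 1 = water, 0 = non-water."""
    return [[1 if v >= threshold else 0 for v in row] for row in values]


print(to_labels(upsample_bicubic([[1, 1, 0, 0]], 2)))
# [[1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 1, 0, 0, 0, 0]]
```

Cubic interpolation can overshoot slightly above 1 or below 0 near a water/non-water border; the final threshold makes this harmless for a binary segmentation.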

5 EXPERIMENTAL EVALUATION

The effectiveness of the algorithm presented in the

previous sections is validated on the novel water

database by examining both the segmentation qual-

ity (i.e. the classification of each pixel of each frame)

and the classification quality (i.e. the classification of

a whole video with a binary mask). In the implemen-

tation of the algorithm, the videos of the database are

randomly split with a 60/40 ratio into a train and test

set. For each test video, the segmentation is computed

for 250 frames.

In the evaluation, the segmentation fit of a video is defined as the average fit of its individual frame segmentations with the supplied binary mask. Formally, the segmentation fit S of a segmented video V compared to a mask M is computed as:

$$S(V,M) = \frac{\sum_{i=1}^{|V|} s(V_i, M)}{|V|} \times 100\%, \qquad (10)$$

where $|V|$ denotes the number of frames in V, $V_i$ denotes the $i$-th frame, and $s(V_i, M)$ is defined as:

$$s(V_i, M) = 1 - \frac{\sum_{x=1}^{W} \sum_{y=1}^{H} \left| V_i[x,y] - M[x,y] \right|}{W \times H}, \qquad (11)$$

where W and H denote the width and height of the video, respectively, and the pixel values of the segmentations and the mask are 1 for water and 0 for non-water. Given a set of segmentations and a binary mask, the whole video is classified as water if the ratio of water pixels in the mask region is at least one half, and as non-water otherwise.
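Eqs. 10 and 11 translate directly into code; this minimal sketch assumes each segmented frame and the mask are nested lists of 0/1 values of the same size (the function names are hypothetical).

```python
def frame_fit(segmentation, mask):
    """s(V_i, M) of Eq. 11: one minus the fraction of pixels where the labels disagree."""
    height, width = len(mask), len(mask[0])
    errors = sum(abs(segmentation[y][x] - mask[y][x])
                 for y in range(height) for x in range(width))
    return 1 - errors / (width * height)


def segmentation_fit(frames, mask):
    """S(V, M) of Eq. 10: mean per-frame fit, in percent."""
    return sum(frame_fit(f, mask) for f in frames) / len(frames) * 100


mask = [[1, 0], [1, 0]]
frames = [[[1, 0], [1, 0]],   # perfect frame: s = 1.0
          [[1, 1], [1, 0]]]   # one wrong pixel out of four: s = 0.75
print(segmentation_fit(frames, mask))  # 87.5
```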

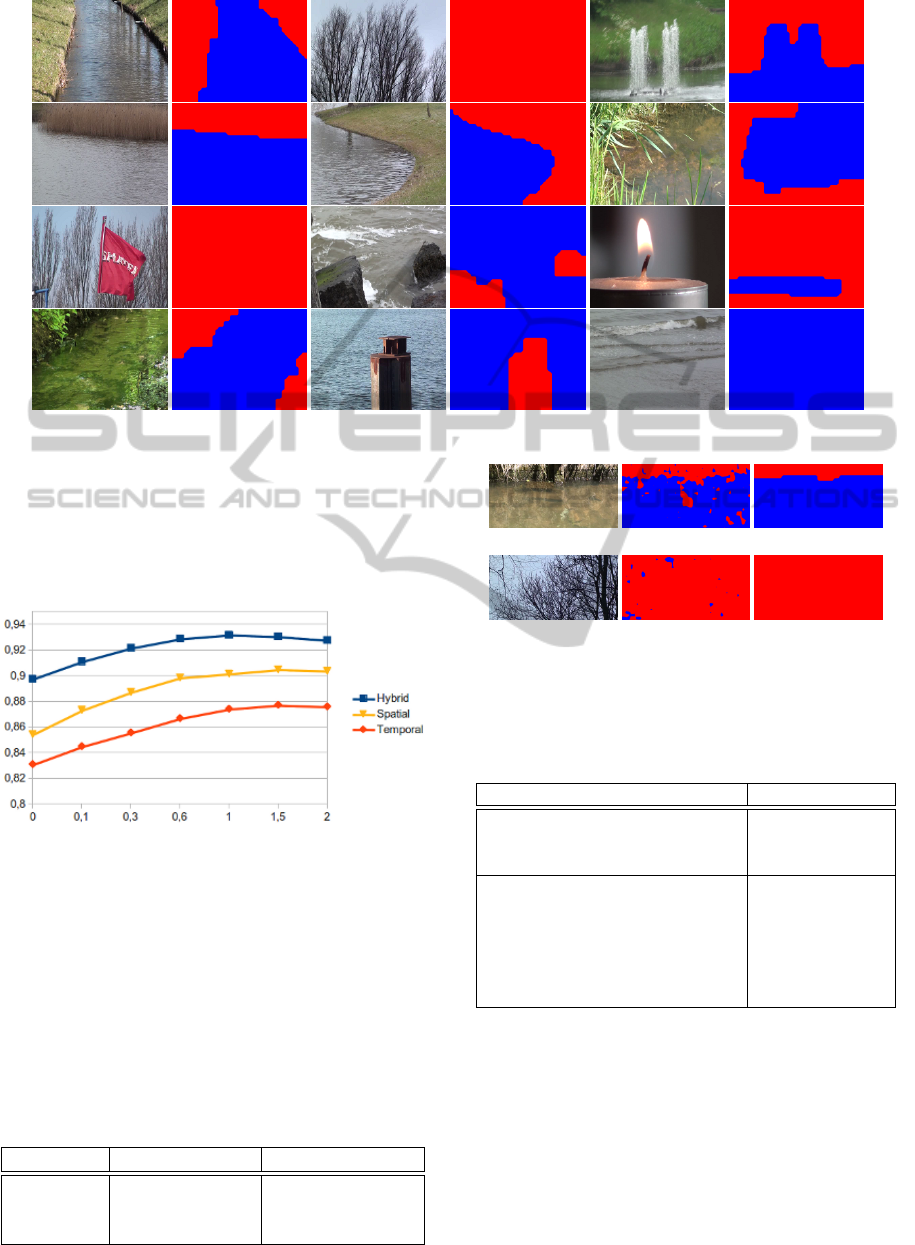

Table 1 provides a numerical overview of the averaged segmentation fit for the individual and combined descriptors, where the algorithm is performed on multiple random splits. The Table shows that both the temporal and the spatial descriptor are able to robustly segment video frames based on the presence or absence of water. Furthermore, combining the descriptors into a hybrid descriptor improves the segmentation quality in every setting. The same holds for the regularization step: the combination of the hybrid descriptor and the spatio-temporal Markov Random Field yields an average segmentation fit of 93.19%. In Figure 6, exemplary binary segmentations are shown for complex test videos in the database.

An influential parameter is the value of λ in the regularization step. Fig. 7 shows the segmentation fit as a function of this parameter. The Figure makes the trade-off between dependence on the classification result and spatial coherence in the MRF evident. The results of Table 1 are obtained with 200 frames per descriptor and λ = 1.0, since on average the segmentations at that value prove to be optimal with respect to both the individual classification and spatial coherence. In Fig. 8, the effect of regularization is visualized for a water and a non-water video. With regularization, the ST-MRF ensures that a boundary between water and non-water regions is only created if there is enough support over the whole image plane.

Figure 6: Exemplary segmentations yielded for the water database.

Figure 7: Segmentation fit as a function of λ.

An overview of the results of binary video classification is shown in Table 2. The video classification problem, which is less informative than the segmentation problem, is evaluated not only for the algorithm of this work, but also for multiple algorithms from related fields. These algorithms include the spatio-temporal extensions of LBP (Zhao and Pietikäinen, 2007), LDS (Saisan et al., 2001), Gabor filter responses (Varma and Zisserman, 2005), and optical flow statistics (Péteri and Chetverikov, 2005).

Table 1: Segmentation quality of the descriptors.

| Descriptor | No MRF | ST-MRF, λ = 1.0 |
|---|---|---|
| Hybrid | 90.38% ± 0.5% | 93.19% ± 0.2% |
| LBP | 85.95% ± 2.4% | 90.16% ± 2.3% |
| Temporal | 83.42% ± 1.3% | 87.42% ± 0.8% |

(a) (b)

Figure 8: Two examples of the merits of using regularization (right column) over direct classification (middle column).

Table 2: Overview of the recognition rates for binary video classification.

| Classification method | Recognition rate |
|---|---|
| Our method, hybrid descriptor | 96.5% ± 0.6% |
| Our method, LBP histogram | 94.2% ± 1.4% |
| Our method, temporal descriptor | 91.9% ± 0.8% |
| Volume LBP | 93.5% ± 1.2% |
| LBP-TOP | 93.3% ± 0.8% |
| MR8 Filter Bank distributions | 80.8% ± 6.7% |
| Linear Dynamical Systems | 71.2% ± 2.8% |
| Horn-Schunck, 4 flow statistics | 65.7% ± 2.0% |
| Lucas-Kanade, 4 flow statistics | 61.2% ± 3.4% |

The results from Tables 1 and 2 indicate that the spatial and temporal descriptors can effectively capture the local behaviour of water. The hybrid descriptor outperforms well-known generic algorithms from dynamic/static texture recognition for the more specific problem of water classification. The best result is achieved with the hybrid descriptor and ST-MRF, with a segmentation fit of 93.19% and a video classification rate of 96.47%. A primary reason for the overall high recognition rates in this work is that this is a binary problem, i.e. if a patch of a tree is classified as fire, it is still correct, since the water/non-water line is not crossed. Also, the classification results are generally higher than the segmentation results, since a perfect segmentation is not required to yield a correct overall classification.

Figure 9: Exemplary segmentations yielded for the DynTex database.

Table 3: Results on the DynTex subset.

| Method | Segmentation | Classification |
|---|---|---|
| Ours, hybrid | 92.7% | 100% |
| Ours, temporal | 85.0% | 87.5% |
| Ours, spatial | 81.3% | 87.5% |
| VLBP | - | 90.0% |
| LBP-TOP | - | 87.5% |
| LDS | - | 75.0% |
| MR8 | - | 72.5% |
| HS flow | - | 57.5% |
| LK flow | - | 55.0% |

To further demonstrate the effectiveness of the descriptors, the algorithm is also run on videos from the DynTex database (Péteri et al., 2010). Since only part of the DynTex database contains water surfaces, a subset of 80 water and non-water videos has been selected for evaluation. A second motive for experimenting on the DynTex database is that it allows water detection to be compared against other non-water textures and objects, such as humans, animals, traffic, windmills, flowers, and cloths. For the classification process, the 80 videos are split into a training set and a test set of 40 videos each. The training set is complemented with an additional 100 videos from the water database. Exemplary segmentations are shown in Fig. 9. The numerical results for the segmentations and classifications are provided in Table 3. These results further indicate the effectiveness of the algorithm. The hybrid descriptor yields a segmentation fit of 92.7% and classifies all 40 test videos correctly, ahead of VLBP (90.0%), LBP-TOP (87.5%) and the remaining baselines.
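The DynTex evaluation protocol described above can be sketched as follows. The 80 selected videos are halved into 40 training and 40 test videos, and 100 videos from the water database are added to the training half only. The split here is a seeded random shuffle. That is an assumption, since the text does not say how the halves were drawn (a split stratified by water/non-water may well have been used). The function name and the video identifiers are hypothetical.

```python
import random
from typing import List, Tuple


def dyntex_protocol(dyntex_videos: List[str], water_db_videos: List[str],
                    n_extra: int = 100, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Halve the DynTex subset and augment only the training half (hypothetical helper)."""
    if len(dyntex_videos) % 2:
        raise ValueError("expected an even number of DynTex videos")
    rng = random.Random(seed)           # seeded for a reproducible split
    shuffled = dyntex_videos[:]         # do not mutate the caller's list
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    train, test = shuffled[:half], shuffled[half:]
    # Extra training material comes from the water database, never from the test half.
    train = train + rng.sample(water_db_videos, n_extra)
    return train, test
```

Applied to 80 DynTex identifiers, this yields 140 training videos and 40 disjoint test videos, all of which come from DynTex.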

6 CONCLUSIONS

In this work, a method and a database are introduced for the spatio-temporal identification of water surfaces in videos. Rather than treating water detection as an instance of a more generic problem, such as material recognition or dynamic texture recognition, the method recognizes water based on the specific spatial and temporal behaviour of water surfaces. Experimental evaluation on a novel water detection database shows the effectiveness of the method, which outperforms well-known existing algorithms from static and dynamic texture recognition.

In future work, the algorithms presented here can be used to tackle the problem of real-time water detection with moving cameras. Real-time detection can be investigated by creating a parallel implementation of the feature extraction and classification of the local descriptors. Since regularization is currently a post-processing step, in a real-time setting it should be incorporated into the classification stage, e.g., using the recently introduced Geodesic Forests (Kontschieder et al., 2013).
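The suggested parallelization works because local descriptors are independent per patch, so extraction is a plain parallel map. The sketch below illustrates this under explicit assumptions. A video is a list of greyscale frames. Each non-overlapping square patch is reduced to its mean-brightness signal over time. The `temporal_features` descriptor (signal spread and mean absolute frame-to-frame change) is a hypothetical stand-in, not the paper's temporal descriptor. All function names are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean, pstdev
from typing import List, Tuple

Video = List[List[List[float]]]  # frames[t][y][x], grey values


def patch_signal(video: Video, y0: int, x0: int, size: int) -> List[float]:
    """Mean brightness of one square patch in every frame (a temporal signal)."""
    return [mean(frame[y][x] for y in range(y0, y0 + size) for x in range(x0, x0 + size))
            for frame in video]


def temporal_features(signal: List[float]) -> Tuple[float, float]:
    """Hypothetical descriptor: spread of the signal and its mean absolute change."""
    diffs = [abs(b - a) for a, b in zip(signal, signal[1:])]
    return pstdev(signal), (mean(diffs) if diffs else 0.0)


def extract_all(video: Video, size: int, workers: int = 4):
    """Describe every non-overlapping patch; patches are independent, so map in parallel."""
    h, w = len(video[0]), len(video[0][0])
    coords = [(y, x) for y in range(0, h - size + 1, size)
              for x in range(0, w - size + 1, size)]

    def job(c):
        return c, temporal_features(patch_signal(video, c[0], c[1], size))

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(job, coords))  # {(y, x): (spread, mean_change)}
```

Threads keep the sketch self-contained, but in CPython they give little speed-up for pure-Python arithmetic. A real-time implementation would more plausibly use a process pool, vectorized code, or a GPU. The structure (one independent job per patch) stays the same.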


ACKNOWLEDGEMENTS

This research is supported by the FES project COMMIT. Furthermore, we would like to thank Renaud Péteri for providing access to the DynTex database.

REFERENCES

Beauchemin, S. and Barron, J. (1995). The computation of optical flow. ACM Computing Surveys, 27(3):433–466.

Bochkanov, S. (1999–2013). ALGLIB software library (www.alglib.net).

Boykov, Y. and Kolmogorov, V. (2004). An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. PAMI.

Chan, A. and Vasconcelos, N. (2008). Modeling, clustering, and segmenting video with mixtures of dynamic textures. PAMI, 30(5):909–926.

Criminisi, A., Shotton, J., and Konukoglu, E. (2012). Decision forests. Foundations and Trends in Computer Graphics and Vision, 7(2):81–227.

Doretto, G., Cremers, D., Favaro, P., and Soatto, S. (2003). Dynamic texture segmentation. ICCV, 2:1236–1242.

Fazekas, S. and Chetverikov, D. (2007). Analysis and performance evaluation of optical flow features for dynamic texture recognition. SPIC, 22:680–691.

Hu, D., Bo, L., and Ren, X. (2011). Toward robust material recognition for everyday objects. BMVC, pages 48.1–48.11.

Kontschieder, P., Kohli, P., Shotton, J., and Criminisi, A. (2013). GeoF: Geodesic forests for learning coupled predictors. CVPR.

Mumtaz, A., Coviello, E., Lanckriet, G., and Chan, A. (2013). Clustering dynamic textures with the hierarchical EM algorithm for modeling video. PAMI, 35(7):1606–1621.

Péteri, R. and Chetverikov, D. (2005). Dynamic texture recognition using normal flow and texture regularity. PRIA, 3523:223–230.

Péteri, R., Fazekas, S., and Huiskes, M. (2010). DynTex: A comprehensive database of dynamic textures. Pattern Recognition Letters, 31(12):1627–1632.

Rankin, A. and Matthies, L. (2006). Daytime water detection and localization for unmanned ground vehicle autonomous navigation. Proceedings of the 25th Army Science Conference.

Saisan, P., Doretto, G., Wu, Y. N., and Soatto, S. (2001). Dynamic texture recognition. CVPR, 2:II–58–II–63.

Schwind, R. (1991). Polarization vision in water insects and insects living on a moist substrate. Journal of Comparative Physiology A, 169(5):531–540.

Sharan, L., Liu, C., Rosenholtz, R., and Adelson, E. (2013). Recognizing materials using perceptually inspired features. IJCV, pages 1–24.

Sharan, L., Rosenholtz, R., and Adelson, E. (2009). Material perception: What can you see in a brief glance? [abstract]. Journal of Vision, 9(8):784.

Smith, A., Teal, M., and Voles, P. (2003). The statistical characterization of the sea for the segmentation of maritime images. Video/Image Processing and Multimedia Communications, 2:489–494.

Snoek, C., Worring, M., and Smeulders, A. (2005). Early versus late fusion in semantic video analysis. In Proceedings of the 13th annual ACM international conference on Multimedia, pages 399–402. ACM.

Tenenbaum, J., de Silva, V., and Langford, J. (2000). A global geometric framework for nonlinear dimensionality reduction. Science, 290(5500):2319–2323.

Varma, M. and Zisserman, A. (2005). A statistical approach to texture classification from single images. IJCV, 62(1):61–81.

Varma, M. and Zisserman, A. (2009). A statistical approach to material classification using image patch exemplars. PAMI, 31(11):2032–2047.

Zhao, G. and Pietikäinen, M. (2006). Local binary pattern descriptors for dynamic texture recognition. ICPR, 2:211–214.

Zhao, G. and Pietikäinen, M. (2007). Dynamic texture recognition using local binary patterns with an application to facial expressions. PAMI, 29(6):915–928.
