Geo-positional Image Forensics through Scene-terrain Registration

P. Chippendale¹, M. Zanin¹ and M. Dalla Mura²

¹Technologies of Vision Research Unit, Fondazione Bruno Kessler, Trento, Italy

²GIPSA-Lab, Signal and Image Department, Grenoble Institute of Technology, Saint Martin d’Hères, France

Keywords: Media Forensics, Visual Authentication, 3D Terrain-model Registration, Augmented Reality, Machine Vision, Geo-informatics.

Abstract: In this paper, we explore the topic of geo-tagged photo authentication and introduce a novel forensic tool created to semi-automate the process. We will demonstrate how a photo’s location and time can be corroborated through the correlation of geo-modellable features to embedded visual content. Unlike previous approaches, a machine-vision processing engine iteratively guides users through the photo registration process, building upon available meta-data evidence. By integrating state-of-the-art visual-feature to 3D-model correlation algorithms, camera intrinsic and extrinsic calibration parameters can also be derived in an automatic or semi-supervised interactive manner. Experimental results, considering forensic scenarios, demonstrate the validity of the system introduced.

1 INTRODUCTION

Digital photographs and videos have proven to be crucial sources of evidence in forensic science; they can capture a snapshot of a scene, or its evolution through time (Casey, 2004; Boehme et al., 2009). Geo-tagging (Luo et al., 2011), i.e. the association of geo-spatial information with media objects, is a relative newcomer to the field of data annotation, but is growing rapidly. Concurrently, the availability of easy-to-use image processing tools and meta-data editors is leading to a diffusion of fake geo-tagged content throughout the digital world. As geo-tagged media can be used to corroborate a person’s or an object’s presence at a given location at a given time, it can be highly persuasive in nature. Therefore, it is essential that the content be authenticated and the associated geo meta-data be proved trustworthy.

The addition of location information has been fuelled in recent years by the embedding of positioning hardware, such as Global Positioning System (GPS) receivers, in many consumer-level imaging devices. Nowadays, the most common way in which photographs are geo-tagged is through the automatic insertion of spatial coordinates into the EXIF meta-data fields of JPEG images; however, a reported location can easily be tampered with, and varies in precision according to its means of derivation. For example, in urban or forested environments, GPS signals suffer from attenuation and reflection, which leads to an inexact, or entirely missing, position fix, as illustrated by (Paek et al., 2010); in dense cities this is commonly referred to as the ‘Urban Canyon’ problem (Cui and Ge, 2003).

Standard geo-tagged photos contain three non-independent pieces of information that provide valuable location indicative clues:

• Time when the media object was captured;

• Positional information (some devices also provide orientation data);

• Embedded visual content of the scene.

Although these three indicators are derived from independent sources and sensors, they are closely intertwined since they all spatiotemporally describe a particular scene. These interdependences can be exploited to derive or validate one piece of information against the others. (Hays and Efros, 2008) showed that in a natural scene observed from an arbitrary position, the geometry of solar shadows cast by objects can provide clues about the time and orientation of the camera. Analogously, (Chippendale et al., 2009) illustrated how a captured location can also be confirmed or hypothesized by comparing the image content of the real scene with expected geo-content, through synthetically generated terrain correlation.

Three elements must be examined in order to

prove, beyond a reasonable doubt, that a geo-tagged

41

Chippendale P., Zanin M. and Dalla Mura M..

Geo-positional Image Forensics through Scene-terrain Registration.

DOI: 10.5220/0004282300410047

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2013), pages 41-47

ISBN: 978-989-8565-48-8

Copyright

c

2013 SCITEPRESS (Science and Technology Publications, Lda.)

p

hotogr

a

1) Phot

o

content

o

b

y its

depende

n

consiste

n

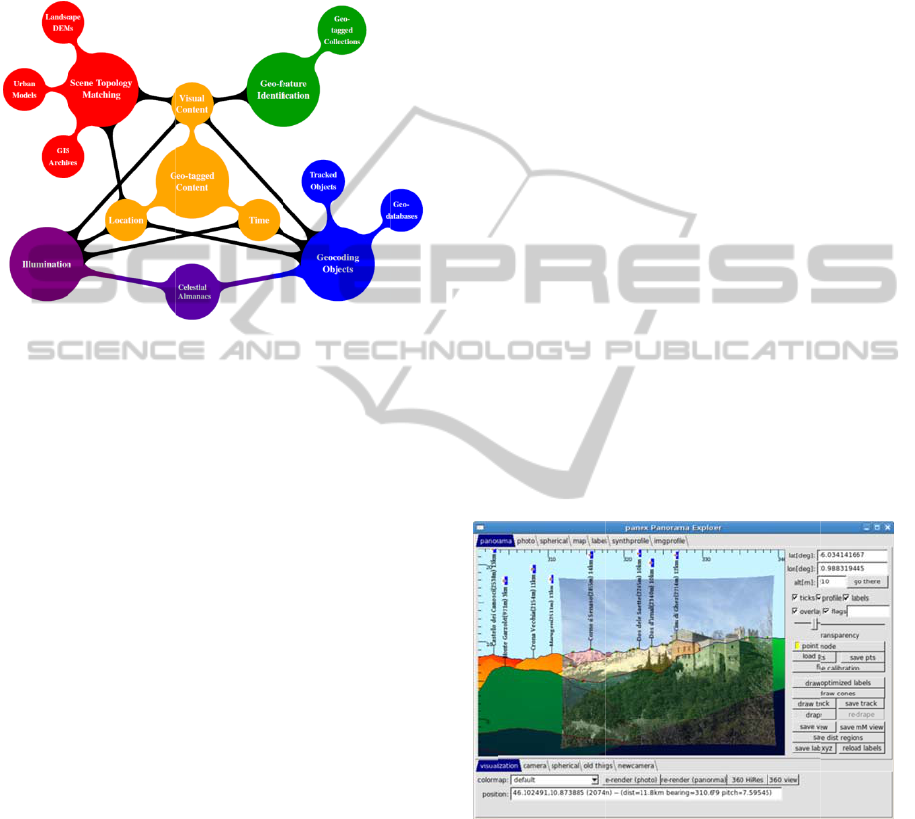

Figure 1

:

intercon

n

The in

t

location

in the

m

of the di

describe

encomp

a

to geo-f

e

p

ortions

images;

p

shadow

s

geocodi

n

identifia

b

or logos

.

In th

of the t

h

of spa

t

p

hotogr

a

checks.

1.1

R

Prior o

p

image f

o

expert-d

e

towards

Constru

c

used t

o

recreati

n

made t

o

massive

a

ph is genuin

e

o

is unmodifie

o

f the photo i

s

location; 3)

n

t events

e

n

t with the lo

c

:

Mind map

o

n

ections.

t

errelationshi

p

and acquisiti

m

ind map see

n

agram highli

g

a geo-tagge

d

a

sses scene-t

o

e

ature identi

fi

of photos

t

purple shows

s

or reflection

s

n

g based o

n

b

le content t

o

.

is paper, we

w

h

ree elements

t

iotemporal

a

ph, through

v

R

elated W

o

p

erational ap

p

o

rensics field

e

pendent, us

a specific

c

tion Se

t

1

or

o

p

rovide

v

n

g a scene,

o

o

nearby ge

o

socially gen

e

e

:

d at a

p

ixel l

e

s

consistent

w

The obse

r

e

mbedded i

n

c

ation and ti

m

o

f geo-

p

ositio

n

p

s between

on time have

n

in Figure 1.

g

hts the three

d

media obje

c

o

pology matc

h

fi

cation tools

t

t

o similar o

n

illumination

s

; and the blu

e

n

the matc

h

o

geo-databas

e

w

ill concentr

a

, relating to

t

meta-data

a

v

isual geo-c

o

o

r

k

p

roaches in t

h

have been v

e

ing a

d

-hoc

case. To

o

Visual Natu

r

v

isual evide

n

o

r, visual co

r

o

-tagged ph

o

e

rated geo-

p

h

o

e

vel; 2) The v

i

w

ith that sugg

e

r

vation of t

i

n

the phot

o

m

e suggested.

n

al image fore

n

visual con

t

been genera

l

The yellow

key elements

c

t. The red re

g

h

ing; green re

l

t

hat aim to

m

n

es in pre-ta

g

relationships,

e

region relat

e

h

ing of mac

e

s, e.g. scene

a

te on the se

c

t

he authentic

a

a

ssociated t

o

o

ntent consist

e

h

e geo-

p

ositi

e

ry laborious

m

ethods tail

o

o

ls like

W

r

e Studio

2

ca

n

n

ce by virt

u

r

relations ca

n

o

tos (taken

f

o

to databases

i

sual

e

sted

i

me-

o

is

n

sics

n

tent,

l

ized

core

that

gion

l

ates

m

atch

g

ge

d

,

e.g.

e

s to

c

hine

text

c

ond

a

tion

o

a

e

ncy

i

onal

and

ored

W

orld

n

be

u

ally

n

be

f

rom

like

The automatic matching of photo content to 3D synthetic models has been well explored in literature in recent years, spanning many fields of research including robotic navigation (Leotta and Mundy, 2011), Augmented Reality, photogrammetry (Guidi et al., 2003), computer vision and remote sensing. In the outdoor environment, (Baatz et al., 2012), (Baboud et al., 2011) and (Hays and Efros, 2008) have recently explored terrain-feature identification to align visual terrain profiles to geo-specific features. This approach involves the matching of machine-extractable features from a photo to similar features in a synthetic 3D-model, essentially pairing 2D pixel-region features to 3D locations. The synthetic models used, rendered from a wide variety of 3D geo-located data (e.g. Digital Elevation Models (DEMs)), yield silhouette profiles which should correspond to the terrain visible at a stated photo location.

1.2 Contribution

In this paper, we illustrate how we have integrated state-of-the-art visual-feature to 3D-model correlation algorithms to create an interactive pixel-to-world mapping tool (seen in Figure 2) which can be used to validate the authenticity of terrain-evident, geo-tagged photographs.

Figure 2: Screenshot of the photo registration tool.

2 SYSTEM OVERVIEW

In forensic investigations, it is essential that human operators are placed inside the visual content analysis loop. Often, occlusions, difficult lighting conditions, and tampering impede automatic terrain feature detection, hence manual guidance can be utilised to reduce detection ambiguities. Results from the computer-vision powered registration process are ranked and offered to the user in the form of an Augmented Reality visualization, together with a correlation metric to indicate match ‘goodness’. In situations where ambiguities arise, the user can influence subsequent refinement stages by assigning new correlation pairs using a point and click interface.

The automatic geo-registration process is based on the algorithm proposed by (Baboud et al., 2011) and (Kostelec and Rockmore, 2008), which attempts to match visibly-evident geographical features to corresponding synthetic features in a virtual model. When dealing with natural outdoor scenes, one of the most relevant features is terrain morphology. In our approach, the virtual terrain models are generated from DEMs, which represent the Earth’s surface as a regular grid of elevation values (Li et al., 2005). These renderings are generated by systematically projecting rays from a photo’s location onto the inside surface of a sphere recording their angular intersections with the DEM. This spherical image is then projected onto an equiangular planar grid, in which each point in the plane corresponds to a certain latitude, longitude, altitude and depth (i.e. distance from the photo’s location).

To derive camera pose, i.e. the pan, tilt and roll of the camera that took the original photograph, the image is firstly scaled according to the field-of-view reported (usually derived from the focal length meta-data in the EXIF). If focal-length information is absent (or incorrect), then the system can estimate it through a user-assisted scaling strategy forming part of the alignment stage.

Photographs are aligned to the equiangular model in four phases:

1. extraction of salient depth profiles from the synthetic image;

2. extraction of salient profiles from the photo;

3. a registration algorithm searches for correspondences between the two profile sets;

4. apply an optimization algorithm to derive the best alignment parameters that minimize re-projection error.

The processing pipeline of our system is visualized below in Figure 3.

We generate salient depth profiles from the synthetic image by marking abrupt distance changes between adjacent points lying on a virtual ray originating from the photo’s position.

The photo’s salient depth profiles are extracted using a variety of means: edge detection, colour appearance, blue shift and texture discontinuities, following on from the work by (He et al. 2009). The profiles generated from the real world observation, unlike those in the synthetic image, are noisy and incomplete due to the presence of occlusions (clouds or foreground objects), uneven illumination (which could lead to strong shadows) and poor visibility (e.g. mist or fog).
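The synthetic-profile step described above (marking abrupt distance changes between adjacent samples) can be illustrated with a minimal sketch. It assumes the rendered model is available as an equiangular depth image whose rows correspond to tilt and columns to pan, with sky rays stored as infinity; the function name, the relative threshold `rel_jump` and the choice of comparing vertically adjacent samples are illustrative assumptions, not details taken from the system described here.

```python
import numpy as np

def salient_depth_profiles(depth, rel_jump=0.2):
    """Mark abrupt distance changes between vertically adjacent samples.

    depth:    2D array (rows = tilt, top row first; columns = pan) holding the
              distance in metres from the photo location to the terrain,
              with np.inf where a ray hits no terrain (sky).
    rel_jump: hypothetical threshold; a sample is salient when the sample
              directly above it is farther away by more than this fraction.
    Returns a boolean mask of the same shape as depth.
    """
    d = np.asarray(depth, dtype=float)
    upper, lower = d[:-1, :], d[1:, :]
    lower_is_terrain = np.isfinite(lower)
    upper_is_terrain = np.isfinite(upper)

    # Terrain directly below sky is always a profile point (the skyline).
    skyline = lower_is_terrain & ~upper_is_terrain

    # Terrain below terrain counts when the distance jumps sharply,
    # e.g. a near ridge seen in front of a far mountain.
    both = lower_is_terrain & upper_is_terrain
    jump = np.zeros(lower.shape, dtype=bool)
    jump[both] = (upper[both] - lower[both]) > rel_jump * lower[both]

    profiles = np.zeros(d.shape, dtype=bool)
    profiles[1:, :] = skyline | jump
    return profiles
```

A relative rather than absolute threshold is used in this sketch so that nearby and distant ridges are treated comparably; any real system would need to tune this against the DEM resolution.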

Figure 3: Flowchart of the alignment process.

Figure 4: Tree coverage GIS data taken from the 25m Corine Land cover 2000 (purple) has been rendered onto the 3D terrain model (indicated by blue and red depth profile lines). In this image the 3D model has been made transparent to illustrate photo-model registration.

To increase robustness, region boundary profiles can also be included, e.g. sky-rock, vegetation (see Figure 4), urban-forest, or a combination thereof, extracted with appropriate image processing and machine learning approaches.

To provide optimal alignment, even in difficult cases, a visual registration algorithm has been implemented inside an iterative procedure to evaluate correspondences on both local and global scales through an optimization procedure. This optimization algorithm aims to find the camera lens parameters that produce the most accurate alignment. This procedure can be performed by exhaustively evaluating the full range of camera parameters (sampled on a finite grid). This is, however, very computationally intensive. Consequently, we perform profile alignment in a similar manner to that proposed by (Baboud et al., 2011), and compute a spherical cross correlation based on the method demonstrated by (Kostelec and Rockmore, 2008), using features sampled on an equally spaced grid in the spherical reference system. This approach can be explained more clearly through example:

Let $f(\omega)$ and $g(\omega)$ represent the two spherical functions, where $\omega \in S^2$ is a position in the 2D spherical domain. $f$ refers to the synthetic features, whilst $g$ refers to features extracted from the real image. We are searching for an optimal rotation $R(\alpha,\beta,\gamma) \in SO(3)$, obtained by the composition of three rotations with Euler angles $\alpha, \beta, \gamma$, that, once applied to $g$, maximizes the matching with $f$. Equation (1) shows how the ‘goodness’ of the match can be estimated by computing the cross correlation between $f$ and $g$ after the rotation.

$$C(R) = \int_{S^2} f(\omega)\, g(R^{-1}\omega)\, d\omega \qquad (1)$$

Figure 5: Spherical cross correlation between features sampled on an equally spaced grid in the spherical reference system. The red region represents the best match and blue the worst; the x and y axes relate to pan and tilt angles.

The maximum value of such a correlation in spherical coordinates corresponds to the optimal alignment that can be achieved (in terms of cross-correlation) between the two spherical functions, and the results from a typical alignment can be seen in Figure 5.
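As a rough illustration of the correlation search described above, the sketch below scores every candidate pan and tilt offset between two binary feature maps sampled on the same equiangular grid and keeps the best one. It is a deliberately simplified, brute-force stand-in and not the FFT-based rotation-group method of (Kostelec and Rockmore, 2008): roll is ignored, tilt is treated as a plain row shift (only reasonable near the horizon), and no solid-angle weighting is applied. The function name and the `max_tilt_shift` parameter are assumptions made for this example.

```python
import numpy as np

def best_pan_tilt(synthetic, photo, max_tilt_shift=3):
    """Brute-force search for the pan/tilt shift that best aligns two maps.

    synthetic: 2D array (rows = tilt, columns = pan over a full 360 degrees)
               of synthetic profile features.
    photo:     array of the same shape holding the photo's features, zero
               outside the camera's field of view.
    Returns (score, pan_shift, tilt_shift) in grid cells.
    """
    rows, cols = synthetic.shape
    best = (-np.inf, 0, 0)
    for dt in range(-max_tilt_shift, max_tilt_shift + 1):
        # Tilt shift without wrap-around: rows pushed off the grid are lost.
        shifted = np.zeros_like(photo)
        if dt >= 0:
            shifted[dt:, :] = photo[:rows - dt, :]
        else:
            shifted[:rows + dt, :] = photo[-dt:, :]
        for dp in range(cols):
            # Pan wraps around because the grid covers the full horizon.
            candidate = np.roll(shifted, dp, axis=1)
            score = float(np.sum(synthetic * candidate))
            if score > best[0]:
                best = (score, dp, dt)
    return best
```

The cost of this search grows with the number of pan cells times the number of tilt offsets, and adding roll would multiply it again, which is one way to see why an FFT-based correlation is attractive in practice.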

3 BENEFITS OF PIXEL-GEO MAPPING

As a direct consequence of profile registration, non-sky pixels within a photo can be assigned to real 3D locations on the planet (i.e. latitude, longitude, altitude). This mapping enables:

• 3D depth perception from 2D images (e.g. retrieve world co-ordinates from an image region),

• re-photography (Bae et al., 2010),

• retrospective augmentation (i.e. facilitating a forensics team to insert and/or extract 3D information), thus corroborating embedded evidence or potentially revealing manipulation.

The possibility to automatically enrich a photograph with geographically accurate information empowers a user with the ability to discover elusive pieces of evidence that would have otherwise been difficult to retrieve or prove.

Figure 6: Substitution of pixels with geo-data, based on distance from observer and altitude.

Apart from annotating a photo with toponyms or relevant geo-referenced points of interest, we can also drape GIS layers inside it, both in the form of raster (e.g. maps, other geo-registered photos, satellite data) and vector data (e.g. roads, rivers, paths, regions, boundaries). An example of such an overlay can be seen in the composite image shown in Figure 6. In this image, two different types of geo-referenced layers have been visualized simultaneously: pixels mapping to locations further than 20km away from the camera were replaced by a synthetic colour representing depth (dark green closer and red farther away), and pixels mapping to an altitude of less than 200m were replaced by geo-corresponding pixels from a cartographic map.
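The kind of pixel substitution described for Figure 6 can be sketched with per-pixel masks, assuming registration has already produced a depth value and an altitude value for every non-sky pixel. The 20 km and 200 m thresholds come from the text; the function name, the array layout, the exact RGB values of the colour ramp and the pre-warped `map_rgb` layer are assumptions made for this example.

```python
import numpy as np

def substitute_pixels(photo, depth_m, altitude_m, map_rgb,
                      far_m=20_000.0, low_alt_m=200.0):
    """Replace far pixels with a depth colour and low pixels with map pixels.

    photo:      (H, W, 3) uint8 image.
    depth_m:    (H, W) distance from the camera in metres (np.inf for sky).
    altitude_m: (H, W) terrain altitude in metres (np.nan for sky).
    map_rgb:    (H, W, 3) uint8 cartographic layer already warped into the
                photo's frame (hypothetical input).
    """
    out = photo.copy()

    # Pixels farther than far_m: colour ramp from dark green to red.
    far = np.isfinite(depth_m) & (depth_m > far_m)
    if far.any():
        d = depth_m[far]
        span = d.max() - d.min()
        t = (d - d.min()) / span if span > 0 else np.zeros_like(d)
        near_colour = np.array([0.0, 100.0, 0.0])   # assumed dark green
        far_colour = np.array([255.0, 0.0, 0.0])    # assumed red
        ramp = near_colour + t[:, None] * (far_colour - near_colour)
        out[far] = np.round(ramp).astype(photo.dtype)

    # Pixels lower than low_alt_m: copy the corresponding map pixels.
    low = np.isfinite(altitude_m) & (altitude_m < low_alt_m)
    out[low] = map_rgb[low]
    return out
```

Sky pixels are left untouched because their depth and altitude are not finite, which matches the restriction to non-sky pixels stated above.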

4 FORENSIC EXPERIMENTS

To illustrate the uses and types of problems which can be tackled using scene-model matching, this section provides a few real-life cases where geo-verification has revealed interesting results. Further examples of registered images can be found on our results Blog⁴.

4.1 Bogus K2 Summiting

With a peak elevation of 8611m, K2 is the second-highest mountain on Earth. In this case study, the geo-positional image forensic analysis is focused on Christian Stangl, a famous Austrian climber, whose ambition is to be the first man to complete the Triple Seven Summits challenge⁵, climbing the three highest peaks on each of the seven continents.

Conditions were harsh when Stangl arrived at the K2 Base Camp in the summer of 2010 for his third attempt. Stangl left Base Camp on 10 August for a solo attempt. After a 70-hour long summit push, he returned, claiming that he had topped-out at 10am on 12 August. Given the prohibitive conditions and other suspicious incoherencies, his claim was received with scepticism among fellow climbers.

To validate his claim, Stangl quickly submitted a self-portrait photograph supposedly taken on the summit (see Figure 7), but devoid of meta-data, to specialized magazines and websites⁶.

Figure 7: Self portrait of Christian Stangl, submitted to ExplorersWeb as proof of climbing K2.

As can be seen, there is a small portion of a glacier just visible over his right shoulder, offering an ideal case for a geo-positional image forensic study.

Figure 8 illustrates a visual summary of our findings using the forensic tool we developed and described in this paper.

The upper two images show an excerpt from an Internet sourced photo known to have been taken at Camp 3 on K2 (approximate location Lat: 35.875°N, Lon: 076.531°E, Alt: 7250m) and a synthetic rendering from the same view point. The lower two images are an excerpt from a photo taken at the peak of K2 (Lat: 35.881°N, Lon: 076.514°E, Alt: 8611m) by Czech climber Libor Uher⁷, and likewise a synthetic rendering from the same view point is shown. The central image is a cropped and contrast-stretched excerpt from the same photo submitted by Stangl, shown in Figure 7.

Figure 8: (top row) Photo crop and synthetic rendering from Camp 3; (centre) crop from Stangl's supposed summit photo; (bottom row) crop and synthetic rendering from K2 summit. Note: same 3D coordinate pairs drawn in all images. Synthetic colouring relative to height.

The three photographs were registered using our tool and into each, a pair of 3D points with a connecting vector were inserted into the scenes (Lat: 35.802°N, Lon: 076.541°E, 6234 m and Lat: 35.704°N, Lon: 076.542°E, 4688 m). As is evident from this analysis, the photo in question was in fact taken from a location very close to that of Camp 3 and not from the summit.

At the time of the original investigation, the editors of ExplorersWeb⁸ used a more basic technique: direct image comparison⁹. They found a photo in a book that had exactly the same composition and managed to overlay Stangl’s (fake) summit shot onto it. This photograph was known to have been taken from Camp 3 and thus confirmed their doubts. Inevitably, when Stangl was presented with ExplorersWeb’s analysis, he decided to confess that he had faked the climb, generating a huge scandal in the mountaineering community.

4.2 Fake Moon

Another interesting example of a geo-positional image forensic study using the Moon as a non-static but predictable geo-referenceable object is shown in Figure 9.

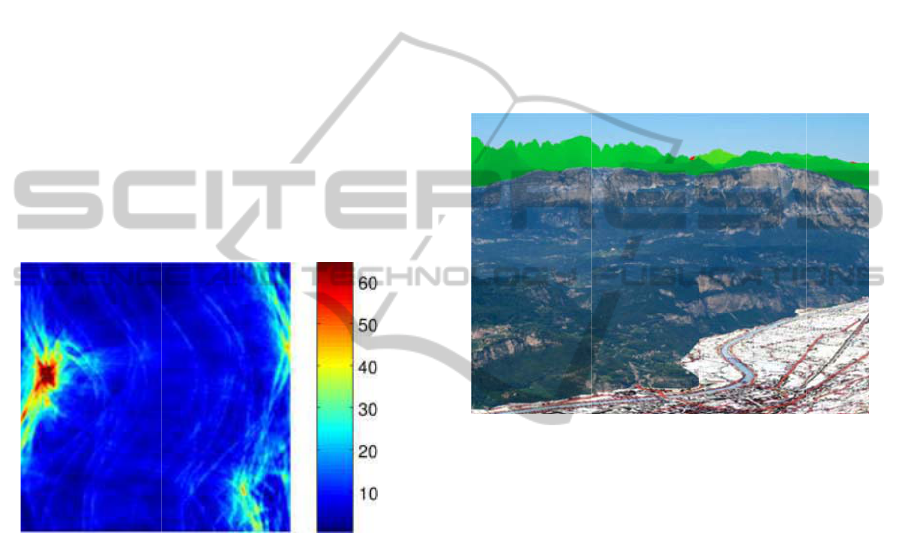

Figure 9: Conjunction of the Moon, Jupiter and Venus, Palermo, Italy. (left) Photo from Flickr with artificially enlarged Moon; (right) adjusted version of original photo, with Moon resized and repositioned according to EXIF location and time data.

In this popular Flickr image¹⁰, the size of the Moon looks suspiciously large; therefore, scene topology matching methods were applied to determine whether it was authentic. The stated location of the geo-tagged Flickr image was Lat: 38.1713°N, Lon: 013.3439°E and the time reported in the EXIF was 2008:11:30 18:32:45.

Given these constraints, our tool was used to register the photograph to a 3D synthetic model for that region. As the registration process delivers the relative distances and thus camera calibration parameters, we can determine that the Moon in this photo appears to span 5.6° of the sky. In reality, the apparent diameter of the Moon, as viewed from any point on Earth, is always approximately 0.5°, hence it was over 10 times too large. Interestingly, the proportion of the Lunar surface bathed in light was correct, at about 7.9%, so it is suspected that two photos from the same evening had been merged. The location of the Moon in the sky was also incorrect, as it should have been present at 234.04° azimuth and 2.07° altitude (derived from web-based celestial almanacs and the EXIF time).
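The size check above can be reproduced with a few lines of arithmetic. The sketch below computes the Moon's expected apparent diameter from its mean physical diameter and mean distance (standard astronomical values, not figures from this paper) and compares it with the 5.6° span measured in the registered photo; the constant and function names are chosen for this example.

```python
import math

MOON_DIAMETER_KM = 3474.8       # mean lunar diameter (standard value)
MOON_DISTANCE_KM = 384_400.0    # mean Earth-Moon distance (standard value)

def angular_diameter_deg(diameter, distance):
    """Apparent angular diameter of a sphere seen from a given distance."""
    return math.degrees(2.0 * math.atan(diameter / (2.0 * distance)))

expected_deg = angular_diameter_deg(MOON_DIAMETER_KM, MOON_DISTANCE_KM)
measured_deg = 5.6              # span measured in the registered photo
ratio = measured_deg / expected_deg

print(f"expected {expected_deg:.2f} deg, measured {measured_deg} deg, "
      f"ratio {ratio:.1f}x")
```

The Moon's distance varies over its orbit, so the expected diameter changes by a few hundredths of a degree, which is far too little to account for a tenfold discrepancy.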

Based on these findings, a new image (see right of Figure 9) was generated using Photoshop to illustrate the correct size and correct location of the Moon in the sky, based on the original EXIF meta-data; the visible mountains and their relative distances from the observer have also been labeled using the GeoNames¹¹ toponym database. As is evident, the Moon’s real location should have been just above the rightmost peak, Pizzo Vuturo, producing a less provocative image. Incidentally, the planets Venus and Jupiter are also visible, and had likewise been subjected to the same up-scaling and repositioning for visual effect.

5 CONCLUSIONS

In this paper, we have presented a system for geo-forensic analysis using computer vision and graphics techniques. The power of such a cross-modal correlation approach has been exemplified through three case-studies, in which claims were disproved, truths revealed or doubts confirmed.

The relative novelty of geo-tagging photos together with the scale and diversity of urban and natural landscapes means that the approaches detailed herein are not suitable for all scenarios. Images containing nondescript content, e.g. indoors, gently rolling countryside and deserts, cannot provide sufficient clues to uniquely pinpoint location or time. However, as more sources of geo-referenced material, e.g. Points of Interest, geo-tagged photos and accurate 3D urban models (like those being created in GoogleSketchUp¹² or OpenStreetMap¹³), become publicly available, the potential to exploit the methods described here will increase correspondingly.

ACKNOWLEDGEMENTS

This research has been partly funded by the European 7th Framework Program, under grant VENTURI¹⁴ (FP7-288238).

REFERENCES

Baatz, G., Saurer, O., Koeser, K. and Pollefeys, M. (2012). Large Scale Visual Geo-Localization of Images in Mountainous Terrain. In Proc. European Conference on Computer Vision.

Baboud, L., Cadik, M., Eisemann, E. and Seidel, H. (2011). Automatic photo-to-terrain alignment for the annotation of mountain pictures. In IEEE Conference on Computer Vision and Pattern Recognition, 41-48.

Bae, S., Agarwala, A. and Durand, F. (2010). Computational rephotography. In ACM Trans. Graph. 29(3), 1-15.

Boehme, R., Freiling, F., Gloe, T. and Kirchner, M. (2009). Multimedia forensics is not computer forensics. In Computational Forensics, Lecture Notes in Computer Science, vol. 5718, 90-103.

Casey, E. (2004). Digital evidence and computer crime. In Forensic science, computers and the Internet, Academic Press.

Chippendale, P., Zanin, M. and Andreatta, C. (2009). Collective photography. In Conference for Visual Media Production, 188-194.

Cui, Y. and Ge, S. (2003). Autonomous vehicle positioning with GPS in urban canyon environments. In IEEE Transactions on Robotics and Automation 19(1), 15-25.

Guidi, G., Beraldin, J., Ciofi, S. and Atzeni, C. (2003). Fusion of range camera and photogrammetry: a systematic procedure for improving 3-d models metric accuracy. In IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics 33(4), 667-676.

Hays, J. and Efros, A. (2008). Im2gps: estimating geographic information from a single image. In IEEE Conference on Computer Vision and Pattern Recognition, 1-8.

He, K., Sun, J. and Tang, X. (2009). Single image haze removal using dark channel prior. In Computer Vision and Pattern Recognition, 1956-1963.

Karpischek, S., Marforio, C., Godenzi, M., Heuel, S. and Michahelles, F. (2009). Swisspeaks–mobile augmented reality to identify mountains. In Workshop at the International Symposium on Mixed and Augmented Reality.

Kostelec, P. and Rockmore, D. (2008). FFTs on the rotation group. In Journal of Fourier Analysis and Applications 14(2), 145-179.

Leotta, M. and Mundy, J. (2011). Vehicle surveillance with a generic, adaptive, 3d vehicle model. In IEEE Transactions on Pattern Analysis and Machine Intelligence 33(7), 1457-1469.

Li, Z., Zhu, Q. and Gold, C. (2005). Digital terrain modeling: principles and methodology. CRC Press.

Luo, J., Joshi, D., Yu, J. and Gallagher, A. (2011). Geotagging in multimedia and computer vision – a survey. In Multimedia Tools and Applications 51, 187-211.

Paek, J., Kim, J. and Govindan, P. (2010). Energy-efficient rate-adaptive GPS-based positioning for smartphones. In Proceedings of the 8th international conference on Mobile systems, applications, and services, MobiSys ’10, 299-314.

¹ http://3dnature.com/wcs6info.html

² http://3dnature.com/vnsinfo.html

³ http://www.panoramio.com/

⁴ http://tev.fbk.eu/marmota/blog/mosaic/

⁵ http://skyrunning.at/en

⁶ http://www.explorersweb.com/everest_k2/news.php?id=19634

⁷ http://cs.wikipedia.org/wiki/Soubor:Liban_K2_sumit_1_resize.JPG

⁸ http://www.explorersweb.com/

⁹ http://www.explorersweb.com/everest_k2/news.php?id=19634

¹⁰ http://www.flickr.com/photos/lorca/3074227829/

¹¹ http://www.geonames.org/

¹² http://sketchup.google.com/

¹³ http://www.openstreetmap.org/

¹⁴ https://venturi.fbk.eu
