Differential Evolution for Adaptive System of Particle Swarm Optimization with Genetic Algorithm

Pham Ngoc Hieu$^1$ and Hiroshi Hasegawa$^2$

$^1$ Functional Control Systems, Graduate School of Engineering and Science, Shibaura Institute of Technology, Saitama, Japan

$^2$ College of Systems Engineering and Science, Shibaura Institute of Technology, Saitama, Japan

Keywords: Adaptive System, Differential Evolution (DE), Genetic Algorithm (GA), Multi-peak Problems, Particle Swarm Optimization (PSO).

Abstract:

A new strategy that uses Differential Evolution (DE) for the Adaptive Plan System of Particle Swarm Optimization (PSO) with Genetic Algorithm (GA), called DE-PSO-APGA, is proposed to solve large-scale optimization problems and to improve convergence towards the optimal solution. The approach combines the global search ability of GA with an Adaptive Plan (AP) for local search. The proposed strategy incorporates concepts from DE and PSO, updating particles not only by DE operators but also by the PSO mechanism for the Adaptive System (AS). DE-PSO-APGA is applied to several multi-dimensional benchmark functions to evaluate its performance, and satisfactory performance is confirmed across these benchmark tests.

1 INTRODUCTION

Several modern heuristic algorithms, in particular population-based Evolutionary Algorithms (EAs), have been developed for solving complex numerical optimization problems. The most popular EA, the Genetic Algorithm (GA) (Goldberg, 1989), has been applied to various multi-peak optimization problems, and its validity has been reported by many researchers. However, it requires a huge computational cost to obtain stable convergence to an optimal solution. To reduce the cost and improve stability, a strategy that combines global and local search methods becomes necessary. Following this strategy, Hasegawa et al. proposed an evolutionary algorithm called the Adaptive Plan system with Genetic Algorithm (APGA) (Hasegawa, 2007).

Among the modern meta-heuristic algorithms, a well-known branch is Particle Swarm Optimization (PSO), introduced by Kennedy and Eberhart (2001). It was developed through simulation of a simplified social system and has been found to be robust in solving optimization problems. Nevertheless, the performance of PSO depends greatly on its parameters, and it often becomes trapped in a local optimum. To resolve this problem, various improved algorithms have been proposed for a variety of optimization problems (Clerc and Kennedy, 2002).

A more recent evolutionary algorithm known as Differential Evolution (DE) has garnered significant attention in the research literature (Storn and Price, 1997). Compared with other techniques and EAs, it requires hardly any parameter tuning and is very efficient and reliable. PSO has memory, so knowledge of good solutions is retained by all particles, whereas in DE previous knowledge of the problem is discarded once the population changes. Moreover, PSO and DE both work with an initial population of solutions. Therefore, combining the search abilities of these methods seems to be a reasonable approach (Das et al., 2008).

In this paper, we propose a new strategy, called DE-PSO-APGA, that uses DE for the Adaptive Plan system of PSO with GA to solve large-scale optimization problems and to improve convergence to the optimal solution.

The remainder of this paper is organized as follows. Section 2 describes the basic concepts of DE, Section 3 explains the algorithm of the proposed strategy (DE-PSO-APGA), and Section 4 discusses convergence to the optimal solution on multi-peak benchmark functions. Finally, Section 5 presents some brief conclusions.

Ngoc Hieu P. and Hasegawa H. Differential Evolution for Adaptive System of Particle Swarm Optimization with Genetic Algorithm. DOI: 10.5220/0004107202590264. In Proceedings of the 4th International Joint Conference on Computational Intelligence (ECTA-2012), pages 259-264. ISBN: 978-989-8565-33-4. Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

2 DIFFERENTIAL EVOLUTION

Differential Evolution (DE) is an EA proposed by Storn and Price (1997). It is a population-based heuristic algorithm that is simple to implement, requires little or no parameter tuning, and is known for its remarkable performance in global optimization over continuous spaces.

2.1 Basic Concepts of DE

DE is similar to other EAs, particularly GA, in that it uses the same evolutionary operators: selection, recombination, and mutation. The significant difference is that DE uses distance and direction information from the current population to guide the search process. The performance of DE depends on how the target vector and the difference vector are manipulated to obtain a trial vector.

2.1.1 Mutation

Mutation is the main operator in DE. In a $D$-dimensional search space, for each target vector $X_{i,G}$, the most useful strategies for generating a mutant vector $V_{i,G}$ are:

DE/rand/1

$$V_{i,G} = X_{r_1,G} + F \cdot (X_{r_2,G} - X_{r_3,G}) \tag{1}$$

DE/best/1

$$V_{i,G} = X_{best,G} + F \cdot (X_{r_2,G} - X_{r_3,G}) \tag{2}$$

DE/target to best/1

$$V_{i,G} = X_{i,G} + F \cdot (X_{best,G} - X_{i,G}) + F \cdot (X_{r_2,G} - X_{r_3,G}) \tag{3}$$

DE/best/2

$$V_{i,G} = X_{best,G} + F \cdot (X_{r_1,G} - X_{r_2,G}) + F \cdot (X_{r_3,G} - X_{r_4,G}) \tag{4}$$

DE/rand/2

$$V_{i,G} = X_{r_1,G} + F \cdot (X_{r_2,G} - X_{r_3,G}) + F \cdot (X_{r_4,G} - X_{r_5,G}) \tag{5}$$

where $r_1, r_2, r_3, r_4, r_5 \in [1, 2, \ldots, NP]$ are mutually exclusive, randomly chosen integers for an initial population of size $NP$, all different from the base index $i$. $G$ denotes the generation, and $F > 0$ is a scaling factor that controls the amplification of the difference vectors. $X_{best,G}$ is the individual vector with the best fitness (lowest objective function value for a minimization problem) in the population.
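As an illustration of Eqs. (1) and (2), the following minimal Python sketch builds a mutant vector from a population stored as a list of lists. The helper names (`pick_distinct`, `de_rand_1`, `de_best_1`) and the 0-based indexing are assumptions made for this example and do not come from the paper.

```python
import random

def pick_distinct(np_size, i, count, rng):
    """Return `count` mutually distinct indices in range(np_size), all different from i."""
    candidates = [k for k in range(np_size) if k != i]
    return rng.sample(candidates, count)

def de_rand_1(pop, i, F, rng):
    """DE/rand/1, Eq. (1): V = X_r1 + F * (X_r2 - X_r3)."""
    r1, r2, r3 = pick_distinct(len(pop), i, 3, rng)
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]

def de_best_1(pop, fitness, i, F, rng):
    """DE/best/1, Eq. (2): V = X_best + F * (X_r2 - X_r3), best = lowest objective value."""
    best = min(range(len(pop)), key=lambda k: fitness[k])
    r2, r3 = pick_distinct(len(pop), i, 2, rng)
    return [a + F * (b - c) for a, b, c in zip(pop[best], pop[r2], pop[r3])]
```

The other strategies, Eqs. (3) to (5), follow the same pattern with a different base vector and one additional difference term.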

DE/rand/2/dir

$$V_{i,G} = X_{r_1,G} + F/2 \cdot (X_{r_1,G} - X_{r_2,G} - X_{r_3,G}) \tag{6}$$

DE/rand/2/dir (Feoktistov and Janaqi, 2004) incorporates the objective function information to guide the direction of the donor vectors. $X_{r_1,G}$, $X_{r_2,G}$, and $X_{r_3,G}$ are distinct population members such that $f(X_{r_1,G}) \le \{ f(X_{r_2,G}), f(X_{r_3,G}) \}$.

2.1.2 Crossover

To enhance the potential diversity of the population, a crossover operation is introduced. The donor vector exchanges its components with the target vector to form the trial vector:

$$U_{ji,G} = \begin{cases} V_{ji,G}, & (rand_j \le CR) \ \text{or} \ (j = j_{rand}) \\ X_{ji,G}, & (rand_j > CR) \ \text{and} \ (j \ne j_{rand}) \end{cases} \tag{7}$$

where $j = [1, 2, \ldots, D]$; $rand_j \in [0.0, 1.0]$; $CR$ is the crossover probability, which takes a value in the range $[0.0, 1.0]$; and $j_{rand} \in [1, 2, \ldots, D]$ is a randomly chosen index.
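The crossover rule of Eq. (7) can be sketched in a few lines of Python. This is a minimal illustration; the function name `binomial_crossover` and the use of one fresh random number per component are assumptions for the example.

```python
import random

def binomial_crossover(target, donor, CR, rng):
    """Build a trial vector: take the donor component when rand_j <= CR or j == j_rand,
    otherwise keep the target component."""
    D = len(target)
    j_rand = rng.randrange(D)  # guarantees at least one donor component
    trial = []
    for j in range(D):
        if rng.random() <= CR or j == j_rand:
            trial.append(donor[j])
        else:
            trial.append(target[j])
    return trial
```

The forced index `j_rand` ensures that the trial vector differs from the target in at least one component even when `CR` is very small.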

2.1.3 Selection

Selection is performed to determine whether the target vector or the trial vector survives to the next generation. The selection operation is described as:

$$X_{i,G+1} = \begin{cases} U_{i,G}, & f(U_{i,G}) \le f(X_{i,G}) \\ X_{i,G}, & f(U_{i,G}) > f(X_{i,G}) \end{cases} \tag{8}$$
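Eq. (8) is a greedy one-to-one replacement, which the short Python sketch below makes explicit. The names `select` and `sphere` are illustrative assumptions; `sphere` is only a stand-in objective and is not one of the paper's benchmark functions.

```python
def sphere(x):
    """Stand-in objective for the example: sum of squares, minimum 0 at x = 0."""
    return sum(xi * xi for xi in x)

def select(target, trial, f):
    """Eq. (8): the trial vector survives if it is at least as good as the target."""
    return trial if f(trial) <= f(target) else target
```

Because ties are resolved in favor of the trial vector, the population can still move across flat regions of the objective function.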

2.2 Variants of DE

This section introduces the most prominent DE variants, which have been developed and have proven competitive with the best-known real-parameter optimizers. Some of these variants are:

DE using Arithmetic Recombination (Price et al., 2005)

$$V_{i,G} = X_{i,G} + k_i \cdot (X_{r_1,G} - X_{i,G}) + F' \cdot (X_{r_2,G} - X_{r_3,G}) \tag{9}$$

where $k_i$ is the combination coefficient, which can be a constant or a random variable drawn from $[0.0, 1.0]$, and $F' = k_i \cdot F$ is a new constant parameter.

DE/rand/1/either-or

$$V_{i,G} = \begin{cases} X_{r_1,G} + F \cdot (X_{r_2,G} - X_{r_3,G}), & rand_i(0,1) < p_F \\ X_{r_0,G} + k \cdot (X_{r_1,G} + X_{r_2,G} - 2X_{r_0,G}), & \text{otherwise} \end{cases} \tag{10}$$

Price et al. (2005) proposed this state-of-the-art scheme, in which trial vectors that are pure mutants occur with probability $p_F$ and those that are pure recombinants occur with probability $1 - p_F$ ($0.0 < p_F < 1.0$).


Note that $p_F$ is a parameter that determines the relative importance of the mutation and arithmetic recombination schemes. Price et al. recommended a value of 0.4 for it, and $k = 0.5 \cdot (F + 1)$ as a good choice for a given $F$.
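The either-or rule of Eq. (10), with the recommended $p_F = 0.4$ and $k = 0.5 \cdot (F + 1)$, could be sketched as follows. The function name and the choice to draw $r_0, \ldots, r_3$ as four distinct indices different from $i$ are assumptions made for this example.

```python
import random

def either_or_mutant(pop, i, F, rng, p_F=0.4):
    """Eq. (10): pure mutant with probability p_F, pure recombinant otherwise."""
    k = 0.5 * (F + 1.0)  # recommended recombination coefficient for a given F
    candidates = [idx for idx in range(len(pop)) if idx != i]
    r0, r1, r2, r3 = rng.sample(candidates, 4)
    if rng.random() < p_F:
        # mutation branch: X_r1 + F * (X_r2 - X_r3)
        return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]
    # recombination branch: X_r0 + k * (X_r1 + X_r2 - 2 * X_r0)
    return [z + k * (a + b - 2.0 * z) for z, a, b in zip(pop[r0], pop[r1], pop[r2])]
```

Both branches leave a fully converged population unchanged, since every difference term vanishes when all members are identical.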

3 DE-PSO-APGA STRATEGY

With a view to global search, we propose a new algorithm, named DE-PSO-APGA, that uses DE for the Adaptive Plan system of PSO with GA. DE-PSO-APGA takes the search direction from the PSO operator and feeds it into the adaptive system of APGA, using alternative operators of the DE scheme. For a verification of the APGA search process, see (Hasegawa, 2007).

3.1 Algorithm

The proposed DE-PSO-APGA starts like the usual DE algorithm up to the point where the trial vector is generated. If the trial vector satisfies the condition given by (8), the algorithm enters the PSO operator to obtain the direction and generates a new candidate solution with the adaptive system of APGA. Including the APGA process in turn helps to maintain the diversity of the population and to reach a global optimal solution.

Pseudocode of DE-PSO-APGA

    begin
        initialize population;
        fitness evaluation;
        repeat until (termination) do
            DE update strategies;
            PSO activated;
            APGA process;
        end do
        renew population;
    end.

DE Update Strategies

• DE-PSO-APGA1 by (1);

• DE-PSO-APGA2 by (2);

• DE-PSO-APGA3 by (3);

• DE-PSO-APGA4 by (4);

• DE-PSO-APGA5 by (5);

• DE-PSO-APGA6 by (6);

• DE-PSO-APGA7 by (9);

• DE-PSO-APGA8 by (10);

PSO Operator

We consider here the conventional basic model of PSO (Kennedy and Eberhart, 2001). In this model, each particle in the swarm holds its current position $x_i$ and velocity $v_i$ in the search space ($i$ is the index of the particle). Each particle moves towards the global optimal solution by updating its next velocity from its current position, based on the best solution it has achieved so far, $p_{ij}$, and the best solution of all particles, $g_j$ ($j = [1, 2, \ldots, D]$, where $D$ is the dimension of the solution vector), according to the following equation:

$$v_{ij}^{G+1} = w v_{ij}^{G} + c_1 r_1 \left( p_{ij}^{G} - X_{ij}^{G} \right) + c_2 r_2 \left( g_{j}^{G} - X_{ij}^{G} \right) \tag{11}$$

where $w$ is the inertia weight; $c_1$ and $c_2$ are the cognitive and social acceleration coefficients, respectively; and $r_1$ and $r_2$ are random numbers uniformly distributed in the range $[0.0, 1.0]$.
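The velocity update of Eq. (11) for a single particle might look as follows in Python. The function name and the decision to draw $r_1$ and $r_2$ afresh for every component $j$ are assumptions of this sketch, since the text does not state how often they are drawn.

```python
import random

def pso_velocity(v, x, p_best, g_best, w, c1, c2, rng):
    """Eq. (11): inertia term + cognitive pull towards p_best + social pull towards g_best."""
    new_v = []
    for j in range(len(x)):
        r1 = rng.random()  # assumption: fresh random numbers per component
        r2 = rng.random()
        new_v.append(w * v[j]
                     + c1 * r1 * (p_best[j] - x[j])
                     + c2 * r2 * (g_best[j] - x[j]))
    return new_v
```

When a particle already sits at both its personal best and the global best, only the inertia term remains and the velocity shrinks by the factor `w`.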

In our strategy, the concept of time-varying parameters has been adopted (Shi and Eberhart, 1999). The inertia weight $w$ in (11) decreases linearly with the generation:

$$w = (w_{max} - w_{min}) \frac{iter_{max} - iter}{iter_{max}} + w_{min} \tag{12}$$

where $iter$ is the current iteration number and $iter_{max}$ is the maximum number of iterations. The maximal and minimal weights $w_{max}$ and $w_{min}$ are set to 0.9 and 0.4, respectively, values known from experience.
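As a quick check of Eq. (12), the schedule can be written as a one-line Python function. The function and argument names are illustrative.

```python
def inertia_weight(it, it_max, w_max=0.9, w_min=0.4):
    """Eq. (12): linear decrease from w_max at it = 0 to w_min at it = it_max."""
    return (w_max - w_min) * (it_max - it) / it_max + w_min
```

With the settings above and 1500 generations, the weight starts at 0.9, passes through 0.65 at generation 750, and ends at 0.4.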

The acceleration coefficients are expressed as:

$$c_1 = (c_{1f} - c_{1i}) \frac{iter_{max} - iter}{iter_{max}} + c_{1i} \tag{13}$$

$$c_2 = (c_{2f} - c_{2i}) \frac{iter_{max} - iter}{iter_{max}} + c_{2i} \tag{14}$$

where $c_{1i}$, $c_{1f}$, $c_{2i}$ and $c_{2f}$ are the initial and final values of the acceleration coefficients. The most effective values are 2.5 for $c_{1i}$ and $c_{2f}$ and 0.5 for $c_{1f}$ and $c_{2i}$, as in (Eberhart and Shi, 2000).
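Eqs. (13) and (14) share one form, so a single helper suffices to evaluate them. The sketch below implements the formulas exactly as printed; the function name is an assumption. Evaluated this way, $c_1$ equals $c_{1f}$ at $iter = 0$ and $c_{1i}$ at $iter = iter_{max}$, which is worth keeping in mind when mapping the subscripts onto "initial" and "final".

```python
def accel_coeff(it, it_max, c_init, c_final):
    """Eqs. (13)/(14) as printed: (c_f - c_i) * (it_max - it) / it_max + c_i."""
    return (c_final - c_init) * (it_max - it) / it_max + c_init

def coefficients(it, it_max):
    """c1 and c2 with the settings c_1i = c_2f = 2.5 and c_1f = c_2i = 0.5."""
    c1 = accel_coeff(it, it_max, c_init=2.5, c_final=0.5)
    c2 = accel_coeff(it, it_max, c_init=0.5, c_final=2.5)
    return c1, c2
```

With these settings the two coefficients always sum to 3.0, so the schedule shifts weight between the cognitive and social terms without changing their total.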

APGA Process

The Adaptive Plan with Genetic Algorithm (APGA) (Hasegawa, 2007) combines the global search ability of a GA with an Adaptive Plan (AP) that has excellent local search ability, and is superior to other EAs such as Memetic Algorithms (MAs) (Smith et al., 2005). The APGA concept differs from general GA-based EAs in how it handles design variable vectors (DVs). EAs generally encode DVs into the genes of a chromosome and handle them through GA operators. APGA, however, completely separates the DVs of the global search and local search methods: it encodes the control variable vectors (CVs) of the AP into its genes on the Adaptive System (AS). This separation of DVs and chromosomes also solves the MA problem of breaking chromosomes. The CVs steer the behavior of the AP for a global search and are renewed via genetic operations by estimating fitness values. For a local search, the AP generates the next values of the DVs from the CVs, the response value vectors (RVs), and the current values of the DVs according to the formula:

$$X_{i,G+1} = X_{i,G} + AP(C_G, R_G) \tag{15}$$

where $AP()$, $X$, $C$, $R$, and $G$ denote the AP function, the DVs, the CVs, the RVs and the generation, respectively.

3.2 Adaptive Plan (AP)

The AP must realize a local search process by applying various heuristic rules. In this paper, the plan introduces a DV generation formula that uses the velocity update of the PSO operator as a heuristic rule. This rule is effective for convex function problems, and a multi-peak problem is a combination of convex functions. The plan uses the following equations:

$$AP(C_G, R_G) = scale \cdot SP \cdot PSO \cdot (\nabla R) \tag{16}$$

$$SP = 2 \cdot C_G - 1 \tag{17}$$

where $\nabla R$ denotes the sensitivity of the RVs, the constriction factor $scale$ is randomly selected from a uniform distribution in $[0.1, 1.0]$, and $PSO$ is the velocity update given by (11).

The step size $SP$ is defined by the CVs and controls the global behavior to prevent the search from falling into a local optimum. $C = [c_{i,j}, \ldots, c_{i,p}]$ with $0.0 \le c_{i,j} \le 1.0$ is used so that the step size can take either sign and thus change the direction to improve or worsen the objective function. $C$ is encoded into a chromosome by 10-bit strings (shown in Figure 1). Here $i$, $j$ and $p$ are the individual number, the design variable number and its size, respectively.

[Figure 1: the chromosome of individual $i$ consists of two 10-bit strings. Step size $c_{i,1}$ of $x_1$: 0 0 0 1 0 1 0 0 0 0, giving $c_{i,1} = 80/1023 = 0.07820$. Step size $c_{i,2}$ of $x_2$: 0 0 0 0 0 1 0 1 0 0, giving $c_{i,2} = 20/1023 = 0.01955$.]

Figure 1: Encoding into genes of a chromosome.
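The decoding shown in Figure 1 and the step size of Eq. (17) can be reproduced with a short Python sketch. The function names are illustrative, and the bit strings are passed as text without spaces.

```python
def decode_cv(bits):
    """Map a bit string to a control variable c in [0, 1]; a 10-bit string is divided by 1023."""
    return int(bits, 2) / (2 ** len(bits) - 1)

def step_size(c):
    """Eq. (17): SP = 2 * c - 1, which lies in [-1, 1]."""
    return 2.0 * c - 1.0

c_i1 = decode_cv("0001010000")  # 80 / 1023
c_i2 = decode_cv("0000010100")  # 20 / 1023
```

For the two genes in Figure 1 this gives step sizes of about -0.84 and -0.96, so both design variables would be moved in the negative direction of the term they multiply.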

3.3 GA Operators

3.3.1 Selection

Selection is performed using a tournament strategy to maintain the diversity of individuals and avoid premature convergence. A tournament size of 2 is used.
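A tournament of size 2 can be sketched as below. This is a minimal illustration that assumes minimization (the lower objective value wins) and contenders drawn without replacement; neither detail is specified in the text.

```python
import random

def tournament_select(fitness, rng, size=2):
    """Draw `size` distinct individuals at random and return the index of the best one."""
    contenders = rng.sample(range(len(fitness)), size)
    return min(contenders, key=lambda k: fitness[k])
```

With a tournament size of 2 the selection pressure is mild: only the single worst individual can never be selected, which is consistent with the stated goal of preserving diversity.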

3.3.2 Elite Strategy

An elite strategy, where the best individual survives in

the next generation, is adopted during each generation

process. It is necessary to assume that the best indi-

vidual, i.e., as for the elite individual, generates two

behaviors of AP by updating DVs with AP, not GA

operators. Therefore, its strategy replicates the best

individual to two elite individuals, and keeps them to

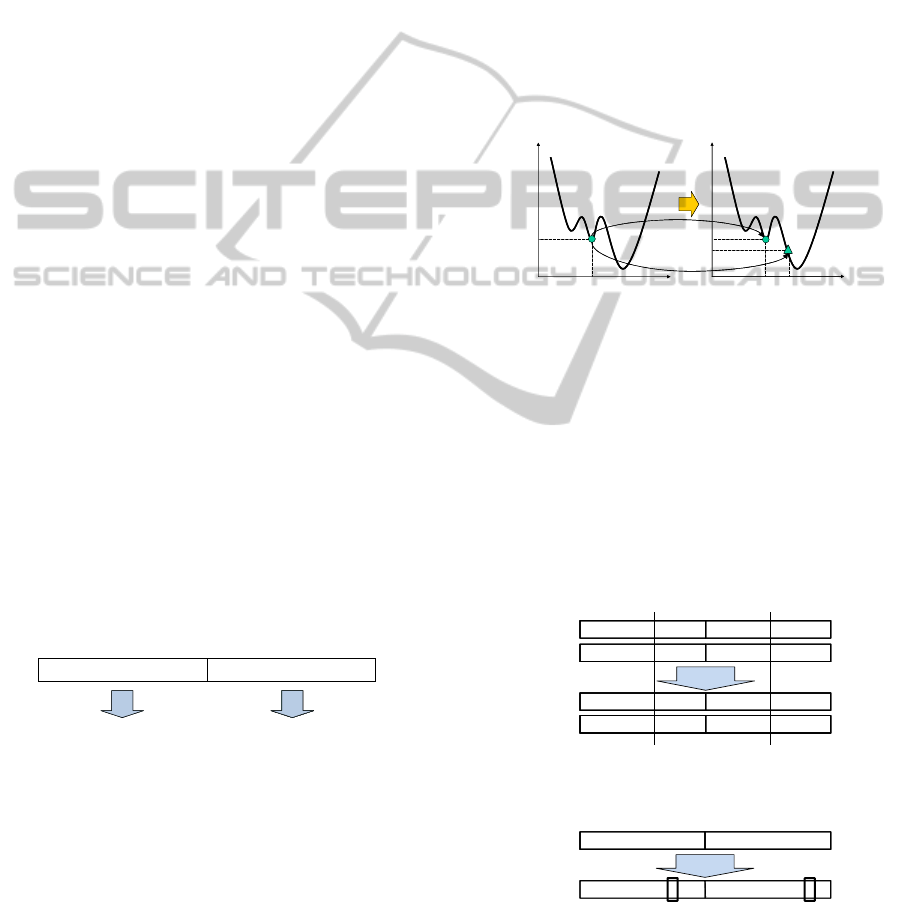

next generation. As shown in Figure 2, DVs of one of

them (∆ symbol) is renewed by AP, and its CVs which

are coded into chromosome arent changed by GA op-

erators. Another one (◦ symbol) is that both DVs and

CVs are not renewed, and are kept to next generation

as an elite individual at the same search point.

[Figure 2: two plots of $f(x)$ against $x$, one for generation $t$ and one for generation $t+1$, showing the elite point $x_{elite}$ with $f(x_{elite})$ and the AP-updated point $x_{new}$ with $f(x_{new})$.]

Figure 2: Elite strategy.

3.3.3 Crossover and Mutation

To pick up the best values of each CV, a single-point crossover is applied to the string of each CV. For the string of the whole chromosome, this can be regarded as a uniform crossover, as shown in Figure 3(a).

Mutation is performed on each string at the mutation ratio in every generation, and serves to keep the strings diverse, as shown in Figure 3(b).

[Figure 3: (a) single-point crossover applied separately to the 10-bit strings of step size $c_{i,1}$ of $x_1$ and step size $c_{i,2}$ of $x_2$ of Individual A and Individual B; (b) mutation flipping individual bits in the two strings of Individual A.]

Figure 3: Crossover and Mutation.
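The two operators of Figure 3 can be sketched on bit strings as follows. The function names, the representation of a chromosome as a list of bit strings (one per CV), and the per-bit application of the mutation ratio are assumptions of this example.

```python
import random

def crossover_cv(a, b, rng):
    """Single-point crossover on the bit strings of one CV; returns two children."""
    point = rng.randrange(1, len(a))  # cut strictly inside the string
    return a[:point] + b[point:], b[:point] + a[point:]

def crossover_chromosome(chrom_a, chrom_b, rng):
    """Apply an independent single-point crossover to the string of each CV."""
    pairs = [crossover_cv(a, b, rng) for a, b in zip(chrom_a, chrom_b)]
    return [p[0] for p in pairs], [p[1] for p in pairs]

def mutate(bits, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return "".join(("1" if ch == "0" else "0") if rng.random() < rate else ch
                   for ch in bits)
```

Cutting every CV string at its own point is what makes the operation behave like a multi-point crossover over the whole chromosome, in line with the remark on Figure 3(a).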


3.3.4 Recombination

Under either of the following conditions, the genetic information on the chromosome of an individual is recombined by a uniform random function.

• One fitness value occupies 80% of the fitness of all individuals;

• One chromosome occupies 80% of the population.

4 NUMERICAL EXPERIMENTS

Each numerical experiment is run for 25 trials per function, with a randomly varied initial seed in every trial. The population size is 100 individuals and the terminal generation is 1500. The parameter settings for the experiments are given in Table 1.

4.1 Benchmark Functions

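We estimate the stability of the convergence to the optimal solution by using five benchmark functions: Rastrigin (RA), Griewank (GR), Ridge (RI), Ackley (AC), and Rosenbrock (RO). These functions are given as follows:

$$\text{RA}: \ f_1 = 10n + \sum_{i=1}^{n} \left\{ x_i^2 - 10\cos(2\pi x_i) \right\} \tag{18}$$

$$\text{RI}: \ f_2 = \sum_{i=1}^{n} \left( \sum_{j=1}^{i} x_j \right)^2 \tag{19}$$

$$\text{GR}: \ f_3 = 1 + \sum_{i=1}^{n} \frac{x_i^2}{4000} - \prod_{i=1}^{n} \cos\left( \frac{x_i}{\sqrt{i}} \right) \tag{20}$$

$$\text{AC}: \ f_4 = -20\exp\left( -0.2\sqrt{\frac{1}{n}\sum_{i=1}^{n} x_i^2} \right) - \exp\left( \frac{1}{n}\sum_{i=1}^{n} \cos(2\pi x_i) \right) + 20 + e \tag{21}$$

$$\text{RO}: \ f_5 = \sum_{i=1}^{n} \left[ 100\left( x_{i+1} + 1 - (x_i + 1)^2 \right)^2 + x_i^2 \right] \tag{22}$$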
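For reference, Eqs. (18) to (22) translate directly into plain Python. The function names are illustrative. One assumption is made for RO: the sum runs over $i = 1, \ldots, n-1$ so that $x_{i+1}$ is always defined, which deviates from the upper limit printed in (22).

```python
import math

def rastrigin(x):
    return 10.0 * len(x) + sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x)

def ridge(x):
    total, partial = 0.0, 0.0
    for xi in x:
        partial += xi               # running sum x_1 + ... + x_i
        total += partial * partial
    return total

def griewank(x):
    s = sum(xi * xi for xi in x) / 4000.0
    p = 1.0
    for i, xi in enumerate(x, start=1):
        p *= math.cos(xi / math.sqrt(i))
    return 1.0 + s - p

def ackley(x):
    n = len(x)
    s1 = sum(xi * xi for xi in x) / n
    s2 = sum(math.cos(2.0 * math.pi * xi) for xi in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e

def rosenbrock_shifted(x):
    # assumption: sum over i = 1..n-1 so that x[i + 1] exists
    return sum(100.0 * (x[i + 1] + 1.0 - (x[i] + 1.0) ** 2) ** 2 + x[i] ** 2
               for i in range(len(x) - 1))
```

All five functions evaluate to zero at the origin, up to floating-point rounding in the Ackley function.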

Table 2 summarizes their characteristics and the design range of the DVs. The terms epistasis, peaks, and steep denote the dependence relation among the DVs, the presence of multiple peaks, and the level of steepness, respectively. All functions are minimized to zero ($ESP = 1.7 \times 10^{-308}$) when the optimal DVs $X = 0$ are obtained.

Table 1: Setting parameters for solving Benchmarks.

| Operator | Control Parameter | Set value |
|---|---|---|
| DE | Scaling factor | $F \in [0.1, 1.0]$ |
| DE | Crossover | $CR = 0.5$ |
| PSO | Inertia weight | $w_{max} = 0.9$, $w_{min} = 0.4$ |
| PSO | Coefficients | $c_{1i} = c_{2f} = 2.5$, $c_{1f} = c_{2i} = 0.5$ |
| GA | Selection | 0.1 |
| GA | Crossover | 0.8 |
| GA | Mutation | 0.01 |

Population size 100; Terminal generation 1500

Table 2: Benchmark functions and design range.

| Func | Epistasis | Peaks | Steep | DVs range |
|---|---|---|---|---|
| RA | No | Yes | Average | [-5.12, 5.12] |
| RI | Yes | No | Average | [-100, 100] |
| GR | Yes | Yes | Small | [-600, 600] |
| AC | No | Yes | Average | [-32, 32] |
| RO | Yes | No | Big | [-30, 30] |

4.2 Experiment Results

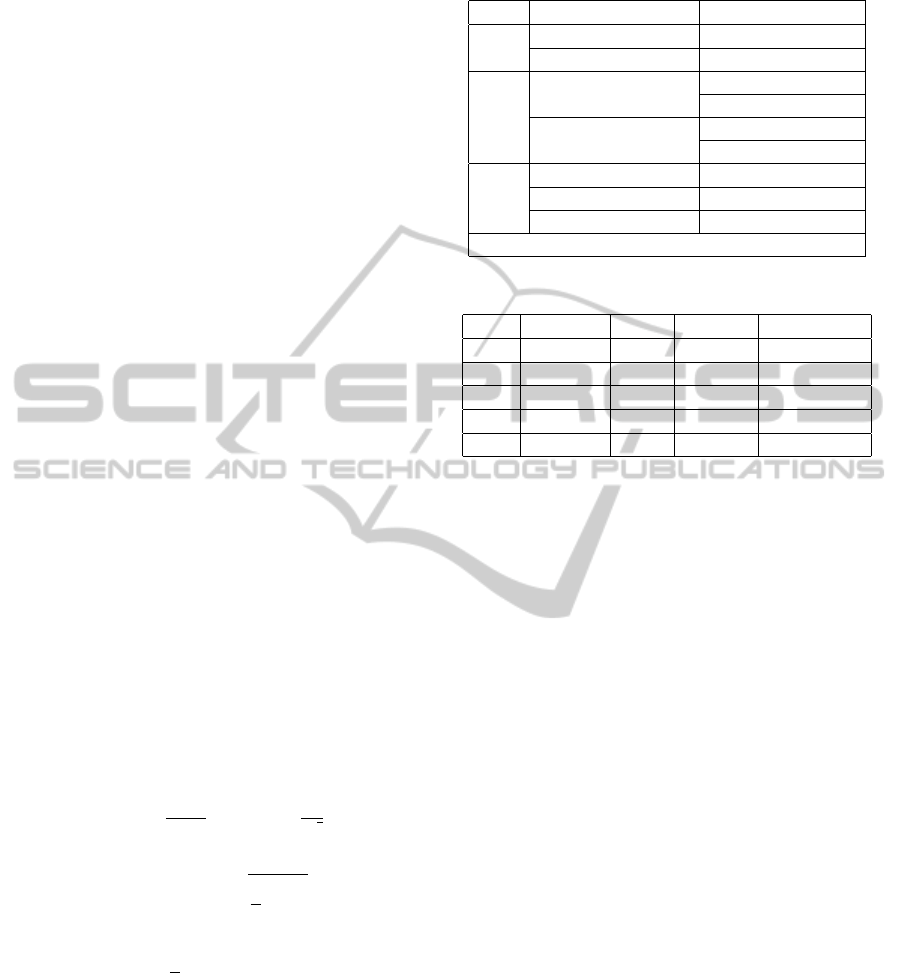

The experiment results for the RO function, averaged over 25 trials, are shown in Table 3. The solutions of all strategies reach their global optimum solutions. When the success rate of the optimal solution is not 100%, "-" is shown.

From the results via optimization experiments, we

employed DE-PSO-APGA2 using DE/best/1 update

strategy for the DE-PSO-APGA algorithm, and rec-

ommended a value 0.1 for the scaling factor F. Ad-

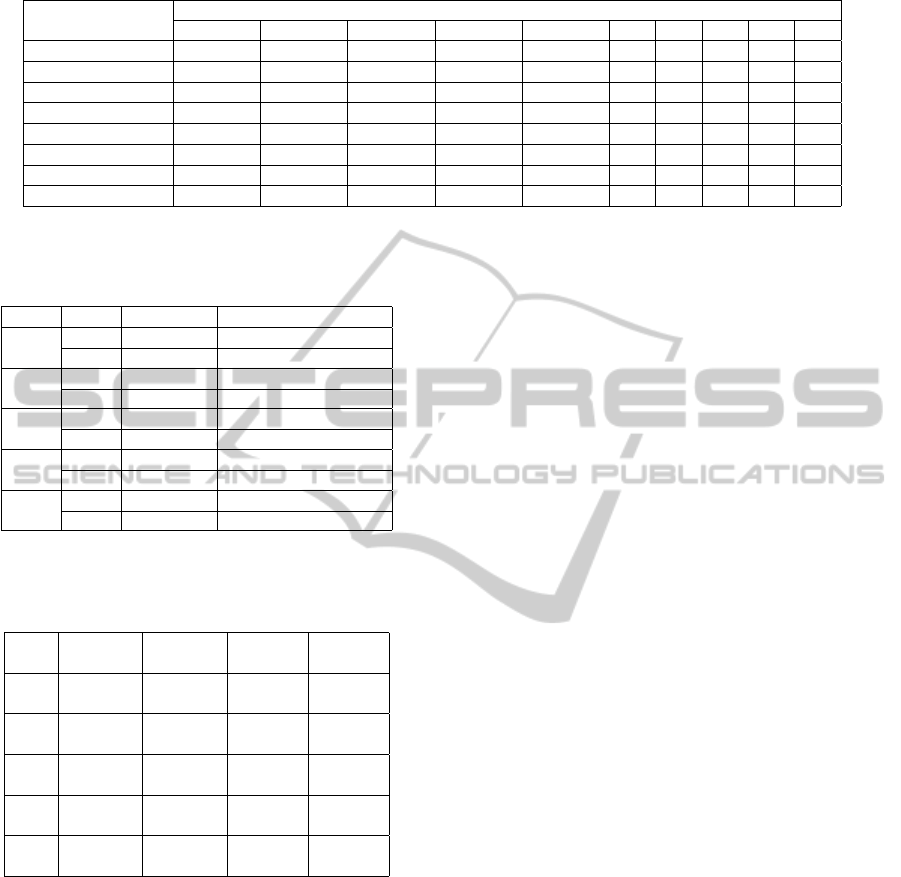

ditionally, the average results of all benchmark func-

tions with 30 and 100 dimensions by the DE-PSO-

APGA are given in Table 4. The success rate of

optimal solution is 100% with all benchmark func-

tions. ”Mean” indicates average of minimum values

obtained, and ”Std.” stands for standard deviation.

In summary, its validity confirms that this strategy

can reduce the computation cost and improve the sta-

bility of the convergence to the optimal solution.

4.3 Comparison

Table 5 compares PSO, DE, DE-PSO (Pant et al., 2008) and DE-PSO-APGA. DE-PSO-APGA matched or outperformed PSO, DE and DE-PSO on all benchmark functions. It is therefore desirable to adopt this method as a new evolution strategy.

Overall, DE-PSO-APGA was capable of attaining robustness, high quality, low calculation cost and efficient performance on many benchmark problems.

Table 3: Average of minimum values obtained over 25 trials with RO function (D = 30, population size 50, CR = 0.5). Columns give the scaling factor F.

| Strategy | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|
| DE-PSO-APGA1 | - | - | - | - | - | - | - | - | - | - |
| DE-PSO-APGA2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | - | - | - |
| DE-PSO-APGA3 | 2.96e-30 | 5.06e-30 | 2.9e-197 | 2.9e-197 | 2.8e-197 | 0 | 0 | - | - | - |
| DE-PSO-APGA4 | 0 | 0 | 0 | 0 | 0 | - | - | - | - | - |
| DE-PSO-APGA5 | - | - | - | - | - | - | - | - | - | - |
| DE-PSO-APGA6 | 5.31e-30 | 1.6e-118 | - | - | - | - | - | - | - | - |
| DE-PSO-APGA7 | - | - | - | - | - | - | - | - | - | - |
| DE-PSO-APGA8 | - | - | - | - | - | - | - | - | - | - |

Table 4: Results by DE-PSO-APGA with population size 100 (F = 0.1, CR = 0.5). "Mean" indicates average of minimum values obtained, "Std." stands for standard deviation.

| Func | Dim. | Max Gen. | Mean (Std.) |
|---|---|---|---|
| RA | 30 | 500 | 0.00e+00 (0.00e+00) |
| RA | 100 | 1500 | 0.00e+00 (0.00e+00) |
| RI | 30 | 500 | 0.00e+00 (0.00e+00) |
| RI | 100 | 1500 | 0.00e+00 (0.00e+00) |
| GR | 30 | 500 | 0.00e+00 (0.00e+00) |
| GR | 100 | 1500 | 0.00e+00 (0.00e+00) |
| AC | 30 | 500 | 4.44e-16 (0.00e+00) |
| AC | 100 | 1500 | 4.44e-16 (0.00e+00) |
| RO | 30 | 500 | 0.00e+00 (0.00e+00) |
| RO | 100 | 1500 | 0.00e+00 (0.00e+00) |

Table 5: Comparison results of PSO, DE, DE-PSO and DE-PSO-APGA (D = 30, population size 30, max generation 3000).

| Func | PSO | DE | DE-PSO | DE-PSO-APGA |
|---|---|---|---|---|
| RA | 37.82 (7.456) | 2.531 (5.19) | 1.614 (3.885) | 0 (0) |
| RI | - (-) | - (-) | - (-) | 0 (0) |
| GR | 0.018 (0.023) | 0 (0) | 0 (0) | 0 (0) |
| AC | 1.0e-08 (1.9e-08) | 7.3e-15 (7.7e-16) | 3.7e-15 (0) | 4.4e-16 (0.0e+0) |
| RO | 81.27 (41.22) | 31.14 (17.12) | 24.2 (12.31) | 0 (0) |

5 CONCLUSIONS

To overcome computational complexity, a new strategy called DE-PSO-APGA, which uses DE for the Adaptive Plan system of PSO with GA, has been proposed to solve large-scale optimization problems and to improve convergence to the optimal solution. We verified the effectiveness of DE-PSO-APGA through numerical experiments on five benchmark functions.

These experiments confirm that DE-PSO-APGA dramatically reduces the calculation cost and improves convergence towards the optimal solution.

REFERENCES

Clerc, M. and Kennedy, J. (2002). The particle swarm - explosion, stability and convergence in a multidimensional complex space. In IEEE Trans. Evol. Comput.

Das, S., Abraham, A., and Konar, A. (2008). Particle swarm optimization and differential evolution algorithms: Technical analysis, applications and hybridization perspectives. In Studies in Computational Intelligence (SCI).

Eberhart, R. C. and Shi, Y. (2000). Comparing inertia weights and constriction factors in particle swarm optimization. In Proc. Congr. Evol. Comput.

Feoktistov, V. and Janaqi, S. (2004). Generalization of the strategies in differential evolution. In Proc. 18th IPDPS.

Goldberg, D. E. (1989). Genetic Algorithms in Search Optimization and Machine Learning. Addison-Wesley.

Hasegawa, H. (2007). Adaptive plan system with genetic algorithm based on synthesis of local and global search method for multi-peak optimization problems. In 6th EUROSIM Congress on Modelling and Simulation.

Kennedy, J. and Eberhart, R. (2001). Swarm Intelligence. Morgan Kaufmann Publishers.

Pant, M., Thangaraj, R., Grosan, C., and Abraham, A. (2008). Hybrid differential evolution - particle swarm optimization algorithm for solving global optimization problems. In Third International Conference on Digital Information Management (ICDIM).

Price, K., Storn, R., and Lampinen, J. (2005). Differential Evolution - A Practical Approach to Global Optimization. Springer, Berlin, Germany.

Shi, Y. and Eberhart, R. C. (1999). Empirical study of particle swarm optimization. In Proc. IEEE Int. Congr. Evol. Comput.

Smith, J. E., Hart, W. E., and Krasnogor, N. (2005). Recent Advances in Memetic Algorithms. Springer.

Storn, R. and Price, K. (1997). Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces. In Journal Global Optimization.
