A Pilot Panel Study in User-centered Design and Evaluation of Real-time Adaptable Emotional Virtual Environments

Valeria Carofiglio, Nicola Gallo and Fabio Abbattista

Dipartimento di Informatica, Università di Bari, Bari, Italy

Keywords: Brain-computer Interface, Emotional Adaptable Virtual Environments.

Abstract: User-centered evaluation (UCE) is an essential component of developing any interactive application. Usability, perceived usefulness and appropriateness of adaptation are the three most commonly assessed variables of the user experience. However, to validate a design, new interaction paradigms call for exploring new variables that account for users' needs and preferences. This is especially important for applications as complex and innovative as Adaptable Virtual Environments (AVE). In this context, a good design should manage the user's emotional level as a UCE activity, to obtain a more engaging overall user experience. In this work we overcome the weaknesses of traditional methods by employing a Brain-Computer Interface (BCI) to collect additional information on users' needs and preferences. A pilot study is conducted to determine whether (i) the BCI is a suitable technique for evaluating the emotional experience of AVE users and (ii) the contents of a given AVE could be improved so as to result in high subject agreement in terms of elicited emotion.

1 INTRODUCTION

User-based evaluation is an essential component of developing any interactive application, and it is especially important for applications such as Adaptable Virtual Environments (AVE). Whereas classical usability studies are based on functional tasks, we focus on fostering a more engaging overall experience by exploiting the user's emotional level as a powerful engine of the interaction experience (Emotional Adaptable Virtual Environment, EAVE).

Traditional methods for assessing the user experience, such as self-reports or interviews, are not ideal within EAVE because they rely either on sampling approaches or on the users' perception of the environment. Methods that capture the interaction experience unconsciously and continuously (e.g. experience logging) may also be troublesome, as they do not collect subjective feedback from (potential) users. The field of Brain-Computer Interfaces (BCI) (van Gerven et al., 2009) has recently witnessed an explosion of systems for studying human emotion through the acquisition and processing of physiological signals (Kim et al., 2004). A BCI is a direct communication pathway between the brain and an external device. Several researchers (Murugappan et al., 2007; Bos, 2006) have shown that it is possible to extract emotional cues from electroencephalography (EEG) measurements, which thus offer a way to investigate the emotional activity of a subject beyond his or her conscious and controllable behaviour. An important distinction is made between two dimensions of emotion: valence (from negative to positive) and arousal (from calm to excited) (Russell, 2003). Researchers have investigated how changes along these two dimensions modulate the EEG signals and have determined that the position of an emotion in this two-dimensional plane can be derived from EEG data (Chanel et al., 2006; Heller et al., 1997).
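To make the two-dimensional model concrete, the sketch below places a (valence, arousal) estimate into one of the four quadrants of the plane. It assumes both coordinates are already normalised to [-1, 1] with 0 as the neutral point; this scaling, the quadrant labels and the example coordinates are illustrative assumptions, not values taken from the cited studies.

```python
def circumplex_quadrant(valence: float, arousal: float) -> str:
    """Return the quadrant of the valence-arousal plane containing a point.

    Assumption: both coordinates are normalised to [-1, 1], 0 being neutral.
    Points lying on an axis are assigned to the non-negative side.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    side = "positive" if valence >= 0 else "negative"
    level = "high-arousal" if arousal >= 0 else "low-arousal"
    return f"{level} {side}"


# Hypothetical coordinates (not measured data): sadness is usually placed at
# negative valence and low arousal, fear and anger at negative valence and
# high arousal.
print(circumplex_quadrant(-0.6, -0.4))  # low-arousal negative
print(circumplex_quadrant(-0.7, 0.8))   # high-arousal negative
```

Note that a single arousal score, such as the excitement measures used later in this paper, only locates a point along one axis of this plane.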

Viewing serious games both as one of the most representative examples of EAVE and as elicitors of complex combinations of user emotions, we explore ongoing research on the successful realization of the affective loop, in which “the system should involve users in an emotional, physical interactional process” (Leite et al., 2010). To achieve a good design, the phases of emotion elicitation, affect detection and modeling, and affect-driven system adaptation are critical. In this view, we propose a user-centered approach, based on a standard BCI, to design and support the emotional user experience within EAVE. The expected result is a dynamic increase in the customization of the interaction, and therefore an improvement in user engagement; we focus on how self-induced emotions could be utilized in a BCI paradigm (real-time data processing).

Carofiglio V., Gallo N. and Abbattista F.

A Pilot Panel Study in User-centered Design and Evaluation of Real-time Adaptable Emotional Virtual Environments.

DOI: 10.5220/0003975500670071

In Proceedings of the 14th International Conference on Enterprise Information Systems (ICEIS-2012), pages 67-71

ISBN: 978-989-8565-12-9

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Accordingly, information about the user's emotional state, acquired by the system in real time, should be used to adapt the characteristics of the interaction; this should make it more likely that the intended emotional effects are achieved for each individual user. In this paper we conduct a BCI-based pilot study to determine whether the contents of a given AVE (see Section 4 for details) result in high subject agreement in terms of elicited emotion.

2 MEASURING EMOTIONS BY BCI

Emotions are a complex phenomenon, and there is no universal method to measure them. Methods may be categorized as subjective or objective. Questionnaires or pictorial tools, such as the Self-Assessment Manikin (SAM) (Bradley and Lang, 1994) or the Affect Grid (Russell et al., 1989), can be used as self-report instruments. Objective methods use physiological cues derived from theories of emotion that define universal patterns of autonomic and central nervous system responses related to the experience of emotions. Other modalities used for measuring emotions include blood pressure, heart rate and respiration. This paper exploits emotion assessment via EEG.

A BCI records brain activity in the form of electrical potentials (EPs) through multiple electrodes placed on the scalp. Depending on the brain activity, distinctive known patterns appear in the EEG. To account for the user's emotional state during BCI operation, most of the literature suggests an exhaustive training of the BCI classification algorithm under various emotional states. In the general approach, the user is exposed to appropriate affective stimulation. The elicited mental activity is then processed to extract features, which are grouped into feature vectors. These feature vectors are then used to train the BCI classification algorithm, which can then recognize the relevant brain activity.
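The training procedure just described can be sketched in a few lines. The sketch assumes band-power features (theta, alpha and beta power for each channel, computed with a naive DFT) and a nearest-centroid classifier; these are common illustrative choices, not necessarily the features or classifier used in the cited literature, and not the procedure used in this paper, which relies on the emotion measures computed by the headset itself (Section 3). The sine-wave "EEG" and the `calm`/`excited` labels are synthetic demo data.

```python
import math
from statistics import mean

FS = 128  # sampling rate in Hz, as quoted for the Emotiv EPOC in Section 3

# Assumed feature set: theta, alpha and beta band power for every channel.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}


def band_power(signal, lo, hi, fs=FS):
    """Periodogram power of `signal` in [lo, hi) Hz, via a naive DFT."""
    n = len(signal)
    power = 0.0
    for k in range(1, n // 2):
        if lo <= k * fs / n < hi:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            power += (re * re + im * im) / n
    return power


def feature_vector(window):
    """Concatenate the band powers of all channels; `window` is a list of channels."""
    return [band_power(ch, lo, hi) for ch in window for lo, hi in BANDS.values()]


def train_centroids(vectors, labels):
    """Nearest-centroid training: average the feature vectors of each emotional state."""
    groups = {}
    for vec, label in zip(vectors, labels):
        groups.setdefault(label, []).append(vec)
    return {label: [mean(col) for col in zip(*vecs)] for label, vecs in groups.items()}


def classify(centroids, vec):
    """Return the label whose centroid is closest (Euclidean distance) to `vec`."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vec))


def sine(freq, n=FS):
    """Synthetic one-second 'EEG' channel: a pure sine wave (demo data only)."""
    return [math.sin(2 * math.pi * freq * t / FS) for t in range(n)]


# Training windows recorded under two (simulated) affective stimulations.
train = [[sine(10)], [sine(11)], [sine(20)], [sine(22)]]
labels = ["calm", "calm", "excited", "excited"]
centroids = train_centroids([feature_vector(w) for w in train], labels)
print(classify(centroids, feature_vector([sine(9)])))  # calm
```

A real pipeline would use multi-second windows from all 14 channels, artifact rejection and a cross-validated classifier; the nearest-centroid rule is kept here only because it makes the "train on feature vectors, then recognize" step explicit.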

When a passive BCI is employed, as in our case, active user involvement is not required: the interpretation of the user's mental state can serve as a control source for automatic system adaptation (from the application interface to the virtual environment), for example to motivate and involve the user through the application's feedback.

3 EMOTION RECOGNITION BY EMOTIV™ EPOC

Emotiv™ Epoc (http://www.emotiv.com) is a high-resolution, low-cost, easy-to-use neuroheadset developed for games. Based on the International 10-20 system, it captures neural activity using 14 saline-moistened electrodes (AF3, F7, F3, FC5, T7, P7, O1, O2, P8, T8, FC6, F4, F8, AF4) plus CMS/DRL references (P3/P4 locations). The headset samples all channels at 128 Hz, each sample being a 14-bit value corresponding to the voltage of a single electrode.
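As a quick check on what these acquisition parameters imply, the raw EEG data rate, assuming tightly packed 14-bit samples and ignoring packet headers and any non-EEG data, and the resulting volume for a session of about 13 minutes (780 s, the duration reported in Section 5) are:

$$
\begin{aligned}
14\ \text{channels} \times 128\ \tfrac{\text{samples}}{\text{s}} \times 14\ \tfrac{\text{bit}}{\text{sample}} &= 25\,088\ \tfrac{\text{bit}}{\text{s}} = 3\,136\ \tfrac{\text{B}}{\text{s}},\\
3\,136\ \tfrac{\text{B}}{\text{s}} \times 780\ \text{s} &= 2\,446\,080\ \text{B} \approx 2.4\ \text{MB}.
\end{aligned}
$$

Such a volume is easily handled in real time, which is consistent with the passive, continuously running BCI described in Section 2.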

Based directly on the user's brain activity, Emotiv™ Epoc provides different emotion-related measures, among them Instantaneous Excitement (IE) and Long-Term Excitement (LTE). IE is experienced as an awareness or feeling of physiological arousal with a positive value. It is tuned to provide output scores that more accurately reflect short-term changes in excitement over time periods as short as several seconds. LTE is experienced and defined in the same way as IE, but its detection is designed and tuned to be more accurate when measuring changes in excitement over longer time periods, typically measured in minutes. Both measures are time-independent: IE and LTE values are detected at each variation in arousal, rather than at fixed time intervals.
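The paper does not describe how the headset computes IE and LTE, so the sketch below only illustrates the general idea of summarising one arousal signal at two time scales. It uses a time-aware exponential moving average, a swapped-in technique and not Emotiv's algorithm, with a time constant of a few seconds for an IE-like score and of minutes for an LTE-like score. Because readings arrive only when arousal changes, the smoothing weight is derived from the elapsed time rather than from the number of samples. The class name and the time constants are hypothetical.

```python
import math


class TimeAwareEMA:
    """Exponential moving average for irregularly timed (event-driven) samples.

    The weight given to a new reading grows with the time elapsed since the
    previous one: alpha = 1 - exp(-dt / tau).
    """

    def __init__(self, tau_seconds: float):
        self.tau = tau_seconds
        self.value = None   # current smoothed estimate
        self.last_t = None  # timestamp of the previous reading

    def update(self, t: float, x: float) -> float:
        if self.value is None:
            self.value = x  # the first reading initialises the estimate
        else:
            dt = max(t - self.last_t, 0.0)
            alpha = 1.0 - math.exp(-dt / self.tau)
            self.value += alpha * (x - self.value)
        self.last_t = t
        return self.value


# Hypothetical arousal readings: a step from 0.2 to 0.8 at t = 10 s.
ie_like = TimeAwareEMA(tau_seconds=3.0)     # short time scale (seconds)
lte_like = TimeAwareEMA(tau_seconds=120.0)  # long time scale (minutes)
for t in range(20):
    x = 0.2 if t < 10 else 0.8
    ie_like.update(t, x)
    lte_like.update(t, x)
print(f"IE-like: {ie_like.value:.2f}, LTE-like: {lte_like.value:.2f}")  # IE-like: 0.78, LTE-like: 0.25
```

Ten seconds after the step, the short-scale estimate has almost reached the new level while the long-scale one has barely moved, which mirrors the intended difference between the two measures.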

4 THE ADAPTABLE VIRTUAL ENVIRONMENT

Some applications require the detection and management of the user's emotions to provide an appropriate user experience, or even to avoid psychological harm. As the main example of this kind of application, we consider a virtual visit to a Nazi extermination camp. Moreover, soon the only way to preserve the memory of that terrible historical period will be indirect documentation in the form of videos, images and texts reporting interviews with the last witnesses. One way to keep the dramatic meaning of that experience alive could be to reconstruct a 3D virtual environment of one of those camps, such as Auschwitz.

In our VE, a digital character representing a prisoner guides users through different parts of the camp. During the navigation, the VE activates links to videos and photos documenting the lifestyle of Jewish and Roma people in the 1940-1945 period, or plays songs that some prisoners composed during their imprisonment. By means of the BCI, we aim to capture the user's reaction to the presented contents in order to allow the virtual prisoner to dynamically adapt the visit to the user profile, choosing to avoid media judged too upsetting for the user's sensibility or to visit only some zones of the entire virtual world, in order to maintain the user's current emotional state or to induce a desired one. In this way, users could be guided along well-defined emotional and informative paths.
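The adaptation policy itself is not specified in the paper. As a purely hypothetical sketch, the guide's choice of the next media item could follow a simple rule: never propose items whose designer-assigned intensity exceeds an "upsetting" cap, and pick milder or stronger content depending on whether the measured excitement is above or below a desired level. The `MediaItem` type, the intensity scale, the item names and the thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MediaItem:
    name: str
    intensity: float  # hypothetical designer-assigned emotional intensity in [0, 1]


def choose_next_media(items: List[MediaItem], current_excitement: float,
                      desired_excitement: float,
                      upsetting_cap: float = 0.8) -> Optional[MediaItem]:
    """Hypothetical rule for the virtual guide's next media item.

    Items above `upsetting_cap` are never proposed. If the user is more excited
    than desired, the mildest allowed item is chosen; otherwise the most intense
    allowed item is chosen. Returns None when no item is allowed (skip media).
    """
    allowed = [m for m in items if m.intensity <= upsetting_cap]
    if not allowed:
        return None
    if current_excitement > desired_excitement:
        return min(allowed, key=lambda m: m.intensity)
    return max(allowed, key=lambda m: m.intensity)


items = [MediaItem("prisoners' song", 0.3), MediaItem("photo gallery", 0.6),
         MediaItem("witness video", 0.9)]
print(choose_next_media(items, current_excitement=0.7, desired_excitement=0.5).name)  # prisoners' song
```

A threshold rule like this is easy to inspect and tune by the designer; a deployed system would likely also need hysteresis so that small fluctuations in excitement do not make the guide switch content back and forth.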

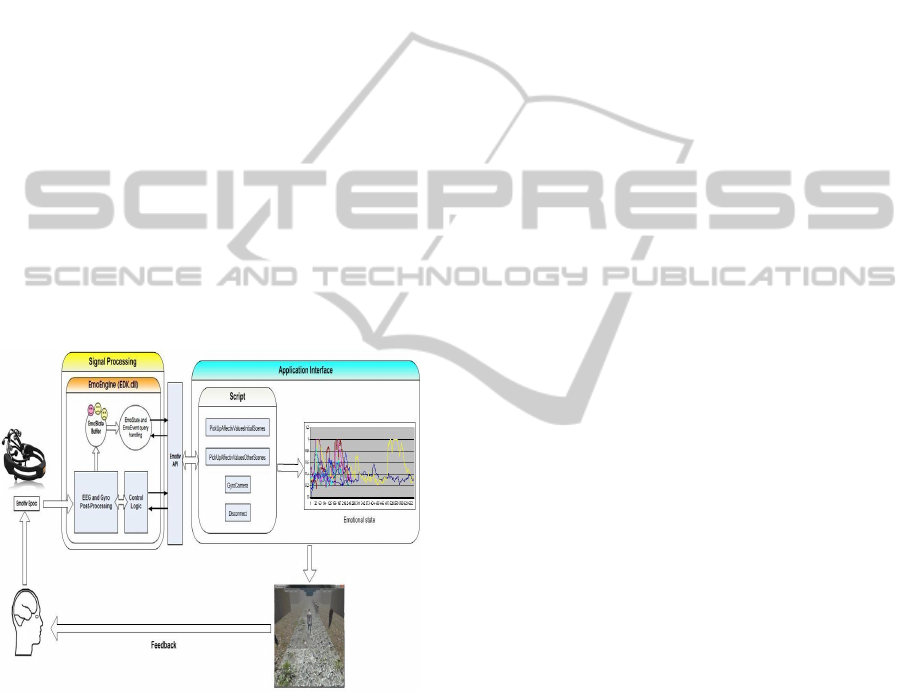

Our virtual environment has been interfaced with

the Emotiv

TM

Epoc headset (see Figure 1). The

headset detect the EEG signal of the user and pre-

process them, by means of some C# script:

- A script manages the connection between the

headset and the virtual environment.

- Another script receives and saves user’s affective

values, i.e. long-term excitement and instantaneous

excitement.

- A third script detects user’s head movements to

adjust the camera perspective.

Figure 1: Functional model of the employed BCI.
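The C# scripts themselves are not shown in the paper. The Python sketch below only illustrates the kind of record the second script might keep: timestamped IE and LTE readings tagged with the scene being visited, so that they can later be aggregated per scene. The class and field names are hypothetical, and reading values from the headset SDK is deliberately left out.

```python
import csv
import io
from dataclasses import astuple, dataclass
from typing import List, TextIO


@dataclass
class AffectiveSample:
    timestamp: float  # seconds since the start of the session
    scene: int        # index of the scene being visited (1-8)
    ie: float         # Instantaneous Excitement reported by the headset
    lte: float        # Long-Term Excitement reported by the headset


class AffectiveLog:
    """Hypothetical in-memory log of IE/LTE readings, exportable as CSV."""

    FIELDS = ["timestamp", "scene", "ie", "lte"]

    def __init__(self) -> None:
        self.samples: List[AffectiveSample] = []

    def record(self, timestamp: float, scene: int, ie: float, lte: float) -> None:
        self.samples.append(AffectiveSample(timestamp, scene, ie, lte))

    def write_csv(self, out: TextIO) -> None:
        writer = csv.writer(out)
        writer.writerow(self.FIELDS)
        for sample in self.samples:
            writer.writerow(astuple(sample))


log = AffectiveLog()
log.record(0.0, 1, 0.31, 0.30)  # made-up readings, not study data
log.record(1.5, 1, 0.35, 0.31)
buf = io.StringIO()
log.write_csv(buf)
print(buf.getvalue())
```

Tagging each reading with the current scene at logging time avoids having to re-align EEG timestamps with the navigation trace afterwards.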

5 EXPERIMENTAL RESULTS

As stated, in choosing the scenario we avoided scenes that could objectively induce psychological harm. Consequently, to avoid a lack of reaction to the selected scenes, the subjects who took part in the experiments were chosen on the basis of their knowledge of the domain, excluding people who reported strong knowledge of it. Moreover, all subjects were informed in advance about the details of the experiment, and only those who explicitly declared their intention to participate were included.

Eight different subjects interacted with the 3D environment (50% males and 50% females). Regarding the age distribution, 25% were between 18 and 25 years old, 12.5% between 26 and 35 years old, and 62.5% over 46 years old. Each experimental session lasted about 13 minutes.

The environment includes 8 different scenes; six of them contain historical multimedia documentation, while the remaining two include only the 3D reconstruction of the camp. At the end of the interaction each user answered a self-assessment questionnaire (see Appendix).

The goals of the experiment were to evaluate several factors concerning the emotional experience of users interacting with our 3D environment:

1. How inherently emotive the 3D environment is. For each user, we measured the global emotional response to the whole interaction process by means of:
   - a subjective evaluation: analysis of the user's responses to the questionnaire;
   - an objective evaluation: analysis of the user's recorded EEG signal;
   - a comparison of the two previous analyses to evaluate their consistency.
2. How much each scene contributes to the inherent emotional level of the 3D environment, by analyzing the EEG signals of each user for each scene and measuring the average IE and LTE.
3. How much the multimedia content of each scene contributes to the emotional response, compared with the scenes without multimedia content.
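Goals 2 and 3 rely on per-scene averages of IE and LTE. The paper does not say how these averages were computed. Since the headset reports values at each arousal variation rather than at fixed intervals (Section 3), one reasonable option, assumed here, is a time-weighted mean in which each reading is held until the next one arrives; a plain mean of the readings would over-weight periods with frequent changes. The function name and the readings below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One logged reading: (timestamp in seconds, scene index, IE or LTE value).
Reading = Tuple[float, int, float]


def per_scene_average(readings: List[Reading], session_end: float) -> Dict[int, float]:
    """Time-weighted mean of an event-driven signal for each scene.

    Each reading is held until the next one arrives (sample-and-hold) and is
    attributed entirely to the scene in which it was logged; the last reading
    is held until `session_end`. `readings` must be sorted by timestamp.
    """
    weighted: Dict[int, float] = defaultdict(float)
    duration: Dict[int, float] = defaultdict(float)
    for i, (t, scene, value) in enumerate(readings):
        t_next = readings[i + 1][0] if i + 1 < len(readings) else session_end
        dt = t_next - t
        weighted[scene] += value * dt
        duration[scene] += dt
    return {s: weighted[s] / duration[s] for s in weighted if duration[s] > 0}


# Hypothetical IE readings (not study data): two in scene 1, one in scene 2.
log = [(0.0, 1, 0.2), (10.0, 1, 0.6), (20.0, 2, 0.5)]
print(per_scene_average(log, session_end=30.0))  # {1: 0.4, 2: 0.5}
```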

Concerning the first question, the experimental data show that 75% of the users reported feeling different levels of sadness, while the remaining 25% reported anger or fear, one user each (see Table 1). The IE and LTE values are moderately low, but the consistency between IE and LTE values indicates that an emotional response actually occurred.

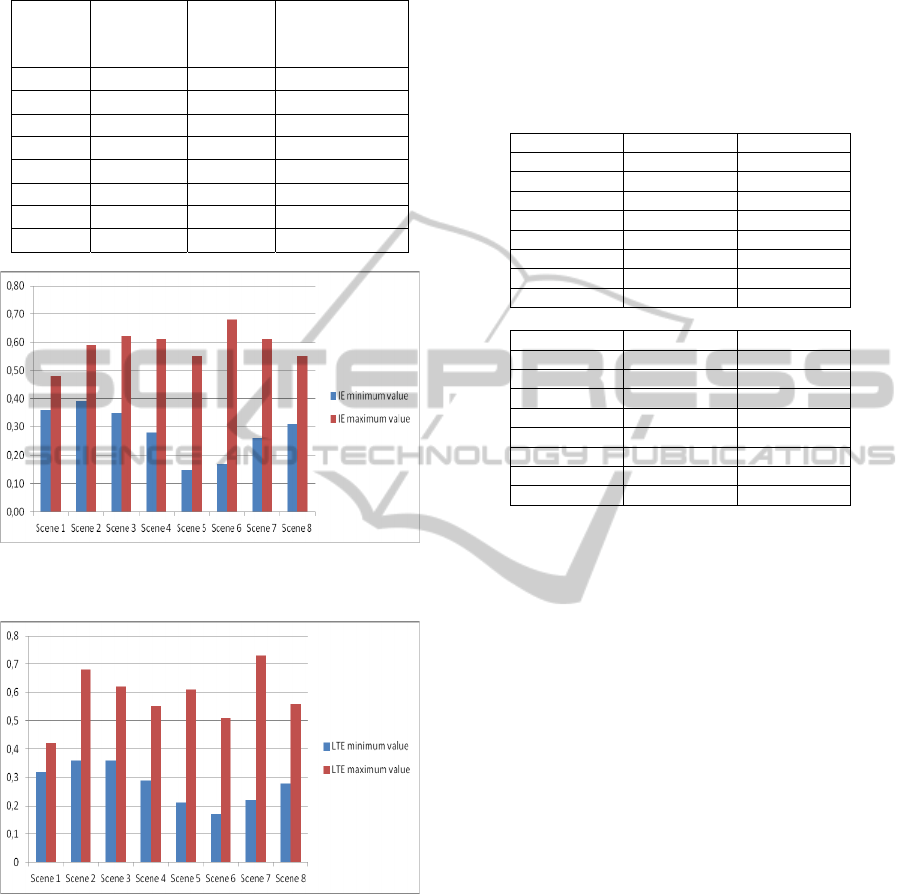

As for the contribution of each scene, Figures 2 and 3 show that the scene with the highest average IE value is scene 6, which lacks multimedia content, whereas scene 7 has the highest average LTE value, meaning that users were strongly involved during all (or most) of that scene.


Table 1: Average IE and LTE values with respect to users’ declared emotions.

| User | Avg IE | Avg LTE | Emotional response (as declared) |
|---|---|---|---|
| User 1 | 0.343 | 0.338 | Sadness (4) |
| User 2 | 0.543 | 0.535 | Sadness (4) |
| User 3 | 0.411 | 0.402 | Sadness (3) |
| User 4 | 0.368 | 0.360 | Sadness (6) |
| User 5 | 0.467 | 0.458 | Anger (5) |
| User 6 | 0.369 | 0.375 | Sadness (4) |
| User 7 | 0.463 | 0.458 | Fear (5) |
| User 8 | 0.447 | 0.438 | Sadness (5) |
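One way to quantify the consistency between IE and LTE mentioned above is to compute, on the per-user averages of Table 1, Pearson's correlation coefficient and the mean absolute difference between the two measures. This is an illustrative check, not an analysis reported in the paper; with only eight users it describes the sample and supports no statistical inference.

```python
import math
from statistics import mean

# Per-user average IE and LTE values, copied from Table 1 (users 1-8).
ie = [0.343, 0.543, 0.411, 0.368, 0.467, 0.369, 0.463, 0.447]
lte = [0.338, 0.535, 0.402, 0.360, 0.458, 0.375, 0.458, 0.438]


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


r = pearson(ie, lte)
mad = mean(abs(a - b) for a, b in zip(ie, lte))  # mean absolute IE-LTE gap
print(f"Pearson r = {r:.3f}, mean |IE - LTE| = {mad:.4f}")
```

Both statistics summarise the agreement between the two measures; since IE and LTE are derived by the same device from the same signal, some agreement is expected by construction.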

Figure 2: Minimum and maximum average IE values for each scene.

Figure 3: Minimum and maximum average LTE values for each scene.

Finally, we investigated whether the multimedia contents contribute to the users' emotional response. Table 2 shows, for each user, how many scenes with multimedia content have, respectively, a greater IE (a) and a greater LTE (b) than each of the two scenes without multimedia content. The detected EEG signals indicate that, for 62.5% of the users, the scenes with multimedia content were more emotionally engaging than the other two scenes (Table 2.a); for 85% of the users, the emotional engagement elicited by the scenes with multimedia content lasted longer (Table 2.b).

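Table 2: Number of scenes with multimedia content having a greater IE value (a) and a greater LTE value (b) than each of the two scenes without multimedia content. A zero in a cell indicates that the scene corresponding to the column has a higher IE/LTE value than every scene with multimedia content.

(a) IE

| User | Scene 4 | Scene 6 |
|---|---|---|
| User 1 | 0 | 5 |
| User 2 | 0 | 0 |
| User 3 | 3 | 3 |
| User 4 | 6 | 2 |
| User 5 | 4 | 2 |
| User 6 | 0 | 0 |
| User 7 | 4 | 6 |
| User 8 | 4 | 0 |

(b) LTE

| User | Scene 4 | Scene 6 |
|---|---|---|
| User 1 | 0 | 6 |
| User 2 | 4 | 5 |
| User 3 | 3 | 2 |
| User 4 | 5 | 3 |
| User 5 | 1 | 1 |
| User 6 | 3 | 3 |
| User 7 | 4 | 5 |
| User 8 | 2 | 0 |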
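Each cell of Table 2 can be obtained from one user's per-scene averages by counting, for each of scenes 4 and 6, how many of the six multimedia scenes have a strictly greater average. The handling of ties is not stated in the paper and is an assumption here, and the per-scene values in the example are made up for illustration.

```python
from typing import Dict

NO_MULTIMEDIA = (4, 6)  # scenes containing only the 3D reconstruction of the camp


def count_exceeding(scene_avgs: Dict[int, float]) -> Dict[int, int]:
    """For each scene without multimedia content, count the multimedia scenes
    whose average value (IE or LTE) is strictly greater.

    A count of 0 means that baseline scene is at least as high as every
    multimedia scene, matching the convention used in Table 2.
    """
    multimedia = [s for s in scene_avgs if s not in NO_MULTIMEDIA]
    return {
        base: sum(scene_avgs[s] > scene_avgs[base] for s in multimedia)
        for base in NO_MULTIMEDIA
    }


# Hypothetical per-scene average IE for one user (illustrative, not study data).
user_ie = {1: 0.41, 2: 0.39, 3: 0.45, 4: 0.40, 5: 0.44, 6: 0.47, 7: 0.43, 8: 0.38}
print(count_exceeding(user_ie))  # {4: 4, 6: 0}
```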

6 CONCLUSIONS

The main idea behind this research was that a good design should manage the user’s emotional level as a UCE activity, to obtain a more engaging overall user experience. To this aim, we employed a BCI to collect additional information on users' needs and preferences without questioning the users themselves. This enables a faster collection process and avoids mistakes due to user misbehaviour. Preliminary results show that our method makes it possible to identify which of the application's contents do not induce an appropriate emotional response. In this way, the designer could delete scenes or multimedia contents that result in low subject agreement in terms of elicited emotion and replace them with more engaging contents. However, it is necessary to increase the number of experimental subjects or to select them according to given personal characteristics.

Concurrently, the design and implementation of the adaptive virtual environment module should be addressed. The envisioned application should be able to choose the proper multimedia content, avoiding media judged too upsetting for the user's sensibility and/or hiding some zones of the virtual world, in order to induce a desired emotional state in the user. In this way, users could be implicitly guided along well-defined emotional and informative paths.

REFERENCES

van Gerven, M., Farquhar, J., Schaefer, R., Vlek, R., Geuze, J., Nijholt, A., Ramsey, N., Haselager, P., Vuurpijl, L., Gielen, S., Desain, P., 2009. The brain-computer interface cycle. Journal of Neural Engineering, 6(4), 041001.

Kim, K. H., Bang, S. W., Kim, S. R., 2004. Emotion recognition system using short-term monitoring of physiological signals. Medical and Biological Engineering and Computing, 42, 419-427.

Murugappan, M., Rizon, M., Nagarajan, R., Yaacob, S., Zunaidi, I., Hazry, D., 2007. EEG feature extraction for classifying emotions using FCM and FKM. In Proceedings of ACACO, China.

Bos, D. O., 2006. EEG-based emotion recognition: the influence of visual and auditory stimuli. The Netherlands, 16 November 2006.

Russell, J. A., 2003. Core affect and the psychological construction of emotion. Psychological Review, 110, 145-172.

Chanel, G., Kronegg, J., Grandjean, D., Pun, T., 2005. Emotion assessment: arousal evaluation using EEG's and peripheral physiological signals. Technical Report 05.02, Computer Vision Group, Computing Science Center, University of Geneva.

Heller, W., Nitschke, J. B., Lindsay, D. L., 1997. Neuropsychological correlates of arousal in self-reported emotion. Cognition and Emotion, 11(4), 383-402.

Leite, I., Pereira, A., Mascarenhas, S., Castellano, G., Martinho, C., Prada, R., Paiva, A., 2010. Closing the loop: from affect recognition to empathic interaction. In Proceedings of the 3rd International Workshop on Affective Interaction in Natural Environments (AFFINE '10), pp. 43-48. ACM, New York, NY, USA.

Bradley, M. M., Lang, P. J., 1994. Measuring emotion: the Self-Assessment Manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25(1), 49-59.

Russell, J. A., Weiss, A., Mendelsohn, G. A., 1989. Affect Grid: a single-item scale of pleasure and arousal. Journal of Personality and Social Psychology, 57(3), 493-502.

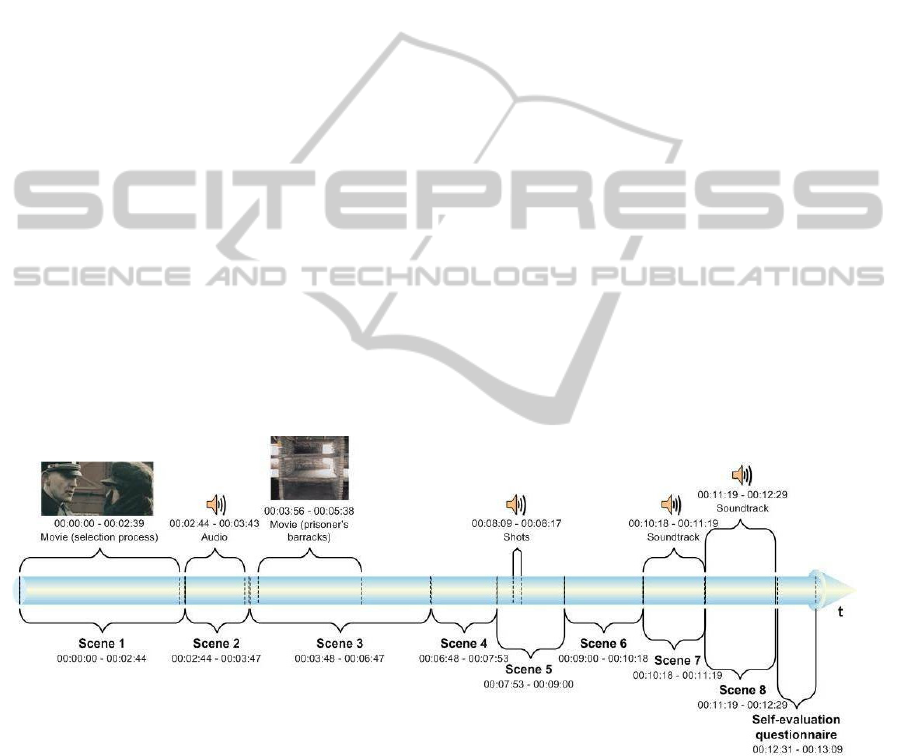

APPENDIX

The figure below shows the timeline of each

experimental session. The environment includes 8

different scenes; among these, six scenes contain

historical multimedia documentation; the remaining

two include only the 3D reconstruction of the camp.

At the end of the interaction each user answered a

self-assessment questionnaire.
