PEDESTRIAN IDENTIFICATION BY ASSOCIATING WALKING RHYTHMS FROM WEARABLE ACCELERATION SENSORS AND BIPED TRACKING RESULTS

Tetsushi Ikeda¹, Hiroshi Ishiguro¹,², Takahiro Miyashita¹ and Norihiro Hagita¹

¹ ATR Intelligent Robotics and Communication Laboratories, Kyoto, Japan

² Department of Systems Innovation, Graduate School of Engineering Science, Osaka University, Osaka, Japan

Keywords: Accelerometer, Biped Tracking, Laser Range Finder, Perceptual Information Infrastructure, Sensor Fusion.

Abstract: Personal and location-dependent services are a promising application in public spaces like a

shopping mall. So far, sensors in the environment have reliably detected the current positions of humans,

but it is difficult to identify people using these sensors. On the other hand, wearable devices can send their

personal identity information, but precise position estimation remains problematic. In this paper, we propose

a novel method of integrating laser range finders (LRFs) in the environment and wearable accelerometers.

The legs of pedestrians in the environment are tracked by using LRFs, and acceleration signals from

pedestrians are simultaneously observed. Since the tracking results of biped feet and the body oscillation of

the same pedestrian show the same walking rhythm pattern, we identify the pedestrian by associating the pair of

signals that maximizes the correlation between them. Example results of tracking

individuals in the environment confirm the effectiveness of this method.

1 INTRODUCTION

Information infrastructure that provides personal and

location-dependent services in public spaces like a

shopping mall permits a wide variety of applications.

Such a system will provide the positions of friends

who are currently shopping in the mall. When they

have many bags, users will call a porter robot, which

can reach them by using the location system. To

enable location-dependent and personal services, we

propose a system that locates and identifies a

pedestrian, who carries a mobile information

terminal, anywhere in a crowded environment.

Many kinds of location systems have been

studied that provide the positions of pedestrians by

using sensors installed in the environment. For

example, location systems using cameras and laser

range finders (LRFs) can track people in the

environment very precisely. However, it is difficult

to identify each pedestrian or a person carrying a

specific wearable device by using only sensors in the

environment.

On the other hand, in ubiquitous computing,

many kinds of wearable devices have been used to

locate people. Since a location system using ID tags

requires the installation of many reader devices in

the environment for precise localization, it is not a

realistic solution in large public spaces. Wearable

inertial sensors are also used to locate people, but

the cumulative estimation error is often problematic.

For a precise location system, it is important to

integrate other sources of information.

In order to locate a pedestrian carrying a specific

mobile device anywhere in an environment, a

promising approach is to integrate environmental

sensors that observe people from the environment

and wearable sensors that locate the person carrying

them. In this paper, we propose a novel method

integrating LRFs in the environment and wearable

accelerometers to locate people precisely and

continuously. Since location systems using LRFs

have been successfully applied for tracking people in

large public spaces like train stations and the sizes of

LRF units are becoming smaller, LRFs are highly

suitable for installation in public spaces. Since many

cellular phones are equipped with an accelerometer

for a variety of applications, users who have a

cellular phone do not have to carry any additional

device.

Ikeda T., Ishiguro H., Miyashita T. and Hagita N. PEDESTRIAN IDENTIFICATION BY ASSOCIATING WALKING RHYTHMS FROM WEARABLE ACCELERATION SENSORS AND BIPED TRACKING RESULTS. In Proceedings of the 2nd International Conference on Pervasive Embedded Computing and Communication Systems (PECCS-2012), pages 21-28. DOI: 10.5220/0003826400210028. ISBN: 978-989-8565-00-6. Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

The rest of this paper is organized as follows.

First, we review previous studies. Then, we discuss a

method of integrating LRFs and accelerometers and

how it can provide reliable estimation. Finally, we

discuss the application of our method to a practical

system and present the results of an experimental

evaluation.

2 RELATED WORKS

2.1 Locating Pedestrians using

Environmental Sensors

Locating pedestrians has been an important issue in

computer vision and has been studied frequently (Hu et al.,

2004). One advantage of using cameras is that we

can use much information including colors and

motion gestures. A problem with cameras is that

they suffer from changes in the lighting conditions

in the environment. Also, using cameras in public

spaces for identification purposes sometimes raises

privacy issues.

LRFs have recently attracted increasing attention

for locating people in public places. As they have

become smaller, it becomes easier to install them in

environments. Since LRFs observe only the

positions of people, installing them does not

raise privacy issues. Cui et al. (2007) succeeded in

tracking a large number of people by observing the legs

of pedestrians. Zhao and Shibasaki (2005) also tracked

people by using a simple walking model of

pedestrians. Glas et al. (2009) placed LRFs in a

shopping mall to predict the trajectories of people by

observing customers at waist height.

In general, sensors placed in the environment are

good at locating people precisely. However, it is

difficult to use them to identify pedestrians when

they are walking in a crowded environment.

2.2 Locating People by using Wearable

Sensors

In ubiquitous computing, wearable devices have

been used to locate people (Hightower and Borriello,

2001). Devices that have been studied include IR

tags (Want et al., 1992), ultrasonic wave tags (Harter

et al., 1999), RFID tags (Amemiya et al., 2004; Ni

et al., 2003), Wi-Fi (Bahl and Padmanabhan, 2000),

and UWB (Mizugaki et al., 2007). If the device ID is

registered with the system, the person carrying that

specific device can be located and identified.

However, tag-based methods require the placement

of many reader devices in order to locate people

accurately, so the cost of installing reader devices is

problematic in large public places. Wi-Fi-based

methods do not provide enough resolution to

distinguish one person in a crowd. Furthermore, if

users of the system have to carry additional devices

just to use the location service, the cost and

inconvenience should also be considered.

Wearable inertial sensors have also been used to

locate a person by integrating observations (Bao and

Intille, 2004; Foxlin, 2005; Hightower and

Borriello, 2001). Since integral drift has been

problematic, it is important to combine observations

with those of other sensors. Recently, many types of

cellular phones have started to incorporate

accelerometers, and some people are carrying them

in their daily lives. Therefore, approaches that use

acceleration sensors to locate people can

take advantage of this existing infrastructure.

2.3 Locating People by using a

Combination of Sensors

To locate and identify people in the environment,

methods that integrate both environmental sensors

and wearable devices have been studied.

Kourogi et al. (2006) integrated wearable

inertial sensors, a GPS function, and an RFID tag

system. Woodman and Harle (2008) also integrated

wearable inertial sensors and map information.

Schulz et al. (2003) used LRFs and ID tags to locate

people in a laboratory, and they proposed a method

that integrates positions detected using LRFs and

identifies people by using sparse ID-tag readers in

the environment. Mori et al. (2004) used

floor sensors and ID tags and identified people

carrying ID tags. These methods focused on

gradually identifying people after initially locating

their positions roughly using ID tags as they

approach reader devices. However, since these

methods integrate environmental sensors and ID tags

on the basis of their positions, it is difficult to

distinguish people in a crowded environment when the

spatial resolution of the ID tags is insufficient.

In contrast, we integrate LRFs in the

environment and wearable sensors on the basis of

the motion of people. Since our method uses the

motion feature itself and does not incorporate the

computation of precise position in the integration

process, it does not suffer from the drift problem of

inertial sensors.

In previous work (Ikeda et al., 2010), LRFs and

wearable gyroscopes were integrated based on body

rotation around the vertical axis from both types of

sensors. However, it was difficult to distinguish

pedestrians who move in a line when the trajectories

are similar. Another problem is the method’s use of

gyroscopes, even though cellular phones equipped

with gyroscopes are not yet so common.

In this paper, to cope with these problems, we

propose a new method that extracts features from a

bipedal walking pattern. LRFs observe pedestrians at

the height of legs and estimate the positions of

people and walking rhythms. The wearable

accelerometer also observes walking rhythms. Since

walking rhythms differ from person to person, the

proposed method can distinguish pedestrians

walking in a line, and it uses only an accelerometer

in the wearable devices.

3 PEOPLE TRACKING AND

IDENTIFICATION USING LRFS

AND WEARABLE

ACCELEROMETERS

3.1 Associating Walking Rhythm from

LRFs and Wearable Accelerometer

To locate each person carrying a wearable sensor,

we focus on the walking rhythms that are observed

from environmental and wearable sensors. After

features of the walking rhythm are observed from

the two types of sensors, signals are compared to

determine whether the two signals come from the

same person.

In this framework, the problem of locating the

person with a wearable sensor is reduced to

comparing the signal from the wearable sensor to all

signals from the people detected by the

environmental sensors and then selecting the person

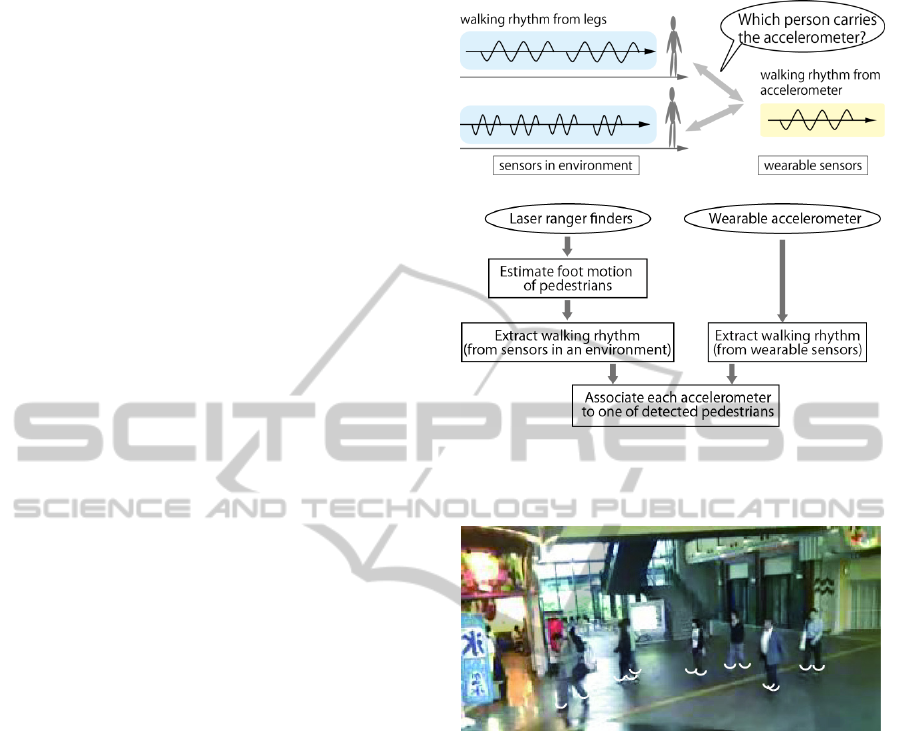

with the most similar signal (Figure 1 (a)).

Legs of pedestrians are tracked by using LRFs in

the environment, and the motions of both legs are

estimated. Simultaneously, the timings of footsteps

are observed by using wearable acceleration sensors.

If the signals from both kinds of sensors are from the

same pedestrian, we can assume that the two signals

are highly correlated, since they were originally

generated from a common walking rhythm. Figure 1

(b) shows the overview of the proposed algorithm.

a) Concept of the proposed algorithm.

b) Flow of the proposed algorithm

Figure 1: Locating a person carrying a specific wearable

device by matching wearable and environmental sensors.

Figure 2: Pedestrian walking in a shopping mall. The

white marks represent the detected legs of pedestrians.

Walking rhythm of each pedestrian is observed using

wearable accelerometer and LRFs by tracking at least one

foot.

3.2 Tracking Biped Foot of Pedestrians

by using LRFs

Zhao and Shibasaki (2005) proposed a pedestrian

tracking method by using LRFs at the height of the

legs. By observing the legs of pedestrians, not only

the positions of pedestrians but also the timing of

their footsteps was observed.

We also observe the legs at the height of 20cm

by using LRFs. Our method expands upon the

system described in (Glas et al., 2009) and uses a

particle-filter-based algorithm to track legs in the

environment (Figure 2). In our tracking algorithm, a

background model is first computed for each sensor

by analyzing hundreds of scan frames to filter out

noise and moving objects. Points detected in front of

this background scan are grouped into segments

within a certain size range, and those that persist

over several scans are registered as leg detections.

Each leg is then tracked by the particle filter using a

simple linear motion model. Examples of observed

velocity of two legs are shown in Figure 3(b). Then

the detected legs that satisfy the following

constraints are clustered and considered a hypothesis

of a pedestrian:

Constraint 1

1. At least one of the two feet is not moving.

2. The distance between the two feet is less than a

threshold (100 cm in the experiments).
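A minimal Python sketch of how Constraint 1 could be applied to a set of tracked legs is given below. The data layout (dictionaries with `id`, `pos`, and `speed`), the function name `pedestrian_hypotheses`, and the speed threshold used to decide that a foot is "not moving" are assumptions made for illustration; only the 100 cm distance threshold comes from the experiments.

```python
import math
from itertools import combinations

MAX_FOOT_DISTANCE_M = 1.0    # 100 cm threshold used in the experiments
STATIONARY_SPEED_MPS = 0.3   # assumed value: below this speed a foot counts as "not moving"


def pedestrian_hypotheses(legs):
    """Return pairs of leg ids that satisfy both conditions of Constraint 1.

    legs: list of dicts with keys 'id', 'pos' (x, y in metres) and 'speed' (m/s).
    """
    pairs = []
    for a, b in combinations(legs, 2):
        # Condition 1: at least one of the two feet is not moving.
        one_foot_still = min(a["speed"], b["speed"]) < STATIONARY_SPEED_MPS
        # Condition 2: the two feet are closer than the distance threshold.
        close_enough = math.dist(a["pos"], b["pos"]) < MAX_FOOT_DISTANCE_M
        if one_foot_still and close_enough:
            pairs.append((a["id"], b["id"]))
    return pairs


legs = [
    {"id": 0, "pos": (0.0, 0.0), "speed": 0.1},   # supporting foot
    {"id": 1, "pos": (0.4, 0.1), "speed": 1.8},   # swinging foot of the same person
    {"id": 2, "pos": (5.0, 5.0), "speed": 1.5},   # leg of another pedestrian
]
print(pedestrian_hypotheses(legs))  # [(0, 1)]
```

Because the LRFs observe the leg above the foot, a small non-zero speed threshold is used here instead of testing for exactly zero velocity.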

3.3 Observation of Acceleration from

Wearable Sensors

To extract walking rhythm from the wearable

accelerometer, we focus on the vertical component

of the observed acceleration. The three-dimensional

acceleration vector $\mathbf{a}(t)$ is observed and averaged

over a few seconds ($T$ frames; $T$ corresponds to eight

seconds in the experiments) to estimate the vertical

direction of the sensor. Then the vertical acceleration

$a_{\mathrm{vert}}(t)$ is estimated as follows:

$$\tilde{\mathbf{a}}(t) = \frac{1}{T} \sum_{\tau = t-T+1}^{t} \mathbf{a}(\tau), \qquad (1)$$

$$a_{\mathrm{vert}}(t) = \tilde{\mathbf{a}}(t) \cdot \mathbf{a}(t). \qquad (2)$$
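The vertical-component estimate described above can be sketched with NumPy as follows. This is an illustrative sketch, not the authors' implementation: the function name `vertical_acceleration`, the use of a trailing window, and the shorter window used for the first frames are assumptions.

```python
import numpy as np


def vertical_acceleration(acc, window):
    """Project each 3-D acceleration sample onto the running mean acceleration.

    acc:    array of shape (N, 3) with raw accelerometer samples.
    window: number of frames T used for the running mean.
    """
    acc = np.asarray(acc, dtype=float)
    n = len(acc)
    # Prefix sums make the trailing mean cheap to compute for every frame.
    csum = np.vstack([np.zeros(3), np.cumsum(acc, axis=0)])
    idx = np.arange(1, n + 1)
    start = np.maximum(idx - window, 0)   # assumed: shorter window at the start
    mean = (csum[idx] - csum[start]) / (idx - start)[:, None]   # Eq. (1)
    # Row-wise dot product between the running mean and the sample, Eq. (2).
    return np.einsum("ij,ij->i", mean, acc)


# A sensor at rest that reads (0, 0, 2) on every frame.
samples = np.tile([0.0, 0.0, 2.0], (5, 1))
print(vertical_acceleration(samples, window=3))  # [4. 4. 4. 4. 4.]
```

The running mean is dominated by gravity, so the dot product picks out the component along the vertical direction regardless of how the sensor is oriented. Dividing by the norm of the running mean would express the result in acceleration units; that normalization is not stated in the text, and a roughly constant scale factor has little effect on a correlation-based comparison.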

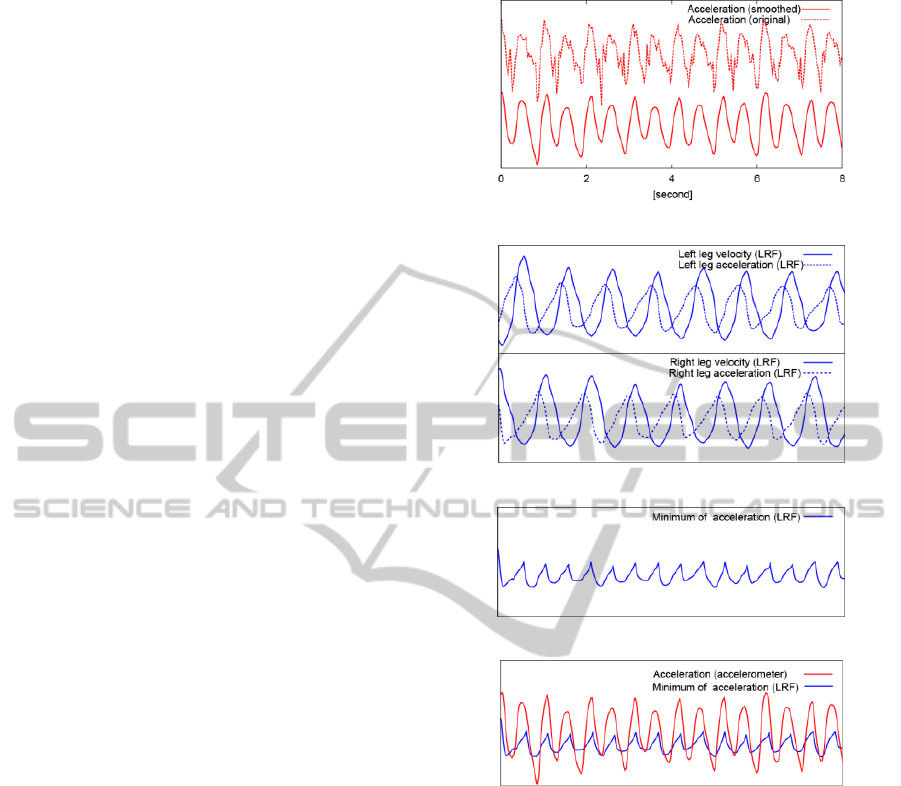

Original and smoothed vertical acceleration signals

are shown in Figure 3 (a). The accelerometer is

attached to the left waist. One footstep of the walk is

about 500 milliseconds in the graph, and the timing

of the footsteps of both legs is clearly observed.

Note that since the accelerometer is attached to the

left waist, the impact of a footstep of the left leg is

clearer.

3.4 Associating Motion of Biped Foot

and Acceleration Signal

Figure 3 (b) shows the smoothed velocity and

acceleration of each leg estimated by our tracking

method. When the speed of a pedestrian’s idling leg

becomes lower and it finally lands on the ground, a

large vertical acceleration is observed. Therefore, we

can expect the impact of landing to be observed

when the acceleration of the idling leg is negative.

Note that since the LRFs observe the legs at a height of 20 cm

rather than the feet themselves, the observed velocity does not become zero when the

foot lands.

a) Vertical acceleration from wearable accelerometer. Dashed line

shows original signals and solid line shows smoothed signal.

b) Velocity (solid line) and acceleration (dashed line) of left and

right legs of a pedestrian from LRFs.

c) Minimum of acceleration of both legs from LRFs (Equation

(4))

d) Superimposed signals (acceleration from the accelerometer (a) and

from LRFs (c)). Signals from the same pedestrian show clear correlation.

The vertical axis is adjusted to overlap both signals.

Figure 3: Examples of signals from LRFs and an

accelerometer taken over eight seconds.

Figure 3 (c) shows the minimum of the accelerations of both legs.

The derivatives of the leg velocities (Figure 3 (c))

and the vertical acceleration signal (Figure 3 (a)) are

highly correlated (Figure 3 (d)).

To evaluate the correlation between the two

signals, we propose computing Pearson's correlation

function between the minimum leg acceleration

from LRFs and the acceleration from the

accelerometer:

$$\rho(t) = \rho\big(a_{\mathrm{vert}}(\cdot),\, a_{\mathrm{foot}}(\cdot)\big), \qquad (3)$$

$$a_{\mathrm{foot}}(t) = \min\big(a_{\mathrm{left}}(t),\, a_{\mathrm{right}}(t)\big), \qquad (4)$$

where $a_{\mathrm{left}}(t)$ and $a_{\mathrm{right}}(t)$ are the accelerations of the left and right

legs. Note that when $a_{\mathrm{vert}}(\cdot)$ and $a_{\mathrm{foot}}(\cdot)$ are jointly

Gaussian, the mutual information between them is

computed as

$$I\big(a_{\mathrm{vert}}(\cdot),\, a_{\mathrm{foot}}(\cdot)\big) = \frac{1}{2} \log \frac{1}{1 - \rho^{2}\big(a_{\mathrm{vert}}(\cdot),\, a_{\mathrm{foot}}(\cdot)\big)}. \qquad (5)$$

Hershey and Movellan (2000) first used this measure

to integrate audio and vision to detect a speaker. See

Cover and Thomas (2006) for details. Eq. (5) shows

that our algorithm evaluates the similarity of walking

rhythms by computing the mutual information between

them.
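The quantities in Eqs. (3) to (5) can be sketched in Python as follows. The function names are hypothetical, and the sketch assumes that both signals have already been resampled to a common time base and cut to the same window.

```python
import numpy as np


def foot_acceleration(a_left, a_right):
    """Element-wise minimum of the two leg accelerations, Eq. (4)."""
    return np.minimum(a_left, a_right)


def pearson(x, y):
    """Pearson correlation of two equally long signals, Eq. (3)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    yc = y - y.mean()
    denom = np.sqrt((xc ** 2).sum() * (yc ** 2).sum())
    # A constant signal has no defined correlation; return 0 in that case.
    return 0.0 if denom == 0 else float((xc * yc).sum() / denom)


def gaussian_mutual_information(rho):
    """Mutual information (in nats) of jointly Gaussian signals, Eq. (5)."""
    return -0.5 * np.log(1.0 - rho ** 2)


a_foot = foot_acceleration(np.array([1.0, -2.0, 0.5]), np.array([0.0, 3.0, -1.0]))
print(a_foot)                              # [ 0.  -2.  -1. ]
print(pearson([1, 2, 3, 4], [2, 4, 6, 8]))
print(gaussian_mutual_information(0.5))
```

Since Eq. (5) depends only on the square of the correlation and increases monotonically with it, ranking candidates by mutual information is equivalent to ranking them by the magnitude of the correlation. The mutual information diverges as the correlation approaches plus or minus one.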

3.5 Associating Motion of One Foot

and Acceleration Signal

In a crowded scene, sometimes only one leg of a

pedestrian is observed. To compute the correlation

between a single leg and the acceleration signal, we

use only a limited phase of the leg acceleration from the LRFs,

since the accelerometer signal contains the impacts of both

legs.

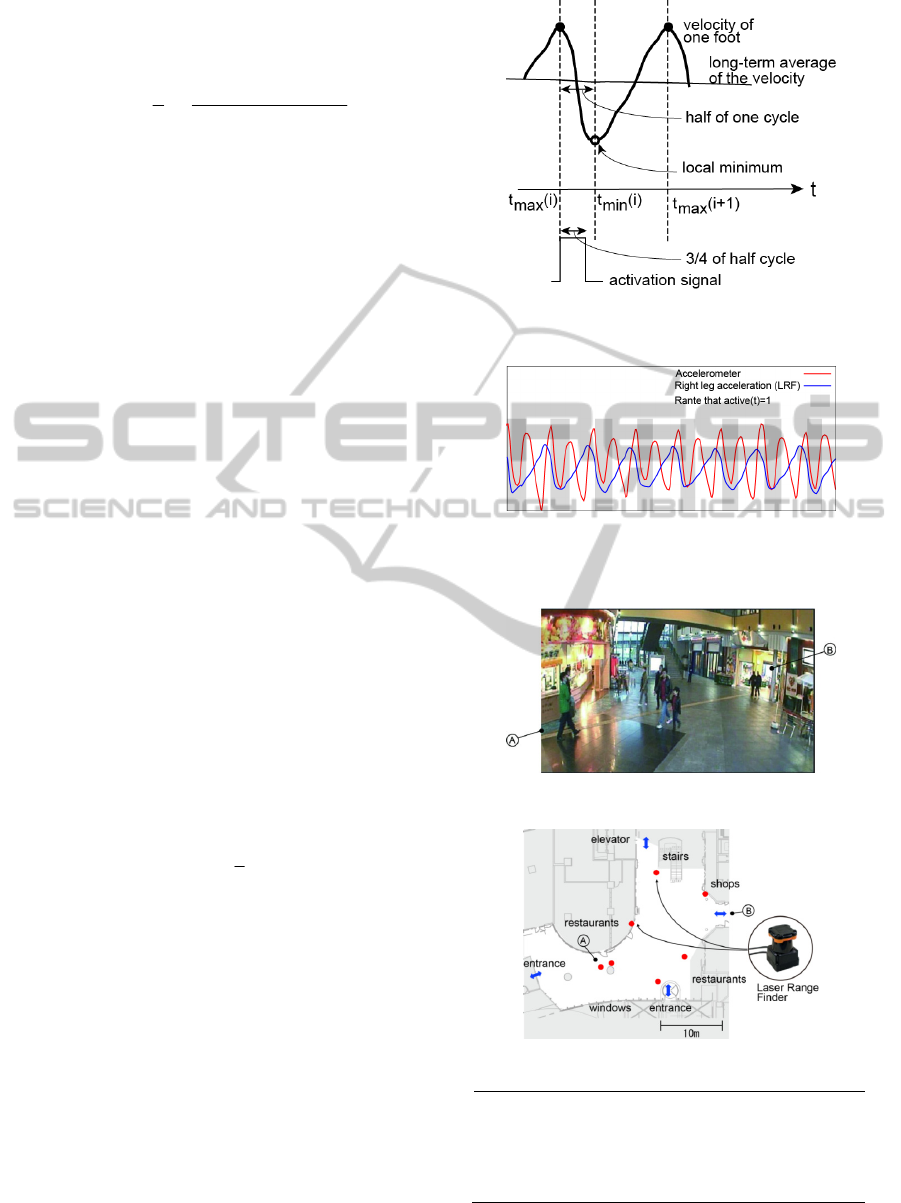

In computing Eq. (3), we define an activation

signal for an acceleration signal to select the

contribution of the acceleration from one leg. Figure

4 shows the steps to compute the activation signal.

We compute the long-term average of a velocity

signal and find one maximum/minimum point

in each range in which the original

signal is larger/smaller than the average. The leg

lands a little before the minimum point. Then a

sequence of the time indices of the local maxima $t_{\max}(i)$

and local minima $t_{\min}(i)$ is computed.

Suppose $t_{\max}(i) < t_{\min}(i) < t_{\max}(i+1)$. Then the

activation signal is defined as

$$\mathrm{active}(t) = \begin{cases} 1 & t_{\max}(i) \le t \le t_{\max}(i) + \frac{3}{4}\big(t_{\min}(i) - t_{\max}(i)\big) \\ 0 & \text{otherwise} \end{cases} \qquad (6)$$

and the summation in Eq. (3) is computed only when

active(t)=1. The factor 3/4 extracts the range

in which the impact of landing is observed by the

accelerometer. Figure 5 shows the range in which the

activation function is one. Correlation function Eq.

(3) is computed for each trajectory of a single leg

and each biped foot that satisfies Constraint 1. For

example, in the case of Figure 3 (d), the activation

signal is one at around every other lower peak of

the acceleration signal.
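The activation signal and the restricted correlation can be sketched as follows. This is one possible reading of the procedure, not a definitive implementation: the long-term average is taken as the mean of the whole input sequence, the end of the active range is rounded down to a whole sample, and the function names are hypothetical.

```python
import numpy as np


def activation_signal(velocity):
    """Return a 0/1 array that is 1 from each velocity maximum up to 3/4 of
    the way towards the following minimum (Eq. (6))."""
    v = np.asarray(velocity, dtype=float)
    above = v > v.mean()   # assumed: long-term average = mean of the sequence
    # Split the time axis into runs that stay above or below the average.
    boundaries = np.flatnonzero(np.diff(above.astype(int))) + 1
    runs = np.split(np.arange(len(v)), boundaries)
    active = np.zeros(len(v), dtype=int)
    t_max = None
    for run in runs:
        if above[run[0]]:
            t_max = run[np.argmax(v[run])]          # one maximum per upper run
        elif t_max is not None:
            t_min = run[np.argmin(v[run])]          # one minimum per lower run
            end = t_max + 0.75 * (t_min - t_max)
            active[t_max:int(np.floor(end)) + 1] = 1
            t_max = None
    return active


def masked_correlation(a_vert, a_leg, active):
    """Pearson correlation computed only over samples where active == 1."""
    mask = np.asarray(active, dtype=bool)
    if mask.sum() < 2:
        return 0.0
    return float(np.corrcoef(np.asarray(a_vert)[mask], np.asarray(a_leg)[mask])[0, 1])


velocity = [0, 2, 4, 2, 0, -2, -4, -2, 0, 2, 4, 2]
print(activation_signal(velocity))  # [0 0 1 1 1 1 0 0 0 0 0 0]
```

In the example, the maximum is at index 2 and the following minimum at index 6, so the active range covers indices 2 to 5. The last maximum has no following minimum in the sequence and therefore produces no active range.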

Finally, for each wearable acceleration sensor,

the position of the user is estimated by selecting a

single leg or biped foot that maximizes correlation

function Eq. (3).

Figure 4: Estimated positions of pedestrians by tracking

biped foot using LRFs.

Figure 5: The range in which the activation function for a leg is one. In

the range active(t)=1, the accelerations from both sensors

are correlated.

Figure 6: Experimental environment in a shopping mall.

a) Positions of LRFs (shown as red circles) in the experimental

environment.

| Item | Specification |
|---|---|
| Model No. | Hokuyo Automatic UTM-30LX |
| Measuring area | 0.1 to 30 m, 270° |
| Accuracy | ±30 mm (0.1 to 10 m) |
| Angular resolution | approx. 0.25° |
| Scanning time | 25 msec/scan |

b) Specifications of the LRF.

Figure 7: Experimental setup.

| Item | Specification |
|---|---|
| Model No. | ATR Promotions WAA-010 |
| Weight | 20 g |
| Size (W×D×H) | 39×44×12 mm |
| Sampling frequency | 500 Hz |
| Range | ±2 G |
| Interface | Bluetooth |

Figure 8: Sensor devices used in experiments.

3.6 Selecting a Single Foot / Biped Feet

that Maximizes Correlation

between Signals

For each accelerometer, we compute the correlation

function for each tracked single leg by using the

activation function, and for each pair of biped feet that satisfies

Constraint 1. The single foot or the biped feet

that maximizes the correlation function is a candidate for

the pedestrian who carries the accelerometer.

The correlation function is not very stable when

the number of samples is small. We compute the

correlation function for data samples of four

seconds.
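The selection step can be sketched as follows. The sketch assumes that every candidate signal (a single leg or a pair of feet) has already been reduced to one acceleration sequence on the same 25 Hz time base as the accelerometer signal; the function name `identify_carrier` and the dictionary layout are hypothetical.

```python
import numpy as np


def identify_carrier(a_vert, candidates, rate_hz=25, window_s=4.0):
    """Return (best_id, scores) for the candidate whose most recent window
    correlates best with the accelerometer signal.

    a_vert:     vertical acceleration from one wearable sensor.
    candidates: dict mapping a candidate id to its leg acceleration signal.
    """
    n = int(rate_hz * window_s)          # samples in a four-second window
    a_vert = np.asarray(a_vert, dtype=float)
    scores = {}
    for cand_id, a_foot in candidates.items():
        a_foot = np.asarray(a_foot, dtype=float)
        if len(a_vert) < n or len(a_foot) < n:
            continue                      # not observed long enough yet
        x, y = a_vert[-n:], a_foot[-n:]
        if np.std(x) == 0 or np.std(y) == 0:
            continue                      # correlation undefined for constant signals
        scores[cand_id] = float(np.corrcoef(x, y)[0, 1])
    if not scores:
        return None, scores
    return max(scores, key=scores.get), scores


t = np.arange(100) / 25.0
a_vert = np.sin(2 * np.pi * 2.0 * t)
candidates = {
    "A": 0.5 * a_vert + 0.1,                    # same rhythm, different scale
    "B": np.sin(2 * np.pi * 1.7 * t + 1.0),     # different rhythm
    "C": np.zeros(10),                          # track that is too short
}
best, scores = identify_carrier(a_vert, candidates)
print(best)  # A
```

Candidates with fewer samples than the window are skipped, which reflects the remark that the correlation is unstable when the number of samples is small.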

4 EXPERIMENTS

4.1 Experimental Setup

We conducted experiments at a shopping mall in the

Asia and Pacific Trade Center, in Osaka, Japan

(Figure 6). We located people in a 20-m-radius area

of the arcade containing many restaurants and shops.

People in this area were monitored via a sensor

network consisting of seven LRFs installed at a

height of 20 cm (Figure 7). We modified a previous

system (Glas et al., 2009; Kanda et al., 2008) for

tracking a biped foot and expanded it to incorporate

wearable sensors to locate and identify people.

Figure 7 shows the area of tracking and positions of

sensors.

Each leg of a pedestrian who enters the area was

detected and tracked with a particle filter. By

computing the expectation of the particles, we

estimated the position and velocity 25 times per

second. The tracking algorithm ran stably and

provided reliable position estimates. Figure 8 shows an

example of tracking results of legs.

Figure 9: Estimated trajectories of feet in four seconds.

There were 12 pedestrians in the period. The filled circles

represent the latest positions. Sometimes only one foot of

a pedestrian is observed because of

occlusion.

In experiments, three subjects in the environment

each carried one wearable sensor with a three-axis

accelerometer (Figure 8). In the tracking area,

there were usually about 10 pedestrians at the same

time who did not carry a wearable sensor, so the

number of legs observed at a time was more than

20 (Figure 9). We repeated the experiments eight

times in 12 minutes. For each acceleration signal

from a wearable sensor, our algorithm estimated the

trajectory of the owner. The observed acceleration

signals were sent to a host PC via Bluetooth. We

repeated the experiments four times.

Since our method locates people by comparing

time sequences, it is important to adjust the clocks of

the LRFs and wearable sensors. In the following

experiments, the wearable sensor clocks were

synchronized with the host PC when they initially

established a Bluetooth connection.

Another problem is the delay in the transmission

from the wearable sensors to the host PC. In the

following experiments, signals were sent with

timestamps added by the wearable sensors. If the

timestamp were set after the signals had been sent

(e.g., by the host PC), the results would be affected

by sudden transmission delays.
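One way to bring the sensor-timestamped accelerometer samples onto the tracker's time base is sketched below. The function name `align_to_tracker` and the `clock_offset` parameter (the offset between the sensor clock and the host clock, measured when the connection is established) are assumptions for illustration; the source does not describe how the alignment is implemented.

```python
import numpy as np


def align_to_tracker(sensor_t, sensor_values, tracker_t, clock_offset=0.0):
    """Resample accelerometer samples at the tracker's timestamps.

    sensor_t:      increasing timestamps added by the wearable sensor (seconds).
    sensor_values: accelerometer values at those timestamps.
    tracker_t:     timestamps of the leg-tracking estimates on the host clock.
    clock_offset:  assumed host-minus-sensor clock offset (seconds).
    """
    host_t = np.asarray(sensor_t, dtype=float) + clock_offset
    # Linear interpolation; values outside the sensor's time range are clamped.
    return np.interp(tracker_t, host_t, sensor_values)


sensor_t = np.arange(0.0, 1.0, 0.002)      # 500 Hz accelerometer
sensor_values = 3.0 * sensor_t             # synthetic ramp signal
tracker_t = np.arange(0.1, 0.9, 0.04)      # 25 estimates per second
aligned = align_to_tracker(sensor_t, sensor_values, tracker_t, clock_offset=0.01)
print(aligned[:3])
```

Because the timestamps come from the sensor, a delayed packet changes only when a sample arrives and not where it is placed on the time axis. In practice the 500 Hz signal would be smoothed before being resampled to the lower rate.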

4.2 Computed Correlation for each

Wearable Sensor

For each wearable device, the correlation is computed

based on Eqs. (3) and (4) between the acceleration

signal from the wearable device and the sequences

of all detected legs. The leg with the highest

correlation is considered to be the leg of the user who

carries the wearable device.

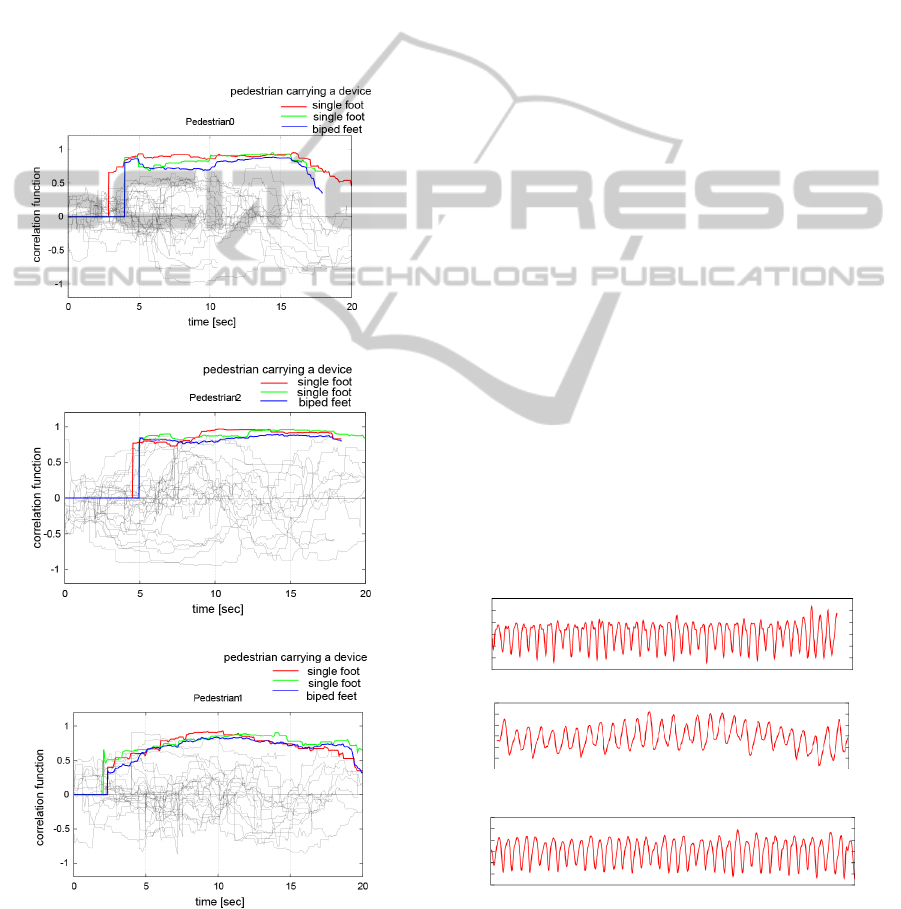

Figure 10 shows the computed correlation

function between each wearable device of each

subject and all detected legs. The solid line shows the

correlation with the user of the device, which usually

has the highest value compared with the dashed lines

that are from other pedestrians. We compute the

correlation between signals over five seconds. Until

five seconds have passed after the pedestrian appears,

the value is set to zero.

We tested with three subjects and four trials. In the 12

experiments, the pedestrian carrying the

sensor was correctly estimated almost all the time

in the sequences.

a) pedestrian 1

b) pedestrian 2

c) pedestrian 3

Figure 10: Correlation function computed for acceleration

signal between each wearable acceleration sensor and

biped feet. Colored line shows correlation function of

correct user. Number of users is three in the experiments.

5 DISCUSSION

Privacy Issues. When cameras are installed in

public spaces, the problem of invasion of privacy is

inevitably raised. Since LRFs do not observe the

face or any other information that identifies

pedestrians, this issue is irrelevant to our method.

The Effect of the Pose of Acceleration Sensor. In

these experiments, we attached wearable acceleration

sensors to the waist of pedestrians. Because we compute the

vertical component of the acceleration, the pose of

the sensor does not affect our method. However,

acceleration signals differ depending on where

the sensor is attached.

We examined the differences that may arise when

sensors are carried in different ways: in a pocket, in

the hands, or in a bag (Figure 11). The shapes of the

observed acceleration signals are not exactly the

same, but the detected peaks of acceleration are still

clear and there is no significant difference in

the correlation computation.

6 CONCLUSIONS

In this paper, to estimate both positions and IDs of

pedestrians, we propose a method that associates

precise position information using sensors in the

environment and reliable ID information using

wearable sensors. Since the tracking results of biped

feet of a pedestrian and the body oscillation of the

same pedestrian correlate, we associate the pair of signals

that maximizes the correlation

between them.

a) Acceleration signal when a sensor is in a jacket pocket

b) Acceleration signal when a sensor is carried in hands (note that

the walking speed becomes slower)

c) Acceleration signal when a sensor is in a bag

Figure 11: Examples of acceleration signals in different

carrying conditions.

Experimental results for locating people in a

shopping mall show the precision of our method.

Since LRFs are now becoming common and people

are carrying cellular phones that contain

accelerometers, we believe that our method is

realistic and can provide a fundamental means of

location services in public places.

In the future, we would like to investigate our method

when pedestrians carry cellular phones in various

ways, and when the number of cellular phones is

larger. Since we can observe much motion

information about pedestrians from accelerometers, we would

like to apply our method to understand natural

pedestrian behavior.

ACKNOWLEDGEMENTS

This work was supported by KAKENHI (22700194).

REFERENCES

Amemiya, T., Yamashita, J., Hirota, K., and Hirose, M.,

2004. Virtual leading blocks for the deaf-blind: A real-

time way-finder by verbal-nonverbal hybrid interface

and high-density RFID tag space. In Proc. IEEE

Virtual Reality Conf., pp. 165-172.

Bahl, P. and Padmanabhan, V. N., 2000. RADAR: An in-

building RF-based user location and tracking system.

Proc. of IEEE INFOCOM 2000, Vol. 2, pp. 775-784.

Bao, L. and Intille, S. S., 2004. Activity recognition from

user-annotated acceleration data. In Proc. of

PERVASIVE 2004, Vol. LNCS 3001, A. Ferscha and

F. Mattern, Eds. Springer-Verlag, pp. 1-17.

Cui, J., Zha, H., Zhao, H., and Shibasaki, R., 2007. Laser-

based detection and tracking of multiple people in

crowds. Computer Vision and Image Understanding,

Vol. 106, No. 2-3, pp. 300-312.

Cover, T. M. and Thomas, J. A., 2006. Elements of

Information Theory, Wiley-Interscience.

Foxlin, E., 2005. Pedestrian Tracking with Shoe-Mounted

Inertial Sensors. IEEE Computer Graphics and

Applications, Vol. 25, Issue 6, pp. 38 - 46.

Glas, D. F., Miyashita, T., Ishiguro, H., and Hagita, N.,

2009. Laser-Based Tracking of Human Position and

Orientation Using Parametric Shape Modeling.

Advanced Robotics, Vol. 23, No. 4, pp. 405-428.

Harter, A. et al., 1999. The anatomy of a context-aware

application. In Proc. 5th Annual ACM/IEEE Int. Conf.

on Mobile Computing and Networking (Mobicom '99),

pp. 59-68.

Hershey, J. and Movellan, J. R., 2000. Audio vision:

Using audiovisual synchrony to locate sounds. In

Advances in Neural Information Processing Systems

12. S. A. Solla, T. K. Leen and K. R. Muller (eds.), pp.

813-819. MIT Press.

Hightower, J. and Borriello, G., 2001. Location systems

for ubiquitous computing. Computer, Vol. 34, No. 8,

pp. 57-66.

Hu, W., Tan, T., Wang, L., and Maybank, S., 2004. A

survey on visual surveillance of object motion and

behaviors. IEEE Trans. on Systems, Man and

Cybernetics, Part C, Vol. 34, No. 3, pp.334-352.

Ikeda, T., Ishiguro, H., Glas, D. F., Shiomi, M., Miyashita,

T., and Hagita, N., 2010. Person Identification by

Integrating Wearable Sensors and Tracking Results

from Environmental Sensors. In Proc. IEEE Int. Conf.

on Robotics and Automation (ICRA 2010), pp. 2637-

2642.

Kanda, T., Glas, D. F., Shiomi, M., Ishiguro, H., and

Hagita, N., 2008. Abstracting People's Trajectories for

Social Robots to Proactively Approach Customers,

IEEE Trans. on Robotics, Vol. 25, No. 6, pp. 1382-

1396.

Kourogi, M., Sakata, N., Okuma, T., and Kurata, T., 2006.

Indoor/Outdoor Pedestrian Navigation with an

Embedded GPS/RFID/Self-contained Sensor System.

In Proc. 16th Int. Conf. on Artificial Reality and

Telexistence (ICAT2006), pp. 1310-1321.

Mizugaki, K. et al., 2007. Accurate Wireless

Location/Communication System With 22-cm Error

Using UWB-IR. IEEE Radio & Wireless Symposium,

pp. 455-458.

Mori, T., Suemasu, Y., Noguchi, H., and Sato, T., 2004.

Multiple people tracking by integrating distributed

floor pressure sensors and RFID system, In Proc. of

IEEE Int. Conf. on Systems, Man and Cybernetics,

Vol. 6, pp. 5271-5278.

Ni, L. M. et al., 2003. LANDMARC: indoor location

sensing using active RFID, Proc. of the First IEEE Int.

Conf. on Pervasive Computing and Communications

(PerCom 2003), pp. 407-415.

Schulz, D., Fox, D., and Hightower, J., 2003. People

tracking with anonymous and id-sensors using rao-

blackwellised particle filters. In Proc. 18th Int. Joint

Conf. on Artificial Intelligence (IJCAI'03), pp. 921-

928.

Want, R., Hopper, A., Falcao, V., and Gibbons, J., 1992.

The active badge location system. ACM Trans. Inf.

Syst., Vol. 10, No. 1, pp. 91-102.

Woodman, O. J., 2007. An introduction to inertial

navigation. Technical Report, UCAM-CL-TR-696,

Univ. of Cambridge.

Woodman, O. and Harle, R., 2008. Pedestrian localisation

for indoor environments. In Proc. 10th Int. Conf. on

Ubiquitous Computing (UbiComp '08), pp. 114-123.

Zhao, H., and Shibasaki, R., 2005. A Novel System for

Tracking Pedestrians using Multiple Single-row Laser

Range Scanners. IEEE Trans. SMC Part A: Systems

and Humans, 35-2, 283-291.
