OPTICALLY WRITTEN WATERMARKING TECHNOLOGY

USING ONE DIMENSIONAL HIGH FREQUENCY PATTERN

Kazutake Uehira and Mizuho Komori

Kanagawa Institute of Technology, 1030 Shimo-ogino, Atsugi-shi, Kanagawa, Japan

Keywords: Optical Watermarking, Portrait Rights, Invisible Pattern.

Abstract: We propose a new optically written watermarking technology that uses a one dimensional high frequency pattern to protect the portrait rights of 3-D shaped real objects by preventing the use of images captured illegally with cameras. We conducted experiments using a manikin’s face as a real 3-D object, assuming that this technology would be applied to human faces in the future. We utilized the phase difference between two color component patterns, i.e., binary information was expressed by whether the phases of the two high frequency patterns were the same or opposite. The experimental results demonstrated that this technique was robust against deformation of the pattern caused by the curved surface of the 3-D shaped object, and that 100% accuracy in reading out the embedded data was possible by optimizing the conditions under which the data were embedded. We therefore confirmed that the proposed technique is feasible.

1 INTRODUCTION

Digital watermarking techniques have recently become more important because an increasing amount of digital-image content is being distributed over the Internet. Various approaches to concealing watermarking in images have been developed ((I. J. Cox et al., 1997), (M. D. Swanson et al., 1998), (M. Hartung et al., 1999)). However, conventional digital watermarking techniques rest on the premise that people who want to protect the copyright of their content have the original digital data and can embed watermarking by digital processing. There are some cases where this premise does not apply. One such case arises for images that have been produced illegally by people taking photographs of real objects that have high value as portraits, e.g., artworks at museums that have been painted by famous artists or the faces of celebrities on a stage. Images produced by malicious people capturing these real objects with digital cameras or other image-input devices have been vulnerable to illegal use since they have not contained digital watermarking.

We have proposed a technology that can prevent the images of objects from being used in such cases ((K. Uehira et al., 2008), (Y. Ishikawa et al., 2010)). It uses illumination that contains invisible watermarking information. As the illumination contains the watermarking, a photograph of an object lit by this illumination also contains the watermarking. In a previous study, we treated flat objects, assuming that famous paintings were being illegally copied, and used 2-dimensional high frequency patterns as watermarking patterns. We demonstrated that this technique effectively embedded the watermarking in the captured image.

However, if we use this technique for 3-D shaped objects that have curved surfaces, the embedded watermarking information cannot be read out correctly because the patterns projected onto the surface of the objects are deformed. We previously tried to correct deformation in the projected watermarking pattern using two dimensional grid patterns (Y. Ishikawa et al., 2011). We found that this technique made it possible to correct deformation and accurately read out watermarking information. However, it requires a grid pattern to be projected in addition to the watermarking pattern, and therefore it cannot be applied to moving objects.

This paper proposes a new technique that does not need the patterns to be corrected. It uses one dimensional high frequency patterns as watermarking patterns. A one dimensional pattern is expected to be robust to deformation of the projected pattern because it is simpler than a two-dimensional pattern. We took a human face as the real object in this study, assuming that its portrait rights are to be protected. This paper also presents the results of experiments in which we evaluated the accuracy with which the watermarking could be read out with our new technique.

Uehira K. and Komori M.
OPTICALLY WRITTEN WATERMARKING TECHNOLOGY USING ONE DIMENSIONAL HIGH FREQUENCY PATTERN.
DOI: 10.5220/0003806700750078
In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 75-78
ISBN: 978-989-8565-04-4
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

2 OPTICAL WATERMARKING

AND PROPOSAL FOR 1-D

OPTICAL WATERMARKING

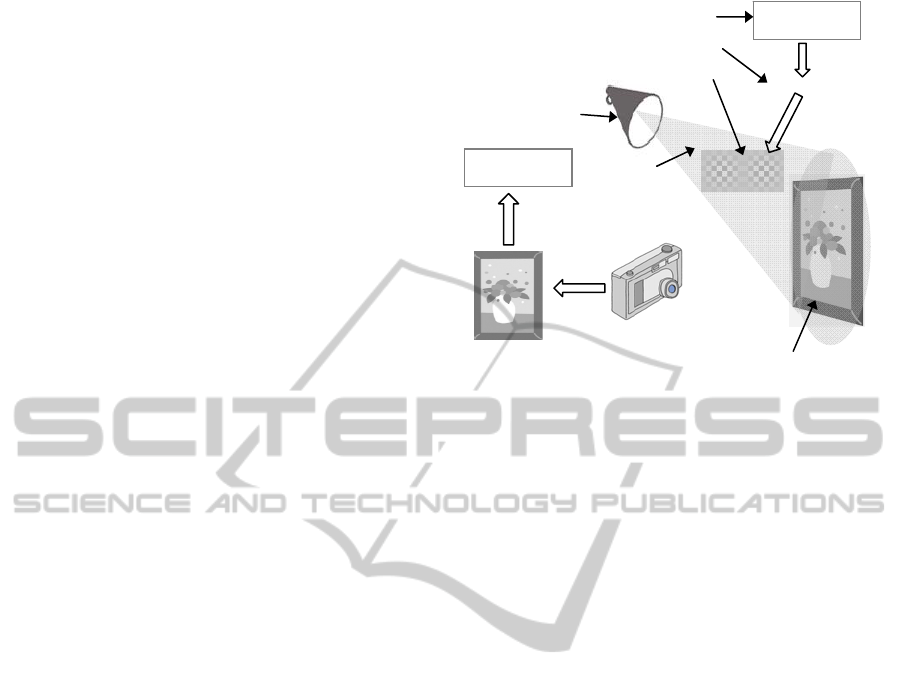

Figure 1 outlines the basic concept underlying our

technology of watermarking that uses light to embed

information. An object is illuminated by light that

contains invisible information on watermarking. As

the illumination itself contains the watermarking

information, the image of a photograph of an object

that is illuminated by such illumination also contains

watermarking. By digitizing this photographic image

of the real object, the watermarking information in

binary data can be extracted in the same way as with

the conventional watermarking technique. To be

more precise, information to be embedded is first

transformed into binary data, “1” or “0”, and it is

then transformed into a pattern that differs

depending on whether it is “1” or “0”. This pattern is

transformed into an optical pattern and projected

onto a real object. It is this difference in the pattern

that is read out from the captured image.

The light source used in this technology projects the watermarking pattern in the same way as a projector. Since the projected pattern has to be imperceptible to the human visual system, the brightness distribution given by this light source looks uniform to the observer over the object, just as with conventional illumination. The brightness of the object’s surface is proportional to the product of the reflectance of the surface and the illuminance of the incident light. Therefore, when a photograph of this object is taken, the image on the photograph contains watermarking information, even though this cannot be seen.
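The multiplicative relation above can be illustrated with a minimal sketch. It assumes a hypothetical, smoothly varying reflectance and an illumination made of a constant level plus a small alternating modulation; the numeric values and variable names are illustrative and are not taken from the experiments.

```python
import numpy as np

rows = np.arange(16)
# Highest-frequency pattern along y: +1, -1, +1, -1, ...
carrier = np.where(rows % 2 == 0, 1.0, -1.0)

# Hypothetical smooth surface reflectance (assumption, not measured data).
reflectance = np.linspace(0.4, 0.6, rows.size)

# Illumination = constant level plus a small modulation (illustrative values).
illum_in_phase = 200.0 + 40.0 * carrier
illum_inverted = 200.0 - 40.0 * carrier

# Surface brightness is proportional to reflectance times illumination.
captured_in_phase = reflectance * illum_in_phase
captured_inverted = reflectance * illum_inverted

# Correlating the captured brightness with the carrier recovers the sign
# of the projected modulation even though the reflectance is not uniform.
response_in_phase = np.sum(carrier * captured_in_phase)
response_inverted = np.sum(carrier * captured_inverted)
```

With a smooth reflectance, the sign of the correlation follows the sign of the projected modulation, which is why the captured photograph still carries the embedded information.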

The main feature of the technology we propose is

that the watermarking can be added by light.

Therefore, this technology can be applied to objects

that cannot be electronically embedded with

watermarking, such as pictures painted by artists.

In our previous study, we used a two-dimensional inverse Discrete Cosine Transform (iDCT) to produce the watermarking pattern. The illumination area was divided into numerous blocks, each of which had 8 x 8 pixels. We expressed 1-bit binary data as “1” or “0” by using the sign of the high-frequency component. This method, however, is not robust to deformation of the projected pattern when it is projected onto a curved surface.

Figure 1: Basic concept underlying technology of watermarking that uses light to embed data.

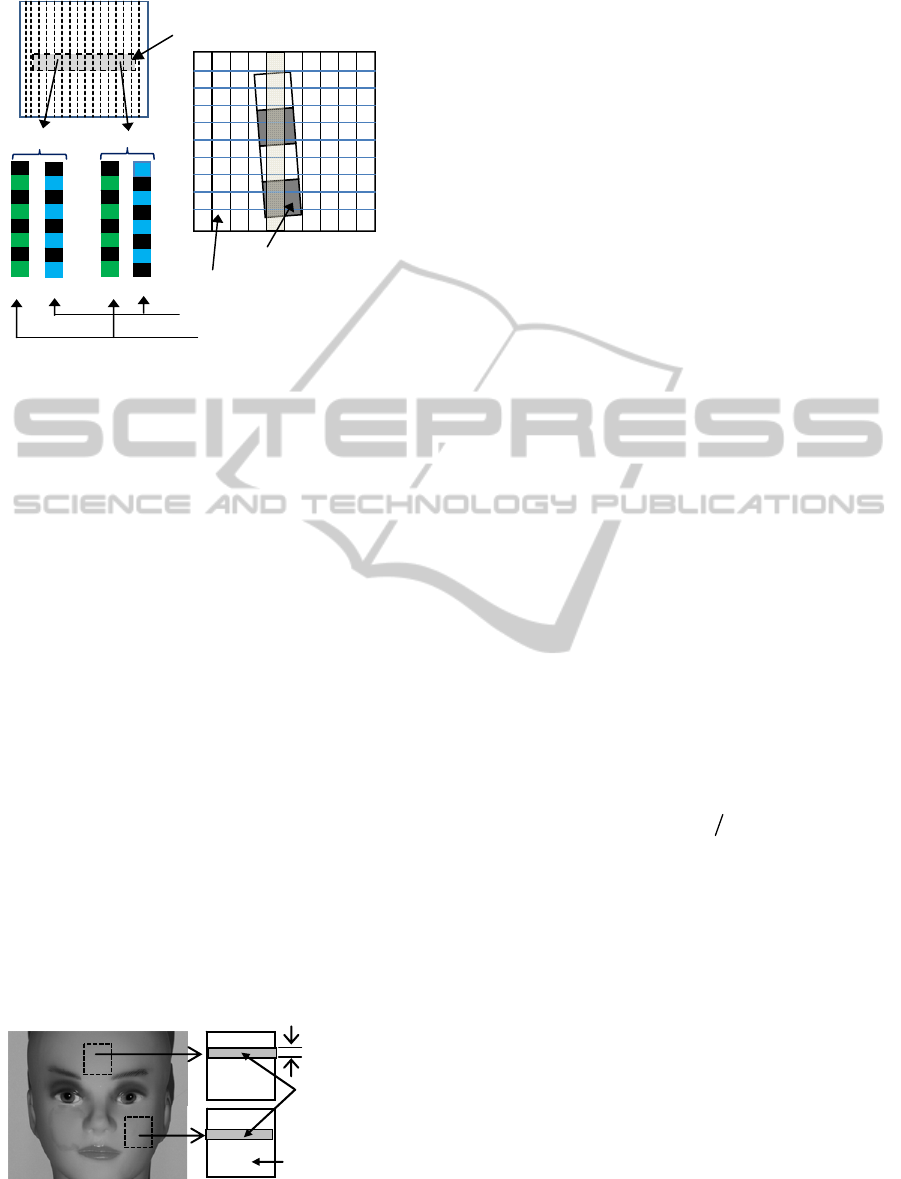

In contrast, we propose the one dimensional high-frequency pattern shown in Fig. 2, where each vertical pixel line carries 1-bit binary information of “1” or “0”. We use two color components to express “0” or “1”, i.e., if the phases of the high-frequency patterns of the two color components are the same, the binary information is “1”, and if not, it is “0”. With this pattern, even if we cut out only a small area of the pattern shown in Fig. 2, we can determine whether the binary information is “1” or “0”, because we just need to check whether the phases of the patterns of the two color components are the same in the line.

We assumed that, for a small area, displacement of the projected pattern caused by the curvature of the object surface would not be very large. Because we captured the object image at twice the resolution of the projected pattern (as will be explained later), the phase calculated from a few pixel lines is not affected by deformation such as that seen at the right of Fig. 2 to the extent that the phase of the pattern changes substantially. This technique is expected to be robust to deformation of the projected pattern, since we can use an arbitrarily small area in the y- (or x-) direction, whereas for a two dimensional pattern the area in which one bit of binary data is embedded is fixed and the effect of deformation is significant.

Figure 1 labels: information to be embedded (ID:0876a), binary data (11000110), invisible pattern, light source, light, real object, digital camera, captured image, read out by image processing (ID:0876a).


Figure 2: One dimensional optical watermarking pattern.

3 EXPERIMENTS

We carried out experiments to demonstrate the feasibility of the technology we propose in this study. We made test patterns in which each vertical pixel line was assigned one bit of information, as shown in Fig. 2. We used a color image in which the G-color component carried the signal pattern and the B-color component carried the reference pattern. We set the average brightness (DC) to 200 and varied the highest-frequency component (HC) as an experimental parameter. These values were on an 8-bit gray scale whose maximum was 255.
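A test pattern of this kind could be generated along the following lines. This is a minimal sketch under stated assumptions: HC is treated as the amplitude of a carrier that alternates every pixel in the y-direction, the R component is left at the DC level, and the function name `make_pattern` and its parameters are hypothetical. The paper does not specify these details.

```python
import numpy as np

def make_pattern(bits, height, dc=200, hc=40):
    """Return a (height, len(bits), 3) uint8 RGB pattern, one bit per vertical pixel line."""
    bits = np.asarray(bits, dtype=int)
    rows = np.arange(height)
    # Highest-frequency carrier along y: +1, -1, +1, -1, ... (assumed form).
    carrier = np.where(rows % 2 == 0, 1, -1)[:, None]            # shape (height, 1)
    # Reference pattern (B): the same carrier in every column.
    blue = dc + hc * carrier * np.ones((1, bits.size), dtype=int)
    # Signal pattern (G): in phase for "1", inverted (180 degrees) for "0".
    sign = np.where(bits == 1, 1, -1)[None, :]                   # shape (1, width)
    green = dc + hc * carrier * sign
    # R is not used for data in this sketch and is held at DC (assumption).
    red = np.full_like(green, dc)
    img = np.stack([red, green, blue], axis=-1)
    return np.clip(img, 0, 255).astype(np.uint8)

# Example bit string taken from the label in Figure 1.
pattern = make_pattern([1, 1, 0, 0, 0, 1, 1, 0], height=8)
```

Because the G and B components have the same mean level, a column holding “1” and a column holding “0” differ only in the relative phase of the two components.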

We used a digital light processing (DLP)

projector that had 1024 x 768 pixels for each color

to project the watermarking image. We used the face

of a manikin as a real 3-D object. Figure 3 is a

photograph where a watermarking image has been

projected onto the manikin’s face. The whole

projected area was 80 x 60 cm; therefore, the

manikin’s face was part of the whole projected area.

We confirmed that high frequency patterns projected

onto the manikin’s face were imperceptible when

they were viewed at a distance of 2 m.

Figure 3: Manikin’s face used as 3-D real object in

experiment. Parts of areas in each rectangular area were

cut out to read out embedded binary data.

The projected patterns were captured with a digital camera that had 3906 x 2602 pixels. The captured image had more than twice the pixel density of the projected image, because the sampling theorem requires more than twice as many pixels to restore the original high frequency patterns in the projected image. After capture, we resampled the image by digital processing so that this ratio was exactly two. The high frequency component for pixel line x of the captured image was calculated with Eqs. 1 and 2:

$$HC_1[x] = \sum_{i}^{N} H_1[i]\, I[i][x] \qquad (1)$$

and

$$HC_2[x] = \sum_{i}^{N} H_2[i]\, I[i][x] \qquad (2)$$

where I[i][x] indicates image data at pixel [i][x], and H1[i] and H2[i] are the following weighting sequences:

H1[i] = 1 (if the remainder of i divided by four is 0 or 1), -1 (if the remainder of i divided by four is 2 or 3)

H2[i] = 1 (if the remainder of i divided by four is 1 or 2), -1 (if the remainder of i divided by four is 0 or 3)

The summations in Eqs. 1 and 2 were done over N pixels in the y-direction. We chose 8, 12, 16, 32, and 60 as N. Since the captured image had twice as many pixels as the projected image, the frequency component we obtained was at half the highest frequency of the captured image. We calculated two frequency components, HC1[x] and HC2[x], which had the same frequency but whose phases differed by 90 degrees, using H1[i] and H2[i], to obtain the phase of the pattern in the captured image with Eq. 3.

$$\theta[x] = \arctan\left(\frac{HC_2[x]}{HC_1[x]}\right) \qquad (3)$$

We obtained θ[x] for the signal pattern and the

reference pattern. We determined the binary data at

x to be “1” if the absolute value of the difference

between the phases of the two patterns was less than

90 degrees and we determined it to be “0” if it was

over 90 degrees. This was because we set the phase

of the original signal and reference pattern to be the

same when we assigned the “1” of binary data and

we set the difference between two patterns to be 180

degrees when we assigned the “0” of binary data, as

can be seen in Fig. 2.
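The read-out procedure of Eqs. 1 to 3 and the 90-degree decision rule can be sketched as follows. This is an illustrative sketch, not the authors' implementation: the function names are hypothetical, the index i is assumed to start at 0, and a two-argument arctangent is used in place of the plain arctangent of Eq. 3 so that phases of 0 and 180 degrees remain distinguishable. The synthetic input assumes an ideal capture at exactly twice the projected resolution.

```python
import numpy as np

def phase_deg(column):
    """Phase in degrees of the period-4 component of one captured pixel line."""
    i = np.arange(column.size)
    r = i % 4
    h1 = np.where(r < 2, 1.0, -1.0)                    # H1[i]: +1 for remainder 0 or 1
    h2 = np.where((r == 1) | (r == 2), 1.0, -1.0)      # H2[i]: +1 for remainder 1 or 2
    hc1 = np.sum(h1 * column)                          # Eq. 1
    hc2 = np.sum(h2 * column)                          # Eq. 2
    # Quadrant-aware arctangent (assumption; Eq. 3 is written with arctan).
    return np.degrees(np.arctan2(hc2, hc1))

def read_bits(green, blue):
    """Decide one bit per column from the G (signal) and B (reference) phases."""
    bits = []
    for x in range(green.shape[1]):
        d = phase_deg(green[:, x]) - phase_deg(blue[:, x])
        d = abs((d + 180.0) % 360.0 - 180.0)           # wrap difference to [0, 180]
        bits.append(1 if d < 90.0 else 0)
    return bits

# Synthetic capture: each projected pixel covers two captured pixels, N = 16.
carrier = np.tile([1.0, 1.0, -1.0, -1.0], 4)[:, None]
sent = np.array([1, 1, 0, 0, 0, 1, 1, 0])
blue = 200.0 + 40.0 * carrier * np.ones((1, sent.size))
green = 200.0 + 40.0 * carrier * np.where(sent == 1, 1.0, -1.0)[None, :]
decoded = read_bits(green, blue)

# A one-pixel shift along y applied to both components leaves the decision unchanged.
decoded_shifted = read_bits(np.roll(green, 1, axis=0), np.roll(blue, 1, axis=0))
```

The shifted case hints at why the method tolerates deformation: a displacement moves the phase of both color components by the same amount, so their difference is preserved.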

We cut out the two areas of the image on the

manikin’s face shown in Fig. 3. One was an image

on the forehead that was relatively flat and the other

was an image on the cheek that was largely curved.

Figure 2 labels: projected pattern with signal pattern (G) and reference pattern (B) for “1” and “0”; captured image with the area cut out over N pixels. Figure 3 labels: 100 x 100 pixel areas used to read out embedded data.


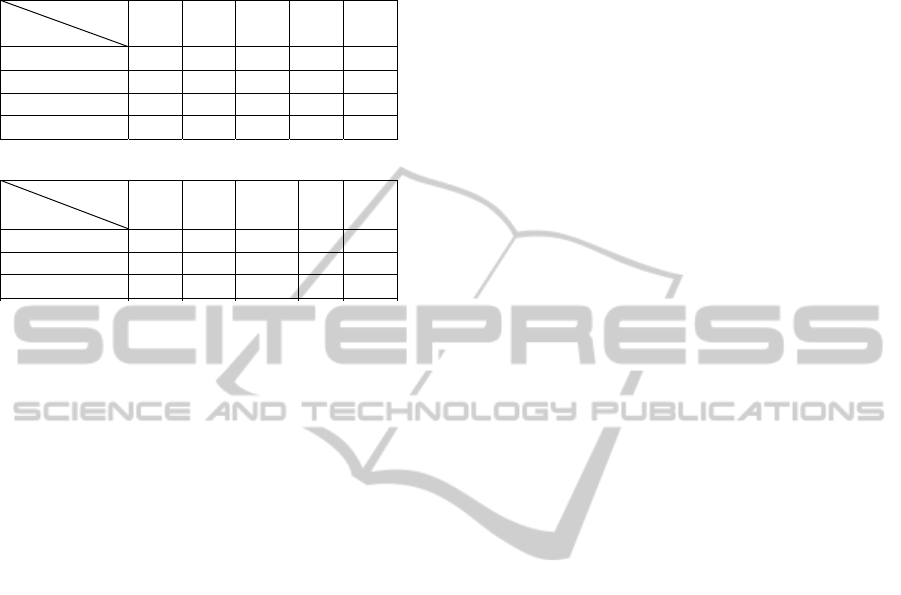

Table 1: Accuracy with which embedded data were read

out. N indicates number of pixels.

4 RESULTS AND DISCUSSION

Table 1 lists the experiment results for the accuracy

with which the embedded data were read out. The

accuracy is indicated by the percentage of data that

were read out correctly from the entire amount of

data. We established four main findings from Table

1: 1) Accuracy was very high for small numbers of

N under 16 not only for the forehead area but also

for the cheek area where the surface was largely

curved, 2) it was highest at N=16, 3) it was poor for

a large number of N over 32 for both areas, and 4)

accuracy was poor for an HC of 20.

Accuracy was excellent for small N and poor for large N because the results of the calculations were strongly affected by deformation due to the object surface when the area used for the calculations was elongated. As N decreased, on the other hand, the summations of the frequency components in Eqs. 1 and 2 decreased, which lowered accuracy. We consider this to be the reason accuracy peaked at N=16. However, the results revealed that accuracy for very small N under 12 was still very high. This was because we chose a human face as the 3-D shaped object, which has uniform characteristics with regard to its image signal. As the face itself did not have a high-frequency component, the values were obtained almost entirely from the projected pattern.

Result 4) was what we had expected because of the small frequency component.

As we can see from Table 1, a high degree of

accuracy of 100% in reading out the embedded data

is possible by optimizing the conditions for reading

data. Therefore, we could confirm the feasibility of

the proposed technique. Moreover, since we can use

an error correction technique in practice, over 90%

accuracy is sufficient for practical use.
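As a rough illustration of how error correction could raise the effective accuracy, the sketch below computes the accuracy of a simple repetition code with majority voting. The choice of a repetition code and the assumption that read-out errors are independent are ours for illustration only; the paper does not state which error correction technique would be used, and the function name is hypothetical.

```python
from math import comb

def majority_vote_accuracy(p, n):
    """Probability that the majority of n independent copies (n odd) is read correctly,
    given per-bit read-out accuracy p."""
    need = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(need, n + 1))

acc3 = majority_vote_accuracy(0.90, 3)   # each bit embedded three times
acc5 = majority_vote_accuracy(0.90, 5)   # each bit embedded five times
```

Under these assumptions, a per-bit accuracy of 90% rises to about 97% with three copies and to about 99% with five, at the cost of a proportionally lower payload.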

5 CONCLUSIONS

We proposed one dimensional optical watermarking to protect the portrait rights of 3-D shaped real objects, and we conducted an experiment using a manikin’s face as a real 3-D object, assuming that this technology would be applied to human faces in the future. We used a phase difference method in which two of the R, G, and B color components were used and binary information was expressed by whether the phases of their high frequency patterns were the same or opposite. The experimental results demonstrated that this technique was robust to deformation of the pattern due to the curved surface of the 3-D shaped object, and that 100% accuracy in reading out the embedded data was possible by optimizing the conditions for reading data. We therefore confirmed the feasibility of the proposed technique.

ACKNOWLEDGEMENTS

This work was supported by JSPS KAKENHI (No. 23650055).

REFERENCES

I. J. Cox, J. Kilian, F. T. Leighton, and T. Shamoon, 1997. Secure spread spectrum watermarking for multimedia, IEEE Trans. Image Process., 6(12):1673–1687.

M. D. Swanson, M. Kobayashi, and A. H. Tewfik, 1998. Multimedia data-embedding and watermarking technologies, Proc. IEEE, 86(6):1064–1087.

M. Hartung and M. Kutter, 1999. Multimedia watermarking techniques, Proc. IEEE, 87(7):1079–1107.

K. Uehira and M. Suzuki, 2008. Digital Watermarking Technique Using Brightness-Modulated Light, Proc. IEEE 2008 International Conference on Multimedia and Expo, pages 257–260.

Y. Ishikawa, K. Uehira, and K. Yanaka, 2010. Practical evaluation of illumination watermarking technique using orthogonal transforms, IEEE/OSA J. Display Technology, 6(9):351–358.

Y. Ishikawa, K. Uehira, and K. Yanaka, 2011. Optical watermarking technique robust to geometrical distortion in image, Proc. IEEE Int. Symp. on Signal Processing and Information Technology.

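(a) Forehead (%).

| HC \ N | 8 | 12 | 16 | 32 | 60 |
|---|---|---|---|---|---|
| 20 | 95.0 | 95.0 | 100.0 | 97.5 | 92.5 |
| 30 | 100.0 | 100.0 | 100.0 | 90.0 | 55.0 |
| 40 | 97.5 | 100.0 | 100.0 | 82.5 | 42.5 |

(b) Cheek (%).

| HC \ N | 8 | 12 | 16 | 32 | 60 |
|---|---|---|---|---|---|
| 20 | 90.0 | 97.5 | 95.0 | 82.5 | 85.0 |
| 30 | 95.0 | 95.0 | 95.0 | 85.0 | 87.5 |
| 40 | 100.0 | 100.0 | 100.0 | 97.5 | 90.0 |
| 50 | 97.5 | 97.5 | 100.0 | 87.5 | 60.0 |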
