SEMI-SUPERVISED DIMENSIONALITY REDUCTION USING PAIRWISE EQUIVALENCE CONSTRAINTS

Hakan Cevikalp, Jakob Verbeek, Frédéric Jurie and Alexander Kläser

Inria Rhone-Alpes, Montbonnot, France

Keywords:

Constrained clustering, dimensionality reduction, image segmentation, metric learning, pairwise constraints, semi-supervised learning, spectral clustering.

Abstract:

To deal with the problem of insufficient labeled data, side information, given in the form of pairwise equivalence constraints between points, is often used to discover groups within data. However, existing methods that use side information typically fail on high-dimensional data. In this paper, we address the problem of learning from side information for high-dimensional data. To this end, we propose a semi-supervised dimensionality reduction scheme that incorporates pairwise equivalence constraints to find a better embedding space, which improves the performance of the subsequent clustering and classification phases. Our method builds on the assumption that points in a sufficiently small neighborhood tend to have the same label. Equivalence constraints are employed to modify the neighborhoods and to increase the separability of different classes. Experimental results on high-dimensional image data sets show that integrating side information into the dimensionality reduction improves the clustering and classification performance.

1 INTRODUCTION

Supervised learning techniques use training data in which class labels are associated with the data samples. In many applications labeled data are scarce, since obtaining labels is typically costly and often requires human effort. On the other hand, in some applications side information, given in the form of pairwise equivalence constraints between points, is available at little or no extra cost. For instance, faces extracted from successive video frames at roughly the same location can be assumed to represent the same person, whereas faces extracted at different locations in the same frame can be assumed to belong to different persons. Side information may also come from human feedback, often at a substantially lower cost than explicitly labeled data.

Existing learning methods that use side information to discover groups within data typically fall into one of two categories. The first category contains semi-supervised clustering methods, which integrate equivalence constraints into the clustering process by modifying the objective function so that the constraints are satisfied during clustering. In (Wagstaff and Rogers, 2001; Basu et al., 2004), side information is integrated into the k-means clustering algorithm. Similarly, (Shental et al., 2003) and (Hertz et al., 2003) use equivalence constraints within the EM algorithm to estimate Gaussian mixture models. Methods in the second category revise the distance metric by warping the input space so that the constraints are satisfied, and then perform clustering using the learned distance metric. In (Xing et al., 2003), a full-rank Mahalanobis distance metric is learned from side information through convex programming; the metric is learned via an iterative procedure that involves a projection and an eigendecomposition in each step. (Tsang and Kwok, 2003) formulate a full-rank metric learning problem that uses side information in a quadratic optimization scheme and, using the kernel trick, extend the method to the nonlinear case. In addition to these methods, a unified constrained-clustering and metric learning approach is proposed in (Bilenko et al., 2004).

Although the above approaches incorporate side information and yield satisfactory results in low-dimensional spaces, they typically fail in high-dimensional spaces. This is because most dimensions in high-dimensional spaces do not carry information about the class labels, and these uninformative dimensions are likely to degrade the clustering performance. Furthermore, learning an effective full-rank distance metric from constraints in high-dimensional spaces is impracticable since (a) the number of parameters to be estimated grows quadratically with the dimensionality, and (b) typically insufficient side information is available to obtain accurate estimates. A typical solution to this problem is to reduce the dimensionality and to modify the distance metric in the reduced space, as in (Yan and Domeniconi, 2006). However, important information may be lost during a completely unsupervised dimensionality reduction (one that does not use the side information), which may degrade the subsequent metric learning.

Cevikalp H., Verbeek J., Jurie F. and Kläser A. (2008).
SEMI-SUPERVISED DIMENSIONALITY REDUCTION USING PAIRWISE EQUIVALENCE CONSTRAINTS.
In Proceedings of the Third International Conference on Computer Vision Theory and Applications, pages 489-496.
DOI: 10.5220/0001070304890496
Copyright © SciTePress

In this paper we propose a semi-supervised dimensionality reduction scheme which uses side information in the form of pairwise equivalence constraints to improve clustering and classification performance. The remainder of the paper is organized as follows. In Section 2 we present our approach, Section 3 describes the data sets and experiments, and we conclude in Section 4.

2 SEMI-SUPERVISED DIMENSIONALITY REDUCTION

2.1 Problem Setting

Let $X = [x_1\, x_2 \dots x_n]$ be a matrix whose columns contain $d$-dimensional samples. We are given a set of equivalence constraints in the form of similar and dissimilar pairs. Let $S$ be the set of similar pairs

$$S = \{(x_i, x_j) \mid x_i \text{ and } x_j \text{ belong to the same class}\}$$

and let $D$ be the set of dissimilar pairs

$$D = \{(x_i, x_j) \mid x_i \text{ and } x_j \text{ belong to different classes}\}.$$

Assuming the constraints are consistent, the constraint sets can be augmented using the transitivity and entailment properties, as in (Basu et al., 2004). Our goal is to find a lower-dimensional embedding space in which the equivalence constraints are satisfied.
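One way to carry out this augmentation is sketched below in Python, assuming the constraints are given as pairs of sample indices. Similar pairs are closed under transitivity with a union-find structure, and each dissimilar pair is propagated to every pair across the two connected components it links (entailment). The function name `augment_constraints` is our own, not from the paper.

```python
from itertools import combinations


def augment_constraints(n, similar, dissimilar):
    """Close index-pair constraints under transitivity and entailment.

    n: number of points; similar, dissimilar: iterables of index pairs (i, j).
    Returns (S, D) as sets of sorted index pairs.
    """
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Transitivity: similar pairs merge their points into one component.
    for i, j in similar:
        parent[find(i)] = find(j)

    components = {}
    for i in range(n):
        components.setdefault(find(i), []).append(i)

    # Every pair inside a component is similar.
    S = set()
    for members in components.values():
        S.update(combinations(sorted(members), 2))

    # Entailment: one dissimilar pair makes all cross-component pairs dissimilar.
    D = set()
    for i, j in dissimilar:
        ri, rj = find(i), find(j)
        if ri == rj:
            raise ValueError(f"inconsistent constraints for pair {(i, j)}")
        for a in components[ri]:
            for b in components[rj]:
                D.add((min(a, b), max(a, b)))
    return S, D
```

For example, with `similar = [(0, 1), (1, 2)]` and `dissimilar = [(2, 3)]`, the pair (0, 2) becomes similar by transitivity, and (0, 3) and (1, 3) become dissimilar by entailment. Inconsistent input (a dissimilar pair inside one similar component) is rejected rather than silently repaired.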

Linear dimensionality reduction, which maps vectors $x$ to lower-dimensional vectors $y = A^\top x$, can be seen as learning a distance metric, since the Euclidean distance between two points $y_1$ and $y_2$ in the reduced space can be written as

$$d(y_1, y_2) = \sqrt{(x_1 - x_2)^\top A A^\top (x_1 - x_2)}. \qquad (1)$$
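As a quick numerical check of Eq. (1), the NumPy sketch below compares the Euclidean distance after projection with the metric form that uses $M = AA^\top$. The data and the projection matrix are random and purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m = 50, 5                      # input and reduced dimensionality (illustrative)
A = rng.standard_normal((d, m))   # columns are projection directions
x1, x2 = rng.standard_normal(d), rng.standard_normal(d)

# Distance computed in the reduced space, y = A^T x.
dist_reduced = np.linalg.norm(A.T @ x1 - A.T @ x2)

# The same distance written as a metric on the input space with M = A A^T.
diff = x1 - x2
M = A @ A.T
dist_metric = np.sqrt(diff @ M @ diff)

print(np.isclose(dist_reduced, dist_metric))  # True
```

The induced matrix $M$ is positive semi-definite with rank at most $m$, and it is parameterized by only $d \times m$ numbers rather than the roughly $d^2$ entries of a full-rank metric, which is the reason the reduced-space view is attractive in high dimensions.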

In this paper, our aim is to use the equivalence constraints to guide the embedding process. To accomplish this, we use the Locality Preserving Projections (LPP) method (He and Niyogi, 2003) and modify its objective function to satisfy the equivalence constraints. Since our proposed method is based on LPP, we first recall the main idea of that method.

2.2 Locality Preserving Projections

The LPP method searches for an embedding space in which the similarity among local neighborhoods is preserved. First, an adjacency graph with $n$ nodes is constructed. An edge between nodes $i$ and $j$ is created based on neighborhoods (e.g., using the $k$ nearest neighbors). Then, each edge is weighted according to a similarity function. The weights $W_{ij}$ lie in the range $[0, 1]$ and take higher values for closer samples. The goal of LPP is to find the minimizer $a^*$ of the loss function

$$E(a) = \frac{1}{2} \sum_{i,j} (y_i - y_j)^2 \, W_{ij}, \qquad (2)$$

where $a$ is the transformation vector, $y_i = a^\top x_i$ is the one-dimensional representation of $x_i$, and $W_{ij}$ is the weight between the vectors $x_i$ and $x_j$. This loss function assigns a high penalty to mapping neighboring points $x_i$ and $x_j$ far apart. The loss function can be written in a more compact form as

$$E(a) = a^\top X (G - W) X^\top a = a^\top X L X^\top a, \qquad (3)$$

where $W$ is the matrix of weights and $G$ is a diagonal matrix whose entries are the column (or, since $W$ is symmetric, equivalently the row) sums of $W$. The matrix $L = G - W$ is called the Laplacian matrix. An additional constraint, $a^\top X G X^\top a = 1$, is included to normalize the projected data through $G$. The columns of the final transformation matrix $A$ are the eigenvectors corresponding to the smallest eigenvalues of the generalized eigenvalue problem

$$X L X^\top a = \lambda X G X^\top a. \qquad (4)$$
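The LPP procedure can be sketched in Python with NumPy and SciPy as follows. This is an illustration, not the authors' code: the symmetric $k$-nearest-neighbor rule and the heat-kernel weights follow the graph construction described in Section 2.3, while the small ridge term `reg` added to $XGX^\top$ is our own assumption, meant to keep the right-hand matrix positive definite when $d > n$. `scipy.linalg.eigh` returns eigenvalues in ascending order, so the leading columns are the minimum-eigenvalue solutions of Eq. (4).

```python
import numpy as np
from scipy.linalg import eigh


def heat_kernel_knn_graph(X, k=5, t=1.0):
    """Symmetric kNN graph with heat-kernel weights; X holds samples as columns."""
    sq = np.sum(X**2, axis=0)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2 * X.T @ X, 0.0)  # squared distances
    n = D2.shape[0]
    W = np.zeros((n, n))
    # Column 0 of the sorted order is the point itself (assumes no duplicate samples).
    nearest = np.argsort(D2, axis=1)[:, 1:k + 1]
    for i in range(n):
        W[i, nearest[i]] = np.exp(-D2[i, nearest[i]] / t)
    # Connect i and j if either one is among the other's k nearest neighbors.
    return np.maximum(W, W.T)


def lpp(X, W, dim, reg=1e-6):
    """Minimum-eigenvalue solutions of X L X^T a = lambda X G X^T a (Eq. (4))."""
    G = np.diag(W.sum(axis=1))
    L = G - W                                      # graph Laplacian
    lhs = X @ L @ X.T
    rhs = X @ G @ X.T + reg * np.eye(X.shape[0])   # ridge term: our assumption
    eigvals, eigvecs = eigh(lhs, rhs)              # eigenvalues in ascending order
    return eigvecs[:, :dim], eigvals[:dim]
```

The embedding of a sample $x$ is then `A.T @ x`, where `A` is the first return value of `lpp`.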

LPP has close ties with spectral clustering methods. Therefore, the LPP scheme can also be described through random walks, as has been shown for spectral clustering in (Melia and Shi, 2001). A random walk on a graph is a stochastic process that randomly jumps from vertex to vertex. When clustering is performed in the embedded space, the algorithm splits the data into clusters such that a random walk stays long within the same cluster and only rarely jumps between clusters. The probability of jumping in one step from vertex $i$ to vertex $j$ is proportional to the edge weight $W_{ij}$. When side information is available, the weights of the adjacency matrix can be adjusted to reflect the equivalence constraints so as to find a better embedding. This is the main idea from which we develop our dimensionality reduction method below.
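To make the random-walk view concrete, the NumPy sketch below forms the one-step transition matrix $P = G^{-1}W$, whose rows sum to one, and measures how much probability mass stays inside each of two groups. The toy weights and the helper names are illustrative assumptions. Raising a within-group weight to +1, as is done for similar pairs below, makes the walk more likely to stay in its group.

```python
import numpy as np

# Toy symmetric weights: nodes {0, 1, 2} and {3, 4} form two groups
# joined by one weak edge (2, 3).
W = np.array([
    [0.0, 0.9, 0.8, 0.0, 0.0],
    [0.9, 0.0, 0.7, 0.0, 0.0],
    [0.8, 0.7, 0.0, 0.3, 0.0],
    [0.0, 0.0, 0.3, 0.0, 0.9],
    [0.0, 0.0, 0.0, 0.9, 0.0],
])


def transition_matrix(W):
    """Row-normalize W: P[i, j] is the probability of jumping from i to j."""
    return W / W.sum(axis=1, keepdims=True)


def stay_probability(P, groups):
    """Average one-step probability of remaining inside the current group."""
    return np.mean([P[i, g].sum() for g in groups for i in g])


groups = [[0, 1, 2], [3, 4]]
P = transition_matrix(W)
print(np.allclose(P.sum(axis=1), 1.0))   # True

# Setting a within-group edge to +1 keeps the walk in its group longer.
W_mod = W.copy()
W_mod[1, 2] = W_mod[2, 1] = 1.0
print(stay_probability(transition_matrix(W_mod), groups) > stay_probability(P, groups))  # True
```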

2.3 Integrating Equivalence Constraints

Similar to dimensionality reduction methods that aim to preserve local structure, we assume that points in sufficiently small neighborhoods tend to have the same label. If constraints are chosen at random, then it is reasonable to expect that there will be equivalence constraints among non-neighboring samples. Similarity constraints of this kind are used to encourage mapping the involved points close to one another. This can be accomplished by setting the weight between non-neighboring points involved in a similarity constraint to a value larger than the original value of zero. Similarly, we can reset the edge weight between dissimilar points so that they will be pushed apart. Moreover, the constraint information may be propagated to the neighborhoods of the points involved in the constraints.

Figure 1: Propagating side information to the neighborhoods: (a) similarity information is propagated by setting the edges of mutual neighbors to +1; (b) dissimilarity information is propagated by removing the weakest edges.

VISAPP 2008 - International Conference on Computer Vision Theory and Applications

Given unlabeled data and equivalence constraints, our proposed method can be summarized as follows:

1. Constructing Adjacency Graph. We first construct a weighted graph with $n$ nodes (one for each point) and a set of edges connecting neighboring points. Given a distance metric, we use $k$-nearest neighbors to determine the neighboring points: nodes $i$ and $j$ are connected by an edge if $i$ is among the $k$ nearest neighbors of $j$, or vice versa. The neighborhood size $k$ is set to a small number, e.g., $k = 3$ or $k = 5$. Each edge is then weighted using the heat kernel (He and Niyogi, 2003) and a selected distance function, i.e., $W_{ij} = \exp(-d(x_i, x_j)^2 / t)$.

2. Integrating Similarity Information. If there is a similarity constraint between two points, say $x_i$ and $x_j$, an edge is created and its weight is set to +1 (the highest similarity value). To propagate the similarity information to the neighbors, we check whether $x_i$ and $x_j$ have common neighbors. If so, the weight between each common neighbor $x_k$ and $x_i$, as well as between $x_k$ and $x_j$, is set to +1, as illustrated in Fig. 1(a). This process strengthens the transition probability between similar points. If there are no common neighbors, the neighborhoods are left unchanged.

3. Integrating Dissimilarity Information. In the case of a dissimilarity constraint between two points, we check whether an edge exists between them, and additionally whether they share common neighbors. An edge, or common neighbors, between dissimilar points indicates that the involved points are relatively close, which should be avoided. If an edge between the dissimilar points exists, we set its weight to −1. In the case of common neighbors, we compare, for each common neighbor, its similarities to the two dissimilar points. If one of those edges has a significantly lower weight, it is removed, as illustrated in Fig. 1(b). Otherwise no action is taken.
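Steps 2 and 3 can be sketched as an update of the symmetric, zero-diagonal weight matrix from step 1. The Python sketch below rests on stated assumptions: the paper does not quantify "significantly lower", so we introduce a hypothetical `ratio` parameter and remove the weaker edge only when its weight is below `ratio` times the stronger one; constraints are processed sequentially on the partially updated graph; and the function name `apply_constraints` is ours. It also returns the dissimilar pairs that were neighbors or had common neighbors, i.e. the set $D'$ used in the objective that follows.

```python
import numpy as np


def apply_constraints(W, similar, dissimilar, ratio=0.5):
    """Return a modified copy of W (steps 2 and 3) and the list of pairs in D'.

    W: symmetric n x n weights with zero diagonal.
    similar, dissimilar: lists of index pairs (i, j).
    ratio: hypothetical threshold for "significantly lower" (not from the paper).
    """
    W = W.copy()

    def neighbors(i):
        # Graph neighbors of node i in the current, partially updated W.
        return set(np.flatnonzero(W[i] > 0).tolist())

    # Step 2: a similar pair gets weight +1, and so do its edges to common neighbors.
    for i, j in similar:
        common = neighbors(i) & neighbors(j)
        W[i, j] = W[j, i] = 1.0
        for k in common:
            W[i, k] = W[k, i] = 1.0
            W[j, k] = W[k, j] = 1.0

    # Step 3: an existing edge between dissimilar points gets weight -1; at each
    # common neighbor the clearly weaker of the two edges is removed.
    d_prime = []
    for i, j in dissimilar:
        common = neighbors(i) & neighbors(j)
        is_edge = W[i, j] > 0
        if is_edge or common:
            d_prime.append((i, j))
        if is_edge:
            W[i, j] = W[j, i] = -1.0
        for k in common:
            wi, wj = W[i, k], W[j, k]
            if wi < ratio * wj:
                W[i, k] = W[k, i] = 0.0
            elif wj < ratio * wi:
                W[j, k] = W[k, j] = 0.0
    return W, d_prime
```

With a dense matrix each neighbor query costs $O(n)$, which is adequate for a few hundred samples; a sparse adjacency structure would be the natural choice for larger graphs.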

The objective of the method described above can be written as

$$E'(a) = \frac{1}{2} \sum_{i,j} (y_i - y_j)^2 \, \widetilde{W}_{ij} + \sum_{(i,j) \in S} (y_i - y_j)^2 - \sum_{(i,j) \in D'} (y_i - y_j)^2, \qquad (5)$$

where $\widetilde{W}_{ij}$ represents the updated weights, and $D'$ denotes the set of dissimilar pairs whose points were originally neighbors or had common neighbors. Our objective function has three terms. The first term aims to preserve the modified local structure of the data. The second term pulls similar points closer together, whereas the last term pushes apart dissimilar points that are nearby in the graph. The final transformation matrix $A$ that minimizes this cost function typically includes the difference directions between the dissimilar points in $D'$, since the dissimilar points can be pushed apart along these directions. Including those difference directions is important since they participate in shaping the inter-class decision boundaries.


We can rewrite the cost function as

$$E'(a) = a^\top X (G_S + G_N + G_{D'} - W_S - W_N - W_{D'}) X^\top a = a^\top X (G' - W') X^\top a,$$

where $W_S$ is the sparse weight matrix corresponding to pairs in $S$ with edge weights +1, $W_{D'}$ is the sparse weight matrix of pairs in $D'$ with edge weights −1, and finally $W_N$ is the adapted weight matrix between original neighbors with edge weights $\widetilde{W}_{ij}$. The matrices $G_S$, $G_N$, and $G_{D'}$ are diagonal matrices containing the row sums of the corresponding $W$ matrices, and we write $G' = G_S + G_N + G_{D'}$ and $W' = W_S + W_N + W_{D'}$.
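This rewriting can be checked numerically. In the NumPy sketch below, the data, the neighbor weights `W_N`, and the pair lists `S` and `D_prime` are arbitrary toy values chosen for illustration. The three-term objective of Eq. (5) is evaluated directly and compared with $a^\top X (G' - W') X^\top a$. The check relies on each unordered pair in $S$ and $D'$ being stored symmetrically in $W_S$ and $W_{D'}$, so that the factor $\tfrac{1}{2}$ of the Laplacian form cancels the double counting; the identity holds even though $W'$ has negative entries.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n = 6, 8
X = rng.standard_normal((d, n))          # samples as columns
a = rng.standard_normal(d)
y = X.T @ a                              # one-dimensional projections

# Arbitrary symmetric, zero-diagonal weights standing in for the adapted W_N.
W_N = np.triu(rng.uniform(0, 1, (n, n)) * (rng.uniform(size=(n, n)) < 0.4), 1)
W_N = W_N + W_N.T
S = [(0, 1), (2, 5)]                     # similar pairs (unordered)
D_prime = [(3, 4), (1, 6)]               # nearby dissimilar pairs (unordered)


def pair_matrix(pairs, value, n):
    """Symmetric matrix with `value` at (i, j) and (j, i) for each listed pair."""
    M = np.zeros((n, n))
    for i, j in pairs:
        M[i, j] = M[j, i] = value
    return M


W_prime = W_N + pair_matrix(S, 1.0, n) + pair_matrix(D_prime, -1.0, n)
G_prime = np.diag(W_prime.sum(axis=1))   # G' = G_S + G_N + G_D'

# Eq. (5), evaluated term by term.
sq = (y[:, None] - y[None, :]) ** 2
E_direct = (0.5 * np.sum(sq * W_N)
            + sum(sq[i, j] for i, j in S)
            - sum(sq[i, j] for i, j in D_prime))

# Matrix form a^T X (G' - W') X^T a.
E_matrix = a @ X @ (G_prime - W_prime) @ X.T @ a
print(np.isclose(E_direct, E_matrix))    # True
```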

As in LPP, we introduce the constraint $a^\top X G' X^\top a = 1$ to fix the scale of $a$. The columns of the final transformation matrix $A$ are the eigenvectors with the smallest eigenvalues of the generalized eigenvalue problem

$$X (G' - W') X^\top a = \lambda X G' X^\top a. \qquad (6)$$

We call our method Constrained Locality Preserving Projections (CLPP), since it allows pairwise equivalence constraints to be used within the LPP method.
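A minimal sketch of the CLPP solve of Eq. (6) in Python, assuming the combined weight matrix $W'$ has already been assembled. Because $W'$ contains −1 entries, a row sum of $W'$ (a diagonal entry of $G'$) can be zero or negative, in which case $XG'X^\top$ need not be positive definite and `scipy.linalg.eigh` cannot be applied directly. The sketch therefore checks this condition and adds a small ridge term; both the check and the ridge are our own assumptions, not part of the paper. The function name `clpp` is likewise ours.

```python
import numpy as np
from scipy.linalg import eigh


def clpp(X, W_prime, dim, reg=1e-6):
    """Minimum-eigenvalue solutions of X (G' - W') X^T a = lambda X G' X^T a.

    X: d x n matrix with samples as columns; W_prime: symmetric n x n weights.
    Returns a d x dim projection matrix A and the corresponding eigenvalues.
    """
    g = W_prime.sum(axis=1)
    if np.any(g <= 0):
        raise ValueError("G' has non-positive entries; X G' X^T may be indefinite")
    G_prime = np.diag(g)
    lhs = X @ (G_prime - W_prime) @ X.T
    rhs = X @ G_prime @ X.T + reg * np.eye(X.shape[0])  # ridge: our assumption
    # Ascending eigenvalues; eigenvectors are normalized so that V^T rhs V = I.
    eigvals, eigvecs = eigh(lhs, rhs)
    return eigvecs[:, :dim], eigvals[:dim]
```

The normalization returned by `eigh` is the discrete counterpart of the scale constraint $a^\top X G' X^\top a = 1$. The embedding of the training data is `A.T @ X`, and new samples are projected the same way.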

2.4 Extension to Non-linear Projections

Our method can produce non-linear projections using the kernel trick. Suppose that the samples in the original input space $\mathbb{R}^d$ are mapped to a higher-dimensional feature space $\mathcal{F}$ using a nonlinear mapping function $\Phi: \mathbb{R}^d \to \mathcal{F}$. Let $\Phi(X) = [\Phi(x_1)\, \Phi(x_2) \dots \Phi(x_n)]$ denote the matrix whose columns are the mapped samples in $\mathcal{F}$. We then search for a linear projection in $\mathcal{F}$, which leads to the eigenvalue equation

$$\Phi(X) (G' - W') \Phi(X)^\top a = \lambda \, \Phi(X) G' \Phi(X)^\top a. \qquad (7)$$

Since the eigenvectors are linear combinations of the mapped samples, there exist coefficients $\alpha_i$ ($i = 1, \dots, n$) such that

$$a = \sum_{i=1}^{n} \alpha_i \Phi(x_i) = \Phi(X) \alpha. \qquad (8)$$

The dot products in the feature space $\mathcal{F}$ are computed through a Mercer kernel $k(\cdot, \cdot)$. Let $K = \Phi(X)^\top \Phi(X) = \big(k(x_i, x_j)\big)_{i,j}$ denote the kernel matrix of the data samples. Substituting Eq. (8) into Eq. (7) and multiplying on the left by $\Phi(X)^\top$, the eigenvector equation is converted to

$$K (G' - W') K \alpha = \lambda \, K G' K \alpha. \qquad (9)$$

Let $\alpha$ be one of the minimum-eigenvalue solutions of the above equation; then the data projections in $\mathcal{F}$ are computed as $y = K\alpha$, where the $i$-th element of $y$ is the one-dimensional representation of $x_i$. If, rather than using $y = K\alpha$, we allow for general data representations $y$, the solutions are given by

$$(G' - W') \, y = \lambda \, G' y, \qquad (10)$$

which may be interpreted as the Laplacian Eigenmap (Belkin and Niyogi, 2001) solution of the modified graph.
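A sketch of the kernel variant, Eq. (9), in Python. We assume a Gaussian (heat) kernel $k(x_i, x_j) = \exp(-\|x_i - x_j\|^2 / \sigma)$ with a hypothetical bandwidth `sigma`, and, as in the linear sketch, a small ridge on the right-hand side, since $KG'K$ is in general only positive semi-definite; both are our assumptions. The projections of the training samples are $y = K\alpha$; a new sample $x$ would be projected with the kernel row $[k(x, x_1), \dots, k(x, x_n)]\,\alpha$.

```python
import numpy as np
from scipy.linalg import eigh


def gaussian_kernel(X, Z, sigma=1.0):
    """k(x, z) = exp(-||x - z||^2 / sigma) for columns of X (d x n) and Z (d x m)."""
    sq = (np.sum(X**2, axis=0)[:, None] + np.sum(Z**2, axis=0)[None, :]
          - 2 * X.T @ Z)
    return np.exp(-np.maximum(sq, 0.0) / sigma)


def kernel_clpp(X, W_prime, dim, sigma=1.0, reg=1e-6):
    """Solve K (G' - W') K alpha = lambda K G' K alpha; return alpha and y = K alpha."""
    g = W_prime.sum(axis=1)
    if np.any(g <= 0):
        raise ValueError("G' has non-positive entries")
    G_prime = np.diag(g)
    K = gaussian_kernel(X, X, sigma)
    lhs = K @ (G_prime - W_prime) @ K
    rhs = K @ G_prime @ K + reg * np.eye(K.shape[0])  # ridge: our assumption
    eigvals, alphas = eigh(lhs, rhs)                   # ascending eigenvalues
    alpha = alphas[:, :dim]
    return alpha, K @ alpha                            # n x dim embedding of training data
```

The problem is $n \times n$ regardless of the input dimensionality, which is what makes the kernel form usable for low-dimensional inputs such as the 36-dimensional colour descriptors of Section 3.3.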

3 EXPERIMENTAL EVALUATION

3.1 Methodology and Data Sets

To assess the performance of our method, we performed experiments on two image databases, ETH-80 (Leibe and Schiele, 2003) and Birds (Lazebnik et al., 2005), to discover object groups. Several example images from the databases are shown in Fig. 2. We used only four categories from ETH-80: Apple, Car, Cow, and Cup. Each category contains images of 10 to 14 objects under different viewpoints, against a flat blue background. The Birds database contains six categories, each of which includes 100 images. It is a challenging database including images with large intra-class, scale, and viewpoint variability. Furthermore, birds appear against highly cluttered backgrounds.

We used a ‘bag of features’ image representation. In this approach, patches are sampled from the image at many different positions and scales, either densely, randomly, or based on the output of some kind of salient region detector. In our case we select patches on a dense grid. Each patch is then represented by a 128-dimensional SIFT descriptor (Lowe, 2004). Next, all descriptors extracted from the images are quantized into a discrete set of so-called ‘visual keywords’ forming a vocabulary. To build the image representation, each extracted descriptor is compared to the visual keywords and assigned to the closest one. Based on these assignments, we build histograms, which are used as image feature vectors. The histograms have 500 bins for the ETH data set and 2000 bins for the Birds data set. It has been shown that the Chi-square distance is well suited for measuring the similarity among histograms (Cevikalp et al., 2007). Therefore we use Chi-square distances in the heat kernel function when building the initial weight matrix $W$.
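The last steps of this pipeline, from descriptors to histogram weights, can be sketched in NumPy. The sketch assumes a vocabulary has already been learned (its construction is not described here) and uses random arrays in place of SIFT descriptors. It also uses the common definition $\chi^2(h, h') = \tfrac{1}{2} \sum_k (h_k - h'_k)^2 / (h_k + h'_k)$ with L1-normalized histograms; the paper does not state which Chi-square variant or normalization it uses, so both are assumptions.

```python
import numpy as np


def bof_histogram(descriptors, vocabulary):
    """Assign each descriptor (row) to its nearest visual word; return an L1-normalized histogram."""
    d2 = (np.sum(descriptors**2, axis=1)[:, None]
          + np.sum(vocabulary**2, axis=1)[None, :]
          - 2 * descriptors @ vocabulary.T)
    words = np.argmin(d2, axis=1)
    hist = np.bincount(words, minlength=len(vocabulary)).astype(float)
    return hist / hist.sum()


def chi_square(h1, h2, eps=1e-12):
    """0.5 * sum (h1 - h2)^2 / (h1 + h2); bins empty in both histograms contribute 0."""
    num = (h1 - h2) ** 2
    den = h1 + h2
    return 0.5 * np.sum(np.where(den > eps, num / np.maximum(den, eps), 0.0))


def heat_kernel_weight(h1, h2, t=1.0):
    """Edge weight W_ij = exp(-d(x_i, x_j)^2 / t) with the Chi-square distance as d."""
    return np.exp(-chi_square(h1, h2) ** 2 / t)
```

With this normalization the distance lies between 0 (identical histograms) and 1 (histograms with disjoint support), so the resulting weights lie in $[e^{-1/t}, 1]$ for connected pairs.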

To show the efficacy of using equivalence constraints for discovering the hidden groups within data, we apply k-means clustering in the embedded space and use the pairwise F-measure to evaluate the clustering results against the underlying classes. The pairwise F-measure is the harmonic mean of the pairwise precision and recall measures, which are widely used in information retrieval. We compute precision and recall over pairs of images, considering for each pair whether the two images are assigned to the same cluster by k-means and whether they contain the same object category. Let $A$ denote the set of image pairs assigned to the same k-means cluster, and let $B$ denote the set of image pairs that contain the same object category. With $|A|$ denoting the cardinality of $A$ (and similarly for other sets), the measures are defined as:

$$\text{Precision} = \frac{|A \cap B|}{|A|}, \qquad \text{Recall} = \frac{|A \cap B|}{|B|},$$

$$\text{F-measure} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}.$$

Figure 2: ETH-80 (top row) and Birds (second and third rows) datasets: 2 illustrative images per category.
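The pairwise measures can be computed directly from the two labelings by enumerating unordered pairs of images. The Python sketch below does exactly that; it is quadratic in the number of images, which is adequate for data sets of a few hundred samples. The function name is ours.

```python
from itertools import combinations


def pairwise_f_measure(cluster_ids, class_ids):
    """Pairwise precision, recall and F-measure over all unordered pairs."""
    pairs = list(combinations(range(len(cluster_ids)), 2))
    A = {p for p in pairs if cluster_ids[p[0]] == cluster_ids[p[1]]}  # same cluster
    B = {p for p in pairs if class_ids[p[0]] == class_ids[p[1]]}      # same class
    both = len(A & B)
    precision = both / len(A) if A else 0.0
    recall = both / len(B) if B else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)
```

For example, `pairwise_f_measure([0, 0, 0, 1, 1], [0, 0, 1, 1, 1])` returns `(0.5, 0.5, 0.5)`: 4 pairs share a cluster, 4 pairs share a class, and 2 pairs do both.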

Performance evaluations were obtained using cross-validation: 5-fold for the ETH data set and 4-fold for the Birds data set. The clustering algorithm was run on the whole data set, but the F-measure was computed separately for the whole data set and for the held-out test set.

To demonstrate the effect of using different numbers of equivalence constraints, we start without constraints and gradually increase the number of similar and dissimilar pairs. In all experiments, constraints are selected uniformly at random from all possible constraints induced by the true labels of the training data.
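Sampling constraints in this way can be sketched as follows, assuming the training labels are available as a list. Distinct pairs are drawn uniformly from all unordered pairs of training indices and labeled similar or dissimilar by comparing their classes. The paper reports the total number of constraints but not how it is split between similar and dissimilar pairs; in this sketch the split simply follows from the drawn pairs. The fixed seed and the function name are illustrative choices.

```python
import random
from itertools import combinations


def sample_constraints(train_labels, num_constraints, seed=0):
    """Draw distinct pairs uniformly at random and split them by label agreement."""
    rng = random.Random(seed)
    all_pairs = list(combinations(range(len(train_labels)), 2))
    chosen = rng.sample(all_pairs, num_constraints)
    similar = [(i, j) for i, j in chosen if train_labels[i] == train_labels[j]]
    dissimilar = [(i, j) for i, j in chosen if train_labels[i] != train_labels[j]]
    return similar, dissimilar
```

Since most pairs in a multi-class data set come from different classes, uniform sampling yields mostly dissimilar constraints; the augmentation by transitivity and entailment described in Section 2.1 can then be applied to the result.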

As mentioned in the introduction, full-rank distance metric learning techniques cannot be applied in these high-dimensional spaces. To compare our method, CLPP, with other distance metric learning techniques, we therefore first applied dimensionality reduction methods, Principal Component Analysis (PCA) and LPP, to the high-dimensional data and then learned a distance metric in the reduced space. To learn the distance metric, we applied the methods proposed in (Tsang and Kwok, 2003) and (Xing et al., 2003). The former yields better results; therefore we only report results for this method.

3.2 Experimental Results

F-measure scores are shown in Fig. 3. As can be seen

in the results, adding constraints improves the cluster-

ing performance. For the ETH data set, our proposed

method and the LPP followed by full rank metric

learning technique yield similar results. On the other

hand, the proposed method significantly outperforms

the full rank metric learning approach for the Birds

dataset. It is because most of the discriminatory infor-

mation is lost during the unsupervised dimensionality

reduction stage. Therefore the metric learning stage

improves the clustering performance up to some de-

gree in the reduced space and then saturates even if

additional constraints are used. On the contrary, uti-

lizing constraints in the proposed dimensionality re-

duction scheme achieves better results, and adding

new constraints continues to improve the clustering

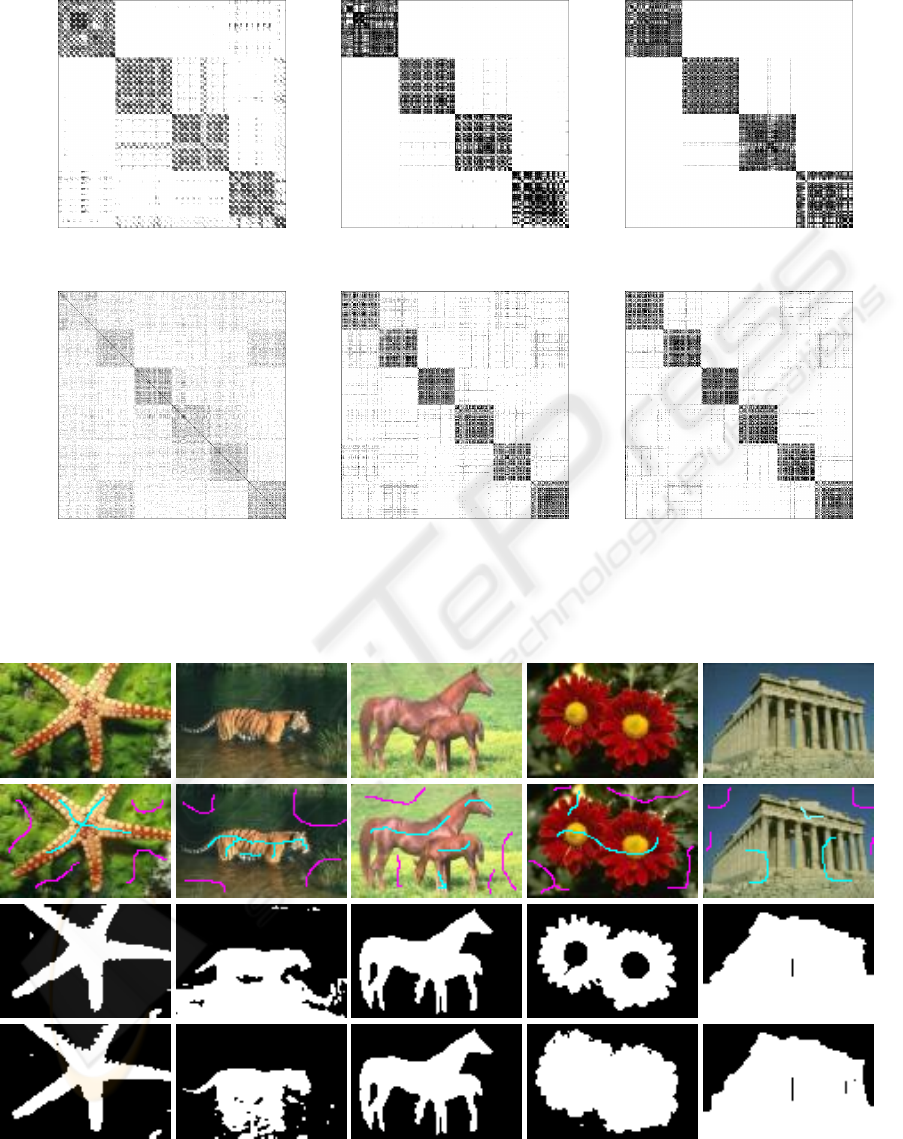

performance. In Fig. 5, we plot the affinity matrices

in the original sample space and the embedded space.

As can be seen in the figure, adding constraints in-

creases the class separability, which explains the in-

crease in clustering performance.

We also conducted experiments to show how the proposed method improves the distance metric and the classification performance in the projected space. To this end, we randomly selected 10000 sample pairs from the Birds data set that were not used as similar or dissimilar constraints. We then converted the problem into a binary classification problem, treating pairs coming from the same class as positive samples and pairs coming from different classes as negative samples. We computed the Euclidean distances between the paired samples in the projected CLPP space and, based on these distances, created Receiver Operating Characteristic (ROC) curves. This procedure was also repeated in the original input space using the Chi-square distances. The curves are plotted in Fig. 4 for different numbers of constraints, given in square brackets. The ROC curves show that as the number of constraints increases, the accuracy of the classification procedure improves, indicating that our embedding procedure improves the original distance metric.

Figure 3: F-measure as a function of the number of constraints for (a) overall ETH data, (b) overall Birds data, (c) ETH held-out test data, and (d) Birds held-out test data. Each panel compares CLPP, LPP+MetricLearning, and PCA+MetricLearning; the number of constraints ranges from 0 to 450 for ETH and from 0 to 800 for Birds.

Figure 4: ROC curves (true positive rate versus false positive rate) for the Birds database, in the input space and for CLPP with [200], [400], [600], and [800] constraints.
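An ROC curve of this kind can be computed from the pair distances alone: a pair is predicted to be a same-class pair when its distance falls below a threshold, and sweeping the threshold from small to large traces the curve. The NumPy sketch below assumes distinct distance values (ties are not grouped) and is illustrated on toy distances, not on the paper's data; the function names are ours.

```python
import numpy as np


def roc_from_distances(distances, same_class):
    """ROC points (FPR, TPR) when small distances are predicted as positive pairs."""
    distances = np.asarray(distances, dtype=float)
    same_class = np.asarray(same_class, dtype=bool)
    order = np.argsort(distances)            # accept pairs from closest to farthest
    hits = same_class[order]
    tpr = np.concatenate([[0.0], np.cumsum(hits) / hits.sum()])
    fpr = np.concatenate([[0.0], np.cumsum(~hits) / (~hits).sum()])
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
```

If every same-class pair is closer than every different-class pair, the curve reaches a true positive rate of 1 at a false positive rate of 0 and the area is 1.0; a better embedding pushes the curve toward that corner, which is how Fig. 4 is read.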

3.3 Image Segmentation Applications

The proposed CLPP method can also be applied to cluster low-dimensional data samples by using the kernel trick. To test the efficacy of the kernel method, we applied it to an image segmentation task. We chose five images from the Berkeley Segmentation dataset¹. Centered at every pixel in each image, we extracted a 20×20 pixel image patch, for which we computed the robust hue descriptor of (van de Weijer and Schmid, 2006). This process yields a 36-dimensional feature vector, which is a histogram over the hue values observed in the patch, where each observed hue value is weighted by its saturation. The heat kernel function with the Euclidean distance is used as the kernel. We set the number of clusters to two: one cluster for the background and another for the object of interest.
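A saturation-weighted hue histogram of this kind can be sketched as below. This is a simplified stand-in, not the exact robust hue descriptor of (van de Weijer and Schmid, 2006): we assume hue and saturation are derived from the opponent colour components $O_1 = (R - G)/\sqrt{2}$ and $O_2 = (R + G - 2B)/\sqrt{6}$, and we omit any further refinements of the published descriptor. The function name and the normalization are our own choices.

```python
import numpy as np


def hue_histogram(patch, bins=36):
    """Saturation-weighted hue histogram of an RGB patch (H x W x 3, floats)."""
    R, G, B = patch[..., 0], patch[..., 1], patch[..., 2]
    o1 = (R - G) / np.sqrt(2.0)                 # opponent colour components
    o2 = (R + G - 2.0 * B) / np.sqrt(6.0)
    hue = np.arctan2(o1, o2)                    # angle in [-pi, pi]
    saturation = np.hypot(o1, o2)               # chromatic strength, used as weight
    hist, _ = np.histogram(hue, bins=bins, range=(-np.pi, np.pi), weights=saturation)
    total = hist.sum()
    return hist / total if total > 0 else hist  # grey patches give an all-zero vector
```

Weighting by saturation means that nearly grey pixels, whose hue is unstable, contribute little to the histogram. Applying this function to the patch around every pixel yields the 36-dimensional feature vectors that are then clustered with the kernel method.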

The pairwise equivalence constraints are chosen from the samples corresponding to the pixels shown in magenta and cyan in the second row of Fig. 6. We first segmented the original images (top row) without using constraints (results in the third row), and then we used the constraints for segmentation (results in the bottom row). As can be seen in the figure, simple user-added (dis)similarity constraints can significantly improve the segmentations. Consider, for instance, the flower image, which has three well-separated color components: the green background, the red leaves, and the yellow flower center. There are thus three reasonable segmentations (each separating one of the components from the other two), and it is not clear a priori which one is desired by a user. However, once a small set of (dis)similarity constraints is added, the segmentation desired by the user is easily identified.

¹ Available at http://www.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/segbench/

Figure 5: Visualization of affinity matrices obtained from the ETH dataset (first row: input space, 200 constraints, 400 constraints) and the Birds dataset (second row: input space, 300 constraints, 700 constraints).

Figure 6: Original images (top row), pixels used for equivalence constraints (second row), segmentation results without constraints (third row), and segmentation results using constraints (bottom row). Figure is best viewed in color.

4 CONCLUSIONS

In this paper we developed a semi-supervised dimensionality reduction method which uses pairwise equivalence constraints to discover the groups in high-dimensional data. To this end, we modified the LPP scheme such that its objective function takes the equivalence constraints into account. Like LPP, our algorithm first finds neighboring points to create a weighted neighborhood graph. Then, the constraints are used to modify the neighborhood relations and the weight matrix to reflect this weak form of supervision. The optimal projection matrix according to our cost function is then identified by solving for the smallest-eigenvalue solutions of an n × n eigenvalue problem, where n is the number of data points. Experimental results show that our semi-supervised dimensionality reduction method increases the performance of subsequent clustering and classification algorithms. Moreover, it yields better results than methods applying unsupervised dimensionality reduction followed by full-rank metric learning.

In some applications, small subsets of data points with the same class label, so-called ‘chunklets’, occur naturally, e.g., for face recognition in video. In future work, we will explore distance metrics between chunklets, as well as between chunklets and points, rather than between individual data points. Since these metrics operate on richer data structures, we expect them to significantly improve clustering and classification results.

REFERENCES

Basu, S., Banerjee, A., and Mooney, R. J. (2004). Active semi-supervision for pairwise constrained clustering. In the SIAM International Conference on Data Mining.

Belkin, M. and Niyogi, P. (2001). Laplacian eigenmaps and spectral techniques for embedding and clustering. In Advances in Neural Information Processing Systems.

Bilenko, M., Basu, S., and Mooney, R. J. (2004). Integrating constraints and metric learning in semi-supervised clustering. In the 21st International Conference on Machine Learning.

Cevikalp, H., Larlus, D., Neamtu, M., Triggs, B., and Jurie, F. (2007). Manifold based local classifiers: Linear and nonlinear approaches. In Pattern Recognition, in review.

He, X. and Niyogi, P. (2003). Locality preserving projections. In Advances in Neural Information Processing Systems.

Hertz, T., Shental, N., Bar-Hillel, A., and Weinshall, D. (2003). Enhancing image and video retrieval: Learning via equivalence constraints. In the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’03).

Lazebnik, S., Schmid, C., and Ponce, J. (2005). A maximum entropy framework for part-based texture and object recognition. In International Conference on Computer Vision (ICCV).

Leibe, B. and Schiele, B. (2003). Interleaved object categorization and segmentation. In British Machine Vision Conference (BMVC).

Lowe, D. (2004). Distinctive image features from scale-invariant keypoints. In International Journal of Computer Vision, volume 60, pages 91–110.

Melia, M. and Shi, J. (2001). A random walks view of spectral segmentation. In the 8th International Workshop on Artificial Intelligence and Statistics.

Shental, N., Bar-Hillel, A., Hertz, T., and Weinshall, D. (2003). Computing Gaussian mixture models with EM using equivalence constraints. In Advances in Neural Information Processing Systems (NIPS).

Tsang, I. W. and Kwok, J. T. (2003). Distance metric learning with kernels. In the International Conference on Artificial Neural Networks.

van de Weijer, J. and Schmid, C. (2006). Coloring local feature extraction. In European Conference on Computer Vision (ECCV).

Wagstaff, K. and Rogers, S. (2001). Constrained k-means clustering with background knowledge. In the 18th International Conference on Machine Learning.

Xing, E. P., Ng, A. Y., Jordan, M. I., and Russell, S. (2003). Distance metric learning with application to clustering with side-information. In Advances in Neural Information Processing Systems.

Yan, B. and Domeniconi, C. (2006). Subspace metric ensembles for semi-supervised clustering of high dimensional data. In the 17th European Conference on Machine Learning.
