AN INTEGRATED GLOBAL AND FUZZY REGIONAL APPROACH TO CONTENT-BASED IMAGE RETRIEVAL

Xiaojun Qi, Yutao Han

Computer Science Department, Utah State University, Logan, UT 84322-4205

Keywords: Content-based image retrieval, image segmentation, similarity measure, fuzzified region features, fuzzy region matching

Abstract: This paper proposes an effective and efficient approach to content-based image retrieval by

integrating

global visual features and fuzzy region-based color and texture features. The Cauchy function is utilized to

fuzzify each independent regional color and texture feature for addressing the issues associated with the

color/texture inaccuracies and segmentation uncertainties. The overall similarity measure is computed as a

weighted combination between global and regional similarity measures incorporating all features. Our

proposed approach demonstrates a promising performance on an image database of 1000 general-purpose

images from COREL, as compared with some variants of the proposed method and some peer systems in the literature.

1 INTRODUCTION

Content-Based Image Retrieval (CBIR) has become

an active research area since the early 1990s. Most

CBIR techniques automatically extract low-level

features (e.g., color, texture, and shapes) to measure

the similarities among images by comparing the

feature differences.

Non-spatial color methods (e.g., color histogram, color moments, and color sets (Long et al., 2002)) and spatial color methods (e.g., color coherence vector, color correlogram (Long et al., 2002), spatial color histogram (Rao et al., 1999), and spatial chromatic histogram (Cinque et al., 2001)) are commonly used in image retrieval. The spatial color methods outperform the non-spatial ones at the expense of higher computational cost. Statistics-based texture features, including Tamura features,

Wold features, Gabor filter features, and wavelet

features (Long et al., 2002), are other important

visual features used in image retrieval. Many current systems (Shih et al., 2001; Liang and Kuo, 1999) combine some low-level features to get better

retrieval results. Since these features are extracted

from the whole image and do not have explicit

semantic meanings, segmentation-based image

retrieval has gained more attention.

In segmentation-based image retrieval, each image is first segmented into homogeneous regions, features are extracted for each region, and similarities are calculated based on these region-based features. A few related works are reviewed

below. In (Deng et al., 2001), dominant colors for

each segmented image are obtained and a dominant-

color-based similarity score is computed to measure

the difference between two regions. Suematsu et al. (1999) propose a region-based method which performs image segmentation and retrieval by using the texture features computed from wavelet coefficients. Carson et al. (1997) use expectation-maximization on color and texture features to segment the image into coherent regions, and the region-based color, texture, and spatial features are further utilized for retrieval. Ardizzoni et al. (1999) use color and texture features captured from wavelet coefficients for both segmentation and retrieval. Li et al. (2001) and Chen and Wang (2002) use color features and texture features for each 4×4 block to segment the image. The region-based color, texture, and shape features are utilized for retrieval. In (Li et al., 2001), an Integrated Region Matching (IRM)

scheme is proposed to decrease the impact of

inaccurate region segmentation. In (Chen and

Wang, 2002), a Unified Feature Matching (UFM)

scheme is proposed, where region-based multiple

fuzzy feature representations and fuzzy similarity

measures are used to improve the retrieval accuracy.

In this paper, we propose an efficient CBIR system by combining fuzzy region-based color and texture features and global features. The overall similarity score is calculated by assigning different weights to the fuzzy local features and global features for accurate retrieval. The remainder of the paper is organized as follows. Section 2 describes the general framework of our proposed system. Section 3 illustrates the experimental results. Section 4 draws conclusions.

Qi X. and Han Y. (2004). AN INTEGRATED GLOBAL AND FUZZY REGIONAL APPROACH TO CONTENT-BASED IMAGE RETRIEVAL. In Proceedings of the First International Conference on E-Business and Telecommunication Networks, pages 339-344. DOI: 10.5220/0001390003390344. Copyright © SciTePress

2 PROPOSED APPROACH

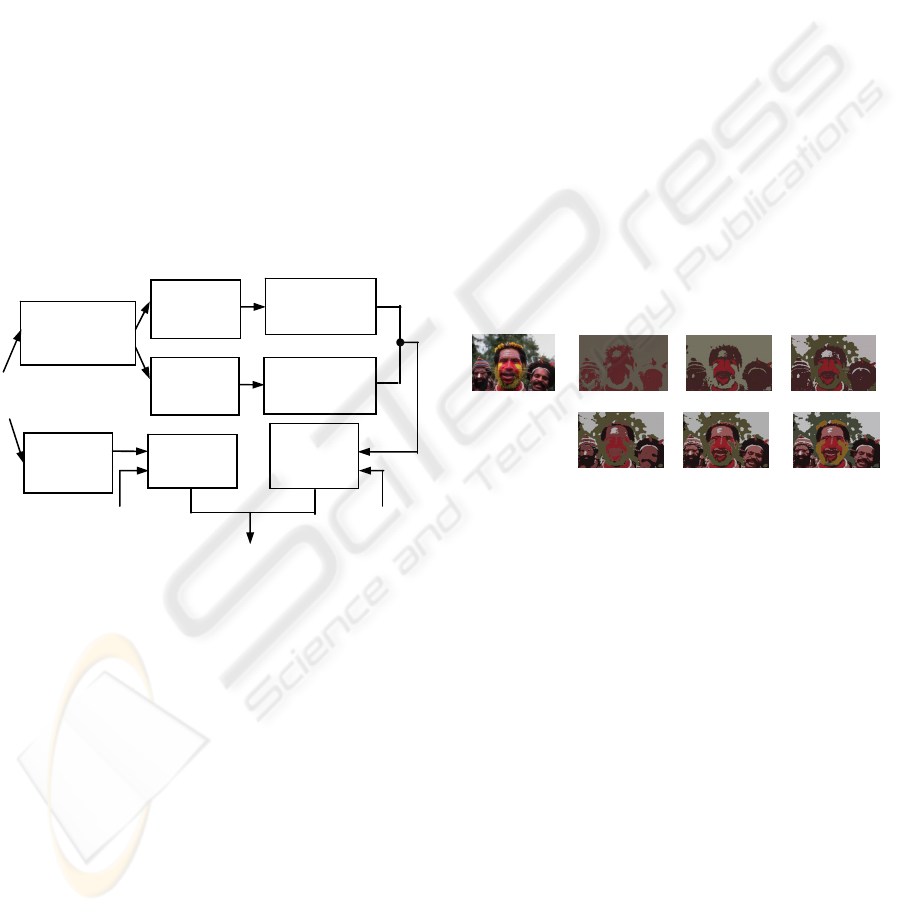

The block diagram of our proposed CBIR approach

is shown in Fig. 1. The first step of our algorithm is

to calculate the global visual features. It then

segments an image into coherent regions based on

the color features. Image indexing and retrieval are

finally performed based on global features and weighted

independent fuzzy color and texture features

incorporating the segmented region area and region

position relative to the image boundary.

Figure 1: Block diagram of our CBIR system.

2.1 Global Feature Extraction

The global features include color-texture features,

color moments and color histogram. The global

color-texture feature is derived from a chromatic

representation computed from a family of reduced

dimensionality color spaces (Vertan and Boujemaa,

2000). Since the overall color-texture features are

limited by the initial luminance normalization, we

add RGB moments (i.e., mean and variance) and

normalized 32-bin RGB histogram to compensate

for the lack of such luminance information.
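The RGB moments and histogram described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the image is assumed to be an H x W x 3 array with values in 0..255, and "32-bin RGB histogram" is interpreted here as 32 bins per channel, which the text does not confirm. The color-texture feature of Vertan and Boujemaa is not reproduced.

```python
import numpy as np

def rgb_moments(image):
    """Per-channel mean and variance of an H x W x 3 RGB array (6 values)."""
    pixels = image.reshape(-1, 3).astype(np.float64)
    return np.concatenate([pixels.mean(axis=0), pixels.var(axis=0)])

def rgb_histogram(image, bins=32):
    """Normalized histogram per channel (assumption: 32 bins for each of R, G, B)."""
    pixels = image.reshape(-1, 3)
    parts = []
    for channel in range(3):
        counts, _ = np.histogram(pixels[:, channel], bins=bins, range=(0, 256))
        parts.append(counts / counts.sum())   # each channel's histogram sums to 1
    return np.concatenate(parts)

def global_color_features(image):
    """Concatenate the moments and the histogram into one global color vector."""
    return np.concatenate([rgb_moments(image), rgb_histogram(image)])
```

Under these assumptions the color part of the global vector has 6 + 96 = 102 entries; a joint 32-bin quantization of RGB would give a shorter vector.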

2.2 Color-Based Image Segmentation

In the proposed approach, we exclusively use color

features for efficient image segmentation. To

segment an image into coherent regions, the image

is first divided into 2×2 non-overlapping blocks and

a color feature vector (i.e., the mean color of the

block) is extracted for each block. The Luv color

space is used because the perceptual color difference

of the human visual system is proportional to the

numerical difference in this space.

After obtaining the color features for all blocks,

an unsupervised K-Means algorithm (Hartigan and Wong, 1979) is used to cluster these color features. This

segmentation process adaptively increases the

number of regions C (initially set as 2) until a

termination criterion is satisfied (i.e., the average

distance between all pairs of cluster centers is less

than a predetermined threshold value). This

predetermined threshold is empirically chosen so a

reasonable segmentation can be achieved. Fig. 2

shows the intermediate segmentation results of one

sample image from our test database by adaptively

and gradually increasing the number of regions C.
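The adaptive segmentation loop can be sketched as below. Several points are assumptions rather than details from the paper: the input is taken to be already converted to Luv (the conversion is omitted), plain Lloyd iterations stand in for the Hartigan-Wong K-Means variant, the threshold value is left to the caller because the paper only says it is chosen empirically, and `max_regions` is a hypothetical safety cap.

```python
import numpy as np

def block_means(image, block=2):
    """Mean color of each non-overlapping block x block tile (N x channels)."""
    h, w, c = image.shape
    h, w = h - h % block, w - w % block            # drop incomplete border tiles
    tiles = image[:h, :w].reshape(h // block, block, w // block, block, c)
    return tiles.mean(axis=(1, 3)).reshape(-1, c)

def assign(points, centers):
    """Index of the nearest center for every point."""
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(points, k, iters=50, seed=0):
    """Plain Lloyd iterations (assumption: stand-in for Hartigan-Wong)."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(iters):
        labels = assign(points, centers)
        for j in range(k):
            members = points[labels == j]
            if len(members):                       # an empty cluster keeps its center
                centers[j] = members.mean(axis=0)
    return centers, assign(points, centers)

def mean_center_distance(centers):
    """Average Euclidean distance over all pairs of cluster centers."""
    i, k = np.triu_indices(len(centers), k=1)
    return np.linalg.norm(centers[i] - centers[k], axis=1).mean()

def adaptive_segment(features, threshold, max_regions=8):
    """Grow C from 2 until the mean center distance falls below the threshold."""
    for c in range(2, min(max_regions, len(features)) + 1):
        centers, labels = kmeans(features, c)
        if mean_center_distance(centers) < threshold:
            break
    return centers, labels
```

The sketch expects at least two blocks. The returned labels index blocks, so reshaping them to the block grid gives the region map.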

[Figure 2 panels: Original Image; 2 Regions; 3 Regions; 4 Regions; 5 Regions; 6 Regions; 7 Regions]

Figure 2: Segmentation results by the unsupervised K-Means clustering algorithm.

2.3 Fuzzy Feature Representation and Fuzzy Region Matching

2.3.1 Fuzzy Feature and Region Matching

Based on the segmentation results, the representative color feature $\vec{f}_j^{\,c}$ for each region j is calculated by the mean of color features of all the blocks in region j. The representative texture feature $\vec{f}_j^{\,t}$ for each region j is computed by the average energy in each high frequency band of the level one wavelet

decomposition. The wavelet transformation is

applied to a “texture template” image obtained by

keeping all the pixels in region j intact and setting

all the pixels outside region j as white. The

computational cost of deriving this representative texture feature is minimal compared to most methods (Li et al., 2001; Chen and Wang, 2002), which average the texture features of all the blocks in the region. Furthermore, this representative texture feature captures more accurate regional edge distribution since averaging the block-based texture features will generate a small value due to the block homogeneity.

[Figure 1 block labels: Query Image; Global Feature Extraction; Color-Based Segmentation; Color Feature Extraction; Color Feature Fuzzification; Texture Feature Extraction; Texture Feature Fuzzification; Stored Global Features of Candidate Images; Stored Local Features of Candidate Images; Global Matching; Fuzzy Region Matching; Overall Similarity]

ICETE 2004 - WIRELESS COMMUNICATION SYSTEMS AND NETWORKS
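The representative texture feature described above can be sketched as follows. The paper does not name the wavelet, so a Haar wavelet is assumed here; the grayscale input, the white value of 255, and the use of the mean squared coefficient as "average energy" are likewise assumptions, and the function names are hypothetical.

```python
import numpy as np

def haar_level1_details(gray):
    """One-level 2-D Haar transform; returns the three high-frequency bands."""
    rows, cols = gray.shape[0] // 2 * 2, gray.shape[1] // 2 * 2
    g = gray[:rows, :cols].astype(np.float64)
    a, b = g[0::2, 0::2], g[0::2, 1::2]      # top-left, top-right of each 2x2 tile
    c, d = g[1::2, 0::2], g[1::2, 1::2]      # bottom-left, bottom-right
    row_detail = (a + b - c - d) / 2.0       # change between rows
    col_detail = (a - b + c - d) / 2.0       # change between columns
    diag_detail = (a - b - c + d) / 2.0      # diagonal change
    return row_detail, col_detail, diag_detail

def region_texture_feature(gray, mask, white=255.0):
    """Build the texture template (region kept, rest white) and return band energies."""
    template = np.where(mask, gray, white)
    return np.array([np.mean(band ** 2) for band in haar_level1_details(template)])
```

Because the template is transformed once per region, the cost is one wavelet pass per region rather than one per block, which matches the efficiency argument in the text.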

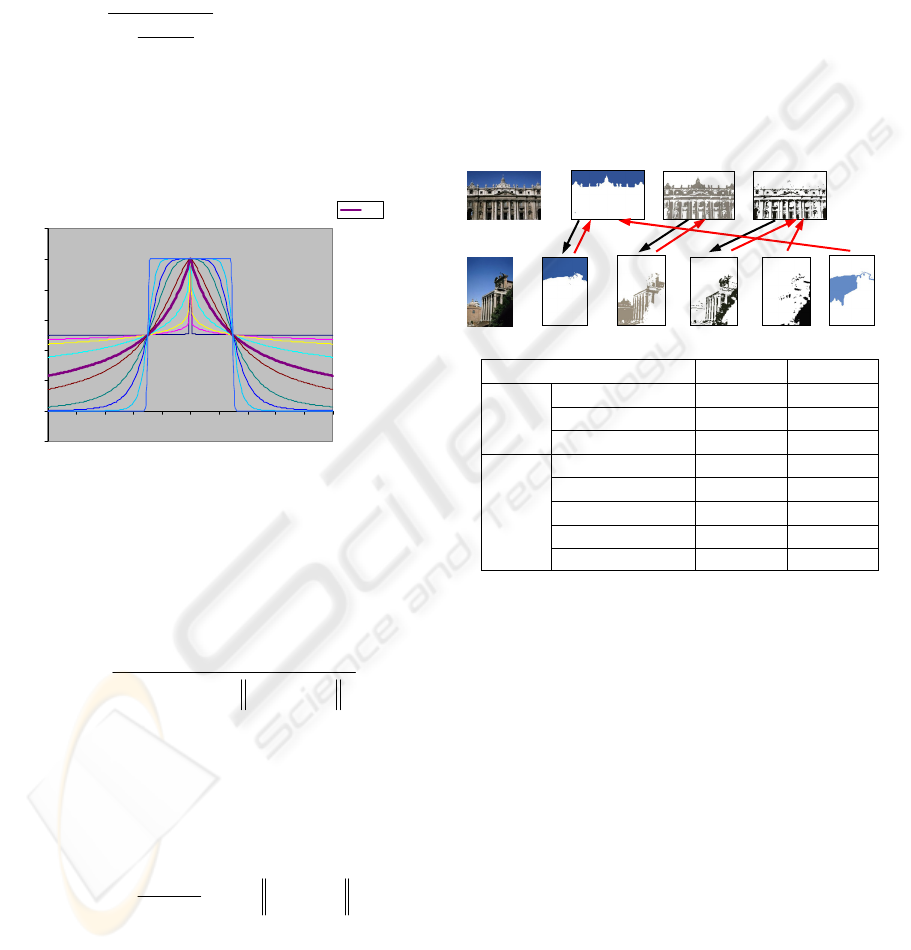

To fuzzify each feature, the Cauchy function (Hoppner et al., 1999), defined as:

$$C(\vec{x}) = \frac{1}{1 + \left( \frac{\|\vec{x} - \vec{f}\|}{d} \right)^{\partial}} \qquad (1)$$

is utilized, where d represents the width of the function, $\vec{f}$ represents the center location of the fuzzy set, and $\partial$ represents the shape (or smoothness) of the function. One example of the Cauchy function is illustrated in Fig. 3 with $\partial$ being 0.01, 0.1, 0.2, 0.5, 1, 1.5, 3, 5, 10, and 100.

[Figure 3 plot: Cauchy Function (d = 30, f = 0); x-axis: x, from -100 to 100; y-axis: Membership; curve label: ∂ = 1]

Figure 3: One example of the Cauchy function with fixed d and f.
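A small sketch of the Cauchy membership function may help when reading Fig. 3. The function and parameter names are illustrative only; `center`, `width`, and `shape` correspond to the center location, the width d, and the shape parameter of the text.

```python
import numpy as np

def cauchy_membership(x, center, width, shape=1.0):
    """Membership of x in a Cauchy fuzzy set centered at `center`."""
    dist = np.linalg.norm(np.atleast_1d(x) - np.atleast_1d(center))
    return 1.0 / (1.0 + (dist / width) ** shape)
```

The membership is 1 at the center and exactly 0.5 at a distance of one width, whatever the shape value; the shape parameter only controls how sharply the curve moves between those two levels and beyond.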

Because of the property of the Cauchy function, the similarity between the fuzzified color or texture features for any region u and v in two images A and B can be computed:

$$S^{c|t}(u, v) = \frac{\left( d_A^{c|t} + d_B^{c|t} \right)^{\partial}}{\left( d_A^{c|t} + d_B^{c|t} \right)^{\partial} + \left\| \vec{f}_u^{\,c|t} - \vec{f}_v^{\,c|t} \right\|^{\partial}} \qquad (2)$$

where: $\partial$ represents the shape of the Cauchy function and is set to be 1; $\vec{f}_u^{\,c|t}$ and $\vec{f}_v^{\,c|t}$ are the representative color or texture features of regions u and v in images A and B; $d_A^{c|t}$ and $d_B^{c|t}$ are calculated as:

$$d^{c|t} = \frac{2}{C(C-1)} \sum_{i=1}^{C-1} \sum_{k=i+1}^{C} \left\| \vec{f}_i^{\,c|t} - \vec{f}_k^{\,c|t} \right\| \qquad (3)$$
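The two formulas above translate into a few lines of code. The sketch below is illustrative: the function names are hypothetical, and it assumes each image has at least two regions so that the pairwise average in (3) is defined.

```python
import numpy as np

def average_pairwise_distance(features):
    """Eq. (3): mean distance over all pairs of the C region features of one image."""
    feats = np.asarray(features, dtype=np.float64)
    i, k = np.triu_indices(len(feats), k=1)        # every pair with i < k
    return np.linalg.norm(feats[i] - feats[k], axis=1).mean()

def fuzzy_similarity(f_u, f_v, d_a, d_b, shape=1.0):
    """Eq. (2): similarity between two Cauchy-fuzzified region features."""
    width = (d_a + d_b) ** shape
    gap = np.linalg.norm(np.asarray(f_u, float) - np.asarray(f_v, float)) ** shape
    return width / (width + gap)
```

Averaging over the C(C-1)/2 pairs is the same as the 2/(C(C-1)) factor in (3). The similarity needs only one distance and the two precomputed widths per region pair, which is the computational saving the paper attributes to the Cauchy function.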

2.3.2 Fuzzy Region Matching

A fuzzy region matching scheme is further used in our approach since a region in one image could correspond to several regions in another image due to the imperfect segmentation. That is, a region u in image A will be compared with every region v in image B by computing the overall region similarity:

$$S(u, v) = \lambda_1 S^{c}(u, v) + (1 - \lambda_1) S^{t}(u, v) \qquad (4)$$

where $S^{c}(u, v)$ and $S^{t}(u, v)$ are calculated by using (2) and $\lambda_1$ determines the contribution of color features in measuring the similarity and is set to be 0.9. The region in image B, which yields the largest overall region similarity in (4), is considered to be the best matched region for region u in image A. Its color-based similarity $S^{c}$ and texture-based similarity $S^{t}$ are saved in vectors $L^{c}$ and $L^{t}$ for calculating the image similarity. This fuzzy region matching scheme is illustrated in Fig. 4.

[Figure 4 panels: Image A with regions A(1), A(2), A(3); Image B with regions B(1), B(2), B(3), B(4), B(5)]

Direction / Matched Region Pair / L^c / L^t

A→B / A(1)→B(1) / 0.97414 / 0.94468

A→B / A(2)→B(2) / 0.94438 / 0.92848

A→B / A(3)→B(3) / 0.89681 / 0.87261

B→A / B(1)→A(1) / 0.97414 / 0.94468

B→A / B(2)→A(2) / 0.94438 / 0.92848

B→A / B(3)→A(3) / 0.89681 / 0.87261

B→A / B(4)→A(3) / 0.81972 / 0.7239

B→A / B(5)→A(1) / 0.83261 / 0.96419
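The best-match step of (4) can be sketched as below. The function name and the matrix layout are assumptions made for illustration: entry [u, v] of each input holds the color or texture similarity between region u of one image and region v of the other.

```python
import numpy as np

def match_regions(sim_color, sim_texture, lam1=0.9):
    """Pick, for every region u, the region v with the largest overall similarity."""
    sim_color = np.asarray(sim_color, dtype=np.float64)
    sim_texture = np.asarray(sim_texture, dtype=np.float64)
    overall = lam1 * sim_color + (1.0 - lam1) * sim_texture   # Eq. (4)
    best = overall.argmax(axis=1)                             # best v for each u
    rows = np.arange(len(best))
    # The color and texture similarities of the matched pairs form L^c and L^t.
    return best, sim_color[rows, best], sim_texture[rows, best]
```

Calling the function a second time with both matrices transposed gives the matches in the opposite direction, which is how the B to A rows of the matched-pair listing would be obtained under this reading.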

Figur n m he

Global features are involved in the calculation of the

e

s used in

cal

l 22

e 4: Fuzzy regio atching sc me.

2.4 Similarity Measure

global similarity

g

S

. The simple Euclidean distance

is used to measur the global similarity.

A weighted similarity scheme i

culating the region-based similarity score

tc

l

S

|

:

tcT

tc

LwwS

|

|

))1((

r

pa

r

λλ

+−=

(5)

where

aw

v

contains the normalized area percentages,

pw

v

con ns the normalized weights for the region

tions, and

2

tai

posi

λ

adjusts the significance of aw

v

and

pw

v

and is se as 0.1. The overall region-based

ilarity score

l

S is calculated as the weighted

sum of

c

l

S

(colo based similarity score) and

t

l

S

(texture-b ed similarity score):

t

l

c

ll

SSS )1(

11

λλ

−+=

t

sim

r-

as

(6)

(1)

AN INTEGRATED GLOBAL AND FUZZY REGIONAL APPROACH TO CONTENT-BASED IMAGE RETRIEVAL

341

where

1

λ

is the same as in (4).

is computed as: The overall image similarity

lg

SSS

33

)1(

λ

λ

+−=

(7)

where

3

λ

adjusts the significance of the regional

lob

3 EXPERIMENTAL RESULTS

on a

and g al similarity measure in the overall

similarity and is set to be 0.8.

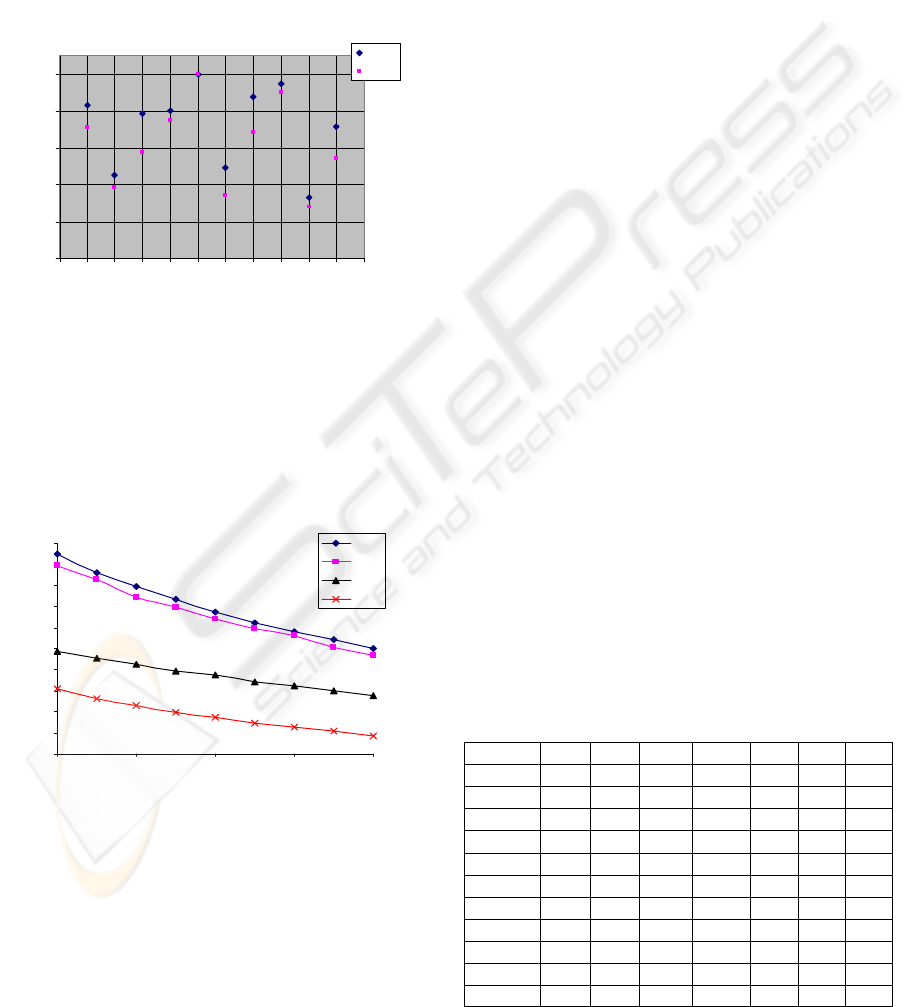

To date, we have tested our CBIR algorithm

general-purpose image database with 1000 images

from COREL. These images have 10 categories

with 100 images in each category. The categories

contain different semantics. To evaluate the

retrieval effectiveness of our algorithm, we

randomly select three query images with different

semantics (i.e. Africa, Beach, and Building). The

top 11 returned results and the similarity scores are

shown in Fig. 5. A retrieved image is considered as

a correct match if and only if it is in the same

category as the query image.

To perform a more quantitative evaluation, we

randomly choose 15 images from each category (i.e.,

150 images in total) as query images and the

precision is calculated by evaluating the top 20

returned results. Several peer retrieval methods are

also used to compare the retrieval performance.

These methods include our proposed method

(Prop.), global color histogram method with 32

color bins (HisC), and Non-Fuzzified Efficient

Color Representation (ECR) method (Deng et al.,

2001) applied to our segmentation results. In order

to ensure fair comparison, we used the same 1000

images from COREL as a test bed, the same 150

images as queries, and top 20 returned images.

Fig. 6 illustrates the average precision for each

category by applying all these methods on the same

query images.
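The precision measure used in this evaluation can be written in a few lines. This is a generic sketch with a hypothetical function name; it assumes the retrieval result is available as a ranked list of category labels and that the list is not empty.

```python
def precision_at_k(ranked_categories, query_category, k=20):
    """Fraction of the top-k returned images that share the query's category."""
    top = ranked_categories[:k]
    return sum(1 for category in top if category == query_category) / len(top)
```

Averaging this value over the 15 queries of a category gives the per-category average precision reported in the experiments.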

It is clear that our proposed method performs

much better than both approaches in almost all

image categories. In particular, our method matches or outperforms the HisC method in every image category (the two methods tie only on Dinosaur in Table 1) and improves the overall average retrieval accuracy by 78.51%. Our method yields much better retrieval

accuracy than the ECR method in all image

categories except for the Mountain (category 9).

The overall average retrieval accuracy is improved

by 46.95%.
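The improvement percentages quoted above are relative gains in average precision. As a rough check, they can be recomputed from the rounded averages listed in Table 1 (0.723 for the proposed method, 0.405 for HisC, 0.492 for ECR); the helper name below is illustrative.

```python
def relative_improvement(ours, baseline):
    """Relative gain of `ours` over `baseline`, in percent."""
    return (ours - baseline) / baseline * 100.0

gain_over_hisc = relative_improvement(0.723, 0.405)   # about 78.5
gain_over_ecr = relative_improvement(0.723, 0.492)    # about 47.0
```

Small differences in the last digit with respect to the figures in the text are expected, since the table averages are rounded to three decimals.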

[Figure 5 panels: (a) 10 matches out of 11, 18 matches out of 20; (b) 10 matches out of 11, 17 matches out of 20; (c) 9 matches out of 11, 14 matches out of 20]

Figure 5: Retrieval results of 3 queries. The query and the most similar images are the same and at the upper left corner. The segmentation of the query is shown at the right side of the query with the number of regions indicated below. Other numbers are the similarity scores.

[Figure 6 plot: x-axis: Category ID; y-axis: Average Precision (0.0 to 1.0); series: Prop., ECR, HisC]

Figure 6: Comparison of the average retrieval precision of three different methods.

Our method is also compared with the UFM method (Chen and Wang, 2002) using the same test bed, the same query images, and same number of returned images. Experimental results summarized in Table 1 show that our method has better retrieval accuracy in 6 categories and worse accuracy in 3 categories. It improves the UFM method, which uses additional regional shape for retrieval, by 3.88% in the overall retrieval accuracy. The improvement over IRM (Li et al., 2001) is around 10.28%.

More returned images are used to further test the

retrieval accuracy of our method. Fig. 7 illustrates

the average precision for each category by

evaluating the top 20 and top 50 returned results. It

shows that the average precision drops as more

results are returned. However, there is only a small precision decrease in categories 2, 4, 5, 8, and 9, which is very promising.

[Figure 7 plot: x-axis: Category ID; y-axis: Average Precision (0.0 to 1.0); series: top 20, top 50]

Figure 7: Comparison of the average retrieval precision for different number of returned images.

Fig. 8 compares the average retrieval precision from top 20, 30, …, 100 returned images when four methods are applied to the same 1000 images from COREL by using the same 150 query images. It clearly shows that our proposed method ranks the best in all cases.

[Figure 8 plot: x-axis: Number of Returned Images (20 to 100); y-axis: Average Precision (0.25 to 0.75); series: Prop., UFM, ECR, HisC]

Figure 8: Comparison of the average retrieval precision with different number of returned images.

Additional experiments using the same test bed, the same 150 query images, and top 20 returned images are performed on several variants of our proposed method to illustrate the validity of our method. Table 1 numerically lists the average precision for each category by applying our proposed method, our global method without using any local features (PrGl), our fuzzy region-based method without using any global features (PrRe1), our fuzzy region-based method using only color and no global features (PrRe2), HisC, ECR, and UFM methods.

Several comparisons are made from Table 1:

1) Our PrGl method vs. the HisC method: Our global method performs much better in all image categories. The overall average retrieval accuracy is improved by 40.74%. This result indicates that our global features are more effective than color histogram.

2) Our PrRe1 method vs. our PrRe2 method: The former has better retrieval performance in all image categories except for the Dinosaur and Mountain, which have the same retrieval accuracy (i.e., 99.3%) for Dinosaur and a little worse accuracy for Mountain. The overall average retrieval accuracy is improved by 7.87%. This result indicates that the integration of local textures does improve the retrieval accuracy.

3) Our PrRe2 method vs. the ECR method: The fuzzy measure improves the overall accuracy by 31.71% even though it does not perform better in all categories.

4) Our method vs. our PrGl method: Our method yields much better retrieval accuracy in all categories except for the Dinosaur with the same retrieval accuracy. The 26.84% improvement in the overall accuracy shows that the integration of the local features dramatically increases the retrieval accuracy.

5) Our method vs. our PrRe1 method: Our method yields better retrieval accuracy in 7 categories and a little bit worse performance in 2 categories. Our method outperforms our PrRe1 method by 3.43% improvement in the overall accuracy. It is clear that the integration of the global features does improve the retrieval performance.

Table 1: Comparison of the average retrieval precision of seven different methods

Category Prop. PrGl PrRe1 PrRe2 HisC ECR UFM

Africa 0.830 0.697 0.740 0.620 0.597 0.810 0.697

Beach 0.453 0.343 0.463 0.413 0.157 0.367 0.527

Building 0.783 0.410 0.780 0.710 0.220 0.150 0.710

Vehicle 0.803 0.587 0.750 0.633 0.173 0.217 0.773

Dinosaur 1.000 1.000 0.993 0.993 1.000 0.900 1.000

Elephant 0.490 0.437 0.390 0.333 0.380 0.437 0.423

Flower 0.877 0.637 0.927 0.863 0.397 0.463 0.947

Horse 0.950 0.863 0.933 0.920 0.607 0.887 0.897

Mountain 0.330 0.293 0.300 0.303 0.160 0.417 0.333

Food 0.717 0.433 0.717 0.687 0.357 0.277 0.650

Ave. 0.723 0.570 0.699 0.648 0.405 0.492 0.696


4 CONCLUSIONS

A novel CBIR approach is proposed in this paper. This approach combines weighted fuzzy region-based color and texture features and global features for effective and efficient image retrieval. The region-based color and texture features are independently obtained from the unsupervised segmentation. The Cauchy fuzzification is further applied to fuzzify each feature for fuzzy region matching. The global features are also included to improve the retrieval accuracy. The proposed approach is efficient, effective, and unique because:

• The unsupervised K-Means algorithm is exclusively performed on the 2×2 block-based color features to quickly and efficiently segment an image into coherent regions.

• The color and texture are treated as two separate features to represent each region from different perspectives. Such a separation achieves better retrieval performance than the other schemes combining the color and texture as one comprehensive feature (e.g., UFM method).

• Each independent color and texture feature is fuzzified for fuzzy region matching by assigning different weights to the respective features. Such fuzzification addresses the issues related to imperfect segmentation and inaccurate color/texture.

• The use of Cauchy function greatly reduces the computational cost for the fuzzy region matching as illustrated in (2).

• The region area and region position are incorporated into the regional features based on the general observations in terms of semantics.

• The global color-texture features are extracted from the reduced dimensionality color space.

The experimental results on 1000 images from COREL database demonstrate that the proposed algorithm achieves good retrieval accuracy with fast speed due to the small size of the feature vector (less than 200 elements).

Shape or spatial information is not considered in our implementation for the efficiency consideration. It may be further integrated into the retrieval system to improve the accuracy. Other global feature representations may be further studied.

REFERENCES

Ardizzoni, S., Bartolini, I., and Patella, M., 1999. Windsurf: Region-based image retrieval using wavelets. DEXA workshop, pp. 167-173, Florence, Italy.

Carson, C., Belongie, S., Greenspan, H., and Malik, J., 1997. Region-based image querying. In CVPR'97 Workshop on Content-Based Access of Image and Video Libraries, pp. 42-49, San Juan, Puerto Rico.

Chen, Y. and Wang, J., 2002. A region-based fuzzy feature matching approach to content-based image retrieval. IEEE Trans on PAMI 24(9), pp. 1252-1267.

Cinque, L., Ciocca, G., Levialdi, S., Pellicano, A., and Schettini, R., 2001. Color-based image retrieval using spatial-chromatic histogram. Image and Vision Computing 19, pp. 979-986.

Deng, Y., Manjunath, B. S., and Kenney, C., 2001. An efficient color representation for image retrieval. IEEE Trans on Image Processing 10(1), pp. 140-147.

Hartigan, J. A., and Wong, M. A., 1979. Algorithm AS136: A K-Means Clustering Algorithm. Applied Statistics 28, pp. 100-108.

Hoppner, F., Klawonn, F., Kruse, R., and Runkler, T., 1999. Fuzzy Cluster Analysis: Methods for Classification, Data Analysis, and Image Recognition, John Wiley & Sons.

Li, J., Wang, J., and Wiederhold, G., 2001. SIMPLIcity: semantics-sensitive integrated matching for picture libraries. IEEE Trans on PAMI 23(9), pp. 947-963.

Liang, K. and Kuo, C.-C.J., 1999. WaveGuide: a joint wavelet-based image representation and description system. IEEE Trans on Image Processing 8(11), pp. 1619-1629.

Long, F., Zhang, H., and Feng, D., 2002. Fundamentals of content-based image retrieval. In Feng, D., Siu, W. C., and Zhang, H. J., (eds.), Multimedia Information Retrieval and Management – Technological Fundamentals and Applications. Springer.

Rao, A., Srihari, R. K., and Zhang, H., 1999. Spatial color histograms for content-based image retrieval. In IEEE Int. Conf. on Tools with Artificial Intelligence, pp. 183-186, Chicago, Illinois, USA.

Shih, T., Huang, J., Wang, C., Hung, J., and Kao, C., 2001. An intelligent content-based image retrieval system based on color, shape and spatial relations. In Proceedings of Natl. Sci. Counc. ROC(A), 25(4), pp. 232-243.

Suematsu, N., Ishida, Y., Hayashi, A., and Kanbara, T., 1999. Region-based image retrieval using wavelet transform. In 10th International Workshop on Database and Expert Systems Applications, pp. 167-173.

Vertan, C., and Boujemaa, N., 2000. Color Texture Classification by Normalized Color Space Representation. In Int. Conf. on Pattern Recognition (ICPR'00), Vol. 3, pp. 3584-3587, Barcelona, Spain.
