STRUCTURED LIGHT BASED STEREO VISION FOR COORDINATION OF MULTIPLE ROBOTS

Gui Yun Tian, Duke Gledhill

School of Computing & Engineering, University of Huddersfield, Huddersfield, England, HD1 3DH

Keywords: Active stereo vision, Robots and automation, Distributed system, Location, Image processing

Abstract: This paper reports a method of coordinating multiple robots for 3D-object handling using structured light based stereo vision. A system structure using two robots (a Puma and a Staubli) for playing chess is proposed. The key techniques for surface reconstruction and the rejection of ‘spike’ noise are discussed. The features of the active vision system for 3D object acquisition and their application to robotics and automation are introduced. Experimental studies are reported, from which conclusions and further work are derived.

1 INTRODUCTION

We want multiple robots to operate in unknown, unstructured environments. To achieve this goal, the robots must be able to perceive their environment sufficiently to operate within it safely. Most robots that successfully navigate in unconstrained environments use sonar transducers or laser range sensors as their primary spatial sensor (Lim and Leonard 2000, Guivant et al. 2000). Although many indoor surfaces are indeed specular, rough surface reflections can be important in many environments. Incorporating echolocation constraints from rough surfaces is more difficult because diffracted sonar returns provide weaker geometric constraints than specular sonar returns. A sonar barrier test may cause problems when unmodelled objects are present or when objects in the model are no longer in the same positions in the environment. Ultrasonic sensors have been widely used in indoor applications, but they are not adequate for most outdoor applications due to range limitations and bearing uncertainties.

Stereo vision has been the object of research in many important research laboratories around the world. Recently, stereoscopic omnidirectional systems have been used in indoor localisation applications (Drocout 1999). This type of sensor is based on a conical mirror and a camera that returns a panoramic image of the environment surrounding the vehicle. Although it is a promising technology, its complexity and poor dynamic range mean that the technique is still not very reliable in unstructured environments, particularly when handling mechanical components that lack rich texture. In addition, stereo vision mapping is very sensitive to errors, because the process of collapsing the data from 3D to 2D encourages errors in the form of ‘spikes’ to be propagated into the map.

Our recent work on 3D reconstruction of a region of interest using structured light and stereo panoramic images has produced good results (Gledhill et al. 2004): a 360° view of the scene and the 3D structure of a region of interest can be easily captured and visualised. This paper focuses on the structure

of robot sensing systems and the techniques for

measuring and pre-processing 3-D data. To get the

information required for controlling a given robot

function, the sensing of 3-D objects is divided into

four basic steps: transduction of relevant object

properties (primarily geometric and photometric)

into a signal; pre-processing the signal to improve

it; extracting 3-D object features; and interpreting

them. Each of these steps can usually be executed by several alternative techniques (tools). Tools for the transduction of 3-D data and data pre-processing are surveyed. The performance of each

tool depends on the specific vision task and its

environmental conditions, both of which are

variable. Such a system includes so-called toolboxes, one box for each sensing step, and a supervisor, which controls iterative sensing feedback loops and consists of a rule-based program generator and a program execution controller. The rest of the paper is organised as follows. Section 2 introduces the system design of vision based multiple robot applications; Section 3 discusses the 'spike' noise and its solution using a structured light based vision system; Section 4 reports experimental studies; and conclusions are drawn in Section 5.

Tian G. and Gledhill D. (2004). STRUCTURED LIGHT BASED STEREO VISION FOR COORDINATION OF MULTIPLE ROBOTS. In Proceedings of the First International Conference on Informatics in Control, Automation and Robotics, pages 158-161. DOI: 10.5220/0001139401580161. Copyright © SciTePress

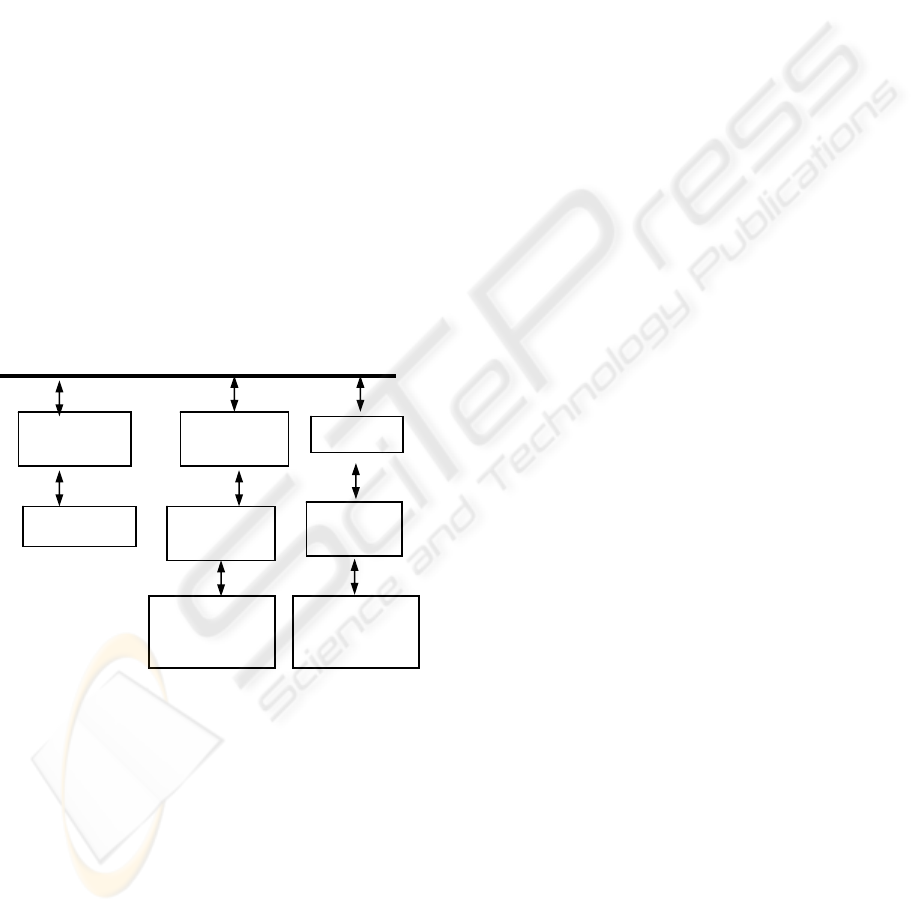

2 SYSTEM DESIGN

The aim of the project is to build a multiple-robot network in which the locations of all robots and targets can be monitored by the individual active vision system on each robot. The design of the distributed system is illustrated in Fig. 1. The robots used are a Staubli robot and a Puma robot in our lab. Large-scale robot networks require a low-cost vision system. We have therefore designed and developed a structured light based vision system in which two webcams are used for each robot.

Figure 1: The system design using a structured light based vision system for multiple robot control

3 STRUCTURED LIGHT BASED STEREO VISION

Stereo vision normally uses correspondence methods for 3D reconstruction (Nitzan 1988). Correspondence methods are less accurate in areas of low texture (Murray and Jennings 1997, Xiao et al. 2004, Tian et al. 2003). For example, in an outdoor environment where texture is abundant, correspondence is very accurate, but an indoor environment usually has walls, and indoor walls usually have low texture, e.g. white paint. To overcome this lack of texture, we propose projecting a light pattern onto the low-texture areas to aid the correspondence search. Once a texture has been applied, the correspondence algorithms achieve more accurate results. For this system a Gaussian noise pattern is produced. The image is filtered to ensure that no two dark pixels are next to each other, so that no ‘blocks’ of black are produced. Large areas of black result in inaccurate removal of the noise later. The structured light pattern has to be dense enough to create a usable texture for the correspondence algorithm, but its dots must be small enough to be removed for visualisation. The result is then projected into the environment. Fig. 2 shows an example of 'spike' noise in the 3D reconstruction of a typical mechanical part illustrated in Fig. 5. To overcome the 'spike' noise, a structured light based stereo vision system has been developed, as illustrated in Fig. 3. The structured light can be laser light or any other visible light, depending on the targets to be handled or monitored, as shown in Fig. 4.
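The pattern generation described above can be sketched as follows. The paper does not give the algorithm, so everything specific here is an assumption made for illustration: the function names, the threshold on the Gaussian samples, the use of 8-neighbour adjacency for "next to each other", and the raster-order rejection of candidates that touch an existing dark pixel. The second function is a plain 3x3 median filter, included to show why isolated dots are easy to remove afterwards.

```python
import random

DARK, BRIGHT = 0, 255


def make_dot_pattern(height, width, threshold=1.0, seed=0):
    """Random dot pattern in which no two dark pixels are 8-neighbours.

    Hypothetical sketch: a pixel becomes a dark candidate when its Gaussian
    sample exceeds `threshold`; candidates touching a dark pixel are dropped.
    """
    rng = random.Random(seed)
    pattern = [[BRIGHT] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if rng.gauss(0.0, 1.0) <= threshold:
                continue  # not a dark candidate
            # In raster order only the left and upper neighbours can already
            # be dark, so checking these four positions is sufficient.
            neighbours = [(r, c - 1), (r - 1, c - 1), (r - 1, c), (r - 1, c + 1)]
            if all(not (0 <= y < height and 0 <= x < width)
                   or pattern[y][x] == BRIGHT
                   for y, x in neighbours):
                pattern[r][c] = DARK
    return pattern


def median3x3(image):
    """3x3 median filter; border pixels are replicated outwards."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            window = sorted(
                image[min(max(r + dr, 0), height - 1)]
                     [min(max(c + dc, 0), width - 1)]
                for dr in (-1, 0, 1) for dc in (-1, 0, 1))
            out[r][c] = window[4]  # middle of the nine sorted values
    return out


pattern = make_dot_pattern(48, 64)
cleaned = median3x3(pattern)
```

Because the dots are isolated, any 3x3 window holds at most four dark pixels out of nine, so on a uniform bright background the median is always bright and the pattern disappears. A block of adjacent dark pixels could fill more than half of a window and would survive the filter, which is consistent with the remark that large areas of black make the later removal inaccurate.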

The disparity results are validated in two ways. First, there is a ‘sufficient texture’ test. This test checks that there is sufficient variation in the image patch to be correlated by examining the local sum of the Laplacian of Gaussian of the image. Low-texture areas score low in this sum. If there is insufficient variation, the results will not be reliable, so the pixel is rejected because there would be too much ambiguity in the matches. Where the texture is insufficient, structured light is used. Rather than a regular pattern, a random Gaussian noise pattern is projected, which can easily be filtered out of the captured images using median filters. Fig. 5 shows the flowchart of the image reconstruction and understanding. Secondly, there is a ‘quality of match’ test, which applies to structured light in particular. In this test, the value of the score is normalised by the sum of all scores for this pixel. If the result is not below a threshold, the match is considered to be insufficiently unique and therefore a likely mismatch. This kind of failure generally occurs in occluded regions where the pixel cannot be properly matched.
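The two validation tests can be illustrated with a short sketch. It is not the authors' implementation: the 5x5 integer kernel is a common discrete approximation of the Laplacian of Gaussian, the sum is taken over absolute responses, the scores are assumed to be matching costs (lower is better, for example sums of absolute differences over candidate disparities), and the function names, patch size, and thresholds are all hypothetical.

```python
# 5x5 integer approximation of a Laplacian-of-Gaussian kernel (sums to zero).
LOG_KERNEL = [
    [0, 0, -1, 0, 0],
    [0, -1, -2, -1, 0],
    [-1, -2, 16, -2, -1],
    [0, -1, -2, -1, 0],
    [0, 0, -1, 0, 0],
]


def log_response(image, r, c):
    """LoG filter response at (r, c); border pixels are replicated."""
    height, width = len(image), len(image[0])
    total = 0
    for dr in range(-2, 3):
        for dc in range(-2, 3):
            y = min(max(r + dr, 0), height - 1)
            x = min(max(c + dc, 0), width - 1)
            total += LOG_KERNEL[dr + 2][dc + 2] * image[y][x]
    return total


def has_sufficient_texture(image, r, c, half=2, min_sum=100):
    """'Sufficient texture' test: local sum of |LoG| around (r, c).

    Assumption: absolute responses are summed over a (2*half+1)^2 patch and
    compared with an illustrative threshold `min_sum`.
    """
    height, width = len(image), len(image[0])
    local_sum = sum(
        abs(log_response(image, y, x))
        for y in range(max(r - half, 0), min(r + half + 1, height))
        for x in range(max(c - half, 0), min(c + half + 1, width)))
    return local_sum >= min_sum


def is_unique_match(scores, max_ratio=0.05):
    """'Quality of match' test on one pixel's matching costs.

    The best (lowest) cost is normalised by the sum of all costs; the match
    is accepted only if that ratio is below `max_ratio` (assumed value).
    """
    total = sum(scores)
    if total == 0:
        return False  # every candidate matches equally well: ambiguous
    return min(scores) / total < max_ratio


flat = [[200] * 9 for _ in range(9)]      # e.g. a white wall
dotted = [row[:] for row in flat]
dotted[4][4] = 0                          # one projected dark dot

texture_flat = has_sufficient_texture(flat, 4, 4)
texture_dotted = has_sufficient_texture(dotted, 4, 4)
unique_peaked = is_unique_match([50, 48, 2, 51, 49])
unique_flat = is_unique_match([10, 10, 10, 10, 10])
```

In this toy case the flat patch fails the texture test and the patch with a projected dot passes it, while a cost curve with one clear minimum passes the uniqueness test and a flat cost curve, as expected in textureless or occluded regions, is rejected.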

[Figure 1 block labels: RX controller; Computer; Other robots; Staubli robot; Puma robot; Controller; Structured light based vision system (two instances)]


Figure 2: 'Spike' noise

Figure 3: Structured light based stereo vision system

Figure 4: Alternative system with visible light instead of

laser light

Figure 5: A real-time stereo vision system

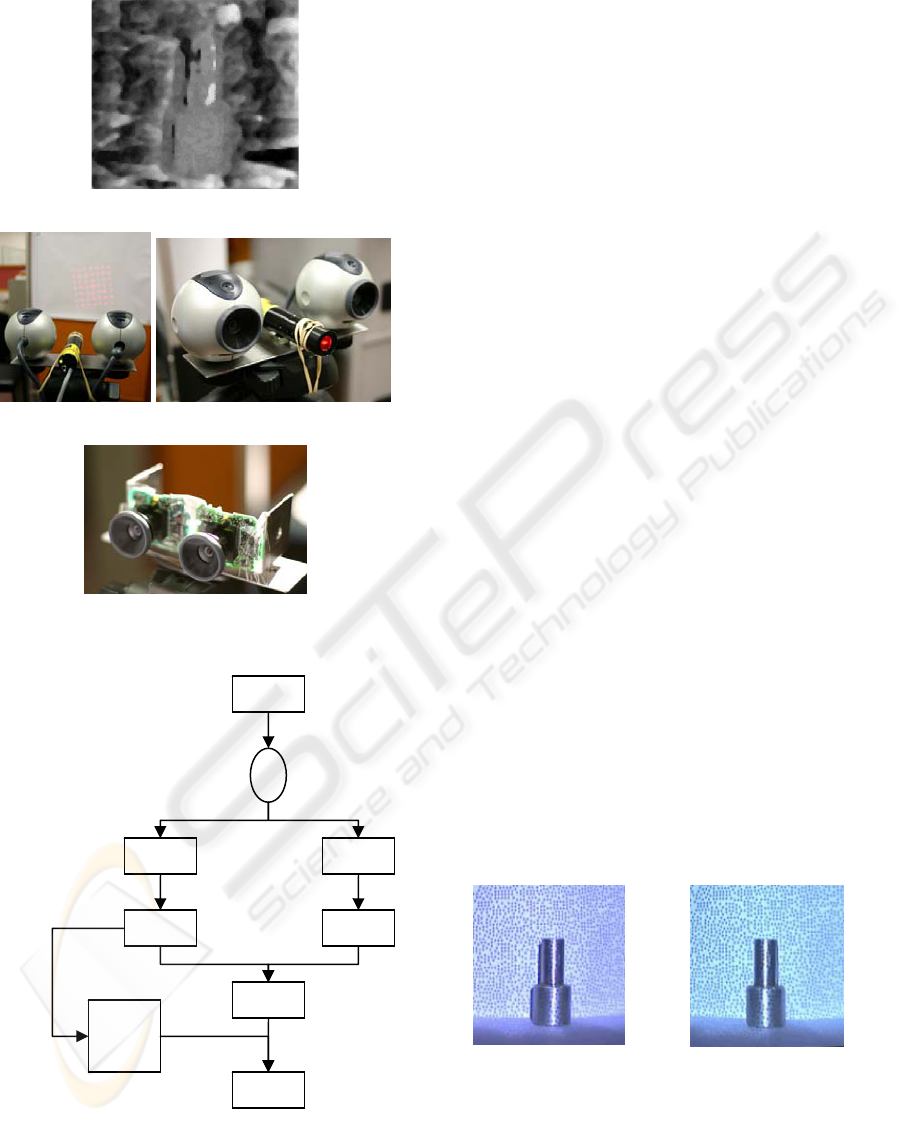

4 EXPERIMENTAL STUDIES

In the experimental studies, two images were captured by the two webcams, as shown in Fig. 6. Structured light was used because the mechanical parts lack texture. The reconstructed 3D image in Fig. 7 is of much better quality than the 3D image reconstructed without structured light in Fig. 2, where 'spike' noise is present. Through the 3D-model acquisition system, 3D objects such as those illustrated in Fig. 8 can be perceived by robots. The four images in Fig. 8 show 3D views from different viewpoints.

The active vision system with adaptable structured light performs uncalibrated 3D reconstruction. Uncalibrated reconstruction of a scene is desired in many practical applications of computer vision (Li and Lu 2004). We present a method for true Euclidean 3-D reconstruction using an active vision system consisting of a pattern projector and two low-cost cameras. When the intrinsic and extrinsic parameters of the cameras change during the reconstruction, they can be self-calibrated and the real 3-D model of the scene can then be reconstructed. The parameters of the projector are precalibrated and kept constant during the reconstruction process. This allows the configuration of the vision system to be varied during a reconstruction task, which increases its adaptability to the environment or scene structure in which it is to work.

The robot controllers will process the 3D images and extract information about the target pose and location, which is important for action planning, e.g. gripper control, in the robot network. Further work on data fusion and communication control for the system will be published in a separate paper.

Figure 6: The left and right images from the capture

system

[Figure 5 flowchart labels: Camera 1; Pattern Projector; Camera 2; Left Image; Right Image; Derived Depth; 3D objects; Remove pattern from image; Face]

ICINCO 2004 - ROBOTICS AND AUTOMATION


Figure 7: The depth map from the stereo system

Figure 8: 3D images perceived by robots

5 CONCLUSION AND FURTHER WORK

Stereoscopic systems for robot navigation and robot networks are now feasible using structured light and low-resolution real-time devices. Although these devices do not match the performance of the human depth perception system, they appear adequate for simple applications such as obstacle avoidance and co-ordination control of multiple robots. The system is low cost and easily implemented for autonomous systems. The active vision system can adapt to different lighting environments and to changes in camera intrinsic and extrinsic parameters by using our normalisation algorithms (Finlayson and Tian 1999) and data fusion of the redundant data from the structured light based stereo vision.

Until recently, certain distributed-systems aspects of multi-robot teams were not given much attention. A sensing approach has been proposed for cooperative robotics. In the future, the system will be integrated with panoramic stereo vision systems for wide-range position monitoring (Bunschoten and Kröse 2002). Further data fusion for robot networks or sensor networks will be investigated (Büker et al. 2001).

REFERENCES

Büker U., Drüe S., Götze N., Hartmann G., Kalkreuter B., Stemmer R. and Trapp R., 2001. Vision-based control of an autonomous disassembly station, Robotics and Autonomous Systems, Volume 35, Issues 3-4, Pages 179-189.

Bunschoten R. and Kröse B., 2002. 3D scene reconstruction from cylindrical panoramic images, Robotics and Autonomous Systems, Volume 41, Issues 2-3, Pages 111-118.

Drocout C., Delahoche L., Pegard C. and Clerentin A., 1999. Mobile robot localisation based on an omnidirectional stereoscopic vision perception system, Proc. of the 1999 IEEE Conference on Robotics and Automation, Detroit, USA, pp. 1329-1334.

Finlayson G. D. and Tian G. Y., 1999. Colour normalization for colour object recognition, International J. of Pattern Recognition and Artificial Intelligence, Vol. 13, No. 8, pp. 1271-1285.

Gledhill D., Tian G. Y., Taylor D. and Clarke D., 2004. 3D Reconstruction of a Region of Interest Using Structured Light and Stereo Panoramic Images, accepted for IV04, London.

Guivant J., Nebot E. and Baiker S., 2000. Autonomous navigation and map building using laser range sensors in outdoor applications, Journal of Robotic Systems, Vol. 17, No. 10, pp. 565-583.

Li Y. F. and Lu R. S., 2004. Uncalibrated Euclidean 3-D Reconstruction Using an Active Vision System, Volume 20, Issue 1, pp. 15-25.

Lim J. H. and Leonard J. J., 2000. Mobile Robot Relocation from Echolocation Constraints, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 22, No. 9, pp. 1035-1041.

Murray D. and Jennings C., 1997. Stereo vision based mapping for a mobile robot, In Proc. IEEE Conf. on Robotics and Automation.

Nitzan D., 1988. Three-Dimensional Vision Structure for Robot Applications, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 10, No. 3.

Tian G. Y., Gledhill D. and Taylor D., 2003. Comprehensive interest points based imaging mosaic, Pattern Recognition Letters, 24 (9-10), pp. 1171-1179.

Xiao D., Song M., Ghosh B. K., Xi N., Tarn T. J. and Yu Z., 2004. Real-time integration of sensing, planning and control in robotic work-cells, Control Engineering Practice, Volume 12, Issue 6, Pages 653-663.
